16-bit float conversion

Hello, I have received 16-bit data that represents a 16-bit float (half precision). Is there an easy way to get the value of the data without writing my own conversion? LabVIEW supports only 32-, 64-, and 128-bit float formats. Thank you

Hello, there is no option to convert the number the easy way (without writing any conversion code).

According to the document 'A very Simple compiler' (http://acm.pku.edu.cn/JudgeOnline/problem?id=3202), you can use the code found in the attachment.

Best regards

MK
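For anyone who does end up writing the conversion, here is a minimal C sketch (not the attachment mentioned above) that decodes an IEEE 754 binary16 bit pattern into a 32-bit float. It assumes the two received bytes have already been combined into one 16-bit value in the correct byte order; `half_to_float` is a hypothetical helper name.

```c
#include <stdint.h>
#include <string.h>

/* Decode an IEEE 754 binary16 (half-precision) bit pattern into a float.
 * Assumes the two bytes are already assembled into h in the right byte order. */
static float half_to_float(uint16_t h) {
    uint32_t sign = (uint32_t)(h & 0x8000u) << 16;
    uint32_t exp  = (h >> 10) & 0x1Fu;   /* 5-bit exponent, bias 15 */
    uint32_t mant = h & 0x3FFu;          /* 10-bit mantissa */
    uint32_t bits;

    if (exp == 0) {
        if (mant == 0) {
            bits = sign;                 /* signed zero */
        } else {
            /* subnormal half: shift until the implicit leading 1 appears */
            exp = 127 - 15 + 1;
            while ((mant & 0x400u) == 0) {
                mant <<= 1;
                exp--;
            }
            mant &= 0x3FFu;
            bits = sign | (exp << 23) | (mant << 13);
        }
    } else if (exp == 0x1F) {
        bits = sign | 0x7F800000u | (mant << 13);      /* infinity or NaN */
    } else {
        bits = sign | ((exp + 127 - 15) << 23) | (mant << 13); /* rebias exponent */
    }

    float f;
    memcpy(&f, &bits, sizeof f);         /* reinterpret the 32 bits as a float */
    return f;
}
```

For example, `half_to_float(0x3C00)` gives 1.0 and `half_to_float(0x7BFF)` gives 65504, the largest finite half value.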

Tags: NI Software

Similar Questions

-

Conversion of IEEE 754 32-bit floating-point values to readable numbers

Hi, I am reading data from a Keysight N1912A power meter. If I read a single point, it comes back in ASCII format, no problem. But when I try to get the full trace, it is returned in the standard IEEE 754 32-bit floating-point (binary) format. Now I need to convert the binary data into readable values so I can read the peak and calculate the rise time, fall time, flatness, etc. What is the C/CVI command to do this? I keep looking at sprintf and sscanf but can't seem to make it work... Thank you. Every single instrument I've ever used sent back data in ASCII format and I just parsed the data with strtok, but not this one... Very frustrating indeed. Thanks for the help.

Here is the manual; see page 642 (612 printed at the bottom of the page) if you need to know the trace command for the unit. I use viRead to retrieve the data. The data header is there and seems correct, and if I inspect the data I can see it's all there...

Hello

Did you see (and try) this response to Roberto?
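Since viRead hands back raw bytes, text functions such as sprintf, sscanf, and strtok will not help; the bytes have to be reinterpreted as floats. A minimal C sketch (CVI compiles ANSI C), assuming the trace arrives as an IEEE 488.2 definite-length block (`#`, one digit n, n length digits, then the data) of 4-byte floats sent most significant byte first. The header layout and byte order are assumptions to check against the manual, and `parse_float_block` is a hypothetical helper, not a CVI or VISA function.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Parse "#<n><len digits><data>" holding big-endian float32 values.
 * Returns the number of floats written to out, or -1 on a malformed/truncated block. */
static long parse_float_block(const unsigned char *buf, size_t buf_len,
                              float *out, size_t out_cap) {
    if (buf_len < 2 || buf[0] != '#' || buf[1] < '1' || buf[1] > '9')
        return -1;
    size_t ndigits = (size_t)(buf[1] - '0');
    if (buf_len < 2 + ndigits)
        return -1;

    size_t data_len = 0;                      /* byte count from the header digits */
    for (size_t i = 0; i < ndigits; ++i) {
        unsigned char c = buf[2 + i];
        if (c < '0' || c > '9')
            return -1;
        data_len = data_len * 10 + (size_t)(c - '0');
    }

    const unsigned char *data = buf + 2 + ndigits;
    if (buf_len - 2 - ndigits < data_len)
        return -1;                            /* block shorter than the header claims */

    size_t count = data_len / 4;
    if (count > out_cap)
        count = out_cap;
    for (size_t k = 0; k < count; ++k) {
        const unsigned char *p = data + 4 * k;
        /* assemble most significant byte first (assumed instrument byte order) */
        uint32_t bits = ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
                        ((uint32_t)p[2] << 8) | (uint32_t)p[3];
        memcpy(&out[k], &bits, sizeof bits);  /* reinterpret as IEEE 754 float */
    }
    return (long)count;
}
```

If the values come out as nonsense, the byte order is the first thing to flip.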

-

How do you get 32-bit floating-point effects to work with an alpha channel?

I do a few bugs and lower thirds, but I find it very difficult to get 32-bit floating-point effects to export properly with transparency.

When I do translucent animations with bright light effects, they look great in AE, but I can't seem to find a way to bring them into Final Cut and get them to look as they should.

I have attached an example of a bug, with a screenshot of AE on the left and FCP on the right. The sun colors and vibrant blue gradients get flattened into a dull greenish color.

As far as I know, all my alpha and color management settings are correct. I have tried endless options and codec settings to solve this problem; nothing helps.

You can create an extreme case, for example by creating a translucent shape, cranking its color to a saturated superwhite, and moving it around with motion blur. It looks pretty good in the comp, but rendered to 8/16-bit it looks nothing like it does there.

I have the feeling that the superwhite color values are multiplied with the alpha channel in the way AE handles them and get "lost in translation" when rendered as 4-channel 8-bit. Is it possible to make it look the way it does in the comp?

Thank you

Clint

There is nothing wrong with AE; you just have a poor understanding of how floating-point processing works. In short: you're confusing blending with transparency, and that is why everything falls apart when you export. The color is still there, but there are simply no opaque pixels to carry it, so it never shows up, much less in an environment like FCP that only works in 8 and 16 bits, where you are already losing fidelity by using an 8-bit format. That's all that happens, and unfortunately there really isn't much you can do. Unmultiplying the result based on luminance should give you a usable result, but it remains an approximation, and even if you use the same blend modes in FCP, it will never work the same way. So, in conclusion, a better way to do this would be to work in 8 or 16 bpc and make it look as if it were 32 bpc. That is, unless you can do the final compositing stage in AE, where you would at least be able to get a good blend in float.

Mylenium
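To make the point about superwhite values concrete, here is a deliberately simplified C model (not AE's actual render pipeline): a premultiplied float pixel is exported as straight 8-bit color plus 8-bit alpha, then re-composited over black. The assumptions are a straight-alpha 8-bit export and compositing over black; all function names are hypothetical.

```c
#include <stdint.h>

/* Quantize a float channel to 8 bits, clamping to [0, 1] as an 8-bit file must. */
static uint8_t to_u8(float v) {
    if (v < 0.0f) v = 0.0f;
    if (v > 1.0f) v = 1.0f;
    return (uint8_t)(v * 255.0f + 0.5f);
}

/* Premultiplied float color -> straight 8-bit color, as stored in an 8-bit RGBA export.
 * A superwhite glow (premultiplied color larger than alpha) becomes > 1.0 and is clipped. */
static uint8_t export_straight_u8(float premult_color, float alpha) {
    float straight = (alpha > 0.0f) ? premult_color / alpha : 0.0f;
    return to_u8(straight);
}

/* Re-composite the exported pixel over black: straight color times alpha. */
static float recomposite(uint8_t straight_u8, uint8_t alpha_u8) {
    return (straight_u8 / 255.0f) * (alpha_u8 / 255.0f);
}
```

With a premultiplied color of 0.6 at alpha 0.1, the straight value is 6.0, which clips to 255, so the re-composited result is only about 0.10 instead of 0.6: the glow's energy is gone. A non-superwhite pixel (0.05 at alpha 0.1) survives to within 8-bit rounding.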

-

Conversion of 16-bit unsigned to 32-bit float

Hi, I'm new to LabVIEW and to data types in electronics. Due to a labour shortage in my company, I am required to do this myself. I've been through several basic LabVIEW tutorials and examples, but I am unable to do anything properly with LabVIEW. Any help is much appreciated.

I'm using LabVIEW 8.6.1 and I downloaded the Modbus drivers. Currently, I am required to read data from a sensor and write the data to a file. Using "MB Serial Master Query Read Input Registers (poly).vi", I am able to read the data in the registers. However, the register data are 16-bit unsigned and do not give the "real" value. I'm not sure about this, but I tried Simply Modbus 6.3.6, and changing the type from 16-bit unsigned to 32-bit float does the job of showing the real value.

So my question is: how do I get the actual values in the VI? I apologize if this is a simple question, but I am new to this and don't have the time to study it further. I also apologize in advance if not enough information is given. I have attached the updated "MB Serial Master Query Read Input Registers (poly).vi" that I used to write the data to the file, as well as the text file I got.

Thank you for your help, and sorry for any trouble.

Here's my VI, saved in LV 8.6.

You have pretty wires running through your VI, but it is ridiculously unwieldy, not easily scalable, and uses some Rube Goldberg constructions.

Your Rubes:

1. Array to cluster, then unbundle, then bundle back into a cluster and convert back to an array. There is no need to go through the song and dance of clusters. You could have used Index Array and Build Array and not had to convert anything to a cluster or back.

2. Using a DBL constant and then changing it with a numeric conversion function to set the data type for the Type Cast. Eliminate the conversion and make the constant single precision instead of double precision.

3. Code duplicated dozens of times when it should have been handled in a loop.

If you use a subVI, your screen real estate will be less than 1/4 of what it is now.

You say you want to read a bunch of registers that are not adjacent to each other? You can make several read requests: write a query command, then read the response; then write another query command for different registers and read that response. Whether that makes sense depends on how many different reads you do and how far apart the registers of interest are. If you need to read two pairs of registers that are some distance apart, it might be more efficient to perform a single read of multiple registers, if they are close together, and just throw away the registers you don't care about. There will be more bytes to read back, but it might be faster because it can be done in only one read. Or you could do two reads targeting your specific registers. There might be fewer bytes to read in each message, but it will take more time to make two read requests and wait for the responses in between.

I don't know whether the 125-register limit comes from your device, the Modbus library, or the Modbus protocol in general. I want to say it comes from the Modbus protocol, because 125 registers means 250 bytes of data. Factor in a few bytes for the command, the response header, and the checksum, and you get to 256 bytes. Modbus packets are designed to stay within 256 bytes so that only a single byte is needed to say how many data bytes will follow in the response message.
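To put numbers on that guess: a sketch of the arithmetic, assuming the serial-line framing described in the Modbus specification (a 256-byte maximum frame, of which 1 byte is the slave address and 2 bytes are the CRC) and a Read Input Registers response made of 1 function-code byte, 1 byte-count byte, and 2 bytes per register, for N registers:

$$
\begin{aligned}
\text{PDU}_{\max} &= 256 - 1 - 2 = 253 \text{ bytes} \\
1 + 1 + 2N &\le 253 \;\Rightarrow\; N \le 125.5 \;\Rightarrow\; N_{\max} = 125 \\
2N_{\max} &= 250 \text{ data bytes} \le 255 \quad \text{(fits in the one-byte count field)}
\end{aligned}
$$

Under these assumptions the limit comes from the protocol's frame size rather than from the device or the library.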

By selectively targeting the registers you read and using Index Array to pick out the specific float32 values of interest, you can write just the 12 values you want to the file instead of all the ones you don't need.
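Outside LabVIEW, the same "two U16 registers to one float32" step (what the Type Cast with a SGL constant does) can be sketched in C as below. The word order is an assumption: many devices send the high word first, but some swap the words, so `swap_words` is there to try both. `regs_to_float` and `float_at` are hypothetical names.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Combine two 16-bit Modbus registers into an IEEE 754 float.
 * ASSUMPTION: high word first; pass swap_words != 0 for low-word-first devices. */
static float regs_to_float(uint16_t reg0, uint16_t reg1, int swap_words) {
    uint16_t hi = swap_words ? reg1 : reg0;
    uint16_t lo = swap_words ? reg0 : reg1;
    uint32_t bits = ((uint32_t)hi << 16) | lo;
    float f;
    memcpy(&f, &bits, sizeof f);   /* reinterpret the bits, like Type Cast */
    return f;
}

/* Pick the float that starts at register offset `index` in a block read,
 * the equivalent of Index Array on the converted values. */
static float float_at(const uint16_t *regs, size_t index, int swap_words) {
    return regs_to_float(regs[index], regs[index + 1], swap_words);
}
```

For example, registers 0x41C6 and 0x0000 (high word first) decode to 24.75.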

-

Dynamic data to double conversion

It could be very simple, but I got confused. I have current data and average data, as in the code. I want to build a table from these data, save it to my hard drive, and open it in Word with headers (current and average).

Problems:

I combine data from two sources into an array and wire it to the input of Write To Spreadsheet File.vi, as shown. I get an error: the source is dynamic data and the sink is double. The hardest part of my life is finding out which conversion to use.

When I run the VI (let's assume the right conversion is wired and there is no conversion error), the file path dialog pops up constantly.

Ideally I want the dialog to appear when I press Run, so that I can browse to a location to save the file; once the path is specified, the VI runs continuously and the data is written to the spreadsheet while the VI is running.

Thanks a lot for reading and for your help

See you soon

Welcome to the evil world of dynamic data. What you really want to use is the Merge Signals function. When you do this, a Convert from Dynamic Data will be added automatically between Merge Signals and Write To Spreadsheet File.

You could consider using Write To Measurement File instead, as it accepts dynamic data directly.

As for the path, use a shift register.
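The shift-register advice amounts to keeping the path (or open file) as state that survives from one loop iteration to the next, so the dialog fires only once. A rough C analogue, assuming a tab-delimited text file with a header row that Word or Excel can open; the FILE* is opened once by the caller and reused, and `log_samples` with its running-average computation is a hypothetical stand-in for the acquisition loop.

```c
#include <stddef.h>
#include <stdio.h>

/* Write the header row once, right after the file is opened. */
static void write_header(FILE *out) {
    fprintf(out, "Current\tAverage\n");
}

/* Append one row per loop iteration to the already-open file. */
static void append_row(FILE *out, double current, double average) {
    fprintf(out, "%g\t%g\n", current, average);
}

/* Hypothetical acquisition loop: the same FILE* is carried across iterations
 * (the role the shift register plays in LabVIEW), so no dialog per iteration. */
static void log_samples(FILE *out, const double *samples, size_t n) {
    double sum = 0.0;
    write_header(out);
    for (size_t i = 0; i < n; ++i) {
        sum += samples[i];
        append_row(out, samples[i], sum / (double)(i + 1));
    }
    fflush(out);
}
```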

-

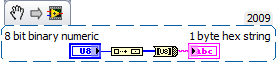

Converting an 8-bit binary number to a 1-byte string

Hello,

I want to convert an 8-bit binary number to a hex string.

Examples:

binary 00000000 -> 00 (hex)

binary 11110000 -> F0 (hex)

binary 01110000 -> 70 (hex)

And the hex string is 1 byte.

I tried, but it's not showing exactly the strings above.

Help, please.

I have attached the VI.

Hi Pascal,

I can't open your VI here, but your question translates to this excerpt:
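One likely source of confusion is that "F0" as displayed text is two characters, while the 1-byte string is the single raw byte 0xF0. A minimal C sketch of both steps; `parse_bin8` and `byte_to_hex` are hypothetical helpers.

```c
#include <stdint.h>
#include <stdio.h>

/* Parse exactly 8 '0'/'1' characters into one byte. Returns 0 on success, -1 on bad input. */
static int parse_bin8(const char *bits, uint8_t *out) {
    uint8_t v = 0;
    for (int i = 0; i < 8; ++i) {
        if (bits[i] != '0' && bits[i] != '1')
            return -1;                 /* also stops at an early '\0' */
        v = (uint8_t)((v << 1) | (uint8_t)(bits[i] - '0'));
    }
    if (bits[8] != '\0')
        return -1;                     /* more than 8 characters */
    *out = v;
    return 0;
}

/* Two-character hex display text, e.g. 0xF0 -> "F0" (hex must hold 3 chars). */
static void byte_to_hex(uint8_t b, char hex[3]) {
    snprintf(hex, 3, "%02X", (unsigned)b);
}
```

The raw 1-byte string is then just the parsed byte itself (for example `char one_byte[1] = {(char)b};`), while `byte_to_hex` produces the human-readable form.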

-

Hexadecimal string to 32-bit float

I receive data on the serial port as a string of U8 bytes.

I convert the string to a hex string, which reads "B 3, 43 CD7B" and corresponds to a value of 410.0 (in other words, my conversion should produce this value).

I tried to convert the string above to a double, but the value reads as 3.27607E-23.

Any tips?
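A 32-bit float occupies 4 bytes (8 hex characters), so it has to be reinterpreted as a single-precision value; treating it as a double (8 bytes) or reading the bytes in the wrong order can produce nonsense like the value above. A minimal C sketch, assuming the hex text is 8 characters with the most significant byte first and no spaces; `hex_be_to_float` is a hypothetical helper. As a known reference point, 410.0 as a float is the bit pattern 43CD0000.

```c
#include <stdint.h>
#include <string.h>

/* Value of one hex digit, or -1 if c is not a hex digit. */
static int hex_nibble(char c) {
    if (c >= '0' && c <= '9') return c - '0';
    if (c >= 'A' && c <= 'F') return c - 'A' + 10;
    if (c >= 'a' && c <= 'f') return c - 'a' + 10;
    return -1;
}

/* Decode 8 hex characters, most significant byte first (big-endian), into a float.
 * Returns 0 on success, -1 on bad input. */
static int hex_be_to_float(const char *hex, float *out) {
    uint32_t bits = 0;
    for (int i = 0; i < 8; ++i) {
        int n = hex_nibble(hex[i]);
        if (n < 0)
            return -1;                 /* also stops at a short string's '\0' */
        bits = (bits << 4) | (uint32_t)n;
    }
    memcpy(out, &bits, sizeof *out);   /* reinterpret the 32 bits as a float */
    return 0;
}
```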

-

How to force all imported pictures to be 32-bit float...

Why is this so hard? How is it not in the Preferences / File Handling section? Like, really?

So, where is it? How can I configure Photoshop to always work in 32-bit on my images... I hate having to remember to convert them once they've been brought in...

I should be able to choose the bit depth I want to work at as a preference, always... And I saw something that does this with Camera Raw, but what if I use a JPG file, add a gradient, and want the gradient to be smooth, so I work at 32-bit...

Thank you very much!!!

Color images are usually processed using 8-bit or 16-bit color, where 8-bit means 24-bit full color and 16-bit means 48-bit full color. Some displays and display adapters support 30-bit color, sometimes referred to as 10-bit color. Most displays and graphics adapters support 8-bit, 24-bit full color. Most devices are 8-bit color; some OS features may not be supported by the device driver, and most drivers support 8-bit color.

To spread out and better handle tones, HDR processing uses 32-bit color depth. I think most filters don't support 32-bit color, and the final output of HDR processing is converted from 32-bit to 16-bit or 8-bit color. I'm not really sure what actually happens in HDR processing; I have not done this type of processing.

The color depth of an image file is encoded in its file format. If you work on a 16-bit document and place an 8-bit image in it, the placed image will be rendered at 16-bit in the document, but you will not gain any color fidelity: you cannot create color from nothing. If it's the other way around, a 16-bit image placed in an 8-bit document, you will lose some color in the rendered layer pixels; however, the embedded object will still have 16-bit color. If you switch to 32-bit mode, the pixel layers must be re-rendered, and you could gain some color. You can set your ACR workflow to convert to 16-bit color by default. This is important. If you want to process in 16-bit color, which is good, you will have more latitude, but you are not going to get any better color by importing 8-bit JPEG, PNG, TIFF, PSD, etc.: they only have 8-bit color; they just become 16-bit.

If you always want to process in 16-bit color, you can use the Script Events Manager to trigger a script on the Open Document event that switches to 16-bit mode. After the event, the script will automatically switch the opened image to 16-bit mode.
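The "you cannot create color from nothing" point can be checked with a few lines of C. This uses a generic 8-to-16-bit scaling (v * 257, mapping 0..255 onto 0..65535); Photoshop's internal 16-bit representation differs, so treat this as an illustration of the principle rather than of Photoshop itself. The function names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Generic 8-bit -> 16-bit channel expansion: 0 -> 0, 255 -> 65535. */
static uint16_t expand_8_to_16(uint8_t v) {
    return (uint16_t)(v * 257u);
}

/* Count the distinct 16-bit levels an 8-bit source can produce.
 * The mapping is strictly increasing, so comparing neighbours is enough. */
static size_t distinct_levels_after_expansion(void) {
    size_t count = 1;                  /* the level for v = 0 */
    for (unsigned v = 1; v < 256; ++v)
        if (expand_8_to_16((uint8_t)v) != expand_8_to_16((uint8_t)(v - 1)))
            ++count;
    return count;
}
```

The converted image still uses only 256 of the 65536 available levels per channel. A gradient drawn after the conversion, however, can use all of them, which is why working in 16-bit still helps for the gradient case in the question.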

-

Acrobat Pro 8.14 crashes on TIFF conversion (Win 7 Pro 32-bit)

I cannot convert scanned TIFF images (saved on the PC running Win 7 Pro 32-bit) to PDF format.

The error message details are as follows:

Problem signature:

Problem Event Name: APPCRASH

Application Name: Acrobat.exe

Application Version: 8.1.0.137

Application Timestamp: 46444c82

Fault Module Name: ImageConversion.api

Fault Module Version: 8.1.2.86

Fault Module Timestamp: 4788536e

Exception Code: c0000005

Exception Offset: 00074a13

OS Version: 6.1.7600.2.0.0.256.48

Locale ID: 1033

Additional Information 1: 0a9e

Additional Information 2: 0a9e372d3b4ad19135b953a78882e789

Additional Information 3: 0a9e

Additional Information 4: 0a9e372d3b4ad19135b953a78882e789

Any help would be greatly appreciated

Thank you

Adobe Acrobat does not support this TIFF format.

-

Export a 24-bit WAV source as 32-bit float before conversion in Adobe Media Encoder?

Hello

I am exporting movies from After Effects as .avi and I was wondering what I need to do to keep my audio as close to the source as possible. My source audio file is 24-bit / 48 kHz. I use Adobe Media Encoder to encode the audio/video as YouTube Widescreen HD, but since After Effects doesn't offer 24-bit export, should I export as 32-bit float? And is that going to change my audio somehow before it goes to Adobe Media Encoder?

I've read about it on a few different forums, but I'm still not clear about this...

Thank you

Henry

Sorry, but you've made a fundamental mistake: a non-standard frame rate. That's a no-no. A big one. You will have to use a standard frame rate and come up with another way to get things to work. No foolin' with frame rates.
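On the bit-depth question itself: a 32-bit float has a 24-bit significand, so every 24-bit integer sample fits in it exactly, and scaling by 2^23 only changes the exponent. The C sketch below shows that the format round-trips 24-bit PCM losslessly; it does not show what After Effects or Media Encoder do internally (any gain changes or dithering there are outside this sketch). The function names are hypothetical.

```c
#include <stdint.h>

/* Signed 24-bit PCM sample (-8388608..8388607) -> float in [-1, 1).
 * Exact: the int fits the 24-bit significand and 2^23 is a power of two. */
static float pcm24_to_float(int32_t s) {
    return (float)s / 8388608.0f;      /* 2^23 */
}

/* Float -> 24-bit PCM; exact for values produced by pcm24_to_float. */
static int32_t float_to_pcm24(float f) {
    return (int32_t)(f * 8388608.0f);
}
```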

-

32-bit float Skeleton example - CC 2015

Hi all!

I'm new to the After Effects SDK, and I am trying to create a very simple plugin.

Installing the SDK and compiling the samples was not a problem, because everything is very well documented, thanks to the authors.

I would like to create a plugin starting from the Skeleton template, but it needs to work on 32-bit float images (I have to manipulate a position AOV from a CG render). I found a few examples in the SDK that deal with 32-bit (Shifter and SmartyPants) and I read Chapter 4 of the SDK PDF (without figuring it out, I must admit, probably because I'm not fluent in C++).

Yet I could not figure out how to say, in PreRender, that I want to operate on a per-pixel basis, as Pixel Bender did, or as is possible with Blink scripts in Nuke. The examples I found always seem to do a lot of things during PreRender, and I can't wrap my head around this...

In the end, I think my question is: "Is there a Skeleton-like example for SmartFX?"

Sorry if I missed something obvious...

Thanks a lot for your interest and your help!

You must put something in output_worldP. At the moment you are just checking it out but doing nothing with it.

The simplest thing you can do is copy the pixels from the input to the output:

ERR(PF_COPY(input_worldP, output_worldP, NULL, NULL));

Passing NULL for both rectangles tells the copy function to copy all the pixels.

Also, don't forget to check in your input pixels (the SmartyPants example does this...):

ERR2(extra->cb->checkin_layer_pixels(in_data->effect_ref, 0));
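On the per-pixel question: the SDK provides iteration callbacks for this (for float worlds, the PF_iterateFloatSuite family; check the SDK headers for the exact signatures). The sketch below does not use the SDK at all; it only shows the shape of a per-pixel callback applied over a float buffer whose rows may be padded. PixelF, WorldF, iterate_pixels, and offset_position are hypothetical names; PixelF mirrors the alpha/red/green/blue field order of AE's float pixel, but verify that against your SDK version.

```c
#include <stddef.h>

/* Hypothetical stand-in for a 32-bpc pixel (alpha, red, green, blue). */
typedef struct {
    float alpha, red, green, blue;
} PixelF;

/* Hypothetical stand-in for an image buffer; rows may be padded, so step by row_bytes. */
typedef struct {
    int width, height;
    ptrdiff_t row_bytes;
    void *data;
} WorldF;

typedef void (*PixelFn)(void *refcon, int x, int y, const PixelF *in, PixelF *out);

/* Call fn once per pixel, reading src and writing dst (same dimensions assumed). */
static void iterate_pixels(const WorldF *src, WorldF *dst, void *refcon, PixelFn fn) {
    for (int y = 0; y < src->height; ++y) {
        const PixelF *in = (const PixelF *)((const char *)src->data + y * src->row_bytes);
        PixelF *out = (PixelF *)((char *)dst->data + y * dst->row_bytes);
        for (int x = 0; x < src->width; ++x)
            fn(refcon, x, y, &in[x], &out[x]);
    }
}

/* Example per-pixel function for a position AOV: offset the XYZ stored in RGB. */
static void offset_position(void *refcon, int x, int y, const PixelF *in, PixelF *out) {
    const float *delta = (const float *)refcon;
    (void)x;
    (void)y;
    out->alpha = in->alpha;
    out->red = in->red + delta[0];
    out->green = in->green + delta[1];
    out->blue = in->blue + delta[2];
}
```

Values are not clamped to [0, 1], which is the point of working in 32 bpc for position data.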

-

Convert a little-endian hexadecimal string to a single-precision float

I have done a lot of tinkering and research, but despite all the very similar examples I found, I think I am limited by my lack of programming knowledge. The device gives me values such as 0000C641, which I need to convert to 41C60000, which must then be interpreted as single-precision IEEE 754, giving 24.75 as a standard 32-bit float. I saw very similar examples with boxing and unboxing conversions, but I simply can't understand them. Thank you.

OK, so if you actually have ASCII characters coming in, there are a few ways to do this. You can basically reorder the string into the byte order LabVIEW expects. Otherwise, you can do the same thing using the data manipulation functions.
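The same reordering can be sketched in C: read the 8 hex characters as 4 bytes, treat the first byte as the least significant, and reinterpret the result as a float. This assumes the input is exactly 8 hex characters with no separators; `hex_le_to_float` is a hypothetical helper.

```c
#include <stdint.h>
#include <string.h>

/* Value of one hex digit, or -1 if c is not a hex digit. */
static int hex_nibble(char c) {
    if (c >= '0' && c <= '9') return c - '0';
    if (c >= 'A' && c <= 'F') return c - 'A' + 10;
    if (c >= 'a' && c <= 'f') return c - 'a' + 10;
    return -1;
}

/* Decode 8 hex characters holding 4 bytes in little-endian order (least significant
 * byte first): "0000C641" -> bytes 00 00 C6 41 -> 0x41C60000 -> 24.75f.
 * Returns 0 on success, -1 on bad input. */
static int hex_le_to_float(const char *hex, float *out) {
    uint32_t bits = 0;
    for (int i = 0; i < 4; ++i) {
        int hi = hex_nibble(hex[2 * i]);
        int lo = (hi < 0) ? -1 : hex_nibble(hex[2 * i + 1]);
        if (hi < 0 || lo < 0)
            return -1;
        bits |= (uint32_t)((hi << 4) | lo) << (8 * i);  /* byte i goes to bits 8i..8i+7 */
    }
    memcpy(out, &bits, sizeof *out);   /* reinterpret as IEEE 754 single */
    return 0;
}
```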

-

How is the output voltage coded on 16-bit DAQmx devices?

In our laboratory, we have two DAQmx devices, the NI PCIe-6363 and the NI PCI-6733. Both have 16-bit precision for bipolar analog output. I understand that the smallest voltage difference that can be produced is 2 * Vref / 2^16, where Vref is the AO reference voltage (10 V or externally supplied for the 6733; 10 V, 5 V, or externally supplied for the 6363).

I wanted to know how the output voltage is coded. DAQmx functions take 64-bit floats as input, and at some point they must reduce their precision. How is this rounding done: is the floating-point value rounded to the nearest possible voltage, or is it always rounded down or always rounded up?

What are all the possible output voltages? Some diagrams in NI-DAQmx Help » Measurement Fundamentals » Signals » Analog » Sampling Considerations seem to imply that the maximum voltage +Vref is not achievable, so I think the set of all possible voltages is -Vref + 2 * Vref * n / 2^16, with n ranging from 0 to 2^16 - 1 inclusive. This includes -Vref and 0, but not +Vref.

Could I get confirmation on this point, or be corrected if it is wrong?

Hi Chris,

DAQmx Write performs the scaling in double-precision floating point and then rounds to the nearest integer to convert the resulting double to a DAC code (int16_t, or uint16_t for unipolar devices). The floating-point scaling includes the custom AO scale if you have configured one, the conversion from volts or amps to DAC codes, and, for some devices, the calibration scaling.

You can check the scaling coefficients using the AO.DevScalingCoeff property. It takes the V/A to DAC code scaling and calibration into account, but not custom AO scales.

The PCIe-6363 (an X Series device) performs calibration scaling in software. The internal AO reference is slightly higher than 10 V, so that correcting gain and offset errors does not limit the output range. It also means that you are not limited to 9.9997 V on this device when you are using the internal reference.

The PCI-6733 uses calibration DACs instead of software scaling. A raw code of -32768 means -10 V, 0 corresponds to 0 V, +32768 is impossible because of the 16-bit two's complement representation, and 32767 corresponds to 9.9997 V. When you ask DAQmx Write to output 10 V on this device, DAQmx coerces it to 9.9997 V.

Note that for both devices, the full-scale absolute accuracy includes over 305 uV of error. See the AO absolute accuracy tables in the device specifications for the full story.

Brad
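The ideal version of this mapping can be sketched in C, assuming a bipolar range of plus or minus Vref with no calibration and no custom scale (real DAQmx scaling uses device-specific coefficients, which is what AO.DevScalingCoeff reports). Brad's answer says the rounding is to nearest; the tie-breaking rule here (away from zero) is an assumption. One LSB is Vref / 32768, about 305 uV at 10 V.

```c
#include <stdint.h>

/* Ideal bipolar 16-bit scaling: code = round(volts * 32768 / vref),
 * coerced into the int16 range [-32768, 32767]. No calibration applied. */
static int16_t volts_to_code(double volts, double vref) {
    double scaled = volts * 32768.0 / vref;
    /* coerce out-of-range requests first, e.g. +10 V -> 32767 (9.9997 V) */
    if (scaled > 32767.0) scaled = 32767.0;
    if (scaled < -32768.0) scaled = -32768.0;
    /* round to nearest, ties away from zero (tie rule is an assumption) */
    long code = (long)(scaled >= 0.0 ? scaled + 0.5 : scaled - 0.5);
    return (int16_t)code;
}

/* Voltage represented by a code: -vref + 2 * vref * n / 2^16 with n = code + 32768. */
static double code_to_volts(int16_t code, double vref) {
    return (double)code * vref / 32768.0;
}
```

With Vref = 10 V, code 32767 maps to about 9.99969 V, matching the 9.9997 V ceiling described for the PCI-6733.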

-

Is there a 16-bit file format that can be edited like a RAW file?

Can I export files from a RAW converter (and use that converter's color conversion/tone mapping) and still be able to edit the files as RAW files in another converter (let's say Lightroom)?

I tried exporting in TIFF format, tone-mapped with a medium-contrast tone curve. The problem I encounter is that the highlight/shadow recovery sliders do not work as effectively as I am used to when working with RAW files.

Or maybe it's an inherent limitation of working with non-linear 16-bit files? If so, would it help to export the file as a linear TIFF (so the highlight/shadow recovery sliders would work as they do with RAW files)?

Thank you!

I think I just got my answer:

http://www.magiclantern.FM/Forum/index.php?topic=15689.0

Here's the big takeaway from that thread, which actually quotes another Adobe forum post:

Our concept of ACR/LR is to make images for display or printing. When starting with scene-referred images (e.g., raw), this means tone and color mapping the linear-light input data (for example, 12-bit integer or 32-bit floating-point values) to values suited for reproduction (output-referred). Since the result is always intended to be output-referred, the resulting images are always stored using 8-bit or 16-bit values. We do not need to represent output-referred data using 32-bit values.

(ACR/LR is not intended to be a "pass-through" system that can, say, take 32-bit input image data and carry it all the way through to 32-bit output image data. It is possible to do so, although clumsily.)

It appears from that thread that you could actually use DaVinci Resolve to successfully create high dynamic range EXRs from raw data without losing any of the original data.

-

Can anything convert to ALAC at 24-bit or higher?

Personally, I would like to convert to at least 32-bit floating-point ALAC, but I'd settle for 24-bit integer. 16-bit is not enough. I heard that ALAC can support 384 kHz, 32-bit floating point, maybe even 64-bit floating point (IEEE), the way WAV files can.

Can't I export or convert to 32-bit ALAC at a minimum of 44.1 kHz and 256 kbps?

SteveG (AudioMasters), thanks for the recommendation of foobar2000, but it seems sketchy. In any case, I did a short study to determine which program provides the best-quality ALAC conversion, and along the way determined the best-quality (in my opinion) output formats:

Surprisingly (not really), iTunes is the best (free) ALAC converter, possibly surpassed by Logic Pro X, and nothing else comes close. It is possible to convert to 32-bit float ALAC at 192 kHz, and maybe even 384 kHz, but only if the source file is already 384 kHz. For 32-bit conversion, the source must be exported from Adobe Audition (CS6 or above, I think) as 32-bit integer; iTunes converts it to the floating-point format. With 44.1 kHz being more than sufficient, a 32-bit float 44.1 kHz ALAC is the best lossless compression Apple offers. Furthermore, I have determined that iTunes provides a perfect conversion of any recorded waveform, although iTunes ALAC conversions (and perhaps others) reduce peaks that exceed the threshold but otherwise convert perfectly; without zooming in on the threshold, you would never know there was a difference. This is recommended only for files that have reduced overall gain or that have already had dynamic range compression or hard limiting applied.

Better yet, Adobe Audition can export 32-bit floating-point (IEEE) FLAC files at the original sample rate (so you can keep 44.1 kHz) for a perfect conversion, and I mean perfect. I checked sections that were exact matches down to one hundred-millionth of a second.

Perfect lossless compression: minimum 44.1 kHz, 32-bit floating point (IEEE), FLAC

I edit in WAV format, export the WAV to Apple to convert to ALAC, export FLAC from the WAV, and delete all the WAVs; the FLAC files become my source. This routine is perfect for me.

I can share the pages of notes from my study over the past two weeks, with all the data and conclusions I have collected, with anyone.
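The exact-match check described above can be made systematic by comparing the decoded samples directly. A minimal C sketch, assuming both files have already been decoded to float buffers with the same sample rate, channel layout, and alignment (decoding is not shown); `compare_samples` is a hypothetical helper.

```c
#include <stddef.h>

/* Compare two decoded sample buffers of length n.
 * Returns how many samples differ and stores the largest absolute difference in *max_diff. */
static size_t compare_samples(const float *a, const float *b, size_t n, float *max_diff) {
    size_t mismatches = 0;
    float worst = 0.0f;
    for (size_t i = 0; i < n; ++i) {
        float d = (a[i] > b[i]) ? a[i] - b[i] : b[i] - a[i];
        if (d != 0.0f)
            ++mismatches;
        if (d > worst)
            worst = d;
    }
    *max_diff = worst;
    return mismatches;
}
```

A result of zero mismatches over the whole file is a stronger statement than matching selected sections, and a nonzero maximum difference shows where peaks were altered.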
