9-bit signed temperature

Hello

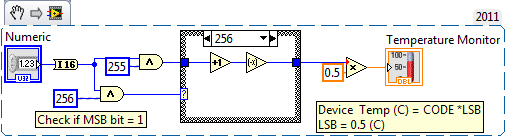

I tried to take the 9-bit two's complement to determine the sign bit. I wrote it as text-based code and it worked all right; however, when I tried to convert it to LabVIEW, the digital thermometer never shows a negative temperature. Could someone help me look through this code? Here is the text-based code.

if ((tempdata & 256) == 256)

TempData = (((tempdata & 255) ^ 255) + 1) * -1;

else

TempData = tempdata;

I used the 'Playing field' module to read 16 bits of temperature data, but I am only interested in bits 7-15. The 'Playing field' module takes care of extracting those 9 bits. The data it returns is a U32 numeric, so I used the returned data to determine whether the MSB is equal to one.

When you perform a bitwise AND, do not forget that the result keeps the position of the bit you masked: (tempdata & 256) is either 0 or 256, never 1. Your case '1' is therefore not valid. It must be "256".

This should be the result you're looking for.
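For reference, here is a minimal text-based sketch of the same check in Python (LabVIEW itself is graphical, so this is only an analogue). The function name `sign_extend_9bit` is hypothetical, and the extra mask with `0x1FF` assumes the raw value carries nothing useful above bit 8.

```python
def sign_extend_9bit(raw):
    """Interpret the low 9 bits of raw as a two's-complement number."""
    raw &= 0x1FF                      # keep bits 0..8 only (assumption: upper bits are unused)
    if (raw & 256) == 256:            # the masked sign bit is 256, never 1
        # Same arithmetic as the text-based code in the question
        return -(((raw & 255) ^ 255) + 1)
    return raw
```

With this sketch, `0x1FF` decodes to -1 and `0x100` to -256, while values below 256 pass through unchanged.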

Tags: NI Software

Similar Questions

-

Do not understand why the FPGA node "Audio IN" terminal is a 16-bit signed integer

Hello

I am working with a myRIO 1900 for my ANC project.

The Audio IN terminal of the FPGA node has a signed 16-bit integer data type. As a result, the output of the FPGA node fluctuates between two values, -1 and 1. But I want the actual values of the audio data, and I do not understand how to address this problem.

Audio In on the RT side returns real (floating-point) values, but I do not understand why the Audio In terminal on the FPGA is a 16-bit integer.

Please help me solve this problem.

Thank you.

If your analog range is +/-2.5 V, then -32768 would be the equivalent of -2.5 V, and 32767 would be +2.5 V.

If you apply +/-1 V, then you should see somewhere around +/-13107 as the I16 number on the analog input.

Basically, take the I16 number, divide by 32767, and multiply by 2.5. You will have your analog input in volts.

I don't know why you think it is just rounding to -1 and +1. Something must be wrong with your code or configuration.
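As a quick illustration of that scaling, here is a small Python sketch. The +/-2.5 V range is taken from the reply above as an assumption about the hardware, and the names are hypothetical.

```python
FULL_SCALE_VOLTS = 2.5   # assumed +/-2.5 V input range
I16_MAX = 32767          # largest positive I16 code

def i16_to_volts(counts):
    """Scale a raw signed 16-bit reading to volts."""
    return counts / I16_MAX * FULL_SCALE_VOLTS
```

A reading of 13107 counts comes out at about 1 V, which matches the figure quoted above.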

-

Hello

I need to convert a 16-bit value to a signed decimal integer.

For example, a low byte of xCC and a high byte of xFF, which is -52 in decimal.

The string coming from the instrument is xFFCC. What can I use in LabVIEW?

LabVIEW 6.1

Windows XP

In my view, a Type Cast of the string to I16 should solve it.
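To show what such a cast does to the two bytes, here is a text-based analogue in Python. It assumes the high byte arrives first, as in xFFCC; the function name is hypothetical.

```python
import struct

def bytes_to_i16(data):
    """Reinterpret two bytes (high byte first) as a signed 16-bit integer."""
    (value,) = struct.unpack(">h", data)   # ">h" = big-endian signed 16-bit
    return value
```

Calling `bytes_to_i16(b"\xff\xcc")` gives -52, the value from the question.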

-

Manipulating the bytes in a byte array and displaying them as a 16-bit signed int in a waveform chart

Hello, I read in two bytes using a VISA Read, and the output is a string. However, I want to be able to access these bytes individually, because I need to shift the first byte left 8 places, add it to the second byte, and then shift their sum left 4 places, which gives me a 16-bit signed int. Then I need to show this in a waveform chart. Can anyone help please?
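The arithmetic described in this question can be sketched in Python as follows. This is only an illustration of the shifts, not a LabVIEW solution; the function name is hypothetical, and truncating the result to 16 bits is an assumption about what "16-bit signed int" means here.

```python
def combine_bytes(first, second):
    """Shift first left 8, add second, shift the sum left 4, read as signed 16-bit."""
    word = (((first << 8) + second) << 4) & 0xFFFF   # keep 16 bits after the shifts
    return word - 0x10000 if word & 0x8000 else word

# Indexing a bytes object yields the individual byte values
first, second = b"\x07\xff"
sample = combine_bytes(first, second)
```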

-

Signed 32-bit Modbus data members

I need to read and write data in 32-bit signed integer format in Modbus address members.

In my Modbus device, the format is, for example, low word at 44097 and high word at 44098.

For example, if the value at address 44097 is 1 and the value at 44098 is 0, the value of SD44097 should be 1:

(1) + (2 ^ 16 x 0) = (1) + (65536 x 0) = 1

but Lookout interprets these data as (2 ^ 16 x 1) + (0) = (65536 x 1) + (0) = 65536

In the advanced options of the Modbus object, you can check the Modicon option for variable values, but I can't find a similar parameter for signed double-precision integers, and I haven't found any solution.

Anyone know a solution?

Thank you

If you use Lookout 6.1, follow this KB to create a modbus.ini file.

http://digital.NI.com/public.nsf/allkb/2E64D5CF87CA6A1086256BB30070DC1A?OpenDocument

Add the entry

ReverseByteOrder = 1

SD will then work as you want.

But if you are using version 6.0 or lower, I don't know a way to do it. I don't know whether F works. You can use an expression to calculate the value.
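The calculation such an expression has to perform is shown below in Python, purely as an illustration (this is not Lookout expression syntax, and the function name is hypothetical). It follows the word order from the question: low word at the first address, high word at the next.

```python
def combine_low_word_first(low, high):
    """Build a signed 32-bit value from two 16-bit registers, low word first."""
    raw = low + (high << 16)          # low + 2^16 * high, as in the question
    if raw & 0x80000000:              # bit 31 set means the value is negative
        raw -= 1 << 32
    return raw
```

With 44097 = 1 and 44098 = 0 this returns 1; swapping the two arguments reproduces the 65536 that Lookout reports.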

-

How do I import a 16-bit grayscale image into LabVIEW without IMAQ?

Hello

I am writing a simple imaging demonstration for the IR-160 infrared imaging camera of Solutions. I do not have IMAQ and, unfortunately, there are no funds for it at this stage. (If the demo is successful, funds may become available.) I have LV 8.5.1, Full edition.

The camera creates 16-bit grayscale images, but the image format is not well documented. I know that the image is 160 pixels wide x 120 pixels high, that gray values are stored as plain bytes (not ASCII), and that the data format is 16-bit signed words, most significant byte first.

I attach two sample images (8 bits and 16 bits) and two test VIs.

I am able to read the image, but instead of shades of gray, everything is blue, and there are artifacts in the upper part of the image.

The camera also creates 8-bit images in PGM format, but I have the same problems there. (The PGM image opens fine in, for example, Corel Paint Shop Pro.)

What should I do so that the image appears in grayscale, and then save it as a bitmap?

Thank you for your help.

Peter
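To make the described data layout concrete, here is a rough Python sketch that decodes such a frame and stretches it to 8-bit gray levels. It assumes the file holds only the 160 x 120 pixel words with no header, which the post does not confirm; the function names are hypothetical.

```python
import struct

def decode_frame(raw, width=160, height=120):
    """Unpack big-endian signed 16-bit pixels into a list of rows."""
    count = width * height
    pixels = struct.unpack(f">{count}h", raw[:count * 2])   # ">h" = MSB first, signed
    return [list(pixels[r * width:(r + 1) * width]) for r in range(height)]

def to_gray8(rows):
    """Stretch the pixel range linearly to 0..255."""
    lo = min(min(row) for row in rows)
    hi = max(max(row) for row in rows)
    span = max(hi - lo, 1)            # avoid dividing by zero on a flat image
    return [[(v - lo) * 255 // span for v in row] for row in rows]
```

For a gray display, each 8-bit level would then be used for red, green, and blue alike.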

-

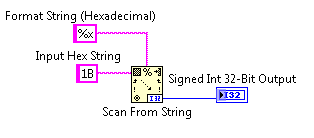

Convert a hexadecimal string to a signed int

Hey,

I was wondering if there is a way to convert a string to an integer. Here's the problem: the string actually contains hexadecimal characters, so in normal display mode I see my hexadecimal characters. I've attached a screenshot of my string indicator in normal display. What I want to do is take these 4 characters and turn them into a 32-bit signed integer. Thoughts?

You can do it with the Scan From String function:

This would output "27".
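For comparison, the same conversion written as text code in Python. This is not the LabVIEW function, only the equivalent arithmetic; the input "1B" is a made-up example that happens to give 27, and the function name is hypothetical.

```python
def hex_to_signed(text, bits=32):
    """Parse hexadecimal text and interpret it as a two's-complement integer."""
    raw = int(text, 16)
    if raw >= 1 << (bits - 1):        # top bit set: wrap into the negative range
        raw -= 1 << bits
    return raw
```

Note that four hex characters only fill 16 bits, so they come out negative only if `bits=16` is passed.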

-

16-bit to 8-bit binary conversion

I'm having a little trouble making a LabVIEW VI for this process, where var1send and var2send are 16-bit signed integers

int8_t var1low = var1send & 0xff;

int8_t var1high = (var1send >> 8);

int8_t var2low = var2send & 0xff;

int8_t var2high = (var2send >> 8);

Any help would be great!
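The byte split in the C fragment above can be mirrored in Python like this. It is a sketch with a hypothetical function name, and it returns the bytes as unsigned values 0..255 rather than as `int8_t`.

```python
def split_i16(value):
    """Return (low byte, high byte) of a signed 16-bit integer."""
    raw = value & 0xFFFF              # two's-complement bit pattern of the I16
    return raw & 0xFF, (raw >> 8) & 0xFF
```

For example, -52 splits into 0xCC (low) and 0xFF (high).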

-

32-bit integer transferred over GPIB

Hello

I am currently writing data acquisition and control software in LabVIEW for an Instron testing machine on a GPIB bus. The machine is not the youngest, so I cannot use the 488 commands, nor the VISA functions. My control software works so far, but with the data acquisition I have run into some problems for which I have not yet found a solution. I only taught myself LabVIEW two months ago, so sorry if my questions seem silly to you. I searched the forum and the internet and could not find an answer.

So here's my problem:

I read data from my machine with the "GPIB Read" command. It returns a string, so I guess it automatically assumes that the data is ASCII-encoded. However, the machine uses its own encoding, described in its manual. I read the header of the measurement (it is '#I' in ASCII); after that there is a signed 32-bit integer that represents the length of my data block. Then there is a 16-bit byte, followed by a lot of 32-bit signed bytes that represent my measurement data. There are no separators.

When I read it out, I just get a string of crazy ASCII characters (apart from the "#I" at the beginning).

My approach to decoding the message is this:

Convert the ASCII code into binary code (not with LabVIEW; I couldn't figure out how that works there. Is there an easy way to do this?), then ignore the first 64 bits (2 x 8 bits of header + 32 bits of length + the 16-bit value), and then try to divide the string into an array of 32-bit-long strings and see whether the data is good. In my test I had three values that were constant, so it should be just three alternating values. I always get the wrong data, and above all I am not doing this in LabVIEW yet, but later I have to, because I want to view the data in real time.

That is a lot of work, and I just wanted to know whether I'm on the right track or whether there is a simpler solution. Later, it shouldn't be a problem to display an array of integers as a waveform, right? Can you give me any advice?

Sincerely,

Simon

- VISA calls 488, so I don't see why you can't use VISA.

- A byte is 8 bits. There is therefore no such thing as a 16-bit byte. It's a word.

- Unflatten From String is your friend here. You can use it to decode every part of your message. And since your actual data is just a string of 32-bit words, you can use Unflatten From String to turn that into an array directly; just make sure "data includes array size" is set to false.
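As a text-based analogue of that decoding, here is a Python sketch using `struct`. The field order follows the question ('#I', a 32-bit length, a 16-bit word, then 32-bit samples); big-endian byte order is an assumption that would need checking against the machine's manual, and the function name is hypothetical.

```python
import struct

def parse_block(message):
    """Split a raw '#I' message into (length field, 16-bit word, samples)."""
    if message[:2] != b"#I":
        raise ValueError("missing '#I' header")
    # Assumed big-endian: signed 32-bit length, then unsigned 16-bit word
    length, word = struct.unpack_from(">iH", message, 2)
    payload = message[8:]                     # skip the first 64 bits
    count = len(payload) // 4                 # number of whole 32-bit samples
    values = struct.unpack_from(f">{count}i", payload)
    return length, word, list(values)
```

No ASCII-to-binary conversion is needed: the received string already is the binary data, it only has to be reinterpreted.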

-

How to read unsigned 16-bit data from IMAQdx?

I was using my FireWire camera to take pictures in unsigned 16-bit grayscale and processing the data in MATLAB. However, the data becomes incorrect when the integration time of the camera is long. I discovered that the reasons are: 1, IMAQdx decodes the 16-bit monochrome data as signed 16-bit, which returns a negative value if the amplitude of a pixel is greater than 32767. 2, IMAQdx automatically adds a constant, equal to the magnitude of the most negative pixel value, to all the pixel values in order to shift the smallest value to zero, but this process ruins the rest of my data and makes the damage almost irreversible. I think the only way to solve this problem is to have LabVIEW read the image as unsigned data, but I don't know if that is possible, because it is not listed as an option in the IMAQdx manual.

Any suggestion will be appreciated.

Hi Hosni,

FireWire (especially earlier on) had some ambiguities about the endianness, signedness, and depth of the pixel data that is returned. IMAQdx tries to make the best guess for 16-bit data using specific registers defined by IIDC and other information it can deduce about the camera. However, sometimes these assumptions are incorrect. If you go to the Acquisition Attributes tab of your camera in MAX, you should be able to override all of these parameters to match how the camera expects its data to be interpreted.

Eric
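To illustrate the signed/unsigned mix-up described in this thread, the sketch below (Python, hypothetical function name) maps a value that was decoded as I16 back to the U16 the camera sent. It only undoes the sign interpretation; it cannot undo a constant offset added afterwards.

```python
def i16_to_u16(value):
    """Reinterpret a signed 16-bit value as the unsigned value with the same bits."""
    return value & 0xFFFF
```

A pixel of 40000 read as signed shows up as -25536, and `i16_to_u16(-25536)` gives 40000 again.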

-

Why is the iteration count a signed integer and not an unsigned integer?

I was wondering if there is a reason for keeping the iteration count of a loop as a 32-bit signed integer variable/indicator.

It will never take a negative value... so why signed? Unsigned could serve the purpose.

Bloop Shah wrote:

but wouldn't it be better to introduce NaN for integers rather than dedicate a whole bit just for a -1?...

Who uses negative numbers, and where?

Or is there something more to it than I understand?

Integers use every bit, which means that every single bit combination is a real value. It must be this way to fully support all math operations. For example, if you take the difference between two U32 tick counts, you get the correct elapsed time (as long as it is shorter than 2^32 ms), even if the counter wrapped around between the two readings.

Because of the way floating-point numbers are represented (mantissa, exponent, sign), some unusual bit combinations can be given a special meaning (NaN, -Inf, +Inf, etc.). This is not possible for integers.
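The tick-count example can be checked with a few lines of Python. Python integers do not wrap on their own, so the sketch masks to 32 bits to imitate U32 arithmetic; the function name is hypothetical.

```python
def elapsed_ms(start_tick, end_tick):
    """Difference of two U32 tick counts, modulo 2^32."""
    return (end_tick - start_tick) & 0xFFFFFFFF

# The counter wrapped between the two readings
wrapped = elapsed_ms(0xFFFFFFF0, 0x00000010)
```

Here `wrapped` is 32, the true elapsed time, even though the second reading is numerically smaller than the first.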

-

Hello

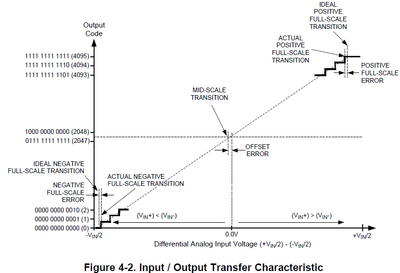

For my application, I need to integrate the 5772-01 analog input over time, so I need to extend the 5772 input to 32 bits before summing. My attempt to implement the sum is clearly incorrect, and I think it's because I do not properly understand the 5772 data format.

Page 19 of the 5772 manual states that the digital data resolution is 12 bits, signed, binary data, accessible using a left-justified I16 data type.

Page 32 of the ADC12D800RF data sheet shows the output code versus the analog input voltage:

They are not lining up in my mind, I guess because of the CLIP between the two? Can someone help me understand how to extend the 5772-01 input to 32 bits and sum it? How do I convert the I16 to a voltage? Thanks in advance for any help.

-Steve K
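One way to read "12 bits, signed, left-justified in an I16" is that the 12-bit code sits in the upper bits and the low 4 bits are padding. Under that assumption (not confirmed anywhere in this thread), the sample can be recovered with an arithmetic shift and summed in a wider integer. A Python sketch with hypothetical names:

```python
def left_justified_to_12bit(sample_i16):
    """Drop the 4 padding bits; Python's >> keeps the sign of negative values."""
    return sample_i16 >> 4

def integrate(samples_i16):
    """Sum the 12-bit codes in a wide accumulator."""
    total = 0
    for sample in samples_i16:
        total += left_justified_to_12bit(sample)
    return total
```

The voltage scaling is left out on purpose, since it depends on the input range given in the manual and data sheet.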

-

Block exponent signal - Xilinx FFT v7.1 - FlexRIO - FPGA

I use the Xilinx FFT v7.1 IP (FPGA - NI 7965R, LabVIEW 2012). I am computing the FFT of real 16-bit signed integers.

Is the 5-bit block exponent signal of the block floating-point FFT in the Xilinx FFT v7.1 module signed or unsigned? The PDF document talks about shifting the output data to the right to use the dynamic range; it does not mention shifting to the left, nor does it give sufficient detail on this subject.

Is there a bias value, as in the IEEE floating-point format, that I should use to find the correct output value?

I guess that it is unsigned unless you have evidence to the contrary. The basic behavior is to keep bits on the left in order to prevent any overflow, so the scaling only ever shifts to the right, and only as far as needed when some of the most significant bits are unused. They provide an example of b00101 = 5, which indicates there is no bias to apply.
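If the block exponent is indeed an unsigned count of right shifts with no bias, as guessed above, the true magnitude of an output sample would be recovered by multiplying by 2 to that power. A small Python sketch of that assumption (function name hypothetical):

```python
def apply_block_exponent(sample, blk_exp):
    """Undo blk_exp right shifts by scaling the output sample back up."""
    return sample * (1 << blk_exp)
```

With the example exponent b00101 = 5, an output sample of 3 would correspond to 96.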

-

I have LabVIEW code and a C DLL.

The C DLL takes a large array of UINT16 values and decodes it into a meaningful form. The resulting data is stored in a static structure inside the DLL. The structure consists of integers, floats, strings, and arrays.

The DLL also has a function to return the elements of the structure. Each structure element is returned as a separate parameter. Some of them are arrays, for example:

void GetMyData (..., unsigned char * myArray [],...);

I know how big 'myArray' is.

In LabVIEW, I have a "Call Library Function Node" for the above function. For the arrays, I used "Numeric, signed 32-bit integer, Pointer to Value". The "address" returned by this call is passed to a 'LabVIEW:MoveBlock' call as described in the examples on this forum: pre-allocating an array of the correct size and then copying the bytes from the 'address'.

If I just run my LabVIEW VI, it crashes. If I step through it, however (stepping over the DLL Call Library Function Nodes), I get the data from the DLL as expected. However, if I then close LabVIEW, it crashes.

Is MoveBlock safe to use? Is there another way to get array data from a DLL?

Thank you.

Looks like you have the Call Library Function Node set up correctly, but do you initialize the array you're passing in? You need to initialize an array of sufficient size, or set the minimum array size in the Call Library Function Node so LabVIEW can do it for you. If you pass an array that is too short, you will probably get a crash, since your DLL will attempt to write to that memory location and overwrite something else.

You should also initialize any string that you pass to your DLL if you expect the DLL to fill it.

It may also be useful to set the error checking level of the Call Library Function Node to Maximum for debugging purposes; this can help catch some memory problems.
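The "pre-allocate, then copy" rule is the same in any language that copies from a raw address. The Python `ctypes` sketch below is only an analogy for MoveBlock, not LabVIEW code: the source array stands in for memory owned by the DLL, and the function name is hypothetical.

```python
import ctypes

def copy_from_address(address, size):
    """Copy size bytes from a raw address into a buffer allocated beforehand."""
    dest = ctypes.create_string_buffer(size)   # destination must already be big enough
    ctypes.memmove(dest, address, size)        # copies exactly size bytes, no bounds check
    return dest.raw

# Stand-in for an array living inside the DLL
source = (ctypes.c_ubyte * 4)(1, 2, 3, 4)
copied = copy_from_address(ctypes.addressof(source), ctypes.sizeof(source))
```

If `size` were larger than the destination buffer, the copy would write past its end, which is the kind of overwrite that leads to crashes like the ones described.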

-

MODBUS U16 to single, and back again

Hello everyone, I am working with a couple of Kollmorgen drives that send and receive data via MODBUS. Using the LabVIEW user library, I had no problem reading and writing data (as long as I do not do so more often than 10 times per second). However, I am having some problems with the data format. Kollmorgen has not exactly followed the standard, so some values are 32-bit registers, some are 64-bit and, to boot, some are signed and some are not; they are all, however, delivered in 16-bit segments by the 'Read Holding Registers' function. Right now I'm only interested in the 32-bit signed variety.

While I am able to read, and I am sure I am reading the values I want (I can change the value in Kollmorgen Workbench and LabVIEW reflects the change), the output does not make much sense. For positive values, it is fairly intuitive: the first U16 (I read an array of 2 U16 values) is always zero, and the other is 1000 x the actual positive value. So if I read, for example, a current of 3.5 amps, the first U16 is zero and the second is 3500; if I read 1.23 amps, they are zero and 1230, and so on.

However, when reading negative values, I fail to see a pattern: both registers almost always go to a pretty high value (65 324 or something), even for numbers as small as -2. I have already tried several of the data manipulation methods listed here, including:

-Type casting the 2-element U16 array, then joining it back via Join Numbers

-Converting the number to a binary array, then back to a number

-Coercing to single, then converting to I32 and dividing

So far, no luck. Has anyone had a similar experience or worked with these drives in the past? I also can't find much information in the Kollmorgen documentation; their website states that the MSB is sent as the first register for 64-bit values. No doubt the positive data format gives a clue, but so far I've had no luck guessing it.

Convert the U16 array to an I32. Divide by 1000.

In the opposite direction: take your floating-point number, multiply by 1000, coerce to an I32, and convert to an array of U16.

EDIT: Don't mess with fixed point. That is for very special cases, usually involving FPGA.
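Written out as text code, the two conversions look roughly like this in Python. The sketch assumes the high word is the first register (consistent with the first U16 being zero for small positive values) and a scale factor of 1000; the function names are hypothetical.

```python
SCALE = 1000   # register value = 1000 x physical value, per the question

def registers_to_value(high, low):
    """Join two U16 registers into a signed 32-bit integer, then unscale."""
    raw = (high << 16) | low
    if raw & 0x80000000:              # sign bit set: negative in two's complement
        raw -= 1 << 32
    return raw / SCALE

def value_to_registers(value):
    """Scale, wrap to 32 bits, and split into (high, low) U16 registers."""
    raw = round(value * SCALE) & 0xFFFFFFFF
    return (raw >> 16) & 0xFFFF, raw & 0xFFFF
```

This also explains the large numbers seen for negative readings: -2 A becomes -2000, whose two's-complement words are 65535 and 63536.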
