Bad time stamp difference. Why?

Hi all

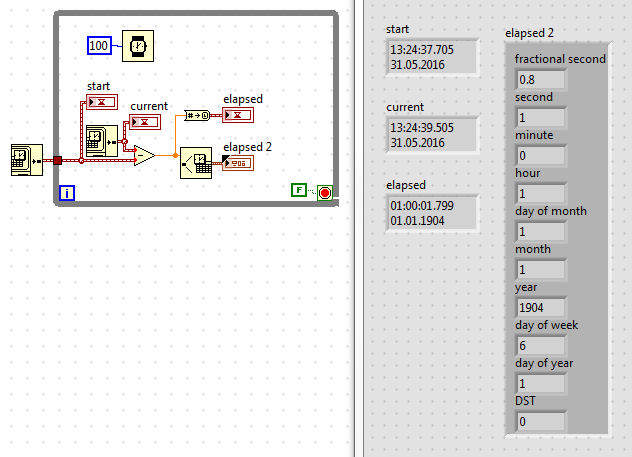

I am seeing very counterintuitive behavior when subtracting one time stamp from another. Can you tell me why 'hour' shows '1' where obviously '0' would be correct?

Subtract the two time stamps as you are doing now and wire the result to a digital indicator. Set the display format of this indicator to Relative Time. There is a choice for hours, minutes and seconds that corresponds to `%<%H:%M:%S>t` in the Advanced format string. If you also want to display days, use `%<%D:%H:%M:%S>t`. There is no need to mess with strings.
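The thread does not spell out why the hour reads 1. One common cause, assumed here because the screenshot is not reproduced, is that the difference is displayed as an absolute time, so the display adds the local UTC offset (for example UTC+1) to what is really a duration. The Python sketch below contrasts the two interpretations; `format_relative` and `format_as_absolute` are hypothetical names, and the only LabVIEW fact it relies on is that LabVIEW time stamps count from 1904-01-01 00:00 UTC.

```python
from datetime import datetime, timedelta, timezone

LV_EPOCH = datetime(1904, 1, 1, tzinfo=timezone.utc)  # LabVIEW's time-stamp epoch


def format_relative(delta: timedelta) -> str:
    """Show a duration as HH:MM:SS, the way a relative-time display does."""
    total = int(delta.total_seconds())
    sign = "-" if total < 0 else ""
    hours, rest = divmod(abs(total), 3600)
    minutes, seconds = divmod(rest, 60)
    return f"{sign}{hours:02d}:{minutes:02d}:{seconds:02d}"


def format_as_absolute(delta: timedelta, utc_offset_hours: int) -> str:
    """Treat the duration as a moment after the epoch and show it as local clock time."""
    local_zone = timezone(timedelta(hours=utc_offset_hours))
    return (LV_EPOCH + delta).astimezone(local_zone).strftime("%H:%M:%S")
```

For a 25 min 30 s difference, `format_relative` gives `00:25:30`, while `format_as_absolute(..., 1)` gives `01:25:30`: the extra hour is the time zone, not part of the difference.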

Tags: NI Software

Similar Questions

-

Overloading a DATE function with a TIMESTAMP version to avoid "too many declarations"

Originally I had just one function, with VARCHAR2 arguments. It did not work correctly, because when dates were passed in, the automatic conversion to VARCHAR2 dropped the time portion. So I added a second function with DATE arguments. Then I started getting a "too many declarations of is_same match this call" error when passing timestamps. This made no sense to me, so, even though the Oracle documentation says you can't do this, I created a third version of the function to handle TIMESTAMPs explicitly. Surprisingly, it works fine. But then I noticed that it did not work with TIMESTAMP WITH TIME ZONE, hence the fourth version of the function. The Oracle docs say that you can't overload a function if the arguments are in the same family, but as the example below shows, that is quite wrong.

```sql
CREATE OR REPLACE PACKAGE util AS
  FUNCTION yn (bool IN BOOLEAN) RETURN CHAR;
  FUNCTION is_same(a varchar2, b varchar2) RETURN BOOLEAN;
  FUNCTION is_same(a date, b date) RETURN BOOLEAN;
  /* Oracle's documentation says that you cannot overload subprograms
   * that have the same type family for the arguments. But,
   * apparently timestamp and date are in different type families,
   * even though Oracle's documentation says they are in the same one.
   * If we don't create a specific overloaded function for timestamp,
   * and for timestamp with time zone, we get "too many declarations
   * of is_same match" when we try to call is_same for timestamps.
   */
  FUNCTION is_same(a timestamp, b timestamp) RETURN BOOLEAN;
  FUNCTION is_same(a timestamp with time zone, b timestamp with time zone) RETURN BOOLEAN;
  /* These two do indeed cause problems, although there are no errors when we
   * compile the package. Why no errors here?
   */
  FUNCTION is_same(a integer, b integer) return boolean;
  FUNCTION is_same(a real, b real) return boolean;
END util;
/

CREATE OR REPLACE PACKAGE BODY util AS
  /********************************************************************************
   NAME: yn
   PURPOSE: pass in a boolean, get back a Y or N
  ********************************************************************************/
  FUNCTION yn (bool IN BOOLEAN) RETURN CHAR IS
  BEGIN
    IF bool THEN
      RETURN 'Y';
    END IF;
    RETURN 'N';
  END yn;

  /********************************************************************************
   NAME: is_same
   PURPOSE: pass in two values, get back a boolean indicating whether they are
            the same. Two nulls = true with this function.
  ********************************************************************************/
  FUNCTION is_same(a in varchar2, b in varchar2) RETURN BOOLEAN IS
    bool boolean := false;
  BEGIN
    IF a IS NULL and b IS NULL THEN
      bool := true;
    -- explicitly set this to false if exactly one arg is null
    ELSIF a is NULL or b IS NULL then
      bool := false;
    ELSE
      bool := a = b;
    END IF;
    RETURN bool;
  END is_same;

  FUNCTION is_same(a in date, b in date) RETURN BOOLEAN IS
    bool boolean := false;
  BEGIN
    IF a IS NULL and b IS NULL THEN
      bool := true;
    -- explicitly set this to false if exactly one arg is null
    ELSIF a is NULL or b IS NULL then
      bool := false;
    ELSE
      bool := a = b;
    END IF;
    RETURN bool;
  END is_same;

  FUNCTION is_same(a in timestamp, b in timestamp) RETURN BOOLEAN IS
    bool boolean := false;
  BEGIN
    IF a IS NULL and b IS NULL THEN
      bool := true;
    -- explicitly set this to false if exactly one arg is null
    ELSIF a is NULL or b IS NULL then
      bool := false;
    ELSE
      bool := a = b;
    END IF;
    RETURN bool;
  END is_same;

  FUNCTION is_same(a in timestamp with time zone, b in timestamp with time zone) RETURN BOOLEAN IS
    bool boolean := false;
  BEGIN
    IF a IS NULL and b IS NULL THEN
      bool := true;
    -- explicitly set this to false if exactly one arg is null
    ELSIF a is NULL or b IS NULL then
      bool := false;
    ELSE
      bool := a = b;
    END IF;
    RETURN bool;
  END is_same;

  /* Don't bother to fully implement these two, as they'll just cause errors at run time anyway */
  FUNCTION is_same(a integer, b integer) return boolean is begin return false; end;
  FUNCTION is_same(a real, b real) return boolean is begin return false; end;
END util;
/

declare
  d1 date := timestamp '2011-02-15 13:14:15';
  d2 date;
  t timestamp := timestamp '2011-02-15 13:14:15';
  t2 timestamp;
  a varchar2(10);
  n real := 1;
  n2 real;
begin
  dbms_output.put_line('dates');
  dbms_output.put_line(util.yn(util.is_same(d2,d2) ));
  dbms_output.put_line(util.yn(util.is_same(d1,d2) ));
  dbms_output.put_line('timestamps');
  -- why don't these throw exception?
  dbms_output.put_line(util.yn(util.is_same(t2,t2) ));
  dbms_output.put_line(util.yn(util.is_same(t,t2) ));
  dbms_output.put_line('varchars');
  dbms_output.put_line(util.yn(util.is_same(a,a)));
  dbms_output.put_line(util.yn(util.is_same(a,'a')));
  dbms_output.put_line('numbers');
  -- dbms_output.put_line(util.yn(util.is_same(n,n2))); -- this would throw an exception
end;
/
```

Finally, just for grins, I created two number versions of the function, one with INTEGER and the other with REAL, and even these are allowed: they compile. But then, at run time, the call fails. I'm really confused.

Here is the apparently erroneous Oracle documentation on this subject: http://docs.oracle.com/cd/B12037_01/appdev.101/b10807/08_subs.htm (see "Overloading Subprogram Names"), and here are the different types and their families: http://docs.oracle.com/cd/E11882_01/appdev.112/e17126/predefined.htm.

Edited by: hot water on 9 January 2013 15:38

Edited by: hot water on 9 January 2013 15:46

> So, I added a 2nd function with DATE arguments. Then I started getting a "too many declarations of is_same match" error when passing timestamps. It makes no sense to me

This is because when you pass a TIMESTAMP, Oracle cannot determine whether to implicitly convert it to VARCHAR2 and use your first function, or implicitly convert it to DATE and use your second function. Hence the "too many declarations" error.

> even though the Oracle documentation says you can't do it, I created a 3rd version of the function to handle TIMESTAMPs explicitly. Surprisingly, it works fine. But then I noticed that it did not work with TIMESTAMP WITH TIME ZONE.

Perhaps because of another "too many declarations" error? Now there are THREE possible implicit conversions that could be made.

> Hence the fourth version of the function. The Oracle docs say that if your arguments are in the same family, you can't create an overloaded function, but as the example above shows, that is quite wrong.

I think the documentation is wrong about the 'date' family, as you suggest. For INTEGER and REAL, the problem is that those are ANSI data types that really map to the same Oracle data type; they are more like aliases than different data types. See the SQL Language Reference:

> ANSI, DB2, and SQL/DS data types
>
> SQL statements that create tables and clusters can also use ANSI data types and data types from the IBM products SQL/DS and DB2. Oracle recognizes the ANSI or IBM data type name that differs from the Oracle Database data type name. It converts the data type to the equivalent Oracle data type, records the Oracle data type as the name of the column data type, and stores the column data in the Oracle data type based on the conversions shown in the following table.

| ANSI SQL data type | Oracle data type |
| --- | --- |
| INTEGER, INT, SMALLINT | NUMBER(38) |
| FLOAT (Note b), DOUBLE PRECISION (Note c) | FLOAT(126) |
| REAL (Note d) | FLOAT(63) |
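The answer's reasoning can be illustrated with a toy model of overload resolution. This is a deliberately simplified Python sketch, not Oracle's actual algorithm: the implicit-conversion table and the INTEGER/REAL alias map are assumptions chosen to mirror the behavior reported in this thread. In the model, a call resolves only if exactly one overload matches at the best tier (exact type first, then implicit conversion).

```python
from typing import Dict, List, Set

# Assumed, simplified implicit conversions: argument type -> parameter types it can become.
IMPLICIT_CONVERSIONS: Dict[str, Set[str]] = {
    "TIMESTAMP": {"VARCHAR2", "DATE", "TIMESTAMP WITH TIME ZONE"},
    "TIMESTAMP WITH TIME ZONE": {"VARCHAR2", "DATE", "TIMESTAMP"},
    "DATE": {"VARCHAR2", "TIMESTAMP", "TIMESTAMP WITH TIME ZONE"},
    "NUMBER": {"VARCHAR2"},
}

# ANSI names that collapse onto one Oracle base type (see the ANSI mapping table above).
ALIASES: Dict[str, str] = {"INTEGER": "NUMBER", "REAL": "NUMBER"}


def base_type(name: str) -> str:
    """Map an ANSI alias to the Oracle type it really is."""
    return ALIASES.get(name, name)


def matching_overloads(overloads: List[str], arg_type: str) -> List[str]:
    """Overloads that match at the best tier: exact base type, else implicit conversion."""
    arg = base_type(arg_type)
    exact = [o for o in overloads if base_type(o) == arg]
    if exact:
        return exact
    targets = IMPLICIT_CONVERSIONS.get(arg, set())
    return [o for o in overloads if base_type(o) in targets]


def resolve(overloads: List[str], arg_type: str) -> str:
    """Return the single overload chosen, or raise if none or several match."""
    matches = matching_overloads(overloads, arg_type)
    if len(matches) == 1:
        return matches[0]
    if not matches:
        raise LookupError(f"no declaration of is_same accepts {arg_type}")
    raise LookupError(f"too many declarations of is_same match {arg_type}: {matches}")
```

With only the VARCHAR2 and DATE versions, a TIMESTAMP argument matches both and is ambiguous; adding an exact TIMESTAMP overload resolves it, while INTEGER and REAL both collapse to NUMBER, so every numeric call stays ambiguous. The model covers call resolution only, not why the package compiles.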

-

Hi guys

I have a table in which a date column is stored as VARCHAR2(35).

It stores the time down to milliseconds. Selecting transaction_date from the table gives values like:

09/09/2009 12:43:04:651

Now I want to convert this column from VARCHAR2 to the TIMESTAMP data type, keeping the existing data intact and storing new data as TIMESTAMP from now on.

I don't know why the previous developers used VARCHAR2 instead of TIMESTAMP.

Our production server is in the United States, while the data is entered from India, where the business also is. So they used the VARCHAR2 data type to store the milliseconds, and a procedure to get the time in IST.

Can I now change the column to TIMESTAMP without affecting the data? I think it is really needed; it is not good practice to store dates in VARCHAR2.

The procedure I know for changing the data type is as follows:

1. `CREATE TABLE smsrec_dup AS SELECT ROWID row_id, transaction_date FROM sms_rec;`

2. `UPDATE sms_rec SET transaction_date = NULL;`

3. `COMMIT;`

4. `ALTER TABLE sms_rec MODIFY transaction_date TIMESTAMP(3);`

5. `UPDATE sms_rec b SET transaction_date = (SELECT TO_TIMESTAMP(a.transaction_date, 'DD/MM/YYYY HH24:MI:SS:FF3') FROM smsrec_dup a WHERE b.ROWID = a.row_id);`

6. `COMMIT;`

These steps change the column to the TIMESTAMP type.
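Before running step 5, it can be worth checking that every stored string really fits the format mask, because a single value that does not match makes the whole UPDATE fail. A minimal Python sketch, assuming the column has been exported as plain strings; the mask translation and the function names are illustrative, not part of the original procedure:

```python
from datetime import datetime
from typing import Iterable, List

# Python spelling of the Oracle mask 'DD/MM/YYYY HH24:MI:SS:FF3' assumed in step 5.
TRANSACTION_DATE_FORMAT = "%d/%m/%Y %H:%M:%S:%f"


def parse_transaction_date(text: str) -> datetime:
    """Parse a stored VARCHAR2 value such as '09/09/2009 12:43:04:651'."""
    return datetime.strptime(text.strip(), TRANSACTION_DATE_FORMAT)


def unparseable(values: Iterable[str]) -> List[str]:
    """Values that do not fit the mask and would need attention before the UPDATE."""
    bad = []
    for value in values:
        try:
            parse_transaction_date(value)
        except ValueError:
            bad.append(value)
    return bad
```

`%f` accepts the three-digit millisecond field and stores it as microseconds, so `651` becomes `651000`.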

Now I want to know how I could insert the time in IST.

Currently the developers use the following procedure for IST:

```sql
CREATE OR REPLACE PROCEDURE current_tstamp (actual OUT VARCHAR2)
AS
  var_zone VARCHAR2(35);              -- server's UTC offset, e.g. '-04:00'
  var_sign VARCHAR2(2);               -- '+' or '-' part of that offset
  off_min  NUMBER;                    -- size of the offset in minutes
  ist_time TIMESTAMP WITH TIME ZONE;
BEGIN
  -- SYSTIMESTAMP carries the database server's UTC offset;
  -- this assumes TZR returns it as '+HH:MI' / '-HH:MI'
  var_zone := TO_CHAR(SYSTIMESTAMP, 'TZR');
  var_sign := SUBSTR(var_zone, 1, 1);
  off_min  := TO_NUMBER(SUBSTR(var_zone, 2, 2)) * 60
            + TO_NUMBER(SUBSTR(var_zone, 5, 2));

  IF var_sign = '+' THEN
    IF var_zone = '+05:30' THEN
      -- Server already runs on IST: use the time as-is
      ist_time := SYSTIMESTAMP;
    ELSE
      -- East of UTC: shift by (330 - offset) minutes
      ist_time := SYSTIMESTAMP + NUMTODSINTERVAL(330 - off_min, 'MINUTE');
    END IF;
  ELSE
    -- West of UTC: shift by (offset + 330) minutes
    ist_time := SYSTIMESTAMP + NUMTODSINTERVAL(off_min + 330, 'MINUTE');
  END IF;

  -- Adding an INTERVAL keeps the fractional seconds, so FF3 can be formatted directly
  actual := TO_CHAR(ist_time, 'DD/MM/YYYY HH24:MI:SS:FF3');
  DBMS_OUTPUT.PUT_LINE(actual);
END;
/
```
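The offset arithmetic in the procedure boils down to one rule: shift the server's wall-clock time by 330 minutes minus the server's signed UTC offset in minutes, which is the same as converting to +05:30. The Python sketch below checks that against the -04:00 server in the SQL*Plus transcript later in this thread; the function names are hypothetical and the formatting helper only approximates the Oracle mask.

```python
from datetime import datetime, timedelta, timezone

IST_OFFSET_MIN = 330  # +05:30 expressed in minutes


def ist_wall_clock(server_time: datetime) -> datetime:
    """Mirror the procedure: shift the server's wall clock by (330 - signed offset) minutes."""
    offset = server_time.utcoffset()
    if offset is None:
        raise ValueError("server_time must carry its UTC offset, like SYSTIMESTAMP")
    offset_min = int(offset.total_seconds() // 60)
    shifted = server_time + timedelta(minutes=IST_OFFSET_MIN - offset_min)
    return shifted.replace(tzinfo=None)


def ist_wall_clock_direct(server_time: datetime) -> datetime:
    """Same result via an explicit conversion, like SYSTIMESTAMP AT TIME ZONE '+05:30'."""
    ist = timezone(timedelta(minutes=IST_OFFSET_MIN))
    return server_time.astimezone(ist).replace(tzinfo=None)


def format_like_procedure(ts: datetime) -> str:
    """Approximate the 'DD/MM/YYYY HH24:MI:SS:FF3' mask; the fraction is truncated here."""
    return ts.strftime("%d/%m/%Y %H:%M:%S") + f":{ts.microsecond // 1000:03d}"
```

Both branches of the procedure (east and west of UTC) and the special `'+05:30'` case are covered by the single `330 - offset` rule, which is why the direct conversion gives the same answer.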

and it is called from a trigger, as follows:

```sql
CREATE OR REPLACE TRIGGER "TEST".trg_tctn_dtinsrt_sms
BEFORE INSERT ON sms_received
FOR EACH ROW
DECLARE
  dt VARCHAR2(55);
BEGIN
  current_tstamp(dt);
  :new.transaction_date := dt;
END;
/
```

Hello

I just want to explain this a bit...

Changing the time zone at the database level is quite a bad idea...

...because if you change the time zone, what about all the data already in the same database that other applications use?

I'm not sure, but if DBTIMEZONE is changed and you have tables with date or timestamp columns, the values in them may get adjusted to the changed time zone. First of all, understand the difference between the following...

If you use SYSTIMESTAMP (which is not really a TIMESTAMP data type but a TIMESTAMP WITH TIME ZONE), it always carries the time zone of the database server's operating system, regardless of the session.

If you use LOCALTIMESTAMP (which is a TIMESTAMP data type), you get the timestamp in the session time zone, whatever you set it to. By default, it takes the client's time zone.

If you use CURRENT_TIMESTAMP (which is a TIMESTAMP WITH TIME ZONE value), you get both the timestamp and the time zone of the session, whatever you set them to...

```
SQL> alter session set time_zone='+05:30'
  2  ;

Session altered.

SQL> select systimestamp,current_timestamp,localtimestamp from dual;

SYSTIMESTAMP
---------------------------------------------------------------------------
CURRENT_TIMESTAMP
---------------------------------------------------------------------------
LOCALTIMESTAMP
---------------------------------------------------------------------------
10-SEP-09 05.15.03.531687 AM -04:00
10-SEP-09 02.45.03.531716 PM +05:30
10-SEP-09 02.45.03.531716 PM

SQL> alter session set time_zone='+04:30';

Session altered.

SQL> select systimestamp,current_timestamp,localtimestamp from dual;

SYSTIMESTAMP
---------------------------------------------------------------------------
CURRENT_TIMESTAMP
---------------------------------------------------------------------------
LOCALTIMESTAMP
---------------------------------------------------------------------------
10-SEP-09 05.15.39.840319 AM -04:00
10-SEP-09 01.45.39.840350 PM +04:30
10-SEP-09 01.45.39.840350 PM
```

As you can see above, SYSTIMESTAMP does not change, but CURRENT_TIMESTAMP and LOCALTIMESTAMP change with the session time zone...

As for LOCALTIMESTAMP, frankly... it gets the client's time stamp. If you use SQL Developer, it will show the time stamp of your desktop. But in a 3-tier application (I mean application layer, then database), the database client is the application layer, not the end user's PC, so it will get the time zone of the application server. So TIMESTAMP WITH LOCAL TIME ZONE is only an option if you have a 2-tier or desktop application.

Here's the real story...

What I mean is that the application layer has to send the client's time zone to the database, or, when inserting (that is, in your insert queries), the database has to convert the timestamp to whatever zone you want... for example...

I am inserting a record into a table that has a TIMESTAMP column, and I inserted it at 14:56 IST:

```
SQL> insert into test_tz(col1) values(systimestamp);

1 row created.

SQL> select col1 from test_tz;

COL1
---------------------------------------------------------------------------
10-SEP-09 05.25.41.497759 AM

SQL>
```

As you can see, it stored the server time and dropped the zone part. So if you want to insert the data in IST, then:

```
SQL> truncate table test_Tz;

Table truncated.

SQL> insert into test_tz(col1) values(systimestamp at time zone 'Asia/Calcutta');

1 row created.

SQL> select col1 from test_tz;

COL1
---------------------------------------------------------------------------
10-SEP-09 02.59.57.087032 PM
```

It shows the actual time of the insert in IST.

.....................................

If you want to set it at the session level, then you must use LOCALTIMESTAMP, as you did in your post... But whichever you use, you must pass the client's time zone to the server, i.e. '+05:30' or 'Asia/Calcutta' or whatever, inside your SQL queries.

Ravi Kumar

-

Seconds dropped from the time stamp

I guess someone has had this problem before, but I don't see it anywhere in the forum. I am adding a time stamp to a file, so I used 'Get Date/Time In Seconds' and wired it to a conversion to DBL. This then goes to an indicator on the front panel and to the 'Write To Measurement File' Express VI.

The indicator shows the time stamp fine, but in the file it is written in scientific notation, with the last 4 digits and the fractions of a second cut off. The time stamp is written to the file every 5 seconds, so it is important to keep the rest of it. I tried using probes on the wires to see where things go wrong, but I get the same truncated value on the wire before and after the indicator. At first I suspected the Express VI, given that the indicator is fine, but since the value on the wire is truncated, it seems the Express VI is indeed recording exactly what it is given... but then again, the indicator... You can see I've been running in circles on this! Does anyone know why it does this, and a possible solution?

Thank you very much!
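The thread has no answer. One way to tell whether the double itself is losing digits or only its text formatting is sketched below in Python. A time stamp converted to seconds since 1904 is about 3.4e9 in this era, and a double of that size still resolves well under a microsecond, whereas a six-significant-digit format (an assumption about the writer's default, not confirmed here) prints it in scientific notation and drops exactly the last four integer digits and the fraction. `describe` and the sample value are made up for illustration.

```python
import math


def describe(seconds_since_1904: float) -> dict:
    """Compare the double's real resolution with two text formats of the same value."""
    return {
        "ulp_seconds": math.ulp(seconds_since_1904),     # gap to the next representable double
        "six_significant": f"{seconds_since_1904:.6g}",  # scientific notation, digits lost
        "fixed_ms": f"{seconds_since_1904:.3f}",         # keeps milliseconds
    }
```

If the file shows something like `3.45679e+09` while `fixed_ms` would show `3456789012.345`, the value on the wire is intact and the fix is the output format, not the conversion.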

-

Plot is wrong when the data has AM/PM time stamps: how do I plot it correctly?

Hi to all programmers.

I've attached the data file. Can someone tell me how to plot this correctly? I need all of the data plotted correctly, with the date and time stamp on the x-axis.

I understand that the chart cannot tell that after 12:59:59.999 comes 01:00:00.000 PM.

Thanks. LabVIEW 7.1

Regards

HFZ

Apart from code that could be simplified by using other functions, what I see is that the file you provided does not match the format the VI expects.

Other comments:

- I don't understand why you're going to all the trouble of building up the time string when all you need to do is:

- Right at the beginning, use the Strip Path function to get the file name rather than the gymnastics you're doing.

- Your While loop should use auto-indexing.

- I can't quite understand what you're trying to do with all these sliders.
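The underlying problem in the question is parsing a 12-hour clock without its AM/PM marker, so 01:00 PM sorts before 12:59 PM. A minimal Python sketch of the fix, assuming a time-stamp layout like `10/09/2012 12:59:59.999 PM` (the data file is not reproduced, so the format string and function names are illustrative): `%I` together with `%p` yields 24-hour times, and elapsed seconds from the first sample give a monotonic x-axis.

```python
from datetime import datetime
from typing import List

# Assumed layout of one time-stamp field in the data file; %p expects AM/PM.
STAMP_FORMAT = "%m/%d/%Y %I:%M:%S.%f %p"


def parse_stamp(text: str) -> datetime:
    """%I plus %p turns 12-hour clock readings into a 24-hour datetime."""
    return datetime.strptime(text.strip(), STAMP_FORMAT)


def x_axis_seconds(stamps: List[str]) -> List[float]:
    """Seconds since the first sample: monotonic across the 12:59 -> 01:00 PM rollover."""
    times = [parse_stamp(s) for s in stamps]
    return [(t - times[0]).total_seconds() for t in times]
```

`%p` is locale-dependent; the sketch assumes an English/C locale where the markers are `AM` and `PM`.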

-

Unity Connection (CUC) time zone difference

Hello

We have a CUC located in GMT+2, but we have many users worldwide who access their voicemail on this CUC. Say a user in Washington (UTC-5) checks voicemail: he gets the wrong time stamp. The difference is about 7 to 8 hours.

What is the solution for localizing the time settings when accessing voicemail? Any advice on solving this problem would be appreciated. Thank you

Hello

Have you tried setting the correct time zone for these users in the user mailbox configuration?

-

BEFORE UPDATE trigger with timestamp does not work as expected

We have a scenario where I audit update operations on a table.

I created a BEFORE UPDATE trigger so that every time an UPDATE statement runs on the main table, a before-image of the rows is captured in the audit table with a timestamp.

Since it is BEFORE UPDATE, ideally the audit table timestamp (TRG_INS_TMST) should be less than the main table timestamp (IBMSNAP_LOGMARKER) value; I mean, the trigger should fire before the update.

(I could understand, in a way, that the UPDATE statement evaluates SYSTIMESTAMP before the trigger is evaluated, so that the UPDATE gets a timestamp earlier than the trigger's, but this isn't what we want. We want it BEFORE the update.)

```
'T1' IBM_SNAPOPERATION IBM_SNAPLOGMARKER
---- ----------------- -------------------------------
T1   U                 13-OCT-15 03.07.01.775236 AM   <<---------- This is the main table, this should have the latest timestamp
T2   I                 13-OCT-15 03.07.01.775953 AM
```

Here is my test case.

```sql
DELETE FROM TEST_TRIGGER_1;
DELETE FROM TEST_TRIGGER_2;

SELECT 'T1', ibm_snapoperation, ibm_snaplogmarker FROM TEST_TRIGGER_1
UNION
SELECT 'T2', ibm_snapoperation, TRG_INS_TMST FROM TEST_TRIGGER_2;

INSERT INTO TEST_TRIGGER_1 (ID, ibm_snapoperation, ibm_snaplogmarker) VALUES (1, 'I', SYSTIMESTAMP);
COMMIT;

SELECT 'T1', ibm_snapoperation, ibm_snaplogmarker FROM TEST_TRIGGER_1
UNION
SELECT 'T2', ibm_snapoperation, TRG_INS_TMST FROM TEST_TRIGGER_2;

UPDATE TEST_TRIGGER_1 SET IBM_SNAPOPERATION = 'U', ibm_snaplogmarker = SYSTIMESTAMP;
COMMIT;

SELECT 'T1', ibm_snapoperation, ibm_snaplogmarker FROM TEST_TRIGGER_1
UNION
SELECT 'T2', ibm_snapoperation, TRG_INS_TMST FROM TEST_TRIGGER_2;
```

Trigger definition:

```sql
CREATE OR REPLACE TRIGGER etl_dbo.TEST_TRIGGER_1_TRG
BEFORE UPDATE OF IBM_SNAPOPERATION ON TEST_TRIGGER_1
REFERENCING OLD AS OLD NEW AS NEW
FOR EACH ROW
WHEN (NEW.IBM_SNAPOPERATION = 'U')
DECLARE
  V_SQLCODE VARCHAR2(3000);
  --PRAGMA AUTONOMOUS_TRANSACTION;
BEGIN
  INSERT INTO etl_dbo.TEST_TRIGGER_2 (ID, IBM_SNAPOPERATION, IBM_SNAPLOGMARKER, TRG_INS_TMST)
  VALUES (:OLD.ID, :OLD.IBM_SNAPOPERATION, :OLD.IBM_SNAPLOGMARKER, SYSTIMESTAMP);
  --COMMIT;
END;
/
```

The output is something like this:

```
1 row deleted.
1 row deleted.
no rows selected.
1 row created.
Commit complete.

'T1' IBM_SNAPOPERATION IBM_SNAPLOGMARKER
---- ----------------- -------------------------------
T1   I                 13-OCT-15 03.07.00.927546 AM

1 row selected.
1 row updated.
Commit complete.

'T1' IBM_SNAPOPERATION IBM_SNAPLOGMARKER
---- ----------------- -------------------------------
T1   U                 13-OCT-15 03.07.01.775236 AM   <<---------- This is the main table, this should have the latest timestamp
T2   I                 13-OCT-15 03.07.01.775953 AM

2 rows selected.
```

But for some reason, even after creating an AFTER UPDATE trigger instead, it behaves the same way; that is, the main table does not have the latest timestamp.

That's expected; I told you so in my earlier reply. Reread my answer.

> I could understand, in a way, that the UPDATE statement is made with an earlier SYSTIMESTAMP before the TRIGGER is evaluated, and so the UPDATE has a time stamp prior to the trigger, but this isn't what we wanted. We want it BEFORE the update)

As I told you before, your UPDATE statement runs BEFORE the trigger is activated.

Despite what the other posters have said, it makes NO DIFFERENCE whether you use a BEFORE UPDATE or an AFTER UPDATE trigger. Your UPDATE statement ALWAYS runs BEFORE the trigger.

It HAS TO: it's the processing of your UPDATE statement that causes the trigger to fire.

Your UPDATE statement includes SYSTIMESTAMP. While your update is being processed, the value of SYSTIMESTAMP "at that exact time" is captured.

Then your trigger fires and starts to run. ANY reference to SYSTIMESTAMP in your trigger cannot be earlier than the value captured before the trigger ran. It's IMPOSSIBLE.

The trigger can use the SAME value by referencing :NEW and the name of the column you store the value in. Or the trigger can get its own value, which is what your code is doing.

But the SYSTIMESTAMP value in the trigger will NEVER be earlier than the value in your query.

And neither of these values can actually be used to tell when the changes were really COMMITTED, since the trigger does not, and CANNOT, know when, or if, a commit occurs.

Reread my first answer; it explains all this.
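The ordering argument in this answer can be checked with a toy model. The Python sketch below is not how Oracle executes statements: `FakeSystimestamp` is a hypothetical, strictly increasing clock, and `run_update` only captures the order of events the answer describes (the UPDATE reads the clock first, then the row trigger fires). It also shows the answer's alternative of reusing the `:NEW` value.

```python
import itertools
from typing import Tuple


class FakeSystimestamp:
    """Strictly increasing clock standing in for SYSTIMESTAMP (a modelling assumption)."""

    def __init__(self) -> None:
        self._ticks = itertools.count(1)

    def read(self) -> int:
        return next(self._ticks)


def run_update(clock: FakeSystimestamp, trigger_reuses_new: bool) -> Tuple[int, int]:
    """Return (main-table marker, audit TRG_INS_TMST) for one updated row."""
    # The SET clause reads the clock while the UPDATE statement is being processed...
    new_logmarker = clock.read()
    # ...and only then does the row trigger run, whether it is BEFORE or AFTER.
    if trigger_reuses_new:
        audit_tmst = new_logmarker   # like using :NEW.IBM_SNAPLOGMARKER
    else:
        audit_tmst = clock.read()    # like calling SYSTIMESTAMP again in the trigger
    return new_logmarker, audit_tmst
```

Calling SYSTIMESTAMP again in the trigger can only give an equal or later value; reusing `:NEW` gives the identical value.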

-

Date and time stamp not updated

Following on from my previous posts: the date and time stamps of updated files are not being updated in RoboSource Control, i.e. in the Modified column of the RoboSource Control Explorer. However, the changes do seem to be stored on the server when the topics are checked in, since I can right-click a file in source control that I've changed, choose View, and see the changes in the HTML code.

Basically, this means source control is not recognizing that the files have changed, which is why when someone tries to get the latest version, it thinks they already have it, because the date and time are the same as the previous version.

Has anyone seen this behavior before, or does anyone know why the Modified column suddenly stopped updating?

Thank you

Jonathan

After some Googling and random digging, the following article seems to have solved my problem.

It seems that if you run RoboSource Control on 64-bit computers, there are problems with checking in files.

http://helpx.adobe.com/robohelp/kb/cant-check-files-robosource-control.html.

-

CQL join with two application-timestamped channels

Hello

I am trying to join two channels that are application-timestamped; both are total-order. Each of these two channels gets its data from a table source.

The EPN looks something like this:

```
Table A -> Processor A -> Channel A -> \
                                        JoinProcessor -> Channel C
Table B -> Processor B -> Channel B -> /
```

My question is: how are events "simulated" in the processor? Does it take the first event that arrives on any channel and treat its timestamp as the starting point? Or does it block processing until every channel has published its first event?

Channel A timestamps: 1000, 6000

Channel B timestamps: 4000, 12000

Channel B's events arrive at the system a little later because of db performance.

I would like to know whether the following query will work: I want to match the elements of channels A and B that fall within a 10-second window and output the matches once, as a stream.

Is the following query correct?

```
SELECT a.*
FROM A [NOW], B [RANGE 10 SECONDS SLIDE 10 SECONDS]
WHERE a.property = b.property
```

I'm having a hard time getting the join to produce results, even though I checked the application timestamps on each stream and they are correct.

What could possibly cause this?

Thank you!

Edited by: Jarell on March 17, 2011 03:19

Hi Jarrell,

I went through your project that you had sent to Andy.

In my opinion, the following query should meet your needs:

```
ISTREAM(SELECT t.aparty, t.bparty FROM TrafficaSender [RANGE 15 SECONDS] AS t, CatSender [RANGE 15 SECONDS] AS c WHERE t.aparty = c.aparty AND t.bparty = c.bparty)
```

I used test data for CatSender and TrafficaSender (I show only the aparty and bparty fields, and only the last 4 characters of each):

TrafficaSender (aparty, bparty)

At t = 3 seconds: (9201, 9900)

At t = 14 seconds: (9200, 9909)

At t = 28 seconds: (9202, 9901)

CatSender (aparty, bparty)

At t = 16 seconds: (9200, 9909)

At t = 29 seconds: (9202, 9901)

For query q1 (the ISTREAM query above), the results were:

At t = 16 seconds: (9200, 9909)

At t = 29 seconds: (9202, 9901)

and that seems OK to me.

Please note the following:

(1) In the query above I do NOT need an `abs1(t.ELEMENT_TIME - c.ELEMENT_TIME) <= 15000` condition in the WHERE clause. This is because of the way the CQL model correlates 2 (or more) relations in a join: time is implicit in this correlation. Here is a more detailed explanation.

In the query above, let W1 denote the window TrafficaSender [range 15 seconds] and W2 the window CatSender [range 15 seconds].

Both W1 and W2 evaluate to CQL relations, since in the CQL model a WINDOW operation over a stream yields a relation.

Let us look at the states of these two relations over time:

W1(0) = W2(0) = {} /* empty */

... the same (empty) up to t = 2

W1(3) = {(9201, 9900)}, W2(3) = {}

same content for W1 and W2 up to t = 13

W1(14) = {(9201, 9900), (9200, 9909)}, W2(14) = {}

same content at t = 15

W1(16) = {(9201, 9900), (9200, 9909)}, W2(16) = {(9200, 9909)}

same content at t = 17

W1(18) = {(9200, 9909)}, W2(18) = {(9200, 9909)}

same content up to t = 27

W1(28) = {(9200, 9909), (9202, 9901)}, W2(28) = {(9200, 9909)}

W1(29) = {(9202, 9901)}, W2(29) = {(9200, 9909), (9202, 9901)}

Now, the result.

Let R = SELECT t.aparty, t.bparty FROM TrafficaSender [RANGE 15 SECONDS] AS t, CatSender [RANGE 15 SECONDS] AS c WHERE t.aparty = c.aparty AND t.bparty = c.bparty. It is the part of the query without the ISTREAM. R is a relation, since in the CQL model JOINing 2 relations (W1 and W2 in this case) yields a relation.

Now, here's the most important point about correlation in JOINs and the implicit role of time: R(t) is obtained by joining W1(t) and W2(t). Using this, let us work out the contents of R over time:

R(0) = JOIN of W1(0), W2(0) = {}

the same content up to t = 15, since W2 is empty up to W2(15)

R(16) = JOIN of W1(16), W2(16) = {(9200, 9909)}

same content up to t = 28: even though W1 changes at t = 18 and t = 28, these changes do not affect the result of the JOIN

R(29) = JOIN of W1(29), W2(29) = {(9202, 9901)}

Now, the actual query is ISTREAM(R). As Alex explained in the previous post, ISTREAM produces a stream in which each member of the difference R(t) - R(t-1) is associated with timestamp t. Applying this to R above, we get:

R(t) - R(t-1) is empty up to t = 15

R(16) - R(16 seconds - 1 nanosecond) = (9200, 9909), associated with timestamp = 16 seconds

R(t) - R(t-1) is again empty up to t = 28

R(29) - R(29 seconds - 1 nanosecond) = (9202, 9901), associated with timestamp = 29 seconds

This explains the output.
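The walk-through above can be replayed mechanically. The Python sketch below samples time once per second (a simplification of CQL's continuous time) and assumes an event with timestamp ts is visible in a `[RANGE r]` window for ts <= t < ts + r, which matches W1(18) no longer containing (9201, 9900). Because only aparty and bparty are selected and both are join keys, the join reduces to a set intersection. Function names are hypothetical; this is not the CQL engine.

```python
from typing import List, Set, Tuple

Pair = Tuple[str, str]      # (aparty, bparty), last 4 characters as in the post
Event = Tuple[int, Pair]    # (application timestamp in seconds, pair)


def window(stream: List[Event], t: int, range_s: int) -> Set[Pair]:
    """W(t) for [RANGE range_s]: events with ts <= t < ts + range_s."""
    return {pair for ts, pair in stream if ts <= t < ts + range_s}


def istream_of_join(s1: List[Event], s2: List[Event], range_s: int,
                    horizon: int) -> List[Tuple[int, Pair]]:
    """ISTREAM(R) with R(t) = W1(t) JOIN W2(t) on both fields, sampled once per second."""
    out: List[Tuple[int, Pair]] = []
    previous: Set[Pair] = set()
    for t in range(horizon + 1):
        # Joining on both selected columns is a set intersection of the two relations.
        current = window(s1, t, range_s) & window(s2, t, range_s)
        out.extend((t, pair) for pair in sorted(current - previous))
        previous = current
    return out


traffica = [(3, ("9201", "9900")), (14, ("9200", "9909")), (28, ("9202", "9901"))]
cat = [(16, ("9200", "9909")), (29, ("9202", "9901"))]
```

`istream_of_join(traffica, cat, 15, 40)` yields exactly the two outputs reported above, at t = 16 and t = 29.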

-

Mac mini (Mid 2011), 2.5 GHz Core i5, 16 GB 1600 MHz DDR3, 256 GB solid-state drive

macOS 10.12

I upgraded to macOS Sierra recently; since then, my Mac mini has to start 5 times before it actually boots.

It starts and then restarts in the middle of the boot process, 4 times.

Why?

I have experienced a similar oddity, though usually when messing around with a Linux partition. I don't have a definitive answer for you, but check this thread (MacBook Pro will restart several times during the boot process) and maybe also this one: http://apple.stackexchange.com/questions/88213/how-to-find-cause-of-repeated-reboots

I hope this helps!

-

Automator script for a DATE- and TIME-stamped folder

Hi all

I'm not a scripter, but I need a DATE- and TIME-STAMPED folder (or file) that I can put on my desktop and have updated automatically, so that I can use it to quickly see whether an external (or internal) backup is current. I could probably also use it to quickly find out how /old/ a backup is.

For now, when I want to quickly verify that a backup worked, I do it manually by creating a "date-named folder" on the desktop, such as '-2016 03 26', so I can quickly see whether a backup I just ran actually ran.

I have a lot of backups (internal, external, off-site, etc.), so this would be super useful for me.

I'd like the folder name to be (potentially) customizable in case I need to change it, but a good default would be "-YEAR MONTH DAY", so I can easily see when this backup was made; I also name my files this way so they appear in chronological order.

Is anyone able to help me with something like this, or suggest another forum to cross-post it to?

Thank you

Jon

Hello

Create the "New Folder" action, like this:

---------------

Drag and drop the 'Shell Script' variable into the "Name:" field.

--------------

Double-click the variable in the "Name:" field:

Copy and paste this text into the 'Script' field:

date "+%Y %m %d"
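The Automator action covers creating the folder. For the other half of the question, telling how old a backup is, a small Python sketch can build the same kind of name and read the age back from it. The `-` prefix and the `%Y %m %d` fields follow the question and the shell command above; the function names are hypothetical.

```python
from datetime import date, datetime
from pathlib import Path

STAMP_FORMAT = "%Y %m %d"  # same fields as the shell command date "+%Y %m %d"


def stamp_name(day: date, prefix: str = "-") -> str:
    """Folder name such as '-2016 03 26'; the prefix is customizable."""
    return prefix + day.strftime(STAMP_FORMAT)


def make_stamp_folder(parent: Path, day: date, prefix: str = "-") -> Path:
    """Create (or reuse) the date-named folder inside `parent`."""
    folder = parent / stamp_name(day, prefix)
    folder.mkdir(parents=True, exist_ok=True)
    return folder


def backup_age_days(name: str, today: date, prefix: str = "-") -> int:
    """How many days old a backup is, read back from its stamp folder name."""
    stamped = datetime.strptime(name[len(prefix):], STAMP_FORMAT).date()
    return (today - stamped).days
```

Because year, month and day are zero-padded and in that order, the names also sort chronologically, as the question wants.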

-

Adding time-stamped comments to a TDMS file during logging

I'm trying to find the best way to add comments to a TDMS file during logging. I know that fragmentation occurs when properties are written during TDMS logging. Should I write the comments at file level in the logging loop (maybe a Case structure that fires when a new comment occurs), or is there another way to put comments into a TDMS file during logging that I'm missing? Any help would be appreciated.

Nathan, do you really care about fragmentation? If not, you could do it just like that. If you really care about fragmentation, you could write the time-stamped comments to a separate file (TDMS or anything else), or cache them with their time stamps and write the cached comments to the TDMS file after logging stops.
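The caching approach from the reply can be sketched as follows. This is Python, not LabVIEW, and `CommentCache` and the `write` callback are hypothetical: `write` stands in for whatever actually writes to the TDMS file once logging has stopped, so comments are written in one batch instead of interleaving property writes with the data.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Callable, List, Tuple

WriteFn = Callable[[List[datetime], List[str]], None]


@dataclass
class CommentCache:
    """Hold time-stamped comments in memory while logging runs."""
    entries: List[Tuple[datetime, str]] = field(default_factory=list)

    def add(self, text: str, when: datetime) -> None:
        self.entries.append((when, text))

    def flush(self, write: WriteFn) -> int:
        """Pass every cached comment to `write` in one call, oldest first, then clear."""
        if not self.entries:
            return 0
        ordered = sorted(self.entries)
        write([when for when, _ in ordered], [text for _, text in ordered])
        count = len(ordered)
        self.entries.clear()
        return count
```

Passing the time stamps and texts as two parallel lists mirrors how they could be stored as two channels alongside the logged data.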

-

High-speed data acquisition with time stamps

I am acquiring data at a fairly fast rate (5 to 25 kHz) for a few seconds and then writing it to a spreadsheet file. Is there a way to set it up so that it shows the time stamp for each data point instead of just the data point number?

Of course. Change the returned data type from 2D DBL to 1D Waveform. This is done by clicking the polymorphic selector, or by right-clicking and choosing 'Select Type'.
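A LabVIEW waveform carries a start time t0 and a sample interval dt alongside the data, which is what lets each point get its own time stamp: sample i is at t0 + i * dt. The Python sketch below reproduces that arithmetic for a spreadsheet export; the function names and the ISO-8601 text format are assumptions, not what LabVIEW writes.

```python
from datetime import datetime, timedelta
from typing import List, Sequence, Tuple


def waveform_timestamps(t0: datetime, sample_rate_hz: float, n: int) -> List[datetime]:
    """Time stamp of sample i is t0 + i * dt, with dt = 1 / sample_rate_hz."""
    return [t0 + timedelta(microseconds=round(i * 1_000_000 / sample_rate_hz))
            for i in range(n)]


def spreadsheet_rows(t0: datetime, sample_rate_hz: float,
                     samples: Sequence[float]) -> List[Tuple[str, float]]:
    """Pair every sample with its time stamp, as text suitable for a spreadsheet column."""
    stamps = waveform_timestamps(t0, sample_rate_hz, len(samples))
    return [(ts.isoformat(timespec="microseconds"), y) for ts, y in zip(stamps, samples)]
```

At 25 kHz, dt is 40 microseconds, so microsecond resolution in the text is enough to keep consecutive rows distinct.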

-

How to add a time stamp to a TDMS file

I need to add a time stamp to my TDMS file. Currently, I take an array of doubles, convert it to the dynamic data type, and then send it to TDMS Write. I need to add a time stamp, but I can't find a way to do it. I also tried taking the time stamp, converting it to a double, U64 and I64, and adding that to my array before the dynamic data conversion, but then I lose precision. I need at least millisecond resolution. I know that I lose precision because the time stamp is two 64-bit values: the upper 64 bits are the seconds since the epoch and the lower 64 bits are the fractions of a second. I would even be happy to write these two numbers separately to my TDMS file and convert them later, but I can't seem to do that either. Any help would be appreciated.

The natural way would be to use a waveform.

You can add your time stamp as two U64 values (using a type cast to a U64 array) as channel properties, like the waveform's t0 data.

Tone
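A LabVIEW time stamp is 128 bits: a signed 64-bit count of whole seconds since 1904-01-01 00:00:00 UTC and an unsigned 64-bit fraction of a second in units of 2**-64 s. The Python sketch below splits a time into those two integers and rebuilds it, to show that storing them as two U64 values loses nothing at millisecond (or microsecond) resolution. The function names are hypothetical, and times before 1904 (negative seconds) are not handled.

```python
from datetime import datetime, timedelta, timezone
from typing import Tuple

LV_EPOCH = datetime(1904, 1, 1, tzinfo=timezone.utc)
FRACTION_SCALE = 2 ** 64  # the lower 64 bits count units of 2**-64 seconds


def to_lv_parts(when: datetime) -> Tuple[int, int]:
    """Split an aware datetime (after 1904) into (whole seconds, 64-bit fraction)."""
    delta = when - LV_EPOCH
    total_us = (delta.days * 86_400 + delta.seconds) * 1_000_000 + delta.microseconds
    seconds, micros = divmod(total_us, 1_000_000)
    fraction = micros * FRACTION_SCALE // 1_000_000
    return seconds, fraction


def from_lv_parts(seconds: int, fraction: int) -> datetime:
    """Rebuild the datetime, rounding the fraction to the nearest microsecond."""
    micros = (fraction * 1_000_000 + FRACTION_SCALE // 2) // FRACTION_SCALE
    return LV_EPOCH + timedelta(seconds=seconds, microseconds=micros)
```

Rounding on the way back matters: flooring in both directions could lose one microsecond on a round trip.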

-

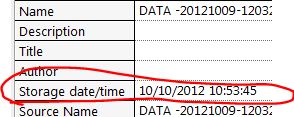

TDMS to MDF time stamp conversion error / storage date-time changes

I've been fighting this for a while, so I thought I'd throw it out there...

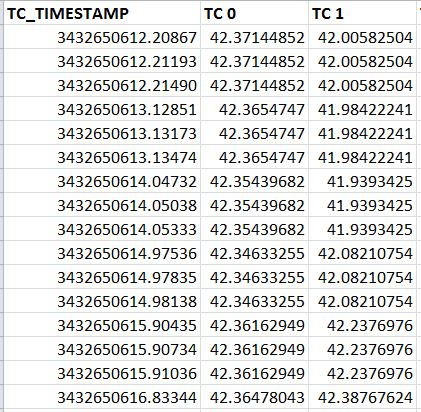

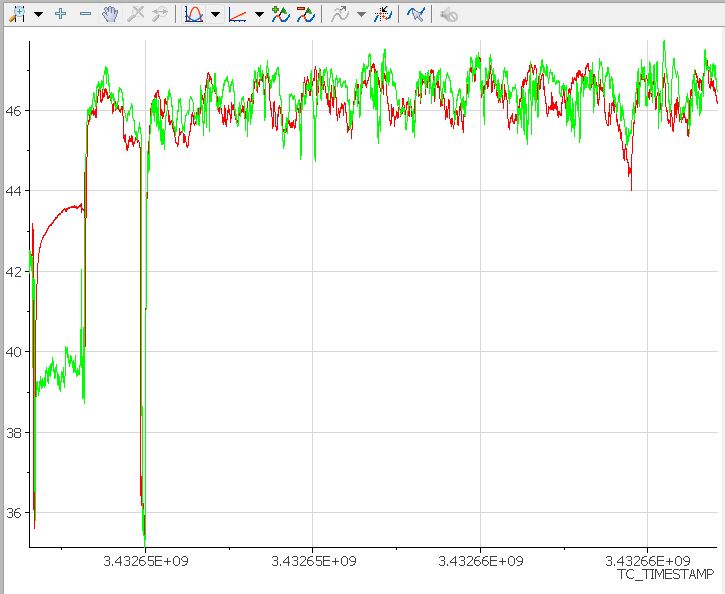

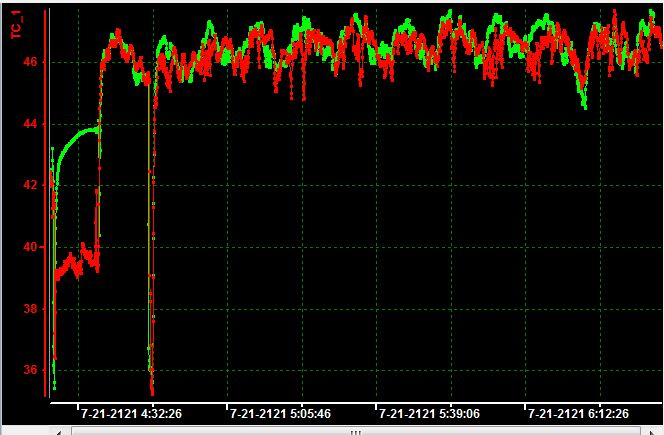

Let's say I have a TDMS file that has a channel of LabVIEW time stamps and 2 thermocouple channels.

I load it in DIAdem and get this:

Perfect! But now let's say I want to save the TDMS file as an MDF file so I can view it in Vector CANape. I right-click and save as MDF: perfect. I start CANape and get this:

The year 2121; yes, I take data on a starship! It seems to take the TDMS storage date/time as the starting point and add the TC_Timestamp channel to it.

If I change the TC_Timestamp channel to 1, 2, 3, 4, 5, 6, 7 etc. and save as MDF, I get this:

Very close: 2012! But what I really want is for it to show the time it was recorded, which would be 10/09/2012.

The problem is that whenever I save, the storage date/time is updated to right now, and the MDF plugin seems to use it as the starting point.

Is it possible to stop this update in DIAdem?

Thank you

Ben

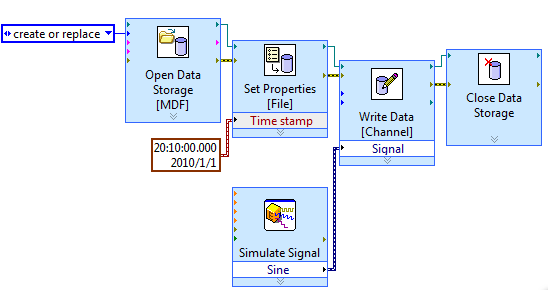

Hi Ben,

You are right that the MDF writer uses the storage time, which DIAdem updates when writing, as the MDF start time. We are working on this and will get back to you when there is progress.

As a workaround, you can try converting TDMS to MDF with the LabVIEW storage VIs.

With something like the following, you can write your measurement start time to the MDF file.

Hope this helps,

Mavis
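The poster's reading of the year 2121 can be checked with a little arithmetic. Absolute LabVIEW time stamps are seconds since 1904 (about 3.4e9 in 2012), so if such a channel is added to a start time that is itself in 2012, the result lands roughly 109 years later. The Python sketch below reproduces that; the record and save times in the usage check are made-up values, and `shown_by_reader` is a hypothetical stand-in for how the MDF reader appears to combine start time and channel values.

```python
from datetime import datetime, timedelta, timezone

UTC = timezone.utc
LV_EPOCH = datetime(1904, 1, 1, tzinfo=UTC)


def lv_seconds(when: datetime) -> float:
    """Absolute LabVIEW time stamp as seconds since 1904-01-01 00:00 UTC."""
    return (when - LV_EPOCH).total_seconds()


def shown_by_reader(start: datetime, channel_value: float) -> datetime:
    """Start time plus channel value, which is how the MDF file appears to be read."""
    return start + timedelta(seconds=channel_value)
```

With a 2012 save time as the start, an absolute 2012 time stamp is shown in 2121; with the recording time as the start and relative values (0, 1, 2, ...), the times come out in 2012, which is what the LabVIEW-side workaround aims for.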

Maybe you are looking for

-

Will Photoshop 5.1 work in Sierra?

Hello, before upgrading my 21" iMac from El Capitan to Sierra, I would like to know whether my Photoshop 5.1 will work on Sierra as it does on El Capitan.

-

Hello. I want to know what these devices are, so I can download drivers for them: unknown device 1: ACPI\HPQ6007\3&11583659&1; unknown device 2: ACPI\INT33A0\0

-

Battery not charging on Satellite Pro L300D

I've had this laptop about four months and it had just come back from a warranty repair (motherboard replacement). It had seen light use since I bought it. When it came back, I did a clean installation of Windows 7 but noticed that the battery cha

-

Maximum time my computer's processor can run at 100% in Windows XP.

CPU: P4 2.34, 1 GB of RAM, Windows XP.

-

Can't log on to my XP laptop: I forgot the admin password

Recently I forgot the admin password on my XP laptop, and I can't log in. All the suggestions I got only work when I'm logged in as admin, and now I'm locked out. I can't even get into setup to change the boot sequence to reinstall XP. Please help me. Am j