Best practices for iSCSI target LUNs

I am connecting my ESX lab to a QNAP iSCSI target and wonder what the best practices are for creating LUNs on the target. I have two servers, each connecting to its own target. Should I create a LUN for each virtual machine I intend to create in the lab, or is it better to create one larger LUN and have multiple VMs per LUN? Does placing several virtual machines on one LUN have consequences for HA or FA?

Regards

It is always a compromise...

SCSI reservations are per LUN. If you put many guests on the same LUN, it becomes a problem, and yes, we have seen it.

Be sure to thin provision. That way you can make your LUNs smaller and still fit several VMs on each.

Tags: VMware

Similar Questions

-

Cannot see the iSCSI target under the software iSCSI adapter on the Configuration details page

Detailed description:

After adding the IP address of the iSCSI target on the Dynamic Discovery tab, the target names appear under Static Discovery. However, the iSCSI target is not listed under the software iSCSI adapter on the Configuration details page.

This iSCSI target is already mounted as a VMFS5 datastore, with some VMs on it, on another ESX host that is part of a different ESX cluster in the same datacenter.

I'm thinking it's the network configuration.

Are your VMkernel ports configured correctly?

Are you using VLANs? If so, check that your VLAN configuration is correct.
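A quick way to rule out basic reachability is to test whether the target's portal accepts TCP connections at all. The sketch below is only an illustration: it assumes the target listens on the standard iSCSI port 3260, and the function name is made up for this example. It runs from an ordinary machine on the storage network, so it does not prove that the host's VMkernel port can reach the target; vmkping from the host is still the check for that path.

```python
import socket


def can_reach_iscsi_portal(host: str, port: int = 3260, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout.

    Assumption: the iSCSI target listens on the standard port 3260.
    """
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable networks.
        return False
```

Calling `can_reach_iscsi_portal("<target IP>")` with the address entered for dynamic discovery returns False when a VLAN or firewall problem blocks the portal from that machine.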

-

Best practices for iSCSI - ESX 4

I am trying to determine what a good or best practice would be for this situation. We have an ESX 4 blade server, so in VMware it is already connected to our back-end storage system. What I want to know is this:

Can I install the iSCSI initiator inside the guest OS (software) to connect and create a second drive (D:\), so that I can extend the disk, etc., and still have it available for failover, etc.?

Or is there a different or recommended way to do this?

Thank you

Yes, you are right.

Create a new datastore (if that's what you want to do). Present this datastore to all the hosts in your cluster. Add a new disk to the virtual machine, specify the size of the disk, and then choose where you want the VMDK to be stored. You want to store this particular VMDK on the new datastore that you just presented.

Your .vmx file will know where to find this VMDK at startup.

Once the datastore is visible to all the hosts, it will be protected by HA (if you have HA as a licensed feature).

-

Best practice: name every image?

Hi all. This is more a question about best practices and what advanced users suggest.

When I create simple, static web pages with groups of images and text, is it important to assign a name to each graphic? I know it's crucial when you set up animated buttons, but should every image on the page have a unique name?

Thanks in advance!

My vote is "no".

On further thought: what do you mean by 'naming' every image? A "name" attribute or an "id" attribute? IMG tags do not take a NAME attribute, and an ID attribute on every image would be overkill unless you have very specific accessibility or CSS reasons to need one.

-

Unable to see the iSCSI target

Hello

I just configured an Openfiler box that is supposed to provide some additional iSCSI storage for virtual machine backups.

The target seems to be configured fine, as I am able to connect to the storage successfully from a W2K3 machine.

However, when I try a rescan from the ESX 3.02 hosts, they fail to find the storage.

I entered the details in Dynamic Discovery, the service console is in the same subnet, and I opened the necessary firewall port.

Our main storage is a DS3400 Fibre Channel SAN, and it uses LUNs 0 and 1.

When I try to map a LUN to the target in Openfiler, it tries to use LUN 0. Could this be the cause, or am I missing something else?

Any help would be appreciated

What you added is good news: 1) vSwitch0 goes out through a NIC team, and 2) vSwitch0 carries only SC and VMotion. You can go ahead and put your Openfiler box on your admin/VMotion network. If your vmkping did not work before, I guess it's because the VMotion IP subnet is different from the ordinary SC IP subnet, right? If so, add an extra SC port to vSwitch0 on the VMotion subnet so that pings and vmkpings get responses. Otherwise, if the VMotion network is the same as the SC network, I don't understand why your earlier vmkpings didn't work. Well... This solution is simple, but what I don't like about it is that VMotion and iSCSI traffic will use the same physical link, because you have only a single VMkernel port. That is a pity when you have 2 physical links. You could think of something smarter: force VMotion traffic to run preferentially through one vmnic and iSCSI traffic through the other, with each vmnic being the failover path for the other.

To achieve this, at first glance, I would say you should put the Openfiler box on a dedicated subnet Y. Then, on vSwitch0, create 1 SC port and 1 VMkernel port, both with an address on subnet Y. Then play with the vSwitch0 NIC teaming settings: configure the VMkernel and SC ports on subnet Y with an explicit failover order of vmnic0 then vmnic1, and configure the VMotion port and the current SC port with an explicit failover order as well, but vmnic1 then vmnic0 (i.e., the reverse). This way, iSCSI goes through vmnic0 and VMotion through vmnic1, and even if some VMotion runs overnight, everything will still work correctly. And if a vmnic fails, you will run in degraded mode, with both using the same link, but it will be temporary... The limitation of this solution will potentially come from your physical network architecture: will your physical switches allow all these subnets to be carried properly over these 2 vmnics? VLANs might help...
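The opposite failover orders can be pictured with a small model. This is only a sketch of the idea in Python, not ESX configuration: the port group labels are made up for the illustration, and the selection rule (use the first uplink in the order whose link is up) is a simplified assumption about how an explicit failover order behaves.

```python
def active_uplink(failover_order, link_up):
    """Return the first uplink in failover_order whose link is up, else None."""
    for nic in failover_order:
        if link_up.get(nic, False):
            return nic
    return None


# Hypothetical port group labels; the orders follow the description above.
PORT_GROUPS = {
    "iSCSI VMkernel (subnet Y)": ["vmnic0", "vmnic1"],
    "SC (subnet Y)": ["vmnic0", "vmnic1"],
    "VMotion": ["vmnic1", "vmnic0"],
    "SC (current)": ["vmnic1", "vmnic0"],
}


def placement(link_up):
    """Map every port group to the uplink it would use for the given link states."""
    return {name: active_uplink(order, link_up) for name, order in PORT_GROUPS.items()}
```

With both links up, `placement({"vmnic0": True, "vmnic1": True})` puts iSCSI on vmnic0 and VMotion on vmnic1. With vmnic0 down, everything lands on vmnic1, which is the temporary degraded mode mentioned above.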

Let me know how it goes!

Kind regards

Pascal.

-

Installing ESXi 4 on an iSCSI target

Hello

I'm looking at using an IBM 3650 M2 series server with external DS3300 iSCSI storage, using a QLogic single-port PCIe iSCSI HBA for IBM System x, and installing ESXi 4 as the hypervisor.

I noticed that this hardware initiator PCIe HBA is supported.

My question is: can I install ESXi 4 on storage that the iSCSI target presents to the server?

Thank you


Hello.

"You use the ESXi 4.0 CD to install the ESXi 4.0 software onto a SAS, SATA, or SCSI hard drive.

Installing on a Fibre Channel SAN is supported experimentally. Do not attempt to install ESXi with a SAN attached, unless you want to try this experimental feature.

Installing on IP storage, such as NAS or iSCSI SAN, is not supported." - p.22 of the ESXi Installable and vCenter Server Installation Guide

Good luck!

-

Getting a dead path to the iSCSI target

What I had:

Host 1: ESX3.5i

Host 2: Solaris 10 (SunOS 5.10 Sun Generic_138889-02 i86pc i386 i86pc)

2 ZFS shares on it: 1 iSCSI share and 1 NFS share.

What I did:

Upgrade to ESX4i

What I have so far:

Everything works but iSCSI.

It shows 'dead path' for me, but vmkping to the Sun host is OK.

Tail of the log here:

20 June at 16:24:07 vmkernel: 0:01:22:15.659 cpu1:11899) WARNING: iscsi_vmk: iscsivmk_TransportConnSendPdu: vmhba37:CH:0 T: 0 CN:0: didn't request passthru queue: no connection

20 June at 16:24:07 iscsid: send_pdu failed rc - 22

20 June at 16:24:07 vmkernel: 0:01:22:15.659 cpu1:11899) WARNING: iscsi_vmk: iscsivmk_StopConnection: vmhba37:CH:0 T: 0 CN:0: iSCSIconnection is marked 'OFFLINE '.

20 June at 16:24:07 iscsid: reported core connection iSCSI error state (1006) (3) 1:0.

20 June at 16:24:07 vmkernel: 0:01:22:15.659 cpu1:11899) WARNING: iscsi_vmk: iscsivmk_StopConnection: Sess ISID: 00023 000001 d TARGET: iqn.1986-03.com.sun:02:3dd0df28-79b2-e399-fe69-e34161efb9f0 TPGT: TSIH 1: 0

20 June at 16:24:07 vmkernel: 0:01:22:15.659 cpu1:11899) WARNING: iscsi_vmk: iscsivmk_StopConnection: Conn CID: 0 L: 10.0.0.2:51908 r: 10.0.0.1:3260

20 June at 16:24:10 vmkernel: 0:01:22:18.538 cpu1:11899) WARNING: iscsi_vmk: iscsivmk_StartConnection: vmhba37:CH:0 T: 0 CN:0: iSCSI connection is marked as "ONLINE."

20 June at 16:24:10 iscsid: connection1:0 is operational after recovery (2 attempts)

20 June at 16:24:10 vmkernel: 0:01:22:18.538 cpu1:11899) WARNING: iscsi_vmk: iscsivmk_StartConnection: Sess ISID: 00023 000001 d TARGET: iqn.1986-03.com.sun:02:3dd0df28-79b2-e399-fe69-e34161efb9f0 TPGT: TSIH 1: 0

20 June at 16:24:10 vmkernel: 0:01:22:18.538 cpu1:11899) WARNING: iscsi_vmk: iscsivmk_StartConnection: Conn CID: 0 L: 10.0.0.2:55471 r: 10.0.0.1:3260

20 June at 16:24:10 vmkernel: 0:01:22:18.538 cpu1:11899) WARNING: iscsi_vmk: iscsivmk_ConnSetupScsiResp: vmhba37:CH:0 T: 0 CN:0: Invalid residual of the SCSI response overflow: residual 0, expectedXferLen 0

20 June at 16:24:10 vmkernel: 0:01:22:18.538 cpu1:11899) WARNING: iscsi_vmk: iscsivmk_ConnSetupScsiResp: Sess ISID: 00023 000001 d TARGET: iqn.1986-03.com.sun:02:3dd0df28-79b2-e399-fe69-e34161efb9f0 TPGT: TSIH 1: 0

20 June at 16:24:10 vmkernel: 0:01:22:18.538 cpu1:11899) WARNING: iscsi_vmk: iscsivmk_ConnSetupScsiResp: Conn CID: 0 L: 10.0.0.2:55471 r: 10.0.0.1:3260

20 June at 16:24:10 vmkernel: 0:01:22:18.538 cpu1:11899) iscsi_vmk: iscsivmk_ConnRxNotifyFailure: vmhba37:CH:0 T: 0 CN:0: connection failure notification rx: residual invalid. State = online

20 June at 16:24:10 vmkernel: 0:01:22:18.538 cpu1:11899) iscsi_vmk: iscsivmk_ConnRxNotifyFailure: Sess ISID: 00023 d 000001 TPGT TARGET:iqn.1986-03.com.sun:02:3dd0df28-79b2-e399-fe69-e34161efb9f0: TSIH 1: 0

20 June at 16:24:10 vmkernel: 0:01:22:18.538 cpu1:11899) iscsi_vmk: iscsivmk_ConnRxNotifyFailure: Conn CID: 0 L: 10.0.0.2:55471 r: 10.0.0.1:3260

20 June at 16:24:10 vmkernel: 0:01:22:18.538 cpu1:11899) WARNING: iscsi_vmk: iscsivmk_StopConnection: vmhba37:CH:0 T: 0 CN:0: event CLEANING treatment

20 June at 16:24:10 vmkernel: 0:01:22:18.538 cpu1:11899) WARNING: iscsi_vmk: iscsivmk_StopConnection: Sess ISID: 00023 000001 d TARGET: iqn.1986-03.com.sun:02:3dd0df28-79b2-e399-fe69-e34161efb9f0 TPGT: TSIH 1: 0

20 June at 16:24:10 vmkernel: 0:01:22:18.538 cpu1:11899) WARNING: iscsi_vmk: iscsivmk_StopConnection: Conn CID: 0 L: 10.0.0.2:55471 r: 10.0.0.1:3260

20 June at 16:24:10 vmkernel: 0:01:22:18.789 cpu1:11899) WARNING: iscsi_vmk: iscsivmk_TransportConnSendPdu: vmhba37:CH:0 T: 0 CN:0: didn't request passthru queue: no connection

20 June at 16:24:10 iscsid: failure of send_pdu rc-22

20 June at 16:24:10 vmkernel: 0:01:22:18.789 cpu1:11899) WARNING: iscsi_vmk: iscsivmk_StopConnection: vmhba37:CH:0 T: 0 CN:0: iSCSIconnection is marked 'OFFLINE '.

20 June at 16:24:10 iscsid: reported core connection iSCSI error state (1006) (3) 1:0.

20 June at 16:24:10 vmkernel: 0:01:22:18.789 cpu1:11899) WARNING: iscsi_vmk: iscsivmk_StopConnection: Sess ISID: 00023 000001 d TARGET: iqn.1986-03.com.sun:02:3dd0df28-79b2-e399-fe69-e34161efb9f0 TPGT: TSIH 1: 0

20 June at 16:24:10 vmkernel: 0:01:22:18.789 cpu1:11899) WARNING: iscsi_vmk: iscsivmk_StopConnection: Conn CID: 0 L: 10.0.0.2:55471 r: 10.0.0.1:3260

and so on.

What did I do wrong, and how can I fix this?

Out of curiosity, I went ahead and tried the Solaris target. It seems that there is a problem in the Solaris target before «Solaris 10 update 7». I tried u6 and I see the issue, and it is clearly a problem with the target.

...

iSCSI (SCSI command)

Opcode: SCSI Command (0x01)

.0.. .... = I: Queued delivery

Flags: 0x81

1... .... = F: Final PDU in sequence

.0.. .... = R: No data will be read from target

..0. .... = W: No data will be written to target

.... .001 = Attr: Simple (0x01)

TotalAHSLength: 0x00

DataSegmentLength: 0x00000000

LUN: 0000000000000000

InitiatorTaskTag: 0xad010000

ExpectedDataTransferLength: 0x00000000

CmdSN: 0x000001ab

ExpStatSN: 0x000001ad

SCSI CDB Test Unit Ready

Command Set: Direct Access Device (0x00) (Using default commandset)

Opcode: Test Unit Ready (0x00)

Vendor Unique = 0, NACA = 0, Link = 0

...

iSCSI (SCSI response)

Opcode: SCSI Response (0x21)

Flags: 0x82

...0 .... = o: No overflow of read part of bi-directional command

.... 0... = u: No underflow of read part of bi-directional command

.... .0.. = O: No residual overflow occurred

.... ..1. = U: Residual underflow occurred <<<<<=

Response: Command completed at target (0x00)

Status: Good (0x00)

TotalAHSLength: 0x00

DataSegmentLength: 0x00000000

InitiatorTaskTag: 0xad010000

StatSN: 0x000001ad

ExpCmdSN: 0x000001ac

MaxCmdSN: 0x000001ea

ExpDataSN: 0x00000000

BidiReadResidualCount: 0x00000000

ResidualCount: 0x00000000

Request in: 10

Time from request: 0.001020000 seconds

SCSI Response (Test Unit Ready)

Command Set: Direct Access Device (0x00) (Using default commandset)

SBC Opcode: Test Unit Ready (0x00)

Time from request: 0.001020000 seconds

...

14:02:12:42.569 cpu2:7026) WARNING: iscsi_vmk: iscsivmk_ConnSetupScsiResp: vmhba35:CH:0 T:2 CN:0: Invalid residual of the SCSI response overflow: residual 0, expectedXferLen 0

14:02:12:42.584 cpu2:7026) WARNING: iscsi_vmk: iscsivmk_ConnSetupScsiResp: Sess ISID: 00023 000001 d TARGET: iqn.1986-03.com.sun:02:fa2cdf18-2141-e633-b731-f89f47ddd09f.test0 TPGT: TSIH 1: 0

14:02:12:42.602 cpu2:7026) WARNING: iscsi_vmk: iscsivmk_ConnSetupScsiResp: Conn CID: 0 L: 10.115.153.212:59691 r: 10.115.155.96:3260

14:02:12:42.614 cpu2:7026) iscsi_vmk: iscsivmk_ConnRxNotifyFailure: vmhba35:CH:0 T:2 CN:0: Connection failure notification rx: residual invalid. State = online

14:02:12:42.628 cpu2:7026) iscsi_vmk: iscsivmk_ConnRxNotifyFailure: Sess ISID: 00023 000001 d TARGET: iqn.1986-03.com.sun:02:fa2cdf18-2141-e633-b731-f89f47ddd09f.test0 TPGT: TSIH 1: 0

14:02:12:42.644 cpu2:7026) iscsi_vmk: iscsivmk_ConnRxNotifyFailure: Conn CID: 0 L: 10.115.153.212:59691 r: 10.115.155.96:3260

14:02:12:42.655 cpu2:7026) WARNING: iscsi_vmk: iscsivmk_StopConnection: vmhba35:CH:0 T:2 CN:0: Treatment of CLEANING event

14:02:12:42.666 cpu2:7026) WARNING: iscsi_vmk: iscsivmk_StopConnection: Sess ISID: 00023 000001 d TARGET: iqn.1986-03.com.sun:02:fa2cdf18-2141-e633-b731-f89f47ddd09f.test0 TPGT: TSIH 1: 0

14:02:12:42.683 cpu2:7026) WARNING: iscsi_vmk: iscsivmk_StopConnection: Conn CID: 0 L: 10.115.153.212:59691 r: 10.115.155.96:3260

14:02:12:42.731 cpu2:7026) WARNING: iscsi_vmk: iscsivmk_TransportConnSendPdu: vmhba35:CH:0 T:2 CN:0: Didn't request passthru queue: no connection

14:02:12:42.732 cpu3:4119) vmw_psp_fixed: psp_fixedSelectPathToActivateInt: "Unregistered" device has no way to use (APD).

14:02:12:42.745 cpu2:7026) iscsi_vmk: iscsivmk_SessionHandleLoggedInState: vmhba35:CH:0 T:2 CN:-1: State of Session passed of "connected to" to "in development".

14:02:12:42.756 cpu3:4119) vmw_psp_fixed: psp_fixedSelectPathToActivateInt: "Unregistered" device has no way to use (APD).

14:02:12:42.770 cpu2:7026) WARNING: iscsi_vmk: iscsivmk_StopConnection: vmhba35:CH:0 T:2 CN:0: iSCSI connection is marked as 'offline'

14:02:12:42.782 cpu3:4119) NMP: nmp_DeviceUpdatePathStates: the PSP has not selected a path to activate for NMP device 'no '.

14:02:12:42.794 cpu2:7026) WARNING: iscsi_vmk: iscsivmk_StopConnection: Sess ISID: 00023 000001 d TARGET: iqn.1986-03.com.sun:02:fa2cdf18-2141-e633-b731-f89f47ddd09f.test0 TPGT: TSIH 1: 0

14:02:12:42.823 cpu2:7026) WARNING: iscsi_vmk: iscsivmk_StopConnection: Conn CID: 0 L: 10.115.153.212:59691 r: 10.115.155.96:3260

In the response, the 'U' bit must not be set. Solaris 10 releases before update 7 seem to have this problem.
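To make the flag check concrete, here is a small sketch that decodes the residual bits of the SCSI Response flags byte from the trace (0x82). The bit positions follow the iSCSI SCSI Response PDU layout (o = 0x10, u = 0x08, O = 0x04, U = 0x02). The consistency rule is an assumption that mirrors the vmkernel warning ("Invalid residual ... residual 0"); it is not taken from the ESX source, and the function names are made up for the example.

```python
from typing import NamedTuple


class ResidualFlags(NamedTuple):
    bidi_overflow: bool   # o bit
    bidi_underflow: bool  # u bit
    overflow: bool        # O bit
    underflow: bool       # U bit


def decode_response_flags(flags: int) -> ResidualFlags:
    """Decode the residual bits of an iSCSI SCSI Response flags byte."""
    return ResidualFlags(
        bidi_overflow=bool(flags & 0x10),
        bidi_underflow=bool(flags & 0x08),
        overflow=bool(flags & 0x04),
        underflow=bool(flags & 0x02),
    )


def residual_is_consistent(flags: int, residual_count: int) -> bool:
    """Assumed initiator-side sanity check, modeled on the vmkernel warning."""
    f = decode_response_flags(flags)
    if f.overflow and f.underflow:
        return False  # O and U cannot both be set
    if (f.overflow or f.underflow) and residual_count == 0:
        return False  # a residual is flagged, but the count says there is none
    return True
```

For the captured response, `decode_response_flags(0x82)` reports the U bit set while ResidualCount is 0x00000000, so `residual_is_consistent(0x82, 0)` is False. That matches the initiator dropping the connection after a Test Unit Ready that transfers no data.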

-

Does anyone know where I can find a best practices guide for VMware iSCSI? They have an NFS best practices guide but not an iSCSI one. I downloaded the VMware iSCSI guide, but I was expecting a best practices guide. If anyone knows where one is available, please send me a link. Thanks in advance.

The reason there are choices is that no single option is best. It depends on your situation, SAN vendor, etc.

http://www.VMware.com/PDF/Perf_Best_Practices_vSphere4.0.PDF

-

Connecting a Dell MD3620i to VMware - best practices

Hello community,

I bought a Dell MD3620i with 2 x 10Gbase-T Ethernet ports on each controller (2 x controllers).

My VMware environment consists of 2 x ESXi hosts (each with 2 x 1Gbase-T ports) and an HP LeftHand (also 1Gbase-T) storage system. The switches I have are Cisco 3750s, which have only 1Gbase-T Ethernet.

I'll replace this HP storage with the Dell storage.

As I have never worked with Dell storage, I need your help in answering my questions:

1. What are the best practices for connecting the VMware hosts to the Dell MD3620i?

2. What is the process for creating a LUN?

3. Can I create multiple LUNs on a single disk group, or is the best practice to create one LUN per group?

4. How do I configure the 10GBase-T iSCSI ports to work on 1 Gbit/s switch ports?

5. Is it best practice to connect the Dell MD3620i directly to the VMware hosts, without a switch?

6. The old iSCSI on the HP storage is on another network. Can I use vMotion to move all the VMs from one iSCSI network to the other, and then change the iSCSI IP addresses on the VMware hosts without interrupting the virtual machines?

7. Can I combine the two iSCSI ports into one 2 Gbps interface to connect to the switch? I use two switches, so I want to connect each controller to its own switch, limiting their interfaces to 2 Gbps. My question is: would the storage fail over to the other controller if the Ethernet link to the switch goes down (for example, when a single switch reboots)?

Thanks in advance!

TCP/IP basics: a computer cannot connect to 2 different (isolated) networks (e.g. 2 direct-attach cables between the server and SAN iSCSI ports) that share the same subnet.

Data corruption is very unlikely if you share the same VLAN for iSCSI; however, performance and overall reliability would be affected.

With an MD3620i, here are some configuration scenarios using the factory default subnets (for the direct-attach configuration I have added 4 additional subnets):

Single switch (not recommended because the switch becomes your single point of failure):

Controller 0:

iSCSI port 0: 192.168.130.101

iSCSI port 1: 192.168.131.101

iSCSI port 2: 192.168.132.101

iSCSI port 4: 192.168.133.101

Controller 1:

iSCSI port 0: 192.168.130.102

iSCSI port 1: 192.168.131.102

iSCSI port 2: 192.168.132.102

iSCSI port 4: 192.168.133.102

Server 1:

iSCSI NIC 0: 192.168.130.110

iSCSI NIC 1: 192.168.131.110

iSCSI NIC 2: 192.168.132.110

iSCSI NIC 3: 192.168.133.110

Server 2:

All ports plug into the 1 switch (obviously).

If you only want to use 2 NICs per server for iSCSI, have server 1 use subnets 130 and 131 and server 2 use 132 and 133; server 3 then uses 130 and 131 again. This distributes the I/O load across the iSCSI ports on the SAN.
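The rotation described above can be sketched in a few lines. This is an illustration only: the /24 netmask and the host octet for servers other than server 1 (109 plus the server number, so that server 1 gets the .110 used above) are assumptions, and the function name is made up.

```python
import ipaddress

# Subnet pairs (third octet) from the factory default MD3620i layout above.
SUBNET_PAIRS = [(130, 131), (132, 133)]


def iscsi_nics_for_server(server_number):
    """Return the two iSCSI NIC addresses for a server, rotating the subnet pairs.

    Assumptions: /24 netmask; host octet is 109 + server_number (server 1 = .110).
    """
    pair = SUBNET_PAIRS[(server_number - 1) % len(SUBNET_PAIRS)]
    host = 109 + server_number
    return [ipaddress.ip_interface(f"192.168.{third}.{host}/24") for third in pair]
```

Under these assumptions, `iscsi_nics_for_server(1)` gives 192.168.130.110 and 192.168.131.110, server 2 lands on subnets 132 and 133, and server 3 wraps around to 130 and 131. Each NIC's `.network` can be compared against a controller port address to confirm they share a subnet.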

Two switches (one VLAN for all the iSCSI ports on each switch):

NOTE: Do NOT link the switches together. This keeps problems that occur on one switch from affecting the other switch.

Controller 0:

iSCSI port 0: 192.168.130.101 -> to switch 1

iSCSI port 1: 192.168.131.101 -> to switch 2

iSCSI port 2: 192.168.132.101 -> to switch 1

iSCSI port 4: 192.168.133.101 -> to switch 2

Controller 1:

iSCSI port 0: 192.168.130.102 -> to switch 1

iSCSI port 1: 192.168.131.102 -> to switch 2

iSCSI port 2: 192.168.132.102 -> to switch 1

iSCSI port 4: 192.168.133.102 -> to switch 2

Server 1:

iSCSI NIC 0: 192.168.130.110 -> to switch 1

iSCSI NIC 1: 192.168.131.110 -> to switch 2

iSCSI NIC 2: 192.168.132.110 -> to switch 1

iSCSI NIC 3: 192.168.133.110 -> to switch 2

Server 2:

The same note about using only 2 NICs per server for iSCSI applies. In this configuration each server always uses both switches, so a switch failure should not take down your server's iSCSI connectivity.

Quad switches (or 2 VLANs on each of the 2 switches above):

Controller 0:

iSCSI port 0: 192.168.130.101 -> to switch 1

iSCSI port 1: 192.168.131.101 -> to switch 2

iSCSI port 2: 192.168.132.101 -> to switch 3

iSCSI port 4: 192.168.133.101 -> to switch 4

Controller 1:

iSCSI port 0: 192.168.130.102 -> to switch 1

iSCSI port 1: 192.168.131.102 -> to switch 2

iSCSI port 2: 192.168.132.102 -> to switch 3

iSCSI port 4: 192.168.133.102 -> to switch 4

Server 1:

iSCSI NIC 0: 192.168.130.110 -> to switch 1

iSCSI NIC 1: 192.168.131.110 -> to switch 2

iSCSI NIC 2: 192.168.132.110 -> to switch 3

iSCSI NIC 3: 192.168.133.110 -> to switch 4

Server 2:

In this case, when using 2 NICs per server, the first server uses the first 2 switches and the second server uses the second pair of switches.

Direct attach:

Controller 0:

iSCSI port 0: 192.168.130.101 -> to server iSCSI NIC 1 (example IP 192.168.130.110)

iSCSI port 1: 192.168.131.101 -> to server iSCSI NIC 2 (example IP 192.168.131.110)

iSCSI port 2: 192.168.132.101 -> to server iSCSI NIC 3 (example IP 192.168.132.110)

iSCSI port 4: 192.168.133.101 -> to server iSCSI NIC 4 (example IP 192.168.133.110)

Controller 1:

iSCSI port 0: 192.168.134.102 -> to server iSCSI NIC 5 (example IP 192.168.134.110)

iSCSI port 1: 192.168.135.102 -> to server iSCSI NIC 6 (example IP 192.168.135.110)

iSCSI port 2: 192.168.136.102 -> to server iSCSI NIC 7 (example IP 192.168.136.110)

iSCSI port 4: 192.168.137.102 -> to server iSCSI NIC 8 (example IP 192.168.137.110)

I just left the 4 subnets on controller 1 on the '.102' IPs to make future changes easier.

-

Can we use a USB HDD for the iSCSI target?

Hello Manu,

1. Which Windows operating system are you using on the computer?

2. Are you connected to a domain?

Please provide more information so that we can help you better.

Suggestions for a question on the help forums

-

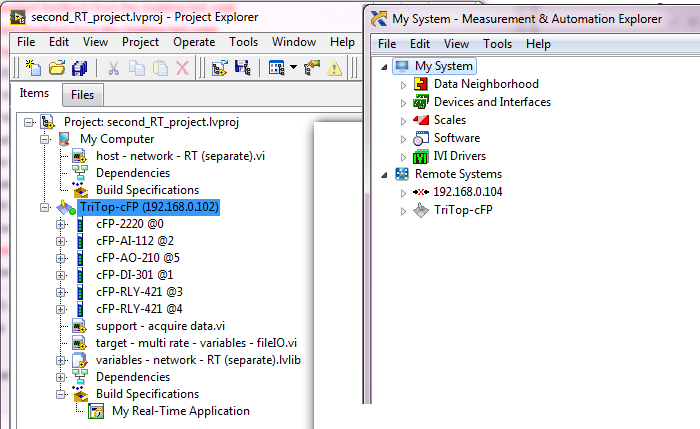

LabVIEW Real-Time hardware Ethernet communication best practices

Hello

I just started to learn LabVIEW Real-Time, and until I get a new cRIO I am just playing with an old PSC-2220.

Everything works. I am reading the nice tutorials about RT and deploying/running example applications on this target.

However, I don't know what the best practice is for handling the IP address of this device. For easy installation, after a device reset (and installing the new RT runtimes, etc.) I just set the hardware to obtain a dynamic IP address from my router (DHCP). My laptop connects to the same router via Wi-Fi.

However, after a few resets the target gets a new IP (192.168.0.102; the previous IP was ...104), so I have to manually change the IP address in my project. Is it possible for LabVIEW to auto-detect the target in my project? In addition, it seems that MAX retains the old information and creates a new entry for the same target, so I guess that if this continues, MAX is going to fill up?

See screenshots below.

As a solution, I'll try to use a static IP for the target, so it always uses the same IP address.

What is the common procedure to avoid this kind of problem? Just using a static IP? Or am I missing something else here?

Thank you!

I always just use static IP addresses. It avoids all kinds of issues, especially if you have several systems on the same network.

-

Kickstart install: iSCSI disk not found

I can't get my kickstart script to install to the iSCSI disk.

The line

install --firstdisk=remote reports that no suitable disks were found

I also tried

install --disk=/vmfs/devices/disks/eui.XXXXXXXXXXXXXXXXXXXXXXXXX

install --disk=mpx.vmhba32:C0:T0:L0

Neither of them sees the iSCSI drive.

But if I run the setup manually, I do see the iSCSI drive.

Any ideas?

I think I figured it out. In Cisco UCS, I had to change my boot policy and add iSCSI as a secondary target.

The policy must be

1. LAN

2. iSCSI targets

The kickstart file can then still use install --firstdisk

-

I have 300 GB local disks on some of my hosts that are connected to our SAN. I would like to take advantage of this local space for templates and ISO files. I intend to install the iSCSI Target Framework (tgt). My question is...

Should the CentOS VM that runs our iSCSI target just put its disks on the local VMFS, or should I try to present the local disk as an RDM (Raw Device Mapping)?

Since the machine will use local disks, vMotion and the like are out of the question anyway, so I think this could be a good situation for using an RDM.

Hello.

Should the CentOS VM that runs our iSCSI target just put its disks on the local VMFS, or should I try to present the local disk as an RDM (Raw Device Mapping)?

I would use VMFS for this. You will have more flexibility with VMFS, and the local RDM approach seems to add unnecessary complexity.

Good luck!

-

Removed VMFS3 iSCSI target volume - am I screwed?

Hello. I was trying to move virtual machines between servers and the SAN. On one ESXi 3.5 server, I had a VMFS volume on an iSCSI target on a basic SAN device.

The volume was listed on the server's Configuration/Storage tab. I removed it, thinking that would just delete the reference to the volume from the host's point of view, but it seems to have deleted the volume on the iSCSI target.

Is this correct - is my volume gone? Am I screwed? Is there any way to recover the volume?

Any help or advice would be really appreciated. Thanks in advance.

You should thank Edward Haletky, not me.

In the VMware communities, his nickname is Texiwill.

André

-

iSCSI: new target LUNs treated as additional paths to existing target LUNs

Hello

I have an ESXi 5.5.0 host (build 1331820) that I am trying to present some new iSCSI LUNs to, but I have a problem with it that I am hoping someone can help me solve.

The back-end iSCSI server is running CentOS 6.5 with tgtd providing the iSCSI targets. There are four iSCSI targets in production, with another two newly created for use here. When I rescan the iSCSI adapter after adding the two new targets, I see no more than 4 disks in the Details pane of the iSCSI adapter under Configuration -> Storage Adapters.

In addition, two of the LUNs stop working, and inspection shows that the new iSCSI targets are seen as separate paths to the two broken LUNs. That is:

Existing LUNs:

iqn.2014-06.storage0.jonheese.local:datastore0

iqn.2014-06.storage1.jonheese.local:datastore1

iqn.2014-06.storage2.jonheese.local:datastore2

iqn.2014-06.storage3.jonheese.local:datastore3

New LUNs:

iqn.2014-06.storage4.jonheese.local:datastore4

iqn.2014-06.storage5.jonheese.local:datastore5

In the "Manage Paths" dialog for each of the discovered disks, I see that "datastore4" shows up as an additional path to "datastore0" and "datastore5" shows up as a path to "datastore1", even though the target names are clearly different.

So can someone tell me why the vSphere iSCSI client is treating separate iSCSI target LUNs as multiple paths to the same target? I compared the tgtd configuration between the 4 original LUNs and the 2 new LUNs, and everything seems OK. They are all attached to different back-end disks (not that vSphere should know/care about this), and I wrote zeros to the new LUNs' back-end disks to ensure that there is nothing odd on the disks confusing the iSCSI client.

I can post any logs/config/information required. Thanks in advance.

Kind regards

Jon Heese

For anyone who runs into this same situation, my supposition above proved to be correct. But in addition to renumbering the scsi_id of each of the LUNs, I also had to renumber the controller_tid field so that the controllers on the second node would present unique IDs. Here is an example of the config stanza I used for each LUN:

# LUN backing store device

backing-store /dev/drbd5

# SCSI identifier for the LUN

scsi_id IET 00050001

# SCSI controller identifier for the LUN

controller_tid 5

# iSCSI initiator IP address(es) allowed to connect

initiator-address 192.168.24.0/24

It was a tgtd configuration problem after all! Thanks to all for the questions that led me to the right answer!
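The symptom fits an initiator that groups paths by the identifier the LUN reports rather than by target name, so two LUNs that report the same scsi_id look like one device with two paths. A minimal sketch for spotting such collisions before rescanning is shown here. The (target, scsi_id) pairs in the usage note are hypothetical examples, not the real values from this setup, and the function name is made up.

```python
from collections import defaultdict


def find_duplicate_scsi_ids(luns):
    """Return {scsi_id: [target names]} for every scsi_id used by more than one LUN.

    luns is an iterable of (target_name, scsi_id) pairs collected from the
    tgtd configuration by whatever means is convenient.
    """
    targets_by_id = defaultdict(list)
    for target_name, scsi_id in luns:
        targets_by_id[scsi_id].append(target_name)
    return {sid: names for sid, names in targets_by_id.items() if len(names) > 1}
```

For example, passing `[("datastore0", "IET 00010001"), ("datastore4", "IET 00010001"), ("datastore1", "IET 00020001")]` returns a single entry that groups datastore0 and datastore4, the same pairing that showed up in the Manage Paths dialog. The same check can be run on controller_tid values.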

Kind regards

Jon Heese
