Temperature compensation for strain gauges

I'm trying to compensate for the effects of temperature on a strain gauge mounted on a carbon fiber sample. The sample will only be loaded uniaxially. Temperature is a major concern here, because an electrical current will be run through the sample for measurement purposes, which should also heat it. I read the document 'Measuring Strain with Strain Gages' on the NI website (http://www.ni.com/white-paper/3642/en). It mentions a dummy gauge, but it says only that this second gauge is placed perpendicular to the axis of the applied force; it does not explain how to connect it to the strain gauge module, in my case the NI 9235, to use it for temperature compensation. My first thought was to connect each gauge to the module as its own separate channel, using the quarter-bridge setup in LabVIEW, calibrate both gauges while no external physical load or temperature effects are applied, and then, after the test, subtract the dummy gauge readings from the active gauge readings. But then I started wondering: is there a better way to do this? Can I use the half-bridge configuration, or some other configuration with the 9235, to automatically combine the readings of the two gauges during the test?

I'd really appreciate any advice anyone can give me on this. I don't want to build my own external Wheatstone bridge (other than the one built into the NI 9235), and I would like to make these measurements as accurate as possible. I have also considered self-temperature-compensated gauges, but I don't think they would be applicable in this case because of the complexity of the material, which is a composite.

Hello WyoEng,

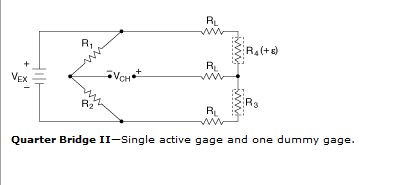

The temperature compensation you want to use is referred to as a quarter-bridge II configuration (see image). This configuration is very similar to a half bridge, except that the second element (R3) is inactive: it is a dummy gauge placed transverse to the direction of the load, so that it sees no strain, and at the same location as the active gauge (R4) to account for thermal effects.

The NI 9235 supports only the quarter-bridge I configuration for strain measurements. You will get an error if you try to use this module with any strain gauge configuration other than quarter-bridge I. Thus, the approach you described, using two channels to read the active gauge and the dummy gauge, is the best option for compensating thermal effects on your strain gauge with the NI 9235.

Another option would be to look at the NI 9237, which supports the quarter-bridge II configuration.
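The two-channel approach described above can be sketched in a few lines of Python. This is only a minimal illustration, assuming both channels were zeroed before the test, were sampled at the same instants, and that the dummy gauge sees only thermal apparent strain; the function name and the sample values are hypothetical.

```python
def compensate_strain(active, dummy):
    """Subtract the dummy-gauge reading from the active-gauge reading, sample by sample."""
    if len(active) != len(dummy):
        raise ValueError("active and dummy records must have the same length")
    return [a - d for a, d in zip(active, dummy)]


# Hypothetical readings in microstrain (not measured data).
active_ue = [0.0, 105.0, 212.0, 330.0]  # mechanical strain plus thermal apparent strain
dummy_ue = [0.0, 5.0, 12.0, 30.0]       # thermal apparent strain only

mechanical_ue = compensate_strain(active_ue, dummy_ue)
print(mechanical_ue)
```

The subtraction is only as good as the thermal match between the two gauges, so the dummy gauge should sit as close to the active gauge as possible.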

Best regards

Izzy O.

Technical sales engineer

National Instruments

NI.com/support

Similar Questions

-

Help with the Convert Strain Gauge Reading VI

I'm having trouble wiring the Convert Strain Gauge Reading VI. Are there any examples showing how to connect this VI?

Thank you

HS

Have you checked the help file on this topic?

http://zone.NI.com/reference/en-XX/help/371361G-01/lvinstio/conv_strain_read/

There seem to be some stock examples you can use, but the help files for these VIs can also be VERY useful.

-

Temperature readings for a ThinkPad T510 in use

Hi all

I have a question about the standard/acceptable operating temperature range for ThinkPad T510 computers. In my current setup the T510 is connected to an external monitor, speakers, keyboard and mouse, so it is basically a desktop computer that uses the innards of the laptop as the "heart" of the system. My Power Manager has the CPU set to adaptive. The temperature readings I get for the processor and the motherboard from Piriform's Speccy program average 60 to 61 degrees C. I wonder whether this is within the normal operating temperature for the laptop and whether it is acceptable. If this temperature is not okay, why is it so high? Is it because I use external peripherals? Also, I don't even put a heavy workload on the laptop; I use it mostly for web browsing, listening to music and working on documents and spreadsheets, so basically office work.

And finally, would the temperature problem be resolved if I got a W530 laptop in the future, since those laptops are more "powerful"? Or am I wrong and the temperature is normal?

Thanks in advance for answers

unit88888888 wrote:

The temperature readings I get for the processor and the motherboard from Piriform's Speccy program average 60 to 61 degrees C. I wonder whether this is within the normal operating temperature for the laptop and whether it is acceptable.

Yes, ~60 °C is quite normal. I would be concerned if temperature readings consistently exceeded 80 °C or if the fan was running constantly. Otherwise you will see the temperature fluctuate as processor and graphics activity vary.

-

Hello

I hope I posted in the right place. I'm about ready to buy a cDAQ-9172 chassis and either the 9211 temperature module or the 9219 universal module. I have not made up my mind yet. I assume that either of them would cover the temperature range I need. I need to go from -40 °C to +200 °C and I will use a type K thermocouple. Does anyone know what the upper and lower limits are with this configuration?

Thank you

Mike

The 9211 data sheet gives the voltage measurement range as ±80 mV.

A quick check of the Omega web page http://www.omega.com/temperature/z/pdf/z204-206.pdf indicates that this combination will easily cover your operating range.
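As a rough sanity check of that conclusion, the sketch below estimates the thermocouple voltage with a constant sensitivity of about 41 µV/°C, which is a common approximation for type K and not a substitute for the reference tables. The function names, the 25 °C cold-junction temperature and the linear model are assumptions made for illustration.

```python
SEEBECK_K_MV_PER_C = 0.041  # approximate type K sensitivity, about 41 uV per degree C


def approx_k_type_mv(temp_c, cold_junction_c=0.0):
    """Rough linear estimate of type K thermocouple output in millivolts."""
    return SEEBECK_K_MV_PER_C * (temp_c - cold_junction_c)


def fits_input_range(temp_c, full_scale_mv=80.0, cold_junction_c=25.0):
    """True if the estimated voltage lies within +/- full_scale_mv."""
    return abs(approx_k_type_mv(temp_c, cold_junction_c)) <= full_scale_mv


for temp in (-40.0, 200.0):
    mv = approx_k_type_mv(temp, cold_junction_c=25.0)
    print(f"{temp:7.1f} C -> about {mv:.2f} mV, fits: {fits_input_range(temp)}")
```

Both ends of the -40 °C to +200 °C span come out at only a few millivolts, far inside a ±80 mV input range.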

-

Temperature for the host hardware status

Hello to all,

My question, as per the subject line, is how to set the values in the alarm definitions for the temperature check.

Where do I need to set the values?

I can't work out what the thresholds are at which the notification is or is not triggered.

Thanks to all

System health is managed automatically based on the settings of the relevant CIM.

When something is out of range, the alarm is triggered.

-

Beginner bench setup for getting started with analog measurements

Hello everyone

I'm looking to set up a system to help me make some simple measurements. This setup will be used only by me at my desk/workbench to help me better understand some machine parts that I work on, as well as to troubleshoot various other hardware. The common measurements I want to be able to take are: stress/strain, vibration, temperature, force, torque and displacement.

At this stage, this project is on a small scale. I'm not running anything from a PLC or using the data to operate a plant. This setup will involve just myself, my computer and a piece of equipment that I need to test.

I currently have an NI 6003 available, which I would prefer to use, but if I need a more capable DAQ I can get one.

My main question is whether I can get adequate results with the 6003 DAQ hardware if I condition the signals well enough, or whether I need a DAQ with higher resolution and an adequate signal conditioner. My concern is whether I will be able to properly condition the signals from piezoelectric sensors for vibration measurement. Is there a way to produce viable results, or will I have to move to a more capable DAQ? I would like to be able to do frequency analysis on motor shafts that normally run at about 60 Hz. It would be nice if I had the bandwidth to observe signals of up to 150-200 Hz at least. In my case I don't need extremely accurate results if I can get by with a more affordable setup. I think a 90% confidence level will be fine for now.

I'm still quite new to data acquisition and signal conditioning. My purpose behind this is mainly to learn how to take the right steps correctly, more than to worry about accurate results.

If someone could give me their thoughts about this I would be very grateful.

Thank you kindly,

James

James,

I think you need to separate the signal conditioning from the data acquisition. I suggest you look at the Analog Devices 7B series and some of its imitators, for example Acromag.

http://www.analog.com/en/products/landing-pages/001/7B/7B-Series-Overview.html

Essentially, you choose a specialized module for each measurement you want to make. The modules are isolated, which at least reduces signal interference problems. Sensor excitation, e.g. for strain gauges, is provided on certain modules. You will need to study the frequency response to make sure you can get 200 Hz on some modules.

The modules plug into a backplane that can then be connected to any data acquisition system you like.

Alternatively, the versatile NI 9219 may better meet your needs.

-

Strain gauge calibration with NI 9237 and NI 9944

Gentlemen,

I have an NI cDAQ-9172 with an NI 9237 and the NI 9944 quarter-bridge accessory. In practice, I'm working on strain gauge measurements using a 120 ohm strain gauge connected to the NI 9944, with the half-bridge completion happening inside the 9237. I have a lingering doubt about how calibration works for the strain gauges. The manual speaks of a shunt resistor which, in the case of the NI 9944, is already in the system. The manual says that I don't need an external shunt resistor. That is clear.

My question is this:

The calibration menu requires a resistance value that I don't know, so I'm leaving the value proposed by the NI menu. The strain gauges drift off zero easily, so I always have to recalibrate the channels on the NI 9237. Is this normal? Can an excitation voltage of 2.5 V (applied to a 2.0 V strain gauge) cause a continuous drift of the measurement?

Strain gauges are: EA-06-125BT-120

Hi cgenco,

Because the NI 9237 with the 9944 uses an internal resistor for shunt calibration, you need not worry about the value of the shunt resistance. Take a look at the following article, which specifies how the connections are made. The calibration article will show you the basics of how to use it.

Also, since this is a ratiometric measurement, the 2.5 V excitation voltage shouldn't matter as long as your gauge can handle it.
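To illustrate what "ratiometric" means here, the sketch below uses the ideal quarter-bridge relation between strain and the ratio of bridge output to excitation voltage. The sign convention, the gauge factor of 2.0 and the function names are assumptions for illustration, and the model ignores lead resistance and self-heating.

```python
def bridge_output_v(strain, gauge_factor, excitation_v):
    """Ideal quarter-bridge output voltage for a given strain (one common sign convention)."""
    ratio = -gauge_factor * strain / (4.0 + 2.0 * gauge_factor * strain)
    return ratio * excitation_v


def strain_from_ratio(v_out, excitation_v, gauge_factor):
    """Recover strain from the ratio v_out / excitation_v."""
    vr = v_out / excitation_v
    return -4.0 * vr / (gauge_factor * (1.0 + 2.0 * vr))


# The same 500 microstrain at two excitation levels gives different output
# voltages, but the computed strain is the same because only the ratio is used.
for vex in (2.0, 2.5):
    v_out = bridge_output_v(500e-6, 2.0, vex)
    print(vex, v_out, strain_from_ratio(v_out, vex, 2.0))
```

Excitation still matters indirectly: a higher voltage dissipates more power in the gauge, and self-heating is one possible source of drift.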

-

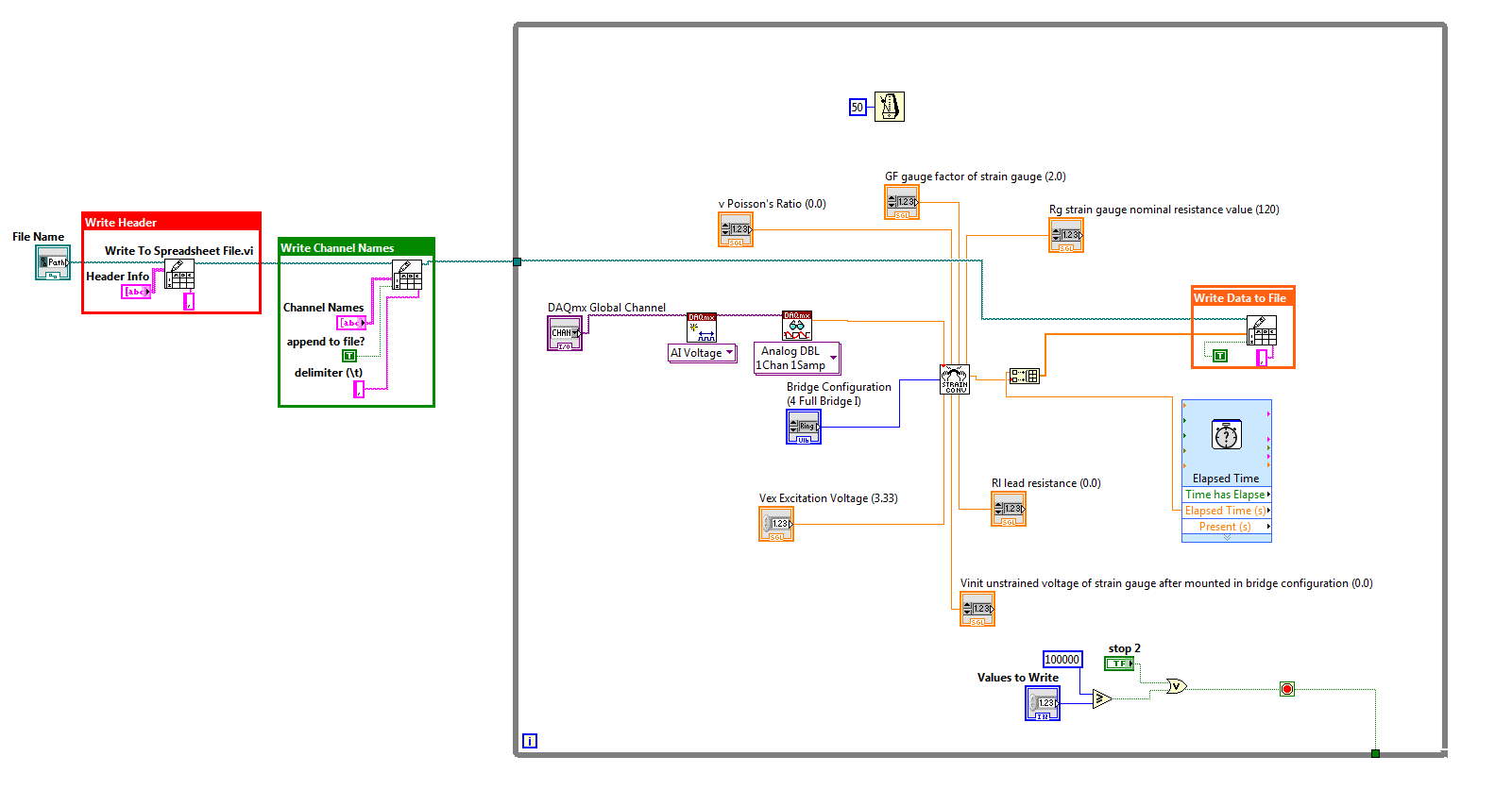

Strain gauge: calibrate, trigger and write to file

Hello

I'm new to LabVIEW, so please bear with me. My title says what I'm trying to do. I want to capture data from strain gauges using a trigger and write the data to a file. However, I also want to be able to calibrate the strain gauges.

My attached program reads the data correctly with the trigger and stores it in a file, but it does not calibrate properly. If I run the program several times and press the "calibrate" button each time, the gauges finally get calibrated after two or three iterations.

Is it possible to change the program so that the gauges are calibrated and then data can be triggered and written to the file?

I'm using LabVIEW 8.6 and NI-DAQmx 8.8.

A screenshot of the front panel is also attached.

So I figured it out, but I'm not completely sure why it works. I removed the conditional loop around the calibration block and the program works beautifully. All I have to do is wait about 20 seconds before I hit the trigger so that the calibration can complete, and my gauges will be calibrated when I pull the trigger.

This program works very well for this application. However, it is difficult to modify because all the parameters are constants on the block diagram.

-

Hello

Currently, I'm trying to use the Convert Strain Gauge Reading VI to get strain readings from a set of strain gauges in a full-bridge configuration mounted at a 45 degree angle on a hollow cylinder. They are set at 45 degrees because we are trying to get the tensile and compressive strains that result from applying a twist, or torsional moment, to the hollow cylinder. Ultimately, we want to convert these strain readings to torque. Our problem is that the full-bridge options for converting strain gauge readings appear to apply only to 0 or 90 degree configurations. My question is how I can take my current VI and set it up to take strain readings from a 45 degree alignment. Suggestions on how to add a torque measurement to this VI would also be appreciated. We have scoured the site, but we are very new to LabVIEW.

Thank you!

That is not a very good example they show. Full-bridge I will also read torsional strain. Full-bridge I is for any configuration where you have 2 gauges in tension and 2 gauges in compression and you expect roughly equal magnitudes. With a torsion bridge, the only thing you need to do is get the wiring right and pay attention to the gauge numbering. Just think through mentally which gauges are in tension and which are in compression as you twist the rod or tube.

If you get it wrong, you will know pretty much immediately, because you will see very little change in the output when you twist the shaft: the tension and compression gauges will end up cancelling each other in the bridge rather than adding their effects. To fix it, all you need to do is swap a few wires to rewire the bridge correctly.

Good luck with your project.
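For the strain-to-torque conversion asked about in the question, a minimal sketch follows. It assumes pure torsion of a hollow circular shaft made of a linear elastic, isotropic material, and that the bridge reading has already been converted to the strain magnitude seen by one 45 degree gauge; the function name and all numeric values are hypothetical.

```python
import math


def torque_from_strain_45(strain_45, shear_modulus_pa, outer_radius_m, inner_radius_m):
    """Torque on a hollow circular shaft from the strain measured at 45 degrees to its axis."""
    shear_strain = 2.0 * strain_45                    # gamma = 2 * eps_45 in pure torsion
    shear_stress = shear_modulus_pa * shear_strain    # tau = G * gamma at the outer surface
    polar_moment = math.pi / 2.0 * (outer_radius_m**4 - inner_radius_m**4)
    return shear_stress * polar_moment / outer_radius_m  # T = tau * J / r_outer


# Hypothetical steel tube: G = 79 GPa, 25 mm outer radius, 20 mm inner radius.
torque_nm = torque_from_strain_45(100e-6, 79e9, 0.025, 0.020)
print(f"{torque_nm:.1f} N*m")
```

Because the relation is linear, a quick plausibility check is to apply a known torque with a lever arm and a weight and compare it with the computed value.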

-

Negative results with strain gauges

When I run my VI the results are always negative. I use the NI 9237 with the NI 9945. I wired my setup as a quarter bridge. There are three wires from the strain gauge. I went over the wiring and I think it matches the NI specifications. Is there something in MAX that I should be looking at? I'm not sure why the values are negative.

Thank you

Harry Stone

Hi Harry,

There are a few things I want to clarify:

- Tensile strain is positive and compressive strain is negative, as described at a high level in the tutorial below.

Measuring Strain with Strain Gages

http://zone.NI.com/DevZone/CDA/tut/p/ID/3642

As compressive strain is negative, you would see negative results in MAX if your strain gauge experiences any compression. Please keep in mind that an offset can be associated with each transducer, which is why some sensors come with a calibration certificate. It is produced by the manufacturer and supplied with the sensor, because it is specific to that sensor. The sensor goes through a testing process to determine its actual response compared to the ideal. In this case, a table scale can be created to include these values.

How do I create a custom table scale in Measurement & Automation Explorer (MAX)?

http://digital.NI.com/public.nsf/allkb/3F6558112FD2C776862575B5004F7F87?OpenDocument

Not all sensor manufacturers provide a calibration certificate. Alternatively, you can create your own table by applying known quantities of pressure, force, etc. to the sensor and mapping them to the corresponding voltages, or you can create a linear scale in MAX and set the intercept (b) to the value needed to remove any offset.

You are using the NI 9237, which supports offset nulling. An offset null is performed with the sensor mounted and no load placed on it. Essentially, a voltage measurement is taken and this value is subtracted from each subsequent measurement, thereby removing the initial offset. This takes the place of creating a linear scale and doing so manually.
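The idea behind offset nulling can be sketched in software as follows. This is only an illustration of the arithmetic, not the NI-DAQmx implementation; the function name and the sample values (in arbitrary units) are hypothetical.

```python
def null_offset(readings, unloaded_readings):
    """Subtract the mean of the unloaded readings from every later reading."""
    offset = sum(unloaded_readings) / len(unloaded_readings)
    return [r - offset for r in readings]


# Hypothetical data: the unloaded sensor reads about -3 units instead of 0.
unloaded = [-2.0, -4.0]
measured = [-3.0, 7.0]
print(null_offset(measured, unloaded))
```

Averaging several unloaded samples rather than taking a single one makes the estimated offset less sensitive to noise.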

The two links below show how to use a custom scale created in MAX in LabVIEW, as well as how to code the custom scale in LabVIEW to remove the dependency on MAX.

DAQmx acquisition with custom scale

http://decibel.NI.com/content/docs/doc-3706

Create a custom linear scale for each AI channel in LabVIEW using DAQmx

http://decibel.NI.com/content/docs/doc-11136

I recommend using a DAQmx task. Enter the parameters for your strain gauge that are needed to perform the strain conversions. There is a strain measurement example in the LabVIEW Example Finder (open LabVIEW, then Help > Find Examples) designed specifically for the NI 9237 that incorporates offset nulling and shunt calibration. If you do not have the external wires connected for shunt calibration, as cited in this document, you will receive an error. Here is an explanation of shunt calibration from the NI-DAQmx Help (Start > All Programs > National Instruments > NI-DAQ > NI-DAQmx Help) to help explain this feature.

Shunt calibration (gain adjustment)

You can verify the output of a bridge-based measurement system by comparing the measured bridge output with a calculated value if the physical load on the sensor is known. NI-DAQmx can then use the difference (if any) between the calculated and measured values as a gain adjustment factor for each measurement. You can simulate applying a load to the bridge by connecting a large resistor in parallel with the bridge. This resistor, known as a shunt resistor, offsets the zero voltage of the bridge. Because the value of the shunt resistor is known, you can calculate the physical load corresponding to the voltage drop of the resistor.

Use the DAQ Assistant or the DAQmx Perform Shunt Calibration VI/function to perform a shunt calibration, which sets the gain adjustment for a virtual channel. NI-DAQmx then uses this gain adjustment when scaling readings from the bridge. Some National Instruments products have internal shunt resistors.
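The calculation described above can be sketched as follows, assuming the shunt resistor is connected directly across the gauge and the usual linear relation between resistance change and strain. The function names and resistor values are hypothetical, and NI-DAQmx may shunt a different bridge arm and apply its own corrections.

```python
def simulated_strain(gauge_ohms, shunt_ohms, gauge_factor):
    """Strain simulated by placing a shunt resistor in parallel with the gauge."""
    return -gauge_ohms / (gauge_factor * (gauge_ohms + shunt_ohms))


def gain_adjust(expected_strain, measured_strain):
    """Factor that scales measured readings onto the expected value."""
    return expected_strain / measured_strain


# Hypothetical values: 120 ohm gauge, 119.88 kohm shunt, gauge factor 2.0.
expected = simulated_strain(120.0, 119880.0, 2.0)
measured = -490e-6  # hypothetical reading with the shunt engaged
print(expected, gain_adjust(expected, measured))
```

A gain adjustment far from 1 usually points to a wiring or configuration problem, such as a wrong gauge factor or significant lead resistance, rather than something to be scaled away.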

This may seem like information overload, but I wanted to give you a detailed explanation for your understanding, in addition to immediate answers. To recap, I recommend that you use the 9237 strain example and use offset nulling. Negative values are expected for compression and positive values for tension. The practical guide below gives an excellent overview of strain gauges and also includes a video.

Strain measurements with strain gauges: practical guide

http://zone.NI.com/DevZone/CDA/tut/p/ID/7130

Hope this helps!

-

Re: Room Temperature for Satellite A100-999

Hi all

I have a Toshiba Satellite A100-999 (a lil old :/) with XP.

I was wondering what are the temperature ranges at low, medium or high performance for the motherboard and CPU.

At the moment, the approximate temperatures I have are (Celsius):

Motherboard: 60 +/-

CPU: 65 +/-

Under high load, it reaches 90 - 95 +/-. And if I'm right, the CPU's temperature protection kicks in automatically at that point, right?

As I'm not an expert in the field, can someone guide me a bit?

For now, to read temperatures I'm using the software Everest AIDA64. (I guess the temperatures are not 100% reliable, but they give me an idea.)

If there are ways to cool the laptop (I know cleaning is one of them, of course) I would be grateful to receive some information.

Thanks in advance

Regards

> I was wondering what are the temperature ranges at low, medium or high performance for the motherboard and CPU.

It is not easy to say, dude. The temperature always varies and depends on how the laptop is used.

On the Intel page you will find details about your CPU, including the temperature limit for the CPU. It's mostly around 100 °C.

However, if the temperature gets too high, the laptop will shut down automatically to protect the hardware from damage. The laptop is secured by this protection.

By the way, the temperature can rise higher if the cooling modules and grilles are clogged with dust. So it's advisable to clean the grilles and fans with a compressed air spray from time to time.

-

Temperature of the GPU on Satellite P100-102

Does anyone know the normal temperature values for the NVIDIA 7600 Go series GPU? After the motherboard was replaced by the service center, the GPU reaches 82 degrees Celsius (180 deg F) without running any 3D application, and only the main cooler blows. Under 3D load it reached values around 100 degrees Celsius.

The values were obtained with the Everest program!

Thank you

Jan

Hello

Have you noticed the same temperature before replacing the motherboard?

AFAIK the P100 has its own cooler for the graphics card.

Two heat sinks are placed on the board: one for the CPU and one for the GPU.

It seems that the GPU heatsink is not working. Why? Maybe the technician didn't connect it properly; I don't know.

But try to contact the service guys again. I think they should check it again before the GPU dies.

-

Temperature monitor for Alienware 15R2?

I'm looking for a temperature monitor for the Alienware 15R2... Has anyone tried one?

I tried CPUID HWMonitor, located here. It seems to work for me on the very rare occasions when I use it.

-

Average temp for the m1264n processor?

I just bought a used HP Media Center m1264n desktop PC.

I would like to know what average temperature I should expect for the CPU.

I rebooted, pressed F1 to enter the "BIOS Setup Utility", selected "Advanced", then selected "Hardware Monitor", which showed the following:

CPU temperature: 58 °C / 136 °F

I installed several different monitoring programs:

CPUID

SpeedFan

Core Temp

Core Temp says:

This Intel processor is not supported.

CPUID and SpeedFan both show the same temperature as in Setup/Advanced/Hardware Monitor.

My question is: what is the acceptable operating range (high and low) for the CPU temperature?

Can someone tell me?

Thank you

According to this page: http://www.cpu-world.com/CPUs/Pentium_4/Intel-Pentium%204%20530-530J%203.0%20GHz%20-%20JM80547PG0801M%20-%20HH80547PG0801M%20%28BX80547PG3000E%29.html

The CPU's maximum temperature is 67 °C, which is typical. Anything less than 60 °C should be OK.

Minimum/maximum temperature (°C): 5 - 67.7

-

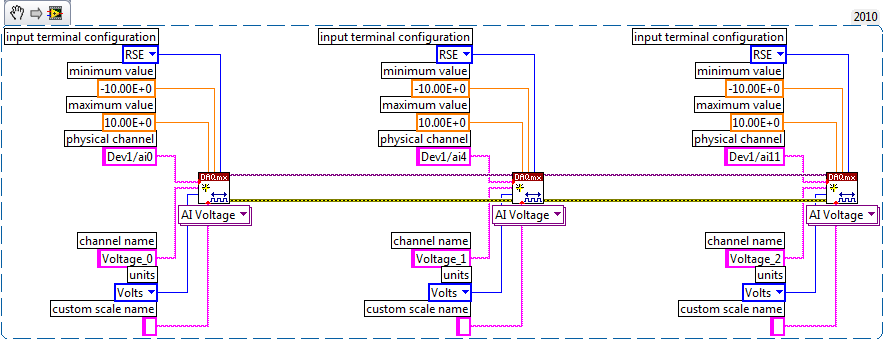

Measuring the strain gauge excitation voltage

I have connected my 9237 to a 9945. I use a 350 ohm strain gauge. I have the voltage set to 2.5 V in MAX. Is there a way to measure it physically to be sure that it is 2.5 V? Also, in my VI I use a DAQmx Create Channel function. I want to add another channel to it but can't see how to do it.

Thank you

HS

Hi Harry, this is Paul with Applications Engineering at NI.

My first question is: why do you want to physically measure the voltage?

If you are wondering how much that voltage may vary, it is limited by the 150 mW maximum capacity of your device, as explained here: http://digital.ni.com/public.nsf/allkb/7CBC67482CC9FB318625758C0048FF73?OpenDocument

If you still want to measure it externally, you have a few options. You can use other DAQ hardware to measure the voltage, or you can use another external device, like a digital multimeter.

If you want to see in your LabVIEW code the excitation that is actually being supplied, you can use the DAQmx property node for the actual excitation value.

As for reading multiple channels, there are two ways you can go about it. If your channels are sequential and all have the same settings, you can change your physical channel name from something like 'Dev1/ai0' to 'Dev1/ai0:3' to specify the first 4 channels. Alternatively, if you want to select non-sequential channels, you can chain Create Channel VIs together, as long as they are of the same task type (AI voltage, etc.) and on the same device, as shown in the image.
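To make the range notation concrete, the short sketch below expands a string such as 'Dev1/ai0:3' into the individual channel names it stands for. It is purely illustrative: NI-DAQmx parses these strings itself, and the helper name is hypothetical.

```python
def expand_channel_range(name):
    """Expand 'Dev1/ai0:3' into ['Dev1/ai0', ..., 'Dev1/ai3']; other names pass through."""
    prefix, _, tail = name.rpartition("/ai")
    if ":" not in tail:
        return [name]
    first, last = (int(part) for part in tail.split(":"))
    return [f"{prefix}/ai{index}" for index in range(first, last + 1)]


print(expand_channel_range("Dev1/ai0:3"))
print(expand_channel_range("Dev1/ai0"))
```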

Let us know if you have any other questions.

Kind regards

Paul
