data packets

Hello

In my application, I'm polling data from a stack on a (COTS) module, and I am trying to create data packets that each contain 100 ms worth of data. Each packet must have a header that contains the length of the data (the amount of data in the packet). I am having difficulty creating the packets.

Right now I'm reading the stack (which puts the data in a structure) and, at the same time, writing to disk. With this method I can't calculate the length of the data, because I write the header first and then the data. Is there a way to accomplish this task? Is there a way to hold the data for 100 ms in some sort of buffer, so that I can calculate the length of the data and then write the whole packet (header and data) to disk? What I'm trying to do is follow a standard called IRIG 106 Chapter 10. Any help will be greatly appreciated.
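
One way to picture the buffering idea from the question is the minimal Python sketch below. It collects chunks for one time window, and only then writes a length header followed by the data. The names `write_packet`, `record` and `read_chunk` are hypothetical, and the 4-byte length header is only a placeholder, not the real IRIG 106 Chapter 10 packet header.

```python
import struct
import time

def write_packet(out, chunks):
    """Write one packet: a length header followed by the buffered data."""
    payload = b"".join(chunks)
    # Placeholder header: just the payload length as a 4-byte little-endian integer.
    out.write(struct.pack("<I", len(payload)))
    out.write(payload)

def record(read_chunk, out, window_s=0.1, clock=time.monotonic):
    """Buffer chunks for window_s seconds, then flush them as one packet."""
    chunks = []
    deadline = clock() + window_s
    while True:
        chunk = read_chunk()  # hypothetical data source; returns None when finished
        if chunk is None:
            break
        chunks.append(chunk)
        if clock() >= deadline:
            write_packet(out, chunks)
            chunks = []
            deadline = clock() + window_s
    if chunks:
        write_packet(out, chunks)  # flush whatever is left at the end
```

Because the data is held in memory until the window closes, the length is known before anything is written, so the header can go first on disk.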

Thank you

I can't recognize the error message you posted, and the code snippet is conceptually OK: the problem may be related to the rest of the code, which you have not shown us. Can you also report the exact text of the error message?

Tags: NI Software

Similar Questions

-

Re: Reading data from a data packet

We didn't find a driver for our instrument, so we are trying to acquire the data using the method I explained before. Here is an example of the data packets we receive in our serial port monitoring software.

First data packet: 13 88 04 4C 00 00 01 F3 00 00 7B 37 02 EE

Second data packet: 13 88 04 4C 00 00 01 EE 00 00 79 D9 02 EE

Third data packet: 13 88 04 4C 00 00 01 EB 00 00 78 BA 02 EE

As seen above, 13 88 (= 5000 in decimal, the frequency) and 04 4C (= 1100 in decimal, the voltage) are constant, and the end of the packet is marked by 02 EE. The rest (for the first packet, 01 F3 = 499, presumably the current, and 7B 37 = 31543, presumably the power) changes in every packet, and each value has a different scaling. The 00 00 is just padding between them. We now want to read these values into separate indicators and display them on the LabVIEW front panel. I have attached a picture of the instrument reading side by side with the serial monitoring software. How do I do that? I read about something called VISA Read and VISA Write, but they only accept strings, not bytes. Any kind of help is appreciated.

gvcjgzj wrote:

I read about something called VISA Read and VISA Write, but they only accept strings, not bytes.

I don't understand what you're trying to say here. The strings ARE bytes!

Apparently, you are reading 14-byte strings. The easiest way to parse them would be a Type Cast, because your data is already big-endian. (Cutting up the string as suggested above seems tedious.)

Here's a quick example that includes the padding by assuming I32 for current and power. To read them as I16, add two more elements to the cluster, make all of them 16 bits, and make sure that the cluster order is correct. Use sensible names for the constants. Most likely, you will need to make a few other adjustments. Also, for the other values, you need to know whether they are signed or unsigned integers.

Of course, you can access the individual values using Unbundle By Name. For example, I graph current vs. power on an XY graph. The input is an array of strings, one element for each line.
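
For readers outside LabVIEW, the same type-cast idea can be sketched in Python with the `struct` module. This assumes the 14-byte layout implied by the thread (U16 frequency, U16 voltage, I32 current, I32 power, U16 end marker, all big-endian) and that the first packet is 13 88 04 4C 00 00 01 F3 00 00 7B 37 02 EE. The field names are illustrative only.

```python
import struct

# Big-endian: U16 frequency, U16 voltage, I32 current, I32 power, U16 end marker.
# Reading current and power as 32-bit values absorbs the 00 00 padding.
PACKET_FORMAT = ">HHiiH"  # 2 + 2 + 4 + 4 + 2 = 14 bytes

def parse_packet(raw: bytes) -> dict:
    """Split one 14-byte packet into named integer fields (unscaled)."""
    frequency, voltage, current, power, end_marker = struct.unpack(PACKET_FORMAT, raw)
    return {
        "frequency": frequency,
        "voltage": voltage,
        "current": current,
        "power": power,
        "end_marker": end_marker,
    }

first_packet = bytes.fromhex("13 88 04 4C 00 00 01 F3 00 00 7B 37 02 EE")
fields = parse_packet(first_packet)
```

For the first packet this yields frequency 5000, voltage 1100, current 499 and power 31543, matching the values quoted in the question. Scaling is left out because the thread does not give the scale factors.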

-

Send and receive data packets on the network

Hi all.

Let's say I have a GPRS Modem that sends a data packet to an IP address of a server.

How can I receive and read the packet data using LabVIEW?

In other words, how can I send a packet of data to the server using LabVIEW?

Thank you.

Dear UOI,

First of all, in my opinion, you must decide which protocol you will use to connect both sides of the application.

Is it UDP or TCP, for example?

How you read the packet depends on the protocol, as far as I know. But it is possible.

For the two example protocols I mentioned, here are two white papers for you to look at.

LabVIEW basic TCP/IP communication

Kind regards

-

Satellite L100-120: Wlan does not allow the transmission of large data packets

Satellite L100-120, Intel 3945ABG.

The Wi-Fi router is a 3COM OfficeConnect Wireless; I have the same problem with a D-Link DI-524. By default my Wi-Fi card does not allow the transmission of large data packets.

I use ping -f -l 1464 192.168.1.1 to check whether it is possible to send a large packet. All packets larger than 600 bytes fail to be sent.

Wireless performance is terrible and I can hardly use the internet at all. I partially solved the problem by setting the MTU of the Wi-Fi card to 548. The connection is now stable, although I still cannot send large emails. In any case, having such a low MTU value is not a good situation.

Has anybody else experienced this problem?

I think you will find the solution in this thread:

http://forums.computers.Toshiba-Europe.com/forums/thread.jspa?threadID=15101

I think the secret is the BIOS update!

-

Reading only the numerical value of data packet?

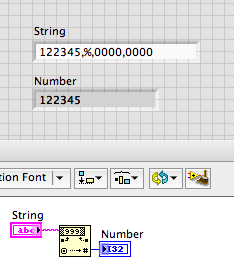

I have an entry that gives me: 122345, %, 0000 0000

I want just the 122345 part so that I can graph the value in real time as the data arrives at the computer. How can I extract it? Right now I just have a plain read command and a read buffer indicator; I simply read the packet according to the number of bytes I tell it to read.

Look at the various functions in the String palette. If the values are always integers, and always at the beginning of the string, the Decimal String To Number function will work without extra effort.

Lynn
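
As a rough text-language equivalent of that advice, the Python sketch below pulls the leading integer out of a line such as the one in the question. The function name `leading_int` is made up for illustration, and it assumes the number always comes first in the string.

```python
import re

def leading_int(text: str):
    """Return the integer at the start of text, or None if there is none."""
    match = re.match(r"\s*([+-]?\d+)", text)
    return int(match.group(1)) if match else None

value = leading_int("122345, %, 0000 0000")
```

Everything after the first run of digits is ignored, so the trailing fields do not need to be parsed.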

-

USB Interrupt IN endpoint data

I am developing firmware for a USB instrument and using NI-VISA extensively to test and debug it. I would like to be able to read the data packet that arrives on the Interrupt IN endpoint during a USBTMC-USB488 service request (SRQ). I think the firmware is doing the right thing, because this code works:

ViSession defaultRM = VI_NULL, instr = VI_NULL;
// Clear status; enable OPC (operation complete) to set ESB in the event status;
// enable ESB (event status bit) in the Service Request Enable register; start an
// overlapped command; and generate a service request (SRQ) when
// the operation is complete.
ViByte buf[] = "*CLS;*ESE 1;*SRE 32;:SYST:ARR:CLK 2304;*OPC";
ViUInt32 cnt;
ViUInt16 stb;
viOpenDefaultRM(&defaultRM);
viOpen(defaultRM, "USB0::0x0000::0x0001::0::INSTR", VI_NULL, VI_NULL, &instr);
// Service request events go into the standard event queue
viEnableEvent(instr, VI_EVENT_SERVICE_REQ, VI_QUEUE, VI_NULL);
// Start an overlapped command that takes a while to finish and wait for the
// service request indicating that it is finished.
viWrite(instr, buf, sizeof(buf) - 1, &cnt);
viWaitOnEvent(instr, VI_EVENT_SERVICE_REQ, VI_TMO_INFINITE, VI_NULL, VI_NULL);
// When you receive a VI_EVENT_SERVICE_REQ on an INSTR session, you must call
// viReadSTB() to ensure delivery of future service request events on the
// given session.
viReadSTB(instr, &stb);

viWaitOnEvent gets the event, and in the status byte I read the correct flags set (SRQ and ESB). Since the status byte value is transmitted from the USB device to the host computer in a packet on the Interrupt IN endpoint, it seems that the device must be sending this packet.

I would like to see this packet. But the several things I have tried to get it from NI-VISA have failed so far. For example, I tried to install this callback handler:

static ViStatus _VI_FUNCH interrupt_in_hndlr(ViSession vi, ViEventType type, ViEvent evt, ViAddr userHandle)
{
    HANDLE interrupt_in_event = userHandle;
    ViUInt16 size;
    VI_ERROR(viGetAttribute(evt, VI_ATTR_USB_RECV_INTR_SIZE, &size));
    if (type != VI_EVENT_USB_INTR)
        return VI_ERROR_INV_EVENT;
    if (size > 0) {
        ViPByte buf = new ViByte[size];
        VI_ERROR(viGetAttribute(evt, VI_ATTR_USB_RECV_INTR_DATA, buf));
        printf("Interrupt-IN data =");
        for (int i = 0; i < size; i++)
            printf(" %02x", buf[i]);
        printf("\n");
    } else {
        printf("No Interrupt-IN data!\n");
    }
    SYSERR(SetEvent(interrupt_in_event), FALSE);
    return VI_SUCCESS;
}

with this code

HANDLE interrupt_in_event;
SYSERR(interrupt_in_event = CreateEvent(NULL, TRUE, FALSE, NULL), NULL);
// Install a handler for USB Interrupt-IN events
VI_ERROR(viInstallHandler(instr, VI_EVENT_USB_INTR, interrupt_in_hndlr, interrupt_in_event));
VI_ERROR(viEnableEvent(instr, VI_EVENT_USB_INTR, VI_HNDLR, VI_NULL));

and it is never called. I tried to put these events in the standard event queue instead, and the call to viEnableEvent fails. I tried to open the ::RAW device instead of the ::INSTR device, and that fails too.

Can someone give me a clue?

For interrupts of the standard USB488 subclass (those related to SRQ or to the READ_STATUS_BYTE request), the interrupts are handled by VISA. So, if it is an SRQ interrupt and you have the SRQ event enabled, that event is what will be raised (as you see in your example). The only time your USB_INTR event would be raised is for other types of interrupts (i.e. vendor-specific interrupts). Is there a particular reason you are trying to get the interrupt data for the standard interrupts? The only data is the status byte that you get with the viReadSTB call anyway, right?

-

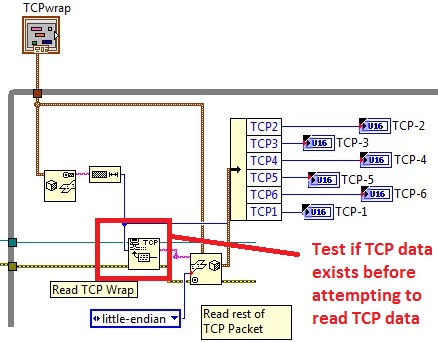

I am putting together a VI to read TCP data with a 12-byte TCP wrapper (the first 12 bytes of each TCP data packet), but I have problems when there is no TCP data.

Is it possible to determine whether there is TCP data for a specific device (IP address) before I try to read the TCP wrapper that is used for each TCP packet?

Thank you

0x20 is 32 in decimal: that is the number of bytes in your packet. If you type cast your 1-byte string to a U8, you should get the packet size.

-

Convert data from a text file to hex for UDP transmission

Hello

I'm reading Ethernet packets from a text file that contains the actual hex data of the packets, in order to send exactly these packets back out as hex data through a UDP Write. I can't figure out how to feed the data into the UDP Write function as real hex data rather than as ASCII characters, which is what it does by default. I set the display on the last VI before the UDP Write to "hex display" mode, and if I manually type the hexadecimal values into the VI (hexadecimal string to binary String.vi - attached), it passes the commands correctly. However, when I feed the text string from my data file into this VI, it seems to override the hex display mode on the VI input, and the resulting input to my UDP Write is still in ASCII character mode. I tried using a Type Cast inside this VI, but that doesn't seem to work right. I have attached the main VI and the VI that tries to prepare the data before the UDP Write. I've also attached an example of the text data file that I am trying to parse.

Any help would be appreciated,

Thank you

Hi jsrocket,

the attached example should work for the conversion.

Mike
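
The underlying conversion (hex text in, raw bytes out) can be sketched in Python as follows. The attached VI is not shown in the thread, so this is not a description of it. The helper names, and the assumption that the file holds one packet per line, are illustrative only.

```python
import socket

def hex_text_to_bytes(text: str) -> bytes:
    """Turn text such as '13 88 04 4C' into the raw bytes 0x13 0x88 0x04 0x4C."""
    return bytes.fromhex("".join(text.split()))

def send_hex_lines(path: str, host: str, port: int) -> None:
    """Send each non-empty line of a hex text file as one UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock, open(path) as f:
        for line in f:
            if line.strip():
                sock.sendto(hex_text_to_bytes(line), (host, port))
```

The key point is that the characters "4C" in the file are two ASCII bytes, while the datagram must contain the single byte 0x4C. Changing a display mode does not perform that conversion.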

-

Smart way to save large amounts of data using the circular buffer

Hello everyone,

I am currently getting into LabVIEW, as I am developing a five-channel measurement system. Each "channel" will provide up to two digital inputs, up to three analog inputs of CSR (the sampling frequency will be around 4 k to 10 k per channel) and up to five analog thermocouple inputs (the sampling rate will be lower than 100 S/s). On user-defined events (such as a sudden drop in speed), the system should save a TDMS file that contains one row for each data channel, storing the values from n seconds before the event and for a user-specified duration (for example, from 10 seconds before the drop in rotation speed, with a length of 10 minutes).

My question is how to manage these rather large amounts of data in an intelligent way, and how to get the event data onto the hard disk without losing samples and without dumping huge amounts of data to disk while recording the signals when there is no event. I thought about the following:

- use a single producer loop that only acquires the data continuously at high speed and writes it into queues

- use a consumer loop to process the signal packets when they become available, to identify events, and to save data when an event is triggered

- use a third loop with an event structure to make it possible to control the VI without having to poll the front panel controls each time

- use some kind of circular buffer in memory in the consumer loop to store a certain amount of data that can be written to the hard disk.

I hope this is the right way to do it so far.

Now, I thought about three ways to design the circular data buffer:

- use RAM as the buffer (queues or arrays with a limited number of entries), which is written to disk in one step when the event is finished, while the rest of the program and the DAQ stay active

- stream directly to hard disk using the advanced TDMS functions, resetting the TDMS write position back to the first entry once a specific amount of data has been written.

- stream all data to hard disk using TDMS streaming, splitting the file at certain intervals and later deleting the TDMS files that contain no anomalies, directly while running.

Regarding the first option, I fear that there will be problems with rapidly growing arrays/queues, and especially that, when saving the data from RAM to disk, my program would be stuck writing to disk for a while and would thus lose samples in the DAQ loop, which I want to keep running without interruption.

Regarding the second, I have had a lot of trouble with TDMS: data gets damaged easily, and I really don't know whether TDMS Set Next Write Position fits my needs (I need to set the positions for (3 analog + 2 ctr + 5 thermo) * 5 channels = 50 rows of data plus timestamp in the worst case!). I'm also afraid the hard drive won't be able to write fast enough to stream all the data at the same time in the worst case...?

Regarding the third option, I fear that closing a TDMS file and opening a new one to continue recording will not be fast enough to avoid losing data packets.

What are your thoughts here? Has anyone already dealt with similar tasks? Does anyone know some rough figures for how much data one can stream to an average hard disk at the same time?

Thank you very much

OK, I'm reaching back four years to when I implemented this system, so bear with me.

Let's look at having a trigger and wanting to capture N samples before the trigger and M samples after the trigger. The scheme is somewhat complicated, because the goal is not to "miss" any samples. We came up with this several years ago and it seems to work. There may be an easier way to do it, but never mind.

We created two queues: a "Pre-Event" queue of fixed length N, and an Event queue of unlimited size. We use a Producer/Consumer design, with a State Machine running in each loop. Without worrying about naming the states, let me describe how each one works.

The Producer begins in its "Pre-Trigger" state, using Lossy Enqueue to place data in the Pre-Event queue. If the trigger does not occur during this state, we stay in it for the next sample. There are a few details I am forgetting about how we ensure that the Pre-Event queue is full, but skip that for now. At some point, the trigger tips us into the Event state. Here we enqueue into the Event queue, counting the number of elements we enqueue. When we get to M, we switch back to the Pre-Event state.

On the Consumer side, we start in a "Waiting" state, where we just ignore both queues. At some point, the trigger occurs, and the Consumer moves to its Pre-Event state. It is responsible for dequeuing (and processing) the N elements in the Pre-Event queue, then handling the next M in the Event queue. [Hmm, I don't remember how we knew when we had finished the Event queue: did we count to M, or did we wait until the queue was empty and the Producer was back in the Pre-Event state?]

There are a few "holes" in this simple explanation, some of which, I think, we filled. For example, what happens when the triggers are too close together? One way to handle this is to not allow a trigger to be processed until the Pre-Event queue is full.

Bob Schor
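
The pre-trigger/post-trigger idea described above can be sketched in a few lines of Python. This is a deliberately simplified, single-threaded illustration, not the two-loop LabVIEW design from the post. A `deque` with `maxlen` stands in for the Lossy Enqueue on the fixed-length Pre-Event queue, and the names `capture_event` and `is_trigger` are hypothetical.

```python
from collections import deque
from itertools import islice

def capture_event(samples, is_trigger, n_pre, m_post):
    """Return n_pre samples before the trigger plus m_post samples from it on.

    Returns None if no trigger fires while the pre-event buffer is full.
    """
    pre_event = deque(maxlen=n_pre)  # lossy: the oldest sample is dropped when full
    stream = iter(samples)
    for sample in stream:
        # A trigger is only honored once the pre-event buffer is full.
        if is_trigger(sample) and len(pre_event) == n_pre:
            post_event = [sample] + list(islice(stream, m_post - 1))
            return list(pre_event) + post_event
        pre_event.append(sample)
    return None

record = capture_event(range(20), lambda s: s >= 10, n_pre=3, m_post=4)
```

Here the trigger sample is counted as the first of the M post-trigger samples, which is a choice made for this sketch. In the real system the producer and consumer run in parallel loops, so the hand-over between the two queues needs the state machines the post describes.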

-

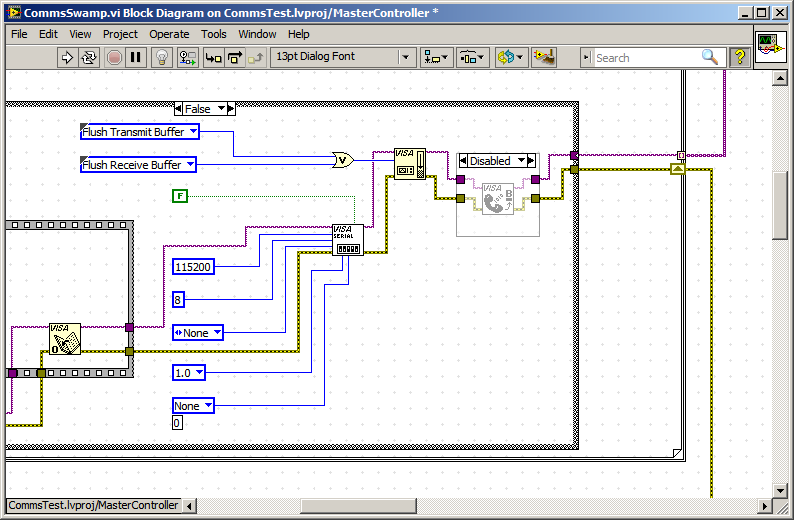

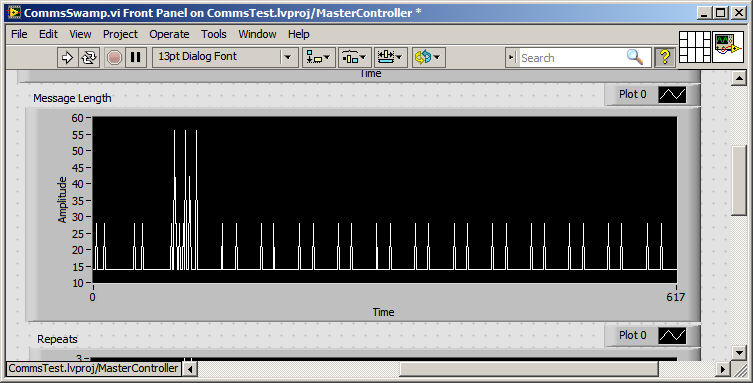

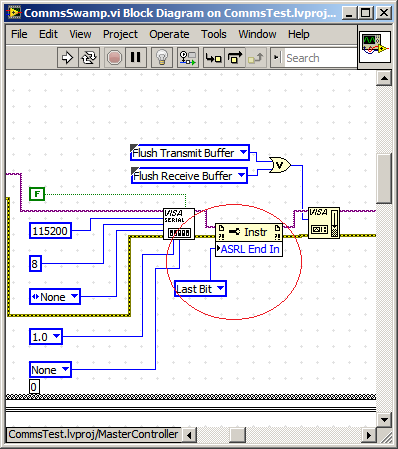

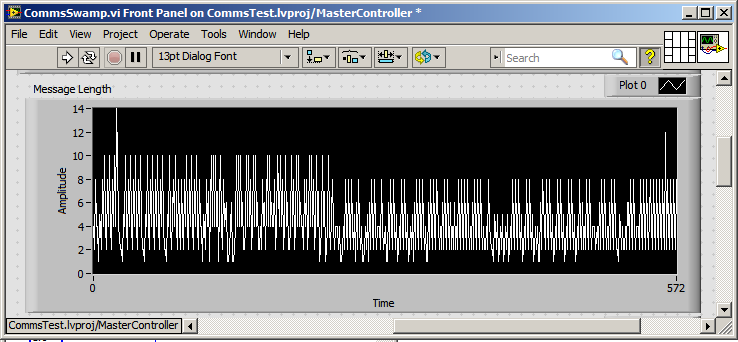

How to stop serial "VISA Read" from returning packets instead of the bytes available.

Dear Labvillians,

Highlights:

How can I stop serial "VISA Read" from giving me packets rather than bytes?

Background:

I have a system which publishes serial packets of 14 bytes at a semi-regular interval.

At busy times, the producer of these data packets queues them, effectively producing super-packets that are multiples of 14 bytes, at times larger than 8 packets (112 bytes).

My protocol handler is designed to process bytes, packets, or super-packets.

Now, my application has multiple devices, and the order of message processing is essential to proper operation.

My observation is that VISA Read waits until the end of a packet / super-packet before passing the data to the application code. (See chart below)

My expectation is that VISA Read should give me the bytes available, and not get too smart for itself and wait for a packet.

I have noticed this on PXI, embedded PC, PSC and, more recently, cRIO.

I've experimented with the cRIO Scan Interface rate, which helps to reduce the backlog of packets but does not break the packets down into byte reads.

I understand that one solution is to write FPGA code to handle the bytes and pass them through an R/T FIFO, and there are some great examples on this site.

Unfortunately, that does not help with non-FPGA devices.

I have also dabbled in event-based serial reads, but that behaves badly on VxWorks devices.

Any help is appreciated

Sometimes it helps to talk to yourself.

I hope this is useful to someone in the future.

-

Hello

I have a high-visibility application with a bug in it that I would like the bright minds of the NI discussion forum to make a suggestion on.

The app is on the International Space Station. I have a LV executable on a laptop on board the ISS that sends TCP and UDP data to a LV executable on a computer on the ground.

The path is not a standard or "trivial" IP path, but it is supposed to be equivalent to a standard IP path. The catch comes when a few bytes of data are lost along the way. When this happens, the LV on the ground says something like "not enough memory to complete the operation".

Here's what we found...

=======================================================

The TCP server (on board the ISS) sends a TCP stream with the following format: ...LLLLSTART$d1$d2$...$dN#llllSUMccccENDLLLLSTART...

where

* LLLL is a binary 32-bit integer with the number of bytes in the following packet, starting with the text string START and ending with the text string END.

* $, #, START, SUM and END are the delimiters.

* d1, d2,..., dN are the data values as text strings.

* llll is a text string with the decimal number of bytes starting with the text string START and ending with the # symbol.

* cccc is a text string with the decimal checksum of the bytes starting with the text string START and ending with the # symbol.

The TCP client (on Earth) reads 4 bytes as LLLL, then reads LLLL bytes (usually about 85) as the packet, starting with the text string START and ending with the text string END.

The error comes when LLLL data is lost, so that the TCP client reads four other bytes (probably S, T, A and R) as LLLL, which converts to a very large number, and then tries to read LLLL bytes (maybe 50 M). This read fails because there is not enough memory to read that much data.

The fix is to make sure that LLLL is a reasonable size (such as 20-200) and to make sure that the data packet begins with START. The difficulty is how to restructure the code to reset the packet loop when LLLL is out of bounds, or when the START header is not present.

=================================

My dilemma is that in LV, I cannot see what is happening live in the TCP server or the TCP client.

Any suggestions will be appreciated.

JIM

As Miha said, a TCP connection should not lose any data (not silently, at least), but from your description I understand that you can't really control the implementation of the IP link.

Since it is a data stream, you can probably just read N bytes each time (say 200) and simply keep a buffer of the last N * X bytes. Then you can check the data in this buffer. If you are missing the LLLL bytes for whatever reason (and you don't mind losing that whole data packet), you can simply look for the end of the message, check that what follows is the correct start of a message, and just continue from there.
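
A minimal Python sketch of this buffer-and-resynchronize approach is shown below. It assumes that LLLL is big-endian (the thread does not say), takes the 20-200 sanity bounds from the question, and uses a hypothetical function name, `extract_packets`. Checksum verification is left out.

```python
import struct

START, END = b"START", b"END"
MIN_LEN, MAX_LEN = 20, 200  # sanity bounds for LLLL suggested in the thread

def extract_packets(buf: bytearray) -> list:
    """Remove and return every complete, plausible packet found in buf."""
    packets = []
    while len(buf) >= 4 + len(START):
        (length,) = struct.unpack_from(">I", buf, 0)  # byte order is an assumption
        plausible = MIN_LEN <= length <= MAX_LEN and buf[4:4 + len(START)] == START
        if not plausible:
            # Resynchronize on the next START that has room for LLLL in front of it.
            idx = buf.find(START, 5)
            if idx == -1:
                # Keep only a tail that could still hold the start of LLLL + START.
                del buf[:len(buf) - (4 + len(START) - 1)]
                break
            del buf[:idx - 4]
            continue
        if len(buf) < 4 + length:
            break  # wait for more data to arrive
        body = bytes(buf[4:4 + length])
        del buf[:4 + length]
        if body.endswith(END):
            packets.append(body)
    return packets
```

The caller appends whatever the TCP read returns to `buf` and calls `extract_packets(buf)` after each read. Because an implausible LLLL is never used as a read size, a lost length field costs one packet instead of triggering a huge allocation.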

-

Help parsing UDP packets

Hello, I am new to LabVIEW and working on a program to read UDP packets. I have been looking at the LabVIEW UDP Receive example and have also read older posts. The data consists of 1 header word (32 bits) and 24 data words (each word is 32 bits), and the rest of the data is padding, which is all zeros. Each packet is the same size. So far, using the UDP Receiver.vi example, I am able to receive the data and could probably push on with what I have, but I would like to know if there is an easier way to parse a UDP data packet. I have read about others who used a Cluster and a Type Cast, but I can't seem to make it work. When I try to wire my Cluster to the Type Cast, the wire turns into a dotted line.

If anyone has any suggestions on the easiest way to parse a UDP packet, please let me know.

Thank you

Joe
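
For comparison, the layout described in the question (one 32-bit header word, 24 data words of 32 bits, then zero padding) can be unpacked in Python as sketched below. Big-endian byte order and unsigned words are assumptions, and the name `parse_datagram` is hypothetical.

```python
import struct

HEADER_WORDS = 1
DATA_WORDS = 24
WORD_FORMAT = f">{HEADER_WORDS + DATA_WORDS}I"  # 25 unsigned 32-bit words, big-endian
PAYLOAD_SIZE = struct.calcsize(WORD_FORMAT)      # 100 bytes

def parse_datagram(datagram: bytes):
    """Return (header, data_words) and ignore the zero padding that follows."""
    if len(datagram) < PAYLOAD_SIZE:
        raise ValueError("datagram is shorter than header plus data words")
    words = struct.unpack_from(WORD_FORMAT, datagram, 0)
    return words[0], list(words[HEADER_WORDS:])
```

This is the same idea as a Type Cast to a cluster: a fixed, known layout is applied to the received bytes in one step instead of slicing the string by hand.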

-

HP Slate 7 with DataPass... do the 2 years of data run from purchase or from installation?

I hope that there is someone here who has bought one of these directly from HP

The subject says it all really... when do the 2 years start? On purchase or on installation? I ask because I may buy this to give to my daughter for her birthday, which is not until September.

Also, I noticed that the power adapter is shown and priced separately on the web page, but I had assumed it would come with the device. Can anyone please clarify?

Thanks in advance!

Google is your friend!

I found this in two searches for 'HP Datapass':

"Monthly included data offers are provided for 24 months from the date of purchase of your device. After this, you can choose to use a Wi-Fi connection only, or continue to use HP DataPass by purchasing additional data packages whenever you want, always with no contract."

You can view this page and this page for verification.

WyreNut

-

Does the HDS reject fragmented packets?

Hello

I'm trying to understand whether this is a quirk of our SC8000 (OS 6.6) or on purpose (and if so, why?).

Sending packets (ping -l) larger than the MTU configured on the server returns a timeout.

It doesn't matter whether I set it to 9014/4088/1650. These servers have no problem pinging each other in any scenario.

I went through the example on pages 22-23 here: http://en.community.dell.com/cfs-file/__key/telligent-evolution-components-attachments/13-4491-00-00-20-43-79-24/Windows_5F00_Server_5F00_2012_5F00_R2_5F00_Best_5F00_Practices_5F00_v2.pdf

It was nice to find, but its wording gives me no definite conclusion as to whether fragmentation works when the -f parameter is not used.

Here are my results when it is configured for 9014:

Non-fragmented, larger than the server MTU, to the HDS - fails (expected)

C:\>ping 10.1.158.13 -f -l 10000

Pinging 10.1.158.13 with 10000 bytes of data:

Packet needs to be fragmented but DF set.

Non-fragmented, smaller than the server MTU, to the HDS - works (expected)

C:\>ping 10.1.158.13 -f -l 8968

Pinging 10.1.158.13 with 8968 bytes of data:

Reply from 10.1.158.13: bytes=8968 time<1ms TTL=

Fragmented, to the HDS - fails (is this expected?)

C:\>ping 10.1.158.13 -l 10000

Pinging 10.1.158.13 with 10000 bytes of data:

Request timed out.

Fragmented, to another server - works (expected)

C:\>ping 10.1.158.26 -l 10000

Pinging 10.1.158.26 with 10000 bytes of data:

Reply from 10.1.158.26: bytes=10000 time=2ms TTL=128

Thank you

Gidi

Hello

So. It's always nice to come back to the forum with the answer and not leave the thread hanging. So here it is.

YES, the HDS does not respond to fragmented pings. But we had a different behavior that we could not explain, even with the -f flag: instead of getting the reply that the packet needs to be fragmented, we got ping timeouts for packet sizes between 8968 and 8972:

C:\>ping 10.1.158.13 -f -l 8969

Pinging 10.1.158.13 with 8969 bytes of data:

Request timed out.

So we saw this as a black hole. We were really keen to get to the bottom of it, and when taking a packet trace I found something interesting: on any ping reply above 62 bytes, the HDS also returns four bytes of garbage at the end (well, that's what it looks like, as it appends even more of the payload pattern after the data).

We tested it on another system that has a different configuration, and on that one we had no black hole. In another quick packet trace (down at the bottom), we saw that the server receives frames of to1918 bytes (the card configured for 9014 still accepts them although they are larger).

So, two issues, both of which seem to be with how the HDS replies to pings:

1. Compellent adds four garbage bytes to ping replies of more than 62 bytes.

2. Compellent sends ping replies above 9014 bytes, which are dropped by our NIC (but this seems to happen only for ping).

Because we dug only into ICMP, I did not keep trying and did not capture anything else on the wire.

Our configuration:

Compellent SC8000 OS 6.6.5.19, Chelsio T320 Dual Port LP, VLAN tagged, connected to a Nexus 5k. Policy set for all ports to MTU 9216, connected to an Intel X520 card configured with MTU 9014. (A Mellanox card on another system accepts frames of more than 9014 bytes; BTW, that system also receives no replies to fragmented pings.)

-

socket InputStream does not get all the data, please help

I've been doing network stuff on BlackBerry for quite a while, but always using HTTP connections.

I have to use a socket connection for one piece of my application, but could not get it to work.

This code is what I do

String url = "socket://" + rmserver + ":" + rmport;
SocketConnection socket = (SocketConnection) Connector.open(url);
socket.setSocketOption(SocketConnection.KEEPALIVE, 1);
OutputStream outputstream = socket.openOutputStream();
InputStream inputstream = socket.openInputStream();
// send something here using outputstream
int av = inputstream.available();
if (av > 0) {
    // do something
}

av is always 0.

I know that my server sends the data successfully. I ran the network packet capture tool Ethereal on the MDS server and can see the data reach the MDS server, but it never arrives at my device app.

The outputstream works very well: I can send data from my application, and my server code gets all of it.

Where should I check? If it were HTTP, I could turn on HTTP debugging in the MDS log and see all the network traffic, but for a socket connection the data packets are not logged (or I missed where they are).

Can someone please tell me where I should look to understand why?

Please try the suggestion to remove the check on InputStream.available.
