Measuring the frequency of an analogue signal, and noise reduction

Hello everyone.

I'm reading a flow rate sensor that outputs a square-wave signal whose frequency is linearly related to the flow rate and varies from 37 to 550 Hz. My hardware does not support measuring frequency directly, so I have to calculate it. What I ended up doing was reading the signal every 1 millisecond (1000 Hz), taking two samples per read, and storing the value of the signal along with the time at which it was read. Every 400 milliseconds, I loop over the data, determine how many cycles have occurred and the time between the first and the last cycle, and divide the number of cycles by that time.
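The counting method described above (count rising edges, then divide the number of complete cycles between the first and last edge by the time between them) can be sketched in Python. This is a minimal sketch, assuming the samples are already 0/1 logic levels (threshold 0.5); `square_wave` is a hypothetical ideal test signal, not the sensor data.

```python
def rising_edges(values, threshold=0.5):
    """Indices i where the signal goes from below to at-or-above threshold."""
    return [i for i in range(1, len(values))
            if values[i - 1] < threshold <= values[i]]


def estimate_frequency(times, values, threshold=0.5):
    """Cycles between the first and last rising edge divided by the time between them.

    Returns 0.0 when fewer than two rising edges are found (e.g. no flow).
    """
    edges = rising_edges(values, threshold)
    if len(edges) < 2:
        return 0.0
    cycles = len(edges) - 1
    elapsed = times[edges[-1]] - times[edges[0]]
    return cycles / elapsed


def square_wave(freq_hz, fs_hz, n_samples):
    """Ideal 0/1 square wave sampled at fs_hz, used only to exercise the estimator."""
    times = [n / fs_hz for n in range(n_samples)]
    values = [1.0 if (freq_hz * n / fs_hz) % 1.0 < 0.5 else 0.0
              for n in range(n_samples)]
    return times, values


if __name__ == "__main__":
    # One 400 ms window sampled every 1 ms, as described in the question.
    for f in (37, 550):
        t, v = square_wave(f, 1000, 400)
        print(f, "Hz in ->", round(estimate_frequency(t, v), 1), "Hz out")
```

The 37 Hz window comes out close to 37 Hz, but the 550 Hz window comes out at roughly 450 Hz: sampling at 1 kHz puts the Nyquist limit at 500 Hz, so the top of the sensor's 37-550 Hz range aliases no matter how carefully the edges are counted.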

Here's a graph of the signal.

As a general rule, I never use software-timed loops to perform data acquisition. They have many drawbacks. And, as you mentioned, you cannot run a timed loop faster than 1 kHz. Go to the Help menu in the toolbar, select Find Examples, and look up "continuous acq". It is best to leave all the timing details to the data acquisition device and just retrieve data from the DAQ buffer when it is needed. In your case I would recommend a sampling frequency of 6 kHz. That's more than 10 times your highest frequency. You can still update your data at 400 ms intervals. Your signal also looks good; I can't see much noise in it. My guess is that your software is not optimal. You should not be getting 120 Hz when there is no flow. If you post your code, we can take a look.
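A minimal sketch of the approach in the reply, assuming a hardware-timed buffered read of 2400 samples (400 ms at 6 kHz) per iteration. `simulated_block` is a hypothetical stand-in for the DAQ read (an ideal 0/5 V square wave plus Gaussian noise), and the Schmitt-trigger (hysteresis) conversion with 1.5 V / 3.5 V thresholds is an added noise-rejection step that the reply does not mention. A block with fewer than two rising edges is reported as 0 Hz, so a stopped sensor reads zero instead of a spurious value.

```python
import random

FS_HZ = 6000                     # sampling rate suggested in the reply
BLOCK_LEN = FS_HZ * 400 // 1000  # samples per 400 ms read (2400)


def schmitt(samples, low, high, state=False):
    """Convert an analogue signal to logic levels with hysteresis.

    The output goes high only above `high` and low only below `low`, so noise
    smaller than (high - low) cannot add extra edges. The final state is
    returned so it can be carried into the next block.
    """
    out = []
    for x in samples:
        if state and x < low:
            state = False
        elif not state and x > high:
            state = True
        out.append(state)
    return out, state


def block_frequency(logic, fs_hz):
    """(rising edges - 1) / time between first and last edge; 0.0 if < 2 edges."""
    edges = [i for i in range(1, len(logic)) if logic[i] and not logic[i - 1]]
    if len(edges) < 2:
        return 0.0
    return (len(edges) - 1) * fs_hz / (edges[-1] - edges[0])


def simulated_block(freq_hz, start, rng, high_v=5.0, noise_v=0.5):
    """Hypothetical stand-in for one buffered DAQ read: 0/5 V square wave plus noise."""
    block = []
    for n in range(start, start + BLOCK_LEN):
        on = freq_hz > 0 and (freq_hz * n / FS_HZ) % 1.0 < 0.5
        block.append((high_v if on else 0.0) + rng.gauss(0.0, noise_v))
    return block


if __name__ == "__main__":
    rng = random.Random(0)
    state = False
    for k, f in enumerate([0.0, 37.0, 550.0]):  # no flow, minimum, maximum
        raw = simulated_block(f, k * BLOCK_LEN, rng)
        logic, state = schmitt(raw, low=1.5, high=3.5, state=state)
        print(f"{f:6.1f} Hz in -> {block_frequency(logic, FS_HZ):6.1f} Hz out")
```

Carrying `state` from one block to the next keeps the hysteresis consistent across reads.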

Tags: NI Software

Similar Questions

-

Sharpening and Noise Reduction panels missing

I just exported some pictures for the web from Lightroom 5, which had to be reduced in size considerably. Now I find that the Sharpening and Noise Reduction sections have disappeared completely from the tools panel on the right. What is going on? I tried quitting and reopening Lightroom and also shutting down and restarting my computer. (MacBook Pro Retina and Mountain Lion)

If you are talking about the Develop panel, right-click on a panel group header and make sure that Detail is checked.

-

X201 microphones - quality and noise reduction

Hello. I was wondering if anyone has managed to make reasonable use of the microphones on the X201 Tablet. I don't know whether I've configured them wrong or whether they are simply poor quality, but the microphones seem to be horrible. When I turn off noise cancelling and echo cancellation, there is a loud humming and crackling sound in the background. When I turn on noise cancelling, it is totally impossible to use the microphones to record or transmit any intelligible voice, even if I yell.

In short, the microphones are almost useless for Skype and similar, and of course completely useless for any sort of speech recognition application.

Has anyone had some luck tweaking special configuration settings that I'm not aware of? Alternatively, if the microphones are just fundamentally bad, does anyone have recommendations for an external headset microphone (preferably wired rather than wireless/Bluetooth)?

Hey dm09,

Try uninstalling the audio drivers for your device.

Let Windows install its generic drivers and test again.

Did it make a difference?

Note that the current version of the sound driver for the X201 is 3.66.147.0/4.95.48.50. If yours is not at this version, update to it.

At the same time, do a full system update of your device.

-

Noise reduction, clarity and sharpening vs. masking

Maybe I used too much clarity and noise reduction for birds. Some people on dpReview recommend no noise reduction, and now I tend to believe them. Recently, I tried using little to no noise reduction, little or no clarity, a lot of sharpening and about 40% masking. This gives good bird feather detail, and anything with less detail has somewhat better bokeh and less noise. In areas of low detail it seems to me that masking reduces the noise caused by sharpening, but it has less effect on the noise increased by clarity. Is this true? If so, in bird photography is clarity better used sparingly and selectively, such as on the head?

Another reason for all of this is that I once read that even a little masking degrades sharpness, but now I doubt that. Maybe LR has improved over the years.

Thank you

Doug

Luminance noise reduction does indeed (and, to a lesser extent, color noise reduction) tend to erase fine feather detail.

I recommend:

* lower noise reduction and, if you are not already doing so, crank up the NR Detail slider. This will help maintain fine feather detail and is better than sharpening Detail at preserving detail otherwise lost to noise reduction.

* lower sharpening Detail, to reduce noise and reduce the "need" for noise reduction.

* and set sharpening masking to taste...

Also note: masking at exactly 50 masks all global sharpening in smooth areas, and can therefore be used in conjunction with noise reduction to smooth bokeh areas.

And of course you can add sharpening or clarity locally too.

I know I have not answered your question exactly as asked, but I don't know what else to say...

Have fun

Rob

-

Noise reduction doesn't show in the Develop module

I noticed today that when I apply noise reduction in the Develop module, the changes are not shown on the image I'm working with, except in the preview in the top left-hand corner, which is too small for me to see clearly. But when I go to the Library module, the changes have actually taken place. Has anyone experienced this? I need a fix, because moving my slider without seeing the results until I switch modules is a bad guessing game. I have not had this problem before. A lot of my work is turning out too smooth! I appreciate any help I can get with this.

beeeeezzz2 wrote:

Thank you very much, Lee. So there is no problem with the software? To be clear, then: in this case Lightroom is holding back the NR, but not showing me this change visually? Do you know if I can override it?

No malfunction, no override. There has been a lot of discussion about this, and it is possible that a future version will add a preference or take some other corrective action, but for the moment, just use Library or larger image sizes. In reality, it is always better to judge sharpening and noise reduction at 1:1.

-

Signal strength and/or coverage measurement tool

Does anyone know of software or a device that I could use for this purpose?

That's a pretty broad question, and the answers range from free (but you must know what you're doing) to solutions costing US$5,000 to US$10,000 (and you still need to know what you're doing).

In the simplest form, you may be able to get away with something like NetStumbler (free, www.netstumbler.com). NetStumbler gives signal levels, channel usage and an indication of signal interference.

Another popular (free) tool is Kismet, which runs on Linux. It also gives you an indication of signal strength, channel usage and noise/interference. Kismet can also be extended and used as a form of wireless intrusion detection.

It's great that you are looking to do a site survey, but ultimately you will need to brush up on the operation, technology and parametric requirements to be able to correctly interpret the information that the tools provide.
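As a small illustration of interpreting such readings (general RF arithmetic, not taken from either tool): a signal-to-noise ratio is simply the difference of two dBm values, while several dBm readings should be averaged as linear power (mW) rather than averaged directly. The readings in this Python sketch are hypothetical.

```python
import math


def dbm_to_mw(dbm):
    """Convert a power level in dBm to milliwatts."""
    return 10 ** (dbm / 10)


def mw_to_dbm(mw):
    """Convert milliwatts back to dBm."""
    return 10 * math.log10(mw)


def snr_db(signal_dbm, noise_dbm):
    """Signal-to-noise ratio in dB: a difference of dBm values is a ratio in dB."""
    return signal_dbm - noise_dbm


def mean_dbm(readings_dbm):
    """Average several readings in linear power (mW), then convert back to dBm."""
    return mw_to_dbm(sum(dbm_to_mw(r) for r in readings_dbm) / len(readings_dbm))


if __name__ == "__main__":
    readings = [-50, -70]                # hypothetical survey samples at one spot
    print(round(mean_dbm(readings), 2))  # about -53, not -60: the stronger reading dominates
    print(snr_db(-65, -95))              # 30 dB
```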

There are no off-the-shelf tools that will give you explicit instructions on how to build your wireless network based on the collected data.

There are some management systems, like the WLSE and the tools provided with Cisco LWAPs, which will show you your RF coverage, rogues, performance, etc. in real or near-real time. Using these, you can set up a permanent system, and there are even "auto-tuning" tools in these systems that balance your APs for the best coverage with the equipment available.

It would be useful if you could provide some details, so we can point you in the right direction.

FWIW

Scott

-

Noise reduction plugin effect makes clip jerky and slow after it is applied?

I applied an effect, the Neat Video plugin, to reduce noise. For some reason, when I apply the effect, playback of the clip is really slow and jerky. Why is this?

Hi Dragonsfire,

I'm looking at the rendering time and it's extremely slow: the clip is 2 min 30 s long, but the render time estimate is about 3 hours! My laptop is not the fastest, but it's not ancient either. Any suggestions for reducing the render time, short of getting a much more powerful computer?

You have run into one of the most render-intensive effects in post-production. Sorry if you thought something was going wrong. Most likely everything is fine, except that it takes a very long time to process images with that plugin.

Here are some tips from the Neat Video forum:

The actual rendering speed for your clip should be approximately 2 frames per second. This value seems normal for HD (1920 x 1080) frame size and average hardware (you don't mention your hardware specifications). You can speed it up a bit by disabling some of these filter options:

- disable the very low frequency and high quality options

- set some of the noise reduction amounts in some or all channels (luminance/chrominance or Y/Cr/Cb) and/or frequency ranges to 0%

- reduce the temporal filter radius

- disable the adaptive filtration option

These measures make the filter a little faster, but at the expense of reduced filtration quality. Noise reduction is usually a compromise between quality and processing speed. When you need good quality, it makes sense to give the process some time with top-quality settings. When that is not necessary, you can use less accurate but faster filtration.
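The quoted rate makes the render time easy to estimate. This Python sketch assumes the clip is 2 min 30 s long and runs at 25 or 30 fps (the thread states neither frame rate nor an unambiguous duration), and uses the 2 frames per second figure from the tips above.

```python
def render_time_s(clip_s, clip_fps, render_fps):
    """Seconds needed to render clip_s seconds of video shot at clip_fps,
    when the filter processes render_fps frames per second."""
    return clip_s * clip_fps / render_fps


def implied_render_fps(clip_s, clip_fps, render_s):
    """Processing rate implied by an observed (or estimated) render time."""
    return clip_s * clip_fps / render_s


if __name__ == "__main__":
    clip = 2 * 60 + 30        # 2 min 30 s, as read from the question
    for fps in (25, 30):      # assumed project frame rates
        minutes = render_time_s(clip, fps, 2.0) / 60
        rate = implied_render_fps(clip, fps, 3 * 3600)
        print(f"{fps} fps: {minutes:.1f} min at 2 frames/s; 3 h implies {rate:.2f} frames/s")
```

At 2 frames per second that works out to roughly 31 to 38 minutes, so a 3-hour estimate implies the laptop is processing only about 0.35 to 0.42 frames per second, which would fit hardware slower than the "average" the tip assumes.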

Thank you

Kevin

-

I'm just starting to use Measurement & Automation Explorer. Half an hour ago, I performed a temperature calibration to get familiar with the process. It seemed to work.

I tried to calibrate again. It says my current calibration date is 31/12/1903. Question 1) Why is this?

I pressed the … button, then the name of my calibration standard came up. I left the calibration settings as they were on the screen (1000 samples at a rate of 1000 Hz), then I pressed …

I received the following message:

Error 50103 in the DAQ Assistant. Question 2) What does this error code mean?

Question 3) Where can I find a list of error code numbers and their meanings?

Thank you

jdsnyder

OK, the problem is finally solved! I reset the device.

It worked the first time, but then it had to be reset.

Thank you

Jon Snyder

-

Continuous Measurement and Logging, LV2014

In LabVIEW 2012, I examined the Queued Message Handler (QMH) and Continuous Measurement and Logging (CML) project templates. There is lengthy and detailed documentation for the QMH. There is much shorter documentation for CML, but it refers readers to the QMH documentation, since CML is of course based on that project template.

I just got LV2014, and I began to look at the Continuous Measurement and Logging (DAQmx) project template in LabVIEW 2014. There is a change here that is not documented: an additional state-machine typedef enum in the loop parameter. But there is no corresponding change in the 2014 QMH, so this change is not explained in detail anywhere.

Can you point me to some docs or podcasts that explain the new features of the new CML version in more detail?

Also, when I try to run the Continuous Measurement and Logging (DAQmx) project, just to see how it works, I see strange behavior: even though I always select "Log" in the trigger section, the Force Trigger button starts to blink, and two messages begin to alternate in the indicator: "Logging" and "Waiting for trigger". But I always chose "Log".

Is this a bug?

I was also reading an "interesting debate" from last year:

I understand that these templates are only starting points, but I'd be very happy to have some documentation explaining how to use them properly (I was quite OK with the original templates, but the new ones have become more complex because of the state machine).

Thanks for the tips!

Hello

The additional typedef is necessary to ensure that only one data acquisition task is started.

This is because the mechanical action is set to "Latch When Released". For example, when you press the Start button and then release it, the signal changes from false to true, and then goes from true to false.

That gives two events. During the first event, a new message is created. When you take a look at the acquisition Message Loop VI, you will also see an additional typedef. In this VI the data acquisition task is created and started. The problem is the second event.

Now another message is created to start an acquisition. If a second data acquisition task were started, you would get a problem with LabVIEW and the DAQ hardware.

But because the state in the Acquisition loop is already set to Acquisition by the first message, the second message does not start another DAQ task. In the QMH there is no need for this, because you don't start a data acquisition task.
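The guard described above can be sketched outside LabVIEW. This is a hypothetical Python analogue, not the template's code: `AcqState` plays the role of the extra typedef enum, the message names are made up, and `tasks_started` just counts where the real loop would create and start a DAQmx task.

```python
from enum import Enum, auto


class AcqState(Enum):
    """Hypothetical analogue of the extra typedef enum in the acquisition loop."""
    IDLE = auto()
    ACQUIRING = auto()


class AcquisitionLoop:
    def __init__(self):
        self.state = AcqState.IDLE
        self.tasks_started = 0  # counts simulated DAQ task creations

    def handle(self, message):
        if message == "Start Acquisition":
            if self.state is AcqState.IDLE:
                # Only a Start received while idle creates and starts a task.
                self.tasks_started += 1
                self.state = AcqState.ACQUIRING
            # A second Start while ACQUIRING is ignored instead of
            # creating a second task on the same hardware.
        elif message == "Stop Acquisition":
            self.state = AcqState.IDLE


if __name__ == "__main__":
    loop = AcquisitionLoop()
    # Per the reply, one press-and-release of the latched Start button yields
    # two value-change events and therefore two Start messages.
    for msg in ["Start Acquisition", "Start Acquisition"]:
        loop.handle(msg)
    print(loop.state, loop.tasks_started)
```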

As for the two alternating indicator messages: during logging, Force Trigger is set to false. While the program is logging, Force Trigger is disabled. After logging, the flag is set to "Wait on trigger" because, as already stated, it is disabled. Then Force Trigger is true once again, and it logs again. This process repeats, and the indicator switches between these two states.

When you open the "Logging Message Loop" VI, you will see that Force Trigger is set to false.

Kind regards

Whirlpool

-

Possible bug in sv_Harmonic Distortion and Noise.vi

Hello

I ran into what seems to be a bug in sv_Harmonic Distortion and Noise.vi.

I call this VI from a higher-level VI which is part of the Sound and Vibration Toolkit (SVT SNR without harmonic (time) (1ch).vi).

What is happening is that I sometimes get error 0xFFFFB1A3 (-20061):

Error -20061 occurred at NI_MABase.lvlib
ine Waveform.vi:22 > NI_MABase.lvlib:ma_Trap Fgen parameter Errors.vi:1
frequency must be <= sampling …
Possible reasons:
Analysis: The selection is not valid.
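The error number is quoted in two forms above. They are the same 32-bit value: 0xFFFFB1A3 read as an unsigned hex number and -20061 read as a two's-complement signed integer. A small Python sketch for converting between the two (the helper names are made up for illustration):

```python
def to_signed32(code):
    """Interpret an unsigned 32-bit value as a two's-complement signed integer."""
    return code - (1 << 32) if code & 0x80000000 else code


def to_hex32(code):
    """Format a (possibly negative) error code as an unsigned 32-bit hex string."""
    return f"0x{code & 0xFFFFFFFF:08X}"


if __name__ == "__main__":
    print(to_signed32(0xFFFFB1A3))  # -20061
    print(to_hex32(-20061))         # 0xFFFFB1A3
```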

This error is actually produced in sv_Harmonic Distortion and Noise.vi by one of its subVIs (svc_Extract Single Tone Information (complex).vi), although I'm not able to enable debugging for this VI, so I can't dig deeper.

What seems to be happening is that for certain fundamental frequencies, where a higher-order harmonic falls very close to Fs/2, you get the error. I have attached a waveform and a simple VI that generates the error. From my debugging, it seems that the error occurs when the code attempts to extract the harmonic at 5119.53 Hz. Fs/2 is 5120 Hz, so it should be valid, but it generates an error.

Thank you

-mat

Hey Matt,

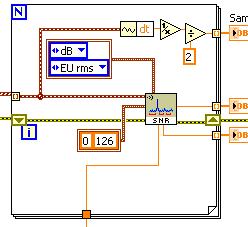

I spoke with R&D, and the source of this problem is that we detect a peak at exactly the Nyquist frequency for the 127th harmonic; although this should work, we get a false error. To work around the problem, you can limit the harmonic range to 0-126, as shown in the image. Having one fewer harmonic should not be a big problem, as the 127th harmonic is in the noise anyway. R&D is now aware of the issue and will look into it. Thank you!
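A quick way to see which harmonic order lands on or just below Nyquist for a given fundamental. This is a sketch under stated assumptions: Fs = 10240 Hz is inferred from "Fs/2 is 5120 Hz", the fundamental is back-calculated from the 127th harmonic at 5119.53 Hz, and `margin_hz` is a hypothetical guard band, not a toolkit parameter; how close to Nyquist the real VI can tolerate depends on its record length and windowing.

```python
import math

FS_HZ = 10240.0          # inferred from "Fs/2 is 5120 Hz" in the question
F0_HZ = 5119.53 / 127    # fundamental implied by the 127th harmonic at 5119.53 Hz


def highest_harmonic(f0_hz, fs_hz, margin_hz=0.0):
    """Largest harmonic order k with k * f0 <= fs/2 - margin (hypothetical guard band)."""
    return math.floor((fs_hz / 2 - margin_hz) / f0_hz)


if __name__ == "__main__":
    print(highest_harmonic(F0_HZ, FS_HZ))                 # 127: about 0.47 Hz below Nyquist
    print(highest_harmonic(F0_HZ, FS_HZ, margin_hz=1.0))  # 126: matches the workaround's limit
```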

-

Continuously measure and write waveform using PDM

Hi all

I'm doing my thesis using LabVIEW 2010 (since this is the only version currently available at my university). I need to read and save data from the microphone (currently I simulate a signal, because I need to make the program work first), then save and analyse it (I have not reached this point yet). I tried to use an event structure to record and then play back TDMS files. But unfortunately it saves only a small piece, so I inserted a while loop so that it would record continuously, but the program stops responding once recording starts, and I can only close it manually from the toolbar. Could someone please help me or suggest something? I'm not very good at LabVIEW, and any comment is welcome. Here's what I've done so far. I tried searching the forums for a similar solution but did not find anything useful (some used a much newer version, so I could not open them). Thank you.

Hi and welcome to the forums,

The reason you cannot stop the waveform recording or exit the application is that you have the event case set to lock the front panel until the event case for this event completes (in Edit Events). This means that LabVIEW will not respond to the user until that event case finishes, but because the only way to finish the event case is to press the while loop's Stop button, you have a deadlock and must abort the VI.

The architecture of your application is not ideal. For the reason above, I highly recommend not putting anything that takes a long time to execute inside your event structure (obviously, as a quick fix, you can uncheck the lock front panel option). I would have a look at the Producer/Consumer Design Pattern (Events) (New... > From Template > Frameworks), because it would be more appropriate for your application. You can handle your button presses in the event structure and have a state machine in the bottom loop for starting, running and stopping your data acquisition.

The idea is that you do very little inside the event structure, so that it frees up the front panel, while the messages (e.g. start data acquisition, quit the application) are handled by another loop.
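The producer/consumer idea can be sketched outside LabVIEW with Python's `queue` and `threading` modules. This is an analogue, not the LabVIEW template: `run` plays the role of the event-structure loop (each "button press" only enqueues a message), `consumer_loop` plays the bottom loop's state machine, and the `"chunk"` entries are placeholders for acquiring and writing one block of data. The command list must end with `"quit"`, or `run` will wait forever.

```python
import queue
import threading
import time


def consumer_loop(messages, log):
    """Consumer: a small state machine driven by queued messages.

    Long-running work happens only here, so the producer never blocks.
    """
    state = "idle"
    while True:
        if state == "acquiring":
            try:
                msg = messages.get_nowait()  # keep acquiring; just check for commands
            except queue.Empty:
                msg = None
        else:
            msg = messages.get()  # idle: wait for the next command

        if msg == "start" and state == "idle":
            state = "acquiring"
            log.append("started")
        elif msg == "stop" and state == "acquiring":
            state = "idle"
            log.append("stopped")
        elif msg == "quit":
            log.append("quit")
            return

        if state == "acquiring":
            log.append("chunk")  # placeholder for "read one chunk, write it to the file"
            time.sleep(0.001)


def run(commands):
    """Producer side: each 'button press' only enqueues a message and returns."""
    messages = queue.Queue()
    log = []
    worker = threading.Thread(target=consumer_loop, args=(messages, log))
    worker.start()
    for cmd in commands:
        messages.put(cmd)
        time.sleep(0.005)
    worker.join()
    return log


if __name__ == "__main__":
    print(run(["start", "stop", "quit"]))
```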

I don't know if it comes with LabVIEW 2010, but in 2012/2013 there are example projects, including a "Continuous Measurement and Logging" project, that may be suitable for your application. There are also examples of state machines and queued message handlers.

-

Satellite L10 fan speed and noise at startup

My L10's cooling fan always starts at maximum speed: when it turns on, it always bursts at maximum speed (and noise) and then slows down to a (relatively silent) speed. This fan start-up noise is very annoying (the fan starts every half hour during office work and every 15 minutes in some simple games).

I tried the quiet and performance BIOS settings; they do not fix the noise or the starting speed.

Maybe Toshiba could release a BIOS update or patch or something to fix this? (My BIOS version is 2.0.)

Maybe there is a third-party software solution for this problem?

Or is the maximum fan speed just a hardware problem? Thank you.

Hello Albert

It's the same with my Qosmio F20, and in my opinion it is not any kind of technical problem. At the moment I'm typing this text and listening to Internet radio at the same time, and the cooling fan runs regularly.

In my opinion, when the operating system runs several processes in the background, they require CPU activity, and the CPU needs to be cooled properly. You should check the power management settings and lower the processor performance level.

If the cooling fan runs every 15 minutes, in my opinion that is not too often.

Goodbye

-

Need help.

We have some problems when shooting in low light.

http://antosch.dyndns.TV/owncloud/index.php/s/mKOEqbsXQrwFVSn

You can see banding and noise in the viewfinder and on the on-board screen, as well as in the pictures.

Increasing the brightness also enhances the effect.

The parameters are:

AE Slog3 movie

MPEG 25 1920 x 1080 p

Exposure Index 3200

MLUT LC709 Type A for the viewfinder and SDI

-

ECU Measurement and Calibration Toolkit with a third-party CAN interface

I would like to use the NI ECU Measurement and Calibration Toolkit with a non-NI CAN interface. Interface devices supporting J2534 are very common. Does the NI ECU Measurement and Calibration Toolkit allow this? If so, how does one point the toolkit at another CAN interface?

The NI ECU Measurement and Calibration Toolkit only supports National Instruments hardware.

If you want to discuss the possibility of using third-party hardware, please contact your local representative.

-

How to measure and plot the value of real-time data?

Hello

I need to measure and plot the RMS value of an EMG signal in real time. I made a VI, but I don't know why it doesn't work. Can someone help me please? My VI is saved in both 2013 and 2011 versions. An example of the data is also attached. Thanks in advance.

Taslim.Reza wrote:

I tried the RMS PtByPt VI, but it could not be wired because the source was a 1D array of double and the sink was a double.

Well, the PtByPt VIs operate on only one value at a time, so you must place them in a For Loop. Here's how.
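The same pattern in Python, as a hypothetical analogue of wrapping a point-by-point VI in a For Loop (this is not NI's implementation of RMS PtByPt): each call to `update` takes one scalar, keeps the last `window` samples and returns their RMS, and the loop feeds it the 1D array one element at a time. The sample values are made up.

```python
import math
from collections import deque


class RmsPtByPt:
    """Sliding-window RMS that accepts one sample per call (point-by-point style)."""

    def __init__(self, window):
        self.samples = deque(maxlen=window)  # oldest sample drops out automatically

    def update(self, x):
        self.samples.append(x)
        return math.sqrt(sum(s * s for s in self.samples) / len(self.samples))


if __name__ == "__main__":
    emg = [0.0, 1.0, -1.0, 1.0, -1.0, 0.5]  # made-up values, not the attached data
    rms = RmsPtByPt(window=4)
    # The "For Loop": feed the 1D array to the point-by-point function element by element.
    trace = [rms.update(x) for x in emg]
    print([round(v, 3) for v in trace])
```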

Maybe you are looking for

-

The software update does not provide the option to display a document preview or to print on the back of multiple pages.

-

Contact app e-mail field display problem

Hello, I just bought the Xperia M4 Dual, and in the Contacts application there is a bug in the email field. When the email contains something like 123@, the digits are replaced by the whole email itself. I tested with values from email -Test1

-

Mouse and keyboard stop working when trying to install Windows 7 on a new-generation platform.

I have a new build on a Skylake platform, and when I try to install Windows 7, I get to the installation screen with the choice of country, etc., and my keyboard and mouse have no function, so I can't click or tab to make choices. Basicall

-

MSXML 4 update (version 4.20.9818.0) is not in the SysWOW64 folder.

Windows 7 Pro x64, SP1. The MSXML 4 update (version 4.20.9818.0) is not in the SysWOW64 folder. Secunia PSI lists this version of MSXML 4 as a security risk. This became apparent only after I installed SP1 on my 64-bit Windows 7 Pro PC. There is n

-

How to move objects from root to a sub-org and vice versa.

On each object, when I right-click, I see a Copy option. What is it used for? I need to move some policies from root to a sub-org. Are removing and re-creating the policies the only option?