Low-cost measurement of an analog input (4-20 mA) with data acquisition

Hello

I would like a very low-cost system for measuring a current loop that I can plug into my laptop:

I have a load connected to a signal conditioning/amplifier circuit, which outputs 4-20 mA. I want to measure this current loop signal.

Any ideas for the lowest-cost system? I think most NI DAQ devices are too expensive and overkill.

I have LabVIEW.

Use a low-value current-sense resistor, then measure the voltage drop across it with a 6008/6009 data acquisition device? They cost about $150.

You will probably need to amplify the current-sense resistor voltage with a MAX4372 or similar to get a signal that uses a reasonable range on the data acquisition device. I measure the current through our products this way in almost all of our test equipment. Size the sense resistor accordingly. The 6008 is accurate enough, but it is neither the fastest nor the best featured. But at $150, it is hard to beat.
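For what it's worth, the arithmetic behind the sense-resistor approach can be sketched in a few lines of Python. This is only an illustration: the function names are made up here, and the resistor value and amplifier gain in the example are assumptions, not values from this thread. Check the gain of the actual amplifier part you use.

```python
def sense_voltage_to_current_ma(v_measured, r_sense_ohms, amp_gain=1.0):
    """Convert the voltage read by the DAQ device into loop current in mA.

    v_measured   : voltage at the DAQ input, in volts
    r_sense_ohms : sense resistor value (assumed, pick your own)
    amp_gain     : gain of the current-sense amplifier (1.0 if none is used)
    """
    if r_sense_ohms <= 0 or amp_gain <= 0:
        raise ValueError("resistance and gain must be positive")
    # Ohm's law on the un-amplified drop, then amps -> milliamps.
    return v_measured / (r_sense_ohms * amp_gain) * 1000.0


def current_to_percent_of_span(current_ma, low_ma=4.0, high_ma=20.0):
    """Map a 4-20 mA reading onto 0-100 % of the transmitter span."""
    return (current_ma - low_ma) / (high_ma - low_ma) * 100.0


if __name__ == "__main__":
    # Hypothetical setup: 1 ohm sense resistor followed by a gain-of-50 amplifier.
    volts = 0.6
    ma = sense_voltage_to_current_ma(volts, r_sense_ohms=1.0, amp_gain=50.0)
    print(f"{ma:.2f} mA, {current_to_percent_of_span(ma):.1f} % of span")
```

A reading well below 4 mA usually means a broken loop, so it is worth flagging that case rather than silently reporting a negative percentage.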

Tags: NI Hardware

Similar Questions

-

What is a low-cost switch that can talk to LabVIEW?

Hello! I apologize if this question is in the wrong sub-forum; let me know of a more appropriate one and I will happily move it.

I have a test chip with four devices on it that I measure through a lock-in amplifier. Currently, we can make one measurement, then have to physically move the connection to test the next device. I would like to automate this process. I have hunted around for a relatively low-cost switch that can talk to LabVIEW and select each of the four signals in turn, but have not had much luck. I was wondering if anyone had recommendations or devices that they had worked with.

Thanks in advance,

Dan

Dan,

I would look at using reed relays. Get ones with gold contacts so that they work well at microampere currents. Use a DAQ card with digital outputs to drive the relays. Before you buy, check the relay coil current against what the digital outputs of the DAQ hardware can supply.
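As a rough sketch of the two checks involved (not tied to any particular DAQ driver), the Python below computes whether a digital output could drive a relay coil directly and builds the one-of-four line pattern with an all-open step between channels. All names and the example numbers are hypothetical; take the real coil resistance and output current limit from the datasheets.

```python
def can_drive_directly(coil_voltage_v, coil_resistance_ohms, max_output_ma):
    """True if the coil current is within the digital output's current limit."""
    coil_current_ma = coil_voltage_v / coil_resistance_ohms * 1000.0
    return coil_current_ma <= max_output_ma


def relay_pattern(selected, n_relays=4):
    """One-hot list of line states: only the selected relay is energized."""
    if not 0 <= selected < n_relays:
        raise ValueError("selected relay index out of range")
    return [i == selected for i in range(n_relays)]


def switch_sequence(n_relays=4):
    """Yield line patterns that open every relay before closing the next one."""
    for channel in range(n_relays):
        yield [False] * n_relays          # break: all relays open
        yield relay_pattern(channel, n_relays)  # make: one relay closed


if __name__ == "__main__":
    # Hypothetical 5 V, 500 ohm coil and an 8.5 mA output limit.
    print(can_drive_directly(5.0, 500.0, 8.5))
    for pattern in switch_sequence():
        print(pattern)
```

Each pattern would then be written to the digital output lines by whatever driver call you use; if the coil current check fails, a transistor or driver IC between the output and the coil is the usual answer.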

Lynn

-

Low-cost smart or managed switch with a console interface

Could someone recommend the lowest-cost smart or managed switch with a console interface?

I only need 4 ports but need to control CLI, telnet, or SSH.

no downtime

-

Analog input trigger on the counter gate to measure frequency

Hello

I want to measure a frequency on the analog input, but it doesn't seem to work.

I'm working with DAQmx using the ANSI C API.

As a first step, I acquired data on the analog input. With a simulated device, it shows a sine wave on the input.

My next step is to generate a trigger signal for the counter, but this doesn't seem to work.

I don't see how to connect the analog input trigger to the counter input.

To create the analog input and the trigger, I use the following code:

DAQmxErrChk (DAQmxCreateTask("",&taskHandle));

DAQmxErrChk (DAQmxCreateAIVoltageChan(taskHandle,"Dev1/ai0","",DAQmx_Val_Cfg_Default,-3.0,3.0,DAQmx_Val_Volts,NULL));

DAQmxErrChk (DAQmxCfgSampClkTiming(taskHandle,"",10000.0,DAQmx_Val_Rising,DAQmx_Val_FiniteSamps,1000));

DAQmxErrChk (DAQmxCfgAnlgEdgeStartTrig(taskHandle,"Dev1/ai0",DAQmx_Val_RisingSlope,0.0));

To create the counter, I use the following code:

DAQmxErrChk (DAQmxCreateCIFreqChan (taskHandle1, "Dev1/ctr1", "", 1 January 2000, DAQmx_Val_Hz, DAQmx_Val_Rising, DAQmx_Val_LowFreq1Ctr, 1, 4, ""));

I hope someone can give me a hint.

I also tried the examples that ship with DAQmx, but as far as I can tell they only cover counters driven by digital inputs.

Thanks in advance.

Hello

You must use the analog comparison event as the counter input. Set this property after the channel creation function.

DAQmxSetChanAttribute (taskHandle1, "", DAQmx_CI_Freq_Term, "/Dev1/AnalogComparisonEvent");

Kind regards

Bottom

-

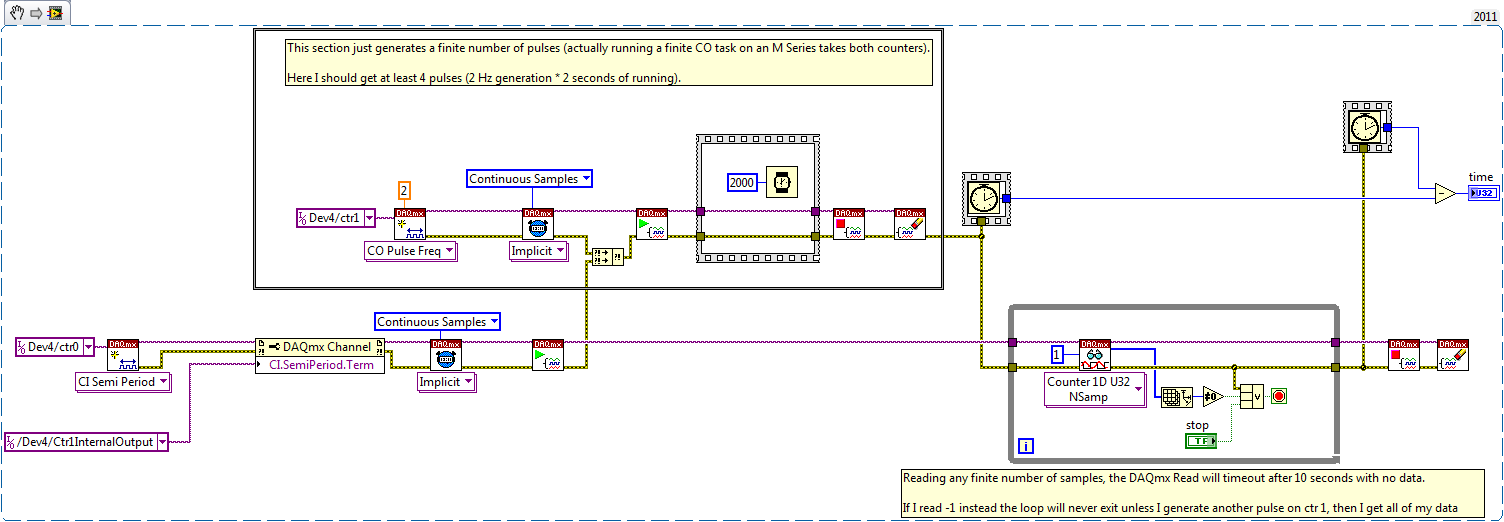

Unable to retrieve counter input measurements on USB M Series

I have an application where I need to measure an arbitrary number of semi-periods using a buffered counter input task on a USB M Series device (6221). As the number of pulses is undetermined, I made the task continuous (but a finite task with a buffer larger than my number of semi-periods gives the same behavior). I start the counter input task and then trigger an external pulse generator. I get a signal saying the external pulse generator has finished, and at that point I know I can read back all the acquired samples.

I expected that (for a small number of pulses) reading -1 would simply return an empty array, since my samples would be sitting in a hardware FIFO on the USB device and DAQmx would have no way of knowing about them (indeed, this is the case). However, it turns out that reading a finite number of samples does not initiate a transfer either and instead results in a timeout (even with the timeout set to the default value of 10 seconds). It seems that after DAQmx Read is called, an additional pulse is needed to start the transfer of the buffer to software (as if there were some kind of pipeline). However, for this application to work I need to read after the last pulse has been generated so that I can measure all the pulses. Generating an additional pulse after the read lets me read my full buffer, but I cannot afford to send an additional pulse (it would trigger my pulsed bias supply system) just to read back my data.

Here's a simple reproduction VI:

I should also mention that the behavior is correct on the USB-621x (and so presumably on X Series as well). In other words, reading a finite number of samples does initiate the transfer (no additional pulse is needed), so I can read 1 sample followed by -1 to get all my data. Internal buses (PCI, PXI, PCIe, PXIe) do not have the USB FIFO, so I do not expect them to show the behavior either. To reproduce, you would need to run it on a USB-622x, 625x, or 628x.

If I make the task finite and supply exactly the right number of pulses, it works (I can simply read back the desired number of samples), but since I don't know how many pulses will be generated, I have to make the task continuous or end up with a much larger buffer. In both cases, it seems impossible to get all of my data back.

Does anyone know a way to initiate the transfer for a semi-period task on a (non-bus-powered) USB M Series device once the signal source has stopped producing pulses? A hardware change (or alternatives such as a timestamped DI change detection task) is not an option for me, as we already have several systems deployed to customers and this input is wired to a PFI line on a USB-6221 OEM. The USB Xfer Request Size property does not seem to be supported on USB M Series for semi-period tasks. I also tried querying Available Samples Per Channel (thinking this might initiate a transfer), but it made no difference.

Best regards

Hello again John! As usual, you are pushing our products to the limit!

Unfortunately, in this case, this is the expected behavior. NI Signal Streaming technology allows packet-based buses such as USB, Ethernet, and wireless to serve high-bandwidth applications. Unfortunately, our first generation of data acquisition products to use NI Signal Streaming was based on the STC2 ASIC, which was not originally designed with NI Signal Streaming in mind. Counters in particular are a special circumstance where things get particularly odd. When you call DAQmx Read, we usually tell the device to send across all the data you request instead of waiting to build a maximum-size packet for best use of USB bandwidth. In the counter case, however, the device actually needs to acquire one more sample than you asked for. The reason is that we must manually check the counter subsystem for error conditions before we send you any data that may not be valid. Because of this, we insert an additional step in the chain of events before data is sent across the bus to ensure that the data we send is in fact valid. This requires the device to acquire one sample beyond what you requested in order to flush out any error conditions that could have occurred in the subsystem.

As you have noticed, this should be an issue only on the USB M Series devices. Bus-powered USB M Series devices (USB-621x), X Series (USB-63xx), and all plug-in devices should not have this problem.

-

Low cost brushless three-phase amplifier

I am using a cRIO to control a specialty three-phase brushless motor. NI has the NI 9505 module for controlling brushed motors. I have not found one for brushless motors. There are plenty of third-party integrated brushless motor controllers on the market. They will not work for me. I need to control the output transistors directly from the cRIO.

I guess I'm looking for a low-cost three-phase bridge amplifier. The low cost is necessary because it will be part of an OEM product. The engine is rated at 40 volts and 10 amps.

Does anyone have any suggestions?

Here are the Gerber files and the source files for the board that I built. It was designed in EagleCAD, and the final design is drive1.brd. I don't think there are any errors in this revision of the board; it should be good to go if you build it this way.

Other than a few bridge-on-chip solutions that I found, there were no options for "dumb", low-power drives (drives without built-in control logic). Because I wanted to develop my own control algorithms, I had to either crack open a commercial drive or build my own. Of course, I went the second route.

Good luck.

-

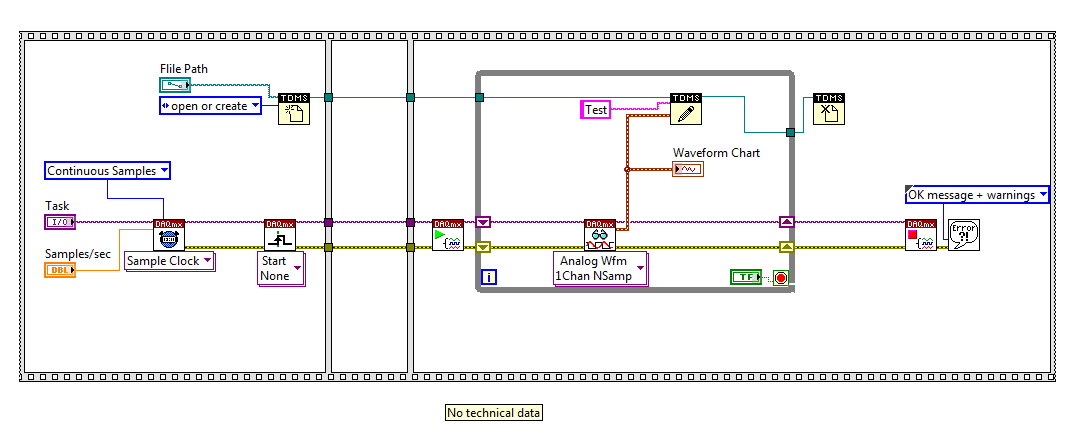

Trigger (starting data acquisition on an analog input)

Hello

I want to acquire a signal from a machine controller and save the data in a TDMS file. To develop this application, I created a simulated task. Everything works so far, except starting the data acquisition. I want the application to start recording data when the signal rises above a specific value and stop when the signal falls below that value, so that data is recorded only while the signal is above the set limit.

I already tried to use the DAQmx Trigger VI, but I always get the following error message when I use it.

Requested value is not a supported value for this property. The property value may be invalid because it conflicts with another property.

Property: Start.TrigType

Requested Value: Analog Edge

Possible Values: Digital Edge, None

I also read a lot of different posts on this topic, but I'm still not able to get it going.

I'm pretty new to LabVIEW, so please excuse me if I ask basic questions.

A picture and the VI itself are attached.

Update: the code has been saved in LV2014.
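If the device really offers no analog edge start trigger, as the error message suggests, one possible workaround is to acquire continuously and decide in software which samples to log. The sketch below shows only that gating logic, in Python rather than LabVIEW, with a made-up function name and made-up sample values. In a VI the same idea would be a comparison against the limit feeding a case structure around the file write.

```python
def samples_above_threshold(samples, threshold):
    """Split a stream of samples into runs that stay above the threshold.

    Each returned list is one recording segment: it starts when the signal
    rises above the threshold and ends when it drops back to or below it.
    """
    segments = []
    current = []
    for value in samples:
        if value > threshold:
            current.append(value)      # signal is above the limit: keep logging
        elif current:
            segments.append(current)   # signal fell back: close the segment
            current = []
    if current:
        segments.append(current)       # stream ended while still above the limit
    return segments


if __name__ == "__main__":
    # Hypothetical readings and limit, only to show the call.
    readings = [0.1, 0.4, 2.5, 3.1, 0.2, 2.8, 0.0]
    for segment in samples_above_threshold(readings, threshold=2.0):
        print(segment)
```

The trade-off is that the comparison happens after the data has been read into software, so the start of each segment is only as precise as the sample rate.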

-

Low-cost servers for virtualization

I'm looking for 2 low-cost servers that support x64 and hardware-assisted virtualization.

I think the Dell PowerEdge T300 would do. How about the Dell PowerEdge T105?

-

Hello

I have a PalmOne Zire 31. I'm looking to download games to play while I'm waiting at the doctor's office (so to speak). If the package says it's for Palm OS, does that mean it will work on my PDA? Thank you very much for your help...

Hello darlened, and welcome to the Palm forums.

Most of the time, if an application or a game is written for Palm OS 5, it will work with your Zire 31. That said, the Zire 31 is a low-cost Palm device and does not have all the same features as the more expensive Palm PDAs, the Tungsten E2 and TX.

I suggest that you download and install a demo/trial version before buying, and confirm on the website that the Zire 31 is supported.

Alan G

-

Is AEC in Flash 10.3 available only with LCCS?

Hello

I wanted to know if the Flash 10.3 AEC feature is only available with LCCS?

The AEC swc file I see everywhere is an LCCS one. Is there a playerglobal.swc that is not LCCS-specific, or can we use the same swc when we develop a custom application that does not use LCCS?

Any help is very appreciated.

Thank you.

LiveCycle is a Java server that you can integrate Flex. The same playerglobal.swc is used in Flex and Flash. Download the latest (FP11) http://www.adobe.com/support/flashplayer/downloads.html

--

Kenneth Kawamoto

-

LCCS recording (RTMFP vs RTMP/S protocols)

Hey everybody,

I read through all of http://learn.Adobe.com/wiki/display/LCCs/LiveCycle+collaboration+service and read through the forums (even on the FMS side, which may be relevant: http://forums.adobe.com/message/3345179#3345179 and http://forums.adobe.com/thread/736733 ). As LCCS recording is in beta, proper docs are missing for now.

Could you guys validate/invalidate my assumptions about LCCS recording:

Note: we currently require Flash 10.0 and link the LCCS SWC (Flash 10.0).

(1) Setting <rtc:AdobeHSAuthenticator id="auth" userName="{ourUserName}" password="{ourPassword}" protocol="RTMFP" /> will do the following:

(a) cause P2P multicast (which in turn uses UDP and no communication with the LCCS service) between client SWFs, which means no A/V stream recording.

(2) Setting <rtc:AdobeHSAuthenticator id="auth" userName="{ourUserName}" password="{ourPassword}" protocol="RTMPS" /> will do the following:

(a) cause TCP via the LCCS server, which will record the A/V streams just fine.

(3) What would I do if we wanted to take advantage of P2P multicast while recording the LCCS session? Do something like: http://forums.adobe.com/message/3345179#3345179 ?

(4) According to this: http://learn.adobe.com/wiki/display/lccs/09+Peer-to-Peer+Data+Messaging+and+AV+Multicasting we could do P2P multicast with Flash 10.1.

(a) Maybe I am completely wrong in #1 and #2, and by requiring Flash 10.1 for our application, I would get recording to work using the RTMFP protocol?

Thank you

Alex G.

Hi Alex,

OK - for now, when you do P2P RTMFP streaming, recording does not work. To work around this, we recommend either disabling RTMFP or setting StreamManager.maxP2PStreamPublish to 0. In the latter case, you would still use RTMFP and UDP and so get most of the benefits.

To answer each of your questions:

(1) Partially correct - RTMFP is required for P2P streaming, but not all RTMFP streaming is P2P. For example, once there are more than 3 recipients, we automatically switch to hub-and-spoke, because P2P becomes a burden for the publisher. You can control this threshold using StreamManager.maxP2PStreamPublish.

(2) Correct. This is a temporary workaround - I prefer the second workaround, using StreamManager.maxP2PStreamPublish = 0, which still allows the benefits of RTMFP without using P2P. In the next version, even if you are using RTMFP, once you turn on recording, all streams will automatically go over hub-and-spoke (without switching away from RTMFP).

(3) For the moment, there is no way to do P2P streaming with recording enabled. In the future (probably much later), we might have time to allow this, but it is quite difficult (perhaps not even possible) and it is not a high priority.

(4) It is important to separate these issues - RTMFP does not require P2P streaming. In fact, most of the benefits of RTMFP (mainly the fact that it is based on UDP) work over hub-and-spoke. What we have seen is that P2P multicast (enabled in 10.1, as opposed to direct P2P) is often not a great choice for conversations, because multicast introduces more latency than simply using hub-and-spoke.

hope this helps,

Nigel

-

How to ensure that no data is ever stored on the LCCS servers?

In particular, I would like to use SimpleChat and SharedWhiteboard in a secure application and do not want any data stored on the LCCS servers (they should only be used to manage the flow of data). At the very least, I don't want data stored between sessions, but the 'sessionDependent' property of these two pods doesn't seem to work (i.e. when it is set to 'true', chat history and whiteboard shapes are still preserved between sessions).

I put in a few hooks to clear both at the end of a session and at the start of a session, but what I really want is to ensure that the data is never stored in the first place.

Is this possible with these pods, or do I need to custom-develop something on my own to support this behavior?

Thank you

The data is stored only in memory. When persisting, we check the node configuration, and if the node is transient we do not save it to disk.

If you're really worried about a security breach, you should probably encrypt your messages before you send them through LCCS (it should be easy to subclass the current models and encrypt/decrypt items on the fly).

-

Low-cost router with DHCP option 66

I am trying to find the lowest-cost Cisco router with DHCP option 66. I use the router with SPA50x phones and need to be able to provision the configuration at boot.

I have been using the SRP521. It was suggested that I use the ISA550, but that just went end-of-life. Do all RV routers support it? I have not found it on an RV110, and I know it is not on the RV042. It seems to me that it is a feature that should be on a small business router.

Sage

Sage

I was also looking for a replacement for the SRP521. I found this in the Administration Guide for the RV180:

You can also try the RV emulators here: https://supportforums.cisco.com/community/netpro/small-business/onlinedemos.

Stephen

-

Why does the optimizer ignore an Index Fast Full Scan when its cost is much lower?

Summary (trace details below): to improve the performance of a query on more than one table, I created an index on one table that includes all the columns of that table referenced in the query. With the new index in place, the optimizer still chooses a Full Table Scan over an Index Fast Full Scan. However, by removing tables from the query one at a time, I reach a point where the optimizer suddenly uses the Index Fast Full Scan on this table. And yes, it is a lot cheaper than the Full Table Scan it used before. While building a test case, I was able to get the query down to 4 tables with the optimizer still ignoring the index; with 3 tables, it uses the index.

So why does the optimizer not choose the Index Fast Full Scan, when it is obviously so much cheaper than a Full Table Scan? And why does removing a table change how the optimizer behaves? I don't think there is a problem with the number of join permutations (see below). The query is as simple as I can make it while remaining true to the original application SQL, and it still shows this reversal in the choice of access path. I can run the queries one after another, and the optimizer always uses a Full Table Scan for the original query and an Index Fast Full Scan for the modified query with one table fewer.

Looking at the 10053 trace output for the two queries, I can see that for the original 4-table query the SINGLE TABLE ACCESS PATH section costs only a Full Table Scan. But for the modified query with one table fewer, the table now has a cost for an Index Fast Full Scan as well. And the join costing in the 10053 trace does not end with a message about exceeding the maximum number of permutations. So why does the optimizer not cost the IFFS for the first query, when it does for the second, nearly identical query?

This is potentially a problem to do with OUTER joins, but why? The joins between the remaining tables do not change when the single extra table is removed.

This is on 10.2.0.5 on Linux (Oracle Enterprise Linux). I have not set any special parameters that I know of. I see the same behavior on 10.2.0.4 32-bit on Windows (XP).

Thank you

John

Blog of database Performance

DETAILS

I've reproduced the entire scenario via SQL scripts that create and populate the tables, against which I can then run the queries. I've deliberately padded the tables so that the average row length of the generated data is similar to that of the real data. That way the statistics should be similar in terms of number of blocks and so forth.

System - uname -a

Linux mysystem.localdomain 2.6.32-300.25.1.el5uek #1 SMP Tue May 15 19:55:52 EDT 2012 i686 i686 i386 GNU/Linux

Database - v$version

Oracle Database 10g Enterprise Edition Release 10.2.0.5.0 - Prod
PL/SQL Release 10.2.0.5.0 - Production
CORE 10.2.0.5.0 Production
TNS for Linux: Version 10.2.0.5.0 - Production
NLSRTL Version 10.2.0.5.0 - Production

Original query (complete table details below):

SELECT episode.episode_id , episode.cross_ref_id , episode.date_required , product.number_required , request.site_id
FROM episode
LEFT JOIN REQUEST on episode.cross_ref_id = request.cross_ref_id
JOIN product ON episode.episode_id = product.episode_id
LEFT JOIN product_sub_type ON product.prod_sub_type_id = product_sub_type.prod_sub_type_id
WHERE ( episode.department_id = 2 and product.status = 'I' )
ORDER BY episode.date_required ;

Execution plan from display_cursor after execution:

SQL_ID 5ckbvabcmqzw7, child number 0
-------------------------------------
SELECT episode.episode_id , episode.cross_ref_id , episode.date_required , product.number_required , request.site_id FROM episode LEFT JOIN REQUEST on episode.cross_ref_id = request.cross_ref_id JOIN product ON episode.episode_id = product.episode_id LEFT JOIN product_sub_type ON product.prod_sub_type_id = product_sub_type.prod_sub_type_id WHERE ( episode.department_id = 2 and product.status = 'I' ) ORDER BY episode.date_required

Plan hash value: 3976293091

-----------------------------------------------------------------------------------------------------
| Id | Operation | Name | Rows | Bytes |TempSpc| Cost (%CPU)| Time |
-----------------------------------------------------------------------------------------------------
| 0 | SELECT STATEMENT | | | | | 35357 (100)| |
| 1 | SORT ORDER BY | | 33333 | 1920K| 2232K| 35357 (1)| 00:07:05 |
| 2 | NESTED LOOPS OUTER | | 33333 | 1920K| | 34879 (1)| 00:06:59 |
|* 3 | HASH JOIN OUTER | | 33333 | 1822K| 1728K| 34878 (1)| 00:06:59 |
|* 4 | HASH JOIN | | 33333 | 1334K| | 894 (1)| 00:00:11 |
|* 5 | TABLE ACCESS FULL| PRODUCT | 33333 | 423K| | 103 (1)| 00:00:02 |
|* 6 | TABLE ACCESS FULL| EPISODE | 299K| 8198K| | 788 (1)| 00:00:10 |
| 7 | TABLE ACCESS FULL | REQUEST | 3989K| 57M| | 28772 (1)| 00:05:46 |
|* 8 | INDEX UNIQUE SCAN | PK_PRODUCT_SUB_TYPE | 1 | 3 | | 0 (0)| |
-----------------------------------------------------------------------------------------------------

Predicate Information (identified by operation id):
---------------------------------------------------
3 - access("EPISODE"."CROSS_REF_ID"="REQUEST"."CROSS_REF_ID")
4 - access("EPISODE"."EPISODE_ID"="PRODUCT"."EPISODE_ID")
5 - filter("PRODUCT"."STATUS"='I')
6 - filter("EPISODE"."DEPARTMENT_ID"=2)
8 - access("PRODUCT"."PROD_SUB_TYPE_ID"="PRODUCT_SUB_TYPE"."PROD_SUB_TYPE_ID")

Modified query:

SELECT episode.episode_id , episode.cross_ref_id , episode.date_required , product.number_required , request.site_id
FROM episode
LEFT JOIN REQUEST on episode.cross_ref_id = request.cross_ref_id
JOIN product ON episode.episode_id = product.episode_id
WHERE ( episode.department_id = 2 and product.status = 'I' )
ORDER BY episode.date_required ;

Execution plan from display_cursor after execution:

SQL_ID gbs74rgupupxz, child number 0
-------------------------------------
SELECT episode.episode_id , episode.cross_ref_id , episode.date_required , product.number_required , request.site_id FROM episode LEFT JOIN REQUEST on episode.cross_ref_id = request.cross_ref_id JOIN product ON episode.episode_id = product.episode_id WHERE ( episode.department_id = 2 and product.status = 'I' ) ORDER BY episode.date_required

Plan hash value: 4250628916

----------------------------------------------------------------------------------------------
| Id | Operation | Name | Rows | Bytes |TempSpc| Cost (%CPU)| Time |
----------------------------------------------------------------------------------------------
| 0 | SELECT STATEMENT | | | | | 10515 (100)| |
| 1 | SORT ORDER BY | | 33333 | 1725K| 2112K| 10515 (1)| 00:02:07 |
|* 2 | HASH JOIN OUTER | | 33333 | 1725K| 1632K| 10077 (1)| 00:02:01 |
|* 3 | HASH JOIN | | 33333 | 1236K| | 894 (1)| 00:00:11 |
|* 4 | TABLE ACCESS FULL | PRODUCT | 33333 | 325K| | 103 (1)| 00:00:02 |
|* 5 | TABLE ACCESS FULL | EPISODE | 299K| 8198K| | 788 (1)| 00:00:10 |
| 6 | INDEX FAST FULL SCAN| IX4_REQUEST | 3989K| 57M| | 3976 (1)| 00:00:48 |
----------------------------------------------------------------------------------------------

Predicate Information (identified by operation id):
---------------------------------------------------
2 - access("EPISODE"."CROSS_REF_ID"="REQUEST"."CROSS_REF_ID")
3 - access("EPISODE"."EPISODE_ID"="PRODUCT"."EPISODE_ID")
4 - filter("PRODUCT"."STATUS"='I')
5 - filter("EPISODE"."DEPARTMENT_ID"=2)

Table creation and population:

1. create tables

2. load data

3. create indexes

4. gather statistics

--
-- Main table
--
create table episode ( episode_id number (*,0), department_id number (*,0), date_required date, cross_ref_id varchar2 (11), padding varchar2 (80), constraint pk_episode primary key (episode_id) ) ;
--
-- Product tables
--
create table product_type ( prod_type_id number (*,0), code varchar2 (10), binary_field number (*,0), padding varchar2 (80), constraint pk_product_type primary key (prod_type_id) ) ;
--
create table product_sub_type ( prod_sub_type_id number (*,0), sub_type_name varchar2 (20), units varchar2 (20), padding varchar2 (80), constraint pk_product_sub_type primary key (prod_sub_type_id) ) ;
--
create table product ( product_id number (*,0), prod_type_id number (*,0), prod_sub_type_id number (*,0), episode_id number (*,0), status varchar2 (1), number_required number (*,0), padding varchar2 (80), constraint pk_product primary key (product_id), constraint nn_product_episode check (episode_id is not null) ) ;
alter table product add constraint fk_product foreign key (episode_id) references episode (episode_id) ;
alter table product add constraint fk_product_type foreign key (prod_type_id) references product_type (prod_type_id) ;
alter table product add constraint fk_prod_sub_type foreign key (prod_sub_type_id) references product_sub_type (prod_sub_type_id) ;
--
-- Requests
--
create table request ( request_id number (*,0), department_id number (*,0), site_id number (*,0), cross_ref_id varchar2 (11), padding varchar2 (80), padding2 varchar2 (80), constraint pk_request primary key (request_id), constraint nn_request_department check (department_id is not null), constraint nn_request_site_id check (site_id is not null) ) ;
--
-- Activity & Users
--
create table activity (
  activity_id number (*,0),
  user_id number (*,0),
  episode_id number (*,0),
  request_id number (*,0), -- always NULL!
  padding varchar2 (80),
  constraint pk_activity primary key (activity_id) ) ;
alter table activity add constraint fk_activity_episode foreign key (episode_id) references episode (episode_id) ;
alter table activity add constraint fk_activity_request foreign key (request_id) references request (request_id) ;
--
create table app_users ( user_id number (*,0), user_name varchar2 (20), start_date date, padding varchar2 (80), constraint pk_users primary key (user_id) ) ;

prompt Loading episode ...
--
insert into episode
with generator as (select rownum r from (select rownum r from dual connect by rownum <= 1000) a, (select rownum r from dual connect by rownum <= 1000) b, (select rownum r from dual connect by rownum <= 1000) c where rownum <= 1000000 )
select r, 2, sysdate + mod (r, 14), to_char (r, '0000000000'), 'ABCDEFGHIJKLMNOPQRSTUVWXYZ' || to_char (r, '000000')
from generator g where g.r <= 300000
/
commit ;
--
prompt Loading product_type ...
--
insert into product_type
with generator as (select rownum r from (select rownum r from dual connect by rownum <= 1000) a, (select rownum r from dual connect by rownum <= 1000) b, (select rownum r from dual connect by rownum <= 1000) c where rownum <= 1000000 )
select r, to_char (r, '000000000'), mod (r, 2), 'ABCDEFGHIJKLMNOPQRST' || to_char (r, '000000')
from generator g where g.r <= 12
/
commit ;
--
prompt Loading product_sub_type ...
--
insert into product_sub_type
with generator as (select rownum r from (select rownum r from dual connect by rownum <= 1000) a, (select rownum r from dual connect by rownum <= 1000) b, (select rownum r from dual connect by rownum <= 1000) c where rownum <= 1000000 )
select r, to_char (r, '000000'), to_char (mod (r, 3), '000000'), 'ABCDE' || to_char (r, '000000')
from generator g where g.r <= 15
/
commit ;
--
prompt Loading product ...
--
-- product_id prod_type_id prod_sub_type_id episode_id padding
insert into product
with generator as (select rownum r from (select rownum r from dual connect by rownum <= 1000) a, (select rownum r from dual connect by rownum <= 1000) b, (select rownum r from dual connect by rownum <= 1000) c where rownum <= 1000000 )
select r, mod (r, 12) + 1, mod (r, 15) + 1, mod (r, 300000) + 1, decode (mod (r, 3), 0, 'I', 1, 'C', 2, 'X', 'U'), dbms_random.value (1, 100), NULL
from generator g where g.r <= 100000
/
commit ;
--
prompt Loading request ...
--
-- request_id department_id site_id cross_ref_id varchar2 (11) padding
insert into request
with generator as (select rownum r from (select rownum r from dual connect by rownum <= 1000) a, (select rownum r from dual connect by rownum <= 1000) b, (select rownum r from dual connect by rownum <= 1000) c where rownum <= 10000000 )
select r, mod (r, 4) + 1, 1, to_char (r, '0000000000'), 'ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz01234567890123456789' || to_char (r, '000000'), 'ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789012345678' || to_char (r, '000000')
from generator g where g.r <= 4000000
/
commit ;
--
prompt Loading activity ...
--
-- activity activity_id user_id episode_id request_id (NULL) padding
insert into activity
with generator as (select rownum r from (select rownum r from dual connect by rownum <= 1000) a, (select rownum r from dual connect by rownum <= 1000) b, (select rownum r from dual connect by rownum <= 1000) c where rownum <= 10000000 )
select r, mod (r, 50) + 1, mod (r, 300000) + 1, NULL, NULL
from generator g where g.r <= 100000
/
commit ;
--
prompt Loading app_users ...
--
-- app_users user_id user_name start_date padding
insert into app_users
with generator as (select rownum r from (select rownum r from dual connect by rownum <= 1000) a, (select rownum r from dual connect by rownum <= 1000) b, (select rownum r from dual connect by rownum <= 1000) c where rownum <= 10000000 )
select r, 'User_' || to_char (r, '000000'), sysdate - mod (r, 30), 'ABCDEFGHIJKLMNOPQRSTUVWXYZ' || to_char (r, '000000')
from generator g where g.r <= 1000
/
commit ;
--
prompt Episode (1)
create index ix1_episode_cross_ref on episode (cross_ref_id) ;
--
prompt Product (2)
create index ix1_product_episode on product (episode_id) ;
create index ix2_product_type on product (prod_type_id) ;
--
prompt Request (4)
create index ix1_request_site on request (site_id) ;
create index ix2_request_dept on request (department_id) ;
create index ix3_request_cross_ref on request (cross_ref_id) ;
-- The extra index on the referenced columns!!
create index ix4_request on request (cross_ref_id, site_id) ;
--
prompt Activity (2)
create index ix1_activity_episode on activity (episode_id) ;
create index ix2_activity_request on activity (request_id) ;
--
prompt Users (1)
create unique index ix1_users_name on app_users (user_name) ;
--
prompt Gather statistics on schema ...
--
exec dbms_stats.gather_schema_stats ('JB')

10053 sections - original query

***************************************
SINGLE TABLE ACCESS PATH
-----------------------------------------
BEGIN Single Table Cardinality Estimation
-----------------------------------------
Table: REQUEST Alias: REQUEST
Card: Original: 3994236 Rounded: 3994236 Computed: 3994236.00 Non Adjusted: 3994236.00
-----------------------------------------
END Single Table Cardinality Estimation
-----------------------------------------
Access Path: TableScan
Cost: 28806.24 Resp: 28806.24 Degree: 0
Cost_io: 28738.00 Cost_cpu: 1594402830
Resp_io: 28738.00 Resp_cpu: 1594402830
******** Begin index join costing ********
****** trying bitmap/domain indexes ******
Access Path: index (FullScan)
Index: PK_REQUEST
resc_io: 7865.00 resc_cpu: 855378926
ix_sel: 1 ix_sel_with_filters: 1
Cost: 7901.61 Resp: 7901.61 Degree: 0
Access Path: index (FullScan)
Index: PK_REQUEST
resc_io: 7865.00 resc_cpu: 855378926
ix_sel: 1 ix_sel_with_filters: 1
Cost: 7901.61 Resp: 7901.61 Degree: 0
****** finished trying bitmap/domain indexes ******
******** End index join costing ********
Best:: AccessPath: TableScan
Cost: 28806.24 Degree: 1 Resp: 28806.24 Card: 3994236.00 Bytes: 0
***************************************

10053 - modified query

***************************************
SINGLE TABLE ACCESS PATH
-----------------------------------------
BEGIN Single Table Cardinality Estimation
-----------------------------------------
Table: REQUEST Alias: REQUEST
Card: Original: 3994236 Rounded: 3994236 Computed: 3994236.00 Non Adjusted: 3994236.00
-----------------------------------------
END Single Table Cardinality Estimation
-----------------------------------------
Access Path: TableScan
Cost: 28806.24 Resp: 28806.24 Degree: 0
Cost_io: 28738.00 Cost_cpu: 1594402830
Resp_io: 28738.00 Resp_cpu: 1594402830
Access Path: index (index (FFS))
Index: IX4_REQUEST
resc_io: 3927.00 resc_cpu: 583211030
ix_sel: 0.0000e+00 ix_sel_with_filters: 1
Access Path: index (FFS)
Cost: 3951.96 Resp: 3951.96 Degree: 1
Cost_io: 3927.00 Cost_cpu: 583211030
Resp_io: 3927.00 Resp_cpu: 583211030
Access Path: index (FullScan)
Index: IX4_REQUEST
resc_io: 14495.00 resc_cpu: 903225273
ix_sel: 1 ix_sel_with_filters: 1
Cost: 14533.66 Resp: 14533.66 Degree: 1
******** Begin index join costing ********
****** trying bitmap/domain indexes ******
Access Path: index (FullScan)
Index: IX4_REQUEST
resc_io: 14495.00 resc_cpu: 903225273
ix_sel: 1 ix_sel_with_filters: 1
Cost: 14533.66 Resp: 14533.66 Degree: 0
Access Path: index (FullScan)
Index: IX4_REQUEST
resc_io: 14495.00 resc_cpu: 903225273
ix_sel: 1 ix_sel_with_filters: 1
Cost: 14533.66 Resp: 14533.66 Degree: 0
****** finished trying bitmap/domain indexes ******
******** End index join costing ********
Best:: AccessPath: IndexFFS Index: IX4_REQUEST
Cost: 3951.96 Degree: 1 Resp: 3951.96 Card: 3994236.00 Bytes: 0
***************************************

As I mentioned, this is probably a bug related to ANSI SQL syntax and query transformation.

As suggested/asked in my first reply:

1. If you use the NO_QUERY_TRANSFORMATION hint, you should find that the index is used (although not in the plan you would expect).

2. If you use the traditional Oracle join syntax, you should not have the same problem.

-

Pulse width measurement of signals generated by the data acquisition device

Ultimately, I would like to:

Start a counter pulse width measurement and the analog output at the same instant.

Stop the pulse width measurement with an external digital signal.

My current plan is to use a digital output on the DAQ device to drive both a digital input and the start of the counter input. The digital input will serve as the start trigger for the analog output. This works, except for the counter.

While trying to implement this, I tried a simple test: generate a digital pulse with the DAQ device and wire it to the counter inputs. It does not work, even though the pulse looks perfect on an oscilloscope. Then, without changing the software at all, I connected a function generator to my counter inputs, and it measured pulse widths flawlessly.

I actually implemented this with a Python wrapper around the C DAQmx API, but I recreated it in LabVIEW, and it behaves the same way. VI attached. I have the latest DAQmx drivers.

I accidentally posted this in a LabVIEW forum, and under a colleague's account. I think it warrants a separate post here. I'm sorry. The former post is http://forums.ni.com/ni/board/message?board.id=170&message.id=389856.

Solution: I had to configure the counter channel with implicit timing. In addition, sampsPerChanToAcquire must be at least 2; otherwise, there is an error. I still don't understand why it worked with an external pulse source, however.

DAQmxCfgImplicitTiming(task_handle, DAQmx_Val_FiniteSamps, 2)
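
For anyone who wants to try the same call from Python, here is a minimal sketch. It assumes NI's nidaqmx Python package rather than the poster's own wrapper around the C API, and the counter name "Dev1/ctr0", the helper names, and the min/max pulse width limits are placeholders chosen for illustration. It has not been run against the poster's hardware.

```python
def check_samples_per_channel(samples_per_channel):
    """Enforce the 'at least 2 samples' rule reported in the post."""
    if samples_per_channel < 2:
        raise ValueError("samples_per_channel must be at least 2")
    return samples_per_channel


def measure_pulse_widths(counter="Dev1/ctr0", samples_per_channel=2, timeout_s=10.0):
    """Read pulse widths (seconds) from a counter using implicit timing.

    'Dev1/ctr0' is a hypothetical device/counter name; adjust it to your setup.
    """
    # Imported here so the helper above can be used without the driver installed.
    import nidaqmx
    from nidaqmx.constants import AcquisitionType

    n = check_samples_per_channel(samples_per_channel)
    with nidaqmx.Task() as task:
        # Assumed limits: pulses between 1 microsecond and 100 ms.
        task.ci_channels.add_ci_pulse_width_chan(counter, min_val=1e-6, max_val=0.1)
        # Python counterpart of DAQmxCfgImplicitTiming(task, DAQmx_Val_FiniteSamps, 2)
        task.timing.cfg_implicit_timing(
            sample_mode=AcquisitionType.FINITE, samps_per_chan=n
        )
        return task.read(number_of_samples_per_channel=n, timeout=timeout_s)
```

Calling measure_pulse_widths() with a device attached should return a list of pulse widths. The validation lives in its own function so a bad sample count fails before any task is created.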

Maybe you are looking for

-

Anyone who has used the service of third party of AIOS USA?

I was contacted by a company called AIOS USA claiming to be third-party Apple support, and they offered to sell me a series of AppleCare plans for my MacBook Pro at a lower price than Apple charges. What led them to me was a claim that their Global MicroSoft S

-

Satellite L300 - horizontal line then by restarting

I have had this laptop for 2 or 3 years. As far as I can remember, for almost a year it has not started on the first try: about 2 minutes into loading Windows it restarts, and it does this 3 or 4 times before it works. Now, I try to boot it up, and

-

Equium A100-549 - reading disc error occurred press ctrl + alt + delete to restart

Hello, I have a Toshiba Equium A100-549 laptop, about 3 years old, running Windows XP Home Edition. Everything worked very well until now. When starting my computer, I received the message: "A disk read error occurred. Press Ctrl + Alt + Delete to restart." I don't want to lose my d

-

Thank you who ever. That took only about 20 minutes of my life to report this abuse obvious repeted. B to sift through and I really feel that I've been honest in all reports of abuse. Surprised this thread did not get ransacked earlier. Once again, t

-

No advance warning when a time-limited user is logged off

I set up time limits on my children's account (Vista SP3). However, there is no prior warning when the time span has elapsed. The screen just goes blank out of the blue! Is there a setting that makes the system display an advance warning, e.g. 5