Question about NI PCI-5154 digitizer timebase drift

Hello NI and everyone:

We have an NI PCI-5154 that we have used for several years now. We use it to capture pulse waveforms whose timing relationships we care about.

We operate the digitizer at a sampling rate of 1 GHz, and until today we assumed the sampling rate was precise and constant. Today a member of our group questioned that, since the digitizer specification gives the timebase drift as "±7 ppm/°C". If this is true, suppose our operating temperature is 20 °C higher than the temperature at which the digitizer was calibrated; then the drift could reach 140 ppm times 1 GHz, which is 140 kHz? That would be a killer for our measurements.
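The arithmetic in the question can be checked with a short sketch. It is only an illustration: the function names are made up for this example, and it assumes the ±7 ppm/°C figure applies linearly over the whole 20 °C offset, which is a worst-case reading of the specification. It also converts the drift into an error on a measured time interval (the 1 µs interval is an arbitrary example value), since that is what matters for timing relationships.

```python
def worst_case_drift_ppm(tempco_ppm_per_degc, delta_temp_degc):
    """Worst-case timebase drift in ppm, assuming a linear temperature coefficient."""
    return abs(tempco_ppm_per_degc * delta_temp_degc)


def clock_offset_hz(nominal_rate_hz, drift_ppm):
    """Absolute offset of the sample clock from its nominal rate, in Hz."""
    return nominal_rate_hz * drift_ppm * 1e-6


def interval_error_s(interval_s, drift_ppm):
    """Error on a measured time interval caused by the timebase drift, in seconds."""
    return interval_s * drift_ppm * 1e-6


# Values from the question: 7 ppm/degC, 20 degC above calibration, 1 GHz sample clock.
drift = worst_case_drift_ppm(7.0, 20.0)
offset = clock_offset_hz(1e9, drift)
# Hypothetical example: error on a 1 microsecond interval between two pulses.
error = interval_error_s(1e-6, drift)

print(f"drift: {drift:.1f} ppm")
print(f"sample clock offset: {offset / 1e3:.1f} kHz")
print(f"error on a 1 us interval: {error * 1e12:.1f} ps")
```

Under these assumptions the relative error on any interval is the same 140 ppm, so short intervals pick up only a small absolute error.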

Please help clarify this question so that we can estimate the errors in our measurements.

Unfortunately, we have no data on the repeatability of the time base drift.

To calculate the actual timebase frequency, simply reverse the calculations that we've discussed so far. Measure a very precise source on the digitizer, and any change in the reported frequency of the signal will be caused by the non-ideal timebase period.

For example, you measure a 10 MHz signal at 1 GS/s, and its frequency is reported as 10.001 MHz. So we're off by 1 kHz. 1 kHz = 10 MHz * X ppm; solve for X: X = 100 ppm. Thus, our sample clock is off by 100 ppm. At 1 GHz, 100 ppm gives us a period of either 0.9999 ns or 1.0001 ns. Since the reported frequency increased by 1 kHz, the signal was compressed when it was interpreted with a 1 ns dt. Thus, the real clock period was 1.0001 ns.
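The worked example above can be written as a small sketch. The function names are invented for illustration, and the sketch assumes the reference source is exact, so that the whole frequency error is attributed to the digitizer timebase.

```python
def timebase_error_ppm(reference_hz, reported_hz):
    """Timebase error in ppm, inferred from a reference of known frequency."""
    return (reported_hz - reference_hz) / reference_hz * 1e6


def actual_sample_period_s(nominal_period_s, reference_hz, reported_hz):
    """Real sample period.

    If the reported frequency is too high, the samples were actually spaced
    further apart than the nominal dt used to interpret them.
    """
    return nominal_period_s * reported_hz / reference_hz


# Numbers from the example: 10 MHz reference, reported as 10.001 MHz, nominal dt = 1 ns.
ppm = timebase_error_ppm(10e6, 10.001e6)
period = actual_sample_period_s(1e-9, 10e6, 10.001e6)

print(f"timebase error: {ppm:.1f} ppm")
print(f"actual sample period: {period * 1e9:.4f} ns")
```

Rescaling the time axis of later acquisitions by the same ratio (reported frequency divided by reference frequency) would correct their timing, as long as the drift has not changed in between.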

Because it sounds like you can't control the temperature of your work environment, for the most accurate measurements you can measure the timebase clock drift immediately before and after taking your measurements. If you run your tests in a temperature-controlled environment, you might be able to get away with measuring the timebase drift less often, but you should still do it regularly. The reason for this is the aging of the timebase oscillator, which affects all oscillators: their accuracy gradually drifts over time. Our specifications account for this drift over the external calibration interval, but if you're going to measure the actual accuracy, time is another factor that will affect the accuracy of the timebase.

For completeness, I should also say that when you measure the ppm accuracy of your test signal, the result reflects absolute accuracy: not only the accuracy of the timebase, but also the accuracy of the signal source. So it is very important to have a precise source for the test signal.

I hope this helps.

Nathan

Tags: NI Products

Similar Questions

-

I am trying to stream as quickly as possible from a 5154 digitizer to a FlexRIO 7966. Starting from the "niScope Fetch Forever" example in LV2012, I can run the acquisition on the 5154 at 40 MS/s with 50 k I8 data chunks, and the graph indicator seems to keep up indefinitely.

I then tried to write the data to a host-to-target FPGA FIFO, and it chokes. With the FIFO Write method inside the 5154 fetch loop, the 5154 ends in error because of a memory overflow (its on-board memory fills up while the host is still reading). With the FIFO write in a parallel consumer loop (fed by a queue), the queue overflows simply because the FIFO write can't keep up with the rate at which the 5154 puts samples into the queue. What am I missing? The 5154 uses a PCI DMA bus (I think), so I would think that if it can keep up, the PCIe bus from the host to the FPGA should be capable as well.

Is there another factor that I have not taken into account? Sorry I can't provide the VI, since the LV installation is on a PC not connected to the network. Any advice or suggestions would be appreciated.

Thank you

Mark Taylor

For future reference, I ended up resolving this by moving the implementation onto the actual FPGA. I had been running in "on the development computer with simulated I/O" mode, and no matter what I did with the start-up sequence or the FIFO sizing, it just didn't work.

After compiling and running on the FPGA, all is well. Maybe this is basic knowledge; in fact I remember reading somewhere in my travels that simulation mode doesn't accurately represent timing problems, but LV FPGA has kind of painted us into a corner with the simulation/compilation trade-off. They do not include ModelSim, which is the only tool that enables co-simulation for functional and timing verification (we have Questa, unfortunately), and with standalone VHDL simulation it is hard to capture the host interaction accurately (and software processing/timing is impossible to quantify!). In my situation, the only way to run at speed is on the FPGA, but then I can't see all of the things that I need to see to debug... ARGH!

Additional links and resources are always welcome (on debugging LV FPGA and design in general). I found a couple of things, listed below, that were somewhat useful:

"NI LabVIEW High-Performance FPGA Developer's Guide"

http://www.NI.com/Tutorial/14600/en/

I found a link to an "FPGA Debug Reference Library": http://www.ni.com/example/31067/en/, but my installation does not seem to have this available.

And it looks like 2013 may add functionality to help alleviate some of this via the Desktop Execution Node, referred to here in 'Testing and Debugging LabVIEW FPGA Code'.

-

Hello!

I have set up a 5154 digitizer. For a few months everything worked well.

Yesterday the TRIG, PFI0, and PFI1 connectors stopped working.

I applied a voltage of 1 to 2 V.

The device passed the self-test successfully. Calibrating and resetting the device did not help.

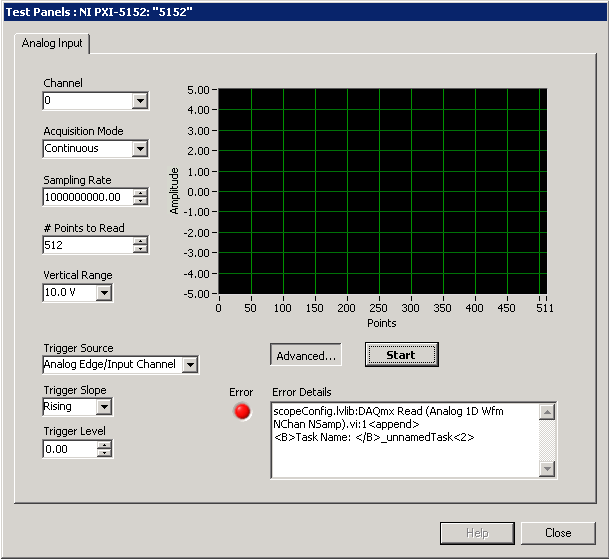

I start the test panel with automatic parameters. I set the trigger source to TRIG and get an error: "scopeConfig.lvlib DAQmx Read (Analog 1D Wfm NChan NSamp).vi:1.

DAQmx Read (Analog 1D Wfm NChan NSamp).vi:1.

" Task name: _unnamedTask.

What should I do? How can I fix this?

Hello,

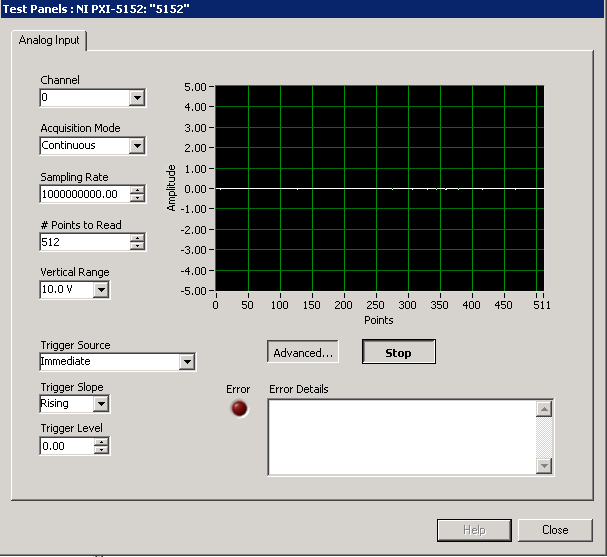

Let me make sure that I have a complete understanding of the problem. You are using an NI PXI-5154 digitizer that passes the self-test, a device reset, and self-calibration without any error. If that's the case, then it most likely is not a problem with how the device communicates with the controller, and probably not a problem with the device itself. I remotely logged in to one of our PXI systems here and was able to reproduce the test panel error, getting the same error as you. My screen looks like this:

If you do not see the trigger options, click on the Advanced... button. Is this the same problem you are having? If so, it may be because the device is never actually receiving this trigger source. I could set an immediate trigger and run without error. For more information on digitizer triggering, see this article from Developer Zone.

It would also be worth looking at the NI High-Speed Digitizers Getting Started Guide, particularly page 21. You mentioned that you have problems with TRIG, PFI 0, and PFI 1. TRIG is for external analog trigger connections, and the PFI lines are for reference clock and sample clock input and for digital trigger input/output. None of the signals connected to them are actually going to be digitized. You can also find more information about this digitizer and triggering by going to Start > Programs > National Instruments > NI-SCOPE > Documentation > NI High-Speed Digitizers Help. This will open an HTML help file; from there go to NI High-Speed Digitizers Help > Devices > 5153/5154.

I hope this helps!

-

Hello

What is the maximum sample rate of the PCI-5154 with two input channels? The manual states that 2 GS/s is for one channel only. So am I unable to get a bandwidth of 1 GHz when measuring two channels simultaneously? Thank you!

Hi gbhaha,

First of all, TIS mode goes up to 20 GS/s using one ADC, while your real-time sampling uses two A/D converters at the same time on a single channel. Take a look at the diagrams that I linked in my first post for more details on this architecture.

About the difference in bandwidth between the 5153 and the 5154: the 5153 has 500 MHz of bandwidth in its circuitry, even when acquiring at faster sampling rates. The 5154 has 1 GHz of bandwidth, which is why it is more expensive.

Kind regards

-

I have tried to find an answer to this question and hope someone here can help.

I have an XPS 8300 with an i5-2300 2.8 GHz processor. The tag indicates it was made in August 2011. The PCIe slots on the mobo are (1) PCIe x16, which is occupied by a graphics card, and (3) PCIe x1 connectors, which are empty. My question is about the three empty slots and what generation these slots are: PCIe 1.0, PCIe 2.0, or PCIe 3.0?

I can't find the answer to this question. The reason for the concern is that the computer has no USB 3.0 ports, and I am interested in installing a USB 3.0 PCIe card. I read that you need to match the PCIe generation of the card to that of the slot for optimum performance. In other words, if these slots are only PCIe 1.0, it seems unwise to pay extra for a PCIe 2.0 (or PCIe 3.0) card if it will only operate at 1.0 bandwidth?

Thnx in advance for any help.

Hi Scewter54,

PCI-E 2.0 and PCI-E Gen2 are exactly the same thing. XPS 8300 comes with Intel H67 Chipset.

Please reply if you need more details.

-

ThinkStation S30 + Plextor PX-AG512M6e PCI Express SSD question

We are trying to get the Plextor P6e working with the S30 as a boot drive. So far, no luck. Playing with different S30 BIOS settings made no difference. This PCI Express SSD is bootable without a driver, with its own option ROM that implements AHCI. The ROM logo does show at startup. However, it seems that the S30 does not know what to do with it. The SSD itself supports both UEFI and legacy boot.

If the Windows (7) installation is started from DVD, it sees the SSD as a 'disk', but the disk cannot be partitioned, and there is a message that Windows cannot be installed on it since it cannot be a boot drive. We have also cloned a Windows installation from a hard drive to the SSD; the S30 doesn't recognize it, so it will not boot.

Can anyone help?

P.S. The Plextor itself is not the problem; it works well with many other computers, even with a Lenovo M91p...

Edit - Update: Legacy option - no go; UEFI - Windows installs on the SSD OK (GPT partition), but freezes or bluescreens when Windows loads...

Quick update:

-Win 7 legacy install / boot - NO GO

-Win 7 UEFI install / boot - finally working with some really weird BIOS settings: all parameters must be UEFI, only the video OpROM must be set to legacy... If it is set to UEFI, Win 7 starts, but the display freezes at "Starting Windows". Also, it needed a few reboots before it finally worked without errors...

-

2 questions about VMDirectPath (PCI card, DMA)

Well, I have managed to configure passthrough so far, but I have run into barriers again. Here is what I want to know how to solve.

1. Whenever we run into a lot of trouble, we tend to think it is a hardware problem. We are actually using a PCI card that does not support virtualization, even though we could set up passthrough. I have already read the document on VMDirectPath supported partners, and I found HP servers, HBAs, supported ESX versions, CVEVA, EVA boards, and guest operating systems. However, I couldn't find information on PCI cards. Should we use a special PCI card? If yes, could you let me know which one to use?

2. As I mentioned above, we have configured passthrough, and we created two virtual machines. When we tried VMDirectPath, I/O control was OK, but DMA was not available. Remapping is activated, but it returns a ridiculous address. Why is this happening? What's wrong?

Any advice or useful links would help me.

Thank you

Kwangwon

Only a few cards are officially supported.

See also: VMware VMDirectPath I/O

André

-

I have an Aurora R4 with the X79 chipset and an Ivy Bridge-E processor, which supports up to 40 PCIe lanes. It has 4 slots (PCIe x16, x1, x16, and x1), and the BIOS gives me the option to enable PCIe gen 3.0. I know that it works for the x16 cards, as GPU-Z says that I am connected at 3.0 speeds. I currently use one x16 slot for my network card and one x16 slot for my graphics card, which is a double-slot card and blocks one of the x1 slots. This leaves a single x1 slot available.

Does anyone know what PCIe generation the x1 connectors are? I would like to install a USB3 card, and the cards I find require PCIe 2.0. I can't seem to find the specs anywhere; any knowledge would be greatly appreciated.

If I'm not wrong,

-The x1 would be 2.0

and the x16 would be 3.0.

Let me know if you need anything else.

-

Question about NI PCIe-6320 buffered events

Hello, all:

I use a 6320 for counting digital events. I use a gated, buffered counting method. At the end of each counting period, I read everything in the event buffer to get the timestamps. However, there is a problem with this method. When the number of events exceeds 1 million (?), all buffered events are lost and I can't read anything. My guess is that this is due to the buffer size or to counter clock rollover (?). There are basically two ways to overcome this problem. One is to do several reads, and the other is to prescale the events. Does anyone know how to prescale a count? For example, I could record the timing of only every fifth event.

My counting method is shown in the attached VI.

Thank you.

Since your actual code is different from what was posted, I can only make a few guesses as to why you can't count more than (about?) 1 million pulses.

1. If your real code doesn't wire a 'number of samples' value to DAQmx Timing.vi, then a task that is told it will acquire at 100 MHz uses a default buffer size of 1 million samples. You can create a larger buffer by wiring in a larger value. You can also call Read in a loop to help prevent a buffer overrun.

2. There's a limit to the rate at which you can stream data from the board to system RAM. The X Series is much better than most of its predecessors in this respect for counter-based measurements. (See this thread and the related links.) But it won't always sustain effective sample rates of 100 MHz for any significant duration.

3. Another option I've seen used is count binning. This method works a little inside-out from your method by letting the external pulses increment the counter, whose count is then sampled at a constant sampling rate.

4. I haven't yet been able to do any real-life playing with an X Series board, but I think there is also a precision frequency measurement mode that samples at a constant rate. This mode may be useful for you as well.
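As a rough illustration of the count binning idea in point 3, the post-processing could look like the sketch below. It is not DAQmx code: the function name and the sample data are made up, and the 32-bit counter width is an assumption used only to show how rollover between two samples can be handled.

```python
def counts_per_bin(cumulative_counts, counter_bits=32):
    """Convert cumulative counter readings, sampled at a constant rate,
    into the number of events that arrived in each sample interval.

    The modulo handles a single counter rollover between two readings
    (counter_bits is an assumed counter width).
    """
    modulus = 1 << counter_bits
    return [
        (later - earlier) % modulus
        for earlier, later in zip(cumulative_counts, cumulative_counts[1:])
    ]


# Hypothetical readings of the running count at successive sample clock edges.
readings = [0, 5, 12, 12, 20]
bins = counts_per_bin(readings)
print(bins)

# A rollover between two readings still gives the right difference.
rolled = counts_per_bin([2**32 - 3, 2])
print(rolled)
```

With this approach the buffer grows with the sample clock rate rather than with the event rate, at the cost of losing the individual event timestamps.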

-Kevin P

-

How to start, stop, and restart automatically

Hello

I'm Pramod.S. I have a PCI-5154 digitizer and I'm acquiring data. My question is: I want to acquire data for, say, 1 min, then stop, and after a gap of 2 min start acquiring again automatically for 1 min. Can you please guide me on this? I'd be grateful if you could send examples that demonstrate this feature.

Hello Pramod,

Regarding your question about acquiring for one minute, waiting 2 minutes, and then acquiring again for one minute: you'll need to create a program to control when the acquisition starts and stops. Is there a programming language you will use to control the acquisition?

A simple control can use the timing functions in LabVIEW to check how long the acquisition has been running before deciding to stop the acquisition and go idle for 2 minutes. A simple implementation to start from is attached.
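The same control logic can be sketched in text form. This is not the attached LabVIEW implementation; it is a minimal Python outline in which `acquire_once` is a hypothetical placeholder for whatever call fetches one block of data from the digitizer, and the clock and sleep functions are passed in so the timing logic can be tried without hardware.

```python
import time


def run_duty_cycle(acquire_once, acquire_s=60.0, idle_s=120.0, cycles=3,
                   clock=time.monotonic, sleep=time.sleep):
    """Acquire for acquire_s seconds, idle for idle_s seconds, and repeat.

    acquire_once is a placeholder callback that fetches one block of data.
    """
    for cycle in range(cycles):
        deadline = clock() + acquire_s
        # Keep fetching until this acquisition window has elapsed.
        while clock() < deadline:
            acquire_once()
        # Go idle between windows, but not after the last one.
        if cycle < cycles - 1:
            sleep(idle_s)
```

A call such as `run_duty_cycle(fetch_block, acquire_s=60, idle_s=120, cycles=10)` would then acquire for one minute and pause for two, ten times over, where `fetch_block` stands for your own fetch routine.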

Hope this helps

-

NI5105 digitizer and Measurement Studio 2010

Hi I'm new here. I have a problem that needs your help.

My company just bought a PCI-5101 digitizer from NI, and we want to continuously read an output signal from all 8 channels of this device for our project.

Our project uses Microsoft VS 2010 and .NET Framework 4. But I understand that your NI-SCOPE .NET libraries only support Microsoft VS 2008 and .NET Framework 3.5.

My question is: what should I do? Can Measurement Studio 2010 (.NET Framework 4) do the data acquisition for me? I want to make sure that it can before buying Measurement Studio from you.

Thank you.

You're right that the NI-SCOPE .NET libraries support VS 2008 and .NET 3.5. There is a workaround for using VS 2010, but you still have to use .NET Framework 3.5.

Basically, you can install the NI-SCOPE .NET 1.1.1 class library on your machine to install the examples. You then open them in VS 2010, which forces them through a conversion process. Once in VS 2010, you need to remove the reference to NationalInstruments.Common, as it references the 4.0 framework. You then add the NationalInstruments.Common reference for the 3.5 framework. After that, the program will compile. I was able to verify this and was able to run the FetchForever example with a simulated scope device.

That being said, it is not officially supported, and I'm not sure when support for .NET 4.0 and VS 2010 will be added. But if you are okay with programming against .NET 3.5, you should be able to program in VS 2010 with the 5101.

Kind regards

Elizabeth K.

National Instruments | Sales engineer | www.NI.com/support

-

Routing signals from a 7842R FPGA card to the 5154 TRIG

Can someone tell me how to get a trigger signal from a 7842R card to a 5154 digitizer card? I thought I'd see RTSI as a choice in the drop-down list of the NI-SCOPE configure trigger VI; is there a step I'm missing?

I should have had digital triggering selected on the configure trigger VI; I must have been half asleep yesterday.

-

DAQmx System->DevNames does not detect PCI-5105

Hi all

I use the DAQmx System/DevNames property node to retrieve all the hardware connected to the system and to dynamically assign device names to measurements/controls in software. This property node has worked well up until now, when I added a PCI-5105 (digitizer).

I understand that this device uses the niSCOPE IVI instrument driver when writing LabVIEW code, and perhaps this has something to do with the problem, but my understanding was that if Measurement & Automation Explorer displays the device and its name under Devices and Interfaces/NI-DAQmx, then this property node should pick it up (i.e. NI PCI-6255: "Dev1" or NI PCI-5105: "Dev3", etc.).

Is there a way I can automatically detect the device name? Or is the problem that it uses IVI and requires an IVI class to be defined?

Would be happy for some advice!

Hi Barkusmaximus,

In fact, you can use the NI-SCOPE API to detect devices connected to your system. The VIs under NI Scope > Utility > ModInst will detect and display the details of the device. See the attached VI for an example.

-

Will the Lenovo Helix digitizer pen work with Windows 7

Hello forum,

I work in the IT department, and we have recently acquired a number of Lenovo Helix units to be used for special projects.

We will be downgrading them to Windows 7 64-bit.

My question is: will the digitizer pen still be supported by Windows 7?

Maybe I overlooked it, but I didn't see any drivers that seem to be associated with the digitizer pen, or any previous reference to this and to downgrading to Windows 7.

Any information you can provide will be useful.

Welcome to the forum!

The digitizer pen is supported natively under W7 and will work out of the box.

-

Looking for Device Id PCI\VEN_10 & DEV_OBEE & SUBSYS_089C196E & REV_A1\E & 243D7BDO & O & 0170

Using Windows XP SP3 with an Athlon 64 processor. In Device Manager I have a YELLOW QUESTION MARK next to a PCI DEVICE. Clicking on it to investigate further gives me no indication of what the device is, so I cannot locate the driver for it.

Device Id PCI\VEN_10 & DEV_OBEE & SUBSYS_089C196E & REV_A1\E & 243D7BDO & O & 0170

or

It could be that... Device Id PCI\VEN_10 & DEV_QBEE & SUBSYS_089C196E & REV_A1\E & 243D7BD0 & 0 & 0170

I contacted MSI because I have an MSI motherboard (7185 K8N SLI, version 1.8), and they told me that Windows assigns these numbers and that the only one to contact is Microsoft.

All my drivers have been installed, but this yellow question mark remains and I don't know why.

Please send me an email with your answer to

If you know exactly what the device is, then please send me an email with details on the device and where I can find the driver for it.

If possible, please send me links to the location of the driver for the device.

Hi MichaelV26,

1. Did you make any recent changes to the computer?

From searching for the device by its device ID, it seems to be related to the Nvidia card.

If you have an Nvidia graphics card installed in the computer, you can check Windows Update or the Nvidia site for the latest driver for the card and see if that helps.

Refer to the article below and check if it helps.

How to troubleshoot unknown devices listed in Device Manager in Windows XP
