Reading an AVI file with the "Read from Binary File" VI

My question is how to read an AVI file using the "Read from Binary File" VI.

My goal is to create a series of small AVI files using IMAQ AVI Write Frame with the MPEG-4 codec. Each file is 2 seconds long (up to 40 images per file at 20 frames per second). I then want to send the files one by one to create a video stream. The images come from a USB camera. If I read these files back frame by frame with IMAQ AVI Read Frame, the compression advantage would be lost, so I want to read each entire file as it is.

I read the AVI file using "Read from Binary File" with the unsigned 8-bit integer data type. I sent it to the remote end, saved it there and then tried to play it, but it did not work. Later I found that the same thing happens locally: if I read a file with "Read from Binary File" as unsigned 8-bit data and save it on the same computer, the format changes and the file becomes unrecognizable. Am I doing something wrong by reading the file as unsigned 8-bit integers, or should I have used another data type?

I'm using LabVIEW 8.5, the LabVIEW Vision Development Module and Vision Acquisition 8.5.

Your help would be much appreciated.

Thank you.

Hello

Read the (complete) help for "Write to Binary File".

The "prepend array or string size?" input defaults to TRUE. In your example, the data written to the file is therefore preceded by the array size information, and your output file is four bytes longer than your input file. Wire a FALSE constant to "prepend array or string size?" to avoid this problem.

Rod.
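The following is a minimal Python sketch (not LabVIEW code) of the two settings, showing why the copy ends up four bytes longer. It assumes the size header is a big-endian I32 element count, big-endian being LabVIEW's default byte order for binary files. `write_with_size_prefix` and `write_raw` are hypothetical names that only model the input on an in-memory byte string.

```python
import struct

def write_with_size_prefix(data: bytes) -> bytes:
    """Model prepend array or string size? = TRUE: an I32 element count precedes the data."""
    # ">i" = big-endian signed 32-bit integer (assumed LabVIEW default byte order)
    return struct.pack(">i", len(data)) + data

def write_raw(data: bytes) -> bytes:
    """Model prepend array or string size? = FALSE: the bytes are written unchanged."""
    return bytes(data)

avi_bytes = b"RIFF" + bytes(10)  # stand-in for the contents of an AVI file
print(len(avi_bytes), len(write_with_size_prefix(avi_bytes)), len(write_raw(avi_bytes)))
```

Only the raw form reproduces the original bytes exactly, and a video player needs those exact bytes to recognize the file as AVI.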


Similar Questions

-

Reading a binary file doesn't work on the MCB2400 in LabVIEW 2009 Embedded for ARM

Hello

I'm trying to read a binary file from the SD card on my MCB2400 board with LabVIEW 2009 Embedded for ARM.

But the result is always 0 when I run my VI on the MCB2400. If I use the same VI on the PC, it works very well with the binary file.

Access to the SD card on the MCB2400 works otherwise: if I try to read a text file, it works without any problem. Are there constraints on the "Read from Binary File" node in LabVIEW Embedded compared with the same node on the PC?

I also noticed a problem with reading text files: if the file size is about 100 bytes, it doesn't work either. I can't understand why, because I always read one byte at a time. Even if the LabVIEW implementation were so bad that it always read the whole file into RAM, it should still work. The MCB2400 has 32 MB of RAM, so 100 bytes or even a few megabytes should be fine. But this doesn't seem to be the cause of the binary problem, because even a 50-byte binary file doesn't work.

Bye & thanks

Amin

I know that you have already solved this problem with a workaround, but I did some digging in the source code to find its cause and found the following:

Currently, the binary read/write primitives do not support the "byte order" input. You should therefore always leave this input at its default (0), which uses the target's native byte order (little-endian for the ARM target). If you wire any value other than the default, the primitive returns an error and does not perform the read/write.

So, in theory, you could go back to your very original VI and remove the wire from the "byte order" input of Read from Binary File. It should then perform a little-endian binary read.
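The byte-order setting decides how the same bytes are turned into numbers. The small Python sketch below decodes four bytes as an unsigned 32-bit integer. It is an analogy, not the LabVIEW Embedded implementation. Here "native" means the byte order of the machine running the script; on the ARM target discussed above, native order would be little-endian.

```python
import struct
import sys

# Map the byte-order choices to struct format prefixes ("I" = unsigned 32-bit).
FORMATS = {"big": ">I", "little": "<I", "native": "=I"}

def decode_u32(raw: bytes, byte_order: str) -> int:
    """Decode 4 bytes as an unsigned 32-bit integer in the requested byte order."""
    return struct.unpack(FORMATS[byte_order], raw)[0]

raw = bytes([0x01, 0x00, 0x00, 0x00])
for order in ("little", "big", "native"):
    print(order, decode_u32(raw, order))
print("this machine is", sys.byteorder, "endian")
```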

This also brings up another point: if a primitive does not behave as you expect, check its error output.

-

"Read from Binary File" and efficiency

For the first time I'm trying to use binary files for large data files, and I see some real performance issues. To avoid any loss of data, I write the data as I receive it from the acquisition, 10 times per second. What I write is a 2D array of doubles: 1000 data points x 2-4 channels. When reading the file, I would like to read it all at once as a 3D array. This does not seem to be supported. Instead, I do many reads of 2D arrays and use a shift register with Build Array to assemble the 3D array that I really need. But it is incredibly slow! It seems I can read only a few hundred of these 2D arrays per second.

It has also occurred to me that using Build Array in a shift register to keep appending to the array could be a quadratic-time operation, depending on how it is implemented. It may continually allocate larger and larger blocks of memory and copy the growing array at every step.

I'm looking for suggestions on how to store my data efficiently and read it back efficiently into the 3D array I need. Things might be easier if I had "raw write" and "raw read" operations that handle only the numbers and no metadata. Then I could write the file and read it back in any chunk sizes I please, and read it with other programs too. But I don't see such operations in the menus.
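The "raw write, raw read" idea can be sketched in Python as below. The sketch assumes every block has the same known shape and that values are stored as big-endian float64 with no size headers. In LabVIEW this would roughly correspond to wiring FALSE to "prepend array or string size?" when writing, then reading the whole file once as a 1D DBL array and reshaping it, for example with Reshape Array. The function names are hypothetical. Decoding everything in one call and slicing avoids the repeated grow-and-copy pattern suspected above.

```python
import struct

def write_blocks_raw(blocks):
    """Serialize 2D blocks (rows x cols of floats) as raw big-endian float64, no headers."""
    out = bytearray()
    for block in blocks:
        for row in block:
            out += struct.pack(f">{len(row)}d", *row)
    return bytes(out)

def read_blocks_raw(raw, rows, cols):
    """Decode the whole buffer in one call and split it into a 3D list [block][row][col]."""
    per_block = rows * cols
    n_values = len(raw) // 8
    if len(raw) % 8 or n_values % per_block:
        raise ValueError("file size is not a whole number of blocks")
    values = struct.unpack(f">{n_values}d", raw)  # one decode instead of many small reads
    return [
        [list(values[start + r * cols:start + (r + 1) * cols]) for r in range(rows)]
        for start in range(0, n_values, per_block)
    ]
```

Because no header is stored, the reader must know `rows` and `cols` in advance. In exchange, the file can be read back in any chunk size and by other programs.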

Suggestions?

Ken

-

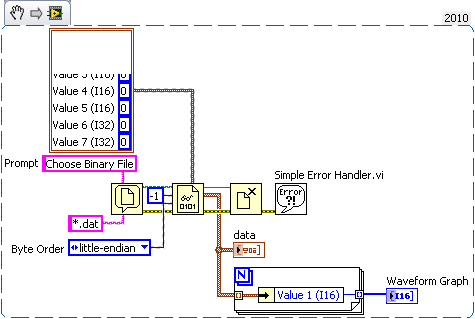

Reading and parsing a binary file

I can't parse a binary file properly. I'm using the LabVIEW "Read Binary File" example (attached) to open the file. I suspect I'm using incorrect settings on the "Read from Binary File" function.

Here is a little background on my request. The binary file I'm reading stores data as 16-bit and 32-bit unsigned integers. The data comes in 18-byte blocks, and each block contains five 16-bit values and two 32-bit values. In the end, I only care about pulling one of the 16-bit values from each data block. So it would be fine if the parsing method interpreted the 32-bit values as two consecutive 16-bit values.

Any suggestions on how to parse the binary file properly? Thanks for your suggestions!

P.S. I have attached an example of the binary file I'm trying to parse. It doesn't have an extension, so I changed it to .txt for upload. It has 40k+ events, and an 18-byte data block is saved for each event, so the binary file is fairly long.

You can read the whole file as bytes and do some Unflatten From String gymnastics, or you can specify the data type for Read from Binary File. There's no need to wire the count; just let it read the whole file at once. Notice how the values are extracted by using a cluster constant as the data type.
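Below is a Python sketch of the "specify the data type" approach. It assumes the five U16 values come first, followed by the two U32 values, all big-endian with no padding. The actual field order and byte order in the attached file are not known here; use "<" instead of ">" for little-endian data. `RECORD`, `parse_records` and `first_u16_per_event` are hypothetical names.

```python
import struct

# Assumed record layout: five U16 then two U32, big-endian, 18 bytes, no padding.
RECORD = struct.Struct(">5H2I")

def parse_records(raw: bytes):
    """Split the file contents into 18-byte records and decode each one."""
    if len(raw) % RECORD.size:
        raise ValueError(f"{len(raw)} bytes is not a multiple of {RECORD.size}")
    return list(RECORD.iter_unpack(raw))

def first_u16_per_event(raw: bytes):
    """Pull just one 16-bit field (here the first) from every record."""
    return [record[0] for record in parse_records(raw)]
```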

-

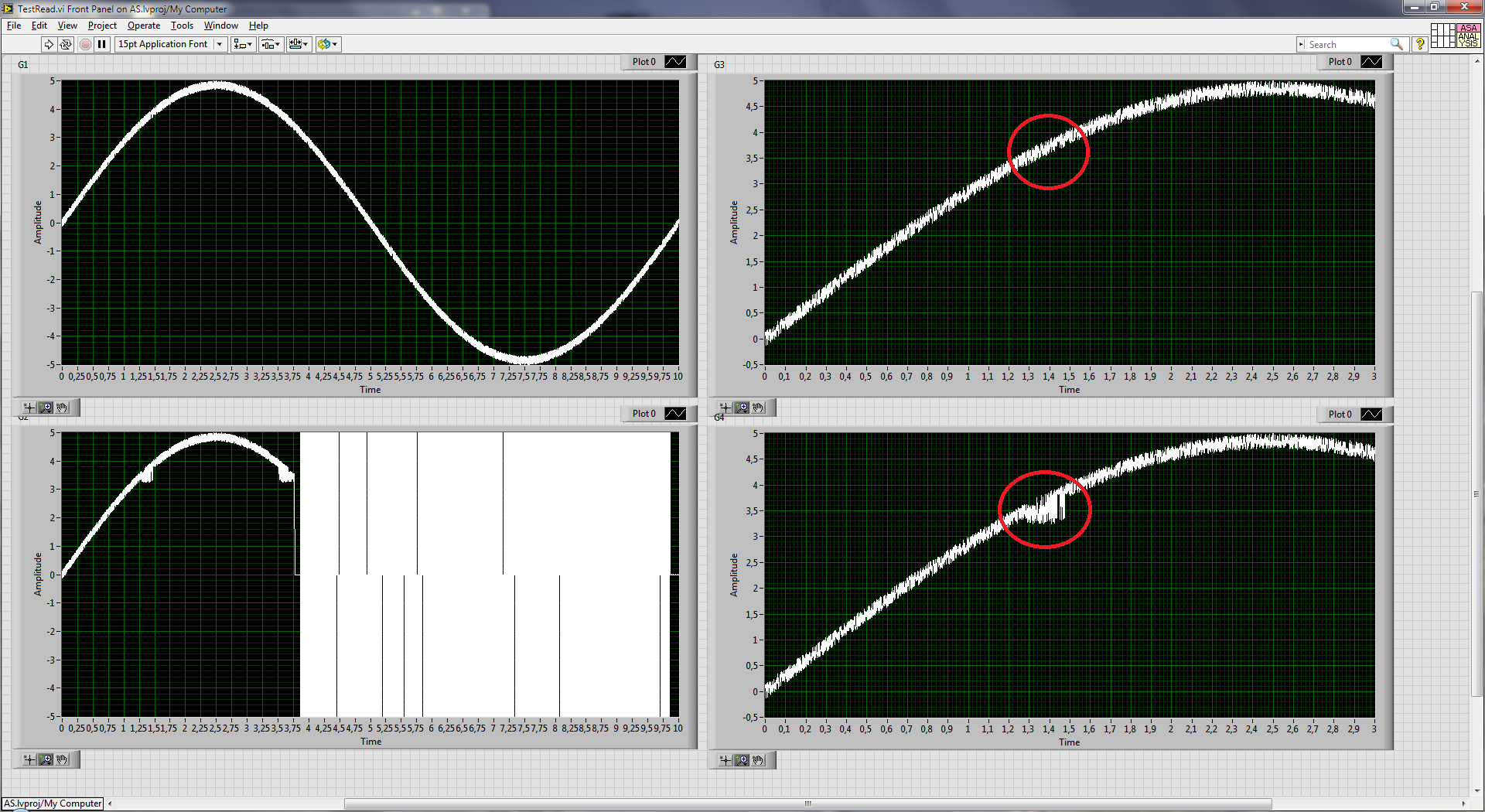

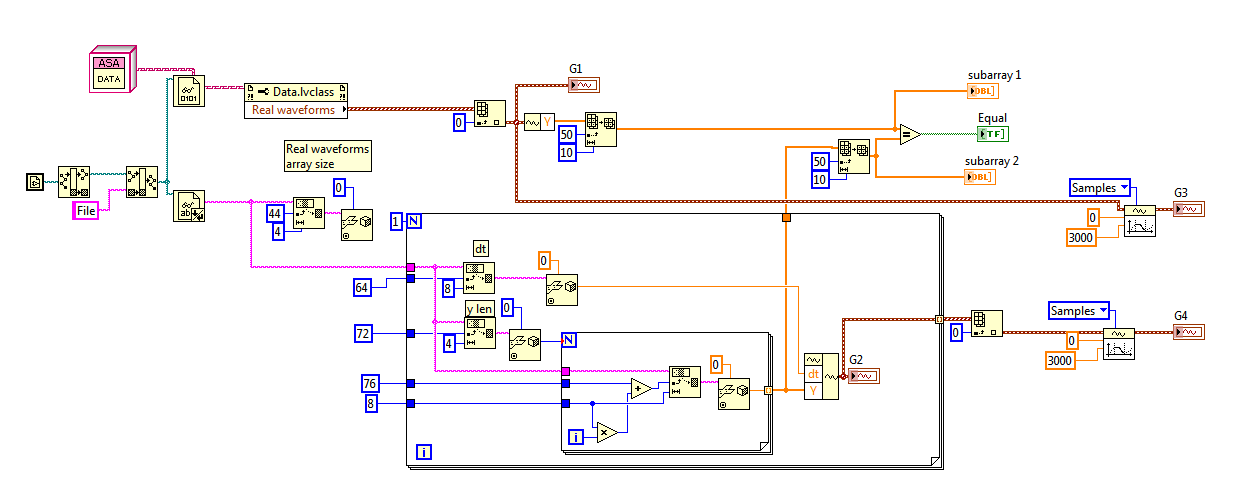

Strange behavior when reading a binary file

I'm reading flattened data from a binary file. The file was saved from a LabVIEW class that contained an array of waveforms and other data. When I read the file with Read from Binary File with the class wired to the data type input, the data are correct. But when I read it as flattened data, something strange happens (see the attached image). The two top charts show correct data, and the two charts below show partially correct data. When writing the code to read the flattened data, I followed the instructions at http://zone.ni.com/reference/en-XX/help/371361H-01/lvconcepts/how_labview_stores_data_in_memory/ and http://zone.ni.com/reference/en-XX/help/371361H-01/lvconcepts/flattened_data/.

The strange thing is that there is a gap around 1.4 s (marked in red), but the samples before and after it match.

I don't know what I'm doing wrong.

I have LabVIEW 2011.

The VI is also attached.

Assume your decoding of the binary data is correct (that is, you have figured out the data structure of the serialized class). Then the problem is probably that you don't read the file as binary data: you read it as text using Read from Text File. Change the read function to Read from Binary File.
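One plausible way that reading binary data as text corrupts it is end-of-line conversion. A CR LF byte pair (0x0D 0x0A) inside a number gets translated to a single LF, which drops a byte and shifts everything after it. Whether Read from Text File converts EOLs depends on its settings. Treat this Python sketch as an illustration of the general hazard, not as a confirmed diagnosis of the gap.

```python
import io

def read_as_text(raw: bytes) -> bytes:
    """Read through a text layer with universal newlines, then return the resulting bytes."""
    # latin-1 maps every byte to exactly one character, so only newline translation alters the data
    text = io.TextIOWrapper(io.BytesIO(raw), encoding="latin-1").read()
    return text.encode("latin-1")

def read_as_binary(raw: bytes) -> bytes:
    """Read the same bytes without any text processing."""
    return io.BytesIO(raw).read()

raw = b"\x01\x0d\x0a\x02"  # e.g. part of a float64 that happens to contain CR LF
print(read_as_text(raw), read_as_binary(raw))
```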

-

Hello

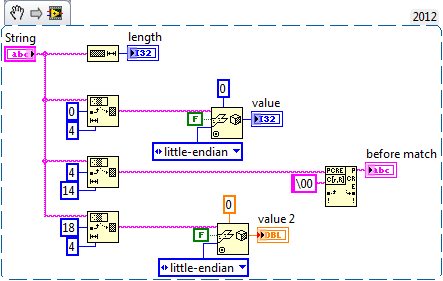

I have a script program that can produce the desired data in CSV, ASCII and binary file formats. Sometimes not all of the significant digits are printed in the CSV file, so I need to read the data from the binary file.

I have attached what I have so far, as well as a small CSV file and the .bin file containing the same data.

I can read the first value, an integer (2), but the second data type is a string and I can't read it. I get an end-of-file error, I guess because the type doesn't match. Or should I read the data in a different way?

Judging from the CSV file, the structure of the binary file should be the following:

integer, string, float, repeated for every line in the CSV file...?

Thanks for the tips!

P.S.: The site does not allow me to upload files, so here they are:

https://DL.dropboxusercontent.com/u/8148153/read%20Binary%20File.VI

https://DL.dropboxusercontent.com/u/8148153/init_in_water.csv

https://DL.dropboxusercontent.com/u/8148153/init_in_water.bin

Here is a basic example of what I mean. It parses the integer and the string; the floating-point number at the end is not decoded properly yet. I tested it on the first entry in your binary file. If you have access to the code that generates this data, you can see exactly what format the data is in. Your records seem to be 22 bytes long.
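Below is a Python sketch of parsing one record under a hypothetical layout: a big-endian I32, then a string preceded by an I32 byte count, then a DBL. The real layout of init_in_water.bin is not documented here. Read from Binary File expects such a length prefix for strings by default. If the file stores the string without one, the reader would take string bytes as a huge length, which could explain the end-of-file error. `parse_record` is a hypothetical name.

```python
import struct

def parse_record(raw: bytes, offset: int = 0):
    """Decode one (integer, string, float) record; return the values and the next offset."""
    (value,) = struct.unpack_from(">i", raw, offset)    # I32
    offset += 4
    (length,) = struct.unpack_from(">i", raw, offset)   # assumed I32 length prefix of the string
    offset += 4
    if length < 0 or offset + length > len(raw):
        raise ValueError("string length runs past the end of the data")
    text = raw[offset:offset + length].decode("ascii")
    offset += length
    (number,) = struct.unpack_from(">d", raw, offset)   # float64 (DBL)
    offset += 8
    return value, text, number, offset
```

Feeding the returned offset back in as `offset` walks through consecutive records.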

-

Reading in LabVIEW a complex binary file written in MATLAB, and vice versa

Dear all, we use the attached function "write_complex_binary.m" in MATLAB to write complex numbers to a binary file. The format is IEEE floating point with big-endian byte order. We use the attached "read_complex_binary.m" function to read the complex numbers back from the saved binary file. However, I just can't seem to read the binary file in LabVIEW. I tried the "Read from Binary File" block with big-endian byte order, without success. I'm sure this is due to my lack of knowledge of how the LabVIEW block works. I also can't find useful resources on this issue. I was hoping someone could kindly help with this or give me some ideas to work with.

Thank you in advance. Please find attached the two zipped MATLAB functions. Kind regards.

Be a scientist: experiment.

I guess you know MATLAB and can generate a little complex data and use the MATLAB function to write it to a file. You can also read the MATLAB function you posted. You will see that MATLAB splits the complex array into a 2D array (real, imaginary) and writes the values as 32-bit floats, which LabVIEW calls "SGL".

So now you know that you must read an array of SGLs and find a way to put it back together into an array of complex numbers.

When I did this experiment, I used [1, 2, 3, 4] as the real part and [5, 6, 7, 8] as the imaginary part of the complex data (in MATLAB). If you're curious, you can write these out in MATLAB with your complex data write function, then read them back as a simple array of SGL to see how they are ordered. There are two possibilities:

- [1, 2, 3, 4, 5, 6, 7, 8], if written as "all real parts, then all imaginary parts"
- [1, 5, 2, 6, 3, 7, 4, 8], if written as "real-imaginary pairs"

Now you know (from the MATLAB function) that the data is an array of SGL (in LabVIEW terms). I assume you know how to write the three routine functions that open the file, read the entire file into an array of SGL, and close the file. Do this experiment and check whether you see huge numbers. The "problem" is the byte order of the data: does MATLAB use the same byte order as LabVIEW? [Hint: if you see the numbers 1 to 8 in one of the orders above, your byte order is correct. If not, try a different byte order in the LabVIEW Read from Binary File function.]

OK, now you have your array of 8 SGL numbers and want to convert it to an array of 4 complex numbers [1 + 5i, 2 + 6i, 3 + 7i, 4 + 8i]. Once you understand how to do this, your problem is solved.
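The SGL-to-complex step can be sketched in Python as follows. The sketch assumes big-endian 32-bit floats, as described in the original question. It isn't shown here whether write_complex_binary.m interleaves the real and imaginary parts, so the sketch supports both layouts from the experiment. `decode_complex_sgl` is a hypothetical name.

```python
import struct

def decode_complex_sgl(raw: bytes, interleaved: bool = True, byte_order: str = ">"):
    """Turn a file of 32-bit floats into a list of complex numbers."""
    n = len(raw) // 4
    if len(raw) % 4 or n % 2:
        raise ValueError("expected an even number of 4-byte floats")
    values = struct.unpack(f"{byte_order}{n}f", raw)  # read everything as SGL
    if interleaved:   # re, im, re, im, ...
        re, im = values[0::2], values[1::2]
    else:             # all real parts, then all imaginary parts
        re, im = values[: n // 2], values[n // 2:]
    return [complex(a, b) for a, b in zip(re, im)]
```

As in the LabVIEW version described above, no explicit loop over the file is needed: one bulk read plus slicing does the work.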

To make this code easy to reuse, write it as a subVI whose input is the path to the file you want to read and whose output is the array of CSG in the file. My LabVIEW routine used 8 LabVIEW functions: three for file I/O and five to convert the 1D array of SGL to a 1D array of CSG. No loops were needed. Test it against the MATLAB data file you used for your experiment (see above). If you get the right answer, you wrote the right code.

Bob Schor

-

Unable to reproduce the fractional seconds when reading a timestamp from a binary file

I use LabVIEW to collect packets of data structured in the following way:

cluster containing:

dt - single-precision floating-point number

timestamp - start time

2D array of SGL - data

Once all the data has been collected, the array of clusters (above) is flattened to a string and written to a text file.

I'm trying to read the binary data in another program under development in C#. I have no problem reading everything except the timestamp. My understanding is that LabVIEW stores timestamps as two 8-byte unsigned integers. The first integer is the whole number of seconds since January 1, 1904, 12:00 a.m. The second represents the fractional part of the seconds elapsed. However, when I read this information and convert the binary into decimal, only the whole number of seconds is correct. The fractional part is not the same as the one displayed by LabVIEW.

Example:

Hex stored in the binary file that represents the timestamp: 00000000CC48115A23CDE80000000000

Time displayed in LabVIEW: 8:51:38.139 08/08/2012

Timestamp converted to an extended-precision floating-point number in LabVIEW: 3427275098.139861

Binary timestamp converted to a decimal floating-point number in C#: 3427275098.2579973248250806272

Binary timestamp converted to a DateTime in C#: 8:51:38.257 08/08/2012

Does anyone know what causes this difference?

http://www.NI.com/white-paper/7900/en

The least significant 64 bits should be interpreted as a 64-bit unsigned integer. It represents the number of 2^-64 seconds after the whole seconds specified in the most significant 64 bits. Each tick of this integer represents 0.05421010862427522170... attoseconds.

If you multiply the fractional part of the value (2579973248250806272) by 2^-64, I think you will get the correct timestamp in C#.
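The Python sketch below follows the decoding described in the white paper. It reads the first 8 bytes (big-endian) as a 64-bit count of whole seconds since the 1904 epoch, treated here as signed so that dates before 1904 stay representable. It reads the last 8 bytes as an unsigned count of 2^-64 s ticks. The sample bytes are the ones from the question; the function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

LABVIEW_EPOCH = datetime(1904, 1, 1, tzinfo=timezone.utc)

def decode_labview_timestamp(raw: bytes):
    """Split a 16-byte big-endian LabVIEW timestamp into whole and fractional seconds."""
    if len(raw) != 16:
        raise ValueError("a LabVIEW timestamp is 16 bytes")
    whole = int.from_bytes(raw[:8], "big", signed=True)
    fraction = int.from_bytes(raw[8:], "big") / 2**64  # count of 2^-64 s ticks -> seconds
    return whole, fraction

def to_utc_datetime(whole: int, fraction: float) -> datetime:
    """Convert the two parts to an aware UTC datetime."""
    return LABVIEW_EPOCH + timedelta(seconds=whole) + timedelta(seconds=fraction)

raw = bytes.fromhex("00000000CC48115A23CDE80000000000")
whole, fraction = decode_labview_timestamp(raw)
print(whole, round(fraction, 6), to_utc_datetime(whole, fraction))
```

For the sample value, this gives 3427275098 whole seconds and a fraction of about 0.139861 s, matching the LabVIEW display. The UTC result, 12:51:38 on 2012-08-08, is four hours ahead of the 8:51:38 that LabVIEW showed. This is presumably because LabVIEW displayed local time (UTC-4).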

-

Reading parts of a binary file, e.g. from the 500th row to the 5,000th row

I have 200 MB binary data files with 1,000,561 rows and 32 columns. When I read a file sequentially and plot it, I get an out-of-memory message.

Now I want to read the file in pieces, e.g. from the 500th row to the 5,000th row with all the columns, and plot that piece in the graph.

I tried to develop the logic using the advanced file functions Set File Position and Read from Binary File, but I still haven't found the solution.

Please help me solve this problem.

Thanks in advance...

Hi ospl,

To read a specific part of the binary file, I suggest setting the file position where you want to start reading. Then use the count input of Read from Binary File.vi to specify how many blocks to read.

For example, if you wrote a 2D array to the binary file, wire a 2D array as the data type and compute your count (5000 - 500). Then compute the position. With 32 columns of DBL data, each row is 256 bytes, so the second row starts at byte 256 and row 501 starts at byte 256 * 500. Use this number as the input to Set File Position.vi.
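The offset arithmetic can be sketched in Python, assuming rows of 32 big-endian DBL values with no header. If the 2D array was written with its dimensions prepended, pass the header size as `header_bytes`. For a 2D array this would presumably be two I32 values, 8 bytes, but check the actual file. `read_rows` is a hypothetical helper that uses 1-based, inclusive row numbers.

```python
import io
import struct

def read_rows(f, first_row: int, last_row: int, n_cols: int = 32, header_bytes: int = 0):
    """Read rows first_row..last_row (1-based, inclusive) from a file of big-endian DBL rows."""
    row_bytes = 8 * n_cols
    n_rows = last_row - first_row + 1
    f.seek(header_bytes + (first_row - 1) * row_bytes)  # row 501 starts at 256 * 500 when n_cols = 32
    raw = f.read(n_rows * row_bytes)
    if len(raw) != n_rows * row_bytes:
        raise EOFError("requested rows run past the end of the file")
    values = struct.unpack(f">{n_rows * n_cols}d", raw)
    return [list(values[i * n_cols:(i + 1) * n_cols]) for i in range(n_rows)]

# Usage with a small in-memory file of 10 rows x 3 columns (value = 10 * row index + column)
data = io.BytesIO(struct.pack(">30d", *[float(r * 10 + c) for r in range(10) for c in range(3)]))
print(read_rows(data, 3, 5, n_cols=3))
```

Only the requested rows are ever held in memory, which is the point of seeking instead of reading the whole file.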

I hope you find your way through this.

-

Error 116 when reading a string with Read from Binary File

I'm trying to use the "Write to Binary File" and "Read from Binary File" VI pair to write a string to a binary file and read it back. The file is created successfully, and a hex editor confirms that it contains what is expected (a header + the string). However, when I try to read the string back, I get error 116: "LabVIEW: Unflatten or byte stream read operation failed due to corrupt, unexpected, or truncated data." I found one quirk, though. If I set "byte order" to "big-endian, network order", the error disappears. With "native, host order" (my original setting) or "little-endian", the error occurs. Did I miss something in the documentation saying that you must use big-endian order when writing strings? Am I doing something wrong, or is this a bug in LabVIEW? The program this is for writes large arrays in addition to strings. I would like to stick with the "native" setting for speed and avoid mixing byte orders.

I have attached an example VI that illustrates this problem.

I'm using LabVIEW 8.5 on Windows XP SP2.

Thank you

Kevin

Hello

Thank you for contacting National Instruments! I checked the behavior you encountered and agree that it is a bug. It has been reported to R&D (CAR #130314) for further investigation. As you have already found, a possible workaround is to use the big-endian setting. I am also enclosing another example that converts the string to a byte array before writing it to the file, and then converts it back to a string after reading it from the file. Please let me know if you have any questions after looking at this example, and I'll be happy to help! Thank you very much for the feedback!
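The Python sketch below does not reproduce the LabVIEW bug itself (CAR #130314). It shows the general mechanism instead: a length header must be read in the same byte order it was written in. Otherwise the length comes out as garbage and the read fails with exactly this kind of "corrupt, unexpected, or truncated data" error. The helper names are hypothetical.

```python
import struct

def write_string(text: str, order: str) -> bytes:
    """Convert the string to bytes and prepend an I32 length in the given byte order."""
    data = text.encode("ascii")
    return struct.pack(order + "i", len(data)) + data

def read_string(raw: bytes, order: str) -> str:
    """Read the I32 length back in the given byte order, then the string bytes."""
    (length,) = struct.unpack_from(order + "i", raw, 0)
    data = raw[4:4 + length]
    if length < 0 or len(data) != length:
        raise ValueError("corrupt, unexpected or truncated data")  # cf. LabVIEW error 116
    return data.decode("ascii")
```

Writing little-endian ("<") and reading big-endian (">") turns the length 5 into 83886080, so the read runs past the end of the data.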

-

What is the best way to read this binary file?

I wrote a program that acquires data from a DAQmx card and writes it to a binary file (file and picture attached). The data I'm acquiring comes in at 2.5ms, on 8 channels, for at least 5 seconds. What is the best way to read this binary file, given that:

- I'll also need to display it on a graph (after acquisition)

- I also need to see these values and use them later in MATLAB.

I tried "Array To Spreadsheet String", but LabVIEW runs out of memory (even if I use only 1 channel instead of all 8).

LabVIEW 8.6
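One way to avoid building one huge spreadsheet string is to convert the file in bounded chunks and stream the text out, for example to a CSV file that MATLAB can load. The Python sketch below assumes the file holds interleaved scans of 8 big-endian DBL values with no header, which may not match the attached file. `binary_to_csv` is a hypothetical name.

```python
import io
import struct

def binary_to_csv(src, dst, n_channels: int = 8, scans_per_chunk: int = 1000):
    """Stream interleaved big-endian DBL scans from src to CSV text in dst, one chunk at a time."""
    scan = struct.Struct(f">{n_channels}d")
    while True:
        raw = src.read(scan.size * scans_per_chunk)  # bounded memory per iteration
        if not raw:
            break
        if len(raw) % scan.size:
            raise ValueError("file ends in the middle of a scan")
        for values in scan.iter_unpack(raw):
            dst.write(",".join(repr(v) for v in values) + "\n")

# Usage with in-memory streams: 3 scans of 2 channels, converted 2 scans at a time
src = io.BytesIO(struct.pack(">6d", 1.0, 2.0, 3.0, 4.0, 5.0, 6.0))
dst = io.StringIO()
binary_to_csv(src, dst, n_channels=2, scans_per_chunk=2)
print(dst.getvalue())
```

Memory use is set by `scans_per_chunk`, not by the file size. With real files, `src` and `dst` would be a file opened in "rb" mode and one opened in "w" mode.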

I think data access is just as fast with a TDMS file. A TDMS file generates an index that says 'data yyyy was written at byte positions xxxx'. As far as I know, that index is the only overhead of TDMS files.

We have never had issues with data storage, even with acquisition, analysis and storage at > 500 kS/s. The problems you run into are usually the result of bad programming style.

Tone

-

Problem reading a binary file containing data written with "Flatten To String"

I am facing a problem while reading a binary file (created using LabVIEW).

I've described the problem (and how to reproduce it) in the attached VI.

The VI is saved in both the LabVIEW 2012 and 8.0 versions.

--

Regards

Reading a string from a binary file stops at a NULL (0x00) character, so when the first character is 0x00, you read only that one character. Since you convert the data back anyway, I'd write it as a byte array. Then you can read it back as a byte array.
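The difference between the two kinds of read can be illustrated in Python. This is an analogy, not LabVIEW's implementation. Flattened numeric data routinely contains 0x00 bytes, so a reader that stops at the first NUL loses most of it, while a byte-array read keeps everything. The helper names are hypothetical.

```python
import struct

def read_as_c_string(raw: bytes) -> bytes:
    """Stop at the first NUL byte, as a NUL-terminated string reader would."""
    end = raw.find(b"\x00")
    return raw if end < 0 else raw[:end]

def read_as_byte_array(raw: bytes) -> bytes:
    """Keep every byte, NULs included."""
    return bytes(raw)

flattened = struct.pack(">d", 1.0)  # 3f f0 00 00 00 00 00 00
print(len(read_as_c_string(flattened)), len(read_as_byte_array(flattened)))
```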

-

Reading a binary file: access error

I am new to the NI discussion forums and have a basic question about using LabVIEW to read a binary file.

For my application, I need LabVIEW to collect a single value (pulse width) from a custom program written in C++. Currently, this value is stored in a temporary binary data file. I'll run LabVIEW and the custom program at the same time.

Is it possible to read a binary file with LabVIEW while another program is writing to it? At the moment I get an access error. I'm using a binary read example from the basic LabVIEW example library.

Thanks for your suggestions!

I'm surprised that Windows would let you copy the file if the other program still has a lock on it. How are you trying to open the file in LabVIEW now? Maybe Windows will let you open it in LabVIEW if you open it with read-only access.
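On the reading side, a common pattern is to open the file read-only and retry briefly when it can't be opened or is only partly written. The Python sketch below assumes the C++ program writes a single little-endian double. That is typical for a raw write on x86, but it is an assumption here. Whether the open succeeds at all still depends on the sharing mode the C++ program used. `read_pulse_width` is a hypothetical helper.

```python
import struct
import time

def read_pulse_width(path: str, retries: int = 5, delay_s: float = 0.05) -> float:
    """Open the file read-only and decode one double, retrying briefly on failure."""
    for _ in range(retries):
        try:
            with open(path, "rb") as f:          # read-only, binary mode
                raw = f.read(8)
            if len(raw) == 8:
                return struct.unpack("<d", raw)[0]  # assumed: one little-endian double
        except PermissionError:
            pass                                 # writer currently holds the file
        time.sleep(delay_s)                      # wait, then try again
    raise TimeoutError(f"could not read a value from {path} after {retries} attempts")
```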

Yes, you can copy files in LabVIEW. There are copy functions in the File I/O palette. You can also run a copy command through the System Exec VI.

-

File.read() fails with a binary file

This is annoying me, because I thought it would be trivial (and according to the docs, quite possible).

I open a TIFF file and read all the data. I set binary encoding after opening. Here's my simple code:

var file = File.openDialog("Select the file"); file.open("r"); file.encoding = "BINARY"; alert(file.read());

I use this file:

https://area51.d4creative.com/cgi-bin/fastLink.cgi?LinkId=922 & starts 5162 = & keycode = 9tlpQ3dS

My alert box says: MM. If I open this file in a text editor, there is more data after MM. What is the problem? Does ExtendScript really not read binary data correctly, even though the manuals say it can?

Mike Cardeiro

If I do it this way:

{

var myFile = File.openDialog("Select binary file.");

myFile.open("r");

myFile.encoding = "BINARY";

var myChar, myByte;

var i = 0;

var s = "";

while (!myFile.eof) {

myChar = myFile.readch();

myByte = myChar.charCodeAt(0).toString(16);

if (myByte.length < 2) myByte = "0" + myByte;

s += myByte + " ";

if (i % 16 == 15) s += "\r";

i++;

}

myFile.close();

$.writeln(s);

}

That's what I get:

4d 4d 00 2a 00 00 00 08 00 0c 00 fe 00 04 00 00

00 01 00 00 00 00 01 00 00 03 00 00 00 01 00 04

01 00 00 01 00 03 00 00 00 01 00 04 00 00 01 02

00 03 00 00 00 03 00 00 00 9e 01 03 00 03 00 00

00 01 00 01 00 00 01 06 00 03 00 00 00 01 00 02

00 00 01 11 00 04 00 00 00 01 00 00 00 01 15 bc

03 00 00 00 00 01 00 03 00 00 01 16 00 03 00 00

00 01 00 04 00 00 01 17 00 04 00 00 00 01 00 00

01 30 00 1c 00 03 00 00 00 01 00 01 00 00 86 49

00 01 00 00 00 18 00 00 00 00 00 00 00 00 08 a4

00 08 00 08 38 42 49 4d 04 28 00 00 00 00 00 0c

00 00 00 01 00 00 00 00 00 00 3f f0 ff ff ff ff

ff ff ff ff ff ff ff ff ff ff ff ff ff ff ff ff

ff ff ff ff ff ff ff ff ff ff ff ff ff ff ff ff

ff ff ff ff ff ff ff ff ff ff ff ff

Dan
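The dump suggests the bytes are read correctly. A big-endian TIFF file starts with 4d 4d 00 2a ("MM", then the magic number 42), so its third byte is 0x00. If the alert box treats the string as NUL-terminated, it would stop after "MM". That is a plausible (unconfirmed) explanation of the symptom. Below is a Python sketch of the same hex dump plus a TIFF header check, with hypothetical function names:

```python
import struct

def hex_dump(raw: bytes, width: int = 16) -> str:
    """Format bytes as two-digit hex values, `width` per line (like the ExtendScript loop)."""
    return "\n".join(
        " ".join(f"{b:02x}" for b in raw[i:i + width]) for i in range(0, len(raw), width)
    )

def parse_tiff_header(raw: bytes):
    """Return (byte order, magic number, offset of the first IFD) from the 8-byte TIFF header."""
    order = {b"MM": ">", b"II": "<"}[raw[:2]]
    magic, ifd_offset = struct.unpack(order + "HI", raw[2:8])
    return ("big" if order == ">" else "little"), magic, ifd_offset

header = bytes.fromhex("4d4d002a00000008")
print(hex_dump(header))
print(parse_tiff_header(header))
```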

-

"Read from Binary File" in hex format?

Is there a way to set the data type of the "Read from Binary File" function so that the output comes out in hex format?

On the front panel, right-click the indicator and select Display Format.
