Changing the Sample Compression reduction factor

I use the Sample Compression VI to reduce the number of samples that I plot on a graph. I would like to vary the reduction factor (RF) at run time. It seems that you can only change the RF in the VI's configuration dialog. Is there a way around this?

Hello

You can make the reduction factor an input of the Express VI by converting it to a subVI. Right-click the Sample Compression VI and choose the option to open its front panel. This converts the Express VI into a standard subVI, and you will no longer have access to the dialog box that you used previously. You can then change the 'size' constant on the block diagram to a control and wire it to the connector pane. This creates another input on this VI. I have provided a link that should show you how to change the connector pane. It is in the LabVIEW Help.

http://zone.NI.com/reference/en-XX/help/371361E-01/lvhowto/selecting_a_connector_pane/

Tags: NI Software

Similar Questions

-

Apply sample compression to an already generated .lvm file

I have captured a huge amount of data in a *.lvm file. I want to load it into a spreadsheet, but there is too much data to fit. In fact, I forgot to use sample compression in the VI. So, how can I open the LVM file, apply sample compression, and then take the reduced data set to Excel?

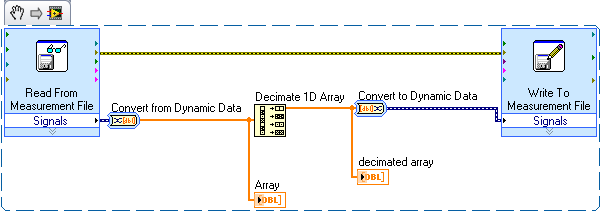

I was thinking about something as simple as this:

Just read the .lvm file, decimate the array to discard the unneeded samples, and then write the result to another text file.
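Outside LabVIEW, the same read, decimate, and rewrite idea can be sketched in a few lines of Python. This is only an illustration: the function name `decimate_rows` and the `header_lines` parameter are made up for this example, and the number of header lines in a real .lvm file has to be checked by opening the file in a text editor.

```python
import io


def decimate_rows(lines, factor, header_lines=0):
    """Keep the first `header_lines` lines, then every `factor`-th data row.

    `lines` can be any iterable of text lines, such as an open file.
    The header length is an assumption the caller must supply.
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    kept = []
    data_index = 0
    for i, line in enumerate(lines):
        if i < header_lines:
            kept.append(line)  # copy header lines unchanged
            continue
        if data_index % factor == 0:
            kept.append(line)  # keep one row out of every `factor`
        data_index += 1
    return kept


if __name__ == "__main__":
    # Small in-memory stand-in for a tab-delimited text file.
    sample = "Time\tVoltage\n" + "".join(
        f"{i * 0.001:.3f}\t{i}\n" for i in range(10)
    )
    reduced = decimate_rows(io.StringIO(sample), factor=5, header_lines=1)
    print("".join(reduced), end="")
```

For a real file, pass the open input file as `lines` and write the returned list to a second file with `writelines`. Plain decimation throws samples away, so average blocks instead if the signal is noisy.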

-

Hi all

I need to resample a TDMS file from 1 MHz to 1 kHz. It seems that this is possible with the help of "Sample Compression", but the input to this block is a single signal and my TDMS file consists of several different channels. As a beginner, I don't know how to get the signals out of my TDMS file and connect them as inputs to the "Sample Compression" block.

I would really appreciate if someone can help me with some advice.

Thank you very much

Navid

Read your TDMS file using the TDMS Open function and then TDMS Read. There you can specify the offset and the length to read. You can put this into a while loop that gets 1000 samples at a time and stops when there is an end-of-file error or when the read returns fewer than 1000 samples. Take each block of 1000 samples and compute its average. Then write this single data point to the TDMS file in a new group. Close the TDMS file once all the 1000-sample blocks have been processed. You will then have a file with the raw data in the existing group and the decimated data in the new group. You can also choose to save the decimated data in a new TDMS file, but I prefer doing it this way because everything ends up in one file.
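The averaging loop described above can be sketched in Python on a plain list of samples. The TDMS reading and writing steps are left out on purpose, and the function name `block_average` is hypothetical; the sketch only shows the "take 1000 samples, average them, stop on a short read" logic.

```python
def block_average(samples, block_size=1000):
    """Average consecutive blocks of `block_size` samples.

    Stops at the first incomplete block, mirroring the advice to stop
    when a read returns fewer samples than requested.
    """
    if block_size < 1:
        raise ValueError("block_size must be >= 1")
    averages = []
    for start in range(0, len(samples), block_size):
        block = samples[start:start + block_size]
        if len(block) < block_size:
            break  # short final read: end of data
        averages.append(sum(block) / block_size)
    return averages


if __name__ == "__main__":
    raw = [float(i % 10) for i in range(5500)]  # stand-in for one channel
    reduced = block_average(raw, block_size=1000)
    print(len(reduced))
```

With several channels in the file, the same function would be applied to each channel in turn.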

-

Is it possible to compress the default BC CatalystScripts somehow?

Google PageSpeed Insights highlights the following files as ones that can be compressed:

Enable compression

Resources with gzip or deflate compression can reduce the number of bytes sent over the network.

Enable compression for the following resources to reduce their transfer size of 16.4KiB (63% reduction).

Compressing http://www.site.com/CatalystScripts/ValidationFunctions.js?vs=b2024.r490259-Phase1 could save 14.6KiB (63% reduction).

Compressing http://www.site.com/CatalystScripts/Java_DynMenusSelectedCSS.js?vs=b2024.r490259-Phase1 could save 1.8KiB (64% reduction).

There is nothing you can do about them, unless you disable all the script options, take all the BC scripts and CSS manually, optimize them, and then load and call them when they are needed, the way BC does.

-

Open a spreadsheet on demand and add data

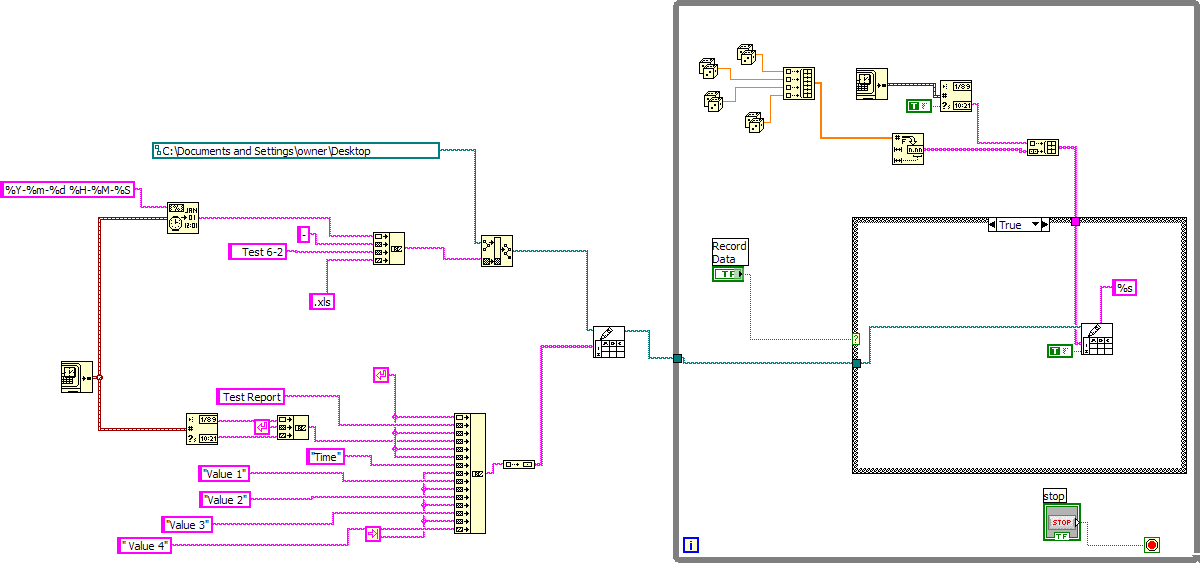

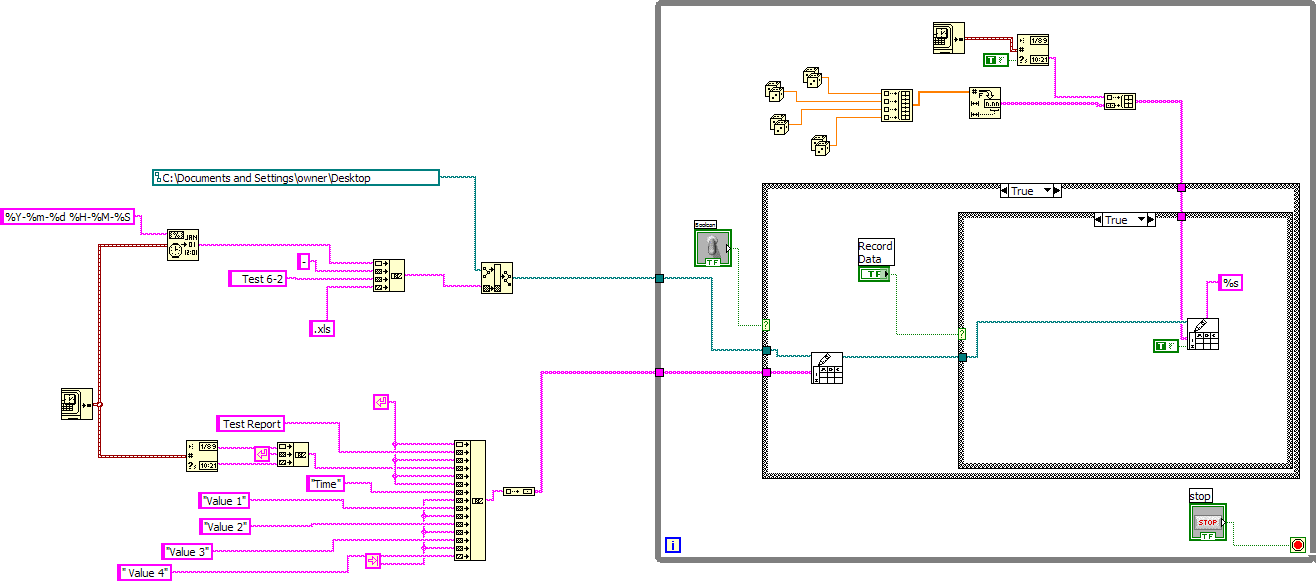

Using a cDAQ 9172 and LabVIEW 8.2, I collect four channels of noisy pressure and temperature data during a product test. The data are shown live on strip charts on the front panel after being smoothed by the averaging feature of the Sample Compression Express VI. All this works pretty well.

The data are presented to the test operator so that he can see when the test conditions have stabilized, which usually takes 3 to 5 minutes. After stabilization, I want to be able to press a button on the front panel so that a spreadsheet is opened (ideally, with the original opening time as part of the file name) and one scan of the four data channels is added to the spreadsheet (with a timestamp). Then, as the test continues for 1 or 2 minutes, I want to push the button again every 10 or 15 seconds or so to add more data scans, so that we can see later that the conditions had really stabilized. The exact timing and number of additional scans is not critical; I just want to be able to log another 4 to 8 rows of data before ending the test.

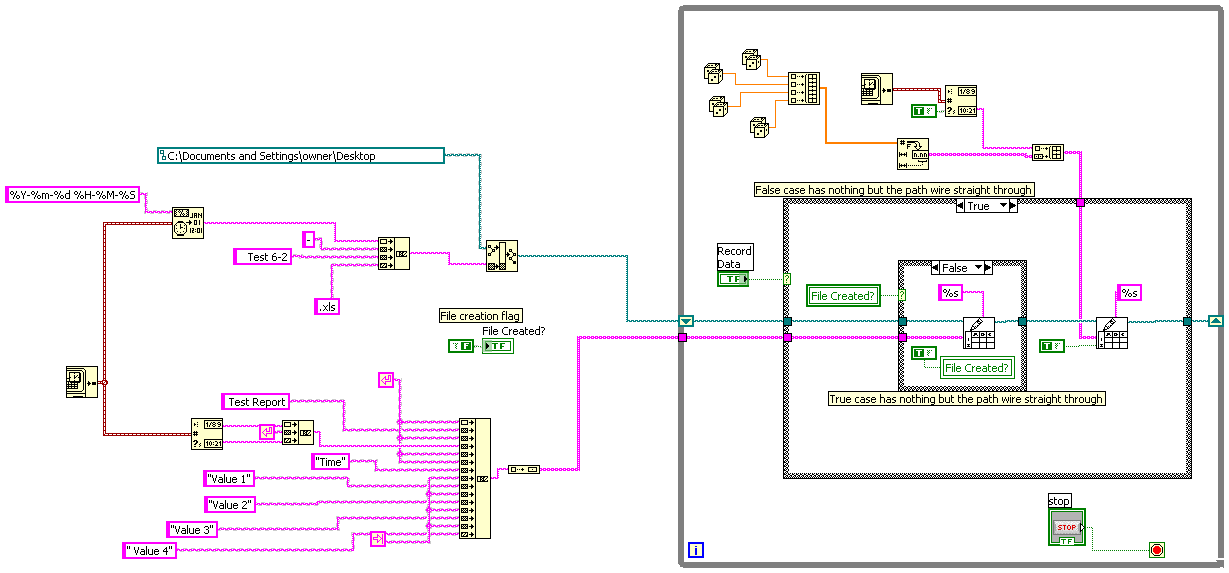

I have sort of done this, as shown in the attached 'on demand spreadsheet.vi' below. The noisy signals are represented here by random numbers built into an array (which is how I write my data to a spreadsheet file in my actual program). The problem with this arrangement is that the spreadsheet file is created each time I start the VI, and there are many times during test runs when no data is collected for one reason or another. We would therefore end up with a large number of empty or meaningless .xls files. I want to create the spreadsheet file only when I press the button(s) during a successful test, after conditions have stabilized.

I tried a slightly different approach in the attached 'on demand spreadsheet 2.vi' below, where I added a toggle switch to enable a case that opens the spreadsheet, and an additional button to save data. It seems like it should work, but I don't get any rows of data, only the headers.

I'm still pretty new to all this and I have spent days trying to figure this out. Any help is greatly appreciated.

I don't have 8.2. You can either save my VI and post it on the Downconvert VI Requests thread, or you could just look at the image:

-

Help with clearing an array in a moving average

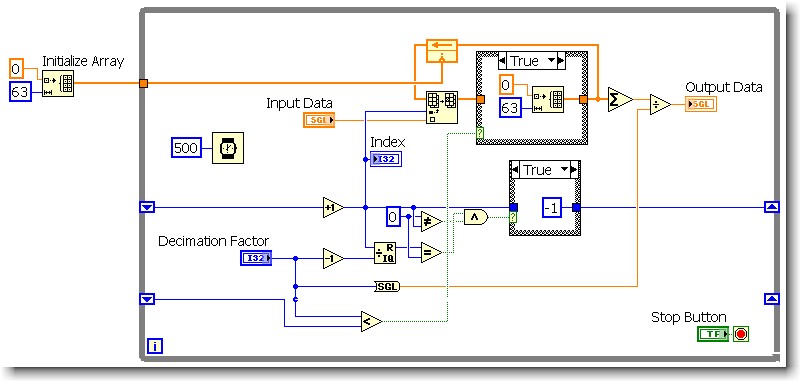

So this is my attempt to imitate a function block that we use in our standard converter software, a "Decimation filter", which is nothing more than a running (moving) average. The sample size is adjustable at run time from 2 to 64 samples (the decimation factor). I have seen many threads on this that average about 4 samples using shift registers, but I wanted to be able to change the sample size without recompiling. I'm new to LV, so there are likely a lot of better ways to do this.

The question I would like answered relates to clearing the array when the decimation factor is set to a lower number than on the previous loop iteration. (The False case is wired straight through.)

The math in the shift register creates an array index that cycles from 0 to (decimation factor - 1). The index is then used to fill elements in the array (the rest being zeros). When the decimation factor decreases, I need to zero the elements between positions (new decimation factor - 1) and (old decimation factor - 1). I tried various things, but the only thing that seems to work is re-initializing the array. I think that is less than optimal.

I tried:

(1) Leaving the output tunnel unwired in the True case and selecting the 'Use Default If Unwired' option, thinking I would get an array of 64 zeros. That doesn't seem to work.

(2) Wiring an array constant to the output tunnel in the True case. When I probed it after a decrease in the decimation factor, the probe seemed to indicate an empty array, not one with 64 elements. And I do not see how to specify the size of an array constant.

If I use this in my application it will run on a target of cRIO.

Any help much appreciated.

You already have your initialized array, so why not use it? Wire your initialized array through the True case. Or better yet, use the Select function.
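As a text-language illustration of the same idea, here is a minimal Python sketch of a moving average whose window can change at run time and whose buffer is re-initialized to zeros when the window shrinks. The class name `RunningAverage` and its layout are assumptions made for this example; it is not a translation of the posted VI.

```python
class RunningAverage:
    """Moving average with a window size adjustable between calls.

    The buffer always holds MAX_SIZE slots; only the first `size`
    slots take part in the average, and unfilled slots count as zero.
    """

    MAX_SIZE = 64

    def __init__(self):
        self.buffer = [0.0] * self.MAX_SIZE
        self.index = 0
        self.size = None  # window used on the previous call

    def update(self, sample, size):
        if not 2 <= size <= self.MAX_SIZE:
            raise ValueError("size must be between 2 and 64")
        if self.size is not None and size < self.size:
            # Window shrank: reuse the zero-initialized array, which is
            # the equivalent of wiring it through the True case.
            self.buffer = [0.0] * self.MAX_SIZE
            self.index = 0
        self.size = size
        self.buffer[self.index] = sample
        self.index = (self.index + 1) % size  # cycles 0 .. size-1
        return sum(self.buffer[:size]) / size


if __name__ == "__main__":
    avg = RunningAverage()
    for value in (4.0, 6.0, 8.0):
        print(avg.update(value, size=2))
```

Re-initializing costs one 64-element allocation per change of the factor, which is small next to the work done on every sample.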

-

Hello

I have a digitizer with the following configuration:

-Sampling rate = 20000000

-Record length = 12000000

It works very well so far and gives me an acquisition time of ~600 ms. The problem occurs when I want to display the signal in a graph: I get the error message 'insufficient memory'. That is why I used the Sample Compression Express VI to reduce the data by a factor of 10,000, for example, but this still does not work when I set the input of "niScope Fetch WDT.vi" to "-1".

It works when I set this value to 1,000,000, for example, but that is not a solution because I then do not get the full ~600 ms signal! I also can't reduce the sampling rate of the digitizer because I would lose measurement precision.

How can I solve this problem?

Thanks in advance

The NI-SCOPE examples include code showing how to fetch data from the scope card in chunks (for example niScope EX Fetch in Chunks.vi). As mentioned above, there are tutorials on how to manage large data sets in LabVIEW, one using LabVIEW and one here. Between these three sources, you have sample code to fetch the data from your card in chunks, decimate it for display, and display it without running out of memory. This is the algorithm and method used by the NI-SCOPE Soft Front Panel.
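The fetch-in-chunks and decimate-for-display idea can be sketched in Python as follows. Everything here is illustrative: `fetch_chunk` is a hypothetical callable standing in for the driver's fetch call, and min/max decimation is one common choice for display that I am assuming, not necessarily what the NI examples use.

```python
def decimate_min_max(chunk, factor):
    """Reduce each run of `factor` samples to its minimum and maximum.

    Keeping both extremes preserves the visible envelope of the signal,
    which plain every-Nth decimation can miss.
    """
    reduced = []
    for start in range(0, len(chunk), factor):
        block = chunk[start:start + factor]
        reduced.append(min(block))
        reduced.append(max(block))
    return reduced


def build_display_trace(fetch_chunk, record_length, chunk_size, factor):
    """Fetch the record piece by piece and keep only the reduced data.

    `fetch_chunk(offset, count)` is a placeholder for whatever call
    returns `count` samples starting at `offset`.
    """
    trace = []
    offset = 0
    while offset < record_length:
        count = min(chunk_size, record_length - offset)
        trace.extend(decimate_min_max(fetch_chunk(offset, count), factor))
        offset += count
    return trace


if __name__ == "__main__":
    record = [(i * 7) % 100 for i in range(100_000)]  # fake acquisition
    trace = build_display_trace(
        lambda off, n: record[off:off + n],
        record_length=len(record), chunk_size=10_000, factor=1_000,
    )
    print(len(trace))
```

Only one chunk plus the reduced trace is in memory at any time, which is what avoids the out-of-memory error on a 12-million-sample record.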

-

Reducing Essbase cube .pag file size with bitmap compression

We are seeing some huge .pag files in our Essbase cube, and Oracle suggested changing to bitmap compression to see if this helps. (We currently have "no compression".)

That said, what is the difference between using RLE versus bitmap encoding for BSO cubes?

I would like to hear the experiences of others.

^ ^ ^ You're going to be very happy. Very, very happy.

You can read the Database Administrator's Guide; just search for "compression".

It's here: http://download.oracle.com/docs/cd/E17236_01/epm.1112/esb_dbag/dstalloc.html

There are a bunch of caveats and rules concerning the 'best' compression method. That said, I almost always use RLE, as it seems to be the most effective method with respect to disk space. That makes sense, because this method examines each block and applies whichever is most effective: RLE, bitmap, or Index Value Pair. I can't see a difference in calculation time between bitmap and RLE.

How the hell did you end up with no compression in the first place?

Kind regards

Cameron Lackpour

Published by: CL on July 18, 2011 10:48

Oops, a couple things:

(1) After you apply the compression change in EAS or via MaxL, you must stop and then restart the database for the setting to take effect.

(2) You must export the database, clear it out, and reload it using the new compression method. The change in #1 affects only new blocks, so you could have a mixture of compressed and uncompressed blocks in your db, which will do nothing for the storage space.

(3) The only way to really know which method is the most effective, comparing both calculation time (remember the write to disk, which could be a little slower than now) and compression, is to try it. Try it as bitmap and note the sample calc time and the IND and PAG file sizes, then try it as RLE and do the same thing.

-

Why is my 1 x 1 pixel 8-bit RGB JPEG file 10 to 50 MB?

I found this problem on a number of images. They were initially supplied to me as 32-bit PSB files (they are CGI renders). I worked on them in PS and saved low-resolution JPEG files. I usually send 2000-pixel-wide JPEGs, which would be about 1 MB, but the file size that I get is unexpectedly massive. I tried and checked everything I can think of. The image is 8-bit sRGB with no additional channels or paths, and the canvas is cropped. Changing the color profile makes a difference. Using a different JPEG compression factor does not affect things. Saving the same image as TIFF with LZW compression gives an even larger file. If I fill the image with a single color, it is still the same.

I think it may be some sort of corruption, because even if I crop the image to one pixel wide, it is still a 50 MB JPEG. Also, if I copy a pixel from the "infected" image and paste it into a blank canvas, the new canvas shows the same problem.

Save for Web gives a normal file size, but the color appears different in the preview.

Using the same computer, I have not encountered this problem on other files.

The 'infected files' behave the same when tested on several different machines and in both CS6 and CC.

I would upload an example here, except that it's too big to upload! @Staff, can I send you a sample to review?

My client wants to use these images for the web, so I can't provide 50 MB files!

Help, please!

Thanks in advance

Could you post a screenshot of File Info > Raw Data?

-

Amplify, sample editing, and pan questions for Audition CS6

I am a relatively new user of CS6 who used Cool Edit and then Audition 2.0 extensively for audio editing. Some features that were essential for me in 2.0 seem to be missing from CS6. I have searched the forums and simply messed around with the software for a long time to find these tools, but without success. I would like to know if they are actually missing, or if I'm just inept at finding them.

All of these apply to editing in waveform mode.

Is it still possible to:

(1) amplify a selection by a multiplicative factor instead of dB?

(2) apply an amplification ramp to a selection, for example a linear ramp from 30% of the original level at the start to 70% at the end? The only such tools I see are fade in and fade out, but I can only get these to work at the beginning or end of the audio, and even then they are not very precise: they don't allow arbitrary start and end amplification values.

(3) pan through the audio one window at a time, as was done in 2.0 using the Page Up/Down keys (if I remember correctly)? I can grab and drag the numbers on the time axis, but scanning through tens of windows' worth of data this way is tedious.

(4) play the audio visible in the window and have playback stop at the end of the window, rather than continue on? The only way I can find to do this is to select the audio in the window.

(5) and finally, edit individual samples precisely? In earlier versions we could directly grab and drag a sample up or down, or right-click on the sample and enter its value. Now the only way I have found to do this (very clumsily) is to use the HUD after selecting an individual sample, but the sensitivity of the control is such that I can barely make very small, precise movements. Redrawing a range of samples this way is awkward, bordering on impractical when hundreds of locations in the audio must be dealt with. As an alternative, a pencil tool to redraw samples, like some other editors have, would work well.

And a related question about (5): is it possible to simply read the amplitude value of an individual sample?

I realize that some users may not need these features, but my editing tasks make some of them (especially 2 and 5) absolutely vital.

Thanks for any help you can provide; I really appreciate it.

(2) Effects > Amplitude and Compression > Gain Envelope.

(3) Page-by-page scrolling is available under Keyboard Shortcuts > View, but no keys are assigned to it by default.

-

Attack and Release in Compression

OK, rather than a problem, this is another one of my academic 'how does it work' discussions.

On another forum, I have been debating the meaning of 'attack' and 'release' in compression. My position is that the attack time is how long it takes to reach the set level of gain reduction once the signal passes through the threshold level, and release is the opposite: the time it takes to return to no gain reduction once the level crosses back down through the threshold.

Another poster, who usually knows what he's talking about, contends that attack and release times are triggered not by levels reaching the threshold, but simply by the compressor detecting an upward or downward change in level.

As evidence, he posted a test from Sound Forge: a tone that alternated between -12 and -3 dB at 300 ms intervals. He then applied compression with the threshold set at -16 (so everything should be compressed) and, indeed, the waveform shows the effect of the attack and release at each transition, even though the whole test signal should be compressed.

I tried the same thing in Audition and, although things are not as noticeable as in Sound Forge, you can see the pumping on the waveform.

I don't see how the attack and release can be clever enough to respond to every change in level (especially on a real signal, not his test), so I have to assume that the cause is something more basic.

Here is a picture of the original file that I made:

And here is the same file compressed with an attack of 2 ms, a release of 5 ms, and a threshold of -15 dB, i.e. lower than anything in the clip.

As you can see, it is clear where the attack and release happen (I have not posted a picture but, with attack and release at zero, you cannot see any transition), so if someone can explain that, you will cure my curiosity!

Want to know how compressors work?

Well, I wouldn't trust Wikipedia, especially when it's wrong!

We have to look at the history of compression, and how it was done by conventional electronic means, before we can have any kind of idea of how it really works. We must also keep in mind that even if software compression can get around some of the apparent problems, that is not necessarily a good thing to do.

The initial problems with compression were twofold when it came to traditional electronic compressors. First, they took time to analyze the incoming signal; second, they took even more time to respond to the changes. This is usually because the element doing the signal control (and there were several different methods) also had a finite response time. Usually the response of the control element was physically constrained, and this ultimately limited the attack time: even if you could detect a more rapid change, you could not apply it any more quickly, and you could not delay the release; that needed to be dealt with in real time. The response of the detector is essentially based on amplitude: if the first half cycle exceeds the amplitude threshold, then theoretically a corresponding signal is sent to the control element. The detector, though, always follows the amplitude envelope of the incoming signal, and this is what determines, via the controls, the compression ratio. The decay time depended entirely on the controls for that: whenever the signal envelope falls below the threshold, the decay sets in at that rate. If the signal rises above the threshold again, the decay stops, after another attack time has elapsed.

The threshold setting determines the point at which control starts, and the ratio control determines the rate of change used at that point. That is why the Wikipedia quote is wrong: the absolute level is not determined at all, only the rate at which the control signal moves in that direction. It can be overridden by a change in the signal level.

In any case, this is what software compressors were essentially trying to imitate. I have never designed a software one, but I have designed and built a couple of hardware ones, which is why I know how they work!

What software can do is compensate for certain aspects of compressor behavior, because it does not primarily have to work in real time. This means that it is possible to use 'look-ahead'. If you do this, then theoretically you can do a good job of catching even the first half cycle of a waveform that exceeds your threshold, because you have already done the detection. And because you control the final amplitude in software rather than with a hardware device, you can make changes happen instantly. But as everyone who experiments with a software compressor soon realizes, most of the things that are possible do not happen: the devices are designed more along the lines of the original hardware, because that's what people really want! They do not want instant attack, because that gets rid of transients, and that's where a lot of the crucial information in a signal is. Also, for some material, timed pumping is called for, and that requires a system with a known time response, not one where the rate of change of the input signal is determined and used in some way.

The other thing that you should keep in mind is that we are creating a signal envelope. To do this, we need to do some averaging. What we are doing is technically known as integration: we are calculating the area under a curve, and for that we need an integration period. With the dynamics processor in Audition and Cool Edit, we get options to control this. And it is in the Attack/Release section that you get all the clues. First, note that it says that it is the amplitude that is determined, not the rate of change. Secondly, you can choose (because it has more options than most compressors) how the amplitude envelope is determined in the detector: peak values, which means that the integration time is effectively the same as the sampling period, or RMS values. It does not say what the RMS integration time is, but I dare say it would not be too difficult to determine.

But Audition's dynamics processor is a good example of how software can imitate, and also overcome the limits of, a hardware compressor. The characteristics of the detector and the controller can be set separately, and look-ahead can also be specified. I'm not 100% convinced that the output always reflects exactly what you set, but it's close enough. But just because you can get around how a hardware compressor works, it does not usually pay to do so unless you have a very good reason.
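To make the detector idea concrete, here is a minimal Python sketch of a peak envelope follower with separate attack and release times. It uses one-pole exponential smoothing, which is a common textbook approach that I am assuming for illustration; it is not a description of how Audition or Sound Forge implement their detectors, and the function name is made up.

```python
import math


def envelope_follower(samples, sample_rate, attack_s, release_s):
    """Track the amplitude envelope of `samples`.

    The envelope rises with the attack time constant when the rectified
    input is above it and falls with the release time constant when the
    input is below it. A time of 0 means the envelope follows instantly.
    """
    def coeff(time_s):
        # One-pole smoothing coefficient for the given time constant.
        return math.exp(-1.0 / (time_s * sample_rate)) if time_s > 0 else 0.0

    attack_coeff = coeff(attack_s)
    release_coeff = coeff(release_s)
    envelope = 0.0
    result = []
    for x in samples:
        level = abs(x)  # peak detection: rectify the input
        c = attack_coeff if level > envelope else release_coeff
        envelope = c * envelope + (1.0 - c) * level
        result.append(envelope)
    return result


if __name__ == "__main__":
    # A step up followed by silence, at a 1 kHz sample rate.
    signal = [1.0] * 10 + [0.0] * 10
    env = envelope_follower(signal, 1000, attack_s=0.002, release_s=0.005)
    print([round(v, 3) for v in env])
```

Because the follower reacts to the level relative to its own current value, it produces a visible transition at every level change, including ones where the signal stays above the threshold the whole time. With both times at zero the envelope equals the rectified input and no transition appears.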

-

Reducing noise (white noise) in a synthesizer

Hey,

I was wondering if someone could help me reduce the noise in my synthesizer sample: Black Sun, found in [synthesizer > soundscape > Black Sun]

I feel that I cannot change it for some reason, because I have tried different methods of noise reduction, including:

* Modifying the 'smart controls' and EQ.

It was frustrating to watch a lot of YouTube videos on different methods, because they all seemed to have a similar solution that addresses a recording (voice / instrument). I just used this sample, which I set up in the piano roll section with a few MIDI controllers (iRig's drum pad, Axiom 25 and Korg nanoKEY 2).

I'm willing to try any suggestions that anyone might have for me.

This is a link to the example I created; you can hear the noise I described: https://www.youtube.com/watch?v=npYBQlO7sXo

Thanks for the help.

I'm running Logic Pro X, using a MacBook Pro 9.2. OS X 10.11.3 (15 D 21)

Intel Core i5 2.5 GHz. 4 GB memory, 500 GB of storage.

The noise in the Black Sun patch is pre-programmed by its designer and is generated by the Bitcrusher plugin. You can remove it, or play with the Bitcrusher settings to get what you like.

-

Do I really want to compress all my data?

Hello

I'm a total techno-peasant and was trying to find out how much space was left on my 2009 MacBook Pro. In the Finder, I saw "compress data" and thought I remembered a salesperson recommending one day that I do so. I clicked it, and my computer is now working its way through compressing 183.59 GB of data, which, according to it, will take 5 days! When I tried a Google search, I came across a lot of complicated things that sound like you should only compress one file at a time.

While we are at it, can you please tell me how to find out how much space is left on my computer?

Thanks a lot and it's okay if you roll your eyes.

Dana

Hi Dana,

Are you compressing your entire hard drive, or only some data folders on the hard drive?

Compressing data such as pictures, documents, PDF files, and other similar files is perfectly OK!

A file is made up of a bunch of metadata. Metadata is simply data about data. Some of the metadata in a file is just useless and offers no benefit. With compression, the file gets processed and some of its attributes and metadata are stripped out, which translates to a much smaller file size.

However, there is some data that you don't want to compress, namely the binary files that make up the operating system (OS). If you compress these file types, corruption may occur. When compressing data, make sure that you can do so without affecting your operating system files.

The reason your compression takes so long is that you MOST likely have a very slow disk. Depending on your laptop model and year, you most likely have a hard drive that runs at 5400 revolutions per minute (RPM). This refers to the magnetic platters inside the hard drive, which rotate around a single spindle to read and write data. Low RPM means a slow drive, which slows EVERYTHING down. And to emphasize, if your drive is old and has had a lot of reads and writes during its life, the speed of the disk degrades. This is what we call performance degradation.

In your scenario, I guess you have a slow drive, little RAM and a low-end processor. Given these factors, it will take time to process and compress all the data on your drive.

I suggest you kill the compression operation. Compression is really intended for those who are EXTREMELY low on disk space and have no choice, OR for cases where you have pure RAW data that slows down operations in an application you are working with, in which case you compress the data to remove some of that unnecessary metadata.

In the future, when you get a new machine, it is preferable to set up compression on your disk before you start using the machine. The data will then get compressed incrementally, rather than in a single session that takes a lot of time.

I hope this helps.

Thank you

Mark

-

Edge count measurement error when using an external sample clock

Hello

I'm trying to measure the number of (rising) edges on a 5 kHz square wave from a function generator with an NI PCIe-6363 device. I configured a counter edge-count input channel on the device's PFI8 input. I use an external sample clock that is provided by the counter output channel of an NI USB-6211. If I acquire for 10 s, then ideally I would expect to see a total of 50000 edges measured on the counter input channel. However, my reading is anywhere between 49900 and 50000.

When I use the internal timebase clock to measure the edges, the measurement is accurate and almost always exactly 50000. I understand that when you use an external sample clock, the precision of the measurement is subject to the noise level of the clock signal. However, I checked that the clock signal is stable and not very noisy. Is there any reason why there is a measurement error, and how much tolerance should I expect when using an external sample clock compared to the internal timebase clock?

Also, what is the best clock frequency (with respect to the frequency of the input signal) when using an external clock?

Thank you

Noblet

Hi all

Thanks for all your suggestions. I was using an input signal from a function generator with an amplitude of 8 V. It turns out that reducing the amplitude to 5 V solves the problem. I was able to get accurate counts with the 6211 external clock.

Thank you

Noblet

-

I read a sine wave using a data acquisition device with a sampling frequency of 250 Hz. Once I acquire the signal, I take the Y component of the signal and multiply it by a factor inside a MATLAB script. I use the Build Waveform function to rebuild the waveform and display it. If I set the dt component of my Build Waveform function to 0.01, does this mean that the signal is sampled at 100 Hz?

navinavi wrote:

If I set the dt component of my Build Waveform function to 0.01, does this mean that the signal is sampled at 100 Hz?

Not exactly. You are just lying to the display, giving it something that was sampled at 250 Hz and claiming that it was sampled at 100 Hz. If you filter it or do anything with it in the frequency domain, everything will be way off.
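A little arithmetic shows how far off things get. The Python sketch below is only an illustration with made-up function names: it computes the frequency and duration the display would report when data sampled at one rate is labeled with a different dt.

```python
def apparent_frequency(true_frequency_hz, true_rate_hz, claimed_dt_s):
    """Frequency a tone appears to have when dt is mislabeled.

    The samples are unchanged; only the time axis is stretched or
    squeezed by the ratio of claimed rate to true rate.
    """
    claimed_rate_hz = 1.0 / claimed_dt_s
    return true_frequency_hz * claimed_rate_hz / true_rate_hz


def apparent_duration(sample_count, claimed_dt_s):
    """Time span the display shows for `sample_count` samples."""
    return sample_count * claimed_dt_s


if __name__ == "__main__":
    # One second of a 10 Hz sine acquired at 250 Hz, labeled dt = 0.01 s.
    print(apparent_frequency(10.0, 250.0, 0.01))
    print(apparent_duration(250, 0.01))
```

In this example the 10 Hz tone would be reported as roughly 4 Hz, and one second of data would be drawn across 2.5 seconds. To keep the time axis honest, set dt to 1/250 = 0.004 s; to actually obtain 100 Hz data, the signal has to be resampled.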
