Size of binary and CSV files

Hi all

I want to save my data in both CSV and binary (.dat) files. The VI appends 100 new double values to these files every 100 ms.

The VI works fine but I noticed that the binary file is bigger than the CSV file.

For example, after a few minutes, the size of the CSV file is 3.3 KB, while the size of the binary file is 4 KB.

But shouldn't binary files be smaller than text files?

Thank you all

You have several options.

The first (and easiest) is not to worry about it. If you use DBL and decide next month that you want six-digit resolution, it is there. If you use a string (STR) and decide that next month, well, you're out of luck. Storage is cheap; maybe that works for you, maybe it doesn't.

The comment about the size header being prepended is correct, but it is a small overhead: 4 bytes added to each segment of 100 * 8 = 800 bytes, or 0.5 percent.

If you don't like that, then don't prepend it. Simply treat it as a file of DBLs with no header.

You are storing nothing but DBLs in it.

This means YOU need to work out how many values are in the file (SizeOf(file) / SizeOf(DBL)), but that is doable.

You must either keep the file open while writing, or do open + seek to end + write + close for each chunk.
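A minimal sketch of the headerless approach, written in Python rather than LabVIEW because a block diagram cannot be shown as text. The helper names `append_chunk` and `count_values` and the file name are made up for illustration, and big-endian byte order is assumed because it is LabVIEW's default.

```python
import os
import struct
import tempfile

DBL_SIZE = struct.calcsize(">d")  # 8 bytes per double

def append_chunk(path, values):
    """Open in append mode (writes go to the end), write raw doubles, close."""
    with open(path, "ab") as f:
        f.write(struct.pack(f">{len(values)}d", *values))

def count_values(path):
    """Number of doubles in a headerless file: SizeOf(file) / SizeOf(DBL)."""
    return os.path.getsize(path) // DBL_SIZE

# Hypothetical demo file: three chunks of 100 doubles each.
path = os.path.join(tempfile.mkdtemp(), "data.dat")
for _ in range(3):
    append_chunk(path, [0.5] * 100)

n_values = count_values(path)
# Relative cost of a 4-byte size header on a chunk of 100 doubles.
header_overhead = 4 / (100 * DBL_SIZE)
print(n_values, header_overhead)
```

Append mode stands in for the open + seek to end + write + close sequence; keeping one file handle open for the whole run would avoid the repeated open and close.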

If you want to save space, consider using SGL instead of DBL. If it is measured data, it is not accurate beyond 6 decimal digits in any case.

Or consider saving it as I16s, to which you apply a scale factor when reading the data back.

Those are worth it only if you seriously need to save space, though.
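The I16 idea can be sketched as follows, again in Python for illustration. The scale factor of 0.001 per count and the helper names are assumptions, not values from the thread; the right scale depends on the range and resolution of the measurement.

```python
import struct

SCALE = 0.001  # hypothetical resolution: one I16 count = 0.001 units

def encode_i16(values, scale=SCALE):
    """Convert floats to scaled 16-bit integers and pack them big-endian."""
    counts = [round(v / scale) for v in values]
    for c in counts:
        if not -32768 <= c <= 32767:
            raise ValueError("value does not fit in an I16 at this scale")
    return struct.pack(f">{len(counts)}h", *counts)

def decode_i16(blob, scale=SCALE):
    """Unpack 16-bit integers and apply the scale factor on readback."""
    n = len(blob) // 2
    return [c * scale for c in struct.unpack(f">{n}h", blob)]

samples = [1.234, -0.5, 12.0]
blob = encode_i16(samples)
restored = decode_i16(blob)

# Bytes per value for DBL, SGL and I16.
sizes = [struct.calcsize(fmt) for fmt in (">d", ">f", ">h")]
print(len(blob), sizes)
```

With this scale the representable range is roughly -32.768 to 32.767, which is the price of the fourfold saving over DBL.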

Similar Questions

-

Hello:

I'm struggling with writing a 1D numeric array to a binary file and starting a new line or inserting a separator on each loop iteration. On each iteration, the LabVIEW code collects a 1D array of 253 elements from a spectrometer. When I write these arrays to the binary file, each one is stacked directly after the previous one (I use MATLAB to read the binary file). However, whenever a data point is missing, the entire array is shifted. So I would like to save the 1D arrays as rows of an N-row array, where N is how many times the loop executes.

I'm not very familiar with how binary file I/O works. Can anyone help me figure this out? I tried to use the file position, but that function seems to work only when writing strings. What I really want is to write the 1D numeric arrays as rows of an N-row array. How can I do that?

Thanks in advance

lawsberry_pi wrote:

So how can I avoid having a length prepended to each write? Is that possible?

On the top of Write to Binary File there is a Boolean input called 'prepend array or string size? (T)'. It defaults to TRUE. Set it to FALSE.

Also, be aware that MATLAB likes little-endian, whereas LabVIEW defaults to big-endian. So you probably need to set the byte order on Write to Binary File as well.
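To illustrate why both settings matter to an external reader, here is a small Python sketch. The row length `D` is shortened from the thread's 253 to 4 for readability, and `pack_row` and `unpack_rows` are hypothetical helpers, not LabVIEW or MATLAB functions.

```python
import struct

D = 4  # elements per row; the thread uses 253

def pack_row(row, byte_order=">"):
    """Pack one fixed-length row of doubles with no size prefix."""
    if len(row) != D:
        raise ValueError("every row must have exactly D elements")
    return struct.pack(f"{byte_order}{D}d", *row)

def unpack_rows(blob, byte_order=">"):
    """Split a headerless byte stream into rows of D doubles."""
    n = len(blob) // (D * 8)
    flat = struct.unpack(f"{byte_order}{n * D}d", blob[: n * D * 8])
    return [list(flat[i * D:(i + 1) * D]) for i in range(n)]

rows = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]]
blob = b"".join(pack_row(r) for r in rows)    # big-endian, as LabVIEW writes by default

decoded = unpack_rows(blob)                   # reader agrees on byte order
misread = unpack_rows(blob, byte_order="<")   # reader assumes little-endian
print(decoded == rows, misread == rows)
```

Because every row has the same length and nothing is prepended, the reader can recover the N rows from the file size alone. A short row would shift everything after it, which is why the sketch rejects rows of the wrong length instead of writing them.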

-

How can I determine the size of files and photo albums?

There isn't really any way, nor is it necessary. What are you trying to accomplish?

LN

-

How to determine the file size and pixel dimensions of an image?

1 MB is 2^20 bytes. It is easy to represent as a long:

final long MegaByte = 1048576L; // 1048576 is 2^20; the L suffix marks this as a long
if (fileConn.fileSize() > MegaByte) {
    // do something here
}

-

"Read binary file" and efficiency

For the first time I have tried using binary files for reading large data files, and I am seeing some real performance issues. To avoid any loss of data, I write the data as I receive it from data acquisition, 10 times per second. What I write is a 2D array of doubles: 1000 data points x 2-4 channels. When reading the file back, I wish I could read it as a 3D array, all at once. This does not seem to be supported, so instead I do repeated reads of a 2D array and use a shift register with Build Array to assemble the 3D array that I really need. But it is incredibly slow! It seems that I can read only a few hundred of these 2D arrays per second.

It has also occurred to me that using Build Array in a shift register to keep appending to the array could be a quadratic-time operation, depending on how it is implemented: continually allocating larger and larger blocks of memory and copying the growing array at every step.

I'm looking for suggestions on how to store my data efficiently and read it back efficiently into the 3D array that I need. Maybe it would make life easier if I had "raw write" and "raw read" operations that handle only the numbers and no metadata. Then I could write the file and read it back in whatever chunk sizes I please, and read it with other programs as well. But I don't see them in the menus.

Suggestions?

Ken
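One way to picture the "raw write / raw read" idea from the post is the Python sketch below. It is not a LabVIEW answer: the block shape (1000 points x 2 channels), the file name and the helper `at` are assumptions, and native byte order is used. The point is that the reader allocates once and reads once, instead of growing an array block by block.

```python
import array
import os
import tempfile

POINTS, CHANNELS = 1000, 2          # one block; the post allows 2-4 channels
BLOCK_VALUES = POINTS * CHANNELS

path = os.path.join(tempfile.mkdtemp(), "acq.dat")

# Writer: append each block as raw native-order doubles, with no size header.
with open(path, "ab") as f:
    for block_no in range(5):
        block = array.array("d", [float(block_no)] * BLOCK_VALUES)
        block.tofile(f)

# Reader: derive the value count from the file size, then read everything in one call.
n_values = os.path.getsize(path) // array.array("d").itemsize
data = array.array("d")
with open(path, "rb") as f:
    data.fromfile(f, n_values)
n_blocks = n_values // BLOCK_VALUES

def at(block, point, channel):
    """Index the flat buffer as if it were data[block][point][channel]."""
    return data[(block * POINTS + point) * CHANNELS + channel]

print(n_blocks, at(3, 999, 1))
```

Treating the flat buffer as 3D through index arithmetic avoids any copy; growing an array inside a loop is the pattern that risks the quadratic behaviour described above.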

-

Size of a cluster written to a binary file

I have a cluster of data that I am writing to a file (various numeric types plus some U8 arrays). I write the cluster to the binary file with "prepend array or string size" set to FALSE. However, it seems that some additional data is included (probably so that LabVIEW can unflatten it back to a cluster). I verified this by unbundling each element, type casting each one, getting the string length of each individual element, and summing them all. The result is the correct number of bytes. But if I flatten the cluster to a string and get its length, it is 48 bytes larger and matches the size of the file. Am I correct in assuming that LabVIEW is adding extra metadata for unflattening the binary file back to a cluster, and is it possible to get LabVIEW not to do that?

Really, I would rather not have to write all 30 elements of the cluster individually. Another application reads this file, and it expects the data without any extra bytes.

I had overlooked this in the context help:

Arrays and strings in hierarchical data types such as clusters always include size information.

Well, it's a pain.
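The effect of that help text can be mimicked in a few lines of Python. The cluster layout here (one I32, one DBL, one U8 array of 5 elements) is invented for illustration and is not the poster's 30-element cluster; the 4-byte I32 element count per 1D array follows what other replies on this page describe.

```python
import struct

# Hypothetical cluster contents: an I32, a DBL and a U8 array.
count, gain, payload = 7, 1.5, bytes([1, 2, 3, 4, 5])

# What the other application expects: fields back to back, no size information.
raw = struct.pack(">id", count, gain) + payload

# What flattening the cluster produces: the embedded array is preceded
# by its element count as a big-endian I32.
flattened = struct.pack(">id", count, gain) + struct.pack(">i", len(payload)) + payload

extra = len(flattened) - len(raw)
print(len(raw), len(flattened), extra)
```

Each 1D array inside the cluster costs 4 extra bytes in this model, so a 48-byte difference would be consistent with, for example, twelve 1D arrays, though that is only a guess about the poster's data.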

-

I'm using DW CS5 and need to increase the font size in the File and Edit menu bar

The font size in the File and Edit menu is ridiculously small, about 1 or 2 px. Other programs on my computer don't have this problem. I have tried everything, and it only puts the sizes of other things on my computer out of whack.

It is the same in CS6. That said, DW works best with normal text size. If you can't read comfortably with your current display settings, step the resolution down. For example, if your monitor supports a maximum width of 1900, try 1600 or less.

Or use your operating system's magnification features.

Windows

Mac

http://science.opposingviews.com/use-magnify-Mac-10487.html

Nancy O.

-

Codec and file size

I just started editing files from a new camera. They are Sony XAVC. I produced a one-minute movie to experiment with different exports, and there is something I do not understand. First, I exported an MP4 file at 4096 x 2160, which produced an 80 MB file. Then I exported the same one-minute timeline at 1920 x 1080, also as an MP4, but it produced a 220 MB file.

I thought the 4096 export would make the larger file. Can someone explain what is happening here?

Thank you

One thing determines the file size, and that is the BITRATE. The 1080p export must have had a higher bitrate setting.

Thank you

Jeff Pulera
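As a rough check of that explanation, the average bitrate implied by each export can be worked out from the file size and the one-minute duration. This Python sketch assumes 1 MB = 10^6 bytes and ignores audio and container overhead; the function name is made up.

```python
def average_bitrate_mbps(size_mb, duration_s):
    """Average bitrate in Mbit/s for a file of size_mb megabytes (1 MB = 10**6 bytes assumed)."""
    return size_mb * 8 / duration_s

uhd = average_bitrate_mbps(80, 60)    # the 4096 x 2160 export
hd = average_bitrate_mbps(220, 60)    # the 1920 x 1080 export
print(round(uhd, 1), round(hd, 1))
```

Under these assumptions the 1080p export averaged nearly three times the bitrate of the 4096 export, which accounts for the larger file regardless of frame size.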

-

Strange binary file write behavior

Something a little strange happens when I try to write data to a binary file. I have reproduced the issue in StrangeBinaryFileBehaviour.vi, which is attached below.

I simply write two bytes to a binary file, and yet my hex editor/viewer tells me I have 6 bytes of data. A screenshot of the hex editor dump is also attached.

Maybe I've lost the plot and am missing something; forgive me, it is late on a Friday.

Stroke

Wire a FALSE to the "prepend array or string size?" terminal. The default value is TRUE, so you get extra bytes, depending on what you wire to the function.

For a 1D array, you get 4 extra bytes for the size of the array (an I32), and that is what you see.
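The arithmetic (4 + 2 = 6 bytes) can be reproduced outside LabVIEW. In this Python sketch the two data bytes are made-up values, and the prefix is modelled as a big-endian I32 element count, as the reply describes.

```python
import struct

data = bytes([0xAB, 0xCD])                        # two bytes of payload (values are made up)
with_size = struct.pack(">i", len(data)) + data   # I32 element count first, then the data
without_size = data                               # what remains with the prefix turned off

print(with_size.hex(), len(with_size), len(without_size))
```

The first four bytes of the dump encode the element count 2; only the last two are the payload.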

-

Appending data to a binary file as a concatenated array

Hello

I have a problem that may have been discussed several times, but I haven't found a clear answer.

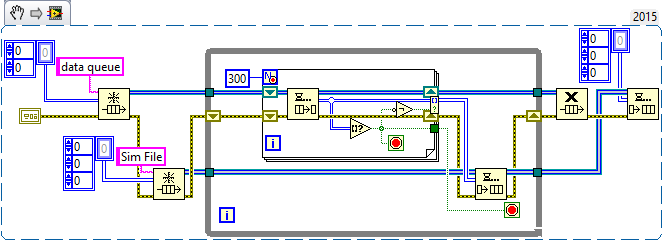

Normally I have devices that produce 2D image arrays. I send them to a collection loop through a queue, index them into a 3D array, and at the end save a binary file.

It works very well. But I'm starting to run into memory problems when the number of images exceeds approx. 2000.

So I am trying to take advantage of a fast SSD and save the images in batches (e.g. 300) to a binary file.

In the attached block diagram I simulate the camera by reading some files beforehand. The program works well, but when I try to open the new file in the second diagram, I see only the first 300 images (in this case).

I read on the forum that I have to set the count to -1 on Read from Binary File, and then I can read the data as a cluster of arrays. That is not very good for me, because I need to work with the data in MATLAB and I would like to keep the same format as before (for example a 3D array, 320 x 240 x 4000). Is it possible to append a 3D array to the existing file so that the data is concatenated?

I hope it makes sense :-)

Thank you

Honza

- Good idea to simulate creating the image with a 2D array of random numbers! It is always good to model the real problem (file I/O) without the "complicating details" (handling the camera).

- Good use of producer/consumer in LT_Save. Do you know about sentinels? You need only a single queue, the data queue, which sends an array of data to the consumer. When the producer quits (because the Stop button is pushed), it enqueues an empty array (you can just right-click the element input and choose "Create Constant"). In the consumer, when you dequeue, test whether you have an empty array. If you do, stop the consumer loop and release the queue (since you know that the producer has already stopped and you have stopped, too).

- I'm not sure what you are trying to do in the File_Read_3D routine, but I will tell you it is wrong. So, let's analyze the situation. In a way, your two routines form a producer/consumer "pair": LT_Save "produces" a file of 3D arrays (mostly of 300 pages, unless it is the final batch of data) and File_Read_3D "consumes" them and "does something", still somewhat ill-defined. You could (and perhaps should) merge these two routines into a single "Simulator". Here's what I mean:

This is taken directly from your code. I replaced the 'stop' button queue with sentinel code (which I won't show), and added a queue, Sim File, to simulate writing these data to a file (it also uses a sentinel).

Your existing producer code puts single 2D arrays on the data queue. This routine dequeues them and "builds up" as many as 300 of them at a time before "doing something with them": in your code, writing to a file; here, simulating that by writing to a queue of 3D arrays, Sim File. Let's look at the "easy" case first, where we get all 300 elements. The For loop ends, producing a 3D array composed of 300 2D arrays, which we simply enqueue on Sim File, our simulated file. You may notice that there is an Empty Array? function (which, in this case, is never True, always False) whose value is negated (to be always True) and wired to the conditional terminal of an indexing tunnel. The reason for this strange logic will become clear in the next paragraph.

Now consider what happens when you press the Stop button in your (not shown) producer. Since we use sentinels, it enqueues an empty 2D array. Well, we dequeue it and detect it with the Empty Array? function, which lets us do three things: stop the For loop early; keep the empty array from being added at the indexing output tunnel by means of the conditional terminal (Empty Array = True, Not changes it to False, so the empty array is not added to the output array); and also cause the While loop to exit. What happens when we exit the While loop? Well, we are done with the data queue, so we release it. We also know that we have enqueued the last "good" data on the Sim File queue, so we create a sentinel (an empty 3D array) and enqueue it for the yet-to-be-written Sim File consumer loop.

Now, here is where you come in. Write this final consumer loop. It should be pretty simple: you dequeue, and if you don't have an empty 3D array, you do the following:

- Your array consists of N 2D images (up to 300). In a single For loop, extract each image and do what you want with it (display it, save it to a file, etc.). Note that if you write a sub-VI called "Process an Image" that takes a 2D array and does something with it, you will "declutter" your code by "hiding the details".

- If you did have an empty array, you simply exit the While loop and release the Sim File queue.
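The sentinel scheme described in this reply can be sketched in text form with Python threads and queues. This is an illustration of the pattern, not a translation of the posted VI: the function names are invented, a one-element nested list stands in for a 2D image, and an empty list plays the role of the empty array.

```python
import queue
import threading

BATCH = 300      # images per batch, as in the thread
SENTINEL = []    # an empty "array" marks the end of the data

def producer(data_q, n_images):
    for i in range(n_images):
        data_q.put([[float(i)]])   # stand-in for one 2D image
    data_q.put(SENTINEL)           # tell the next stage we are done

def batcher(data_q, file_q):
    done = False
    while not done:
        batch = []
        while len(batch) < BATCH:
            image = data_q.get()
            if not image:          # sentinel: stop early, do not add it to the batch
                done = True
                break
            batch.append(image)
        if batch:
            file_q.put(batch)      # a "3D array" of up to BATCH images
    file_q.put(SENTINEL)           # pass the sentinel downstream

def consumer(file_q, batch_sizes):
    while True:
        batch = file_q.get()
        if not batch:              # sentinel: nothing more will arrive
            break
        batch_sizes.append(len(batch))   # "process" each batch

data_q, file_q, batch_sizes = queue.Queue(), queue.Queue(), []
threads = [
    threading.Thread(target=producer, args=(data_q, 700)),
    threading.Thread(target=batcher, args=(data_q, file_q)),
    threading.Thread(target=consumer, args=(file_q, batch_sizes)),
]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(batch_sizes)
```

With 700 images the consumer sees two full batches and one partial batch of 100, and no separate stop signal is needed because the sentinel travels through the same queues as the data.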

OK, now translate this to files. You are off to a good start by writing your file with array size headers, which means that if you read the file as a 3D array, you will get a 3D array (as you did in the Sim File consumer) and can perform the same processing as above. All you have to worry about is the sentinel: how do you know when you have reached the end of the file? I'm sure you can figure this out, if you don't already know...

Bob Schor

PS - you should know that the code snippet I posted did not come out right the first time. In fact, I pasted about 6 versions here, as I kept finding errors while writing the description (like forgetting the Not function on the conditional terminal). This illustrates the virtue of writing documentation: it slows you down, makes you examine your code, and makes you say "Oops, I forgot to...".

-

Hello

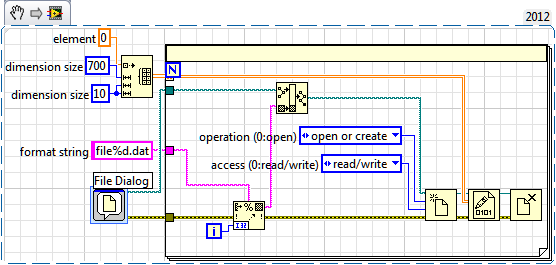

I have 700 2D arrays of doubles to save, and I would like to save them in 700 different binary files with the .dat extension. I know that I can save an array in binary format, but only via a dialog box that opens a window so that I can choose the file. Since there are 700 arrays, I don't want to have to choose the path every time.

I use a dialog box to create a folder where I want to save the 700 files. For now I save the 700 arrays in 700 different text files using the "Write To Spreadsheet File" VI, and it works well, but it creates text files...

Is it possible to do the same thing but storing the data in binary files, without using a dialog box for each file?

Thanks in advance

Use the Write to Binary File function to write to a binary file. http://zone.NI.com/reference/en-XX/help/371361J-01/Glang/write_file/

You could ask the user to select the folder once, as you do now, and then generate the file paths programmatically.
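The idea of generating the paths in a loop, shown here in Python since the original attachment is not available. The naming scheme `array_000.dat`, the helper `save_arrays` and the temporary folder are assumptions; in LabVIEW the same thing would be done by building each path from the chosen folder and a formatted file name.

```python
import os
import struct
import tempfile

def save_arrays(folder, arrays, prefix="array"):
    """Write each 2D array to its own .dat file and return the generated paths."""
    paths = []
    for i, rows in enumerate(arrays):
        # Build the file name from the loop index instead of asking the user.
        path = os.path.join(folder, f"{prefix}_{i:03d}.dat")
        with open(path, "wb") as f:
            for row in rows:
                f.write(struct.pack(f">{len(row)}d", *row))  # raw big-endian doubles
        paths.append(path)
    return paths

folder = tempfile.mkdtemp()   # stands in for the folder chosen once in a dialog
arrays = [[[float(i), 0.0], [1.0, 2.0]] for i in range(700)]   # 700 small 2x2 arrays
paths = save_arrays(folder, arrays)
print(len(paths), os.path.basename(paths[0]), os.path.basename(paths[-1]))
```

Zero-padding the index keeps the files in order when a directory listing is sorted by name.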

-

Reading part of a binary file, from the 500th row to the 5,000th row

I have 200 MB binary data files of 1000561 rows and 32 columns. When I read a file and plot it sequentially, a "memory is full" message is generated.

Now I want to read the file in pieces, such as the 500th row to the 5,000th row with all the columns, and plot that on a graph.

I tried to develop the logic using the advanced file function Set File Position together with Read from Binary File, but I still haven't found the solution.

Please, help me to solve this problem.

Thanks in advance...

Hi ospl,

To read a specific part of a binary file, I suggest setting the file position to where you want to start reading, and specifying on Read from Binary File how many blocks to read.

For example, if you wrote a 2D array to the binary file, then specify that 2D array as the data type and make your count (5000-500). Then set the file position. If you have 32 columns of DBL data, then the offset is 256 bytes for the second row and 256 * 500 for the 501st row. Use this number as the input to Set File Position.

I hope you find your way through this.
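The offset arithmetic in this reply (32 columns x 8 bytes = 256 bytes per row) can be sketched in Python as follows. The helper `read_rows`, the demo file and its contents are made up; the file is assumed to hold raw big-endian doubles with no size header, and rows are numbered from 1 with both ends included, so 500 to 5000 yields 4501 rows rather than the 4500 in the reply.

```python
import os
import struct
import tempfile

COLUMNS = 32
ROW_BYTES = COLUMNS * struct.calcsize(">d")   # 32 * 8 = 256 bytes per row

def read_rows(path, first_row, last_row):
    """Read rows first_row..last_row (1-based, inclusive) without loading the whole file."""
    n_rows = last_row - first_row + 1
    with open(path, "rb") as f:
        f.seek((first_row - 1) * ROW_BYTES)   # row 2 starts at 256, row 501 at 256 * 500
        blob = f.read(n_rows * ROW_BYTES)
    fmt = f">{COLUMNS}d"
    return [struct.unpack_from(fmt, blob, i * ROW_BYTES)
            for i in range(len(blob) // ROW_BYTES)]

# Demo file: 6000 rows in which every value of row r equals r.
path = os.path.join(tempfile.mkdtemp(), "big.dat")
with open(path, "wb") as f:
    for r in range(1, 6001):
        f.write(struct.pack(f">{COLUMNS}d", *([float(r)] * COLUMNS)))

rows = read_rows(path, 500, 5000)
print(len(rows), rows[0][0], rows[-1][-1])
```

Only the requested slice is held in memory, which is the point of seeking instead of reading the file from the start.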

-

Error 116 when reading a string from a binary file

I am trying to use the "Write to Binary File" and "Read from Binary File" pair of functions to write a string to a binary file and read it back. The file is created successfully, and a hex editor confirms that the file contains what is expected (a header + the string). However, when I try to read the string back, I get error 116: "LabVIEW: Unflatten or byte stream read operation failed due to corrupted, unexpected, or truncated data." One quirk I found, though, is that if I set the byte order to "big-endian, network order", the error disappears; when I use "native, host order" (my original setting) or "little-endian", the error occurs. Did I miss something in the documentation saying that you must use big-endian order when writing strings, am I doing something wrong, or is this a bug in LabVIEW? Because the program this will be used in writes large arrays in addition to strings, I would like to be able to stick with the "native" setting for speed and not have to mix byte orders.

I have attached a VI of example that illustrates this problem.

I'm using LabVIEW 8.5 on Windows XP SP2.

Thank you

Kevin

Hello

Thank you for contacting National Instruments! I checked the behavior you encountered and agree that it is a bug. It has been reported to R&D (CAR # 130314) for further investigation. As you have already found, a possible workaround is to use the big-endian setting. I am also attaching another example that converts the string to a byte array before writing to the file, and then converts it back to a string after reading the file. Please let me know if you have any questions after looking at this example and I'll be happy to help! Thank you very much for the feedback!

-

How to see the size of the file.

With Win 7 Home Premium, how do you see the file size and the file extension? Is the only solution to go to Properties?

Hello JRHoo,

Thank you for visiting the Microsoft answers community.

In Windows Explorer, change your view to Details.

Right-click the column headers and select More > make sure Size and Type are selected > click OK.

Hope this helps

Chris.H

Microsoft Answers Support Engineer

Visit our Microsoft Answers Feedback Forum and let us know what you think.

-

How can I see the size of the file?

How can I see the file size in LR CC? I can see the pixel dimensions, and I can see megapixels (who is interested in MP?). To see the file size, I have to use 'Show in Finder'. That's annoying.

Thanks in advance

Uwe

Bob Somrak says:

I'm sorry that I implied using the 'Limit File Size' export option. I never (or only rarely) use this option. I meant to suggest using the resize option and setting the pixel dimensions and the quality level to limit the file size.

Yes, I've never used 'Limit File Size' because I know better. IMHO, this is an option Adobe could remove, doing more good than harm. We have seen many posts here from users who are confused when it doesn't do what they expect.

Bob Somrak says:

I also think it would be easy to implement the ability to see the file size in Lightroom, but I see no advantage. It provides no information whatsoever about what the derived file size will be.

It is of little interest for the 'original' camera files imported into LR. But it is useful for reviewing export and "Edit in PS" files, for all the reasons fotoroeder and I have just described.

The bottom line is that it can't hurt, and it could be made a Preferences option for "advanced" users to eliminate the confusion. The same goes for displaying the PPI and the color profile, which is very useful for managing resized image files to be used with a layout program such as InDesign. I currently have to use Adobe Bridge for this purpose, but LR to create and organize the component files. So it forces me to work inefficiently. It would be really beneficial if LR and InDesign could be integrated to work together, similar to the LR Book module... but that's just a dream.
