Size of the buffer

Hey!

I'm trying to capture and save images from my camera. I need a frame rate of 210 fps, so I acquire a sequence, and it works if I record for one second. Here's the problem: when I want to record for 10 seconds (2100 images), I get an error message saying there is not enough memory. Is there a way to compress the images? I think LabVIEW uses an internal buffer rather than my RAM, because I have 16 GB installed.

Basler pilot piA640-210gc

LabVIEW 2013 (32-bit)

Windows 7

With the Basler camera, the images are 640 x 480. 2100 images would be about 645 MB in grayscale, but about 2.6 GB in color. If you are running 32-bit LabVIEW, you are probably exceeding the available memory.

Your options would be to use 64-bit LabVIEW, to acquire and store the images as raw grayscale Bayer data and convert them later, or to reduce the number of images. I don't think you would have to compress the images as you acquire them.

Bruce
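The size estimate in the reply can be checked with a few lines of arithmetic. This is a minimal sketch in Python; the bytes-per-pixel values (1 for 8-bit grayscale or raw Bayer, 4 for 32-bit color) are assumptions chosen because they reproduce the figures quoted above, and the function name is purely illustrative.

```python
# Rough memory estimate for holding an image sequence in RAM.
# Assumption: 1 byte/pixel for 8-bit grayscale (or raw Bayer) and
# 4 bytes/pixel for color stored as 32-bit RGB.

def sequence_bytes(width, height, frames, bytes_per_pixel):
    """Return the number of bytes needed to hold all frames in memory."""
    return width * height * bytes_per_pixel * frames

FRAMES = 210 * 10  # 210 fps for 10 seconds = 2100 frames

gray_bytes = sequence_bytes(640, 480, FRAMES, 1)
color_bytes = sequence_bytes(640, 480, FRAMES, 4)

print(f"grayscale: {gray_bytes / 1e6:.0f} MB")
print(f"color:     {color_bytes / 1e9:.2f} GB")
```

The color figure alone is larger than what a 32-bit process can normally address, which is consistent with the advice to store raw Bayer data or move to 64-bit LabVIEW.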

Tags: NI Hardware

Similar Questions

-

What is the data buffer size of the PX1394E - 3 50

Hello

I bought this drive a few days ago and I'm a little confused about the size of the data buffer. The box says 16 MB, the Toshiba web page says 8 MB, etc. Does anybody know what the data buffer size of this model is?

Is there software that can query it? BTW, it is a great drive and fast; however, it is extremely noisy when seeking through large files.

Thank you

Francisco

Hello

I have looked around a bit and it seems that your drive has a 16 MB buffer; that is absolutely certain. To check the details of your computer, you can use the tool "SiSoft Sandra". You can download it here: http://www.sisoftware.net/index.html?dir=&location=downandbuy&langx=en&a=

After downloading, just install it and you will get all the information about your computer, such as the buffer size of your external hard drive.

Regards

-

Range slice must belong to the size of the buffer.

Hello

I'm pretty stumped on this problem. I have a graph with an array of chart collections. The arrays are filled at the same time. Sometimes, quite randomly, I get this exception. In this case, I had three plots displayed:

System.ArgumentOutOfRangeException was unhandled

HResult=-2146233086

Message=range slice must belong to the size of the buffer.

Parameter name: length

Actual value was 3.

Source=NationalInstruments.Common

ParamName=length

StackTrace:

at NationalInstruments.Restricted.Guard`1.Satisfies(Boolean condition, Func`4 exceptionCreator, String format, Object[] args)

at NationalInstruments.Restricted.NIValidation.IsInRange[T](Guard`1 guard, Boolean isInRange, String format, Object[] args)

at NationalInstruments.DataInfrastructure.Primitives.RawDataStore`1.Slice(Int32 startIndex, Int32 length)

at NationalInstruments.DataInfrastructure.Buffer`1.Slice(Int32 startIndex, Int32 length, Func`2 traitFilter)

at NationalInstruments.DataInfrastructure.Buffer`1.NationalInstruments.DataInfrastructure.IBuffer.Slice(Int32 startIndex, Int32 length)

at NationalInstruments.Controls.Internal.DefaultAdjusterStep.a(IDictionary`2 A_0)

at NationalInstruments.Controls.Internal.DefaultAdjusterStep.c(IDictionary`2 A_0)

at NationalInstruments.Controls.Internal.DefaultPipelineDataProcessor.a(DefaultDataItemDescription[] A_0)

at NationalInstruments.Controls.Internal.DefaultPipelineDataProcessor.a()

at System.Windows.Threading.ExceptionWrapper.InternalRealCall(Delegate callback, Object args, Int32 numArgs)

at MS.Internal.Threading.ExceptionFilterHelper.TryCatchWhen(Object source, Delegate method, Object args, Int32 numArgs, Delegate catchHandler)

at System.Windows.Threading.DispatcherOperation.InvokeImpl()

at System.Windows.Threading.DispatcherOperation.InvokeInSecurityContext(Object state)

at System.Threading.ExecutionContext.RunInternal(ExecutionContext executionContext, ContextCallback callback, Object state, Boolean preserveSyncCtx)

at System.Threading.ExecutionContext.Run(ExecutionContext executionContext, ContextCallback callback, Object state, Boolean preserveSyncCtx)

at System.Threading.ExecutionContext.Run(ExecutionContext executionContext, ContextCallback callback, Object state)

at System.Windows.Threading.DispatcherOperation.Invoke()

at System.Windows.Threading.Dispatcher.ProcessQueue()

at System.Windows.Threading.Dispatcher.WndProcHook(IntPtr hwnd, Int32 msg, IntPtr wParam, IntPtr lParam, Boolean& handled)

at MS.Win32.HwndWrapper.WndProc(IntPtr hwnd, Int32 msg, IntPtr wParam, IntPtr lParam, Boolean& handled)

at MS.Win32.HwndSubclass.DispatcherCallbackOperation(Object o)

at System.Windows.Threading.ExceptionWrapper.InternalRealCall(Delegate callback, Object args, Int32 numArgs)

at MS.Internal.Threading.ExceptionFilterHelper.TryCatchWhen(Object source, Delegate method, Object args, Int32 numArgs, Delegate catchHandler)

at System.Windows.Threading.Dispatcher.LegacyInvokeImpl(DispatcherPriority priority, TimeSpan timeout, Delegate method, Object args, Int32 numArgs)

at MS.Win32.HwndSubclass.SubclassWndProc(IntPtr hwnd, Int32 msg, IntPtr wParam, IntPtr lParam)

at MS.Win32.UnsafeNativeMethods.DispatchMessage(MSG& msg)

at System.Windows.Threading.Dispatcher.PushFrameImpl(DispatcherFrame frame)

at System.Windows.Threading.Dispatcher.PushFrame(DispatcherFrame frame)

at System.Windows.Threading.Dispatcher.Run()

at System.Windows.Application.RunDispatcher(Object ignore)

at System.Windows.Application.RunInternal(Window window)

at System.Windows.Application.Run(Window window)

at System.Windows.Application.Run()

at MyProject.FinalTest.Specific.GUI.App.Main() in c:\Sandbox\Specific2\MyProject.FinalTest.Specific.GUI\obj\Debug\App.g.cs:line 0

at System.AppDomain._nExecuteAssembly(RuntimeAssembly assembly, String[] args)

at System.AppDomain.ExecuteAssembly(String assemblyFile, Evidence assemblySecurity, String[] args)

at Microsoft.VisualStudio.HostingProcess.HostProc.RunUsersAssembly()

at System.Threading.ThreadHelper.ThreadStart_Context(Object state)

at System.Threading.ExecutionContext.RunInternal(ExecutionContext executionContext, ContextCallback callback, Object state, Boolean preserveSyncCtx)

at System.Threading.ExecutionContext.Run(ExecutionContext executionContext, ContextCallback callback, Object state, Boolean preserveSyncCtx)

at System.Threading.ExecutionContext.Run(ExecutionContext executionContext, ContextCallback callback, Object state)

at System.Threading.ThreadHelper.ThreadStart()

InnerException:

I don't even know where to start looking! Can someone please give me some advice? Thank you!

I found the problem!

I had a timer calling the method that updates the plots from a queue. If there are too many signals, emptying the queue can take longer than the interval until the next timer call, and two threads then try to access the graph in parallel. I now disable the timer while the queue is being read and re-enable it afterwards, and the problem looks solved!

Thanks for the idea of bouncing, that's the direction I had to start looking!
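The race described above (a timer tick firing while the previous tick is still draining the queue) can be illustrated outside of .NET. The sketch below is in Python and uses a different but related technique from the poster's fix: instead of stopping and restarting the timer, the callback is made non-reentrant with a lock, so an overlapping tick is skipped. All names (`plot_queue`, `drain_lock`, `on_timer_tick`, `plotted`) are hypothetical, and the list stands in for the real graph update.

```python
import queue
import threading

plot_queue = queue.Queue()      # hypothetical queue of pending plot updates
drain_lock = threading.Lock()   # ensures only one drain runs at a time
plotted = []                    # stands in for the real graph control

def on_timer_tick():
    """Timer callback: drain the queue unless a previous tick is still draining.

    Returns True if this tick drained the queue, False if it was skipped.
    """
    # Non-blocking acquire: if another tick holds the lock, skip this tick.
    if not drain_lock.acquire(blocking=False):
        return False
    try:
        while True:
            try:
                item = plot_queue.get_nowait()
            except queue.Empty:
                break
            plotted.append(item)  # placeholder for updating the plot
        return True
    finally:
        drain_lock.release()
```

Skipped ticks lose nothing, because the items stay in the queue and are picked up by the drain already in progress or by the next tick.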

-

How to increase the size of the buffer

Hi, I would like to ask how I can increase the size of the buffer.

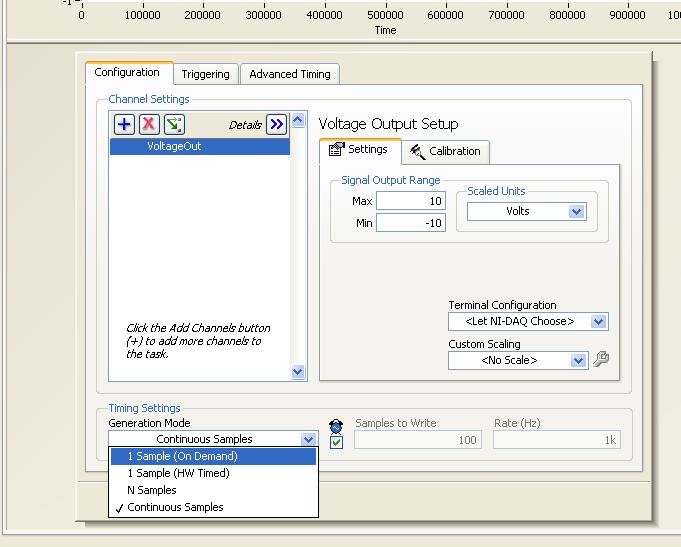

My problem is that the generation cannot be started because the buffer size is too small, given the way I use the DAQ Assistant.

Selected buffer size: 1

Minimum required buffer size: 2

So, how do I solve it?

Thank you

Chris

Hello Chris, can I ask what hardware you are using? Currently the DAQ Assistant in your code example is configured for continuous acquisition and thus expects a dynamic data type. This can be seen from the small red dot, known as a coercion dot, which is shown when LabVIEW must convert data to a different type. There are two options you can try to solve this problem.

(1) Change the acquisition type to "1 Sample (On Demand)" in the DAQ Assistant; it will then write your input value to the cDAQ with each iteration of the while loop.

(2) Change the input data type to a waveform.

Here is an article that you might find useful: http://zone.ni.com/devzone/cda/tut/p/id/5438

Hope this is useful

Philippe

-

I want to know the size of the buffer on the 5D Mk III...

... and/or the number of full-resolution raw files it can hold. I was wondering: if I only ever shoot bursts of six shots at most, does the card's write speed even matter?

It does not matter for 6 shots.

See: http://www.learn.usa.canon.com/resources/articles/2012/eos_understanding_burst_rates.htmlp

The conservative estimate of the buffer size (in RAW mode) is that the buffer will hold about 13 shots before the camera has to wait for data to be written out in order to clear enough buffer space for another shot. I actually tested it with my 5D III and found that, in practice, the number is somewhat higher: I got about 18 shots before it slowed down because of buffer limitations.

-

How to increase the size of the VeriStand UDP buffer?

Hello!

I am losing some data on a VeriStand graph and I want to increase the size of the UDP buffer. This is a new option in VeriStand 2011 that I read about at this link: http://zone.ni.com/reference/en-XX/help/372846C-01/veristand/whats_new/ (workspace improvements section).

Does anyone know how to do it?

Thanks in advance,

Miguel.

Do you mean how to do it from the VeriStand workspace? On your workspace graph, click on setup to open the graph configuration dialog box. In the configuration dialog box, you will see where you can enter a new value for the UDP buffer size.

-

How to set the size of the UDP interrupt buffer on XP 64-bit

I need to increase the size of the Windows incoming UDP interrupt buffer and also fine-tune Windows for optimal UDP performance.

(This is the buffer used by the Windows interrupt service routine to handle incoming UDP, not the application buffer set with setsockopt.)

We have a real-time system; the Windows computer is the only one connected to the chassis, which generates UDP datagrams at about 800 Mbps.

Hi _Doug Bell. Your Windows question is more complex than what is generally answered in the Microsoft Answers forums. It is better suited to the IT Pro audience on TechNet.

Please post your question in the Windows XP IT Pro forums.

-

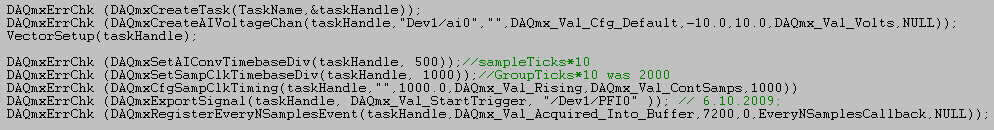

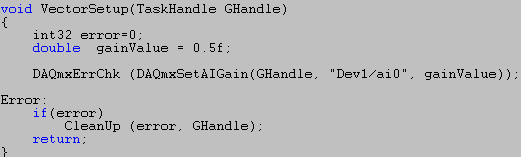

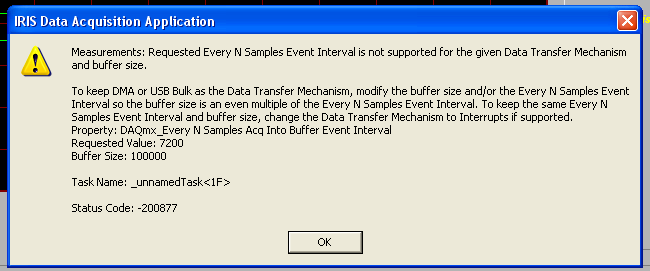

Question about the buffer size for DAQmxRegisterEveryNSamplesEvent

Hello, I'm testing the DAQmxRegisterEveryNSamplesEvent function, but it returns an error message. I set the sample size to 7200.

The error message is shown above.

Is there a function to set the buffer size? Where does the buffer size of 100,000 come from in this code?

OK, I figured it out. It comes from the DAQmx_Val_FiniteSamps setting of DAQmxCfgSampClkTiming. You must use the buffer size specified with the DAQmx_Val_FiniteSamps setting.

Thank you

-

DAQmx buffer size / write size

What is the preferred strategy for writing data to the output buffer for a DAQmx digital or analog output?

I ask because in the old pre-DAQmx days, you were supposed to stream data into the buffer in half-buffer lengths: you would write buffer_len samples on the first write, start the generation, then write half a buffer on each subsequent write, the idea being that you write one half of the buffer while the output card is reading the other half.

There doesn't seem to be anything indicating that this technique is still needed when you use DAQmx, but there are still lingering links that mention it.

What is the way NI recommends, and if it has changed, why?

Thank you

Hi ToeCutter,

Currently there is no need to think about buffers at such a low level for DAQmx digital or analog output.

DAQmx handles it for you as soon as you call the "DAQmx Write" function.

You can provide data to DAQmx Write in a variety of forms (from waveforms down to raw data).

It may be different depending on the application.

Please take a look at the manual for the DAQmx Write VI:

http://zone.NI.com/reference/en-XX/help/370469AA-01/lvdaqmx/mxwrite/

Kind regards

-

Error -50352 DAQmx: How can I determine the maximum size of the buffer?

I use a PCI-6259 card with the DAQmx library (version 9.0.2) and I get the error "-50352: memory cannot be allocated" whenever I try to make my recording too long. Here are a few limits that I found:

1 AI channel, 1 Msample/s: 7.71 sec max

1 AO channel, 1 Msample/s: 7.71 sec max

1 AI channel, 500 ksample/s: 15.4 sec max

2 AI channels, 500 ksample/s: 7.71 sec max

1 AI channel + 1 AO channel, 1 Msample/s: 5.6 sec max

So in most cases I can't acquire more than 7.71 Msamples in a single task, be it AI or AO. When mixing AI and AO tasks, this limit seems to decrease. This happens with a freshly started application, so I don't think it is a question of task cleanup.

Is this a physical limit of the device? (And if so, how can I determine this limit?)

My impression is that the device uses DMA to transfer samples to system memory, of which there is plenty: I can easily allocate more than 400 MB of contiguous memory in the same application.
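The single-task limits listed in the question can be cross-checked by multiplying channels, sample rate, and maximum duration. The short Python sketch below does only that arithmetic on the numbers given in the post; the tuple layout and function name are illustrative assumptions, and the mixed AI + AO case is left out because it spans two tasks.

```python
# (description, channels, sample rate in samples/s, max duration in s),
# taken from the single-task measurements listed in the question.
limits = [
    ("1 AI channel, 1 Msample/s", 1, 1_000_000, 7.71),
    ("1 AO channel, 1 Msample/s", 1, 1_000_000, 7.71),
    ("1 AI channel, 500 ksample/s", 1, 500_000, 15.4),
    ("2 AI channels, 500 ksample/s", 2, 500_000, 7.71),
]

def total_samples(channels, rate, seconds):
    """Total number of samples a task must buffer for the given duration."""
    return channels * rate * seconds

totals = [total_samples(c, r, s) for _, c, r, s in limits]

for (name, *_), total in zip(limits, totals):
    print(f"{name}: {total / 1e6:.2f} Msamples")
```

Every row lands at roughly 7.7 Msamples, which supports the poster's reading that the limit is a total sample count per task rather than a duration or a rate.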

Hi Luke,

How much RAM does your system have installed?

What operating system do you use? If it is a 32-bit operating system, are you using /3GB or IncreaseUserVA? These settings involve a trade-off: they reduce the size of the kernel virtual address space. This increases the probability that DAQmx will succeed in allocating the memory but then fail to map it into the kernel virtual address space.

Does your system have any devices with very large memory-mapped I/O ranges, such as a video card with a large amount of on-board memory? On a 32-bit operating system, this can reduce the size of the kernel virtual address space by a significant amount.

Brad

-

DAQ Assistant doesn't update the buffer size when the frequency changes

Hi all

I use the DAQ Assistant inside a loop to write a signal to an NI 9262 output module in a cDAQ-9174. I generate the signal with the Simulate Signal Express VI or with a simple loop using indexing. The problem is that when I change the frequency while keeping the same sampling rate, I have a different number of samples to write, and the cDAQ does not seem to update the buffer size, so my signal does not get written in full. The result is that the first sine wave is written nicely, but each one after that gradually gets cut off at the edges. I plotted the input signal that I generate, so I know it is generated with the right size, and the starting frequency, whatever it is, always works; it is the ones later in the loop that apparently have the wrong buffer size. I tried to reset the cDAQ by adding a different DAQ Assistant at the end of the outer loop with the stop input set to true, but that just gives me the error "resource not available".

Any ideas?

I'm using the LabVIEW Base Development System, version 12.0, 32-bit.

Thank you

Matt

Idea:

Get rid of the DAQ Assistant and use the DAQmx API. The DAQ Assistant is there to support limited, basic functionality and to get a quick-and-dirty experiment up and running. The DAQmx API offers more functionality, and DAQmx property nodes allow greater flexibility. The DAQ Assistant is just too limited for your needs. (You can't paint a masterpiece with crayons.)

-

I'm updating an old VI (LV 7.1) that uses Traditional DAQ to produce a waveform whose amplitude and duration can be modified by the user. I used AO Buffer Config.vi (Traditional DAQ) to force the buffer to be the same length as the requested waveform, so that no excess data fills extra buffer space and the requested waveform is not truncated by a short buffer. If I want to do this in DAQmx, is there a DAQmx VI for it, or should I just keep using the Traditional DAQ AO Buffer Config.vi? Thanks to the highly esteemed experts for any direction on how to do this.

Hi released,

You can explicitly set the buffer using the DAQmx Configure output Buffer.vi. Alternatively, you can leave DAQmx automatically configures your buffer based on the amount of data that you write before you begin the task. You cannot mix and match functions DAQmx and traditional DAQ on the same device, so using the traditional buffer Config.vi AO is not an option if you want to use DAQmx on your Board.

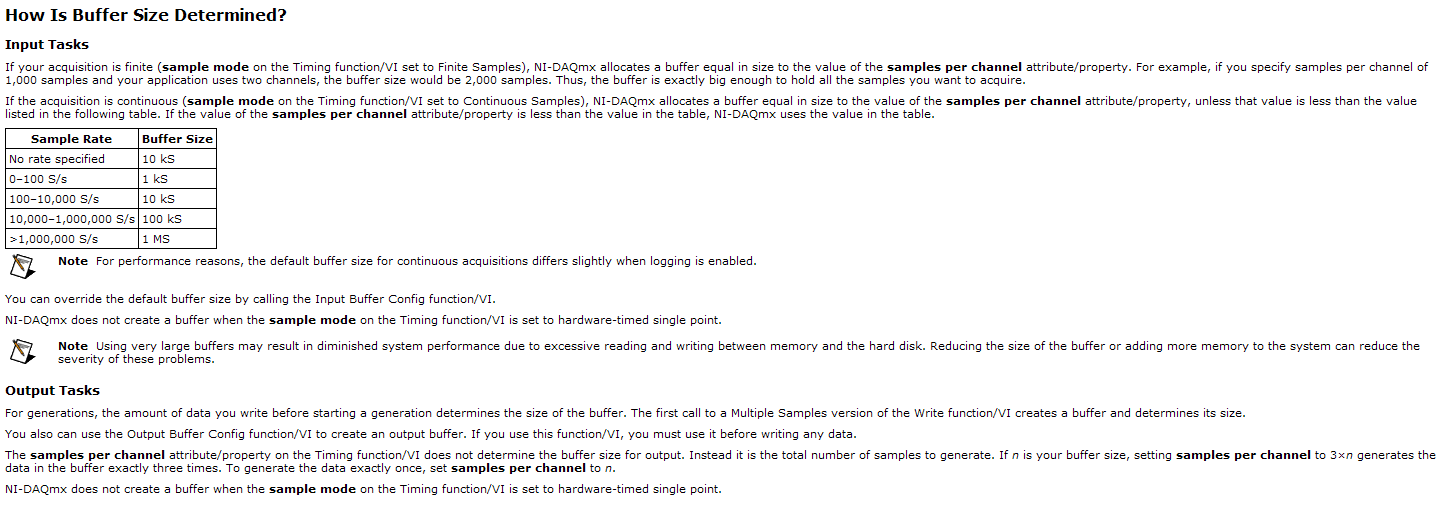

Here's a screenshot of the DAQmx help that explains how the size of the buffer is determined by DAQmx:

I hope this helps!

Best regards

John

-

What is the best buffer size for reading on BlackBerry?

Hi all

In my application, I need to read data from an input stream. I currently set the read buffer size to 1024. But I have seen that in some Android applications the buffer size is set to 8192 (8 KB). Is there a specific advantage if I increase the buffer size in my application to 8 KB?

Any expert opinion will be highly appreciated.

The first thing to note is that the method "isAvailable()", in my experience, does not work on OS 5.0 and earlier versions. It is fixed in OS 6 (at least in my tests).

Because isAvailable() was broken (and for other application reasons), the protocol I put in place for a socket connection is that each message is preceded by its length. So I read the length of the message from the socket connection, and then the actual data. This is done in one block; in other words, I read the entire message, regardless of its size. I recommend you do the same. The message must exist in full somewhere, so it makes no difference whether it's in memory managed by the socket connection or in memory managed by you.

Note also that up to OS 5.0, a read would return only once it had all the data needed to fill the buffer you supplied; in other words, it was blocking. From OS 6.0 on, a read can finish without giving you all the data.

In your case, you may be targeting OS 6.0 and later only, so you could use isAvailable(): create a buffer of that size and read it all. I don't see that it makes a difference whether the bytes are in memory managed by the socket or managed by you.

But in fact, I would say that the best approach is the one that keeps your processing simple. So, for example, if you know that the next message is 200 bytes, then read 200 bytes and process that message. Then read the next message.

You could spend a lot of time trying to manage your buffer to match the underlying socket buffers. I don't know exactly how the underlying BlackBerry socket code works, but it does not put data directly into your buffers. So let it manage its buffer size to optimize the network, and you manage your buffer size to optimize your processing. That will work best for everyone.
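The length-prefixed protocol described in the reply can be sketched as follows. The example is in Python rather than BlackBerry Java, and the details are assumptions for illustration only: a 4-byte big-endian length header and the helper names `read_exact`, `read_message`, and `write_message` do not come from the original post. The loop in `read_exact` covers the case mentioned above where a read returns before all requested bytes have arrived.

```python
import io
import struct

def read_exact(stream, n):
    """Read exactly n bytes, looping because one read may return fewer."""
    chunks = []
    remaining = n
    while remaining > 0:
        chunk = stream.read(remaining)
        if not chunk:
            raise EOFError("stream closed before the full message arrived")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)

def read_message(stream):
    """Read one message: a 4-byte big-endian length, then that many bytes."""
    (length,) = struct.unpack(">I", read_exact(stream, 4))
    return read_exact(stream, length)

def write_message(stream, payload):
    """Write the payload preceded by its 4-byte big-endian length."""
    stream.write(struct.pack(">I", len(payload)) + payload)

# Usage with an in-memory stream standing in for the socket connection.
demo = io.BytesIO()
write_message(demo, b"hello")
write_message(demo, b"x" * 200)
demo.seek(0)
first = read_message(demo)
second = read_message(demo)
```

With this framing the read buffer is sized by the message itself, so the choice between 1024 and 8192 bytes no longer affects correctness.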

-

Question about the size of the redo log buffer

Hello

I am an Oracle student and the book I use says that having a log buffer bigger than the default size is a bad idea.

The reason it gives for this is:

>

The problem is that when a COMMIT statement is issued, part of the commit processing involves writing the contents of the log buffer to the redo log files on disk. This write occurs in real time, and while it is in progress, the session that issued the COMMIT will hang.

>

I understand that if the redo log buffer is too large, memory is wasted and in some cases this could result in disk I/O.

What I'm not clear on is that the book makes it sound as if a larger log buffer would cause additional work or I/O. I would have thought that the amount of work or I/O would be substantially the same (if not identical), because writing the log buffer to the redo log files is driven by the commits issued and not by the size of the buffer itself (or how full it is).

Is the book's description misleading, or did I miss something important about having a larger than necessary log buffer?

Thank you for your help,

John.

Edited by: 440bx - 11gR2 on August 1st, 2010 09:05 - edited for formatting of the quote

A commit flushes everything in the redo log buffer to the redo log files.

The redo log buffer contains the changed data.

But it is not only a commit that flushes the redo log buffer to the redo log files.

LGWR writes whenever:

(1) a commit occurs

(2) the redo log buffer is 1/3 full

(3) every 3 seconds

The redo log file does not necessarily contain only committed data.

If there is no commit within 3 seconds, the redo log file is bound to contain uncommitted data.

Best,

Wissem

-

Every N Samples Transferred from Buffer event

Hi all

I have a question about the DAQmx Every N Samples Transferred from Buffer event. Looking at the description and the very limited DevZone articles and KBs on this one, I am inclined to believe that the name is perfectly descriptive of what its behavior should be (i.e., every N samples transferred from the PC buffer into the DAQmx FIFO, it should signal an event). However, when I put it into practice in an example, either I have something misconfigured (wouldn't be the first time) or I have a basic misunderstanding of the event itself or of how DAQmx buffers work with regeneration (certainly wouldn't be the first time).

In my example, I output 10k samples at a 1 kHz rate, so 10 seconds of data. I registered for the Every N Samples Transferred from Buffer event with a sampleInterval of 2000. I changed my data transfer request condition to "Onboard Memory Less than Full" in the hope that it would continuously fill my buffer with regenerated samples (from this link). My expectation was that after 2000 samples had been output by the device (i.e., after 2 seconds) there would be 2000 fewer items in the DMA FIFO, so 2000 samples would be transferred from the PC buffer to the DMA FIFO and the event would fire. In practice it does... not do that. I have a counter on the event that shows it fires 752 times almost immediately, then fires regularly after that in bursts of 4 or 5. I am at a loss.

Could someone please shed some light on this for me, both on whether I misunderstand what I'm supposed to be seeing and on why I see what I see now?

LV 2013 (32 bit)

DAQmx 9.8.0f3

Network cDAQ chassis: 9184

cDAQ module: 9264

Thank you

There is a large (unspecced, but on the order of several MB) buffer on the 9184. It has come up several times on the forum; here is a link to another discussion about it. Quoting myself:

Unfortunately, I don't know the size of this buffer on the 9184 off the top of my head and I don't think it's in the specifications (the buffer is also shared between multiple tasks). This is not the same as the 127-sample-per-slot AO buffer which is present on all cDAQ chassis; the Ethernet and wireless chassis controllers contain an additional buffer which, as far as I can tell, is not really spelled out in the published specifications (apparently it's 12 MB on the wireless cDAQ chassis).

The large number of events that fire when you start the task is due to the buffer being filled at startup (as long as the on-board buffer is not nearly full, the driver will send more data; you end up with several periods of your output waveform in the on-board buffer memory). So in your case, 752 events * 2000 samples/event * 2 bytes per sample = ~3 MB of buffer memory allocated to your AO task. I guess that sounds reasonable (again, a spec or KB would really help... I don't know how the size of the buffer is selected).

The grouping of events is due to data being sent in packets to improve efficiency, because there is overhead with each transfer.

The large buffer and the batching of data are optimizations used by NI to improve continuous throughput, but they can have some strange side effects, as you saw. I might have a few suggestions if you describe what it is you need to do.

Best regards
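The buffer estimate in the reply is plain multiplication, and it can also be turned into a count of waveform periods held on the chassis. The Python sketch below uses only numbers from the thread (752 events, a sampleInterval of 2000, 2 bytes per sample as assumed in the reply, and the 10k-sample waveform); the variable names are illustrative.

```python
events_at_start = 752       # events that fired almost immediately
samples_per_event = 2000    # sampleInterval registered for the event
bytes_per_sample = 2        # assumption taken from the reply
waveform_samples = 10_000   # length of the regenerated output waveform

buffered_samples = events_at_start * samples_per_event
buffered_bytes = buffered_samples * bytes_per_sample
periods_buffered = buffered_samples / waveform_samples

print(f"{buffered_bytes} bytes (about {buffered_bytes / 1e6:.2f} MB)")
print(f"{periods_buffered:.1f} waveform periods buffered at startup")
```

At a 1 kHz output rate that startup burst represents roughly 25 minutes of queued output, which is one reason the later events arrive in small bursts rather than once every 2 seconds.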
