Tab 2 A10 input voltage?

I wonder whether the Tab 2 A10 will work abroad, where the mains voltage is 220 V instead of 110 V.

Any help would be appreciated. Thank you!

You will be fine: the C-P35 AC adapter is dual-voltage, with a 100-240 V input range.

Output: 5.2 V, 2 A.

Tags: Lenovo Tablets

Similar Questions

-

Q150 input voltage? Help, please

I have written twice and called Sales, and still no one knows the Q150's input voltage...

Can someone point me to a detailed specification list for the Q150 that includes its input voltage? Or could someone who owns a Q150 check for me?

Thank you

Hi ciguli and welcome to the Lenovo user community!

According to the hardware manual, it is 100-240 V AC:

http://consumersupport.Lenovo.com/us/en/Userguide/Guide_list.aspx?CategoryID=741912

-

Analog input voltage with the DAQ Assistant

Does anyone know why there is an error when you use two DAQ Assistants (in a while loop at the same time) to read analog input voltages?

There is no problem if you use a single DAQ Assistant for a simple analog input voltage reading.

If you get an error, wouldn't it be useful to tell us what it was? Then we might be able to explain it.

I'm guessing that you get error -50103. If you search the forums for '50103' (leave out the minus sign), you will find the answer; this question has been asked thousands of times before.

-

Configure the input voltage range

I am using an NI USB-6221 card with SignalExpress 3.0.

The spec sheet for the 6221 lists four analog input ranges: ±10 V, ±5 V, ±1 V, and ±0.2 V.

My question: how do I specify which of these input voltage ranges the card uses?

The closest thing I can find in SignalExpress is the 'DAQmx Acquire' step, on the 'Configuration' tab of 'Step Setup'. There is a group called 'Settings' with fields for maximum and minimum signal input, but the context-sensitive help says these expect the channel's values after scaling.

I also looked in MAX, but I see no way to configure one of these ranges directly.

Can someone explain how this works?

Mike

You are looking at the right thing. When you specify the min and max, the DAQmx driver automatically selects the best range for that signal. For example, suppose a board supports ±0.1 V, ±1 V, ±5 V, and ±10 V ranges and has 12-bit resolution. If you enter a min/max of ±2 V, the board will be set to the ±5 V range. Your resolution is then 10 V (the total voltage span) divided by 4096 (2^12), or about 2.44 mV.
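A minimal Python sketch of the range-selection rule and resolution arithmetic described above. It assumes the reply's example board (±0.1, ±1, ±5, ±10 V, 12 bits), not the USB-6221 itself, and `select_range` and `resolution` are hypothetical helper names; the real driver's selection logic may differ in detail.

```python
# Sketch: pick the smallest supported range that covers the requested min/max,
# then compute the code width (resolution). Values are the reply's example
# board (hypothetical here), not the USB-6221, which is a 16-bit device.

EXAMPLE_RANGES_V = (0.1, 1.0, 5.0, 10.0)  # symmetric ranges, +/- volts
EXAMPLE_BITS = 12


def select_range(v_min: float, v_max: float,
                 ranges=EXAMPLE_RANGES_V) -> float:
    """Return the half-span of the smallest +/- range covering v_min..v_max."""
    needed = max(abs(v_min), abs(v_max))
    for half_span in sorted(ranges):
        if half_span >= needed:
            return half_span
    raise ValueError(f"no supported range covers +/-{needed} V")


def resolution(half_span: float, bits: int = EXAMPLE_BITS) -> float:
    """Volts per code: total span (2 * half_span) divided by 2**bits."""
    return (2.0 * half_span) / (2 ** bits)


if __name__ == "__main__":
    r = select_range(-2.0, 2.0)
    print(f"selected range: +/-{r} V")                  # +/-5.0 V
    print(f"resolution: {resolution(r) * 1e3:.3f} mV")  # 2.441 mV
```

Picking the smallest covering range is what maximizes resolution: a ±2 V request on the ±10 V range would waste half of the available codes compared with ±5 V.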

If you were using LabVIEW, you could get the range actually selected from the DAQmx channel properties AI.Rng.High and AI.Rng.Low. If you specify a min and max smaller than the real signal's amplitude, and the signal exceeds the range actually used, your input signal will be clipped. DAQmx does not autoscale. Take a look at page 4-2 of the M Series manual.

-

PCI-6225 residual voltage on an analog input channel

Hello, I'm new to the forum and just starting to work hands-on with NI hardware and software.

I use MAX (differential setting) to monitor an analog input channel (ai71) on a PCI-6225 card with an SCB-68. The voltage displayed in MAX is sometimes a steady 10.6 V and sometimes intermittent noise between 0 and 10.6 V, or a roughly square waveform. I have watched the noise and waves decay to zero. The voltage displayed in MAX changes (seemingly at random) when a voltmeter is used to measure the voltage between pins 1 and 35 (with no signal wire connected).

When an external square wave (2.7 V DC) is applied to pins 1 and 35 on the SCB-68, the value in MAX is dominated by the 0-10.6 V 'noise', while a voltmeter across pins 1 and 35 simultaneously shows the square wave.

Any suggestions? Thank you in advance.

If you dig into the DAQ specifications, you'll see that input voltages must be referenced to AI ground, or you may damage the board. Have a good read of this article: Field Wiring and Noise Considerations for Analog Signals. Since you are probably dealing with a floating (ungrounded) differential signal, what you want to do is add a resistor from each side of the signal to ground. The article recommends resistors of 10 kΩ to 100 kΩ.

-

DAQ voltage inputs affecting each other and the device being recorded

Hello world!

I am using ELVIS II+ and LabVIEW 2012 to record the voltage of a device with a resistive sensor (see Voltage with ELVIS II.jpg for the VI). The device (Device + ELVIS II Schematic.jpg) measures the voltage across the resistive sensor and is controlled by an MSP430. If the MSP430 decides that the change in sensor voltage is above a threshold, an LED turns on. The device also has a motor, and the LED is normally off while the motor is running. I found that recording with the DAQ affects the behavior of the device, because the DAQ hardware and the device are connected. When the DAQ is NOT recording, the raw and analog sensor input voltages are constant (and the LED stays off) while the motor is on (Figures.doc, A). When the DAQ IS recording, the motor power seems to distort the analog input / raw sensor voltage, causing the LED to light up (Figures.doc, B).

Attempts that did not fix the issue (LED on while the motor runs):

1. Recording with a new ELVIS II+ DAQ

2. Separating the raw sensor and motor inputs on the DAQ

Attempts that fixed the problem (LED stays off while the motor runs):

1. Grounding the raw sensor and/or motor input

2. Separating the raw sensor and motor channels in the DAQ Assistant (Figures.doc, C shows the motor and raw sensor channels separated by A_VCC; Figures.doc, D shows them side by side). I don't know why the order of the channels in the DAQ Assistant would affect the behavior of the sensor signal.

Any advice on this problem, ways to troubleshoot it, or potential long-term solutions would be greatly appreciated!

Thank you!

If your device does not have simultaneous sampling, each channel is multiplexed into a single ADC. There is an input capacitance inside your device, which gives it a 'memory'. If the voltage stored on that capacitor from one channel has not discharged before the next channel is sampled, the second sample will "remember" the first one, which gives you really weird problems. The higher the source impedance on a channel, the more likely it is to 'remember' the signal from the previous channel. That's why the channel order matters, with the higher source impedance channel going first.
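To make the 'memory' effect concrete, here is a simplified first-order RC sketch of a multiplexed input: the input capacitance starts at the previous channel's voltage and settles toward the new source through the source impedance. The 100 pF capacitance and 4 µs settling time are hypothetical values chosen for illustration, not specifications of the ELVIS II+ or any NI device, and a real front end has additional resistances in the path.

```python
import math

# Simplified first-order model of a multiplexed input: the input capacitance
# holds the previous channel's voltage and charges toward the new source
# through the source impedance. C and t_settle below are hypothetical.

C_INPUT_F = 100e-12   # hypothetical input capacitance, 100 pF
T_SETTLE_S = 4e-6     # hypothetical time between mux switch and conversion


def sampled_voltage(v_source: float, v_previous: float,
                    r_source_ohm: float,
                    c_input_f: float = C_INPUT_F,
                    t_settle_s: float = T_SETTLE_S) -> float:
    """Capacitor voltage at conversion time, starting from v_previous.

    r_source_ohm must be > 0.
    """
    tau = r_source_ohm * c_input_f
    return v_source + (v_previous - v_source) * math.exp(-t_settle_s / tau)


if __name__ == "__main__":
    # Previous channel sat at 5 V; this channel's true value is 0 V.
    for r in (1e3, 100e3):
        v = sampled_voltage(0.0, 5.0, r)
        print(f"R_source = {r / 1e3:.0f} kOhm -> reads {v:.4f} V")
```

With these assumed values the 1 kΩ source settles completely, while the 100 kΩ source still carries most of the previous channel's 5 V when it is converted, which is the ghosting the reply describes.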

-

Cut-off voltage of the 6008 analog inputs

I am using the analog inputs of an NI USB-6008. The specification says they have a 144 kΩ input impedance, but it does not say what the cut-off voltage is. If you leave an input disconnected and measure the voltage, you get 1.4 V. So I guess that is the cut-off voltage, but it is not specified.

Does anyone agree that these AI inputs are terminated by 144 kΩ to 1.4 V? Is this in the documentation somewhere?
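The poster's hypothesis can be explored with a simple Thevenin model: if each input really looks like 144 kΩ to 1.4 V, a source with output impedance R_s forms a voltage divider with it. This is a sketch under that assumption only (the input circuit figure in the user guide is the authoritative description), and `pin_voltage` is a hypothetical name.

```python
import math

# Thevenin sketch of the poster's hypothesis: each AI pin looks like
# R_IN to V_BIAS. Both values come from the post; whether the real input
# circuit behaves this way is an assumption.

R_IN_OHM = 144e3
V_BIAS = 1.4


def pin_voltage(v_signal: float, r_source_ohm: float,
                r_in_ohm: float = R_IN_OHM, v_bias: float = V_BIAS) -> float:
    """Voltage at the pin when a source (v_signal behind r_source_ohm) drives it."""
    # The pin sits on a divider between v_signal and v_bias.
    return v_bias + (v_signal - v_bias) * r_in_ohm / (r_in_ohm + r_source_ohm)


if __name__ == "__main__":
    print(pin_voltage(5.0, 0.0))        # ideal source: reads v_signal
    print(pin_voltage(5.0, 10e3))       # 10 kOhm source: pulled toward 1.4 V
    print(pin_voltage(0.0, math.inf))   # open input: reads the 1.4 V bias
```

Under this model, an open input reads exactly the bias voltage, which matches the 1.4 V the poster measured, and any source impedance pulls the reading toward 1.4 V.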

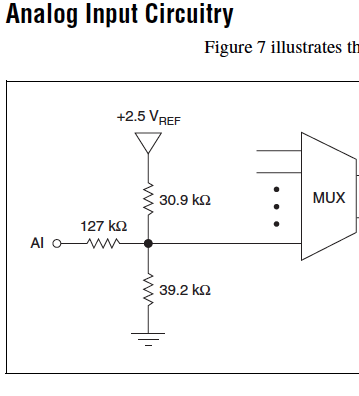

Figure 7 on page 16 of the NI USB-6008/6009 User Guide and Specifications shows this unit's unusual input circuit.

Lynn

-

Using the USB-6009 counter and an analog input voltage at the same time

Hello

Currently, I'm reading two voltage channels with the USB-6009. One of the channels is the output of a digital encoder, and it would be much easier to connect it directly to the PFI0 input configured as a counter. The problem I'm facing is that I can't use the DAQ Assistant to read an analog voltage channel and the digital counter channel at the same time. Once I set up the DAQ Assistant to read analog voltage input, I can't add counter inputs. And when I set up the DAQ Assistant to use PFI0 as a counter, I can't add analog voltage inputs.

I wonder whether it is possible to solve this problem using the lower-level DAQmx VIs? Another solution would be to read both channels as analog input voltages and use MATLAB to process the resulting data, since I was not able to get pulse counting to work simultaneously with the acquisition in LabVIEW.
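For the fallback mentioned above (sampling the encoder output as an analog channel and counting pulses afterward), here is a sketch of the post-processing step in Python rather than MATLAB. It counts rising edges with two thresholds (hysteresis) so noise around a single threshold is not counted twice. The thresholds and sample data are hypothetical, and the sample rate has to be high enough to catch both the high and the low phase of every pulse.

```python
from typing import Optional, Sequence


def count_rising_edges(samples: Sequence[float], low: float, high: float) -> int:
    """Count low-to-high transitions, using two thresholds for hysteresis."""
    if low >= high:
        raise ValueError("low threshold must be below high threshold")
    count = 0
    state: Optional[bool] = None  # None until the first threshold crossing
    for v in samples:
        if v >= high:
            if state is False:      # was low, now high: one rising edge
                count += 1
            state = True
        elif v <= low:
            state = False
        # values between low and high leave the state unchanged
    return count


if __name__ == "__main__":
    # Hypothetical 0-5 V encoder samples with some noise around mid-scale.
    data = [0.1, 0.2, 4.9, 5.0, 2.6, 4.8, 0.3, 2.4, 0.1, 4.9]
    print(count_rising_edges(data, low=1.0, high=4.0))  # prints 2
```

A signal that starts high is not counted as an edge, since no low-to-high transition was observed.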

Hope you guys can help me out.

Thanks in advance.

Using a single DAQ Assistant for both is incorrect. You need one to acquire the analog inputs and one for the counter.

-

This happens when I have several tabs open. When I switch to another tab, a tiled window appears, showing the page of one tab next to the other tab. It has become quite annoying.

Start Firefox in Safe Mode to check whether one of your extensions (Firefox/Tools > Add-ons > Extensions) or hardware acceleration is causing the problem (switch to the DEFAULT theme: Firefox/Tools > Add-ons > Appearance).

- Do NOT click the Reset button on the Safe Mode start window.

-

Digital input voltage measurement

Hello.

I am developing software for a test bench.

(The hardware was developed in the past by someone else, and I have to use this hardware now.)

I have to read some digital data with an NI USB-6501.

I measured the voltage levels on the pins and found that for logic 1 I get about 4.7 V, and for logic 0 about 3.5 V (which, after conversion to digital, always reads as 1).

Do you have an idea how I could fix this?

I thought that if I could somehow read the analog voltage value on the pin, the problem would be solved, but I don't know whether that is possible.

Thanks in advance.

Katona

Hello

The 6501's low-level input voltage (logic false) is at most 0.8 V, and its high-level input voltage is in the range of 2.0 V to 5.8 V. You need an electrical circuit, or a device with an analog input, to solve this problem.
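A short sketch of why both levels read as 1: with the thresholds quoted in the reply, both 3.5 V and 4.7 V lie above the 2.0 V high-level threshold. If the pin is read with an analog input instead, a software threshold between the two levels separates them. The 4.1 V threshold is an assumption for this particular hardware, and the function names are hypothetical.

```python
from typing import Optional

# Input thresholds as quoted in the reply for the USB-6501.
V_IL_MAX = 0.8   # at or below this: logic 0
V_IH_MIN = 2.0   # at or above this: logic 1

# Hypothetical software threshold between the measured 3.5 V and 4.7 V levels.
ANALOG_THRESHOLD_V = 4.1


def digital_read(v: float) -> Optional[int]:
    """What a digital input with these thresholds reports; None = undefined."""
    if v <= V_IL_MAX:
        return 0
    if v >= V_IH_MIN:
        return 1
    return None  # between the thresholds the state is not guaranteed


def analog_read_as_bit(v: float, threshold: float = ANALOG_THRESHOLD_V) -> int:
    """Classify an analog-input sample against a custom threshold."""
    return 1 if v >= threshold else 0


if __name__ == "__main__":
    for level in (4.7, 3.5):
        print(level, digital_read(level), analog_read_as_bit(level))
```

The same idea in hardware is a comparator or Schmitt trigger with its switching point placed between 3.5 V and 4.7 V.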

What do you think of a Schmitt trigger?

http://en.Wikipedia.org/wiki/Schmitt_trigger

Regards

Rüdiger

-

How do I capture the full-bridge output voltage with SignalExpress and the NI 9219?

Hello. I'm trying to set up and calibrate a load cell with a full-bridge strain gauge configuration. I'm using an NI 9219 module in a cDAQ chassis. Is it possible to capture the actual output voltage? SignalExpress gives me a strain value, but I really need to know the output voltage. Where should I look? I only need two channels for the full bridge. I think I could connect wires to the two remaining channels and read the strain gauges' output voltage through them as 9219 voltage inputs, but I suspect SignalExpress could give me the excitation and output voltages directly. Any input would be appreciated. Thank you! P.S. I only use this equipment occasionally and am not very familiar with it, so please keep things simple for me. Thanks again.

Hi jgh@AET,

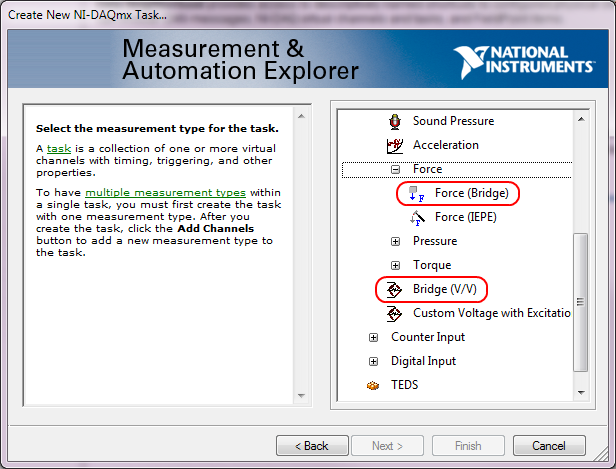

The NI 9219 measures the ratio of voltage full bridge in hardware sensors, allowing any variation of the voltage to cancel. You won't be able to measure the output voltage of the sensor regardless of the voltage without additional channels, but you can measure the ratio of raw tension using the type of Bridge (V/V) . You can also use the type of measure of Force (bridge) measurement of load cell with engineering units (N, lb, kgf, no strain).

This screenshot shows where the two Bridge (V/V) and Force (bridge) can be selected in the DAQ Assistant:

These measurement types were added around DAQmx 9.1, so if you have an older version of NI-DAQmx, your DAQ Assistant may not include them. The latest version of NI-DAQmx is currently 9.4. Before NI-DAQmx 9.1, the recommended approach for measuring load cells was to use the Custom Voltage with Excitation measurement type and a custom scale. However, Custom Voltage with Excitation does not support bridge calibration in the DAQ Assistant.

Brad
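A sketch of the arithmetic behind a ratiometric reading. Turning the V/V ratio back into an output voltage requires knowing the excitation voltage, which is why the reply says extra channels are needed to measure it. The 2 mV/V rated output, 100 kg capacity, and 2.5 V excitation are hypothetical datasheet values, not NI 9219 specifications, and a linear load cell is assumed.

```python
# Ratiometric bridge arithmetic. Rated output, capacity, and excitation are
# hypothetical datasheet values, not NI 9219 specifications.

RATED_OUTPUT_MV_PER_V = 2.0   # hypothetical load-cell sensitivity at full scale
RATED_CAPACITY_KG = 100.0     # hypothetical full-scale capacity
EXCITATION_V = 2.5            # hypothetical; must be known or measured separately


def bridge_output_v(ratio_v_per_v: float,
                    excitation_v: float = EXCITATION_V) -> float:
    """Recover the bridge output voltage from the V/V ratio and the excitation."""
    return ratio_v_per_v * excitation_v


def load_kg(ratio_v_per_v: float, zero_ratio_v_per_v: float = 0.0,
            rated_output_mv_per_v: float = RATED_OUTPUT_MV_PER_V,
            rated_capacity: float = RATED_CAPACITY_KG) -> float:
    """Linear scaling: the rated output (mV/V) corresponds to full capacity."""
    rated_ratio = rated_output_mv_per_v / 1000.0   # mV/V -> V/V
    return (ratio_v_per_v - zero_ratio_v_per_v) / rated_ratio * rated_capacity


if __name__ == "__main__":
    reading = 0.001  # 1 mV/V, e.g. from a Bridge (V/V) channel
    print(f"output: {bridge_output_v(reading) * 1e3:.2f} mV")  # 2.50 mV
    print(f"load:   {load_kg(reading):.1f} kg")                 # 50.0 kg
```

Working in V/V keeps the load calculation independent of the excitation, which is the point of the 9219's ratiometric measurement.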

-

PXI-6071E offset drift on the analog inputs

Hi, I have three PXI-6071E cards in a PXI-1042 chassis controlled by a Windows XP computer. The 6071Es are connected to SCB-100 breakout boxes, which are wired with twisted pair to a panel of female panel-mount BNC connectors.

I noticed that all of my analog inputs drift between -10 V and +10 V when they are not connected to anything forcing them to a particular voltage. This has always happened. We also see some crosstalk between channels. For example, if I open a test panel in Measurement & Automation Explorer, I can watch the voltage on the floating inputs drift through their full range, and changing the signals on nearby channels shows up on the channel I am watching.

Is this just standard, expected behavior? Is there anything more I could do to minimize this drift and crosstalk? I am trying to reduce noise in my system, so I figure optimizing my DAQ couldn't hurt.

Thank you

With nothing plugged into a high-impedance input, the drifting you see is quite normal. The front-end circuitry builds up a charge, and the crosstalk is probably due to the input multiplexer (I did not check, but I think the 6071 has one) transferring that charge to the other channels as they are scanned.

Search the forum for 'ghosting'; you will find related discussions.

-AK2DM

-

Scaling the thermocouple voltage before voltage-to-temperature conversion

Hello

I have a USB-6211 data acquisition module with LabVIEW 8.6, and I want to measure temperatures using a type R thermocouple. Because this thermocouple's voltages are very small (about 0-20 mV), I'll use an external amplifier (50x gain) to bring the signal to 0-1 V, and then read the inputs with the DAQ module.

At that point, I can divide the signal by my 50x gain and use the inverse polynomials for type R thermocouples (published online: http://www.omega.com/temperature/z/pdf/z198-201.pdf) to convert the voltage into a temperature.
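A sketch of the manual route described above: undo the 50x gain, then evaluate an inverse polynomial T = c0 + c1·E + c2·E² + … (E in mV) with Horner's method. The type R coefficients are deliberately not reproduced here; take them, and their valid EMF range, from the published table. The coefficient list in the usage lines is a placeholder, not type R data, and cold-junction compensation is not handled (in the usual approach, the cold-junction EMF is added to the measured EMF before inverting).

```python
from typing import Sequence

AMPLIFIER_GAIN = 50.0  # external gain from the post


def thermocouple_emf_mv(v_measured: float, gain: float = AMPLIFIER_GAIN) -> float:
    """Undo the external amplifier: measured volts -> thermocouple EMF in mV."""
    return v_measured / gain * 1000.0


def inverse_polynomial(emf_mv: float, coeffs: Sequence[float]) -> float:
    """Evaluate c0 + c1*E + c2*E**2 + ... using Horner's method."""
    result = 0.0
    for c in reversed(coeffs):
        result = result * emf_mv + c
    return result


if __name__ == "__main__":
    emf = thermocouple_emf_mv(0.5)  # 0.5 V after 50x gain -> 10 mV EMF
    # PLACEHOLDER coefficients for illustration only; NOT type R data.
    placeholder = [0.0, 100.0]
    print(emf, inverse_polynomial(emf, placeholder))
```

Horner's method avoids computing powers explicitly and is the usual way to evaluate these high-order polynomials.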

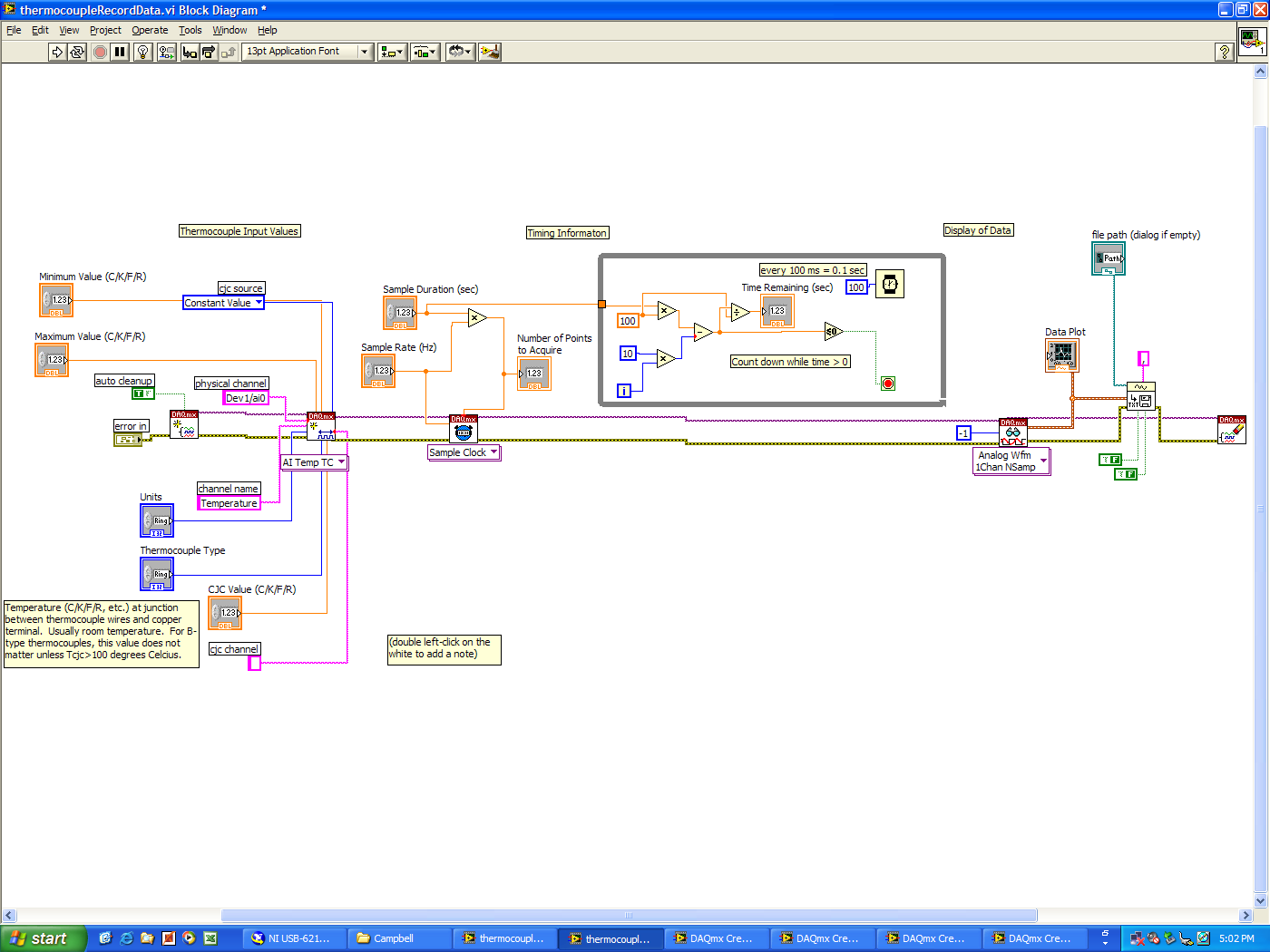

However, I noticed that Labview has built in thermocouple modules. There is a stage of creation of task, and the task is sent to a module that allows me to specify the thermocouple type, value CJC etc, then a sample clock, then a sampler with analog wafeform (see below). I would use this structure, rather than coding in polynomials. However, I can't find a way to divide my my (x 50) gain input voltage in order to give the thermocouple module reasonable voltage values.

Is there a simple way to do this, or am I better off just coding the polynomials?

Thank you!

Amplify the signal, read the voltage, then divide by 50.

No need to code the polynomials. Search the palettes for the Convert Thermocouple Reading.vi subVI.

-

How can I programmatically change the voltage range parameters of a DAQ Assistant?

Hello

First post here.

I need to be able to change the voltage range properties of a DAQmx DAQ Assistant based on user input. My hardware, an SCXI-1102C module, does not allow this property to change on a running task, so I would either need to set the analog input voltage range before the DAQ Assistant runs, or stop the DAQ Assistant immediately after it starts, set the values, and then restart it.

I don't know how to change the task beforehand, because the DAQ Assistant creates the task when it runs, and there is no task before that.

In the attached image, I have a case structure configured to run only on loop iteration 0. I take the task from the DAQ Assistant, pass it to the DAQmx Stop Task VI, set the property, and then pass the task to the DAQmx Start Task VI. I can watch it run with highlight execution on, and everything seems to work, but on the second (and every subsequent) iteration of the loop, when I read AI.Max, it seems that the DAQ Assistant has reset it to 5 V. Can you see what's going wrong here?

BTW, this is a continuous acquisition, and the code doesn't produce any error messages when it runs.

I found a similar question someone posted here in 2006, but it was specifically about an API (VB, I think) rather than a real G solution.

Attached are the actual VI in question and a PNG of the block diagram.

Thank you!

Ruby K

First of all, if you want to go beyond the basics with DAQ hardware, you have to stop using the DAQ Assistant and use the lower-level DAQmx VIs. There are hundreds of examples in the Example Finder. You can even right-click the DAQ Assistant and select Open Front Panel. This converts it into a subVI that you can open to see what is happening behind the scenes. Do it. I think you will find that the DAQ task is recreated on each call (although I'm not 100 percent sure how the parameters are set or maintained in each section of that subVI).

The second problem is that you have a race condition in your iteration-0 case. Those two DAQmx property nodes run at the same time. So when you read AI.Max, the read can happen before or after AI.Max is set inside your case structure.

Thirdly, make sure you wire up your error wires.

-

I am using a cRIO-9004. I noticed it has a chassis temperature option. I was wondering whether there is a way to read the input voltage. My unit is battery powered, and I want to know my battery voltage level. I'm building a kind of voltage drop detector.

I am a LabVIEW novice; I'm sorry if I missed something obvious.

Thank you.

You haven't missed anything. Currently, there is no chassis I/O set up to read the voltage of your power supply. You would have to use an analog input module to read the voltage yourself.
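A minimal sketch of the voltage drop detector built on such an analog input reading. It assumes the battery is fed to the module through a resistive divider (needed only if the battery voltage exceeds the module's input range); the divider values, 11 V threshold, and window size are hypothetical. A moving average keeps brief dips from tripping the alarm.

```python
from collections import deque

# Hypothetical divider and alarm settings for illustration.
R_TOP_OHM = 10e3
R_BOTTOM_OHM = 10e3
LOW_BATTERY_V = 11.0


def battery_voltage(v_measured: float, r_top: float = R_TOP_OHM,
                    r_bottom: float = R_BOTTOM_OHM) -> float:
    """Scale the divider-tap reading back to the battery voltage."""
    return v_measured * (r_top + r_bottom) / r_bottom


class DropDetector:
    """Flags a voltage drop when the moving average falls below a threshold."""

    def __init__(self, threshold_v: float = LOW_BATTERY_V, window: int = 8):
        self.threshold_v = threshold_v
        self.samples = deque(maxlen=window)  # keeps only the last `window` values

    def update(self, v_battery: float) -> bool:
        self.samples.append(v_battery)
        average = sum(self.samples) / len(self.samples)
        return average < self.threshold_v


if __name__ == "__main__":
    detector = DropDetector(window=4)
    for tap in (6.2, 6.1, 6.1, 4.5, 4.5, 4.5):  # hypothetical divider readings
        v = battery_voltage(tap)
        print(f"{v:.1f} V  drop={detector.update(v)}")
```

A larger window rejects more noise but reports a real drop later; the right trade-off depends on how fast the battery can sag in your application.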

Kind regards

Maybe you are looking for

-

I do not see the Firefox home page when I open Firefox

When I open Firefox, I no longer get the default Firefox Start Page since the update to Firefox 20.0. I used the option to restore the default Firefox preferences, but it doesn't work.

-

How can I stop Firefox from starting automatically when I restart my computer?

When I restart my computer (MacBook Pro), Firefox (18.0.2) automatically starts up.

-

FCPX: live capture from a remote camera?

This is a scientific experiment, and a somewhat dangerous one. I have a Sony FS700 camera trained on the experiment. I want to run a USB cable from the camera to an enclosed control booth about 20 feet away, and control the camera from a MacBook Pro.

-

HP TouchSmart 320 desktop: I need help removing the back panel of my HP TouchSmart 320.

After removing the 2 screws on the lower back, it still seems glued to the front. Thanks in advance for your help.

-

My Microsoft wireless mouse has a sluggish click response

When I click my mouse, the action doesn't happen right away. I usually have to click very hard or several times before it works. I tried some other wireless mice that I have, and the response is the same.