VI memory usage: very large data

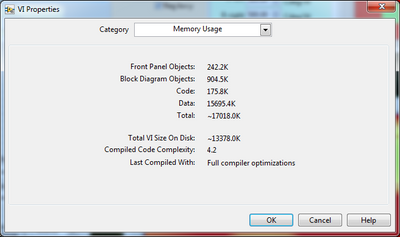

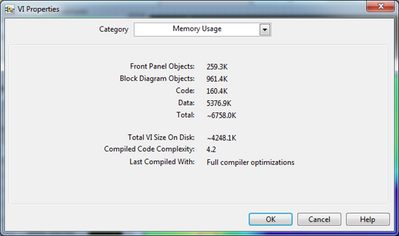

I'm restructuring an old project to use more up-to-date structures. The old code used parallel loops to check whether the values of the controls had changed. My new code uses event structures instead. Comparing the two memory usage statistics, I have slightly reduced the front panel and block diagram object memory, but the new code has more than 10 MB more 'Data'. I checked all my arrays and other data, and none of them is very large. Any ideas on what is using all this memory? Thank you!

This is the memory usage of the new code.

And this is the old one.

Unfortunately, the code is too big to attach.

Could there be a control or an indicator that has a large amount of data stored as its default value? That will eat memory even if the indicator/control is not displaying all that data at the moment.

Close your VI and reopen it. See whether any of the controls contain data that you do not expect. The data usage can appear even larger because the control then holds both the data being displayed and the data stored as its default value, which is one of its properties.

Tags: NI Software

Similar Questions

-

How to calculate daily, hourly average for the very large data set?

| Some_Timestamp | Parameter1 | Parameter2 |
| --- | --- | --- |
| 1 JANUARY 2015 02:00:00.000000 AM -07:00 | 2341.4534 | 676341.4534 |
| 1 JANUARY 2015 02:00:01.000000 AM -07:00 | 2341.4533 | 676341.3 |
| 1 JANUARY 2015 03:04:01.000000 PM -07:00 | 5332.3533 | 676341.53 |
| 1 JANUARY 2015 03:00:01.000046 PM -07:00 | 23.34 | 36434.4345 |
| 2 JANUARY 2015 05:06:01.000236 AM -07:00 | 352.33 | 43543.4353 |
| 2 JANUARY 2015 09:00:01.000026 AM -07:00 | 234.45 | 3453.54 |
| 3 FEBRUARY 2015 10:00:01.000026 PM -07:00 | 3423.353 | 4634.45 |
| 4 FEBRUARY 2015 11:08:01.000026 AM -07:00 | 324.35325 | 34534.53 |

We have data in a table like the one above, and we want to calculate the daily and hourly averages over a large data set (almost 100 million rows). Is it possible to use SQL analytic functions for better performance instead of an ordinary AVG and GROUP BY? Or is there any other way to get better performance?

Don't know if that works better, but instead of using to_char, you could use trunc. Try something like this:

select trunc(some_timestamp,'DD'), avg(parameter1)
from bigtable
where some_timestamp between systimestamp-180 and systimestamp
group by trunc(some_timestamp,'DD');

Hope that helps,

dhalek
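The truncate-then-group idea in the answer above can also be sketched outside the database. This is only a minimal Python illustration of the technique, not a replacement for doing the aggregation in SQL on 100 million rows; the function name `average_by_bucket` and the sample rows are hypothetical and are not the poster's data.

```python
from collections import defaultdict
from datetime import datetime


def average_by_bucket(rows, unit="hour"):
    """Average values per day or per hour by truncating each timestamp."""
    sums = defaultdict(lambda: [0.0, 0])  # bucket -> [running sum, count]
    for ts, value in rows:
        if unit == "hour":
            bucket = ts.replace(minute=0, second=0, microsecond=0)
        elif unit == "day":
            bucket = ts.replace(hour=0, minute=0, second=0, microsecond=0)
        else:
            raise ValueError("unit must be 'hour' or 'day'")
        acc = sums[bucket]
        acc[0] += value
        acc[1] += 1
    return {bucket: total / count for bucket, (total, count) in sums.items()}


# Hypothetical sample rows of (timestamp, parameter1).
sample = [
    (datetime(2015, 1, 1, 2, 0, 0), 10.0),
    (datetime(2015, 1, 1, 2, 30, 0), 20.0),
    (datetime(2015, 1, 1, 15, 4, 1), 30.0),
    (datetime(2015, 1, 2, 5, 6, 1), 40.0),
]
hourly = average_by_bucket(sample, "hour")
daily = average_by_bucket(sample, "day")
```

Truncating to the hour plays the same role as `trunc(some_timestamp,'HH24')` would in the query, and truncating to the day matches the `'DD'` format used above.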

-

Keeping two very large datastores synchronized

I am looking at options for keeping a very large (potentially 400 GB) TimesTen (11.2.2.5) datastore in sync between a production server and a standby.

Replication was ruled out because it does not support compressed tables, or the types of tables that our closed-code application creates (without NOT NULL PKs).

- I did some tests with smaller datastores to get indicative figures, and a 7.4 GB datastore (according to dssize) resulted in a 35 GB backup set (using ttBackup -type fileIncrOrFull). Is this increase in size expected, and would it extrapolate to a 400 GB datastore (a 2 TB backup set?)?

- I have seen that there are incremental backups, but to keep our standby hot we would have to restore these backups, and from what I have read and tested only a ttDestroy/ttRestore is possible, that is, a full restore of the whole DSN every time, which takes time. Am I missing a smarter way to do this?

- Other than having our application keep the two datastores in step, are there any other tricks we can use to keep the two datastores synchronized efficiently?

- Random last question: I see "datastore" and "database" (and to a certain extent, "DSN") used apparently interchangeably. Are they the same thing in TimesTen?

Update: the 35 GB compresses down with 7za to slightly more than 2.2 GB, but takes 5.5 hours to do so. If I take a standalone fileFull backup, it is only 7.4 GB on disk and finishes faster too.

Thank you

rmoff.

Post edited by: rmoff - add additional details

This must be an Exalytics system, right? I ask because compressed tables are not allowed for use outside of an Exalytics system...

As you note, replication is currently not possible in an Exalytics environment, but that is likely to change in the future, and then it will certainly be the preferred mechanism for this. There is not really any other viable way to do this other than through the application.

With regard to your questions:

1. A backup consists mainly of the most recent checkpoint file, plus all the log files that are newer than that file. So, to reduce the size of a full backup, ensure that a checkpoint occurs (e.g. "call ttCkpt" from a ttIsql session) just before starting the backup.

2. No, only a restore is possible from an incremental backup set. Also note that, because of the large amount of roll-forward needed, restoring a large incremental backup set can take quite a long time. Backup and restore are not really intended for this purpose.

3. If you are unable to use replication, then some kind of application-level synchronization is your only option.

4. Datastore and database mean the same thing: a physical TimesTen database. We prefer the term database these days; datastore is a legacy term. A DSN (Data Source Name) is something different and should not be used interchangeably with datastore/database. A DSN is a logical entity that defines the attributes of a database and how to connect to it. It is not the same as a database.

Chris

-

SQL Developer memory usage is very high + Monitor Sessions not working

Hello

I'm facing 2 problems with the SQL Developer tool.

(1) High memory usage (about 160 MB).

(2) When I click on Tools > Monitor Sessions, the session window does not open up.

I use version 1.5.4. Please let me know if there are fixes for these issues.

Thanks

Hello

(1) Not much help to you, but to me 160 MB is not excessive memory use for SQL Developer (mine is currently sitting at around 150 MB). Then again, I have been using the tool for a while and have a lot of memory on my computer.

(2) Tools > Monitor Sessions works fine for me (DB 10.2.0.3). Do you get an error message when it "does not open up"? Which version of DB are you using? If you go through the Reports navigator (View > Reports if it is not already displayed) and select Data Dictionary Reports > Database Administration > Sessions > Sessions, does that report run?

theFurryOne

-

I run the test center for a very large school district with over 120k students. We currently have a deployed base of 54k client machines using Firefox 3.6. We have not upgraded for many reasons, the most important of which are removing the possibility of students using private browsing and dealing with plugin updates for the non-digital natives (read: dumber-than-a-bag-of-hammers users) that make up the majority of the customer base.

We are testing ESR now, but only just discovered that end of life for 3.6 is tomorrow, 4/24. We are currently in the middle of statewide online testing. The question is what will happen tomorrow, when the browser reaches end of life. The ESR wiki mentions that "an update to the current version of Firefox Desktop will be available through the Application Update Service".

So the main question is: are my students/teachers going to get a popup telling them to update the browser even though we have already turned off updates? If so, can I disable it remotely using SCCM? Otherwise there will be all sorts of havoc.

Please advise as soon as possible and thank you in advance.

An add-on like https://addons.mozilla.org/en-US/firefox/addon/disable-private-browsing-pl/ can help, or see http://kb.mozillazine.org/User:Dickvl/Private_Browsing_disable (bear in mind that helping you make these changes is beyond what this forum can do).

-

Extremely high memory usage after upgrade to Firefox 12

After I upgraded to Firefox 12, I started frequently seeing Firefox's memory usage balloon extremely high (2-3 GB) after a few minutes of light browsing. Sometimes it falls back down to a more reasonable level (a few hundred MB), sometimes it hangs (probably trying to garbage collect everything), and sometimes it crashes. Usually the crashing thread cannot be determined, but when it can, it is in the garbage collection code ( https://crash-stats.mozilla.com/repor.../list?signature=js%3A%3Agc%3A%3AMarkChildren%28JSTracer * %2 js C + % 3A % 3Atypes % 3A % 3ATypeObject * 29% ).

I managed to capture an about:memory report when Firefox was at about 1.5 GB and have attached an image.

A couple of things I've tried. I have a lot of tabs open (although the "Don't load tabs until selected" option is enabled), so I copied my profile, kept all my extensions enabled, but closed all my tabs. I then left a single page open, http://news.google.com/, and it worked very well for several days, while my original profile crashes several times a day.

I also tried disabling most of my extensions, leaving only the following extensions that I refuse to browse without:

Adblock Plus

BetterPrivacy

NoScript

PasswordMaker

Views

Priv3

However, the problem still happens in this case.

Don't know if this helps or not. I'm looking forward to trying Firefox 13 when it comes out.

I never found out what was causing the problem, but the crashes and pathological memory use have disappeared since upgrading to Firefox 13.

-

Withdraw the permission for Firefox to monitor memory usage

I now often have to either force quit or restart, and have started having kernel panics, since I agreed to allow Firefox to monitor CPU usage. I'm still on OS X 10.6.8 on my Mac. I had no problem with anything since installing this OS X.

However, from time to time with the latest Firefox 7.0.1 installation, the program or my computer 'locks up' and seems unresponsive. I left Firefox active and resident on my computer last night and, once again, everything became unresponsive. I opened Activity Monitor and noticed that Firefox was consuming 283 MB of real memory (now 298.6 MB), and I have only this one other tab open, for the Seattle Times. I want to withdraw my permission for Firefox to monitor my memory usage, and need to know how to delete the cookie or whatever governs that.

Go to Firefox > Preferences > Advanced > General tab and uncheck the "send performance data" box. More information here.

-

Cluster packing - memory usage

After reading this article http://www.catb.org/esr/structure-packing/ on structure packing in C, I started to wonder whether LabVIEW behaves the same way. In short, the order of the elements in a cluster can greatly influence the size of the cluster. For example, if you have 4 elements in your cluster, in the order long, char, long, char, the memory requirement is 4 + 1 + 3 + 4 + 1 + 3 = 16 bytes. The two 3-byte entries are padding required for word alignment of the elements. If you change the order to long, long, char, char, the memory requirement is 4 + 4 + 1 + 1 + 2 = 12 bytes. The last 2 bytes are padding.
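The arithmetic above can be checked for C-style layout with a minimal Python `ctypes` sketch. It assumes a 4-byte `long` (modelled here as `c_int32`) with 4-byte alignment, which holds on common desktop platforms; the class names are hypothetical, and the sketch says nothing about how LabVIEW itself lays out clusters.

```python
import ctypes


class Interleaved(ctypes.Structure):
    # Order from the post: long, char, long, char (4-byte integers assumed).
    _fields_ = [
        ("a", ctypes.c_int32),
        ("b", ctypes.c_char),
        ("c", ctypes.c_int32),
        ("d", ctypes.c_char),
    ]


class Grouped(ctypes.Structure):
    # Reordered: long, long, char, char.
    _fields_ = [
        ("a", ctypes.c_int32),
        ("c", ctypes.c_int32),
        ("b", ctypes.c_char),
        ("d", ctypes.c_char),
    ]


# sizeof includes the padding the native C layout rules would insert.
interleaved_size = ctypes.sizeof(Interleaved)
grouped_size = ctypes.sizeof(Grouped)
print(interleaved_size, grouped_size)
```

With the stated alignment assumption, the two sizes should match the 16-byte and 12-byte totals worked out in the post.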

The article also mentions bit fields. LabVIEW does not appear to support bit fields. Therefore, if you have a number of Boolean values, you might be better off (memory-wise) storing them in an appropriately larger data type and packing/unpacking them yourself. The trade-off is that your code will run slightly slower.
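The manual pack/unpack approach described above can be sketched in Python as follows. The helper names `pack_flags` and `unpack_flags` are hypothetical, and the sketch only illustrates the bit manipulation, not a LabVIEW implementation.

```python
def pack_flags(flags):
    """Pack a sequence of booleans into one integer, first flag in bit 0."""
    packed = 0
    for index, flag in enumerate(flags):
        if flag:
            packed |= 1 << index
    return packed


def unpack_flags(packed, count):
    """Recover the first `count` booleans from a packed integer."""
    return [bool((packed >> index) & 1) for index in range(count)]


flags = [True, False, True, True, False, False, False, True]
packed = pack_flags(flags)  # bits 0, 2, 3 and 7 set: 1 + 4 + 8 + 128 = 141
restored = unpack_flags(packed, len(flags))
```

Eight Booleans fit in a single byte this way, at the cost of the shift and mask work on every access, which is the speed trade-off the post mentions.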

I assume that the tab order of the elements in a cluster is the order in which they are laid out in memory. Changing the tab order may therefore allow the user to reduce the cluster's memory footprint. This is especially important if you have thousands or hundreds of thousands of instances of the cluster.

Any ideas on that?

The information you want is in the help under the heading "How LabVIEW Stores Data in Memory"; see the section on clusters. The short answer is that it depends on which platform you are using.

-

I get a pop-up about high memory usage when on Internet Explorer.

My computer is currently running very slowly. When I surf the Internet, a pop-up constantly appears: "high memory usage". What can I do to cure this problem?

Original title: pop ups. high memory usage

Try cleaning out the temporary files, disabling unnecessary programs that are running in the background, and disabling add-ons that you don't use at the time.

-

App crash when loading very large images in Spark Image component

Hello world

A client of ours needs to display very large JPGs (about 8000 x 5000 px), which are scrolled and zoomed using touch gestures.

To my surprise, the performance of doing this using a Scroller is pretty good.

BUT unfortunately the application sometimes freezes when loading the image (about a 15% chance of that happening).

The debugger gives no info at all; it simply closes the debugging session.

Does anyone have an idea what could be causing this or how to avoid this?

PS tested on several machines and different Versions of Tablet, including 2.1.0.1032

Thx a lot.

Tim.

Sorry it took me a long time to respond, but I had other issues to address first.

Using the Profiler revealed a memory leak in the skin of the image viewer.

It was a memory problem.

I have to set the BitmapImage source to null manually before destroying the view, even though I had no other reference to it.

A little strange, but this solved my problem.

It could obviously reappear with a single, much bigger image, but I guess one has to accept some limits on a mobile device.

-

Limiting QNetworkAccessManager memory usage when headless

I have a long-running headless application that downloads files of 6 MB and upwards from a web server. Now, using QNetworkAccessManager to get the file, I see the memory usage shoot up and soon exceed the 3 MB limit.

The QNetworkAccessManager then suffers bad allocations and crashes.

I was hoping the QNetworkAccessManager would somehow chunk the file data to disk and keep a low memory footprint, but it seems to be trying to hold the whole file in memory.

Is there any means to limit or control this behavior of the QNetworkAccessManager?

See you soon

Paul.

Hello.

We have started an internal investigation to verify the QNetworkAccessManager implementation. One suggestion to try in the meantime was to use libcurl to perform the file download.
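The behaviour the poster was hoping for, writing the download to disk chunk by chunk so that only one chunk is held in memory at a time, can be sketched in Python. This is not Qt or libcurl code; `copy_in_chunks` is a hypothetical helper, and an in-memory stream stands in for the network response.

```python
import io


def copy_in_chunks(source, destination, chunk_size=64 * 1024):
    """Copy a readable binary stream to a writable one, one chunk at a time."""
    total = 0
    while True:
        chunk = source.read(chunk_size)
        if not chunk:  # an empty read means the stream is exhausted
            break
        destination.write(chunk)
        total += len(chunk)
    return total


# Stand-in for a network response: any object with read() works the same
# way, for example the response returned by urllib.request.urlopen().
fake_download = io.BytesIO(b"x" * 200_000)
saved = io.BytesIO()
bytes_copied = copy_in_chunks(fake_download, saved, chunk_size=4096)
```

In a real download the destination would be a file opened in binary write mode, so peak memory stays near `chunk_size` regardless of the file size.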

-

IO error: output file: application.cod too large data section

Hello, when I compile my application using BlackBerry JDE, I get the following error:

I/O Error: output file: application.cod too large data section

I get the error when using JDE v4.2.1 or JDE v4.3. It works fine with v4.6 or higher.

I have another forum post about it here.

I also read the following article here.

Based on this I tried splitting up my application, but that doesn't seem to work. At best it compiles for a while, and then the problem returns as I add more lines of code.

I was wondering whether someone has solved the problem another way, or whether someone knows the real reason behind this issue.

Any help will be appreciated.

Thank you!

I finally managed to solve this problem, here is the solution:

This problem occurs when the CAP compiler is not able to accommodate one of the data resources. The CAP compiler tries to pack the data sections to a default maximum size of 61440 bytes. You can use the CAP option '-datafull=N', where N is the maximum size of the data section, and set the size to something less than the default value. With a few tries at the size, you should be able to work around the problem.

If anyone else has this problem, you can use the same trick to solve it!

-

How to install the large data file?

Does anyone know how I can install large binary data files to a BlackBerry during the installation of an application?

My application needs a data file of about 8 MB.

I tried adding the file to my BlackBerry project in the Eclipse environment.

But the compiler could not generate an executable file, and gave the following message.

"Unrecoverable internal error: java.lang.NullPointerException. CAP run for the project xxxx"

So I tested with a smaller binary file. This time the compiler generated a cod file, but javaloader failed to load the application, with this message.

"Error: file is not a valid Java code file."

When I tried with an even smaller one, it loaded, but I could not run the program, with this error.

"Error at startup xxx: Module 'xxx' has verification error at offset 42b 5 (codfile version 78) 3135.

Is it possible to include large binary data files in the cod file?

And what is the best practice to deal with such a large data files?

I hope to get a useful answer to my question.

Thanks in advance for your answer.

Kim.

I finally managed to include the large data file by using library projects.

I split the data file into 2 separate files and then added each file to its own library project.

Each library project holds about 4 MB of the data file.

So I have to install 3 .cod files.

But in any case, it works fine. And I think there will not be any problem, because I use the library projects only the first time.

Peter, thank you very much for your support...

Kim
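The splitting step described above can be sketched in Python. This only illustrates cutting one large blob into near-equal parts and joining them again; `split_into_parts` is a hypothetical helper, and the sketch does not cover the BlackBerry library-project packaging itself.

```python
def split_into_parts(data, part_count):
    """Split bytes into part_count consecutive pieces of near-equal size."""
    if part_count < 1:
        raise ValueError("part_count must be at least 1")
    base, extra = divmod(len(data), part_count)
    parts = []
    start = 0
    for index in range(part_count):
        # The first `extra` parts each carry one additional byte.
        size = base + (1 if index < extra else 0)
        parts.append(data[start:start + size])
        start += size
    return parts


# Hypothetical payload standing in for the large data file.
payload = bytes(range(256)) * 4 + b"z"
parts = split_into_parts(payload, 2)
rejoined = b"".join(parts)
```

At run time the application would read the parts in order and concatenate them, as `rejoined` does here, to recover the original file.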

-

Need to free more RAM to sort and link several very large excel files

HP Pavilion dm1 laptop running Windows 7 64-bit with Radeon HD graphics. Recently upgraded to 8 GB, with 3.7 GB of usable memory.

I have several very large Excel files with more than 150,000 rows each. I need to combine 3-4 of these files and sort them. I am looking to maximize the available memory. Task Manager shows total 3578 MB, available 1591 MB, cached 1598 MB, free 55 MB. A single instance of Chrome and Excel 2014 with no worksheet are running. Appreciate your help.

That's it! It worked... I disabled the maximum memory setting and restarted.

Available memory is now 7.6 GB of 8 GB. Oh boy, I can't believe it... Thank you guys. Many thanks to hairyfool, Gerry and countless others who have given pointers...

-

High physical memory usage with SMB share

I have a number of shared folders on my computer, shared the old way through Advanced Sharing.

The usual RAM load is 1.49 GB, as shown by Task Manager: 4094 MB total, 2387 MB cached, 2562 MB available, 215 MB free.

This morning I noticed that my memory usage was 3.9 GB and the numbers did not add up. Pulling up Resource Monitor did not help either: it was all "in use", with less than 300 MB cached and 0 MB free, and it showed only the same usual processes...

What I discovered with Computer Management was that my brother had a 2.9 GB ISO file (shared from my computer) mounted in Daemon Tools on his computer. It was JUST mounted, not used at all, which seemed weird. As soon as I told him to unmount the ISO (and the moment it disappeared from the list of open files), memory usage dropped back to normal.

During the period of high memory use, the computer was VERY slow, paging left and right whenever I tried to open windows, open tabs in Firefox, switch tabs, etc.

I want to know how to fix this horribly wrong behavior, as my machine has a ton of shared files and I will not tolerate them eating all my RAM (what happens if I want to start a game when usage is through the roof? What if all the users on the network mount the same file?). On XP I did not have this issue, and memory use did not budge even on the share.

I disabled the offline files and the service as well.

PC specifications: Win7 x64 Pro, 4 GB of RAM

OK, I thought you had mounted the ISO on Windows 7.

So it is a Windows 7 problem. In this case I think you must contact MS support. I don't know how we can solve it.

"A programmer is just a tool that converts caffeine into code" Deputy CLIP - http://www.winvistaside.de/
