Execution of the asynchronous function

I've looked through the documentation but can't find an example of fire-and-forget execution with FunctionService.

Unless I missed something in the API documentation, there is no Execution method that does not either return a ResultCollector or throw a FunctionException if you ignore it.

Yes, I know that I could just call lastResult(null), but that seems to violate the spirit of "fire and forget".

Did I miss something somewhere?

Your function needs to implement Function.hasResult to return false. That is what makes it a fire-and-forget function.
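A minimal sketch of the contract described in this answer, written without the GemFire library so that it runs on its own. `SketchFunction` and `SketchExecutor` are hypothetical stand-ins, not GemFire classes; only the idea that `hasResult()` returning false means "no result handle" comes from the answer above.

```java
import java.util.Optional;
import java.util.concurrent.CompletableFuture;

// Hypothetical stand-in for a function contract with a hasResult() flag; NOT the GemFire API.
interface SketchFunction {
    boolean hasResult();
    String execute(String argument);
}

final class SketchExecutor {
    // Runs the function asynchronously. A result handle is handed back only
    // when the function declares that it produces a result.
    static Optional<CompletableFuture<String>> submit(SketchFunction fn, String argument) {
        CompletableFuture<String> future =
                CompletableFuture.supplyAsync(() -> fn.execute(argument));
        return fn.hasResult() ? Optional.of(future) : Optional.empty();
    }
}
```

With this shape the caller of a fire-and-forget function has nothing to collect and nothing to ignore, which is the behaviour the question asks for.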

Tags: VMware

Similar Questions

-

How does GF determine the number of function execution threads?

Hello

As the subject says, I would like to know how GF determines the number of threads it devotes to function execution in a JVM.

Also, is there a way to override this property?

We use GF 6.6.4

Thank you very much

David Brimley

Hi David,

The number of FE (function execution) threads is determined by max(CPUs * 4, 16), where CPUs is essentially the number of cores. You can increase the number of FE threads by setting the DistributionManager.MAX_FE_THREADS system property.

-Jens
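The formula in this answer can be checked with a few lines of Java. This is only a sketch of the arithmetic as quoted; the class and method names are made up for illustration, and it does not read or set any GemFire property.

```java
final class FunctionThreadSizing {
    // Formula quoted in the answer: max(CPUs * 4, 16).
    static int defaultFunctionThreads(int cpus) {
        return Math.max(cpus * 4, 16);
    }

    // Same formula applied to the processor count the JVM reports.
    static int defaultFunctionThreads() {
        return defaultFunctionThreads(Runtime.getRuntime().availableProcessors());
    }
}
```

Under this formula any machine with 4 or fewer cores gets 16 threads, and the count only grows beyond that from 5 cores upward.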

-

The execution of a referenced function

I want to run one of many functions based on a string passed from an external data source. I could of course write a massive switch/case statement, but I would prefer something more elegant.

What would be lovely is something like:

var menuFunction = eval(event.item.@value);

menuFunction();

But I think that's impossible as of v3.0.

Does anybody have ideas?

Paul

Functions are like variables: they are members of a class, so they can be referenced by similar means. For example, if you have an instance of the Button class you can call its getStyle method either directly or by name:

b.getStyle("color") or b["getStyle"]("color").

You can do the same thing: var menuFunction:Function = this[event.item.@value]; menuFunction(); Make sure you type your variable as Function.
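For comparison, the same "look up a function by its name" idea can be sketched in Java with a map from names to handlers. This is an analogous technique in a different language, not the ActionScript mechanism shown above; `MenuDispatcher` and its methods are hypothetical names.

```java
import java.util.HashMap;
import java.util.Map;

final class MenuDispatcher {
    // Maps the string coming from the external data source to the code to run.
    private final Map<String, Runnable> handlers = new HashMap<>();

    void register(String name, Runnable handler) {
        handlers.put(name, handler);
    }

    // Returns false instead of failing when no handler is registered for the name.
    boolean dispatch(String name) {
        Runnable handler = handlers.get(name);
        if (handler == null) {
            return false;
        }
        handler.run();
        return true;
    }
}
```

An explicit registry also limits which functions an external string can trigger, which a raw member lookup does not.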

-

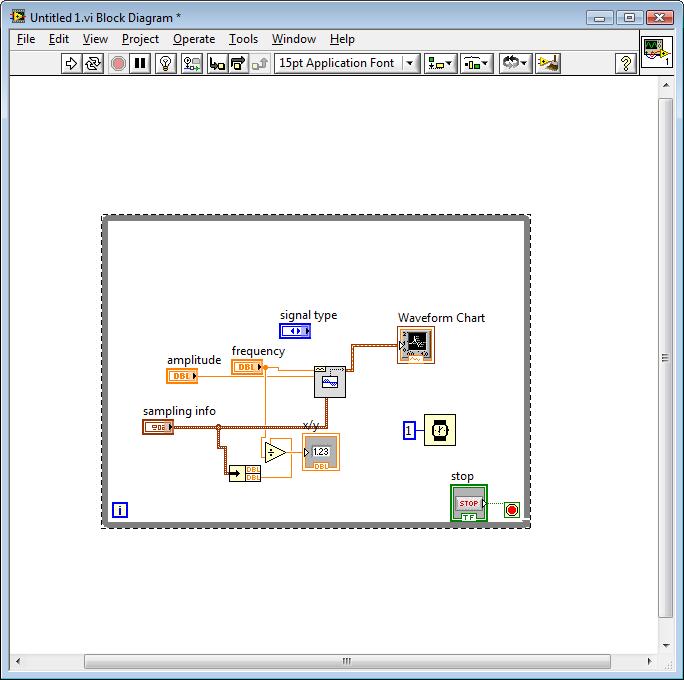

I have a question about the order of execution. In the WHILE loop, I have two things to measure, period and voltage, using the DAQmx Read functions for the voltage and the counter. In the end, I want to collect these data points as nearly simultaneously as possible, as a pair, and then send them together to another piece of code (not shown here), which will turn them into some sort of command for a motor. The motor would run, and then I want to read the voltage and the period again at a later time and do the same thing.

1.) I'm a little confused about what the counter Read function returns, because it's an array. What is it an array of? I thought it was just the value of the individual periods between rising edges. The Counter 1D DBL output is an array. How many elements are in this array, and what determines its size? Are the array elements the individual periods between edges? How many values are stored in the array per execution? We take the AVERAGE of the last 15 elements, but don't know whether we are throwing away some of the data. How can I understand the composition of this array? How can I change it? Is it possible to measure only one period at a time, for example the time between TWO edges?

2.) Will this WHILE loop collect the voltage and a "period array" (still to be understood by me) EACH TIME through the loop? In particular, we want the voltage value associated with the AVERAGE of the period "array", so we can use the two data items to create the next control command each time the two values are reported. Will the structure of this VI deliver the data in pairs like that? I understand that neither Read function is guaranteed to run before the other in the WHILE loop. I want the period "average" and the voltage (volts) collected at the same rate. Will this VI do that?

Thank you

Dave

Hi David,

I suggest including the DAQmx Start Task function. If the task is not started before the loop, it is started inside the loop; that works fine, but it is not as fast or efficient. In the task state model, the task goes from Verified to Running on each iteration of the loop and then back to Verified, before being restarted on the next iteration.

The task state model: http://zone.ni.com/reference/en-XX/help/370466V-01/mxcncpts/taskstatemodel/

Kind regards

Jason D

Technical sales engineer

National Instruments

-

Two parallel executions, calling a DLL function

Hello

Since it takes about 6 hours to test my UUT, I plan to use the parallel model to test 2 UUTs at the same time.

I implemented the test code as a CVI DLL.

However, to my surprise, it seems that the steps that call a DLL function are actually executed in series, not in parallel:

With 2 test sockets, if one enters and executes a DLL function, the other waits for the first to complete its operation and return. Both also run on the same copy of the DLL, so the DLL's global variables are actually shared between executions.

So if a DLL function takes 5 minutes to complete, two executions running at the same time take 10 minutes. This isn't parallel execution in any sense.

What I wanted, and expected from TestStand, was complete isolation between the DLL copies of these two executions, so that two test sockets could run the same DLL function at the same time, each executing its own copy of the function, completely isolated from one another.

They would then have separate globals, threads, etc., and two parallel sockets would take 5 minutes to run a step instead of 10.

Is such a scenario possible?

If not, how can I run my test in parallel (truly in parallel) when using 2 test sockets?

(1) Yes, multiple executions in TestStand that call into the same DLL all call the same in-memory copy of that DLL. DLLs called this way must therefore be thread-safe (that is, written in a way that is safe for multiple threads running the code at the same time). This usually means avoiding global variables, among other things. Instead, you can store per-thread data in local variables within your sequence and pass it into the DLL as a parameter as needed. Keep in mind that all the DLLs your DLL calls must also be thread-safe, or you need to synchronize calls into those other DLLs with locks or other synchronization primitives.

(1b) Even if your DLLs are not thread-safe, you might still get some benefit from parallel execution by using the Auto Schedule step type and splitting your sequence into independent sections that can be executed in any order. This lets you run Test A on one socket and Test B on another socket in parallel; once they finish, Test B can run on the first socket while Test A runs on the other. As long as each test is independent of the others, you can safely run them in parallel even if it is not possible to run the same test on two sockets at the same time. See the online help for the Auto Schedule step type for more details.

(2) Socket executions (and all TestStand executions, really) are separate threads within the same process. Since they are in the same process, the global variables in the DLL are essentially shared between them. TestStand station globals are also shared between them. TestStand file globals, however, are not shared between executions (each execution gets its own copy) unless you enable that setting in the Sequence File Properties dialog box.

(3) Of course, using an index as a way to separate data access is perfectly valid. Just be careful that what each thread does cannot affect data that other threads access. For example, if you have a global array with 2 elements, one for each test socket, you can safely use the socket index into the array, and in that way you are not sharing data between threads even though you use a global variable. However, the array must be created up front, before the threads start running, or its creation must be synchronized in some way; otherwise one thread may try to access the data while the other thread is still creating it. Basically, if you use global data, you need to make sure that creation/deletion, modification, and access in one thread do not affect the global data the other thread uses, or you must protect that creation/deletion, modification, and access with locks, mutexes, or critical sections.
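The per-socket indexing described in point (3) can be sketched as follows. The thread is about a CVI DLL called from TestStand; this Java version only illustrates the same rule (allocate the shared array before any thread starts, then let each thread touch only its own slot). `SocketSlots` and the fake measurement are hypothetical.

```java
final class SocketSlots {
    // Allocated once, before any worker thread is started.
    private final long[] results;

    SocketSlots(int socketCount) {
        results = new long[socketCount];
    }

    // Starts one worker per socket; each worker writes only to its own index.
    long[] runAll() {
        Thread[] workers = new Thread[results.length];
        for (int i = 0; i < workers.length; i++) {
            final int socket = i;
            workers[i] = new Thread(() -> results[socket] = (socket + 1) * 100L); // stand-in for a real test
            workers[i].start();
        }
        try {
            for (Thread worker : workers) {
                worker.join(); // join makes each worker's write visible to the caller
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for sockets", e);
        }
        return results;
    }
}
```

No lock is needed here because no two threads ever read or write the same element and the array itself is never resized while they run.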

Hope this helps,

-Doug

-

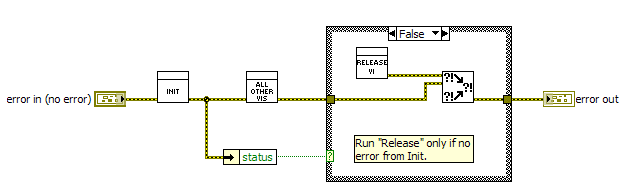

Control the order of execution of the init/release

I have a small program that I am writing in LabVIEW. It has an API (a set of VIs wrapping the DLL functions that control a device).

Other such programs in our shop make liberal use of sequence structures. I understand that sequence structures are not usually recommended in LabVIEW. I am trying to write my program in the best LabVIEW style (as far as I understand it; I'm still a relative novice in LabVIEW).

I found that I could wire together the error in / error out terminals to create a data flow that controls the order of execution, and it works beautifully.

However, there are some cases where it is not enough.

Here is an example. I hope that the answer to this will answer my other questions. If not, perhaps I'll post more.

One of the first VIs I call is an Init function. One of the last VIs is the Release function.

The Release function must be called at the end, after the rest of the program has executed (in this case, after the user requests a stop). It naturally sits at (or near) the end of the chain of error in / error out connections, as it currently does.

However, the error it receives, which determines whether or not it will run, should be the output of Init. Release should run even if something else has failed.

I enclose a picture showing the problem, with most of the code snipped out (exactly what happens in the middle is not relevant).

What is the elegant way to handle this in LabVIEW? Is this really a case for a sequence structure, or is there a nicer or better way? How would you recommend handling it?

Thank you very much.

BP

I agree with what pincpanter said; in addition, you will need to use the status of the 'Init' function.

Note that you can ignore the error and do the merge inside the Release VI if you wish.
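LabVIEW expresses this with wires rather than text, but the rule being discussed (Release depends only on whether Init succeeded, not on what failed in between) has a direct textual analogue. The Java sketch below is an illustration of that rule only; `InitReleaseSketch` and its simulated outcomes are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

final class InitReleaseSketch {
    // Returns the steps that ran, in order. 'initOk' and 'workFails' simulate outcomes.
    static List<String> run(boolean initOk, boolean workFails) {
        List<String> steps = new ArrayList<>();
        steps.add("init");
        if (!initOk) {
            return steps; // Init failed, so there is nothing to release
        }
        try {
            steps.add("work");
            if (workFails) {
                throw new IllegalStateException("simulated failure");
            }
        } catch (IllegalStateException e) {
            steps.add("error: " + e.getMessage());
        } finally {
            steps.add("release"); // runs whether or not the work in the middle failed
        }
        return steps;
    }
}
```

The error from the middle section is still reported, which corresponds to merging it with the Release error rather than letting it block Release.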

Steve

-

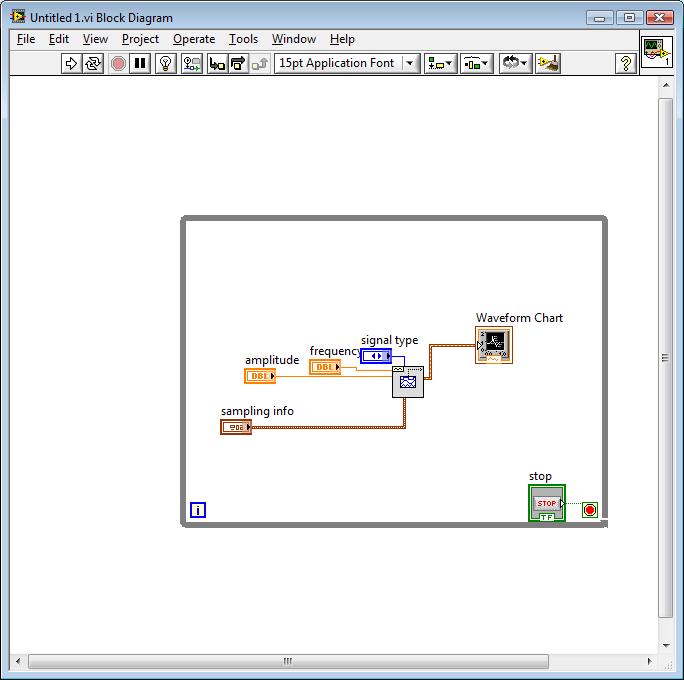

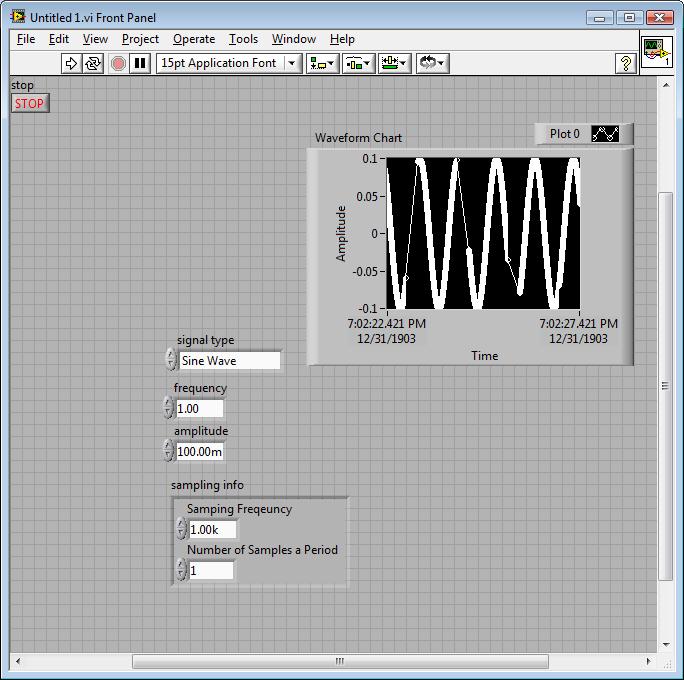

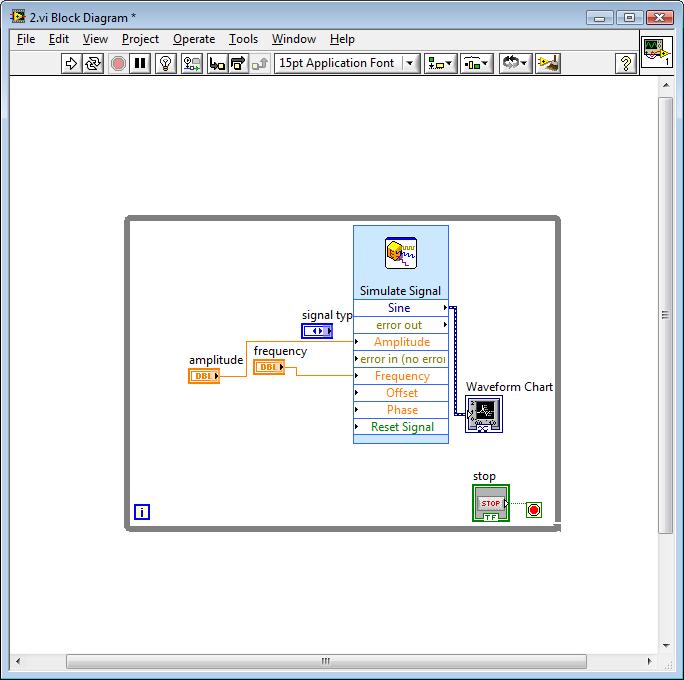

Continuity and correct timing with the Basic Function Generator

I need to create a sine wave, point by point, which will be fed to the PID and finally to an analog output channel on a PCI-6014.

I've tried a few different ways to do it, but each has its own problems.

with the 'Basic Function Generator.vi'

and also the 'Sine Waveform.vi'.

Both seem to have problems with missing points.

Someone helpfully pointed out that Windows interrupts are very likely the source of the problem.

Here are pictures of the front panel and the block diagram:

the block diagram

the front panel, with a few points missing

Another way to do it that seems to work better is the 'Simulate Signal' Express VI.

It's great because it actually makes a 1 Hz sine wave occur at 1 Hz.

All the points are there, too.

The problem is that as soon as I put it in my application, it grinds to a halt.

The program speed drops from about 10 kHz to less than 1 Hz when the Basic Function Generator is replaced with this Express VI.

A third idea that has been suggested is to add a timer to slow down the loop.

This, once again, works fine; no more points are missed in the plot.

Yet it again kills the execution speed of the program, as everything has to wait 1 ms (or the reciprocal of the sampling rate).

Does anyone have an idea how I can get a sine wave into the PID, and then to the analog output, without a huge loss of program efficiency? I'm sure this is simple.

Nevermind

I ended up going with the 2nd option from the top.

I'm just going to increase the number of samples for now and leave the rest of the program running as fast as possible.
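One way to see why generating several samples per loop iteration avoids missing points is to compute each point from its sample index rather than from the wall clock. The sketch below shows that idea in Java under that assumption; it is not the LabVIEW implementation, and `SineSamples` is a hypothetical name.

```java
final class SineSamples {
    // Value of sample number 'index' for a sine of the given frequency, sampled at sampleRateHz.
    static double sample(long index, double frequencyHz, double sampleRateHz, double amplitude) {
        return amplitude * Math.sin(2.0 * Math.PI * frequencyHz * index / sampleRateHz);
    }

    // Generates 'count' consecutive samples starting at 'startIndex', so one loop
    // iteration can hand a whole block to the output and no point depends on loop timing.
    static double[] block(long startIndex, int count, double frequencyHz,
                          double sampleRateHz, double amplitude) {
        double[] samples = new double[count];
        for (int i = 0; i < count; i++) {
            samples[i] = sample(startIndex + i, frequencyHz, sampleRateHz, amplitude);
        }
        return samples;
    }
}
```

Calling `block` with a running start index (0, then 0 plus the block size, and so on) keeps the waveform continuous from one block to the next.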

-

An error [-5005 : 0x80070002] occurred during execution of the installation of MagicTune

Original title: uninstall magictune

I am running Windows 7 x64. I installed MagicTune Premium by mistake. My laptop is a Toshiba Satellite P799 with an Nvidia GT 540M. I tried to uninstall it using Programs and Features but received the following error message:

An error [-5005 : 0x80070002] occurred during execution of the installation

When I click OK, it takes me to the Samsung download website. I repeated the download and then restarted. It fails every time I try to run it.

I am still unable to uninstall it.

Grateful for any help.

Thank you and best regards,

JC

Hello

Follow these methods and check if that helps:

Method 1:

Try to uninstall the program in clean boot mode and check if it helps.

How to troubleshoot a problem by performing a clean boot in Windows Vista and Windows 7

Note: Don't forget to reset the computer to start as usual once you have found the culprit. Follow step 7 of the KB article.

Method 2:

I suggest you follow the troubleshooting steps from the article and check if it helps.

How to solve problems when you install or uninstall programs on a Windows computer

http://support.Microsoft.com/kb/2438651

-

WMP does not work, shows 'Server execution failed'

When I start the program, it displays 'Server execution failed'.

I have run the WMP troubleshooter.

It detects "the configuration settings have been replaced".

Please help me

Hello sai 4 u,

Follow the Windows Media Player troubleshooting steps below:

Uninstalling and reinstalling Windows Media Player:

Step 1.

Uninstalling Windows Media Player:

1. Go to Start and in the search box type "Turn Windows features on or off".

2. Click on "Turn Windows features on or off".

3. Find Media Features and clear the check box next to Windows Media Player.

4. Restart the computer.

Step 2.

Reinstall Windows Media Player:

1. Go to Start and in the search box type "Turn Windows features on or off".

2. Click on "Turn Windows features on or off".

3. Find Media Features and select the check box next to Windows Media Player.

4. Restart the computer.

If you are having problems using Windows Media Player, try the troubleshooter to see if it fixes the problem.

Open the Windows Media Player Settings troubleshooter by clicking the Start button, then Control Panel. In the search box, type troubleshooter, and then click Troubleshooting. Click View all, and then click Windows Media Player Settings.

I hope this helps.

Marilyn

-

Hello

We have Adobe Captivate v8.0.0.145 installed on Windows 7 x64.

Adobe Captivate crashes with the following error: a fatal error has occurred and the application was terminated. Adobe Captivate will attempt to save all of your work in the respective project folders as cpbackup files.

I uninstalled Adobe Captivate, rebooted the PC and reinstalled Adobe Captivate; the error persists.

I cleared the cache under settings (Clear Cache); the error persists.

Please advise.

Kind regards

George

The update should be available under Help, Updates. It has been available since October 2014.

Being an administrator is not the same thing as running Captivate as an administrator; that has to be set up from the right-click menu on the shortcut that launches Captivate. You will have restricted functionality if this is not done.

I told you how to clear the preferences, with the file in the utils folder. Close Captivate first; when you restart, CP will create a new preferences folder.

If you have a lot of customization, you will lose it when clearing the preferences. To keep it, have a look at my blog:

Captivate 8.0.1 Install? Keep your customization! - Captivate Blog

The Layouts folder is located in the Public Documents. It is a copy of the original folder in the Gallery. The same goes for the Interactions.

-

Run an Idoc function in the data file returned by the GET_FILE service

Hello

I'm new to this forum, so thank you in advance for any help, and forgive any mistakes in this post.

I'm trying to force the execution of a custom Idoc function in a UCM data file when that data file is requested from UCM through the GET_FILE service.

The custom Idoc function is implemented as a filter of type computeFunction. One of the data files contains a call to my custom Idoc function:

<wcm:element name="MainText">[!--$myIdocFunction()--]</wcm:element>

The data file is then downloaded with CRMI via the GET_FILE service, but the Idoc function is not called.

I tried to implement another filter of type sendDataForServerResponse or sendDataForServerResponseBytes, which uses the cached responseString and responseBytes objects to look for any call to my Idoc function in the response object, run the Idoc function, and replace the call with its output in the response. But this kind of filter never runs.

The Idoc function myIdocFunction is executed correctly when I use the WCM_PLACEHOLDER service to get a RegionTemplate (.hcsp file) associated with the data file. In this case, the RegionTemplate refers to the "MainText" element of the data file with <!--$wcmElement("MainText")-->. But I need to make it work with the GET_FILE service as well.

I use UCM version 11.1.1.3.0.

Any suggestions?

Thank you very much

Francesco

Hello

Thank you very much for your help and sorry for this late reply.

Your tip to enable full verbose tracing was helpful, because I found out I could use the prepareForFileResponse filter for my purpose, and I could also look at the implementation of the native filter pdfwatermark.PdfwFileFilter.

I managed to set up a filter that forces Idoc evaluation of a predefined list of Idoc functions on the output returned by the GET_FILE service. I paste the code I have written below, in case it may be useful for other people. Be aware that this filter can cause performance problems, which must be considered carefully in your own use cases.

First, define the filter in the Filters ResultSet of your component's .hda file:

@ResultSet Filters
4
type
location
parameter
loadOrder
prepareForFileResponse
mysamplecomponent.ForceIdocEvaluationFilter
null
1
@end

Here is a simplified version of the implementation of the filter:

public class ForceIdocEvaluationFilter implements FilterImplementor {

    public int doFilter(Workspace ws, DataBinder binder, ExecutionContext ctx) throws DataException, ServiceException {
        String service = binder.getLocal("IdcService");
        String dDocName = binder.getLocal("dDocName");
        boolean isInternalCall = Boolean.parseBoolean(binder.getLocal("isInternalCall"));
        // Only handle external GET_FILE requests; the internal call made by IOUtils is skipped.
        if ((ctx instanceof FileService) && "GET_FILE".equals(service) && !isInternalCall) {
            FileService fileService = (FileService) ctx;
            checkToForceIdocEvaluation(dDocName, fileService);
        }
        // continue with other filters
        return CONTINUE;
    }

    // Forces Idoc evaluation only for XML primary files.
    private void checkToForceIdocEvaluation(String dDocName, FileService fileService) throws DataException, ServiceException {
        File primaryFile = IOUtils.getContentPrimaryFile(dDocName);
        String ext = FileUtils.getExtension(primaryFile.getPath());
        if (ext.equalsIgnoreCase("xml")) {
            forceIdocEvaluation(primaryFile, fileService);
        }
    }

    // Replaces the listed Idoc function calls in the file content with their output.
    private void forceIdocEvaluation(File primaryFile, FileService fileService) throws ServiceException {
        String fileContent = IOUtils.readStringFromFile(primaryFile);
        ForceIdocEvaluationPatternReplacer replacer = new ForceIdocEvaluationPatternReplacer(fileService);
        String replacedContent = replacer.replace(fileContent);
        if (replacer.isMatchFound()) {
            setNewOutputOfService(fileService, replacedContent);
        }
    }

    // Writes the new content to a temporary file and makes the service return that file.
    private void setNewOutputOfService(FileService fileService, String newOutput) throws ServiceException {
        File newOutputFile = IOUtils.createTemporaryFile("xml");
        IOUtils.saveFile(newOutput, newOutputFile);
        fileService.setFile(newOutputFile.getPath());
    }
}

public class IOUtils {

    // Runs GET_FILE internally to locate the primary file of the latest revision in the vault.
    public static File getContentPrimaryFile(String dDocName) throws DataException, ServiceException {
        DataBinder serviceBinder = new DataBinder();
        serviceBinder.m_isExternalRequest = false;
        serviceBinder.putLocal("IdcService", "GET_FILE");
        serviceBinder.putLocal("dDocName", dDocName);
        serviceBinder.putLocal("RevisionSelectionMethod", "Latest");
        serviceBinder.putLocal("isInternalCall", "true");
        ServiceUtils.executeService(serviceBinder);
        String vaultFileName = DirectoryLocator.computeVaultFileName(serviceBinder);
        String vaultFilePath = DirectoryLocator.computeVaultPath(vaultFileName, serviceBinder);
        return new File(vaultFilePath);
    }

    public static String readStringFromFile(File sourceFile) throws ServiceException {
        try {
            return FileUtils.loadFile(sourceFile.getPath(), null, new String[] {"UTF-8"});
        } catch (IOException e) {
            throw new ServiceException(e);
        }
    }

    public static void saveFile(String source, File destination) throws ServiceException {
        FileUtils.writeFile(source, destination, "UTF-8", 0, "Could not save file " + destination);
    }

    public static File getTemporaryFilesDir() throws ServiceException {
        String idcDir = SharedObjects.getEnvironmentValue("IntradocDir");
        String tmpDir = idcDir + "custom/MySampleComponent";
        FileUtils.checkOrCreateDirectory(tmpDir, 1);
        return new File(tmpDir);
    }

    public static File createTemporaryFile(String fileExtension) throws ServiceException {
        try {
            File tmpFile = File.createTempFile("tmp", "." + fileExtension, IOUtils.getTemporaryFilesDir());
            tmpFile.deleteOnExit();
            return tmpFile;
        } catch (IOException e) {
            throw new ServiceException(e);
        }
    }
}

public abstract class PatternReplacer {

    private boolean matchFound = false;

    // Replaces every match of getPattern() with the result of doReplace(match).
    public String replace(CharSequence sourceString) throws ServiceException {
        Matcher m = getPattern().matcher(sourceString);
        StringBuffer sb = new StringBuffer(sourceString.length());
        matchFound = false;
        while (m.find()) {
            matchFound = true;
            String matchedText = m.group(0);
            String replacement = doReplace(matchedText);
            m.appendReplacement(sb, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(sb);
        return sb.toString();
    }

    protected abstract String doReplace(String textToReplace) throws ServiceException;

    public abstract Pattern getPattern() throws ServiceException;

    public boolean isMatchFound() {
        return matchFound;
    }
}

public class ForceIdocEvaluationPatternReplacer extends PatternReplacer {

    private ExecutionContext ctx;
    private Pattern idocPattern;

    public ForceIdocEvaluationPatternReplacer(ExecutionContext ctx) {
        this.ctx = ctx;
    }

    @Override
    public Pattern getPattern() throws ServiceException {
        // Build the pattern lazily from the configured list of Idoc functions.
        if (idocPattern == null) {
            List<String> functions = SharedObjects.getEnvValueAsList("forceidocevaluation.functionlist");
            idocPattern = IdocUtils.createIdocPattern(functions);
        }
        return idocPattern;
    }

    @Override
    protected String doReplace(String idocFunction) throws ServiceException {
        return IdocUtils.executeIdocFunction(ctx, idocFunction);
    }
}

public class IdocUtils {

    public static String executeIdocFunction(ExecutionContext ctx, String idocFunction) throws ServiceException {
        // The page merger evaluates the <$...$> form, so convert from [!--$...--] first.
        idocFunction = convertIdocStyle(idocFunction, IdocStyle.ANGULAR_BRACKETS);
        PageMerger activeMerger = (PageMerger) ctx.getCachedObject("PageMerger");
        try {
            String output = activeMerger.evaluateScript(idocFunction);
            return output;
        } catch (Exception e) {
            throw new ServiceException("cannot run the Idoc function " + idocFunction, e);
        }
    }

    public enum IdocStyle {
        ANGULAR_BRACKETS,
        SQUARE_BRACKETS
    }

    public static String convertIdocStyle(String idocFunction, IdocStyle destinationStyle) {
        String result = null;
        switch (destinationStyle) {
            case ANGULAR_BRACKETS:
                result = idocFunction.replace("[!--$", "<$").replace("--]", "$>");
                break;
            case SQUARE_BRACKETS:
                result = idocFunction.replace("<$", "[!--$").replace("$>", "--]");
                break;
        }
        return result;
    }

    // NOTE: the prefix, loop bounds and suffix below are reconstructed from context;
    // the original listing was truncated at these points.
    public static Pattern createIdocPattern(List<String> idocFunctions) throws ServiceException {
        if (idocFunctions.isEmpty()) {
            throw new ServiceException("list of Idoc functions to create a pattern for is empty");
        }
        StringBuffer patternBuffer = new StringBuffer();
        // pattern prefix
        patternBuffer.append("(\\[\\!--|<)\\$(");
        // OR-ed list of functions
        for (int i = 0; i < idocFunctions.size(); i++) {
            patternBuffer.append(idocFunctions.get(i));
            if (i < idocFunctions.size() - 1) {
                patternBuffer.append("|");
            }
        }
        // pattern suffix
        patternBuffer.append(")(.+?)(--\\]|\\$>)");
        String pattern = patternBuffer.toString();
        log.trace("Idoc functions pattern", pattern);
        return Pattern.compile(pattern);
    }
}

public class ServiceUtils {

    private static Workspace getSystemWorkspace() {
        Workspace workspace = null;
        Provider wsProvider = Providers.getProvider("SystemDatabase");
        if (null != wsProvider) {
            workspace = (Workspace) wsProvider.getProvider();
        }
        return workspace;
    }

    private static UserData getFullUserData(String userName, ExecutionContext cxt, Workspace ws) throws DataException, ServiceException {
        if (null == ws) {
            ws = getSystemWorkspace();
        }
        UserData userData = UserStorage.retrieveUserDatabaseProfileDataFull(userName, ws, null, cxt, true, true);
        ws.releaseConnection();
        return userData;
    }

    public static void executeService(DataBinder binder) throws DataException, ServiceException {
        // get a connection to the database
        Workspace workspace = getSystemWorkspace();
        // look for a value of IdcService
        String cmd = binder.getLocal("IdcService");
        if (null == cmd) {
            throw new DataException("!csIdcServiceMissing");
        }
        // get the service definition
        ServiceData serviceData = ServiceManager.getFullService(cmd);
        if (null == serviceData) {
            throw new DataException(LocaleUtils.encodeMessage("!csNoServiceDefined", null, cmd));
        }
        // create the object for this service
        Service service = ServiceManager.createService(serviceData.m_classID, workspace, null, binder, serviceData);
        String userName = "sysadmin";
        UserData fullUserData = getFullUserData(userName, service, workspace);
        service.setUserData(fullUserData);
        binder.m_environment.put("REMOTE_USER", userName);
        try {
            // init the service so that it does not return HTML
            service.setSendFlags(true, true);
            // create the ServiceHandlers and implementors
            service.initDelegatedObjects();
            // do a security check
            service.globalSecurityCheck();
            // prepare for the service
            service.preActions();
            // run the service
            service.doActions();
        } catch (ServiceException e) {
            // the exception is swallowed here; log or rethrow it in real code
        } finally {
            service.cleanUp(true);
            if (null != workspace) {
                workspace.releaseConnection();
            }
        }
    }
}
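The find-and-replace loop in PatternReplacer can be tried outside the content server with nothing but java.util.regex. The sketch below is a simplified stand-in, not the filter above: the class name, the evaluator callback and the regular expression (which only matches argument-less calls of the form [!--$name()--]) are assumptions made for illustration.

```java
import java.util.function.Function;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class TokenReplacerSketch {
    // Hypothetical pattern: matches [!--$name()--] and captures the function name.
    private static final Pattern TOKEN = Pattern.compile("\\[!--\\$(\\w+)\\(\\)--\\]");

    // Replaces each token with whatever the evaluator returns for the captured name.
    static String replaceAll(CharSequence source, Function<String, String> evaluator) {
        Matcher m = TOKEN.matcher(source);
        StringBuffer out = new StringBuffer(source.length());
        while (m.find()) {
            String replacement = evaluator.apply(m.group(1));
            // quoteReplacement keeps '$' and '\' in the output from being read as group references
            m.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(out);
        return out.toString();
    }
}
```

Quoting the replacement matters here because evaluated script output can easily contain `$`, which `appendReplacement` would otherwise interpret as a group reference.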

-

Multiple execution plans for a sql_id in AWR

Hi Experts,

There are multiple execution plans for the sql_id in AWR.

I have the following questions:

1. Which plan is the optimizer currently using?

2. How do I make sure the optimizer chooses a good plan?

SQL> select * from table(dbms_xplan.display_awr('fb0p0xv370vmb'));

PLAN_TABLE_OUTPUT
---------------------------------------------------------------------------------------------------------------
SQL_ID fb0p0xv370vmb
--------------------
Plan hash value: 417907468

---------------------------------------------------------------------------------------------------------------
| Id | Operation                         | Name           | Rows  | Bytes | TempSpc | Cost (%CPU) | Time     |
---------------------------------------------------------------------------------------------------------------
|  0 | SELECT STATEMENT                  |                |       |       |         | 63353 (100) |          |
|  1 | FOR UPDATE                        |                |       |       |         |             |          |
|  2 | SORT ORDER BY                     |                | 17133 | 2978K |   3136K | 63353   (1) | 00:14:47 |
|  3 | HASH JOIN RIGHT SEMI              |                | 17133 | 2978K |         | 62933   (1) | 00:14:42 |
|  4 | COLLECTION ITERATOR PICKLER FETCH |                |       |       |         |             |          |
|  5 | HASH JOIN RIGHT SEMI              |                | 68530 |   11M |         | 62897   (1) | 00:14:41 |
|  6 | VIEW                              | VW_NSO_1       |  5000 | 35000 |         | 33087   (1) | 00:07:44 |
|  7 | COUNT STOPKEY                     |                |       |       |         |             |          |
|  8 | VIEW                              |                |  127K |  868K |         | 33087   (1) | 00:07:44 |
|  9 | SORT GROUP BY STOPKEY             |                |  127K | 2233K |     46M | 33087   (1) | 00:07:44 |
| 10 | TABLE ACCESS FULL                 | ASYNCH_REQUEST | 1741K |   29M |         | 29795   (1) | 00:06:58 |
| 11 | TABLE ACCESS FULL                 | ASYNCH_REQUEST | 1741K |  280M |         | 29801   (1) | 00:06:58 |
---------------------------------------------------------------------------------------------------------------

SQL_ID fb0p0xv370vmb

--------------------

SELECT ASYNCH_REQUEST_ID, REQUEST_STATUS, REQUEST_TYPE, REQUEST_DATA, PRIORITY, SUBMIT_BY, SUBMIT_DATE.

Plan hash value: 2912273206

-----------------------------------------------------------------------------------------------------------------------
| Id | Operation                         | Name                   | Rows  | Bytes | TempSpc | Cost (%CPU) | Time     |
-----------------------------------------------------------------------------------------------------------------------
|  0 | SELECT STATEMENT                  |                        |       |       |         | 45078 (100) |          |
|  1 | FOR UPDATE                        |                        |       |       |         |             |          |
|  2 | SORT ORDER BY                     |                        |  1323 |  257K |         | 45078   (1) | 00:10:32 |
|  3 | TABLE ACCESS BY INDEX ROWID       | ASYNCH_REQUEST         |     1 |   190 |         |     3   (0) | 00:00:01 |
|  4 | NESTED LOOPS                      |                        |  1323 |  257K |         | 45077   (1) | 00:10:32 |
|  5 | MERGE JOIN CARTESIAN              |                        |  5000 | 45000 |         | 30069   (1) | 00:07:01 |
|  6 | SORT UNIQUE                       |                        |       |       |         |             |          |
|  7 | COLLECTION ITERATOR PICKLER FETCH |                        |       |       |         |             |          |
|  8 | BUFFER SORT                       |                        |  5000 | 35000 |         | 30034   (1) | 00:07:01 |
|  9 | VIEW                              | VW_NSO_1               |  5000 | 35000 |         | 30033   (1) | 00:07:01 |
| 10 | SORT UNIQUE                       |                        |  5000 | 35000 |         |             |          |
| 11 | COUNT STOPKEY                     |                        |       |       |         |             |          |
| 12 | VIEW                              |                        | 81330 |  555K |         | 30033   (1) | 00:07:01 |
| 13 | SORT GROUP BY STOPKEY             |                        | 81330 | 1429K |   2384K | 30033   (1) | 00:07:01 |
| 14 | TABLE ACCESS FULL                 | ASYNCH_REQUEST         | 86092 | 1513K |         | 29731   (1) | 00:06:57 |
| 15 | INDEX RANGE SCAN                  | ASYNCH_REQUEST_SUB_IDX |     1 |       |         |     1   (0) | 00:00:01 |
-----------------------------------------------------------------------------------------------------------------------

Plan hash value: 3618200564

-----------------------------------------------------------------------------------------------------------------------------
| Id | Operation                         | Name                         | Rows  | Bytes | TempSpc | Cost (%CPU) | Time     |
-----------------------------------------------------------------------------------------------------------------------------
|  0 | SELECT STATEMENT                  |                              |       |       |         | 59630 (100) |          |
|  1 | FOR UPDATE                        |                              |       |       |         |             |          |
|  2 | SORT ORDER BY                     |                              |  4474 |  777K |         | 59630   (1) | 00:13:55 |
|  3 | HASH JOIN RIGHT SEMI              |                              |  4474 |  777K |         | 59629   (1) | 00:13:55 |
|  4 | VIEW                              | VW_NSO_1                     |  5000 | 35000 |         | 30450   (1) | 00:07:07 |
|  5 | COUNT STOPKEY                     |                              |       |       |         |             |          |
|  6 | VIEW                              |                              | 79526 |  543K |         | 30450   (1) | 00:07:07 |
|  7 | SORT GROUP BY STOPKEY             |                              | 79526 | 1397K |   7824K | 30450   (1) | 00:07:07 |
|  8 | TABLE ACCESS FULL                 | ASYNCH_REQUEST               |  284K | 5003K |         | 29804   (1) | 00:06:58 |
|  9 | TABLE ACCESS BY INDEX ROWID       | ASYNCH_REQUEST               | 71156 |   11M |         | 29141   (1) | 00:06:48 |
| 10 | NESTED LOOPS                      |                              | 71156 |   11M |         | 29177   (1) | 00:06:49 |
| 11 | SORT UNIQUE                       |                              |       |       |         |             |          |
| 12 | COLLECTION ITERATOR PICKLER FETCH |                              |       |       |         |             |          |
| 13 | INDEX RANGE SCAN                  | ASYNCH_REQUEST_EFFECTIVE_IDX |  327K |       |         |   392   (1) | 00:00:06 |
-----------------------------------------------------------------------------------------------------------------------------

Thank you

-Raj

Published by: tt0008 on August 22, 2012 20:34

Hello,

(1) You can see which plan has been used recently by running this query:

select begin_interval_time, plan_hash_value
from dba_hist_sqlstat st, dba_hist_snapshot sn
where st.snap_id = sn.snap_id and sql_id = 'fb0p0xv370vmb'
order by begin_interval_time desc;

However, there is no guarantee that the latest plan will be chosen the next time you run this query. The plan is regenerated periodically (for example, when new statistics are gathered, or when the structure of a table referenced in the query is changed), and you may get any of the 4 plans, or even a new one, depending on many factors (statistics, bind variable values, optimizer settings, NLS settings, etc.).

(2) This question is too broad for the answer to fit into a thread; there are books written on the subject. The short answer is:

If you know which of the 4 plans is right for you, then you can use a stored outline to lock it in (it seems that you are not on 11g, so SQL Profiler is not an option for you).

Or you can try to find out why the optimizer generates different plans and address the underlying issue (the most common reason is bind peeking, but to say for sure we need to know more, starting with the text of your query).

Best regards,

Nikolai

-

Using journalized data in an interface with an aggregate function

Hello

I'm trying to use journalized data from a source table in one of my interfaces in ODI. The problem is that one of the mappings on the target columns involves an aggregate function (sum). When I run the interface, I get an error saying "not a GROUP BY expression". I checked the code and found that the columns jrn_subscriber, jrn_flag, and jrn_date are included in the select statement, but not in the group by clause (the group by clause contains only the remaining two columns of the target table).

Is there a way to get around this? Do I have to change the KM manually? If so, how would I go about doing it?

Also, I'm using the Oracle GoldenGate JKM (OGG Oracle to Oracle).

Thanks and really appreciate the help

Ajay

"ORA-00979" when using the ODI CDC (journalizing) feature with Knowledge Modules that include SQL aggregate functions [ID 424344.1]

Updated 11 March 2009, Type: PROBLEM, Status: MODERATED

In this Document

Symptoms

Cause

Solution

Alternatives

This document is made available to you through Oracle Support's Rapid Visibility (RaV) process and therefore has not been subject to an independent technical review.

Applies to:

Oracle Data Integrator - Version: 3.2.03.01

This problem can occur on any platform.

Symptoms

After successfully testing an ODI integration Interface that uses an aggregate function such as MIN, MAX, or SUM, it is necessary to implement Changed Data Capture operations using journalized tables.

However, when the integration Interface is executed to retrieve only journalized records, it fails at the Load Data step of the Loading Knowledge Module, and the following message appears in the ODI log:

ORA-00979: not a GROUP BY expression

Cause

Using both CDC (journalizing) and aggregate functions gives rise to complex problems.

Solution

Technically, there is a workaround for this problem (see below).

WARNING: Oracle engineers issue a severe warning that this type of setup may give results that are not what might be expected. This is related to how ODI journalizing is implemented, in the form of specific journalizing tables. In this case, the aggregate function operates only on the subset that is stored (referenced) in the journalizing table, and NOT on the complete Source table.

We recommend that you avoid this type of integration Interface setup.

Alternatives:

1. The problem is due to the JRN_* columns missing from the "group by" clause of the generated SQL.

The workaround is to duplicate the Loading Knowledge Module (LKM) and, in the clone, change the "Load Data" step by editing the 'Command on Source' tab and replacing the following statement:

<%=odiRef.getGrpBy()%>

with

<%=odiRef.getGrpBy()%>
<%if ((odiRef.getGrpBy().length() > 0) && (odiRef.getPop("HAS_JRN").equals("1"))) {%>
,JRN_FLAG, JRN_SUBSCRIBER, JRN_DATE
<%}%>

2. It is possible to develop two alternative solutions:

(a) Develop two separate and distinct integration Interfaces:

* The first integration Interface loads the data into a temporary Table; specify the aggregate functions to use in this initial integration Interface.

* The second integration Interface uses the temporary Table as its Source. Note that if you create the Table in the Interface, it is necessary to drag and drop the integration Interface into the Source panel.

(b) Define two connections to the database so that the integration Interface references two separate and distinct Data Servers as Sources (one for the journal, one for the other Tables). In this case, the aggregate function will be executed on the Source schema.
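To make the first workaround concrete, here is a minimal Python sketch of the logic the patched template expresses: when a GROUP BY clause exists and the interface reads journalized data, the JRN_* columns are appended to it. This is only an illustration, not ODI code. The function name group_by_with_jrn and the sample columns CUST_ID and REGION are hypothetical, and the separating comma is an assumption needed for the resulting SQL to be valid.

```python
# Illustration only: mimics the conditional in the patched KM template.
# group_by_with_jrn, CUST_ID and REGION are hypothetical names.
JRN_COLUMNS = ("JRN_FLAG", "JRN_SUBSCRIBER", "JRN_DATE")


def group_by_with_jrn(group_by: str, has_jrn: bool) -> str:
    """Append the JRN_* columns to a non-empty GROUP BY clause.

    group_by stands in for the text returned by odiRef.getGrpBy();
    has_jrn stands in for odiRef.getPop("HAS_JRN").equals("1").
    """
    if group_by and has_jrn:
        return group_by + ", " + ", ".join(JRN_COLUMNS)
    # No GROUP BY at all, or no journalizing: leave the clause untouched.
    return group_by


if __name__ == "__main__":
    print(group_by_with_jrn("GROUP BY CUST_ID, REGION", True))
    print(repr(group_by_with_jrn("", True)))
```

With the JRN_* columns present in both the select list and the GROUP BY clause, every non-aggregated selected column is grouped, which is the condition ORA-00979 complains about. The WARNING above still applies: the aggregate is computed only over the journalized subset.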

Related

Products

* Middleware > Business Intelligence > Oracle Data Integrator (ODI) > Oracle Data Integrator

Keywords

ODI; AGGREGATE; ORACLE DATA INTEGRATOR; KNOWLEDGE MODULES; CDC; SUNOPSIS

Errors

ORA-979

Please find above the content of the note.

You should be able to find it by searching for this ID in the Knowledge Base search.

Cheers,

Sachin

-

Confusion: related to asynchronous loading of the DataGrid, or to the custom DataGridColumn?

First, I am using BlazeDS, and I get back a collection of employees from my remote call. When I try to use a DataGridColumn implementation as described here http://www.switchonthecode.com/tutorials/nested-data-in-flex-datagrid-by-extending-datagridcolumn I get a null pointer exception in the call to itemToLabel(data:Object):String: 'data' is null. I do not know why. But even though I need to solve that problem, I'm curious why I don't even see the server-side method get hit.

For example, when I do NOT use the custom DataGridColumn, the code below works fine and I see the "getEmployees" call being logged:

Works:

<mx:Canvas xmlns:mx="http://www.adobe.com/2006/mxml"
    xmlns:comp="components.*"
    creationComplete="init()">

    <mx:Script>
        <![CDATA[
            import mx.collections.ArrayCollection;
            import mx.rpc.events.ResultEvent;

            [Bindable]
            private var employees:ArrayCollection;

            // Kick off the asynchronous remote call once the canvas is created.
            private function init():void {
                delegate.getEmployees();
            }

            // Result handler: the grid is bound to 'employees' and refreshes here.
            private function resultGetEmployeesSuccess(event:ResultEvent):void {
                employees = event.result as ArrayCollection;
            }
        ]]>
    </mx:Script>

    <mx:RemoteObject id="delegate" destination="flexDelegate" fault="faultHandler(event)">
        <mx:method name="getEmployees" result="resultGetEmployeesSuccess(event)"/>
    </mx:RemoteObject>

    <mx:DataGrid id="empsID" dataProvider="{employees}" verticalScrollPolicy="on"
        height="60%" itemClick="selectEmployee(event)">
        <mx:columns>
            <mx:DataGridColumn id="idCol" dataField="id" headerText="ID" draggable="false"/>
            <mx:DataGridColumn id="firstName" dataField="firstName" headerText="First" draggable="false"/>
            <mx:DataGridColumn id="lastName" dataField="lastName" headerText="Last" draggable="false"/>
            <mx:DataGridColumn id="age" dataField="age" headerText="Age" draggable="false"/>
        </mx:columns>
    </mx:DataGrid>
</mx:Canvas>

However, when I change the above to include

<comp:DataGridColumnNested dataField="department.name" headerText="Department"/>

I do NOT see the server call to "getEmployees" get hit, which has left me baffled. How can it try to render DataGridColumnNested before it has even finished loading the employees? Is it because of the asynchronous call to the remote object? If so, what is the best way to ensure that the grid does not even try to load until it 'really' has data?

Thank you

Simply put, the custom DataGridColumn is implemented incorrectly. The overridden itemToLabel method must handle "data == null" gracefully, as the base class does.

In addition, it is worth noting that the DataGridColumn in version 3.4 of the SDK supports nested properties, and its code seems to be more efficient than the solution you are using.
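The null guard described above can be sketched as follows. This is a language-neutral illustration written in Python, not the Flex implementation: the function name item_to_label and the dictionary-based sample rows are assumptions made for the example. The point is only that a nested lookup such as "department.name" should return an empty label, not fail, when the row or any intermediate object is missing.

```python
# Illustration only: a null-safe nested property lookup.
# item_to_label and the sample data are hypothetical, not Flex API.
def item_to_label(data, data_field):
    """Resolve a dotted path like 'department.name' against data.

    Returns '' when data is None or when any step along the path is
    missing, so the caller never dereferences a null value.
    """
    current = data
    for part in data_field.split("."):
        if current is None:
            return ""
        if isinstance(current, dict):
            current = current.get(part)
        else:
            current = getattr(current, part, None)
    return "" if current is None else str(current)


if __name__ == "__main__":
    row = {"id": 1, "department": {"name": "Sales"}}
    print(item_to_label(row, "department.name"))
    print(repr(item_to_label(None, "department.name")))
```

A grid can ask a column for a label before the asynchronous result has arrived, so the guard for a missing row matters even when every loaded employee has a department.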

-

Will the INSTR function use the index or not?

Hi all

I have a query like this:

Select * from Tab1 Where Instr(Tab1.Col1,'XX') >0 ;

There is a simple index on column Col1. If we run this query, will the index be used, or will a full table scan happen in this scenario?

Please give me an explanatory answer, because I have doubts.

Dhabas

Hello

You must use a function-based index if you want to avoid the full table scan. Check this:

SQL> create table tab1(col1 varchar(20))
  2  /

Table created.

SQL> insert into tab1 values ('XXAB')
  2  /

1 row created.

SQL> create index col1_idx on tab1(col1);

Index created.

SQL> explain plan for Select * from Tab1 Where Instr(Tab1.Col1,'XX') >0;

Explained.

SQL> set autotrace on
SQL> Select * from Tab1 Where Instr(Tab1.Col1,'XX') >0;

XXAB

Execution Plan
----------------------------------------------------------
   0      SELECT STATEMENT Optimizer=ALL_ROWS (Cost=5 Card=1 Bytes=12)
   1    0   TABLE ACCESS (FULL) OF 'TAB1' (TABLE) (Cost=5 Card=1 Bytes=12)

Statistics
----------------------------------------------------------
          4  recursive calls
          0  db block gets
         32  consistent gets
          0  physical reads
          0  redo size
        234  bytes sent via SQL*Net to client
        280  bytes received via SQL*Net from client
          2  SQL*Net roundtrips to/from client
          0  sorts (memory)
          0  sorts (disk)
          1  rows processed

SQL> create index col1_idx2 on tab1(Instr(Col1,'XX'));

Index created.

SQL> Select * from Tab1 Where Instr(Tab1.Col1,'XX') >0;

XXAB

Execution Plan
----------------------------------------------------------
   0      SELECT STATEMENT Optimizer=ALL_ROWS (Cost=2 Card=1 Bytes=12)
   1    0   TABLE ACCESS (BY INDEX ROWID) OF 'TAB1' (TABLE) (Cost=2 Card=1 Bytes=12)
   2    1     INDEX (RANGE SCAN) OF 'COL1_IDX2' (INDEX) (Cost=1 Card=1)

Statistics
----------------------------------------------------------
         28  recursive calls
          0  db block gets
         22  consistent gets
          0  physical reads
          0  redo size
        234  bytes sent via SQL*Net to client
        280  bytes received via SQL*Net from client
          2  SQL*Net roundtrips to/from client
          0  sorts (memory)
          0  sorts (disk)
          1  rows processed

SQL>

Thank you

AJ
