Script for generating data

Hello

With the GET_DDL function of the DBMS_METADATA package, we can get the script that creates a table.

Is it possible to get a script that regenerates the same data?

Thank you.

John Stegeman wrote:

SQL Developer can create a script of INSERT statements from the results of a query. Just run your query, right-click in the results grid and choose 'Export'. From there you can choose the 'insert' format, and you'll get a script of INSERT statements containing your data.

I just do this in SQL Developer:

select /*insert*/ * from emp;

or

select /*csv*/ * from emp;

There are many more formats.

Then press F5 (Run Script). This feature is very convenient!
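To see what such an "insert" export amounts to, here is a minimal Python sketch that turns query rows into INSERT statements with literal values. It is not SQL Developer's implementation; the helper names `sql_literal` and `insert_statements` are hypothetical, the sample rows are made up, and only NULL, numbers and strings are handled (dates would need TO_DATE literals).

```python
from typing import Any, Iterable, List, Sequence


def sql_literal(value: Any) -> str:
    """Render a Python value as an SQL literal (NULL, number, or quoted string)."""
    if value is None:
        return "NULL"
    if isinstance(value, (int, float)) and not isinstance(value, bool):
        return repr(value)
    # Double any embedded single quotes, as SQL string literals require.
    return "'" + str(value).replace("'", "''") + "'"


def insert_statements(table: str, columns: Sequence[str],
                      rows: Iterable[Sequence[Any]]) -> List[str]:
    """Build one INSERT statement per row, similar in spirit to an 'insert' export."""
    col_list = ", ".join(columns)
    return [
        f"INSERT INTO {table} ({col_list}) VALUES ({', '.join(sql_literal(v) for v in row)});"
        for row in rows
    ]


# Hypothetical rows, as they might come back from "select * from emp".
for stmt in insert_statements("emp", ["empno", "ename", "comm"],
                              [(7369, "SMITH", None), (7499, "O'NEIL", 300)]):
    print(stmt)
```

Note that every generated statement carries its values as literals, which matters for very large exports.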


Similar Questions

-

Help with a script for the date field

Is it possible, when the value of a date field does not match the display pattern date{EEEE, MMMM D, YYYY},

to

show the message "Please select a date from the calendar."

and set focus back on the date field?

Thank you

I would have used the exit event, but if you change this.rawValue to xfa.event.change, you can try the change event instead, which means users get the message earlier.

Either way, users will still be able to cut and paste a date as long as it is in the correct format.

Bruce
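The check itself (does the entered text match the display pattern?) can be sketched in Python. This does not use the XFA/Acrobat JavaScript API discussed above; it only illustrates the validation logic, assuming English month and day names and translating the pattern date{EEEE, MMMM D, YYYY} to the `strptime` format "%A, %B %d, %Y". The names `check_date`, `DISPLAY_FORMAT` and `MESSAGE` are hypothetical.

```python
from datetime import datetime
from typing import Optional

# strptime equivalent of date{EEEE, MMMM D, YYYY}, e.g. "Friday, May 5, 2023".
# Assumes an English (C) locale for day and month names.
DISPLAY_FORMAT = "%A, %B %d, %Y"
MESSAGE = "Please select a date from the calendar."


def check_date(text: str) -> Optional[str]:
    """Return None if text matches the display pattern, else the warning message."""
    try:
        datetime.strptime(text.strip(), DISPLAY_FORMAT)
    except ValueError:
        return MESSAGE
    return None
```

In the form, the equivalent of a non-None result would be showing the message and moving focus back to the field.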

-

PowerCLI script for DatastoreCluster, datastores and size info, Datacenter, Clusters

Hello - I am looking to pull the DatastoreClusters and then list the datastores along with their size (total capacity, used space, free space, provisioned space, uncommitted space) and the total number of virtual machines on each datastore. I would also like to know which datacenter and cluster they are in. Is this possible? I might also want to limit the output to datastores with 13 percent free space or less.

Thank you

LORRI

Sure, try it like this:

Get-Datastore |
Select @{N='Datacenter';E={$_.Datacenter.Name}},
  @{N='DSC';E={Get-DatastoreCluster -Datastore $_ | Select -ExpandProperty Name}},
  Name,CapacityGB,@{N='FreespaceGB';E={[math]::Round($_.FreespaceGB,2)}},
  @{N='ProvisionedSpaceGB';E={
    [math]::Round(($_.ExtensionData.Summary.Capacity - $_.ExtensionData.Summary.FreeSpace + $_.ExtensionData.Summary.Uncommitted)/1GB,2)}},
  @{N='UnCommittedGB';E={[math]::Round($_.ExtensionData.Summary.Uncommitted/1GB,2)}},
  @{N='VM';E={$_.ExtensionData.VM.Count}} |
Export-Csv report.csv -NoTypeInformation -UseCulture
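The size arithmetic in the script, plus the "13 percent free or less" filter the question asked for, can be sketched in Python. The `Datastore` class is a hypothetical stand-in whose byte fields mirror ExtensionData.Summary.Capacity, FreeSpace and Uncommitted; in PowerCLI itself the filter would be a Where-Object step before the Select.

```python
from dataclasses import dataclass
from typing import List

GB = 1024 ** 3  # PowerShell's 1GB constant is 1024^3 bytes


@dataclass
class Datastore:
    name: str
    capacity: int     # bytes, like ExtensionData.Summary.Capacity
    free_space: int   # bytes, like ExtensionData.Summary.FreeSpace
    uncommitted: int  # bytes, like ExtensionData.Summary.Uncommitted


def provisioned_gb(ds: Datastore) -> float:
    """Same formula as the script: capacity - free + uncommitted, in GB."""
    return round((ds.capacity - ds.free_space + ds.uncommitted) / GB, 2)


def low_free_space(datastores: List[Datastore], max_free_pct: float = 13.0) -> List[str]:
    """Names of datastores whose free space is at or below max_free_pct percent."""
    return [ds.name for ds in datastores
            if ds.capacity and 100.0 * ds.free_space / ds.capacity <= max_free_pct]
```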

-

Can anyone share a sample Data Pump script with me?

I just want to write a sample script to take regular Data Pump backups...

Thank you

Andrew.

Please see if this is useful:

http://matthiashoys.WordPress.com/2012/05/03/example-data-pump-export/

K.
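As a starting point for a scheduled backup, here is a minimal Python sketch that builds an expdp command line with date-stamped dump and log file names. DIRECTORY, DUMPFILE, LOGFILE and SCHEMAS are standard expdp parameters and DATA_PUMP_DIR is Oracle's default directory object; the function name, the "system" user and the SCOTT schema are assumptions for illustration, and how credentials are supplied (prompt, wallet, OS authentication) is left to you.

```python
from datetime import date
from typing import List, Optional


def expdp_command(user: str, schema: str, directory: str = "DATA_PUMP_DIR",
                  day: Optional[date] = None) -> List[str]:
    """Build an expdp argument list with date-stamped dump and log file names."""
    stamp = (day or date.today()).strftime("%Y%m%d")
    base = f"{schema.lower()}_{stamp}"
    return [
        "expdp", user,  # connect string; credential handling is an assumption
        f"DIRECTORY={directory}",
        f"DUMPFILE={base}.dmp",
        f"LOGFILE={base}.log",
        f"SCHEMAS={schema}",
    ]


print(" ".join(expdp_command("system", "SCOTT")))
```

To actually run it from cron or Task Scheduler, pass the list to `subprocess.run(cmd, check=True)`, which raises if expdp exits with an error.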

-

How to generate INSERT scripts for the data in tables present in a

How do I generate INSERT scripts for the data of all tables in an entire schema from SQL*Plus,

without TOAD? Please help me!

905310 wrote:

How do I generate INSERT scripts for the data of all tables in an entire schema from SQL*Plus, without TOAD? Please help me!

The correct method is to use Data Pump, or export/import, or to unload the data in CSV format and load it with SQL*Loader.

Generating INSERT statements with literals is a very bad choice, because no bind variables are used. The load will be quite slow because many more CPU cycles are spent on hard parsing. The shared pool will become fragmented, which can cause memory errors for other applications when they try to parse SQL into cursors.
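The difference between literal SQL and bind variables can be shown with a small Python sketch. It uses the standard-library `sqlite3` module only because it needs no server; SQLite has no shared pool, so this illustrates the principle (one statement text per row versus one reusable statement) rather than Oracle's parsing costs. The table and rows are made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE emp (empno INTEGER, ename TEXT)")
rows = [(7369, "SMITH"), (7499, "ALLEN"), (7521, "WARD")]

# Literal style: every row yields a different SQL text, so a database with a
# shared statement cache (like Oracle's shared pool) must hard parse each one.
literal_sql = [f"INSERT INTO emp VALUES ({n}, '{e}')" for n, e in rows]

# Bind-variable style: one statement text, executed once per row.
conn.executemany("INSERT INTO emp VALUES (?, ?)", rows)
conn.commit()

distinct_literal_texts = len(set(literal_sql))
count = conn.execute("SELECT COUNT(*) FROM emp").fetchone()[0]
print(distinct_literal_texts, count)
```

With N rows the literal approach produces N distinct statements, while the bind-variable approach keeps a single statement text regardless of N.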

-

MySQL to Oracle offline migration: scripts incorrectly generated for some tables

Hello

I used SQL Developer 3.0.4 to migrate MySQL databases to Oracle.

I get the following errors when running the loader script (oracle_ctl.sh):

SQL*Loader-2026: the load was aborted because SQL Loader cannot continue.

SQL*Loader-500: Unable to open file (data/framework_perdadepacotes_diari.txt)

The "framework_perdadepacotes_horario.txt" dump file is generated by mysqldump with the original MySQL table name and a ".txt" suffix.

Excerpt from unload_script.sh:

mysqldump -u $username -p$password -h $server -T data --socket=/u01/mysqlmulti/mysql2/mysqld2.sock --port=3312 --fields-terminated-by="<EOFD>" --fields-escaped-by="" --lines-terminated-by="<EORD>" "dxdb" "framework_perdadepacotes_horario"

But the SQL*Loader control file refers to the data file by the new Oracle table name (in this case, the name truncated to 30 characters):

--

load data
infile 'data/framework_perdadepacotes_diari.txt'
"str '<EORD>'"
into table dxdb.framework_perdadepacotes_diari

Another instance of the same problem, but in this case the table name starts with "_":

Excerpt from unload_script.sh:

mysqldump -h $server -u $username -p$password -T data --fields-terminated-by="<EOFD>" --fields-escaped-by="" --lines-terminated-by="<EORD>" "netdb" "_Clientes"

File name generated by mysqldump: "_Clientes.txt".

CTL file:

load data
infile 'data/CLIENTES_1.txt'
"str '<EORD>'"
into table CLIENTES_1

Is this a bug, or are we supposed to manually fix the scripts for these cases?

Thank you

Edited by: user12099785 on 11/10/2011 06:11

Currently, you have to fix these manually, because no bug had been logged for this issue. I have now filed a bug, and if all goes well it will be fixed in one of the upcoming releases.
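Until then, the manual fix can be scripted by renaming the mysqldump output so that it matches the INFILE names in the control files. This Python sketch is a hypothetical workaround, not part of SQL Developer: the table-name mapping must be read from your own .ctl files (the "_Clientes" to "CLIENTES_1" case cannot be derived by a rule), and `truncated_name` only covers the plain 30-character truncation case.

```python
import os
from typing import Dict

# Oracle identifiers were limited to 30 bytes before Oracle 12.2.
ORACLE_MAX_IDENTIFIER = 30


def truncated_name(mysql_table: str) -> str:
    """Name a control file expects when the table name was only truncated."""
    return mysql_table[:ORACLE_MAX_IDENTIFIER]


def rename_dump_files(data_dir: str, mapping: Dict[str, str]) -> None:
    """Rename <mysql_table>.txt to <oracle_table>.txt so INFILE paths match."""
    for mysql_table, oracle_table in mapping.items():
        src = os.path.join(data_dir, mysql_table + ".txt")
        dst = os.path.join(data_dir, oracle_table + ".txt")
        if os.path.exists(src):  # skip tables that produced no dump file
            os.rename(src, dst)
```

Run it on the data directory after the unload script and before oracle_ctl.sh.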

-

Scripts for cleaning up Perf data

Hello

We currently use BB 4.4 with the new PERF features enabled. Does anyone have scripts for cleaning up or truncating the collected Perf data? 4.5 is supposed to have this function built in. We run the BB server on Unix.

The Perf data is under $BBHOME/bbvar/perf.

The files are in plain text with lines like this:

Here is a not-so-elegant way to truncate your files in Perl. You will need to set $startTime as a Unix timestamp.
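The core of the Perl approach, keeping only the lines whose fourth field is a timestamp at or after $startTime and rewriting each file in place, can be sketched in Python. Like the awk filter in the Perl script, it assumes the fourth whitespace-separated field is the Unix timestamp; as a deliberate difference, lines that do not fit that layout are kept rather than dropped. The function names are hypothetical.

```python
import os
from typing import List


def keep_recent(lines: List[str], start_time: float) -> List[str]:
    """Keep lines whose 4th field (a Unix timestamp) is >= start_time."""
    kept = []
    for line in lines:
        fields = line.split()
        try:
            if float(fields[3]) >= start_time:
                kept.append(line)
        except (IndexError, ValueError):
            kept.append(line)  # unexpected layout: keep rather than lose data
    return kept


def purge_dir(perf_dir: str, start_time: float) -> None:
    """Rewrite every file under perf_dir in place, dropping older lines."""
    for root, _dirs, files in os.walk(perf_dir):
        for name in files:
            path = os.path.join(root, name)
            with open(path) as fh:
                lines = fh.readlines()
            with open(path, "w") as fh:
                fh.writelines(keep_recent(lines, start_time))
```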

#Purge $BBPERF
@files = `grep -Rl . $BBPERF/*`;
foreach $file (@files) {
    chomp($file);
    print "$file\n";
    @data = `cat $file | awk '{if (\$4 >= $startTime) {print \$0}}'`;
    if (!open(FILE, ">$file")) {
        print "cannot open $file: $!\n";
        print LOGFILE "cannot open $file: $!\n";
        next;
    }
    foreach $line (@data) { print FILE $line; }
    close(FILE);
}

-

How to write a script to copy the date to the clipboard

Hi experts,

How do I write a script to copy the date to the clipboard?

The date format should be like this:

05-May

Regards,

John

Thanks guys, thanks Sanon

In the end I used a .bat file,

like this:

@echo off
for /f "delims=" %%a in ('wmic OS Get localdatetime ^| find "."') do set "dt=%%a"
set "YYYY=%dt:~0,4%"
set "MM=%dt:~4,2%"
if %MM%==01 set MM=January
if %MM%==02 set MM=February
if %MM%==03 set MM=March
if %MM%==04 set MM=April
if %MM%==05 set MM=May
if %MM%==06 set MM=June
if %MM%==07 set MM=July
if %MM%==08 set MM=August
if %MM%==09 set MM=September
if %MM%==10 set MM=October
if %MM%==11 set MM=November
if %MM%==12 set MM=December
set "DD=%dt:~6,2%"
set "HH=%dt:~8,2%"
set "Min=%dt:~10,2%"
set "s=%dt:~12,2%"
echo %DD%-%MM% %HH%:%Min% | clip

It works!

Regards,

John
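The substring offsets the batch file uses on the WMIC localdatetime value (yyyymmddHHMMSS.ffffff+UUU) can be checked with a small Python sketch. The function name `clipboard_text` is hypothetical, the month names are an explicit list so the result does not depend on the locale, and the "DD-Month HH:MM" output mirrors the echo line above.

```python
MONTHS = ["January", "February", "March", "April", "May", "June", "July",
          "August", "September", "October", "November", "December"]


def clipboard_text(localdatetime: str) -> str:
    """Format a WMIC localdatetime value as 'DD-Month HH:MM'."""
    mm = int(localdatetime[4:6])    # same as %dt:~4,2%
    dd = localdatetime[6:8]         # same as %dt:~6,2%
    hh = localdatetime[8:10]        # same as %dt:~8,2%
    mins = localdatetime[10:12]     # same as %dt:~10,2%
    return f"{dd}-{MONTHS[mm - 1]} {hh}:{mins}"


print(clipboard_text("20230505143015.123000+060"))
```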

-

Facing an issue in a calc script while moving data from the current year to the previous year

Hi all

I need your help with my calc script.

I am writing a calc script to move backlog data from FY15 Q1 to FY14 Q4. Here is the problem: if I run this script within the same year, it works fine, but when I try to load between two different years, it does not work. Could someone please help me? Where am I missing the logic? Thanks in advance for the help.

Here's the script:

ESS_LOCALE English_UnitedStates.Latin1@Binary
SET AGGMISSG ON;
FIX (@List("Real GL", "Real ML", "ACT", &ActualLoadYrBklg))
"Dec/(Inc) in Backlog" (
IF (@IsMbr("Q4"))
   "Dec/(Inc) in Backlog (Non-di)" = -1 * (@Prior("Q1"->"Beginning Backlog", -1, @Relative("Years", 0)) - "Beginning Backlog (Non-di)");
   "COGS Change in Backlog" = -1 * (@Prior("Q1"->"Backlog Cost", -1, @Relative("Years", 0)) - "Backlog Cost");
ELSEIF (@IsMbr("Q1":"Q3"))
   "Dec/(Inc) in Backlog" = -1 * (@Prior("Beginning Backlog", -1, @Relative("YearTotal", 0)) - "Beginning Backlog (Non-di)");
   "COGS Change in Backlog" = -1 * (@Prior("Backlog Cost", -1, @Relative("YearTotal", 0)) - "Backlog Cost");
ENDIF;
)
ENDFIX

SM.

My first question: what is the order of the members in your Years dimension, and are quarters the lowest level of your Period dimension? Second, which dimensions are sparse and which are dense (can you tell me which members in the calc are in dense dimensions and which are in sparse ones)?

Third, you mention the specific years FY15 and FY14. I assume &ActualLoadYrBklg is FY15. If that is correct, I would add a substitution variable &PriorYrBklg containing FY14 (if I am right, you can then modify the variables). For clarity, I am going to hardcode the values in the calc. This also assumes Years is sparse.

You can try this:

FIX ("Real GL", "Real ML", "ACT", "FY15") /* you don't need @List */
"Dec/(Inc) in Backlog" (
IF (@IsMbr("Q4"))
   "Dec/(Inc) in Backlog (Non-di)"->"FY14" = -1 * ("Q1"->"Beginning Backlog" - "Beginning Backlog (Non-di)"->"FY14");
   "COGS Change in Backlog"->"FY14" = -1 * ("Q1"->"Backlog Cost" - "Backlog Cost"->"FY14");
ELSEIF (@IsMbr("Q1":"Q3"))
   "Dec/(Inc) in Backlog"->"FY14" = -1 * ("Beginning Backlog"->"FY14" - "Beginning Backlog (Non-di)");
   "COGS Change in Backlog"->"FY14" = -1 * ("Backlog Cost" - "Backlog Cost"->"FY14");
ENDIF;
)
ENDFIX

The hardcoded years can then be replaced by the substitution variables.
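The reason the cross-year case needs an explicit "FY14" reference, as I understand @PRIOR, is that stepping back over a range such as @Relative("YearTotal", 0) only walks periods within the Period dimension, so Q1 has no prior quarter there; the year change lives in a separate dimension. This Python sketch is purely illustrative: `prior_period` is a hypothetical helper showing the lookup a cross-year "previous quarter" needs, assuming year labels of the form "FY15".

```python
from typing import Tuple

QUARTERS = ["Q1", "Q2", "Q3", "Q4"]
Period = Tuple[str, str]  # (year, quarter), e.g. ("FY15", "Q1")


def prior_period(year: str, quarter: str) -> Period:
    """Step back one quarter, crossing into the previous fiscal year from Q1."""
    i = QUARTERS.index(quarter)
    if i > 0:
        return year, QUARTERS[i - 1]
    prev_year = f"FY{int(year[2:]) - 1:02d}"  # assumes labels like "FY15"
    return prev_year, "Q4"
```

Within a year the step stays in the same year (the @Relative("YearTotal", 0) case); only Q1 has to reach into the other year, which is what the "Q1" and ->"FY14" references in the calc do.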

-

2651A: converting TSP scripts for LabVIEW

Hello

I have a problem converting any TSP script that contains functions and for/end loops. I'm new to TSP and to trigger-model scripts. I used the Test Script Builder (TSB) tool and can run any TSP script and generate raw data, but I can't seem to convert most of the code into LabVIEW VISA commands or into the LV TSP loader and run it to generate data. I can't find any tutorials or examples on how to do it.

For example, take KE2651A_Fast_ADC_Usage.tsp (pulse); I'll focus only on the function CapturePulseV(pulseLevel, pulseWidth, pulseLimit, numPulses). I have seen a few LV examples that start with loadscript myscript and close with endscript. I tried many different approaches and kept getting errors, in particular with the print function: I am not able to get data through LV by reading the buffer inside the instrument. With some approaches I got no errors but no data; with others I got error -285.

Here is the part of the TSP pulse code that works in TSB (I'm not including loadscript and endscript). What is the RIGHT way to modify the code for LabVIEW, run it, and obtain data? Thank you:

function CapturePulseV(pulseLevel, pulseWidth, pulseLimit, numPulses)
    if (numPulses == nil) then numPulses = 1 end

    -- Configure the SMU
    reset()
    smua.reset()
    smua.source.func = smua.OUTPUT_DCVOLTS
    smua.sense = smua.SENSE_REMOTE
    smua.source.rangev = pulseLevel
    smua.source.levelv = 0 -- The bias level
    smua.source.limiti = 5 -- The DC Limit
    smua.measure.autozero = smua.AUTOZERO_ONCE
    -- Use a measure range that is as large as the biggest
    -- possible pulse
    smua.measure.rangei = pulseLimit
    smua.measure.rangev = pulseLevel
    -- Select the fast ADC for measurements
    smua.measure.adc = smua.ADC_FAST
    -- Set the time between measurements. 1us is the smallest
    smua.measure.interval = 1e-6
    -- Set the measure count to be 1.25 times the width of the pulse
    -- to ensure we capture the entire pulse plus falling edge.
    smua.measure.count = (pulseWidth / smua.measure.interval) * 1.25

    -- Prepare the reading buffers
    smua.nvbuffer1.clear()
    smua.nvbuffer1.collecttimestamps = 1
    smua.nvbuffer1.collectsourcevalues = 0
    smua.nvbuffer2.clear()
    smua.nvbuffer2.collecttimestamps = 1
    smua.nvbuffer2.collectsourcevalues = 0
    -- Can't use source values with async measurements

    -- Configure the Pulsed Sweep setup
    -----------------------------------
    -- Timer 1 controls the pulse period
    trigger.timer[1].count = numPulses - 1
    -- 1% Duty Cycle
    trigger.timer[1].delay = pulseWidth / 0.01
    trigger.timer[1].passthrough = true
    trigger.timer[1].stimulus = smua.trigger.ARMED_EVENT_ID
    -- Timer 2 controls the pulse width
    trigger.timer[2].count = 1
    trigger.timer[2].delay = pulseWidth - 3e-6
    trigger.timer[2].passthrough = false
    trigger.timer[2].stimulus = smua.trigger.SOURCE_COMPLETE_EVENT_ID

    -- Configure SMU Trigger Model for Sweep/Pulse Output
    -----------------------------------------------------
    -- Pulses will all be the same level so set start and stop to
    -- the same value and the number of points in the sweep to 2
    smua.trigger.source.linearv(pulseLevel, pulseLevel, 2)
    smua.trigger.source.limiti = pulseLimit
    smua.trigger.measure.action = smua.ASYNC
    -- We want to start the measurements before the source action takes
    -- place so we must configure the ADC to operate asynchronously of
    -- the rest of the SMU trigger model actions
    -- Measure I and V during the pulse
    smua.trigger.measure.iv(smua.nvbuffer1, smua.nvbuffer2)
    -- Return the output to the bias level at the end of the pulse/sweep
    smua.trigger.endpulse.action = smua.SOURCE_IDLE
    smua.trigger.endsweep.action = smua.SOURCE_IDLE
    smua.trigger.count = numPulses
    smua.trigger.arm.stimulus = 0
    smua.trigger.source.stimulus = trigger.timer[1].EVENT_ID
    smua.trigger.measure.stimulus = trigger.timer[1].EVENT_ID
    smua.trigger.endpulse.stimulus = trigger.timer[2].EVENT_ID
    smua.trigger.source.action = smua.ENABLE

    smua.source.output = 1
    smua.trigger.initiate()
    waitcomplete()
    smua.source.output = 0
    PrintPulseData()
end

function PrintPulseData()
    print("Timestamp\tVoltage\tCurrent")
    for i=1, smua.nvbuffer1.n do
        print(string.format("%g\t%g\t%g", smua.nvbuffer1.timestamps[i], smua.nvbuffer2[i], smua.nvbuffer1[i]))
    end
end

I finally solved it myself! I first created the wrapper according to the documentation, but the problem was with the script functions. I solved it by sending the function with separate VISA writes, and THEN retrieving the data from the instrument directly with a VISA buffer read. I made a TSP_Function Script Loader that lets you simply copy/paste TSP code (any *.tsp) from the TSB program or embed it in this kind of function. Loader.vi then calls name(parameters), as defined by the pasted script, and returns the RAW data directly as an array of strings that can be split up or restructured into whatever you want, for graphs, etc.

That's all I really needed: I can run TSP code from LV and easily get the data back via the defined function. Now this Loader.vi behaves the same way as the Keithley-made TSB program I use.

Here is the TSP_Function Script Loader.vi (LV 2011 or later).
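The same write-then-read idea can be sketched outside LabVIEW in Python. This is not the poster's Loader.vi: it assumes a VISA-style session with `write()`/`read()` (for example `pyvisa.ResourceManager().open_resource(address)`, where the address is yours), sends the script between loadscript and endscript, runs it with `name.run()` so its functions get defined, then calls the function and reads printed lines until a sentinel that is printed right after the call. The helper names and the "DONE" sentinel are hypothetical, and `FakeInstrument` exists only to demonstrate the flow without hardware.

```python
from typing import Iterable, List, Protocol


class Instrument(Protocol):
    """Anything with VISA-style write()/read(), e.g. a pyvisa resource."""

    def write(self, message: str) -> object: ...

    def read(self) -> str: ...


def load_tsp_script(inst: Instrument, name: str, tsp_lines: Iterable[str]) -> None:
    """Send TSP code between loadscript/endscript, then run it once so the
    functions it defines exist on the instrument."""
    inst.write(f"loadscript {name}")
    for line in tsp_lines:
        inst.write(line)
    inst.write("endscript")
    inst.write(f"{name}.run()")


def call_and_collect(inst: Instrument, call: str, sentinel: str = "DONE") -> List[List[str]]:
    """Call a TSP function that print()s tab-separated rows, then read rows
    until the sentinel line printed right after the call."""
    inst.write(call)
    inst.write(f'print("{sentinel}")')
    rows: List[List[str]] = []
    while True:
        line = inst.read().strip()
        if line == sentinel:
            return rows
        rows.append(line.split("\t"))


class FakeInstrument:
    """Stand-in for a real VISA session, used only to demonstrate the flow."""

    def __init__(self, replies: Iterable[str]) -> None:
        self.sent: List[str] = []
        self.replies = list(replies)

    def write(self, message: str) -> None:
        self.sent.append(message)

    def read(self) -> str:
        return self.replies.pop(0)
```

Reading until a sentinel avoids having to know in advance how many rows PrintPulseData() will print.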

-

I can't find the dbmshptab.sql script

I would like to use the hierarchical profiler. To use it, I need to run the script below. However, I couldn't find it anywhere. I searched for the DBMS_HPROF package object, and it does exist in the database. Do you have an idea how I can run this script? Since it is not on my computer, can I download it anywhere?

Note: I am using Windows 7

@$ORACLE_HOME/rdbms/admin/dbmshptab.sql

Thanks for your help.

select * from all_objects where object_name = 'DBMS_HPROF';

SYS DBMS_HPROF 10581 PACKAGE BODY 01/11/2007 01/11/2007 2007-11-01:21:00:04 VALID N
SYS DBMS_HPROF 7480 PACKAGE 01/11/2007 01/11/2007 2007-11-01:20:55:24 VALID N
PUBLIC DBMS_HPROF 7481 SYNONYM 01/11/2007 01/11/2007 2007-11-01:20:55:24 VALID N

select * from v$version;

Oracle Database 11g Enterprise Edition Release 11.1.0.6.0 - 64bit Production
PL/SQL Release 11.1.0.6.0 - Production
CORE 11.1.0.6.0 Production
TNS for 64-bit Windows: Version 11.1.0.6.0 - Production
NLSRTL Version 11.1.0.6.0 - Production

Here is the content of dbmshptab.sql:

Rem
Rem $Header: dbmshptab.sql 30-jul-2007.13:07:41 sylin Exp $
Rem
Rem dbmshptab.sql
Rem
Rem Copyright (c) 2005, 2007, Oracle. All rights reserved.
Rem
Rem    NAME
Rem      dbmshptab.sql - dbms hierarchical profiler table creation
Rem
Rem    DESCRIPTION
Rem      Create tables for the dbms hierarchical profiler
Rem
Rem    NOTES
Rem      The following tables are required to collect data:
Rem        dbmshp_runs
Rem          information on hierarchical profiler runs
Rem
Rem        dbmshp_function_info -
Rem          information on each function profiled
Rem
Rem        dbmshp_parent_child_info -
Rem          parent-child level profiler information
Rem
Rem      The dbmshp_runnumber sequence is used for generating unique
Rem      run numbers.
Rem
Rem      The tables and sequence can be created in the schema for each user
Rem      who wants to gather profiler data. Alternately these tables can be
Rem      created in a central schema. In the latter case the user creating
Rem      these objects is responsible for granting appropriate privileges
Rem      (insert,update on the tables and select on the sequence) to all
Rem      users who want to store data in the tables. Appropriate synonyms
Rem      must also be created so the tables are visible from other user
Rem      schemas.
Rem
Rem      THIS SCRIPT DELETES ALL EXISTING DATA!
Rem
Rem    MODIFIED   (MM/DD/YY)
Rem    sylin       07/30/07 - Modify foreign key constraints with on delete
Rem                           cascade clause
Rem    kmuthukk    06/13/06 - fix comments
Rem    sylin       03/15/05 - Created
Rem

drop table dbmshp_runs cascade constraints;
drop table dbmshp_function_info cascade constraints;
drop table dbmshp_parent_child_info cascade constraints;
drop sequence dbmshp_runnumber;

create table dbmshp_runs
(
  runid              number primary key,  -- unique run identifier,
  run_timestamp      timestamp,
  total_elapsed_time integer,
  run_comment        varchar2(2047)       -- user provided comment for this run
);

comment on table dbmshp_runs is
  'Run-specific information for the hierarchical profiler';

create table dbmshp_function_info
(
  runid                  number references dbmshp_runs on delete cascade,
  symbolid               number,               -- unique internally generated
                                               -- symbol id for a run
  owner                  varchar2(32),         -- user who started run
  module                 varchar2(32),         -- module name
  type                   varchar2(32),         -- module type
  function               varchar2(4000),       -- function name
  line#                  number,               -- line number where function
                                               -- defined in the module.
  hash                   raw(32) DEFAULT NULL, -- hash code of the method.
  -- name space/language info (such as PL/SQL, SQL)
  namespace              varchar2(32) DEFAULT NULL,
  -- total elapsed time in this symbol (including descendats)
  subtree_elapsed_time   integer DEFAULT NULL,
  -- self elapsed time in this symbol (not including descendants)
  function_elapsed_time  integer DEFAULT NULL,
  -- number of total calls to this symbol
  calls                  integer DEFAULT NULL,
  --
  primary key (runid, symbolid)
);

comment on table dbmshp_function_info is
  'Information about each function in a run';

create table dbmshp_parent_child_info
(
  runid                  number,      -- unique (generated) run identifier
  parentsymid            number,      -- unique parent symbol id for a run
  childsymid             number,      -- unique child symbol id for a run
  -- total elapsed time in this symbol (including descendats)
  subtree_elapsed_time   integer DEFAULT NULL,
  -- self elapsed time in this symbol (not including descendants)
  function_elapsed_time  integer DEFAULT NULL,
  -- number of calls from the parent
  calls                  integer DEFAULT NULL,
  --
  foreign key (runid, childsymid)
    references dbmshp_function_info(runid, symbolid) on delete cascade,
  foreign key (runid, parentsymid)
    references dbmshp_function_info(runid, symbolid) on delete cascade
);

comment on table dbmshp_parent_child_info is
  'Parent-child information from a profiler runs';

create sequence dbmshp_runnumber start with 1 nocache;

See you soon,

Manik.

-

Migration from MySQL to Oracle: data files not generated

Hello

I have been using the SQL Developer Migration Assistant to move data from a MySQL 5 database to an Oracle 11g server.

I have used it successfully a couple of times and it all worked.

However, I currently have a problem where no offline data file is generated. The control files and all the other scripts are generated... just no data file.

It worked before, so I'm a bit puzzled as to why it no longer works.

I looked at the migration logs and no errors are shown; the data move is marked as successful.

I tried deleting and recreating the migration repository and checked all grants and privileges.

If there were an error message, I would have something to go on, but I have tried several times and checked everything I can think of.

I also tried the command-line migration approach... same thing. Everything works fine... no errors... but only the table creation and control file scripts are generated.

The source schema is very simple, and there are only tables to migrate... no procedures or anything else.

Can anyone suggest anything?

Thank you very much

Mike

Hi Mike,

Just so I'm clear:

You are using SQL Developer 3.0?

You walked through the migration wizard and chose the offline data move mode.

The DDL generation scripts are created, as are the data move scripts.

But no data (DAT) file is created, and no data has been loaded into the Oracle target tables.

With offline data move, SQL Developer generates two sets of scripts (saved in your project directory, under the DataMove directory):

(a) a set of scripts to unload data from MySQL to DAT files.

(b) a set of scripts to load data from the DAT files into the Oracle target tables.

These scripts must be run by hand, specifying the connection details for the MySQL source database and the Oracle target database.

You wrote: "no offline data file generated. Control files and all other scripts are generated... just no data file."

"... but only the table creation and control file scripts are generated."

Do you mean:

(1) that the DAT files are not generated automatically? That is expected, since you need to run the scripts yourself.

(2) that after you run the scripts manually the DAT files are not present, or that the DAT files are present but the data does not load into the Oracle tables?

(3) that the offline data move scripts do not get generated?

Kind regards,

Dermot

SQL Developer team

-

Extracting volume changes to generate automation data

I have two tracks. One is a punchy drum track, and the other is all synth strings.

Can I extract the volume level changes from the punchy track to generate automation data?

I would then invert the automation data and apply it to the second track.

The effect I'm trying to achieve: with each drum hit, for example, the synth volume ducks down.

Is there another way to do this?

Sounds like a great application for a compressor.

Insert a compressor on the synth channel and link its side-chain input to your drum channel. Adjust the compressor parameters (threshold, attack, release, ratio) to taste.
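What a side-chained compressor does here can be sketched in Python: an envelope follower tracks the drum level, and whenever it rises above the threshold the synth gain is reduced according to the ratio. This is an illustration of the principle on plain sample lists, not any DAW's implementation or automation format; the function name and default parameter values are assumptions.

```python
import math
from typing import List, Sequence


def sidechain_duck(synth: Sequence[float], drums: Sequence[float], sample_rate: float,
                   threshold_db: float = -20.0, ratio: float = 4.0,
                   attack_ms: float = 5.0, release_ms: float = 150.0) -> List[float]:
    """Reduce the synth's gain whenever the drum envelope exceeds the threshold."""
    # One-pole smoothing coefficients for the envelope follower.
    attack = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    release = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    envelope = 0.0
    out = []
    for s, d in zip(synth, drums):
        level = abs(d)
        coeff = attack if level > envelope else release
        envelope = coeff * envelope + (1.0 - coeff) * level
        level_db = 20.0 * math.log10(max(envelope, 1e-9))
        over_db = level_db - threshold_db
        # Above the threshold, output rises only 1/ratio dB per input dB.
        gain_db = -over_db * (1.0 - 1.0 / ratio) if over_db > 0 else 0.0
        out.append(s * 10.0 ** (gain_db / 20.0))
    return out
```

Attack sets how quickly the synth ducks on each hit, and release sets how quickly it swells back, which is why those are the parameters to tune by ear.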

-

Numbers: automatically pull data from one sheet into another sheet

In Numbers, how can you (or is it possible to) automatically populate one sheet with data that is entered manually in another sheet? For example, I want to track customer information in sheets 2-10, and I want specific data from these sheets to automatically fill in sheet 1 so I can create an overview of specific data for all of my clients, as a dashboard of sorts.

The devil is in the details here. Can you give more details?

In general, I would say it is much easier to enter all your data in one place and then build "views" or "reports" that extract it for each customer, than to enter the data for each client separately and then consolidate it.

But both ways are possible.

SG
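The "one data table plus views" layout suggested above can be sketched in Python to show the data model; in Numbers itself, functions such as SUMIF or LOOKUP would play the role of these helpers. The field names "client" and "amount" and the helper names are hypothetical.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def client_view(rows: List[Row], client: str) -> List[Row]:
    """Per-client "report": filter the single data table by client name."""
    return [r for r in rows if r["client"] == client]


def summary(rows: List[Row], field: str) -> Dict[str, float]:
    """Dashboard: total of one numeric field per client."""
    totals: Dict[str, float] = {}
    for r in rows:
        totals[r["client"]] = totals.get(r["client"], 0.0) + float(r[field])
    return totals
```

Because every row carries its client name, adding a new client needs no new sheet, and the dashboard picks it up automatically.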

-

Script mode for any C++ function

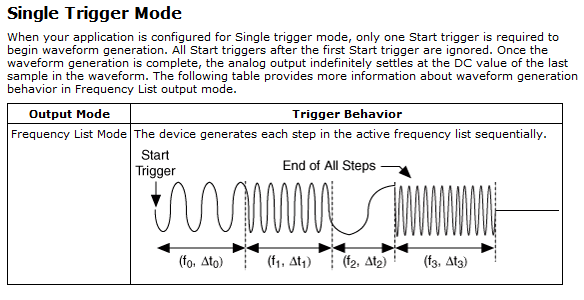

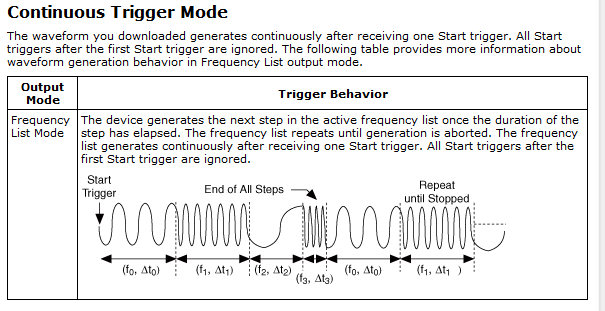

Hi all

My goal is to use the PXI-5406 to implement frequency sweep functionality. Right now, we only use the frequency list creation functions. There are four trigger modes for the frequency list: single, continuous, stepped, and burst. We use stepped mode. It is painful to use this mode, since each frequency needs a rising edge, so if I have several hundred frequencies I have to generate that many rising edges. This uses up a lot of buffer on another analog card.

My question is: could I use script mode for the frequency list? Or is there a smarter way to achieve this? The signal generators help file says there are some C programs for script mode, but I can't find any examples on my computer. If you have any, could you send them to me?

Thank you very much.

Yami

Yami,

The 5406 does not have the ability to run script mode. Its only output modes are Standard Function and Frequency List. However, I believe you can do what you want with Frequency List mode. Assuming you do not want to trigger, you can put the device in Single or Continuous mode. Single will play your selected frequency sweep once and then stop when it completes.

Continuous is similar, but it keeps repeating the sweep until you stop it.

All of the above details are in the NI Signal Generators Help. I looked under Devices > 5406 > Triggering > Trigger Modes.

With Single or Continuous, you can specify the duration of each waveform step, which could be a good starting point. As for C++ examples, I don't have any, but if you look in the Start Menu under National Instruments > NI-FGEN > Examples > NI-FGEN C Examples, you can find a "Sweep Generator" folder with a C example you can model your program after. I hope this helps!
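A frequency list for Single or Continuous mode is essentially a sequence of (frequency, duration) steps. This Python sketch only computes such a list for a linear or logarithmic stepped sweep; it does not call NI-FGEN, and the function name and parameters are hypothetical. Passing the resulting frequencies and durations to the driver is left to the NI-FGEN C example mentioned above.

```python
from typing import List, Tuple


def frequency_list(start_hz: float, stop_hz: float, steps: int,
                   dwell_s: float, log: bool = False) -> List[Tuple[float, float]]:
    """(frequency, duration) pairs for a stepped sweep from start_hz to stop_hz."""
    if steps < 2:
        raise ValueError("need at least two steps")
    out = []
    for i in range(steps):
        t = i / (steps - 1)  # 0.0 at the first step, 1.0 at the last
        if log:
            f = start_hz * (stop_hz / start_hz) ** t  # equal ratios between steps
        else:
            f = start_hz + (stop_hz - start_hz) * t   # equal spacing in Hz
        out.append((f, dwell_s))
    return out
```

Since each step carries its own duration, several hundred frequencies can play back to back without a rising edge per step.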


-

I am trying to acquire data using a PCIe-6361 with LabWindows/CVI 8.1.0 (with DAQmx) on a Windows XP computer. It is analog data, synchronized with a digital trigger signal, and I have the data entering ai0, with the trigger going into PFI0. Right now, the t

-

I don't know anything about Notepad, but when I open a photo from my photo folder, I get a note full of gibberish, with "Notepad" shown at the top. What did I do to cause this? Can someone please help me? Thank you, Norma Jean Thompson

-

RunDLL: error loading C:\windows\ekagosixaxet.dll (not found)

Whenever I log in as a guest on Windows XP (Home Edition), I get this RUNDLL error message: c:\windows\ekagosixaxet.dll could not be found.

-

Windows Media Center Internet TV

When I try to access Internet TV via Media Center, I get a box saying that it is not available in Canada. I live in New York State. How can I fix it?

-

BlackBerry Smartphones: how BIS works with MDaemon

I have installed MDaemon 11.03 and want to set up BIS on the server. I'm new to BIS; can anybody explain to me how this works?