MGMT_CURRENT_AVAILABILITY table in the EM repository

Hi, in the MGMT_CURRENT_AVAILABILITY table in the EM repository there is a CURRENT_STATUS column, which stores numeric values. Is it possible to know the meaning of these status codes?

For example, what does status 1 mean? Is there a table where I can look up the values corresponding to these status codes?

Hello

Here are the meanings of the CURRENT_STATUS values. However, consider using the MGMT$AVAILABILITY_CURRENT view, as Courtney suggested.

0: "Target Down"
1: "Target Up"
2: "Metric Error"
3: "Agent Down"
4: "Unreachable"
5: "Blackout"
6: "Pending/Unknown"
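If you do need to decode the raw CURRENT_STATUS column instead of reading the view, a small lookup table is enough. This is a minimal Python sketch; the mapping simply mirrors the list above, and the names CURRENT_STATUS_MEANING and describe_status are hypothetical.

```python
# Hypothetical helper: maps MGMT_CURRENT_AVAILABILITY.CURRENT_STATUS codes
# to the meanings listed in the reply above.
CURRENT_STATUS_MEANING = {
    0: "Target Down",
    1: "Target Up",
    2: "Metric Error",
    3: "Agent Down",
    4: "Unreachable",
    5: "Blackout",
    6: "Pending/Unknown",
}


def describe_status(code: int) -> str:
    """Return the label for a status code, or a placeholder for codes not in the list."""
    return CURRENT_STATUS_MEANING.get(code, f"Unlisted status {code}")


print(describe_status(1))  # Target Up
```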

Kind regards

-Loc

Tags: Enterprise Manager

Similar Questions

-

Getting a 500 error when trying to create a table using the REST API

Hello

I am trying to create a table using the REST API for Business Intelligence Cloud, but for a while now I keep getting a 500 Internal Server Error.

Here are the details I use to create the table.

and the JSON schema I use is:

[
{"nullable": [false], "defaultValue": [null], "dataType": ["VARCHAR"], "precision": [0], "length": [18], "columnName": ["ROWID"]},
{"nullable": [true], "defaultValue": [null], "dataType": ["VARCHAR"], "precision": [0], "length": [18], "columnName": ["RELATIONID"]},
{"nullable": [true], "defaultValue": [null], "dataType": ["VARCHAR"], "precision": [0], "length": [18], "columnName": ["ID"]},
{"nullable": [true], "defaultValue": [null], "dataType": ["TIMESTAMP"], "precision": [0], "length": [0], "columnName": ["RESPONDEDDATE"]},
{"nullable": [true], "defaultValue": [null], "dataType": ["VARCHAR"], "precision": [0], "length": [255], "columnName": ["RESPONSE"]},
{"nullable": [false], "defaultValue": [null], "dataType": ["TIMESTAMP"], "precision": [0], "length": [0], "columnName": ["SYS_CREATEDDATE"]},
{"nullable": [false], "defaultValue": [null], "dataType": ["VARCHAR"], "precision": [0], "length": [18], "columnName": ["SYS_CREATEDBYID"]},
{"nullable": [false], "defaultValue": [null], "dataType": ["TIMESTAMP"], "precision": [0], "length": [0], "columnName": ["SYS_LASTMODIFIEDDATE"]},
{"nullable": [false], "defaultValue": [null], "dataType": ["VARCHAR"], "precision": [0], "length": [18], "columnName": ["SYS_LASTMODIFIEDBYID"]},
{"nullable": [false], "defaultValue": [null], "dataType": ["TIMESTAMP"], "precision": [0], "length": [0], "columnName": ["SYS_SYSTEMMODSTAMP"]},
{"nullable": [false], "defaultValue": [null], "dataType": ["VARCHAR"], "precision": [0], "length": [10], "columnName": ["SYS_ISDELETED"]},
{"nullable": [true], "defaultValue": [null], "dataType": ["VARCHAR"], "precision": [0], "length": [50], "columnName": ["TYPE"]}
]

I tried this using Postman and from code, but I always get the following error response:

Error 500 - Internal server error

From RFC 2068 Hypertext Transfer Protocol -- HTTP/1.1:

10.5.1 500 Internal Server Error

The server encountered an unexpected condition which prevented it from fulfilling the request.

I am able to GET existing table schemas and DELETE tables, but I am not able to perform PUT and POST operations. Can someone help me identify the problem, or tell me whether there is a fault in my approach?

Thank you

Romaric

I managed to create a table successfully using the API. The only difference I see between your JSON and mine is that you have square brackets around your JSON values, whereas I don't. Here is my cURL request and an extract of my JSON file (named createtable.txt, in the same directory as my cURL executable):

curl -u [email protected]:password -X PUT -H "X-ID-TENANT-NAME: tenantname" -H "Content-Type: application/json" --data-binary @createtable.txt https://businessintell-tenantname.analytics.us2.oraclecloud.com/dataload/v1/tables/TABLE_TO_CREATE -k

[
  {
    "columnName": "ID",
    "dataType": "DECIMAL",
    "length": 20,
    "precision": 0,
    "nullable": false
  },
  {
    "columnName": "NAME",
    "dataType": "VARCHAR",
    "length": 20,
    "precision": 0,
    "nullable": true
  },
  {
    "columnName": "STATUS",
    "dataType": "VARCHAR",
    "length": 20,
    "precision": 0,
    "nullable": true
  },
  {
    "columnName": "CREATED_DATE",
    "dataType": "TIMESTAMP",
    "length": 20,
    "precision": 0,
    "nullable": true
  },
  {
    "columnName": "UPDATED_DATE",
    "dataType": "TIMESTAMP",
    "length": 20,
    "precision": 0,
    "nullable": true
  }
]
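If you build the payload from code, generating it from plain values avoids the stray brackets altogether. A minimal sketch using only the Python standard library, assuming the key names columnName, dataType, length, precision and nullable and a PUT to /dataload/v1/tables/{table}; the helper names are hypothetical, credentials are left out, and the request is only prepared, not sent.

```python
import json
import urllib.request

# (column name, data type, length, nullable), taken from the createtable.txt extract above.
COLUMNS = [
    ("ID", "DECIMAL", 20, False),
    ("NAME", "VARCHAR", 20, True),
    ("STATUS", "VARCHAR", 20, True),
    ("CREATED_DATE", "TIMESTAMP", 20, True),
    ("UPDATED_DATE", "TIMESTAMP", 20, True),
]


def build_table_payload(columns):
    """One JSON object per column; every value is a plain scalar, never wrapped in [ ]."""
    return [
        {"columnName": name, "dataType": dtype, "length": length,
         "precision": 0, "nullable": nullable}
        for name, dtype, length, nullable in columns
    ]


def build_create_request(base_url, tenant, table, columns):
    """Prepare (but do not send) a PUT equivalent to the cURL call above.

    Authentication (cURL's -u option) is deliberately left out of this sketch.
    """
    body = json.dumps(build_table_payload(columns)).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/dataload/v1/tables/{table}",
        data=body,
        headers={"X-ID-TENANT-NAME": tenant, "Content-Type": "application/json"},
        method="PUT",
    )


req = build_create_request(
    "https://businessintell-tenantname.analytics.us2.oraclecloud.com",
    "tenantname", "TABLE_TO_CREATE", COLUMNS,
)
print(req.get_method(), req.full_url)
```

json.dumps turns True/False/None into true/false/null, so the body matches the hand-written file rather than the bracketed version.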

-

Remove unwanted rows from a 2D array without knowing the indexes of the rows to remove

Hello!

I'm new to LabVIEW and I hope someone can help me with my problem.

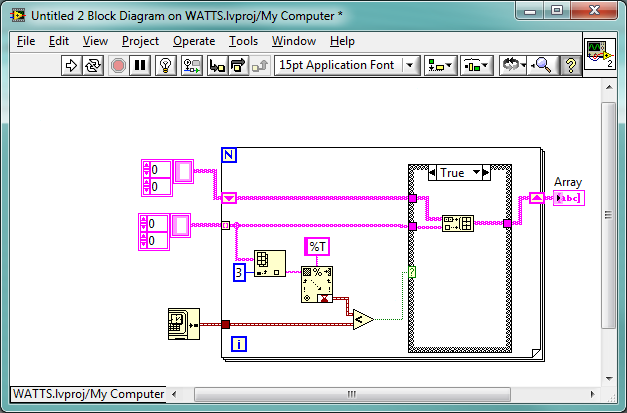

First I import an Excel worksheet into a 2D string array. Then, for each row in the array, I want to compare the data in a specific column (a date/timestamp) with the current date. The rows containing a date earlier than today's date I want to put into a new array; the rest I don't need.

I managed to create a timestamp for today that matches the Excel timestamp. But for the comparison and building the new array, I have no idea how to do it. I tried several ways with no luck at all (probably because I don't understand all the VIs I'm using).

Happy for any help I can get!

You are on the right track! (although your attempt lists only the datetime values)

What you need to add or change is that the resulting array must be connected to the loop through a shift register (initialized with an empty array), and in the case structure you either use Build Array to append the current row or pass the unmodified array straight through.

You'll probably want to pass the whole row through, not only the datetime value.

You will probably need some tweaking to compare only the date part, but the approach is the same.

/Y
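LabVIEW code is graphical, so the following Python sketch is only a text analogue of the loop described in the reply: the list kept plays the role of the shift register initialized with an empty array, appending a row corresponds to Build Array, and skipping a row corresponds to passing the array through unchanged. It assumes the dates are strings in YYYY-MM-DD format (a hypothetical format; adjust fmt to what the Excel import actually produces), and rows_before is a hypothetical name.

```python
from datetime import date, datetime


def rows_before(table, date_col, today, fmt="%Y-%m-%d"):
    """Keep whole rows whose date column is earlier than `today`."""
    kept = []  # the "shift register", initialized empty
    for row in table:
        row_date = datetime.strptime(row[date_col], fmt).date()  # compare the date part only
        if row_date < today:
            kept.append(row)  # "Build Array": append the whole row
        # otherwise `kept` passes through unchanged
    return kept


sheet = [
    ["A", "2024-01-05"],
    ["B", "2024-03-01"],
    ["C", "2023-12-31"],
]
print(rows_before(sheet, 1, date(2024, 2, 1)))  # [['A', '2024-01-05'], ['C', '2023-12-31']]
```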

-

Shared iterator for a table and a form causes a problem when clearing records

Hello

I use JDev 12.1.2.

I have a detail view object. I display some of its fields in a table and the rest in a form, using the same iterator. Basically, I dragged and dropped the same view object from the Data Controls panel and created a table and a form layout, each with the fields I wanted. I implemented a clear feature for the table rows with a "Clear" button: on click, I delete the row from the iterator in a managed bean. When I do this, the selected record is deleted and the next record is displayed. But the fields entered in the form layout also get cleared. (The form should also show the currently selected record; it does, but the input fields in the form are cleared because of the clear I did on the previous row.) Can anyone help get this resolved?

Should I not use the same iterator for the table and the form here? The bindings are row-level (row.bindings...) for the table, but for the form they are (bindings...). Is the delete operation on the current row causing the iterator to clear all the binding fields instead of just the row? Please shed some light.

Thank you

UMA

How are you removing the row on the iterator?

Use a resetActionListener on your Clear button and let us know what happens.

Ashish

-

Data in tables not migrated

Hi all,

I'm having a problem migrating table data from MySQL to Oracle.

I'm using SQL Developer 4.0 for the migration.

Out of 720 tables, data was migrated into only 450; the rest are empty.

I found the following error in my log:

Unable to move data: Streaming result set com.mysql.jdbc.RowDataDynamic@109f37d is still active.

No statements may be issued when any streaming result sets are open and in use on a given connection.

Ensure that you have called .close() on any active streaming result sets before attempting more queries.

I'm running on Windows 7 32-bit, with Oracle 10g.

Could someone help me?


-

RESTful Service search parameters

Hello

I use APEX 4.2.2 with APEX Listener 2.0.3.

I want to expose data in the EMP table as a RESTful service with parameters.

The source is:

Source type: SQL query. Format: JSON.

select * from emp where (:job IS NULL OR job = :job) and (:ename is null or ename = :ename)

URI Template: employeesfeed/{job}/{ename}

If I use this URL, it works very well:

http://oraclesrv/real_estate/property/HR/employeesfeed/Manager/Blake

and this gives:

{"next":{"$ref":"http://oraclesrv/real_estate/property/hr/employeesfeed/MANAGER/BLAKE?page=1"},"items":[{"empno":7698,"ename":"BLAKE","job":"MANAGER","mgr":7839,"hiredate":"1981-04-30T20:00:00Z","sal":2850,"deptno":30}]}

But, as you may notice from the logic of the source, the end user should be able to retrieve all MANAGERs using this link:

http://oraclesrv/real_estate/property/HR/employeesfeed/Manager/null

But, this gives:

{"items": []}

So, how can I retrieve all managers (using this source)?

Kind regards

The four letters (n, u, l and l) at the end of your URL form a string of length 4.

Basically, you are making this comparison:

'null' IS NULL (which is false)

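If you want to keep this syntax, add a test such as

or upper(:job) = 'NULL'

to each condition. In the URL above the 'null' is in the ename position, so the one that matters there is or upper(:ename) = 'NULL'.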
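The extra string test can be tried out quickly with Python's built-in sqlite3, which accepts the same :name bind syntax. This is a sketch only: SQLite stands in for Oracle, the EMP rows are hypothetical, and employees_feed is a hypothetical name for the handler that receives the two URI template values.

```python
import sqlite3

# Same predicate shape as the RESTful source, plus the 'null'-string test on each parameter.
QUERY = """
SELECT ename, job
FROM emp
WHERE (:job IS NULL OR upper(:job) = 'NULL' OR job = :job)
  AND (:ename IS NULL OR upper(:ename) = 'NULL' OR ename = :ename)
ORDER BY ename
"""


def employees_feed(conn, job, ename):
    """Run the query with the two URI template values bound as parameters."""
    return conn.execute(QUERY, {"job": job, "ename": ename}).fetchall()


def demo_connection():
    """In-memory EMP table with a few hypothetical rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE emp (ename TEXT, job TEXT)")
    conn.executemany(
        "INSERT INTO emp VALUES (?, ?)",
        [("BLAKE", "MANAGER"), ("CLARK", "MANAGER"), ("JONES", "MANAGER"), ("KING", "PRESIDENT")],
    )
    return conn


conn = demo_connection()
print(employees_feed(conn, "MANAGER", "BLAKE"))  # [('BLAKE', 'MANAGER')]
print(employees_feed(conn, "MANAGER", "null"))   # all three managers
```

A real SQL NULL (None here) still matches through the IS NULL branch, so both ways of saying "no filter" work.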

MK

-

Very long processing time on a large table

Hello everyone

I have a problem with a process that calculates 6 columns in a table with 260 million rows. Initially, the computing time was about 1.2 minutes per row, which would obviously take more than a lifetime to finish (about 500 years).

To improve this process, my first step was naturally to partition the table. This created 64 partitions of about 5 million rows each. My second step was to use BULK COLLECT with a LIMIT of 500,000 rows per block; this way I have my data in collections, and the next step is to calculate my columns. Unfortunately, although my first 2 columns take just 2 hours to complete for all 260 million rows (note: I need to calculate all the rows in the table), the rest of the columns take 90% of the total time or even more. Finally, I do a bulk update with FORALL using the data calculated in my collections.

After all this, the calculation time was reduced from 1.2 minutes per row to 6 or 7 seconds per row, which is great, but it only reduces 500 years to 49 years.

With this setup, my next logical step is to parallelize my query, but even with a degree of 8, my best case is about 6 years.

Now, for four of the six columns, the problem is that I need to calculate values that require AVG and SUM over the six months before the record's date.

So, here's my real question: how do I make this work? I surely can't wait years; the company might be broke before this finishes.

What is the professional way to do this? I think there are businesses with tables with many more rows, even billions, as I've seen in other forums.

I need this, somehow, to take no more than one or two months... even less would be better. Help, please.

My DB is 11g, running on 64-bit, with 32 GB of RAM and a quad-core CPU.

Please explain what this query does:

SELECT nvl(avg(facturable), 0), max(flactual), max(flanterior), max(lactual), max(lanterior)
INTO v_facturable_ant, v_flactual_ant, v_flanterior_ant, v_lactual_ant, v_lanterior_ant
FROM mv_data_sec
WHERE v_periodo(idx) <> periodo
AND cod_empresa = v_cod_empresa(idx)
AND id_cliente = v_id_cliente(idx)
AND id_medidor = v_id_medidor_consulta
AND flactual = (SELECT max(flactual)
                FROM mv_data_sec
                WHERE cod_empresa = v_cod_empresa(idx)
                AND id_cliente = id_cliente
                AND id_medidor = v_id_medidor_consulta
                AND v_periodo(idx) <> periodo
                AND flactual < v_flactual(idx))

1. v_flactual(idx): only the value of one entry in the PL/SQL table

2. v_periodo(idx): only the key of one record in the PL/SQL table
3. the aggregate is over all partitions except v_periodo(idx)
4. find the max(flactual) value that is less than value #1 above
5. only where the aggregated data's flactual value = value #4 above and cod_empresa, id_cliente, id_medidor match a record in the PL/SQL table

This is an aggregation for each record in each partition of the table "where id_tipo_documento in ('B', 'F')". The aggregation will include all the records with the largest flactual value that is less than the PL/SQL record's value, regardless of the value of id_tipo_documento. So, if there are records with flactual values of 5, 4, 3, 2 and 1:

1. a record with value 5 will include records that have a value of 4
2. a record with value 4 will include records that have a value of 3
3. a record with value 3 will include records that have a value of 2
4. a record with value 2 will include records that have a value of 1

With the approach you use, if there are 1,000 records with the same flactual, cod_empresa, id_cliente and id_medidor values, your code will perform exactly the same aggregation on the other values (lactual, lanterior, flanterior, facturable) 1,000 times. This suggests that you do what I suggested in a previous post as #2:

>

2. Create a separate query to roll up the data in the table to get the max and avg values you want to use for the calculation and the update. This query can roll up by 'cod_empresa', 'id_cliente' and 'id_medidor'. You can roll it up for each period or for each 'anti-period' (with period1, period2 and period3, the anti-period for period2 includes all the other periods, i.e. periods 1 and 3).

>

Something along the lines of

select periodo, cod_empresa, id_cliente, id_medidor, count(facturable), sum(facturable), max(flactual), max(flanterior), max(lactual), max(lanterior)
from mv_data_sec
group by periodo, cod_empresa, id_cliente, id_medidor

and record the results in a new work table that is indexed appropriately. That does the aggregations once, in advance.

Use this work table in your query above. You can now change the query or cursor to work on only one partition at a time by adding a WHERE clause on the partition key, passed as a parameter to your procedure.

You may even be able to change the CURSOR to join the main table with the new work table and iterate over that, without needing a second query at all.
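A minimal sketch of suggestion #2, using Python's built-in sqlite3 so it runs anywhere: roll the data up once into a work table, then answer each row's anti-period question from the rollup instead of rescanning mv_data_sec. The table name work_rollup, the index, the helper names and the sample rows are hypothetical; only facturable and flactual are carried, and the flactual < v_flactual(idx) condition is not modeled (the other max() columns and that filter would be added the same way). Storing COUNT and SUM rather than AVG is what makes the anti-period possible: the average over all other periods is (sum of their sums) / (sum of their counts), which cannot be recovered from per-period averages.

```python
import sqlite3


def build_work_table(conn):
    """Roll mv_data_sec up once per (periodo, cod_empresa, id_cliente, id_medidor)."""
    conn.execute("""
        CREATE TABLE work_rollup AS
        SELECT periodo, cod_empresa, id_cliente, id_medidor,
               COUNT(facturable) AS cnt,
               SUM(facturable)   AS total,
               MAX(flactual)     AS max_flactual
        FROM mv_data_sec
        GROUP BY periodo, cod_empresa, id_cliente, id_medidor
    """)
    conn.execute("CREATE INDEX work_rollup_ix "
                 "ON work_rollup (cod_empresa, id_cliente, id_medidor, periodo)")


def avg_other_periods(conn, periodo, cod_empresa, id_cliente, id_medidor):
    """AVG(facturable) over every period except `periodo`, rebuilt from stored sums
    and counts; returns 0.0 when there is nothing to average, like NVL(AVG(...), 0)."""
    total, cnt = conn.execute("""
        SELECT SUM(total), SUM(cnt) FROM work_rollup
        WHERE cod_empresa = ? AND id_cliente = ? AND id_medidor = ? AND periodo <> ?
    """, (cod_empresa, id_cliente, id_medidor, periodo)).fetchone()
    return total / cnt if cnt else 0.0


def demo_connection():
    """Small in-memory mv_data_sec with hypothetical rows, rolled up once."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE mv_data_sec (periodo INTEGER, cod_empresa INTEGER, "
                 "id_cliente INTEGER, id_medidor INTEGER, facturable REAL, flactual INTEGER)")
    conn.executemany("INSERT INTO mv_data_sec VALUES (?, ?, ?, ?, ?, ?)", [
        (1, 1, 10, 100, 10, 1), (1, 1, 10, 100, 20, 2),
        (2, 1, 10, 100, 30, 3),
        (3, 1, 10, 100, 40, 4), (3, 1, 10, 100, 50, 5),
        (1, 2, 20, 200, 99, 1),
    ])
    build_work_table(conn)
    return conn


conn = demo_connection()
print(avg_other_periods(conn, 2, 1, 10, 100))  # 30.0, i.e. (10 + 20 + 40 + 50) / 4
```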

One thing I don't know: whether you can eliminate the inline aggregation entirely, because you probably aggregate values several times depending on how the data is grouped.

I would still like to see a small set of sample data and the results of your calculations on it.

-

Find the largest tables (disk space) in a database

Our problem: customers who have problems that we cannot recreate are asked to export their data as a DMP, which we then download and import locally to investigate where the problem is.

Some customer data sets are too large to download (3-4 GB; I know that this shouldn't be a problem, but some customers have very slow connections and it would take forever).

To work around this, they put the data on a DVD and post it (which takes a few days).

The thing is, these data sets are probably around 400 MB, with one table making up the rest with BLOBs (and this table is probably not the cause of the problem). So we could exclude it from the export.

What we want to achieve:

There are potentially several such tables that could be large, so we would like to produce a script to determine which tables take up the most disk space. Then (if the customer's problem has nothing to do with these tables) we will leave them out of the DMP. That means we can get their data faster and solve their problem sooner.

Any help on where to start will be much appreciated. We use DB 10g.

You can query DBA_SEGMENTS or USER_SEGMENTS, ordered by bytes descending.

http://download.Oracle.com/docs/CD/B19306_01/server.102/b14237/statviews_4097.htm#REFRN23243

Something like:

select segment_name, segment_type, bytes
from (select segment_name, segment_type, bytes
      from dba_segments
      where owner = user
      order by bytes desc)
where rownum <= 10

for the 10 largest segments.

-

Hi all

I'm working on a project that requires one program to talk to another program (on the same computer) using network variables.

Now say I have a list or table of names that changes in one program; what I want to do is take parts of that list and transfer them to the other program.

The list to transfer has at least 40 unique names of different lengths.

I have the data tables ready; I just need to understand the transfer part.

I tried the 3D.sim, but there seems to be a problem when I work with strings, especially when the strings are of different lengths.

Also, when using CNVCreateArrayDataValue, I get errors about the array being too small for the array size?

String arrays are usually 2D (row and length); does that factor in?

Assume the network variable is declared.

Example code, just to get the idea:

int i = 0, numItems = 10;
CNVData data = 0, NVList[120] = {0};
size_t dim[] = {0};
size_t dim2 = 0;

for (i = 0; i < numItems; i++)
{
    char listName[20] = "";
    GetTableCellVal(Panel, PANEL_LIST, MakePoint(2, i), listName);
    dim[0] = strlen(listName);   // I tried sizeof, but that definitely made dim too big
    CNVCreateArrayDataValue(&NVList[i], CNVString, listName, 1, dim);   // I get array-size errors here
    dim2++;
}

CNVCreateStructDataValue(&data, NVList, dim2);   // here is another problem with dimensions
CNVPutDataInBuffer(handle, data, 2000);

I also tried:

for (i = 0; i < numItems; i++)
{
    char listName[20] = "";
    GetTableCellVal(Panel, PANEL_LIST, MakePoint(2, i), listName);
    dim[0] = strlen(listName);
    CNVCreateScalarDataValue(&NVList[i], CNVString, listName);
    dim2++;
}

CNVCreateStructDataValue(&data, NVList, dim2);   // transfers only one string value
CNVPutDataInBuffer(handle, data, 2000);

I think I'm close, but I just can't get the right sequence.

Thanks

lriddick,

You can write an array of strings easily, but it must be an array of strings (char pointers). It cannot be a two-dimensional character array, which is not the same thing. For example, the following code should work (I replaced your table control with an array called "array", but the rest should be the same). Note that I create only a single CNVData, not an array of CNVData:

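#include <stdlib.h>
#include <string.h>
#include <cvinetv.h>

char *array[] = {"one", "two", "three", "four", "five", "six", "seven", "eight", "nine", "ten"};
char *listName[10];
CNVData NVList = 0;
size_t dim[] = {10};
int i;

/* Copy each string into its own buffer: an array of char pointers, not a 2D char array */
for (i = 0; i < 10; i++)
{
    listName[i] = malloc(strlen(array[i]) + 1);
    strcpy(listName[i], array[i]);
}

/* One CNVData holding a 1-D array of 10 strings */
CNVCreateArrayDataValue(&NVList, CNVString, listName, 1, dim);

/* The data value holds its own copy, so the buffers can be freed before writing */
for (i = 0; i < 10; i++)
    free(listName[i]);

CNVPutDataInBuffer(handle, NVList, CNVWaitForever);
CNVDisposeData(NVList);   /* release the data value once it has been written */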

-

Please clarify my concept

I have database-level sequences created for my tables.

In JDev, when I select DBSequence as the type of an attribute, which DBSequence is assigned to this attribute? There are several sequences in the DB.

JDev version: 11.1.2.0.0

Hello

Check this:

A common case for refreshing an attribute after insert occurs when a primary key attribute value is assigned by a BEFORE INSERT FOR EACH ROW trigger. Often the trigger assigns the primary key from a database sequence using PL/SQL logic. Example 4-9 shows an example of this.

Example 4-9 PL/SQL Code Assigning a Primary Key from a Database Sequence

CREATE OR REPLACE TRIGGER ASSIGN_SVR_ID
BEFORE INSERT ON SERVICE_REQUESTS FOR EACH ROW
BEGIN
  IF :NEW.SVR_ID IS NULL OR :NEW.SVR_ID < 0 THEN
    SELECT SERVICE_REQUESTS_SEQ.NEXTVAL
      INTO :NEW.SVR_ID
      FROM DUAL;
  END IF;
END;

In the Edit Attribute dialog, you can set the value of the Type field to the built-in data type named DBSequence, and the primary key will be assigned automatically by the database sequence. Setting this data type automatically selects the Refresh After Insert checkbox.

Note:

The sequence name shown on the Sequence tab is only used at design time, when you use the Create Database Tables feature described in Section 4.2.6, "How to Create Database Tables from Entity Objects." The sequence indicated here will be created along with the table on which the entity object is based.

When you create a new entity row whose primary key is a DBSequence, a unique negative number is assigned as its temporary value. This value acts as the primary key for the duration of the transaction in which it is created. If you are creating a set of interrelated entities in the same transaction, you can assign this temporary value as a foreign key value on other new, related entity rows. At transaction commit time, the entity object issues its INSERT operation using the RETURNING INTO clause to retrieve the actual trigger-assigned primary key value from the database. In a composition relationship, any related new entities that previously used the temporary negative value as a foreign key will get that value updated to reflect the actual new primary key of the master. You will typically also set the Updatable property of a DBSequence-valued primary key to Never. The entity object assigns the temporary ID, and then refreshes it with the actual ID value after the INSERT operation. The end user never needs to update this value.

Also check this link:

http://docs.Oracle.com/CD/E17904_01/Web.1111/b31974/bcadveo.htm#CEGBJJIA

The description above is taken from the Oracle documentation.
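To make the temporary-key mechanism in the quoted documentation concrete, here is a toy Python model. It is an illustration under stated assumptions, not how ADF Business Components is implemented: a counter stands in for SERVICE_REQUESTS_SEQ, and the class and method names are hypothetical. It also reflects the answer to the original question: at run time JDev does not choose a sequence; the real key is whatever the database trigger assigns.

```python
import itertools


class ToyTransaction:
    """Toy model of DBSequence-style keys; not the ADF Business Components API."""

    def __init__(self, sequence_start):
        self._temp_ids = itertools.count(-1, -1)          # -1, -2, -3, ... temporary keys
        self._sequence = itertools.count(sequence_start)  # stands in for SERVICE_REQUESTS_SEQ.NEXTVAL
        self.masters = []  # rows with an "id" primary key
        self.details = []  # rows with a "master_id" foreign key

    def new_master(self):
        row = {"id": next(self._temp_ids)}
        self.masters.append(row)
        return row

    def new_detail(self, master):
        row = {"master_id": master["id"]}  # the temporary key is used as the foreign key
        self.details.append(row)
        return row

    def commit(self):
        real_ids = {}
        for row in self.masters:
            if row["id"] < 0:  # like the trigger: only replace negative (temporary) keys
                real = next(self._sequence)  # the value RETURNING INTO would bring back
                real_ids[row["id"]] = real
                row["id"] = real
        for row in self.details:  # composition: children pick up the master's real key
            row["master_id"] = real_ids.get(row["master_id"], row["master_id"])


tx = ToyTransaction(100)
m1, m2 = tx.new_master(), tx.new_master()
d = tx.new_detail(m2)
tx.commit()
print(m1, m2, d)  # {'id': 100} {'id': 101} {'master_id': 101}
```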

Regards

Vincent

-

FRM-40353 - query cancelled on Execute_Query

Hello

I have simple code: go_block('blk'); execute_query;

After running EXECUTE_QUERY, I get the error message "FRM-40353: Query cancelled." Why is that? When I run the select query on the DB table on which the block is based, I can see the records.

Thank you

This is the Forms description of the Interaction Mode property:

Specifies the interaction mode for the form module. Interaction mode dictates how a user can interact with a form during a query.

If Interaction Mode is set to Blocking, then end users cannot resize or otherwise interact with the form until the records for a query have been fetched from the database.

If set to Non-Blocking, then end users can interact with the form while records are being fetched.

Non-blocking interaction mode is useful if you expect the query to take a long time and you want the user to be able to interrupt or cancel it.

In this mode, the Forms runtime will display a dialog that allows the user to cancel the query.

You cannot set the interaction mode programmatically; however, you can obtain it programmatically using the GET_FORM_PROPERTY built-in.

If you want to keep Interaction Mode = Non-Blocking, then set Maximum Query Time = 0 (the default, meaning unlimited time) to avoid "FRM-40353: Query cancelled".

Regards

-

Hello

Does anyone know whether FDMEE (or previously FDM) is able to connect to and get data from DB400 on an AS400?

I did integrations with DB2 in FDM and had no problems.

The Open Interface table can be used as a source for FDMEE. What you need to implement is the process that loads data into this table; the rest will follow the standard FDMEE workflow.

To feed the table, you can either customize the ODI package (if you have ODI knowledge) or create a BefImport event script in Jython/VBScript that loads the data from your source DB into the Open Interface table.

I don't think you will run into character set issues that cannot be resolved.

Getting data from the DB table is more straightforward than creating flat files, but it depends on your needs, of course.

-

Hi Masters,

I have a master table that contains duplicate records. I want to insert one copy of each record into one table and the remaining duplicate records into another table. I want to write a PL/SQL block for this scenario. Please help me.

CREATE TABLE sample (name VARCHAR2(10), sal NUMBER(5));

INSERT INTO sample VALUES ('ABC', 100);
INSERT INTO sample VALUES ('BCA', 200);
INSERT INTO sample VALUES ('CAB', 300);
INSERT INTO sample VALUES ('ABC', 100);
INSERT INTO sample VALUES ('BCA', 200);
INSERT INTO sample VALUES ('CAB', 300);
INSERT INTO sample VALUES ('ABC', 100);
INSERT INTO sample VALUES ('BCA', 200);
INSERT INTO sample VALUES ('CAB', 300);

CREATE TABLE union_a (name VARCHAR2(10), sal NUMBER(5));
CREATE TABLE dup_b (name VARCHAR2(10), sal NUMBER(5));

Please find above the table script and insert commands. I'm using Oracle 11g (11.2.0.1). I want to insert a single copy of each record into the union_a table and the duplicates into the dup_b table.

Thanks in advance.

Regards

SA

Hello

This looks like a Top-N query.

The ROW_NUMBER analytic function lets you assign the numbers 1, 2, 3, ... to rows, with a separate sequence of numbers for each group.

Use a multi-table INSERT to put all rows numbered 1 into one table and all other rows into the other table, like this:

INSERT ALL
    WHEN r_num = 1
        THEN INTO union_a (name, sal) VALUES (name, sal)
    ELSE INTO dup_b (name, sal) VALUES (name, sal)
SELECT name, sal
     , ROW_NUMBER () OVER ( PARTITION BY name, sal
                            ORDER BY NULL    -- or whatever
                          ) AS r_num
FROM sample
;

You must use an ORDER BY clause with ROW_NUMBER, even if you don't care which row is assigned 1.
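The same split can be traced in a few lines of Python, which may help in seeing what ROW_NUMBER and the WHEN/ELSE branches do. This is a sketch of the logic only (split_duplicates is a hypothetical name); in Oracle the INSERT ALL above does it in one statement. Here "first" means first in list order, whereas ORDER BY NULL leaves Oracle free to number any row of a group as 1, which does not matter when the duplicates are identical.

```python
def split_duplicates(rows):
    """Send the first occurrence of each (name, sal) to one list and the rest to another.

    Mirrors ROW_NUMBER() OVER (PARTITION BY name, sal ...) combined with
    WHEN r_num = 1 THEN INTO union_a ... ELSE INTO dup_b.
    """
    r_num_so_far = {}
    union_a, dup_b = [], []
    for row in rows:
        r_num = r_num_so_far.get(row, 0) + 1  # row number within this (name, sal) group
        r_num_so_far[row] = r_num
        (union_a if r_num == 1 else dup_b).append(row)
    return union_a, dup_b


sample = [("ABC", 100), ("BCA", 200), ("CAB", 300)] * 3  # the nine rows from the question
firsts, dups = split_duplicates(sample)
print(firsts)     # [('ABC', 100), ('BCA', 200), ('CAB', 300)]
print(len(dups))  # 6
```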

-

Error ORA-00932 - expected - got CLOB

I am working on a query that, where needed, adds the comments that have been added to a service call. My first attempts were a failure. So I backed up and worked on the idea of putting the comments into one temporary table and the rest of the data into another temporary table (which gets data filtered in from outside our data systems), and combining them into the evaluation table. This table is analyzed to determine whether the call is sent by e-mail to the supervisors.

The comments are a CLOB collected with a variation of a function found here, and as long as it stays in the temporary table, everything is fine. When the query tries to move this CLOB into the corresponding CLOB column of the evaluation table, I get error ORA-00932 inconsistent datatypes: expected - got CLOB. (The destination column is a CLOB column as well.)

The hc_clob_temp.comments column is a CLOB, just like jc_hc_curent.comments, into which it goes. The ad_sec and eid columns are NUMBER(10,0) and the rest are VARCHAR2 of various sizes. The statement I use is:

INSERT INTO jc_hc_curent(ad_sec, eid, ad_ts, ag_id, tycod, sub_tycod, udts, xdts, estnum, edirpre, efeanme, efeatyp, edirsuf, eapt, ccity, unit_count, comments)
SELECT cu.ad_sec, cu.eid, cu.ad_ts, cu.ag_id, cu.tycod, cu.sub_tycod, cu.udts, cu.xdts, cu.estnum, cu.edirpre, cu.efeanme, cu.efeatyp, cu.edirsuf, cu.eapt, cu.ccity, COUNT(cu.unid), cl.comments
FROM hc_curent_temp cu
JOIN hc_clob_temp cl ON cu.eid = cl.eid
GROUP BY cu.ad_sec, cu.eid, cu.ad_ts, cu.ag_id, cu.tycod, cu.sub_tycod, cu.udts, cu.xdts, cu.estnum, cu.edirpre, cu.efeanme, cu.efeatyp, cu.edirsuf, cu.eapt, cu.ccity, cl.comments;

So what am I missing, and what am I doing wrong here...?

Please let me know if there is anything else you need to see the problem.

Thanks in advance,

Tony

>

So what am I missing, and what am I doing wrong here...?

>

To solve problems of this kind, I find it useful to remove everything superfluous and focus on getting the core to work. So what happens if you use a query that simply inserts the CLOB column? Does it work? Then add a few more columns, and then more, until you find what does not work.

If necessary, create a table that is a clone of jc_hc_curent but has no indexes or other unnecessary constraints.
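One thing worth checking while narrowing it down: the statement lists cl.comments in the GROUP BY, and Oracle does not accept a LOB column in a GROUP BY list; that is one known cause of exactly this ORA-00932 message. A common restructuring is to aggregate first and join the CLOB afterwards. The Python/sqlite3 sketch below only illustrates the shape of that query: SQLite does not enforce the LOB restriction, only eid and the count are kept (the other hc_curent_temp columns would go into the same inline view), and the rows and the demo function are hypothetical.

```python
import sqlite3

# Aggregate in an inline view first, so the comments column never appears in GROUP BY,
# then join the comments to the already-aggregated rows.
RESTRUCTURED_SELECT = """
SELECT agg.eid, agg.unit_count, cl.comments
FROM (SELECT eid, COUNT(unid) AS unit_count
      FROM hc_curent_temp
      GROUP BY eid) agg
JOIN hc_clob_temp cl ON cl.eid = agg.eid
ORDER BY agg.eid
"""


def demo():
    """Run the restructured SELECT against a few hypothetical rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE hc_curent_temp (eid INTEGER, unid TEXT)")
    conn.execute("CREATE TABLE hc_clob_temp (eid INTEGER, comments TEXT)")
    conn.executemany("INSERT INTO hc_curent_temp VALUES (?, ?)",
                     [(1, "E1"), (1, "M2"), (2, "E3")])
    conn.executemany("INSERT INTO hc_clob_temp VALUES (?, ?)",
                     [(1, "first call notes"), (2, "second call notes")])
    return conn.execute(RESTRUCTURED_SELECT).fetchall()


print(demo())  # [(1, 2, 'first call notes'), (2, 1, 'second call notes')]
```

In Oracle the same inline view would sit inside the INSERT ... SELECT, with the comments column taken from the join rather than from the GROUP BY.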

-

Best practices for placing the master image

I'm doing some performance/load-test analysis for View, and I'm curious about best practices for placing the master VM image. The question is specifically about disk I/O and throughput.

My understanding is that each linked clone still reads from the master image. If that is correct, it seems you would want the master image to reside on a datastore located on the same array as the rest of the datastores that house the linked clones (and not on some lower-performing array). The reason I ask is that my performance tests are based on some upcoming SSD products. Obviously, the amount of available space on the SSD is limited, but it provides immense amounts of I/O (100k+ IOPS and higher). I want to make sure that, by putting the master image on a datastore that is not on the SSD, I am not invalidating the high-end I/O performance I want from the SSD.

This leads to another question: if all the linked clones read from the master image, what is the general practice for the number of linked clones to deploy per master image before you start to have I/O contention problems against this single master image?

Thank you!

-

Omar Torres, VCP

This isn't really necessary. Linked clones are not directly tied to the parent image. When a desktop pool is created using one or more datastores, the parent is copied to each datastore as a so-called replica. From there, each linked clone is attached to the replica of the parent in its local datastore. The replica is unmanaged and offers the best performance, because there is a copy in every datastore that contains linked clones.

WP
