Buffering serial data before transmitting

Hello, I'm relatively new to LabVIEW and have a question about how to buffer data for the serial port before transmitting. I've attached the VI I have so far; this is how I expected it to work:

- Read in hexadecimal string (example: 001122334455)

- A For loop runs for half the length of the string, because I am transmitting in byte-sized blocks (example: 6F)

- Read the first byte of the ASCII string, convert it to its hexadecimal byte equivalent, and transmit it.

- Repeat until the string is completed.

Basically, I need to add a buffer that fills up until my while loop ends and then sends all the data at once. At the moment the delays between loop iterations are long enough to cause errors.

Any suggestions?

everettpattison wrote:

Basically, I need to add a buffer that fills up until my while loop ends and then sends all the data at once. At the moment the delays between loop iterations are long enough to cause errors.

Do you mean the while loop or the For loop? In the picture, your note says you need a buffer inside the For loop, not the while loop. The amount of computation going on inside this loop is so small that I can't imagine it being the cause of the delays, but you can easily change it to transmit the entire string at once. Put the VISA Write outside the For loop. Wire the string output of the conversion to the border of the For loop, creating a tunnel. Connect the output of this tunnel to Concatenate Strings, which will combine the array of strings into a single string. Then connect that to the VISA Write input.

EDIT: even better, get rid of the conversion, wire the U8 directly to the edge of the For loop, use Byte Array To String to convert the result to a string, and send this string to VISA Write. There is probably an even easier approach, but I haven't looked too carefully.
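Outside LabVIEW, the same idea (convert the whole hex string to bytes first, then write once) can be sketched in a few lines of Python. This is only an illustration: the function name `hex_to_payload` is hypothetical, and the write call mentioned in the last comment assumes a pySerial-style port object that is not part of the original VI.

```python
def hex_to_payload(hex_text: str) -> bytes:
    """Convert an ASCII hex string such as '001122334455' into raw bytes."""
    cleaned = hex_text.strip()
    if len(cleaned) % 2 != 0:
        raise ValueError("hex string must contain an even number of characters")
    # bytes.fromhex turns each pair of hex characters into one byte.
    return bytes.fromhex(cleaned)


payload = hex_to_payload("001122334455")
print(payload.hex(), len(payload))
# The whole buffer would then be sent in a single call, for example
# port.write(payload) on an already opened pySerial port (assumption).
```

Building the full payload before the single write removes the per-byte gaps that the loop-by-loop transmission introduces.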

Tags: NI Software

Similar Questions

-

Data buffer cache quality

Hi all

Can someone please tell me some ways in which I can improve the quality of the data buffer cache? It is currently at 51.2%. The DB is 10.2.0.2.0

I want to know all the factors I should keep in mind if I want to increase DB_CACHE_SIZE.

Also, I want to know how I can find the cache hit ratio.

In addition, I want to know which are the most frequently accessed objects in my DB.

Thank you and best regards,

Nick.Bolle wrote:

You can try to reduce the size of the buffer cache to increase hit ratio.

Huh? That's new! How would that happen?
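On the hit ratio question, the figure is commonly derived from three cumulative counters (in Oracle these are usually read from the V$SYSSTAT statistics "physical reads", "db block gets" and "consistent gets"). A minimal sketch of the arithmetic, with made-up sample numbers and a hypothetical function name:

```python
def buffer_cache_hit_ratio(physical_reads: int,
                           db_block_gets: int,
                           consistent_gets: int) -> float:
    """Return 1 - physical reads / logical reads as a fraction between 0 and 1."""
    logical_reads = db_block_gets + consistent_gets
    if logical_reads <= 0:
        raise ValueError("no logical reads recorded yet")
    return 1.0 - physical_reads / logical_reads


# Sample counter values invented for illustration only.
ratio = buffer_cache_hit_ratio(physical_reads=488,
                               db_block_gets=200,
                               consistent_gets=800)
print(f"{ratio:.1%}")
```

The counters are cumulative since instance startup, so comparing two snapshots taken some time apart usually says more than a single reading.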

Aman...

-

What is the data buffer size on the PX1394E - 3 50

Hello

I bought this drive a few days ago and I'm a little confused about the size of the data buffer. The box says 16 MB, the Toshiba web page says 8 MB, etc... Does anybody know what the data buffer size of this model is?

Is there software or a query I can use to check it?

BTW, great drive: fast, but extremely noisy when it comes to seeking through large files.

Thank you

Francisco

Hello

I have looked around a bit and it seems that your drive definitely has a 16 MB buffer. To check the details of your computer, you can use the tool "Sisoft Sandra". You can download it here: http://www.sisoftware.net/index.html?dir=&location=downandbuy&langx=en&a=

After downloading, just install it and you will get all the information about your computer, such as the buffer size of your external hard drive.

Regards

-

Dynamic action: how to make sure the "from date" is always before the "to date"

Hello

Using a form, I ask my users to provide dates...

Can someone tell me (step by step) how to set up a dynamic action so that if the date in item P20_FROM_DATE is after P20_TO_DATE, an error message is generated?

Thanks in advance

OK, let's start again. Delete your current date picker setup and try these settings.

P22_FROM_DATE settings

Show: On focus

Show other months: Yes

Use the same settings for P22_TO_DATE.

Create a dynamic action called, say, Compare the Dates

Event: Before Page Submit

Condition: JavaScript expression

Value: $v('P22_TO_DATE')<>

True Actions

Action: Alert

Fire when event result is: True

Text: The date cannot be less than this day.

Another True Action

Action: Set Focus

Fire on page load: Checked

Affected elements: Selection type Item(s), P22_TO_DATE

Jeff

-

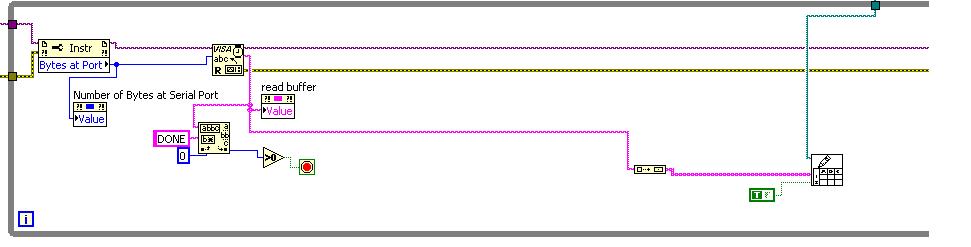

reading serial port constantly and update the string buffer

Hi guys,

I am facing a problem reading serial data using LabVIEW.

I have an unknown amount of data to read over RS-232 (serial). Using the Read Write Serial.vi example, I read the data continuously and monitor the buffer for the string DONE, but the string indicator that displays the output is not updated with the data.

The string should be:

1 2 3 4

5 6 7 8

I don't see one character at a time on the indicator.

This is the screenshot of the vi

Is there any method which will help me to do this?

Hi Dave,

Thanks a lot... you helped me finish all my work... It's always the small things that slip by quickly.

See you soon,

Sailesh

-

How to automatically change the date format: DDMMYYYY to exact

Hi all

I'm working on PDF forms with Acrobat Pro X.

I'd like to be able to type the date without punctuation and, when I press Tab to move to the next field, have the date automatically converted from DDMMYYYY to exact

Could someone help me please? Thank you very much.

Crissy

You must write your own validation and format scripts to achieve this.

If you do not need to validate that the entry is a real date, you can instead use the following arbitrary mask:

99.99.9999

-

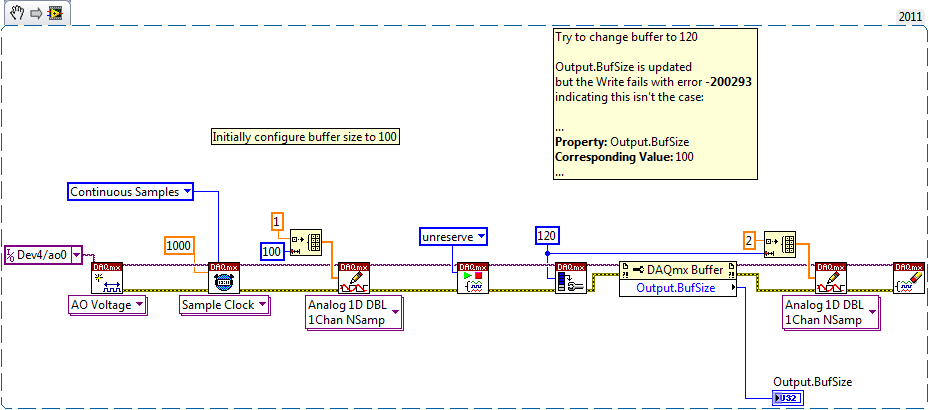

How to clear the output buffer, possibly resize it, and write again before starting the output task

I use PyDAQmx with a USB-6363, but I think the question is generic to DAQmx.

I have an output buffer that I want to be able to (re)write without starting the output task.

More specifically, I have a graphical interface with a few sliders that the user can move. Whenever a slider changes, a new set of values is loaded into the output buffer through DAQmxWriteAnalogF64. After setting the values, the user can click a button to start the output task.

In some cases the slider change does not require a change in buffer size, only a change in the data. In this case, I get the following complaint from DAQmx when trying to write:

The generation is not yet started, and not enough space is available in the buffer.

Set a larger buffer, or start the generation before writing more data than will fit in the buffer.

Property: DAQmx_Write_RelativeTo

Value: DAQmx_Val_CurrWritePos

Property: DAQmx_Write_Offset

Corresponding value: 0

Property: DAQmx_Buf_Output_BufSize

Corresponding value: 92

In other cases the slider change requires both a change in buffer size and a change in the data. In this case, I get the following error, but only after doing this a few times, each time increasing the size of the write.

DAQmx Write failed because a previous DAQmx Write automatically configured the output buffer size. The buffer size is equal to the number of samples written per channel, so no additional data can be written before starting the task.

Start the generation before the second DAQmx Write, or set Auto Start to true in all instances of DAQmx Write. To incrementally write to the buffer before starting the task, call DAQmx Configure Output Buffer before the first DAQmx Write.

Task name: _unnamedTask<0>

Status code: -200547

in function DAQmxWriteAnalogF64

I tried to configure the output buffer via DAQmxCfgOutputBuffer (in some cases setting it to zero or one sample, then setting it again, in an attempt to clear it), but that doesn't seem to do the trick.

Of course, I can work around the problem by loading the data only when the user clicks the final button, but that's not what I'm asking here.

Is it possible to "redo" the output write before starting the task?

Thank you

Michael

I have no hardware handy today to validate this, but try unreserving the task before writing the new buffer:

DAQmxTaskControl(taskHandle, DAQmx_Val_Task_Unreserve);

With a simulated device, this made the error go away in the case where the buffer stays the same size. You will need to validate that the data are in fact correct, but I think they should be (I would expect unreserving to reset the write pointer so that the old buffer is replaced with the new data).

I still get errors when trying to change the buffer size, though (on my simulated 6351). I posted about some similar errors when reconfiguring tasks here; I guess it is possible that this issue has also been fixed in 9.8 (I still use 9.7.5 on this computer). If the behavior is still present in the new driver, and also appears on real hardware (not just simulated), then it looks like a DAQmx bug that someone at NI should look into.

I wrote a simple LabVIEW VI that captures the error, in order to help people at NI reproduce it:

The best solution at the moment would probably be to re-create the task if you need to change the buffer size (or to avoid writing data until you are sure what the buffer size will be).
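The two suggestions above (unreserve and rewrite when the size is unchanged, re-create the task when the size changes) could be combined roughly as follows. This is a sketch under stated assumptions, not tested against hardware: the class `OutputBufferManager` and its four callables are hypothetical hooks, which with PyDAQmx would wrap task creation, the unreserve call shown above, DAQmxWriteAnalogF64 and DAQmxClearTask.

```python
from typing import Any, Callable, Optional, Sequence


class OutputBufferManager:
    """Reload an output buffer before the task is started.

    The four callables are supplied by the caller (hypothetical hooks);
    this class only decides which of them to call and in what order.
    """

    def __init__(
        self,
        create_task: Callable[[int], Any],
        unreserve: Callable[[Any], None],
        write: Callable[[Any, Sequence[float]], None],
        clear: Callable[[Any], None],
    ) -> None:
        self._create_task = create_task
        self._unreserve = unreserve
        self._write = write
        self._clear = clear
        self._task: Optional[Any] = None
        self._size: Optional[int] = None

    def load(self, samples: Sequence[float]) -> Any:
        n = len(samples)
        if self._task is not None and n != self._size:
            # Buffer size changed: discard the old task and build a new one.
            self._clear(self._task)
            self._task = None
        if self._task is None:
            self._task = self._create_task(n)
            self._size = n
        else:
            # Same size: unreserve first, as suggested in the reply above,
            # so the next write can replace the previously loaded data.
            self._unreserve(self._task)
        self._write(self._task, samples)
        return self._task
```

Each slider change would call `load(...)` with the new samples; starting the task stays a separate step triggered by the button.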

Best regards

-

Save the data before and after the occurrence of a trigger condition

Hello

I am working on an application that acquires data from 64 channels and performs many analyses.

One thing I need to implement is recording the data in the event of an alarm condition.

I've implemented data logging in many previous applications, but this one is difficult.

I need to save the data from a few seconds before to a few seconds after the alarm occurred, in a single file.

I thought of continuously writing data to a TDMS file while deleting the old data, until the alarm trigger occurs,

but I was not able to do that, since there are no blocks to remove data from a TDMS file.

I'm looking for the ideal approach to this kind of recording.

Any help will be appreciated.

What I have done in the past is to use a lossy queue as a circular buffer. When you get the trigger, dump the data in the queue to your file and then save however much data you want after the trigger.

For memory allocation reasons, do not use Flush Queue. Instead, use Dequeue Element inside a conditional FOR loop with a 0 timeout (reading the queue stops when you get a timeout or have read X samples).
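The lossy queue and the bounded, zero-timeout dequeue loop can be illustrated in Python. This is only a text-language analogue of the LabVIEW pattern; the class name `LossyQueue` and its method names are hypothetical.

```python
import queue


class LossyQueue:
    """Fixed-size FIFO that discards the oldest element when it is full."""

    def __init__(self, max_items: int) -> None:
        self._q: queue.Queue = queue.Queue(maxsize=max_items)

    def lossy_put(self, item) -> None:
        while True:
            try:
                self._q.put_nowait(item)
                return
            except queue.Full:
                # Drop the oldest sample to make room for the new one.
                try:
                    self._q.get_nowait()
                except queue.Empty:
                    pass

    def drain(self, max_items: int) -> list:
        """Dequeue up to max_items elements without waiting (zero timeout)."""
        out = []
        for _ in range(max_items):
            try:
                out.append(self._q.get_nowait())
            except queue.Empty:
                break  # the queue ran dry before max_items were read
        return out


ring = LossyQueue(max_items=5)
for sample in range(12):  # only the most recent five samples are kept
    ring.lossy_put(sample)
pre_trigger = ring.drain(max_items=5)
print(pre_trigger)
```

The `drain` loop stops on whichever comes first, an empty queue or the requested count, which mirrors the conditional FOR loop described above.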

-

Problem reading video image from the camera IP Axis - confusion of variant data buffer.

Hi there;

I am writing a VI for an Axis IP camera. It is working, but I need to extract individual images from the video stream so I can overlay text using the IMAQ text overlay functions.

The thing is, when I grab a picture using the Axis DLL library (GetCurrentFrame), it returns a buffer size and a Variant representing the raster data. The data is 921,640 bytes: a 40-byte header followed by the 640 x 480 x 3 raster data. When I run the VI, I get the correct value for the buffer size (921,640), so I know it works.

What I don't understand is that the video image is placed in a buffer of type Variant. I don't quite know how to extract the raster data from the "buffer", which is of type Variant. My apologies, I'm a bit ignorant about how to handle Variant types.
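Once the Variant has been converted to a plain byte array, separating the header from the pixels is simple slicing. A minimal Python sketch of that layout, assuming the sizes quoted above; the function names are hypothetical, and the row order and channel order are assumptions, since the post does not state them.

```python
HEADER_BYTES = 40
WIDTH, HEIGHT, CHANNELS = 640, 480, 3


def split_frame(buffer: bytes):
    """Split a raw frame into its 40-byte header and the raster bytes."""
    expected = HEADER_BYTES + WIDTH * HEIGHT * CHANNELS  # 921,640 bytes
    if len(buffer) != expected:
        raise ValueError(f"expected {expected} bytes, got {len(buffer)}")
    header = buffer[:HEADER_BYTES]
    pixels = memoryview(buffer)[HEADER_BYTES:]  # view on the data, no copy
    return header, pixels


def pixel_at(pixels, x: int, y: int):
    """Return the three channel values at (x, y).

    Assumes rows are stored top to bottom with interleaved channels;
    the actual order would have to be checked against the camera output.
    """
    offset = (y * WIDTH + x) * CHANNELS
    return tuple(pixels[offset:offset + CHANNELS])
```

The size check catches the case where the conversion from Variant did not produce the expected byte count.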

Does anyone have a suggestion?

This is the VI

Hi Peter,

Just to confirm, which IMAQdx version are you using? You will want version 2010.3, since it is the latest version: http://joule.ni.com/nidu/cds/view/p/id/1641/lang/en

The next issue is that Discover Ethernet Cameras is not necessary; it is only used for GigE Vision cameras. The VI was named before IP camera support was added, and the name is unfortunately confusing now. In any case, discovery of IP cameras takes place in the background and is automatic. The enumeration VI should list your camera. It may be worth first checking whether the camera appears in MAX. Note that the IP camera must be on your local subnet so that it can be discovered.

Regarding the examples, virtually any of the IMAQdx examples included in Help > Find Examples should work.

Eric

-

Smart way to save large amounts of data using the circular buffer

Hello everyone,

I am currently getting into LabVIEW while developing a five-channel measurement system. Each "channel" will provide up to two digital inputs, up to three analog inputs of CSR (sampling frequency will be around 4 k to 10 k per channel) and up to five analog thermocouple inputs (sampling frequency will be lower than 100 S/s). On certain user-defined events (such as a sudden drop in speed), the system should save a TDMS file that contains one row for each data channel, storing values from n seconds before the event and for a user-specified duration (for example, starting 10 seconds before the drop in rotation speed, with a length of 10 minutes).

My question is how to manage these rather large amounts of data in an intelligent way: how to get the event data onto the hard disk without losing samples, and without dumping huge amounts of data to disk by recording the signals when there is no event. I thought about the following:

- use a single producer loop that only acquires the data continuously at high speed and writes it into queues

- use a consumer loop to process packets of signals as they become available, identify events, and save the data once an event is triggered

- use a third loop with an event structure so the VI can be controlled without polling the front panel controls each time

- use some kind of circular buffer in memory in the consumer loop to store a certain amount of data that can be written to the hard disk.

I hope this is the right way to do it so far.

Now, I thought about three ways to design the circular data buffer:

- use RAM as a buffer (queues or arrays with a limited number of entries), which is written to disk in one step when the event is over, while the rest of the program and the DAQ should stay active

- stream directly to the hard disk using the advanced TDMS functions, resetting the TDMS write position back to the first entry once a specific amount of data has been written

- stream all data to the hard disk using TDMS streaming, splitting the file at certain intervals and later deleting the TDMS files that contain no anomalies, while the program keeps running.

Regarding the first option, I fear there will be problems with quickly growing arrays/queues. In particular, when saving the data from RAM to disk, my program could be stuck writing to disk for a while and thus lose samples in the DAQ loop, which I want to continue without interruption.

Regarding the second, I have had a lot of trouble with TDMS: data easily gets corrupted, and I am not sure whether TDMS Set Next Write Position suits my needs (I would need to adjust the positions for (3 analog + 2 ctr + 5 thermo) * 5 channels = 50 data rows plus a timestamp in the worst case!). I am also afraid the hard drive won't be able to write fast enough to stream all the data at the same time in the worst case.

Regarding the third option, I fear that closing one TDMS file and opening a new one to continue recording will not be fast enough to avoid losing data packets.

What are your thoughts here? Has anyone already dealt with similar tasks? Does anyone know some rough figures for how much data one can expect to stream to an average hard disk at the same time?

Thank you very much

OK, I'm reaching back four years to when I implemented this system, so bear with me.

Let's say we have a trigger and want to capture N samples before the trigger and M samples after it. The scheme is somewhat complicated, because the goal is not to "miss" any samples. We came up with this several years ago and it seems to work; there may be an easier way to do it, but never mind.

We created two queues: a "Pre-Event" queue of fixed length N, and an Event queue of unlimited size. We use a producer/consumer design, with a state machine running in each loop. Without worrying about naming the states, let me describe how each one works.

The producer begins in its "Pre-Trigger" state, using Lossy Enqueue to place data in the Pre-Event queue. If the trigger does not occur during this state, we stay in it for the next sample. There are a few details I am forgetting about how we ensure that the Pre-Event queue is full, but skip that for now. At some point, the trigger switches us to the Event state. Here we enqueue into the Event queue, counting the number of items we enqueue. When we get to M, we switch back to the Pre-Event state.

On the consumer side, we start in a "Waiting" state, where we just ignore the two queues. At some point the trigger occurs, and the consumer moves to a Pre-Event state. It is responsible for dequeuing (and handling) the N elements in the Pre-Event queue, then handling the following M elements in the Event queue. [Hmm, I don't remember how we knew when we had finished the Event queue: did we count M, or did we wait until the queue was empty and the producer was back in the Pre-Event state?]

There are a few "holes" in this simple explanation, some of which I think we filled. For example, what happens when triggers are too close together? One way to handle this is to not allow a trigger to be processed until the Pre-Event queue is full.
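The producer side of this scheme can be sketched as a small state machine. This is a single-threaded Python illustration rather than the original LabVIEW design: the class name `TriggerCapture`, the state names and the sample values are hypothetical, and the consumer loop is collapsed into a list of finished captures.

```python
from collections import deque


class TriggerCapture:
    """Keep N samples before a trigger and M samples after it."""

    def __init__(self, n_pre: int, m_post: int) -> None:
        self.pre = deque(maxlen=n_pre)  # lossy pre-event buffer of length N
        self.post: list = []
        self.m_post = m_post
        self.state = "pre_event"
        self.captures: list = []

    def push(self, sample, triggered: bool) -> None:
        if self.state == "pre_event":
            self.pre.append(sample)  # the oldest sample falls off when full
            # Triggers are ignored until the pre-event buffer holds N samples.
            if triggered and len(self.pre) == self.pre.maxlen:
                self.state = "event"
        else:
            self.post.append(sample)
            if len(self.post) == self.m_post:
                # M post-trigger samples collected: hand over N + M samples.
                self.captures.append(list(self.pre) + self.post)
                self.pre.clear()
                self.post = []
                self.state = "pre_event"


capture = TriggerCapture(n_pre=4, m_post=3)
for sample in range(20):
    capture.push(sample, triggered=(sample == 9))
print(capture.captures)
```

Because the pre-event buffer is cleared after each capture, a second trigger arriving immediately afterwards is ignored until N new samples have accumulated, which is one way to handle triggers that are too close together.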

Bob Schor

-

Check the free space on the datastore before doing an svmotion

I'm trying to figure out how to check the free space on the datastore before proceeding with the svmotion.

Example:

- must first check that sizeof(VMs to be moved) < sizeof(destination) - 50 GB before moving

- should leave a 50 GB buffer, so that the script ends when all the VMs have been moved from the source datastore or there are only 50 GB left on the source data store

- must move VMs from the source datastore to the destination datastore 2 at a time, until completion

- must create a local log file, and then send this log file using the SMTP relay server.

I just started using PowerShell and have played with different things for about a week. I'm trying to figure out this problem; does anyone have advice or suggestions for me?

Any help would be greatly appreciated...

Hello

Yes, the $vmname is empty, change the line as below

Move-VM -VM (Get-VM -Name $vmm.Name) -Datastore (Get-Datastore -Name $DSname)

-

How can I erase the data on my laptop before I give it away?

How can I erase the data on my laptop before I give it away?

See this document.

-

Hello

I sold my iPad this week. Before restoring it to factory defaults, I made a backup (it was already backing up regularly to iCloud anyway). I did it manually a couple of times to make sure that the data had been saved.

I have just logged in to iCloud on my PC but it is empty. I can go into Mail, Notes, etc., but there is nothing there. Can someone tell me what I am doing wrong and how I can find the backup data?

Thank you

Your backup data is not visible on iCloud.com

Where do you want to restore the data to? You did an archive of data which cannot be used on another device. It is not accessible in any other way.

-

AT100 - how do you back up the data before a software update?

Hi guys,

I'm in Australia, just bought an AT100 (model PDA01A-005001), and am trying to get my head around how it works.

This sounds like a noob question, but although the manual tells me several times to back up the tablet's (AT100) data to an external device... it does not say how. I searched the forum and no one has asked such a noob question, which has me thinking I'm missing something.

I am new to Android - until now, I had Windows and Apple-based products.

I activated the option to back up to Google, but that sounds like it only covers the account, not the applications and app data... Please correct me if I'm wrong.

So, just to give a little more background, I specifically hunted around for the model PDA01A-005001 because Toshiba states it is already updated to Android 3.1. Imagine my surprise when I saw that the system is actually 3.0.1. So I shot an email to Toshiba customer support and was told to go through the Service Station.

Well then... so I go into SS and see that Android 3.2.1 is the available update, but before starting, SS gives a big warning about backing up my data... okey dokey, I'll just read the manual on how to do this... oh, wait...

Thank you in advance for your help!

Rob

Hello

The AT100 supports an SD card, so you can move all of your files (images and the like) to the SD card.

There is also a USB port that allows you to connect external hard drives, so you can move the files to the connected hard drive.

You can use a preinstalled application such as Toshiba File Explorer to see your files, or you could install another app from the Android Market.

-

Cannot restore bookmarks from before the date I upgraded; how can I solve it?

The problem is that I upgraded to the Firefox 4 beta just to test it, then went back to Firefox 3.6 and lost all my bookmarks and settings. I tried FEBE, Xmarks and also MozBackup, but they go back no further than the date I upgraded. Can someone help me with this problem? I would be very grateful if someone could solve it. Thank you all. x

This thread was marked solved and locked

Did you uninstall the Firefox 4.0 beta, or simply go back to using 3.6?

If you actually uninstalled the 4.0 beta, I hope you did not select "Remove my Firefox personal data and customizations" in the uninstall routine. If you used this option, you have deleted all your Firefox data, for all versions of Firefox.

Xmarks, FEBE and MozBackup only work for recovery if you made a backup with these programs/extensions before you uninstalled Firefox. They cannot retrieve data that you deleted from your PC; they are not file recovery programs. They only restore their own backups.

Maybe you are looking for

-

Satellite A210-1AP - work time battery issue

How long does the laptop run on battery in energy-saving mode?

-

I have the "Show hidden files" option checked, but I still don't see some folders

Even though I have checked the "Show hidden files" option, I still cannot see some folders that I KNOW I created. If I search for them I can see them, but if I prepare a document and then want to save it in a known folder... the folder will not be displayed

-

Please help me. My material measurement of vibration on a different section of the power gen

Please help me. My equipment measures vibrations on a different section of material power. It has 8 analog input channels, each with a preamp and DSP, then a final microprocessor with display. How could I Connector channels read and analyze the s

-

Cannot install updates of security KB265639 - KB2686828

I cannot install the security updates KB265639 and KB2686828 for .NET Framework on Win XP x86. I have installed all other updates, but these two failed three times. Could you please help? Thank you.

-

Clear the check box 'Results post successfully' after a report on internal server.

I found how to disable the dialog box asking for name and email ID, but how do I disable the checkbox? Or change the wording/title? Turning it off would be better, though. Thank you, James