Calculation of the CRC-16 CCITT

I need help generating a CRC-16 CCITT. I've searched high and low and can't find the right one. Here is a list of the sites I have used while trying to find a LabVIEW VI that does the trick:

http://zone.NI.com/DevZone/CDA/EPD/p/ID/1660

https://decibel.NI.com/content/docs/doc-1103

http://forums.NI.com/T5/LabVIEW/CRC-CCITT-16bit-initial-value-of-0xFFFF-or-0x1D0F/TD-p/896664

http://forums.NI.com/T5/LabVIEW/CRC-16-CCITT-of-serial-packets/TD-p/1471386

http://forums.NI.com/T5/LabVIEW/CRC-CCITT/TD-p/109061

http://forums.NI.com/T5/LabVIEW/computing-CRC/TD-p/825325

Some of these even offer several CRCs to choose from.

Now, what I have is a piece of C code. This is where I need help, because I have no idea how to turn C into G. And I'm certainly not sure why this CRC differs from the 'standard' ones found in the links above.

I have attached the PDF with the code.

Here are a few hexadecimal strings and their corresponding CRC:

String: 00 CRC 08:07 1

String: 00 09 CRC: 1-06

String: 02 22 03 E8 CRC: E5 6A

String: 02 27 03 E8 CRC: B5 CF

String: 02 29 00 02 CRC: EB 57

String: 04 29 00 01 00 03 CRC: CC 94

String: 02 00 64 3D CRC: 05 38

If there is someone out there willing to give this a shot, I would CERTAINLY be happy!

Paul.

Ah. There is a small tweak in there that makes it different from the 'standard' CRC. How sneaky of them. This code gives me the results you're looking for.
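For comparison, the 'standard' CRC-16 CCITT family from the linked threads can be sketched as below. This is not the attached (tweaked) code, which isn't reproduced in the post. It is the plain MSB-first algorithm with polynomial 0x1021. The common variants differ only in the initial value: 0xFFFF or 0x1D0F, as in one of the linked thread titles, or 0x0000 for the XMODEM flavour. The function name is our own.

```python
def crc16_ccitt(data: bytes, init: int = 0xFFFF) -> int:
    """MSB-first CRC-16 with the CCITT polynomial 0x1021 (x^16 + x^12 + x^5 + 1).

    No input/output reflection and no final XOR; only the initial value
    differs between the common "CCITT" variants.
    """
    crc = init & 0xFFFF
    for byte in data:
        crc ^= byte << 8  # bring the next byte into the top 8 bits
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc
```

On the usual catalogue check string `b"123456789"`, the initial values 0xFFFF, 0x1D0F and 0x0000 give 0x29B1, 0xE5CC and 0x31C3 respectively. We have not checked the hex strings from the question against this function. Because of the tweak, they may well not match any of these variants.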


Similar Questions

-

Converting C code for CRC calculation to LabVIEW

Hi guys, I'm a bit stuck. I'm trying to implement a piece of C code in LabVIEW, and I'm apparently making a mistake. Could someone compare the C code with my VI and tell me what I'm missing? Thanks in advance.

For each combination of Byte1 and Byte2, the CRC result should be (in decimal):

| Byte1 | Byte2 | CRC |
|---|---|---|
| 254 | 0 | 061 |
| 253 | 0 | 002 |
| 252 | 0 | 023 |
| 251 | 0 | 124 |
| 250 | 0 | 105 |
| 249 | 0 | 086 |
| 248 | 0 | 067 |
| 247 | 0 | 128 |
| 246 | 0 | 149 |
| 245 | 0 | 170 |

I made a few small improvements plus one correction (you forgot the binary inversion).

-Benjamin
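Working backwards from the table, every row listed is reproduced by a plain MSB-first CRC-8 with these parameters: polynomial 0x07 (x^8 + x^2 + x + 1), initial value 0x00, and the final bitwise inversion mentioned in the reply. This is an inference from those ten rows only. The poster's C code and VI aren't reproduced here, so treat the polynomial and initial value as assumptions. The function name is hypothetical.

```python
def crc8_inverted(data: bytes, poly: int = 0x07, init: int = 0x00) -> int:
    """MSB-first CRC-8 followed by a final bitwise inversion (XOR with 0xFF).

    poly=0x07 and init=0x00 are inferred from the table, not taken from the original C code.
    """
    crc = init & 0xFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 0x80:
                crc = ((crc << 1) ^ poly) & 0xFF
            else:
                crc = (crc << 1) & 0xFF
    return crc ^ 0xFF  # the "binary inversion" that was missing from the VI
```

For example, `crc8_inverted(bytes([254, 0]))` returns 61, matching the first row. Without the final `^ 0xFF`, the same input gives 194 (0xC2), which is one quick way to spot a missing inversion.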

-

Calculating the average value of sorted data and drawing a polar plot

Hello

I made a VI that calculates the average wind/rotor speed ratio in 30-degree sectors (by wind direction). It also draws a polar plot of the calculated data. Everything works, but I would like to make more detailed calculations and drawings by increasing the resolution to 1 degree, or...

The problem is that the VI I made doesn't scale well. At the moment I have 12 parallel case structures to calculate the average values, plus a Build Array function to collect the calculated data for the polar plot so it draws the image in real time. I know it's probably the worst way to do it, but since I've only done a few things with LV, it was the only way I managed to do what I wanted.

Now, if I continue the same way to reach my goal, I will have to create 360 parallel case structures... that's crazy.

Calculating the average of an array or matrix inside the loop, or doing something similar, would probably be the best solution (zero/empty values should not be included in the average).

So drawing the polar plot is not a problem, but creating a reasonable averaging system is. Any ideas?

I would also like to rotate the plot so north is at the top (0 deg), with degrees increasing clockwise around the polar plot dial (as on a compass).

The VI is attached (a simplified version of the complete system).

I have these signals:

-Wind speed

-Wind direction

-Rotor speed

I want to:

-Calculate the average wind/rotor speed ratio in sectors (5 degrees, 1 degree)

-Draw a polar plot of the averaged wind/rotor speed ratio as a function of wind direction

I'm using LabVIEW 2009

Thank you very much.

Is this closer to what you're looking for?
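One way to avoid a case structure per sector is to keep one running sum and one count per sector, indexed by the sector number, and to divide at the end. Zero or empty samples are skipped so they don't drag the average down. The sketch below shows that idea in Python rather than G, and the function names are hypothetical. In LabVIEW, the two lists could map onto two arrays kept in shift registers, but that mapping is our suggestion and is not taken from the attached VI. The second helper converts a compass bearing (0 = north, clockwise) to the usual mathematical angle (0 = east, counter-clockwise). You would need that conversion if a plot only supports the mathematical convention.

```python
def sector_averages(directions_deg, ratios, sector_width_deg=1.0):
    """Average `ratios` per wind-direction sector, skipping zero/empty samples.

    Returns one entry per sector; None marks sectors with no valid samples.
    """
    n_sectors = int(round(360.0 / sector_width_deg))
    sums = [0.0] * n_sectors
    counts = [0] * n_sectors
    for direction, ratio in zip(directions_deg, ratios):
        if ratio is None or ratio == 0:
            continue  # zero/empty values are not part of the average
        index = int((direction % 360.0) // sector_width_deg) % n_sectors
        sums[index] += ratio
        counts[index] += 1
    return [s / c if c else None for s, c in zip(sums, counts)]


def compass_to_math_angle(compass_deg):
    """Compass bearing (0 = north, clockwise) -> math angle (0 = east, counter-clockwise)."""
    return (90.0 - compass_deg) % 360.0
```

Changing `sector_width_deg` from 30 to 5 or 1 changes the resolution without adding any structures.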

-

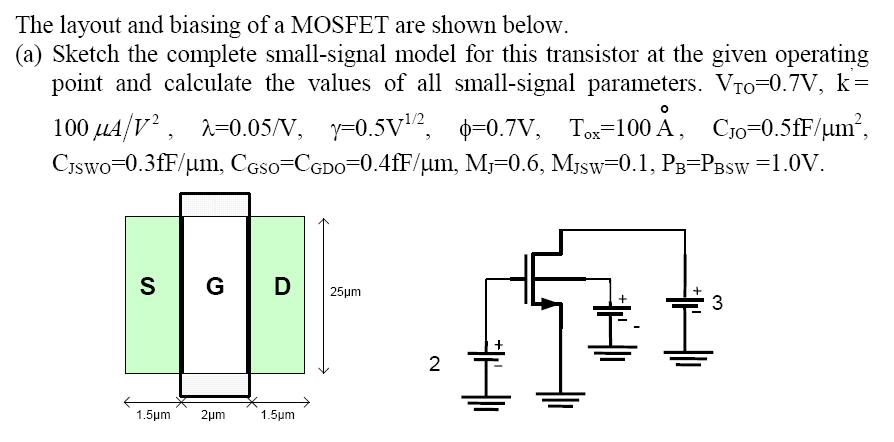

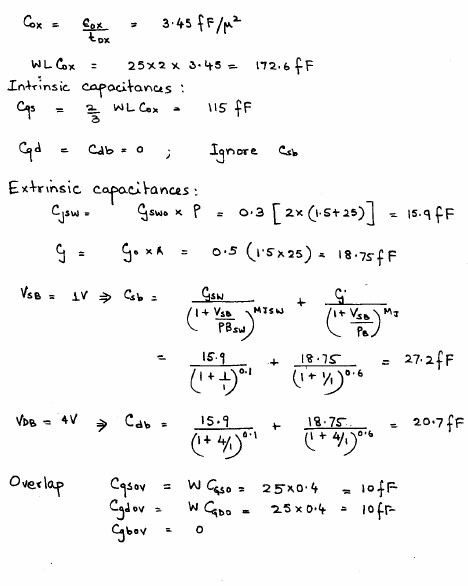

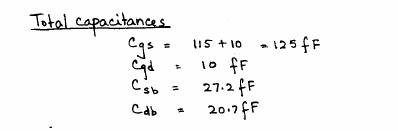

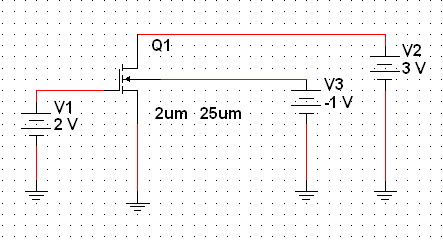

Translate 'Weird' in the calculation of the capacity of MOSFET

Hello world

I have a problem with the result of the simulation to calculate N - CH Mosfet capacitance (Cgs, CGD and CBD).

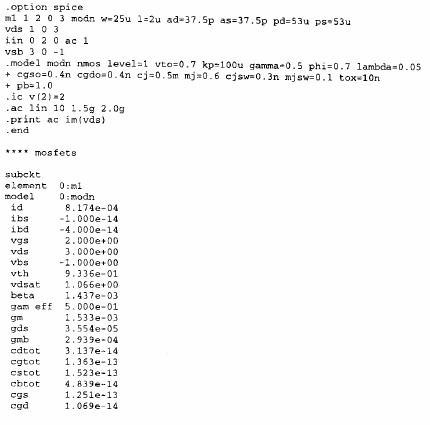

In this simulation, I tried to check my manual calculation with the result of Spice to 11.0 Multsim. But the result in Multism simulation is stopped different from the manual calculation or Pspice/Hspice simulation.

Manual calculation:

Multisim simulation:

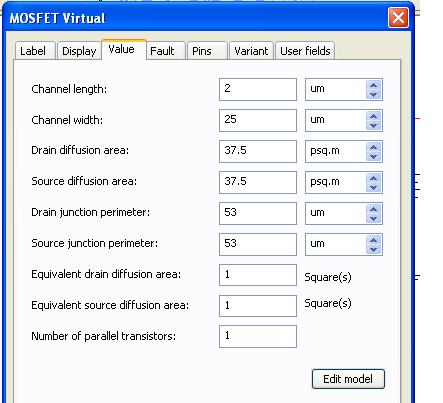

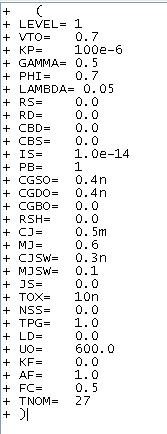

Parameter definitions in Multisim:

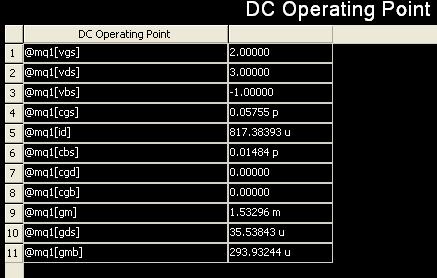

Multisim DC:

PSpice DC:

It appears from these results that PSpice gave a value close to the theoretical calculation for this MOSFET, while the odd result came from Multisim.

Can someone help me solve this problem? Is Multisim not as powerful as other SPICE software? Or did I simply mess up the Multisim settings and end up with the wrong answer?

As I understand it, you are looking at the device's capacitance output variables. Only these reported values are wrong: they are identical to the DC values. We checked the code, and the capacitances used in the analysis are the right ones.

To illustrate this, I used real circuit variables (voltage and current) to calculate the impedance looking into the gate over a frequency range of up to 1 GHz. (It's a little non-trivial to demonstrate the impedance of specific capacitances, because you can't easily isolate a single capacitance.) The results are similar to PSpice.

So, to reiterate: real circuit simulation results are not affected. For now, we ask that you do not look at the device capacitance variables, because they are incorrect.

Hope that helps.
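The poster's manual calculation only survives in the lost images, so we can't reproduce it. For reference, the textbook Level-1 (Meyer) estimates that such a hand calculation typically uses in saturation are sketched below, together with the SPICE-style reverse-biased junction formula for Cbd. This assumes an SiO2 gate oxide (relative permittivity 3.9). The parameter names mirror the usual SPICE model parameters (CGSO, CGDO, CJ, CJSW, PB, MJ, MJSW), but the values in the example are made up and are not the poster's.

```python
EPS0 = 8.854187817e-12   # vacuum permittivity, F/m
EPS_OX_REL = 3.9         # assumed relative permittivity of SiO2


def meyer_saturation_caps(w, l, tox, cgso=0.0, cgdo=0.0):
    """Meyer-model gate capacitances in saturation (farads).

    w, l, tox in metres; cgso/cgdo are overlap capacitances per unit width (F/m).
    """
    cox = EPS_OX_REL * EPS0 / tox              # oxide capacitance per unit area
    cgs = (2.0 / 3.0) * cox * w * l + cgso * w
    cgd = cgdo * w                             # only overlap remains in saturation
    return cgs, cgd


def junction_cap(v_reverse, cj, area, cjsw, perimeter, pb, mj, mjsw):
    """Reverse-biased drain-bulk junction capacitance, SPICE level-1 style.

    cj in F/m^2 (bottom), cjsw in F/m (sidewall), v_reverse >= 0 in volts.
    """
    bottom = cj * area / (1.0 + v_reverse / pb) ** mj
    sidewall = cjsw * perimeter / (1.0 + v_reverse / pb) ** mjsw
    return bottom + sidewall
```

For W = 10 µm, L = 1 µm and tox = 10 nm with no overlap, `meyer_saturation_caps` gives Cgs of about 23 fF and Cgd = 0. Those are the kinds of numbers that can be compared against an impedance measurement like the one described in the reply.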

-

Rapid calculation of exponential decay constants

Hi all

I'm trying to develop a routine that quickly calculates the exponential decay constant of a given waveform. I use two different techniques: one based on the DFT and another using linear regression on successive integration (LRS). Both usually give the correct time constant for the input waveform, even with a significant amount of noise. The LRS solution is significantly less sensitive to noise (desirable), but much slower. The DFT computations run on the order of tens of microseconds for a 1000-point waveform, while the LRS, as coded in LabVIEW, runs at about 1.5 ms. The LRS technique was developed by researchers at George Fox University in Oregon, and they claim computation times on the order of 200 µs for both techniques. I have been unable to reach that time with the LRS technique (obviously). I tried using a Call Library Function Node to call a DLL compiled from this code in C, but at best I get a speed-up factor of 2. In addition, calculations using the DLL seem to be additive. For example, four similar calculations running in the same structure with no dependence on each other take about 4 times as long as one. In my case this is not enough, because I'm trying to run 8 of these calculations at 1 kHz.

Looking through the discussions, I have been unable to determine whether I should expect a performance gain from well-written C over well-written LabVIEW (most replies seem to ask why you want to do something externally). In any case, I'm attaching the code so you can judge whether it's well written, or whether there is no room for performance improvement. The main VI is Test analysis Methods.vi, which generates a scaled, offset, noisy exponential waveform; the decay constant tau is then calculated by Tau.vi. I am also attaching the C code and the DLL for solving the LRS equations. The VIs were coded in LabVIEW 8.6, and the C was compiled with the latest version of Microsoft Visual C++ Express Edition. The main VI uses the FPGA module's Tick Count VI to measure the computation time in microseconds, so if you do not have this module you should remove that code.

Any thoughts are appreciated. Thanks, Matt

Hi Matt,

After changing the summation loop in your CWR calculation, the routine runs as fast as (or faster than) the DFT variants... Anyway, check the results to make sure they are still correct.
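The successive-integration idea can be sketched as below. This is one common formulation of fitting an exponential by successive integration and a linear regression. It is not the George Fox University code, Matt's C code, or the specific change made in the reply. The signal model is y(t) = A·exp(-t/tau) + C. Integrating dy/dt = -(y - C)/tau from 0 to t gives y(t) = y(0) + (C/tau)·t - (1/tau)·S(t), where S(t) is the running integral of y. So y is linear in [1, t, S], and the regression coefficient on S is -1/tau. The running integral and all the regression sums are built in a single pass over the samples. The function names are hypothetical.

```python
def tau_successive_integration(y, dt):
    """Estimate tau for y(t) = A*exp(-t/tau) + C from uniformly sampled data."""
    n = 3
    ata = [[0.0] * n for _ in range(n)]  # normal-equation matrix A^T A
    aty = [0.0] * n                      # right-hand side A^T y
    s = 0.0
    for k, yk in enumerate(y):
        if k > 0:
            s += 0.5 * (y[k - 1] + yk) * dt  # trapezoidal running integral S(t)
        row = (1.0, k * dt, s)
        for i in range(n):
            aty[i] += row[i] * yk
            for j in range(n):
                ata[i][j] += row[i] * row[j]
    coef = _solve(ata, aty)
    return -1.0 / coef[2]  # coefficient on S is -1/tau


def _solve(a, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x
```

The cost is one pass over the waveform plus a fixed 3x3 solve. No per-sample inner summation is needed, and that kind of loop structure is what usually decides whether this method keeps up with the DFT variants.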

-

How do you reinstall the Calculator in the Start menu?

Original title: HOW DO YOU REINSTALL THE CALCULATOR ON YOUR COMPUTER IN START > PROGRAMS? HOW DO YOU REINSTALL THE WINDOWS CALCULATOR IN THE START MENU UNDER ACCESSORIES?

Go to Add or Remove Programs and click Add/Remove Windows Components. Select Accessories and Utilities and click Details, then select Accessories and click Details. Put a checkmark next to Calculator, then click OK, OK, then Next.

If Calculator is already checked, uncheck it and click OK, OK, then Next. Then go back, check it again, and click OK, OK, then Next.

-

Shut down an interface based on CRC errors

We have a 4510 with dual 10-gig links to a 6509. When one of our interfaces starts accumulating CRC errors, it hurts latency and availability for our users behind the 4510. Once the interface is err-disabled or we shut it down manually, spanning tree unblocks the other 10-gig link and traffic starts to flow as usual. Is it possible to use EEM to detect these input CRC errors and shut down the interface?

I'm looking at the following as a solution:

event manager applet Te5/1 trap authorization bypass

event interface name TenGigabitEthernet5/1 parameter input_errors_crc entry-op gt entry-val 0 entry-type value exit-comb or exit-op gt exit-val 0 exit-type value exit-time 5000 exit-event true poll-interval 100 maxrun 10000

action 1 cli command "configure terminal"

action 2 cli command "interface te5/1"

action 3 cli command "shutdown"

An EEM applet will work for you, but the way you have designed it won't do what you want. Try this:

event manager applet monitor_te51 authorization bypass

event interface name TenGigabitEthernet5/1 parameter input_errors_crc entry-op gt entry-val 0 entry-type increment exit-op eq exit-val 0 exit-type increment

action 1.0 cli command "enable"

action 2.0 cli command "config t"

action 3.0 cli command "int te5/1"

action 4.0 cli command "shutdown"

-

How is the priority score calculated in Watchlist Screening (OWS)?

How is the priority score calculated in Watchlist Screening (OWS)?

I tried asking support, and they directed me to this forum.

The implementation guide did not help: http://www.oracle.com/technetwork/middleware/ows/documentation/ows-impl-guide-2235293.pdf

Thank you

Karen

Pretty simple (and support should have been able to tell you, sorry).

An alert can have several relationships. Typically, this is because a given customer record (name) matches several different alias names of the same person or entity.

Each of these relationships has a priority score, which is configured in the Watchlist Screening matching rules. Whichever matching rule created a relationship has an associated priority score that indicates the strength of that match.

By default, the alert's priority score is set to the highest priority score of any relationship in the alert; in other words, the score of the strongest match.

Mike
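The default rule described in the reply amounts to taking a maximum over the alert's relationships. The sketch below illustrates only that rule. The `Relationship` type and its field names are hypothetical and are not OWS's actual data model.

```python
from dataclasses import dataclass


@dataclass
class Relationship:
    matched_alias: str   # hypothetical: the watch-list alias this record matched
    priority_score: int  # set by the matching rule that created the relationship


def alert_priority_score(relationships):
    """Default behaviour per the reply: the alert takes its strongest match's score."""
    return max(r.priority_score for r in relationships)
```

An alert with relationships scored 40, 85 and 60 would therefore get a priority score of 85.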

-

Hi all

I came across this error last Monday. I've tried all the recommendations and configurations, and nothing seems to solve the problem.

Here is the error message:

[Thu Sep 24 12:04:27 2015]Local/ARPLAN/ARPLAN/Ess.Tee@MSAD_2010/9240/Error(1012703)

Unknown calculation type [0] during the dynamic calculation. Only default agg/formula/time balance operations are handled.

[Thu Sep 24 12:04:33 2015]Local/ARPLAN/ARPLAN/Ess.Tee@MSAD_2010/9240/Warning(1080014)

Transaction [0x2e007c (0x56042d17.0xeadd0)] aborted due to status [1012703].

[Thu Sep 24 12:04:33 2015]Local/ARPLAN/ARPLAN/Ess.Tee@MSAD_2010/8576/Warning(1080014)

Transaction [0x40007d (0x56042d18.0x781e0)] aborted due to status [1012703].

[Thu Sep 24 12:04:34 2015]Local/ARPLAN/ARPLAN/Ess.Tee@MSAD_2010/736/Info(1012579)

Total Calc Elapsed Time for [Forecast.csc] : [621.338] seconds

The script I'm running:

SET CACHE HIGH;

SET MSG SUMMARY;

SET NOTICE LOW;

SET UPDATECALC OFF;

SET AGGMISSG

SET CALCPARALLEL 2;

SET CREATEBLOCKONEQ

SET LOCKBLOCK HIGH;

FIX ("FY16", "Final", "Forecast", "11+1 Forecast", "10+2 Forecast", "9+3 Forecast", "8+4 Forecast", "7+5 Forecast", "6+6 Forecast", "5+7 Forecast", "4+8 Forecast", "3+9 Forecast", "2+10 Forecast", "1+11 Forecast")

FIX (@IDESCENDANTS("Entity"))

CALC DIM ("Account");

ENDFIX

CALC DIM ("Entity", "Currency");

ENDFIX

In essbase.cfg I have already included:

NETDELAY 24000

NETRETRYCOUNT 4500

; Calculator cache settings

CALCCACHEHIGH 50000000

CALCCACHEDEFAULT 10000000

CALCCACHELOW 200000

; Lock block limits

CALCLOCKBLOCKHIGH 150000

CALCLOCKBLOCKDEFAULT 20000

CALCLOCKBLOCKLOW 10000

Please suggest a way to fix this error. I get a similar error for other calculations as well.

Kind regards

EssTee

Are you positive that no one has added a new level-0 member tagged as Dynamic Calc?

Which versions are you using?

-

Rate calculations and aggregation in Essbase ASO SEEP cubes

The issue (an ASO limitation) that we are trying to find a solution/workaround for, on PBCS (Cloud):

Details of the application:

Application: ASO-type application in Oracle Planning Cloud 11.1.2.3 (PBCS)

Dimensions: 8 dimensions in total. Account has a dynamic hierarchy; the remaining 7 dimensions have stored hierarchies. Only 2 dimensions have about 5000 members; the others are relatively small, flat hierarchies.

Description of the requirement: We have a lot of calculations in the outline that use amount = units * rate type logic. This calculation logic must apply only at Level0 intersections, and the resulting data must then roll up to the respective parents across all dimensions. But when we apply this logic as a hierarchical/formula calculation in ASO, the calculation (i.e., amount = units * rate) is applied at all levels of the remaining dimensions, not only the leaf level. Here, rates are also members derived using MDX formulas.
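A small numeric example shows why applying amount = units * rate at every level gives the wrong parent values. With two made-up leaf members, the required result multiplies first and then sums. Evaluating the formula on already-aggregated parent values multiplies the sums (or averages) instead. The function names and numbers are illustrative only.

```python
def leaf_then_rollup(units, rates):
    """Required behaviour: amount = units * rate at leaf level, then summed to the parent."""
    return sum(u * r for u, r in zip(units, rates))


def formula_at_parent(units, rates, rate_agg=sum):
    """What happens when the formula runs on parent-level (already aggregated) values."""
    return sum(units) * rate_agg(rates)
```

With units [10, 20] and rates [2.0, 5.0], the correct parent amount is 20 + 100 = 120. If the parent's rate is the sum of the children (7.0), the formula gives 30 * 7.0 = 210. Averaging the rates (3.5) does not fix it either, giving 30 * 3.5 = 105.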

Some of the options explored so far:

Option 1: This is expected behavior in ASO, since all stored hierarchies are calculated first, then the dynamic hierarchies. So we tried changing the formula of each calculated member to explicitly summarize data at parent levels, using the algorithm shown below:

IF (leaf-level combination)

amount = units * rate

ELSE /* for all parent levels */

use the Sum function to add up the amounts across the children of the current members of dimension1, dimension2 and so on.

Result: Retrieval works through the parents of a single dimension. When summary-level members are selected in 2 or more dimensions, the retrieval freezes.

Option 2: Change the hierarchy type of all the dimensions to "Dynamic" so that they calculate after Account (i.e., after amount = units * rate runs at Level0 intersections).

Result: Same as Option 1. Although the aggregation works through 1 or 2 dimensions, it freezes when the summary-level members come from many dimensions.

Option 3: Use an ASO custom calc.

We created a custom calc that fixes the POV on the Level0 members of every dimension and applies the formula amount = units * rate.

Result: The calc never finishes, because the rate it uses is a dynamic calc with an MDX formula (which is needed to roll rates forward from a specified period into all subsequent years).

Any help with this would be greatly appreciated.

Thank you and best regards,

Alex keny

Your best bet is to use an ASO allocation; it makes a big difference (a ton).

There are a few blog posts out there that can help you achieve this goal (including mine). The trick is to create a calculated member with NONEMPTYMEMBER in its formula.

It will then be a member with an MDX formula like this:

NONEMPTYMEMBER Units, Rates

Units * Rates

Now do a data copy (allocation) from this member to a stored member.

http://www.orahyplabs.com/2015/02/block-creation-in-ASO.html

Regards

Celvin Kattookaran

PS: I found that NONEMPTYTUPLE does not work, so I still use NONEMPTYMEMBER.

-

How do I create a custom calculation for the tax portion of a subtotal field?

The calculation must be the number × 3 divided by 23 (for example, 100 × 3 / 23 = 13.0435). I think code needs to be written for it, but I don't know what it should be or how to do this.

You don't really need any code. Use Simplified Field Notation on the Calculate tab of the target field and enter the following (assuming the field that holds the number is named Text1):

Text1 * 3 / 23
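The arithmetic behind the field expression can be checked outside Acrobat with a one-line function (the name is hypothetical). Incidentally, 3/23 is exactly 15/115, the tax share of a total that includes 15% tax. Reading the question's formula that way is our assumption; the post doesn't say which tax it is.

```python
def tax_portion(amount: float) -> float:
    """Tax portion as defined in the question: amount * 3 / 23."""
    return amount * 3 / 23
```

`round(tax_portion(100), 4)` gives 13.0435, matching the example in the question.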

On Thu, May 12, 2016 at 06:05, justineh55480397 wrote:

-

Calculating the size of the database

Hello DBAs. What exactly is the way to calculate the size of the database? Is it the sum of the sizes of the segments, or the sum of the sizes of the redo logs, data files and control files?

Could you please suggest the correct answer?

Thank you

As usual, it depends. If you need the size allocated on storage, simply sum the current file sizes in the BYTES column of DBA_DATA_FILES. If you need the storage actually used, the sum over DBA_SEGMENTS fits better. If you need the size of all files used by the database, you must add the control files, the redo log files and the db_recovery_file_dest_size.

-

Dear SQL Developer team,

It seems that the column length calculation behaves differently in the expected and received recordsets in some cases. This happens when stored procedure variables are used to generate the REF CURSOR, despite the explicit definition of the record type. The header length and the value length in the received recordset are truncated to an arbitrary length based on the returned value, which leads to a false unit-test failure. In other words, the records are the same in both recordsets, but the test run fails when comparing the recordsets because of the different lengths. Please see the screenshot below:

Here's the test case if you need to reproduce the problem/bug in your environment:

1. Use the default HR schema from the Oracle sample schemas that come with an 11g database.

2. Alter the HR.EMPLOYEES table by adding a new VARCHAR2(4000) column, LONG_LAST_NAME:

ALTER TABLE HR.EMPLOYEES

ADD (LONG_LAST_NAME VARCHAR2(4000));

Update hr.employees set long_last_name = last_name;

commit;

3. Create a PKG_TEST2 package in the HR schema with the source code below:

----------------------------------------------------------------------------------------------------------------------

create or replace PACKAGE PKG_TEST2 AS

TYPE EmployeeInfoRec IS RECORD

(

long_last_name employees.long_last_name%TYPE,

first_name employees.first_name%TYPE,

email employees.email%TYPE

);

TYPE EmployeeInfoRecCur IS REF CURSOR RETURN EmployeeInfoRec;

FUNCTION getEmployeeInfo (p_Emp_Id employees.employee_id%TYPE)

RETURN EmployeeInfoRecCur;

END PKG_TEST2;

----------------------------------------------------------------------------------------------------------------------

CREATE OR REPLACE PACKAGE BODY PKG_TEST2 AS

FUNCTION getEmployeeInfo (p_Emp_Id employees.employee_id%TYPE)

RETURN EmployeeInfoRecCur AS

v_EmployeeInfoRecCur EmployeeInfoRecCur;

v_LongLastName varchar2 (4000);

BEGIN

SELECT long_last_name INTO v_LongLastName

FROM employees

WHERE employee_id = p_Emp_Id;

--

OPEN v_EmployeeInfoRecCur FOR

SELECT v_LongLastName long_last_name,

first_name,

email

FROM employees This_is_very_long_table_alias

WHERE employee_id = p_Emp_Id

ORDER BY 1 ASC;

--

RETURN v_EmployeeInfoRecCur;

EXCEPTION

WHEN OTHERS THEN

RAISE;

END getEmployeeInfo;

END PKG_TEST2;

----------------------------------------------------------------------------------------------------------------------

4. Create a unit test for the PKG_TEST2.getEmployeeInfo stored procedure (click the Create Test command, select the stored procedure, click OK on the pop-up message, click Next, click Finish).

5. Replace the default dynamic value query with the one below and save/commit the changes.

select cursor(

SELECT long_last_name,

first_name,

email

FROM employees

WHERE employee_id = idqry.employee_id

ORDER BY 1 ASC

) RETURNS$,

idqry.employee_id as P_EMP_ID

from (select employee_id

from employees

where rownum <= 5) idqry

6. Run the newly created unit test in debug mode to see the result shown in the screenshot.

So the record type 'EmployeeInfoRec' in the package clearly defines LONG_LAST_NAME as VARCHAR2(4000), by referencing the data type of the column in the referenced table.

But for some reason, SQL Developer does not calculate its length correctly in the "Received" recordset when a variable is used. It could be a simple varchar2 variable, as in this reproducible test, or a complex object-type variable.

Any ideas on that? Looks like another bug...

Thank you

Val

The bug has been reproduced by the SRB and logged in Oracle Support's system for the SQL Developer team to pick up:

Bug 19943948 - TEST UNIT RETURNS EXPECTED ERROR: [LONG_LAST_NAME

Hopefully the bug name can be changed later to something more descriptive, but that doesn't really matter... my only concern is how quickly known bugs get fixed...

Thank you

Val

-

Calculation of cardinality

Hello

Selectivity is the fraction of rows in the rowset that satisfy the predicate.

It can be calculated as the inverse of the number of distinct values in the column, if those distinct values are evenly distributed.

If the distinct values in the column are not evenly distributed, the optimizer uses the histogram to estimate selectivity.

Cardinality refers to the number of rows in the rowset.

Can it be calculated as selectivity * num_rows of the table?

For bitmap indexes:

We usually create bitmap indexes on columns whose cardinality is low.

Here the cardinality can be calculated as num_distinct_values / total_num_rows.

My question is: what is the difference between the optimizer's cardinality and the cardinality on which the decision to create a bitmap index is based? Thank you

The total number of rows selected is not the only parameter.

Two parameters work BOTH together, hand in hand, to determine the selectivity and the cost, and that cost decides whether an index can be used or not:

1. the total number of rows to select

2. the total number of rows in the table

Oracle will NOT decide against using an index just because the result may contain 1 million rows. But if the table has only 1 million rows, it can get those rows with a full table scan rather than an index scan.

Whereas if the table contains 1 billion rows, the index suddenly becomes much more attractive.

In the context of a 1-million-row table, the term 'small' may not apply if you're talking about 1 million rows. But 1 million rows is indeed 'small' in the context of a 1-billion-row table.

That's why I said the word 'small' has little or no meaning on its own. There must be a meaningful context.
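The uniform-distribution estimates from the question and the "context" point in the reply can be put into numbers. This is a simplified textbook model with hypothetical function names. It ignores histograms, nulls and the rest of Oracle's cost model, so it is not how the optimizer actually costs a plan.

```python
def uniform_selectivity(num_distinct: int) -> float:
    """Selectivity of an equality predicate if values are evenly distributed."""
    return 1.0 / num_distinct


def estimated_cardinality(num_rows: int, num_distinct: int) -> float:
    """Estimated rows returned: selectivity * num_rows."""
    return num_rows * uniform_selectivity(num_distinct)


def fraction_of_table(rows_selected: int, num_rows: int) -> float:
    """The same row count is 'small' or 'large' only relative to the table size."""
    return rows_selected / num_rows


def distinct_ratio(num_distinct: int, num_rows: int) -> float:
    """The ratio often quoted when discussing low-cardinality (bitmap) columns."""
    return num_distinct / num_rows
```

For a 1-million-row table with 4 evenly distributed values, the estimate is 250,000 rows per value. Selecting 1 million rows is the whole of a 1-million-row table, but only 0.1% of a 1-billion-row table.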

And when the glossary talks about a 1% threshold for the "degree of cardinality", in my humble OPINION both the term and the definition are wrong.

Sure, that '1% threshold' part is bad, but it relates to bitmap indexes and has NOTHING to do with the definition of the degree of cardinality provided.

I'm not arguing with the basic premise, just trying to clarify things a little.

-

Automatic calculation in Oracle APEX form fields

Hi all

I'm facing a problem in my APEX application.

I have three fields in my form: ActualAmt, CommAmount and Comm %.

My requirement is as follows. When ActualAmt and Comm % are entered, CommAmount should be calculated automatically from ActualAmt and Comm %. In the second case, when I enter ActualAmt and CommAmount, Comm % should be calculated from ActualAmt and CommAmount.

I have included my formula in the Post Calculation Computation, but it does not work.

Please help me find the solution.
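The two-way arithmetic the question asks for can be sketched as below. We assume Comm % is a percentage of ActualAmt; the post doesn't state the formula. The function names are hypothetical. This only shows the calculation, not how to wire it into an APEX page. The same logic would go into whatever APEX computation or dynamic action you use.

```python
def comm_amount_from_pct(actual_amt, comm_pct):
    """CommAmount = ActualAmt * Comm % / 100 (assumed formula)."""
    return actual_amt * comm_pct / 100.0


def comm_pct_from_amount(actual_amt, comm_amount):
    """Comm % = CommAmount * 100 / ActualAmt (assumed formula)."""
    if actual_amt == 0:
        raise ValueError("ActualAmt must be non-zero to derive Comm %")
    return comm_amount * 100.0 / actual_amt


def fill_commission(actual_amt, comm_pct=None, comm_amount=None):
    """Return (comm_pct, comm_amount), computing whichever one was left empty.

    If both are given, Comm % wins and CommAmount is recomputed from it.
    """
    if comm_pct is not None:
        return comm_pct, comm_amount_from_pct(actual_amt, comm_pct)
    if comm_amount is not None:
        return comm_pct_from_amount(actual_amt, comm_amount), comm_amount
    raise ValueError("enter either Comm % or CommAmount")
```

For ActualAmt = 200, entering Comm % = 5 yields CommAmount = 10, and entering CommAmount = 10 yields Comm % = 5.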

Thanks, Mindmap, it worked.
