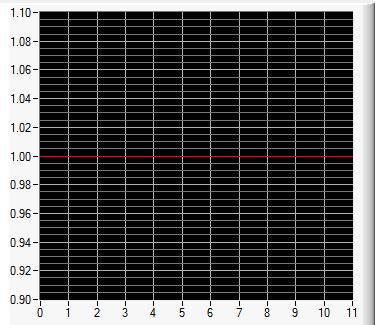

Chart from a data file

I have a data file with these values:

1

1

1

-1

-1

-1

1

1

1

-1

-1

-1

I need to plot an XY graph with this data as the Y values and

1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12 as the X values.

Is it possible, with a graph control, to read the values from the data file and plot them on the graph?

Thanks

    static char file_name[MAX_PATHNAME_LEN];
    static double wave[12];

    /* Menu callback: let the user pick a text file, read 12 values, and plot them. */
    void CVICALLBACK PlotGraph (int menuBar, int menuItem, void *callbackData, int panel)
    {
        if (FileSelectPopup ("", "*.txt", "*.txt", "Name of File to Read",
                             VAL_OK_BUTTON, 0, 1, 1, 0, file_name) > 0)
        {
            /* Clear the buffer so stale values are never plotted. */
            memset (wave, 0, sizeof (wave));

            /* Read 12 ASCII values from the file as a single group. */
            FileToArray (file_name, wave, VAL_DOUBLE, 12, 1,
                         VAL_GROUPS_TOGETHER, VAL_GROUPS_AS_COLUMNS, VAL_ASCII);

            /* Remove any existing plots, then plot the new data against its index. */
            DeleteGraphPlot (panelHandle, PANEL_GRAPH, -1, 1);
            PlotY (panelHandle, PANEL_GRAPH, wave, 12, VAL_DOUBLE,
                   VAL_THIN_LINE, VAL_EMPTY_SQUARE, VAL_SOLID, 1, VAL_RED);
        }
    }

I tried to read the values from the .txt file into an array using the FileToArray function. Can that array be used to draw the chart?

But I'm getting a plot of just a straight line. See the attached figure. Any ideas why?
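One way to narrow this down, independently of the graph control, is to check what actually ends up in the array after the read. The following is a minimal sketch in standard C only; it does not use the CVI FileToArray API, and the function names `read_xy_from_file` and `is_flat` are hypothetical helpers introduced here for illustration. It reads whitespace-separated numbers (blank lines are skipped), fills the X array with 1, 2, 3, ..., and reports whether all Y values are identical, which is what a flat line on the graph would suggest.

```c
#include <stdio.h>

/* Read up to max_points whitespace-separated numbers from a text file
   into y[] and fill x[] with 1, 2, 3, ...
   Returns the number of points read, or -1 if the file cannot be opened.
   (Hypothetical helper, plain C; not part of any CVI library.) */
int read_xy_from_file(const char *path, double x[], double y[], int max_points)
{
    FILE *fp = fopen(path, "r");
    int count = 0;

    if (fp == NULL)
        return -1;

    /* fscanf with %lf skips spaces, newlines, and blank lines between values. */
    while (count < max_points && fscanf(fp, "%lf", &y[count]) == 1) {
        x[count] = (double)(count + 1);
        count++;
    }

    fclose(fp);
    return count;
}

/* Return 1 if every value equals the first one (the plot would be a flat
   line), 0 otherwise. An array of 0 or 1 points counts as flat. */
int is_flat(const double y[], int n)
{
    int i;

    for (i = 1; i < n; i++) {
        if (y[i] != y[0])
            return 0;
    }
    return 1;
}
```

If `read_xy_from_file` returns fewer than 12 points, or `is_flat` returns 1, the problem is likely in how the file is being read rather than in the plotting call. Assuming the arrays look right, the `x` and `y` arrays could then be handed to a two-array plotting call such as CVI's `PlotXY` instead of `PlotY`.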

Tags: NI Software

Similar Questions

-

Update from McAfee DAT file blocks LabVIEW

Here is some information: PCI MIO data acquisition card, SCXI chassis, 128 TC channels at 10 scans per second, graphing 20 channels every 2 seconds, datalogging once per minute. The datalog file is opened and closed during each write operation.

Manual virus scans are performed when LabVIEW is not running.

While LabVIEW is running, Altiris Agent and EPolicy push the daily McAfee DAT updates. Either way, LabVIEW locks up / hangs when the DAT file is pushed down to the machine. The graph, the datalog file, and the McAfee On-Access scanner log all confirm the time of the event.

The obvious answer would be to disable the Altiris Agent and EPolicy. However, this does not work on other machines configured the same, and is not a consideration for the purposes of network management. Any suggestions?

Summer is here...

If McAfee doesn't let you configure or control when and how it updates, you may end up having to do what one of my clients ended up doing.

They pull the network cable before a test starts and plug it back in after the test is finished.

"A man (PC) cannot serve two masters."

Ben

-

Retrieve a data file from a backup on another server

Hi all

I usually take hot backups of data files to a different server, like this, because I don't have enough space on the same server:

SQL> alter tablespace XXXX begin backup;

host scp /bu1/oradata/XXXXX/XXXXX [email protected]:/db1dw/oradata/backup/dec2010/XXXX

alter tablespace OPS end backup;

If I want to recover the datafile, can I simply take the data file offline, copy the backup data file from the other server through scp, and bring the data file back online? The database is in archivelog mode, but I don't care about the log files. I just need to recover the data file as of the last backup.

Or do we have to use the RECOVER DATAFILE command? If I need to use the RECOVER DATAFILE command, where will it recover the data file from?

I don't have any backup or restore scripts; I just manually scp the data files.

Help please, thanks in advance...

This is a "user-managed backup" in Oracle terminology.

The database does not care how you copy (back up or restore) the data file to or from a backup location. So, you can use scp to copy the backup file back.

HOWEVER, you cannot recover just one datafile to the point in time of its backup. Given that the rest of the database (i.e. all the other data files) has advanced further, you must RECOVER the DATAFILE and apply all ArchiveLogs from the point of this datafile backup to the current point (i.e. recovery would continue through the online redo as well, which is applied automatically).

Therefore, if you have had "transactions" (e.g. deletions) against this tablespace, they will be replayed.

Hemant K Collette

-

Hello

I have nologging operations plus some dropped files on the primary, and I want to roll forward the standby using an SCN-based incremental backup.

Do I need to do anything in particular, since the files are dropped?

I came across (Doc ID 1531031.1), which explains how to roll forward when a data file is added.

If I follow the same steps, leaving out the step that restores the newly added data file, will it work in my case?

Can someone please clarify?

Thank you

San

I was wondering: if recover noredo is performed before the controlfile is restored, will Oracle apply the incremental backup to the files without error, and in that case, what would the status of the data file be in the control file?

Why do you think that recovering the standby first and then restoring the controlfile will lead to problems? Please read my first post on this thread; I clearly mentioned that you would not face problems if you go with the roll-forward method.

Here is a demo for you with force logging disabled. First the standby is recovered, and then the standby controlfile is restored:

Primary: oraprim

Standby: orastb

A datafile of tablespace MYTS is dropped on the primary:

SYS@oraprim> select force_logging from v$database;

FORCE_LOGGING

---------------------------------------

NO

Currently the tablespace has 2 data files.

SYS@oraprim> select file_name from dba_data_files where tablespace_name='MYTS';

FILE_NAME

-------------------------------------------------------

/u01/app/oracle/oradata/oraprim/myts01.dbf

/u01/app/oracle/oradata/oraprim/myts02.dbf

On the standby, the tablespace has 2 data files:

SYS@orastb> select name from v$datafile where ts#=6;

NAME

--------------------------------------------------------------------------------

/u01/app/oracle/oradata/orastb/myts01.dbf

/u01/app/oracle/oradata/orastb/myts02.dbf

Deferred log shipping to the standby on the primary:

SYS@oraprim> alter system set log_archive_dest_state_3=defer;

System altered.

Dropped 1 MYTS datafile on the primary.

SYS@oraprim> alter tablespace myts drop datafile '/u01/app/oracle/oradata/oraprim/myts02.dbf';

Tablespace altered.

Removed some archives to create a gap.

[oracle@ora12c-1 2016_01_05]$ rm -rf *31*

[oracle@ora12c-1 2016_01_05]$ ls -lrt

total 13696

-rw-r----- 1 oracle oinstall 10534400 Jan  5 18:46 o1_mf_1_302_c8qjl3t7_.arc

-rw-r----- 1 oracle oinstall 2714624 Jan  5 18:47 o1_mf_1_303_c8qjmhpq_.arc

-rw-r----- 1 oracle oinstall 526336 Jan  5 18:49 o1_mf_1_304_c8qjp7sb_.arc

-rw-r----- 1 oracle oinstall 23552 Jan  5 18:49 o1_mf_1_305_c8qjpsmh_.arc

-rw-r----- 1 oracle oinstall 53760 Jan  5 18:50 o1_mf_1_306_c8qjsfqo_.arc

-rw-r----- 1 oracle oinstall 14336 Jan  5 18:51 o1_mf_1_307_c8qjt9rh_.arc

-rw-r----- 1 oracle oinstall 1024 Jan  5 18:53 o1_mf_1_309_c8qjxt4z_.arc

-rw-r----- 1 oracle oinstall 110592 Jan  5 18:53 o1_mf_1_308_c8qjxt34_.arc

[oracle@ora12c-1 2016_01_05]$

Current MYTS data files on the primary:

SYS@oraprim> select file_name from dba_data_files where tablespace_name='MYTS';

FILE_NAME

-------------------------------------------------------

/u01/app/oracle/oradata/oraprim/myts01.dbf

Current MYTS data files on the standby:

SYS@orastb> select name from v$datafile where ts#=6;

NAME

--------------------------------------------------------------------------------

/u01/app/oracle/oradata/orastb/myts01.dbf

/u01/app/oracle/oradata/orastb/myts02.dbf

Gap is created:

SYS@orastb> select process, status, sequence# from v$managed_standby;

PROCESS STATUS SEQUENCE#

--------- ------------ ----------

ARCH CLOSING 319

ARCH CLOSING 311

ARCH CONNECTED 0

ARCH CLOSING 310

MRP0 WAIT_FOR_GAP 312

RFS IDLE 0

RFS IDLE 0

RFS IDLE 0

RFS IDLE 320

9 rows selected.

RMAN incremental backup is taken on the primary.

RMAN> backup incremental from scn 2686263 database format '/u02/bkp/%d_inc_%U.bak';

Starting backup at 05-JAN-16

using target database control file instead of recovery catalog

configuration for DISK channel 2 is ignored

configuration for DISK channel 3 is ignored

configuration for DISK channel 4 is ignored

allocated channel: ORA_DISK_1

channel ORA_DISK_1: SID=41 device type=DISK

channel ORA_DISK_1: starting full datafile backup set

channel ORA_DISK_1: specifying datafile(s) in backup set

input datafile file number=00001 name=/u01/app/oracle/oradata/oraprim/system01.dbf

input datafile file number=00003 name=/u01/app/oracle/oradata/oraprim/sysaux01.dbf

input datafile file number=00004 name=/u01/app/oracle/oradata/oraprim/undotbs01.dbf

input datafile file number=00006 name=/u01/app/oracle/oradata/oraprim/users01.dbf

input datafile file number=00057 name=/u01/app/oracle/oradata/oraprim/myts01.dbf

channel ORA_DISK_1: starting piece 1 at 05-JAN-16

channel ORA_DISK_1: finished piece 1 at 05-JAN-16

piece handle=/u02/bkp/ORAPRIM_inc_42qqkmaq_1_1.bak tag=TAG20160105T190016 comment=NONE

channel ORA_DISK_1: backup set complete, elapsed time: 00:00:02

Finished backup at 05-JAN-16

Backed up the controlfile on the primary:

RMAN> backup current controlfile for standby format '/u02/bkp/ctl.ctl';

Cancelled recovery on the standby:

SYS@orastb> alter database recover managed standby database cancel;

Database altered.

Recovered the standby using the above backup pieces:

RMAN> recover database noredo;

Starting recover at 05-JAN-16

configuration for DISK channel 2 is ignored

configuration for DISK channel 3 is ignored

configuration for DISK channel 4 is ignored

allocated channel: ORA_DISK_1

channel ORA_DISK_1: SID=26 device type=DISK

channel ORA_DISK_1: starting incremental datafile backup set restore

channel ORA_DISK_1: specifying datafile(s) to restore from backup set

destination for restore of datafile 00001: /u01/app/oracle/oradata/orastb/system01.dbf

destination for restore of datafile 00003: /u01/app/oracle/oradata/orastb/sysaux01.dbf

destination for restore of datafile 00004: /u01/app/oracle/oradata/orastb/undotbs01.dbf

destination for restore of datafile 00006: /u01/app/oracle/oradata/orastb/users01.dbf

destination for restore of datafile 00057: /u01/app/oracle/oradata/orastb/myts01.dbf

channel ORA_DISK_1: reading from backup piece /u02/bkp/ORAPRIM_inc_3uqqkma0_1_1.bak

channel ORA_DISK_1: piece handle=/u02/bkp/ORAPRIM_inc_3uqqkma0_1_1.bak tag=TAG20160105T190016

channel ORA_DISK_1: restored backup piece 1

channel ORA_DISK_1: restore complete, elapsed time: 00:00:01

Finished recover at 05-JAN-16

Restored the controlfile and mounted the standby:

RMAN> shutdown immediate

database dismounted

Oracle instance shut down

RMAN> startup nomount

connected to target database (not started)

Oracle instance started

Total System Global Area 939495424 bytes

Fixed Size 2295080 bytes

Variable Size 348130008 bytes

Database Buffers 583008256 bytes

Redo Buffers 6062080 bytes

RMAN> restore standby controlfile from '/u02/ctl.ctl';

Starting restore at 05-JAN-16

allocated channel: ORA_DISK_1

channel ORA_DISK_1: SID=20 device type=DISK

channel ORA_DISK_1: restoring control file

channel ORA_DISK_1: restore complete, elapsed time: 00:00:01

output file name=/u01/app/oracle/oradata/orastb/control01.ctl

output file name=/u01/app/oracle/fast_recovery_area/orastb/control02.ctl

Finished restore at 05-JAN-16

RMAN> alter database mount;

Statement processed

released channel: ORA_DISK_1

Now the data file no longer exists on the standby:

SYS@orastb> alter database recover managed standby database disconnect;

Database altered.

SYS@orastb> select process, status, sequence# from v$managed_standby;

PROCESS STATUS SEQUENCE#

--------- ------------ ----------

ARCH CONNECTED 0

ARCH CONNECTED 0

ARCH CONNECTED 0

ARCH CLOSING 329

RFS IDLE 0

RFS IDLE 330

RFS IDLE 0

MRP0 APPLYING_LOG 330

8 rows selected.

SYS@orastb> select name from v$datafile where ts#=6;

NAME

--------------------------------------------------------------------------------

/u01/app/oracle/oradata/orastb/myts01.dbf

Hope that gives you a clear picture. You can refer to this for rolling forward the standby using an SCN: Roll forward physical standby database using RMAN incremental backup | Shivananda Rao

-Jonathan Rolland

-

Migration - MySQL to Oracle - do not generate data files

Hello

I have been using the SQL Developer Migration Assistant to move data from a MySQL 5 database to an Oracle 11g server.

I used it successfully a couple of times and it has all worked.

However, I am currently having a problem whereby no offline data file is generated. Control files and all other scripts are generated... just no data file.

It worked before, so I'm a bit puzzled as to why it no longer works.

I looked at the migration logs and there are no errors shown; the data move is marked as successful.

I tried deleting and recreating the migration repository and checked all grants and privs.

If there were an error message, that would be something to go on, but I have tried several times and checked everything I can think of.

I also tried the command-line migration approach... same thing. Everything works fine... no errors... but only the table creation and control script files are generated.

The source schema is very simple and there are only tables to migrate... no procedures or anything else.

Can anyone suggest anything?

Thank you very much

Mike

Hi Mike,

Just so I'm clear:

You are using SQL Developer 3.0?

You walked through the migration wizard and chose the Offline data move mode.

The generated DDL files are created, as are the scripts to move the data.

But no data (DAT) file is created and no data has been loaded into the Oracle target tables.

With an offline data move, SQL Developer generates 2 sets of scripts (saved in your project, under the DataMove directory):

(a) a set of scripts to unload data from MySQL to DAT files.

(b) a set of scripts to load data from the DAT files into the Oracle target tables.

These scripts must be run by hand, specifying the details for the source MySQL database and the target Oracle database.

"no offline data file generated. Control files and all other scripts generated don't... just no data file."

"... but only the table creation and control script files are generated."

Do you mean:

(1) the DAT files are not generated automatically. This is expected; you need to run the scripts yourself.

(2) after manually running the scripts, the DAT files are not present, or the DAT files are present but the data does not load into the Oracle tables.

(3) the scripts to move data in offline mode do not get generated.

Kind regards

Dermot

SQL Developer team.

-

What background process is used for retrieving data from data files

Hello

What background process is used to retrieve data from data files?

As far as I know, DBWn is used to write data from the SGA buffer cache to the data files,

and I want to know which background process is used when data is read from the data files into the buffer cache.

I want to understand the process structure.

Thank you

Gita

Hello

Which process reads data into the buffer cache?

Server process :)

Kind regards

Rakesh Jayappa

-

Hello

I have read that DBWn does that besides writing, is that correct?

Thank you

Jin

DBWR writes data to the data files... I believe the server process reads the data from the data files...

Kind regards

Deepak

-

Earlier I transferred my old files from my old computer to my new computer. When I did, I tried to get my Firefox bookmarks added, but it just made an "Old Firefox Data" folder. I went in there and it has the bookmarks, but the problem is that they are not really readable; everything is a little mixed up. I want to know if I can import them into my current Firefox, or somehow get them organized so I can at least view them, even in Notepad with each bookmark on its own line. I added a picture of what it looks like.

You can use the Scratchpad (Firefox/Tools > Web Developer) to inspect and edit files in JSON format quite easily.

There is a "Pretty Print" button to add line breaks.

-

How to write data from an INI file to a ring control

Hi,

I need to write the data read from the INI file to a ring control. When I do this operation using variants, I get an error.

I will be happy if someone can help me. I have attached the INI file and the VI.

-

VI average compared to the Excel average of the .lvm file data

I am trying to build a VI to measure voltages on one channel of a pressure transducer over a period of 3 minutes. I would like the VI to write all the voltage samples to one .lvm file, along with another .lvm file that contains the average voltage over the 3-minute period. I built a VI that does everything above, or so I think... The problem I'm running into is that when I open the .lvm file of all the voltage samples in MS Excel, take their average using the built-in Excel function (=AVERAGE(B23:B5022), for example), and compare it to the .lvm file that holds just the VI's 3-minute average voltage, the two do not match.

This makes me wonder whether I am using the VI's averaging function correctly, or whether the VI averages different voltage data than what is written to the .lvm file.

Does anyone know why the two averages are different and how I can make them match?

I have attached a picture of my block diagram along with the VI file for clarity.

The LabVIEW Dynamic Data Type you are using is a special data type that can take many forms. It therefore requires the use of "To DDT" and "From DDT" to convert to and from other data types. These special conversion functions can be configured by double-clicking them and specifying the format you are converting to or from. You can find the functions on the Express > Signal Manipulation palette.

I've attached a screenshot of the modified code using "From DDT", and the average now comes out right.

Please mark this as the accepted solution and/or give Kudos if it works for you. We appreciate the feedback on our answers.

Thank you

Dan

-

How to get data from an existing Excel file using the Report Generation Toolkit

I am trying to use the Excel Get Data VI from the Report Generation Toolkit, but I can't figure out how to turn a file path into the right type for the VI. I tried to use New Report.vi, but it does not seem to work unless you use a template. It will not open an existing Excel file and create an open report to extract data from.

Essentially, I have a bunch of Excel files that have data in them, and I want a VI that lets me analyze the data. I would like to pull in all the data directly from the Excel files so I don't have to reprocess them all into text in order to use the more standard spreadsheet VIs. But even to convert the Excel files programmatically in LabVIEW, I would still need to be able to open the Excel file and get the data.

I found my problem. It turns out I did not actually have a problem with the toolkit's New Report VI. I had accidentally wired a folder path input control to it instead of a file path input control. Changing the path type took care of it and I was able to access the Excel files; I used the New Report VI to open the file, and Excel Get Data to extract the data.

-

I have a new computer with Windows Vista. My old computer does not start (the lights turn on and there is a spinning noise, but nothing appears on the monitor). I need to get the data/files from my old hard drive. Can I connect the old drive to my new computer to access the files?

Connect the old hard drive to your new computer and transfer the content:

How to add an additional hard drive

http://www.WikiHow.com/add-an-extra-hard-drive

-

I installed Oracle Database 11.2.0.1.0 on Linux 6.6 in VirtualBox.

I used ASM for database storage.

When I want to perform an offline backup, I shut down the database and copy the data files to the filesystem as follows (for example):

ASMCMD [+] > cp +DATA/ora11g/datafile/system.270.883592533 /u01/app/oracle

The files copied successfully, as did all the other data files, log files, and controlfiles. Then I also renamed all the files in mount mode.

After that, I used the backup files to start the backup database (non-ASM storage, with a newly edited pfile).

The database opened successfully from the backup.

But when I wanted to use the old spfile to open the database on ASM storage again, errors occurred saying there were no data files in ASM storage.

I checked the contents of ASM storage with ASMCMD commands, and found that only the spfile and controlfile remain in ASM storage; the other files (data files and online redo log files) were automatically deleted.

Why were the data files and log files deleted from ASM storage? Is this normal? I did not delete any files from ASM.

Were they actually deleted by the use of the cp command?

Exactly what we say. Data files in ASM are OMF (Oracle Managed Files). The RENAME results in ASM deleting the original file.

Hemant K Collette

-

Unable to display data from a csv file data store

Hi all

I'm using ODI 11g. I'm trying to import metadata from a csv file. To do this, I have created the corresponding physical and logical schemas. The context is Global.

Then, I created a model and a data store. After reverse engineering the data store, I got the file headers, changed the data types of the columns to match my requirement, and then tried to view the data in the data store. I am not getting any error, but I can't see any data. I am able to see only the headers.

Even when I run the interface that loads data into a table, it runs without error, but no data is inserted...

But the data is present in the source file...

Can you please help me how to solve this problem...

Hi Phanikanth,

Thanks for your reply...

I did the same thing that you suggested...

In fact, I'm working with ODI in a UNIX environment. So I chose the UNIX record separator option in the Files tab of the data store, and now it works well...

In any case, once again, thank you for your response...

Thanks & best regards,

Vanina

-

How to move data files from drive C to drive E in MS SQL Server 2005 used for Hyperion

Hi all

We have installed Hyperion with MS SQL Server 2005. Hyperion 11.1.1.3 works well.

But the SQL Server data files are saved to the C drive only, and we have to store the files on the E drive.

I used the Attach and Detach method to move the files from the C drive to the E drive.

After that, I reconfigured the EPM system, but I get errors in the configuration.

Now I get a Planning database failure when configuring Planning.

The interface data source configuration also failed in the EPMA configuration.

Please suggest the best way to handle this scenario.

Thank you

Mady

Why do you need to reconfigure after moving the data files? If the move is done properly, it should work without data loss.

Cheers

John
