Large number of samples via FIFO: avoiding overflow of the target buffer

My hardware is a PXI system with an NI 7952R FlexRIO FPGA module and an NI 5761 14-bit, 250 MS/s digitizer.

Most of the posts I have seen deal with acquisition rates of a few MS/s or less and record sizes of a few thousand samples. In addition, my analog read and my FIFO write are both in an SCTL, not a while loop.

I want to acquire, say, 100000 samples per record at a rate of 250 MS/s, or more (millions of samples) at this rate.

Here is the basic problem, condensed (I think). I use the Single Sample Acquisition example as a test project.

The DMA FIFO consists of both the target buffer and the host buffer. In practice, the maximum number of elements that the target buffer can hold is 32767.

Now, as soon as I request more than 32767 samples on the host, the target buffer overflows. The host FIFO Read node then waits until the timeout is finally reached, and the number of elements remaining in the FIFO (I guess in the host buffer) gets enormously large. That number scales with the time the read node waits: the larger the timeout I specify, the larger the number gets.

This happens even if the FIFO depth is quite large (the host buffer is 5x the number of samples per record).

At first glance, this suggests that the DMA transfer rate is too slow. However, it also happens if I acquire at only 125 MS/s (taking every second sample, i.e. one sample every 8 ns, which means 14-bit samples at 125 MS/s, so about 250 MB/s). This is well below the transfer rate, as far as I know, so it should not be the reason. Or am I overlooking something?
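To make the numbers in the paragraph above easier to check, here is a small Python sketch. It assumes each 14-bit sample travels through the FIFO as a 16-bit (2-byte) word, which the post does not state explicitly, and the function names are made up for illustration. The second function estimates how long the 32767-element target buffer lasts if nothing drains it.

```python
def data_rate_mb_per_s(sample_rate_hz: float, bytes_per_sample: int = 2) -> float:
    """Raw data rate in MB/s (1 MB = 1e6 bytes).

    bytes_per_sample=2 is an assumption: 14-bit samples padded to 16 bits.
    """
    return sample_rate_hz * bytes_per_sample / 1e6


def fill_time_us(buffer_elements: int, sample_rate_hz: float) -> float:
    """Microseconds until a buffer of `buffer_elements` is full if it is never read."""
    return buffer_elements / sample_rate_hz * 1e6


if __name__ == "__main__":
    print(data_rate_mb_per_s(125e6))   # decimated rate from the post
    print(data_rate_mb_per_s(250e6))   # full digitizer rate
    print(fill_time_us(32767, 250e6))  # time budget before the target buffer overflows
```

At the full rate the target buffer holds only about 131 microseconds of data, so any stall in the DMA transfer longer than that would be enough to overflow it, regardless of the average bus throughput.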

The only solution I see is to limit the record size to 32767 elements at a time.
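The bookkeeping for that workaround could look roughly like this in Python. `chunk_sizes` is a hypothetical helper, and 32767 is the target-buffer limit quoted in the post. It only computes the request sizes and does not talk to any hardware, so whether this actually avoids the overflow at 250 MS/s is not something the sketch can show.

```python
from typing import List

TARGET_BUFFER_LIMIT = 32767  # maximum target-buffer elements, as reported in the post


def chunk_sizes(record_length: int, limit: int = TARGET_BUFFER_LIMIT) -> List[int]:
    """Split one record into read requests of at most `limit` elements each."""
    if record_length < 0 or limit <= 0:
        raise ValueError("record_length must be >= 0 and limit must be > 0")
    full_chunks, remainder = divmod(record_length, limit)
    sizes = [limit] * full_chunks
    if remainder:
        sizes.append(remainder)
    return sizes


if __name__ == "__main__":
    # A 100000-sample record becomes three full requests plus one short one.
    print(chunk_sizes(100000))
```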

Does anyone have experience with acquiring large numbers of samples from an FPGA digitizer using a FIFO read configuration?

Just run the CLIP single sample example VI and try to acquire 1000 samples; it will work. Try to acquire, say, 10000 samples and it will time out as described above.

Thank you!

Tags: NI Software

Similar Questions

-

Units of the number of samples and the rate in the DAQ Assistant

Hello

I use the DAQ Assistant for analog voltage input with an NI data acquisition card. What is the difference between the rate and the number of samples in the DAQ Assistant, and what are the units of the two?

Thank you.

The number of samples is how many discrete measurements to take. Rate (in samples per second) is how fast to acquire the specified number of samples.

If number of samples is 100 and the rate is 1000 samples per second, then the acquisition would take 0.1 second (100 / 1000).
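The same relationship as a tiny Python sketch (the function name is just for illustration):

```python
def acquisition_time_s(number_of_samples: int, rate_samples_per_s: float) -> float:
    """Duration of a finite acquisition: samples divided by samples per second."""
    if rate_samples_per_s <= 0:
        raise ValueError("rate must be positive")
    return number_of_samples / rate_samples_per_s


if __name__ == "__main__":
    print(acquisition_time_s(100, 1000))  # the example from the reply above
```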

-AK2DM

-

original title: remove folders

I have Vista with Windows Mail. A large number of messages I put in the Deleted Items folder are missing when I go to retrieve them at a later date. How can I get them back?

Windows Live Mail

The difference. Wrong forum.

Windows Live Mail Forum

http://www.windowslivehelp.com/forums.aspx?ProductID=15

Bruce Hagen

MS-MVP October 1, 2004 ~ September 30, 2010

Imperial Beach, CA

-

I can't find a way on the iPad to select a large number of photos at the same time (similar to using the SHIFT key to select a group of files in Windows). I would like to delete a large number of photo files to free up storage space for taking pictures in the future. I wonder if there is an easier way to do that than selecting and removing each file individually. By the way, this has to be done twice if you want immediate deletion. There must be an easier way. Any support is appreciated.

Open Photos > tap Select > drag your finger across the photos you want to delete. To remove the recently deleted items, open the Recently Deleted album > tap Select > tap Delete All in the upper left corner.

-AJ

-

Every N Samples Transferred from Buffer

Hi all

I have a question about the DAQmx Every N Samples Transferred from Buffer event. Looking at the description and the very limited DevZones and KBs on this one, I am inclined to believe that the name is perfectly descriptive of what its behavior should be (i.e. for every N samples transferred from the PC buffer into the DAQmx FIFO, it should signal an event). However, when I put it into practice in an example, either I have something misconfigured (wouldn't be the first time) or I have a basic misunderstanding of the event itself or of how DAQmx buffers work with regeneration (certainly wouldn't be the first time).

In my example, I output 10k samples at a 1 kHz rate, so 10 seconds of data. I registered for the Every N Samples Transferred from Buffer event with a sampleInterval of 2000. I changed my data transfer request condition to onboard memory less than full, with the hope that it would continually fill my buffer with regenerated samples (from this link). My expectation was that after 2000 samples had been output by the device (i.e. after 2 seconds) there would be 2000 fewer items in the DMA FIFO, so 2000 samples would be transferred from the PC buffer to the DMA FIFO and the event would fire. In practice it does... not do that. I have a counter on the event that shows it fires 752 times almost immediately, then fires regularly after that in spurts of 4 or 5. I am at a loss.

Could someone please shed some light on this for me: both on my misunderstanding of what I'm supposed to be seeing, if that is the case, and on why I see what I see now?

LV 2013 (32 bit)

DAQmx 9.8.0f3

Network cDAQ chassis: 9184

cDAQ module: 9264

Thank you

There is a large (unspecced, but on the order of several MB) buffer on the 9184. This has come up several times on the forum; here is a link to another discussion about it. Quoting myself:

Unfortunately, I don't know the size of this buffer on the 9184 off the top of my head, and I don't think it's in the specifications (the buffer is also shared between multiple tasks). This is not the same as the 127-sample-per-slot AO buffer that is present on all cDAQ chassis: the Ethernet and wireless chassis contain an additional buffer which, as far as I can tell, is not really covered in the published specifications (apparently it's 12 MB on the wireless cDAQ chassis).

The large number of events fired when you start the task is the buffer being filled at startup (as long as the onboard buffer is not almost full, the driver will send more data, so you end up with several periods of your output waveform in the onboard buffer memory). So in your case, 752 events * 2000 samples/event * 2 bytes per sample = ~3 MB of buffer memory allocated to your AO task. I guess that sounds reasonable (again, a spec or KB on this would really be nice... I don't know how the size of the buffer is chosen).
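For anyone who wants to redo that estimate with their own event count, here is the arithmetic as a short Python sketch. The numbers are the ones from this thread, and the 2 bytes per sample is the figure used in the estimate above; the function name is only illustrative.

```python
def estimated_buffer_bytes(events_at_start: int, samples_per_event: int,
                           bytes_per_sample: int = 2) -> int:
    """Rough onboard-buffer size inferred from the initial burst of events."""
    return events_at_start * samples_per_event * bytes_per_sample


if __name__ == "__main__":
    size = estimated_buffer_bytes(752, 2000)
    print(size, "bytes, about", round(size / 1e6, 1), "MB")
```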

The grouping of events is due to data being sent in packets to improve efficiency, because there is overhead with each transfer.

The large buffer and the grouping of data are optimizations used by NI to improve continuous throughput, but they can have some strange side effects, as you saw. I might have a few suggestions if you describe what it is you need to do.

Best regards

-

How to assign write access to a large number of forms in HPM at the same time?

As usual, we can assign write access to forms by going to Manage Data Forms, selecting each form, and then going to the "Assign Access" section.

But when there are a lot of forms, how do we manage this? Is there a shorter way to assign access to all forms at the same time, without assigning it to each one, which takes time?

Hello

It is possible to import security from the command line in Hyperion Planning for the following:

- Members *

- Form folders

- Forms *

- Composite forms

- Task lists *

- Calc folders (if you use Calc Manager)

- Calc (if you use Calc Manager)

Those marked with a * I have done in the past; some of the others appear to me to be new features, so you may need to check the version you are working with. Take a look at the admin guide below, the section starting on pg 58.

http://docs.Oracle.com/CD/E17236_01/EPM.1112/hp_admin_11122.PDF

Hope this helps

Stuart

-

samples per channel and the number of samples per channel

In my DAQ program in finite samples mode, there are two VIs: DAQmx Timing.vi and DAQmx Read.vi.

I have to set the "samples per channel" parameter on DAQmx Timing.vi and the "number of samples per channel" on DAQmx Read.vi. Is there a relationship between these two?

My laser runs at 1 kHz. I want to go to a wavelength, wait for a number of laser shots, read the data, and move on to the next one...

Thank you

Lei

In your case, the VI will acquire the lesser of either:

The "samples per channel" that you have defined on the DAQmx Timing VI

-OR-

The number of iterations of your For loop (N) times the "number of samples per channel" that you have defined on the DAQmx Read VI

The "samples per channel" on the DAQmx Timing VI for a finite acquisition dictates how many samples the DAQ hardware should acquire into its onboard buffer before indicating that the acquisition is complete. The "number of samples per channel" on the DAQmx Read VI dictates how many samples the DAQmx driver should return from the onboard buffer to your application.

Let's say the "samples per channel" on the DAQmx Timing VI is set to 50. Thus, the card will acquire 50 samples, place them in the onboard buffer, and then stop. Suppose we set the "number of samples per channel" on the DAQmx Read VI to 3 and call the VI in a For loop which runs 10 times. Every time the DAQmx Read VI is called, it will wait until there are at least 3 samples in the buffer, and then return those three. We call the VI a total of 10 times, so we read 30 samples in total. Thus, the last 20 samples the card acquired remain in the buffer and are destroyed when the task is cleared.

Now let's say that we increase the "number of samples per channel" on our DAQmx Read VI to 10. The Read VI will wait until 10 or more samples are in the buffer, and then return those 10. Thus, we will have read all 50 samples the card acquired by the 5th iteration of the For loop. The 6th time we call the DAQmx Read VI it times out, because there will never be another 10 samples in the buffer, and the VI returns a warning.

Does this clarify things?

The purpose of this behavior is to allow you both to set the total number of samples that the DAQ hardware will acquire and to control how many of these samples are returned each time you call the DAQmx Read VI.
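The two scenarios described above can be replayed with a very simplified Python model. This is not DAQmx code: `simulate_reads` is a made-up function that assumes the finite acquisition has already finished, ignores timing, and just counts samples in the buffer.

```python
from typing import List, Tuple


def simulate_reads(acquired: int, per_read: int, n_reads: int) -> Tuple[List[int], int, int]:
    """Model repeated reads from a finished finite acquisition.

    Returns (samples returned by each successful read,
             samples left in the buffer,
             number of reads that would time out).
    """
    available = acquired
    returned: List[int] = []
    timeouts = 0
    for _ in range(n_reads):
        if available >= per_read:
            available -= per_read
            returned.append(per_read)
        else:
            # No more samples will ever arrive, so this read would time out.
            timeouts += 1
    return returned, available, timeouts


if __name__ == "__main__":
    print(simulate_reads(acquired=50, per_read=3, n_reads=10))  # 20 samples left unread
    print(simulate_reads(acquired=50, per_read=10, n_reads=6))  # 6th read times out
```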

Kind regards

-

Hi all

I would like to continuously acquire an analog input channel and, if necessary, be able to stop the acquisition as quickly as possible.

I use an NI USB-6289 card. I set up a DAQmx analog input (voltage) task and set the DAQmx sample clock to "continuous samples" at the required rate (e.g. 1000, which is 1 kHz). For the acquisition, I used a while loop containing a DAQmx Read with the "number of samples per channel" terminal wired (e.g. 1000). The while loop waits until 1000 samples are acquired and therefore continuously reads the channel in uniform batches of 1000 samples, ticking once every second. The while loop can easily be stopped once DAQmx has finished acquiring the current batch of 1000 samples, but it has to wait for DAQmx to finish its current read.

My problem is how to stop the loop while the DAQmx Read is in the middle of acquiring 1000 samples, WITHOUT waiting until all 1000 samples are received. Is it possible to interrupt the DAQmx Read?

I could reduce the "number of samples per channel", increasing the responsiveness of the while loop, but this is not the solution I prefer. I tried to clear the task (from outside the while loop), but that does not stop the acquisition immediately; the DAQmx Read still finishes acquiring 1000 samples. I've attached an example subVI. When I was using a PCI card, I used a timed while loop containing a DAQmx Read with the "number of samples per channel" terminal wired to -1 (which means "read everything in the buffer"). In this case, it was easy to stop the acquisition at any time: the timed while loop exited and the acquisition stopped immediately. But this does not work with an NI USB-6289 card (see thread http://forums.ni.com/ni/board/message?board.id=170&message.id=386509&query.id=438879#M386509) because of the different way the data are transferred to the PC.

Thank you very much for your help!

Have a great day,

LucaQ

Hi LucaQ,

Your options are to decrease the number of samples per read, or to record the time at which you want to stop and discard the samples that were acquired after that time. There is no other way to stop a read that is already in progress on the hardware.
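The second option (record the stop time, then throw away what came after it) might look something like the Python sketch below. It is only an illustration of the idea: `trim_to_stop_time` is a hypothetical helper, and it assumes you know the time of the first sample in the batch and that the samples are evenly spaced at the sample clock rate.

```python
from typing import List, Sequence


def trim_to_stop_time(samples: Sequence[float], batch_start_s: float,
                      rate_hz: float, stop_time_s: float) -> List[float]:
    """Keep only the samples whose timestamp is at or before the requested stop time."""
    kept: List[float] = []
    for i, value in enumerate(samples):
        # Timestamp of sample i, assuming a constant sample clock.
        if batch_start_s + i / rate_hz > stop_time_s:
            break
        kept.append(value)
    return kept


if __name__ == "__main__":
    batch = [float(i) for i in range(1000)]  # one 1000-sample read at 1 kHz
    kept = trim_to_stop_time(batch, batch_start_s=0.0, rate_hz=1000.0, stop_time_s=0.2505)
    print(len(kept), "samples kept out of", len(batch))
```

The read itself still takes the full second to return; only the data handed on to the rest of the application is cut at the stop time.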

Flash

-

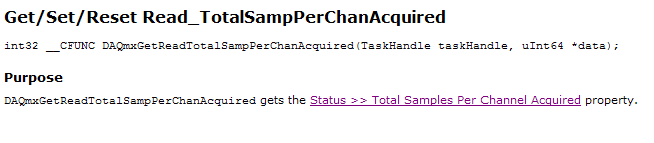

Total number of samples per channel

Hello

I was wondering if there is a function I can call that returns the total number of samples per channel read. I am trying to get a precise timestamp.

I use DAQmx in an ANSI C development environment.

According to NI:

Option #1:

Try to do exactly what the driver is doing. This will require you to do exactly what you do in the posted example. Get the current system time immediately before calling DAQmx Read and subtract dt * x, where x is the number of samples already acquired. You will need to know exactly how many samples have been acquired. This can be found by reading the Total Samples Per Channel Acquired property immediately before the DAQmx Read. This introduces some sources of inaccuracy. For example, your system time is already inaccurate by a certain amount. In addition, some time elapses between the system time call, reading the total number of samples acquired, and calling DAQmx Read. If 2 samples are acquired between the system time call and the read of the total number of samples acquired, you could be off by a few samples. At slower clock rates, you will have more precision.

I am attempting to program this solution, but cannot find the Total Samples Per Channel Acquired property. If anyone can help me, your help will be much appreciated.

Thank you for your help,

Vladimir

Hi Vladimir,

Here is the property you are looking for (from the C reference help):

You will be able to get accurate relative timestamps, since we know that our acquisition is based on a sample clock with a certain dt. The initial time value must still come from the OS.
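The arithmetic from Option #1 can be sketched as follows. This is plain Python for illustration, not DAQmx C code: the system time and the number of samples already acquired are passed in as arguments, and in a real program the count would come from the driver property discussed above. The function names are hypothetical.

```python
from typing import List


def first_sample_time(system_time_s: float, samples_acquired: int, rate_hz: float) -> float:
    """Estimate t0 = now - dt * x, where dt = 1 / rate and x = samples already acquired."""
    return system_time_s - samples_acquired / rate_hz


def sample_timestamps(t0_s: float, rate_hz: float, count: int) -> List[float]:
    """Relative timestamps t0 + i * dt; these are as accurate as the sample clock."""
    return [t0_s + i / rate_hz for i in range(count)]


if __name__ == "__main__":
    t0 = first_sample_time(system_time_s=100.0, samples_acquired=500, rate_hz=1000.0)
    print(t0)
    print(sample_timestamps(t0, 1000.0, 3))
```

Only `t0` inherits the OS clock error and the call latency described in Option #1; the spacing between timestamps depends on the sample clock alone.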

Best regards

John

-

PCI-6070E: maximum number of samples (analog in) per Hz?

In MAX and LV 8.6 I have acquired 1 kS (analog in) at a rate of 1 kHz (the 6070E is rated at 1.25 MS/s), which seems OK.

However, why does the acquisition fail for 10 kS at 100 Hz, which is just the same 1 MS/s?

Does it have something to do with the maximum size of the 6070E's internal buffer?

If so, is LabVIEW 8.6 fast enough to read and store 1 MS/s on the 6070E side, even though the 6070E presents the data to LabVIEW in chunks of 1000 per second?

I run an AMD64 X2 @ 6 GHz with 4 GB RAM and a 2 TB HDD, which must be fast enough.

I am certainly missing something, but what?

In the data acquisition manuals, I couldn't find anything that sheds light on this topic.

Thanks for any explanation.

I'm having a hard time understanding what you are doing. You say "conversion fails for 10 kS at 100 Hz, which is just the same 1 MS/s." What is the actual sampling rate you are using? Is it 100 samples/s? And you are requesting 10k samples? If so, why are you doing it like that? If you want 10k samples at this rate, you should probably request 100 samples and do multiple reads. Getting 10k samples at a rate of 100 Hz would take 100 seconds, so you wouldn't get anything until the 100 seconds are up, and the buffer would probably overflow. Taking 100 samples at a time and repeating 100 times would give you the same number of samples, but you would get data every second and would not need such a large buffer.
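Here is the comparison from the reply above as a small Python sketch. `read_plan` is a made-up helper that only does the arithmetic: how many reads are needed, how long each read blocks, and how long the whole acquisition takes.

```python
import math
from typing import Tuple


def read_plan(total_samples: int, samples_per_read: int, rate_hz: float) -> Tuple[int, float, float]:
    """Return (number of reads, seconds each read waits, total acquisition seconds)."""
    n_reads = math.ceil(total_samples / samples_per_read)
    seconds_per_read = samples_per_read / rate_hz
    total_seconds = total_samples / rate_hz
    return n_reads, seconds_per_read, total_seconds


if __name__ == "__main__":
    print(read_plan(10000, 10000, 100.0))  # one big read: nothing arrives for 100 s
    print(read_plan(10000, 100, 100.0))    # 100 small reads: data every second
```

Both plans take the same 100 seconds overall; the difference is how long each read blocks and how much data has to sit in the buffer at once.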

-

Best practices for the handling of data for a large number of indicators

I'm looking for suggestions or recommendations on how best to manage a user interface with a "large" number of indicators. By large I mean enough to make the block diagram quite big and ugly once the data processing for each indicator is added. Data must be "unpacked" and then decoded, e.g. Booleans, bit-shifted binary fields, etc. The indicators are updated once per second. I'm leaning towards a method that has worked well for me before, namely binding a network shared variable to each indicator, then using several subVIs to process each particular piece of data and write to the appropriate variables.

I was curious what others have done in similar circumstances.

Bill

I highly recommend that you avoid references. They are useful if you need to update the properties of an indicator (color, font, visibility, etc.) or when you need to decide at run time which indicator to update, but they are not a good general solution for writing values to indicators. Do the processing in a subVI, but aggregate the data into an output cluster and then unbundle it for display. It is more efficient (writing through references is slow), and while that won't matter for a 1 Hz refresh rate, it is still good practice. It takes about the same amount of block diagram space to build an array of references as it does to unbundle data, so you're not saving space. I know that I sound very categorical about this; earlier in my career, I took over the maintenance of an application that made excessive use of references, which made it very difficult to follow where data came from and how it got there. (By the way, this application also maintained both a pile of references and a cluster of data, the idea being that you would update the front panel indicator by reference any time you changed the associated value in the data cluster; unfortunately, people often updated one or the other but not both, leading to unexpected behavior.)
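LabVIEW is graphical, so this can't be pasted into a block diagram, but the pattern (decode everything in one place, return one aggregate, unbundle only at the display) can be shown as a text-language analogy in Python. The bit layout and the `Status` fields below are invented for the example; the dataclass plays the role of the output cluster.

```python
from dataclasses import dataclass


@dataclass
class Status:
    """Stands in for the output cluster; the field layout is hypothetical."""
    enabled: bool  # bit 0
    mode: int      # bits 1-3
    level: int     # bits 4-7


def decode_status(word: int) -> Status:
    """Unpack one packed status word into named fields (the 'subVI' step)."""
    return Status(
        enabled=bool(word & 0x1),
        mode=(word >> 1) & 0x7,
        level=(word >> 4) & 0xF,
    )


if __name__ == "__main__":
    status = decode_status(0xB5)
    # "Unbundle" at the point of display only.
    print(status.enabled, status.mode, status.level)
```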

-

How to choose the maximum number of elements for a DMA FIFO on an R Series FPGA

Greetings!

I'm working on a project with NI's PCIe-7842R R Series FPGA card. I use a target-to-host DMA FIFO to achieve fast data transfer. To minimize overhead, I would like to make the FIFO as large as possible. According to the manual, the 7842R has 1,728 kbit (216 KB) of embedded block RAM, so in theory 108,000 I16 FIFO elements are available (1,728,000 / 16). However, the FPGA compilation failed when I requested this number of elements. I checked the manual and searched online but could not find the reason. Can someone please explain? And in general, what is the maximum FIFO size for a given amount of block RAM?
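The theoretical figure in the question can be reproduced with a couple of lines of Python. This is only the naive upper bound from the post (all block RAM bits divided by the element width, with 1 kbit taken as 1000 bits); the thread reports that a FIFO of this size did not compile, so the usable maximum is evidently lower.

```python
def max_fifo_elements(block_ram_bits: int, element_bits: int) -> int:
    """Naive upper bound: every block RAM bit used for FIFO storage."""
    return block_ram_bits // element_bits


if __name__ == "__main__":
    block_ram_bits = 1_728_000                    # 1,728 kbit, as quoted from the manual
    print(block_ram_bits // 8, "bytes")           # the 216 KB figure
    print(max_fifo_elements(block_ram_bits, 16))  # I16 elements
```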

Thank you!

Hey iron_curtain,

You are right that moving large blocks of data can lead to more efficient use of the bus, but it certainly isn't the most important factor here. Assuming of course that the FIFO on the FPGA is large enough to avoid overflowing, I expect the dominant factor to be the read size on the host. In general, larger reads on the host lead to better throughput, up to the speed of the bus. This is because FIFO.Read is a relatively expensive software operation, so it is advantageous to make fewer calls for the same amount of data.

Note that the larger your calls to FIFO.Read, the larger the host buffer should be. Depending on your application, you may want it to be several times larger than the read size. You can set the size of the buffer with the FIFO.Configure node.

http://zone.NI.com/reference/en-XX/help/371599H-01/lvfpgaconcepts/fpga_dma_how_it_works/ explains the different buffers involved. It is important to note that the DMA engine moves data asynchronously to the read/write nodes on the host and the FPGA.

Let me know if you have any questions about all of this.

Sebastian

-

Large number of files in the directory profile with at sign in name

Hello

I noticed that my wife's Firefox v35 running on Windows 8.1 32-bit has a large number of files such as:

cert8@2014-03-25T19;02;18.DB

Content-prefs@2014-01-30T21;28;58.SQLite

Cookies@2014-01-08T18;12;29.SQLite

HealthReport@2015-01-20T06;44;46.sqlite

Permissions@2015-01-19T10;26;30.SQLite

webappsstore@2015-01-20T06;44;48.SQLite

Some files are entirely new.

The original files get backed up somehow, but I can't figure out how. My PC does not contain such files.

Thank you

I'm sorry, this has nothing to do with Firefox. I was looking at the backup directory created by Memeo AutoBackup. The original profile directory is OK.

Sorry once again.

-

How can I restore iTunes a large number of items currently in the Recycle Bin?

How can I restore iTunes a large number of items currently in the Recycle Bin?

See if the Undo option in the iTunes menu is available. If it is not, go to the Recycle Bin and drag the files into the "Automatically Add to iTunes" folder. iTunes 9: Understanding the "Automatically Add to iTunes" folder - http://support.apple.com/kb/HT3832 - files placed in this folder are moved from it to the correct location in the iTunes Media folder.

-

Recently, I moved a large number of emails (filtered by sender) into a new folder I created in Thunderbird.

The emails have arranged themselves into threads independent of any discernible factor. Some emails 7 years apart are threaded together. Also, sorting by date/received does not sort properly, i.e. the newest emails are not at either end of the sort, nor within the threads. Once again, no visible pattern.

I checked on Gmail, where the emails are hosted, and none of these issues is apparent.

This causes significant problems finding emails, especially since when I enter a search term in the filter it returns no results, but results are then generated when I use the search function.

OK, right-click on the folder, select Properties and then Repair. Just in case that helps.

Maybe you are looking for

-

When I click on a file with the .nrl extension (belongs to the Autonomy iManage software) it returns a string of code (which is in the shortened link) instead of following the link and returning the document. Is it possible to tell Firefox to connect to the

-

Satellite Pro A300 PSAG9E. Should I keep or not?

Hello I just bought a Toshiba Satellite Pro A300 PSAG9E. It came pre-installed with Vista and also a DVD to upgrade to windows 7 Professional (that I installed). I tried to start the machine this morning, but for some reason it wouldn't start. All th

-

BlackBerry Bold 9900 smartphones. Bluetooth car connection

I'm unable to connect my 9900 automatically with the car via Bluetooth. My Pearl did, but the 9900 asks "do you want to accept connection". Does anyone know how to make it connect automatically when you enter the car?

-

I need to turn it off, but my computer was irreparable at Best Buy. They gave a replacement. How to make your system to turn off so I can install on the new computer? Mike Griffin [personal information deleted - mod]

-

I signed up last September, so I was just charged for my second month of the year a few days ago... Also, if I need to cancel my account, can I sign up for the education promotion where you pay one year in advance and get 2 months free?