Sharing a data queue between several LabVIEW executables

I have two LabVIEW executables (EXEs): one generates data and the other consumes it. Is there any way to use a single queue, through the same reference, for both? The first EXE obtains the queue and stores the reference number in a V.I.G., and the second EXE reads the reference number from that V.I.G. However, the Dequeue VI in the second EXE reports an error saying the queue is invalid.

Is there any way to share the same data between different EXE files with the functionality of a queue?

Each EXE is mapped into its own memory space, so resource refs from one context will not work in another, but...

If you expose an Action Engine in one instance so that it can be used through a VI Server Invoke Node from the other, the AE (note: it will run in the context of the server) could queue up the data on behalf of the client.

Please note:

If the server goes idle, all of its resources will be invalidated (including the queue), but the ref to the AE will still be valid. In that case the enqueue operation will fail, so you have to write code that accounts for this situation. (Been there, done that.)

The same approach works across any network architecture where VI Server can be used. I have used this approach for about 10 years (minus the queue) to allow control of a plant from a laptop with an internet connection.
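The ownership pattern described above can be modelled in a few lines. This is only a minimal in-process Python sketch, not LabVIEW and not real inter-process communication: `QueueOwner`, `produce` and `go_idle` are hypothetical names standing in for the server EXE, the client-side call through VI Server, and the server going idle.

```python
import queue


class QueueOwner:
    """Stands in for the server EXE: it alone creates and holds the queue."""

    def __init__(self):
        self._q = queue.Queue()

    def enqueue(self, item):
        """Plays the role of the Action Engine: runs in the owner's context."""
        if self._q is None:      # owner went idle, so the queue no longer exists
            return False
        self._q.put(item)
        return True

    def dequeue(self):
        """Consumer side, also executed in the owner's context."""
        return self._q.get_nowait()

    def go_idle(self):
        """Mimics the server going idle and its queue being invalidated."""
        self._q = None


def produce(owner, item):
    """Client side: never touches the queue itself, only calls the owner."""
    if not owner.enqueue(item):
        return "server idle, item not queued"
    return "queued"


owner = QueueOwner()
status_ok = produce(owner, 42)      # the owner is alive, so the item is queued
received = owner.dequeue()
owner.go_idle()
status_idle = produce(owner, 43)    # the call still works, but the enqueue fails
```

The point of the sketch is the last line: the client can still reach the owner, yet the enqueue fails, so the client has to check the result instead of assuming success.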

Ben

Tags: NI Software

Similar Questions

-

Network shared variables between several RT targets

Hello

I'm looking for some information about using network shared variables for communication between RT targets.

Scenario: one 'master' PXI and several 'slave' PXIs running LabVIEW RT. Data is available as a type-defined cluster on each slave PXI (inside a non-deterministic while loop). I plan to create network-published shared variables for the slave PXIs, one variable per slave. Each variable is written in only one place (the while loop mentioned above) on its slave PXI. I need to read that data from the master PXI in a lossy way (I only need the most recent value, like a tag).

Question: can I simply use the default settings for a network-published shared variable? That is, I would not enable the RT FIFO option (single element), because I think that is a no-go on RT for a cluster (variable length)?

What do you think? Or do you have a better idea for sharing data between the slave and master RT targets (I do not need deterministic or lossless transmission, only the latest values)?

I think the way to proceed is to use shared variable aliasing/binding, which lets you 'link' shared variables across multiple targets: https://forums.ni.com/t5/LabVIEW/Bind-Alias-shared-variable-to-scan-engine-variable/td-p/3290043.

You can also use programmatic shared variable access to reach variables running on another target: http://zone.ni.com/reference/en-XX/help/371361G-01/lvconcepts/usingdynvarapi/

As for other methods, you could always distribute the data via UDP multicast (or with a TCP/IP connection manager).
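To illustrate the UDP multicast option for "latest value only" data, here is a minimal Python sketch (not LabVIEW). The multicast group, port, packet layout and all function names are assumptions made up for the example; each slave would send small datagrams and the master would keep only the newest value per slave.

```python
import socket
import struct

GROUP, PORT = "239.1.2.3", 50000   # hypothetical multicast group and port
FMT = "!HId"                        # slave id, sequence number, one float value


def pack_tag(slave_id, seq, value):
    """Encode one tag update as a 14-byte datagram."""
    return struct.pack(FMT, slave_id, seq, value)


def unpack_tag(datagram):
    """Decode a datagram back into (slave_id, seq, value)."""
    return struct.unpack(FMT, datagram)


def send_tag(sock, slave_id, seq, value):
    """Slave side: fire-and-forget publish of the current value."""
    sock.sendto(pack_tag(slave_id, seq, value), (GROUP, PORT))


def open_receiver():
    """Master side: bind to the port and join the multicast group."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", PORT))
    mreq = struct.pack("4s4s", socket.inet_aton(GROUP), socket.inet_aton("0.0.0.0"))
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP, mreq)
    return sock


class LatestValues:
    """Keeps only the newest value per slave; late or duplicate packets are dropped."""

    def __init__(self):
        self._latest = {}   # slave_id -> (seq, value)

    def update(self, datagram):
        slave_id, seq, value = unpack_tag(datagram)
        old = self._latest.get(slave_id)
        if old is None or seq > old[0]:
            self._latest[slave_id] = (seq, value)

    def get(self, slave_id):
        entry = self._latest.get(slave_id)
        return None if entry is None else entry[1]
```

On the master, a loop would call `sock.recvfrom(...)` on the socket from `open_receiver()` and pass each datagram to `LatestValues.update`. The sketch ignores sequence-number wraparound and sends a single float rather than a full cluster.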

-

Tape drive sharing between NDMP host and admin server using OSB

Hello.

Is it possible to share tape drives over the SAN switch between the admin server and the NDMP host using OSB?

We have 6 LTO-5 drives, and they will be attached to the Oracle ss7420 NDMP machine.

The customer site has no backup clients other than the NAS data.

The reason I'm asking is that I want to back up the OSB catalog DB to a tape drive.

Is it safe to back up the OSB catalog DB with the admin server as a client, or is tape drive sharing available?

Another question: when we set up the NDMP device, can the NDMP host control the robot, should robot control be assigned to the admin server, or are both possible?

Thank you.

Yes, it is available by design. You have to configure the NAS with the media server role and add a second attach point to the device. You can then create schedules that are restricted to those attach points. This lets you share all the drives between all media servers.

For the robot, I let the server admin to do that, just tape devices were mapped to the time. Controlling Robotics is a light enough task.

Here is an example of one of my setups where the drive is shared between several media servers: Oracle Linux 4, Oracle Linux 5 and NetApp.

L700-1-lect1:
    Device type: tape
    Model: ULTRIUM-TD2
    Serial number: 7MHHY00202
    In service: yes
    Library: L700-1
    DTE: 2
    Automount: yes
    Error rate: 8
    Query frequency: [undetermined]
    Debug mode: no
    Blocking factor: 512
    Max blocking factor: 512
    Current tape: 999
    Use list: all
    Drive usage: 7 months, 3 weeks
    Cleaning required: no
    UUID: 558a34da-045e-102c-8443-002264f35328
    Attachment 1:
        Host: dadbdn01
        Raw device: /dev/tape/by-id/scsi-1IBM_ULTRIUM-TD2_7MHHY00202
    Attachment 2:
        Host: dadbdh01
        Raw device: /dev/tape/by-id/scsi-1IBM___ULTRIUM-TD2___7MHHY00202__
    Attachment 3:
        Host: dadbeh01
        Raw device: /dev/tape/by-id/scsi-1IBM___ULTRIUM-TD2___7MHHY00202__
    Attachment 4:
        Host: ap1030nap
        Raw device: nrst1a
    Attachment 5:
        Host: dadbak01
        Raw device: /dev/tape/by-id/scsi-1IBM___ULTRIUM-TD2___7MHHY00202__

Thank you

Rich

-

Using shared variables between different versions of LabVIEW

Hello

I am looking at using shared variables between two different versions of LabVIEW. We have a system with two PXIs and a single PC. We are currently updating the code on the PXI system to 2014, but the PC still runs 8.2.1. We now need an ARINC-429 card, which is only supported in 2009 and later, which is why we are updating. First tests show that shared variables do not communicate between the two. I think the reason may be that the PXI is running the 2014 version of the Shared Variable Engine while the PC is running 8.2.1, but I don't know whether that is exactly why it does not work. Is there a way to communicate between two different versions of LabVIEW with shared variables?

Thank you!

SOLUTION

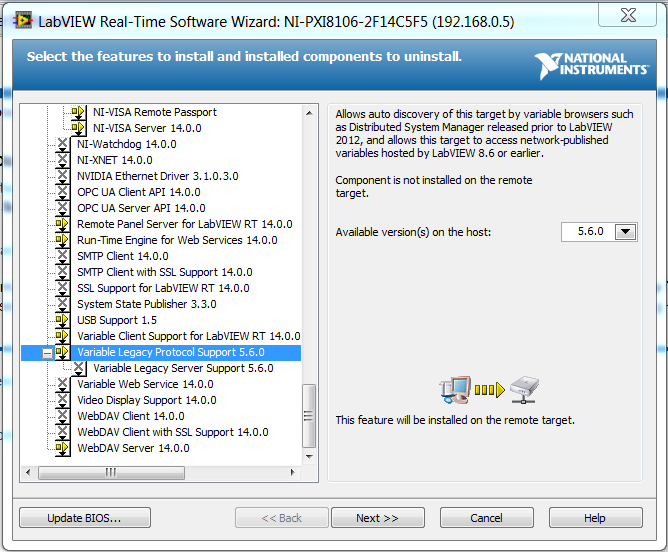

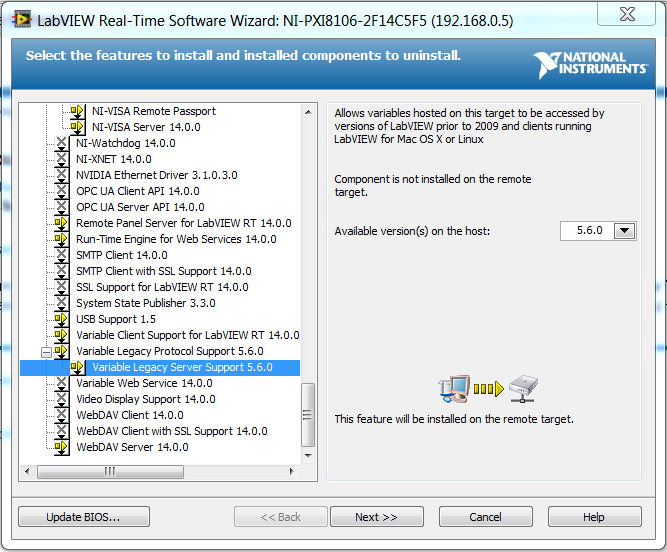

Two components in the software installation process are essential for the PXI to communicate with another version of LabVIEW: Variable Legacy Protocol Support and Variable Legacy Server Support. The first, protocol support, was installed automatically through the selection of other components (although I do not know which one triggered its installation). The second, server support, was nested under the protocol entry but was not selected automatically.

Protocol support allows the 2014 PXI to connect to shared variables hosted by devices running 8.6 or earlier, while server support allows pre-2009 devices to communicate with shared variables hosted on the 2014 PXI (which was the initial problem)!

-

Sharing a disk between several virtual machines

Hi all

We have a setup with a SAN (Dell Compellent) and use vSphere 6 to host a number of Windows servers. We currently have one giant Windows machine holding a large number of small files for a particular application.

Now we would like to create a cluster with a load balancer in front of it, so that we can handle more load. To do this, we want to create a disk that is shared between 2 (or more) Windows machines.

One of the problems is that NTFS is not a supported clustered file system. So I did a lot of research on Google to see what my options are. In my opinion, they are the following:

1. Set up a Microsoft Cluster Shared Volume (CSV) (it uses SMB, if I read the documentation correctly)

2. Use Windows shares on a separate file server

3. Use NFS (deprecated under Windows)

4. Switch to Linux and use NFS

The problem I have is that they all go over the network. For example, option 2 is ridiculously slow. NFS under Linux is also far slower than local disk access (iSCSI managed by VMware), and NFS on Windows does not appear to be supported very well.

I know that there are cluster-aware file systems such as VMFS. Is there a way to access one directly from my Windows VM, or are there SAN devices that are directly accessible from the Windows virtual machine?

Or maybe there are other solutions for setting up a shared drive?

I know that there are cluster-aware file systems such as VMFS. Is there a way to access one directly from my Windows VM, or are there SAN devices that are directly accessible from the Windows virtual machine?

VMFS really is a clustered file system and allows multiple virtual machines to access the same storage volume (datastore), but that is different from creating one virtual disk and presenting it to multiple virtual machines. You can do this, BUT the guest operating system must take care of the concurrent access to the disk. For Windows, you must turn on the Failover Clustering feature; without it, data may be corrupted.

I think the best supported solution for you is to create another cluster with the Failover Cluster feature and create a file share resource holding the files that your NLB nodes will access.

-

Sharing datastores between ESX 4 and ESXi 5

Hello

I have a vSphere 4.1 environment with one vCenter and a cluster of 3 ESX 4.1 hosts accessing datastores over Fibre Channel (a typical scenario).

Now I need to install a new ESXi 5.x host (it cannot be a different version) in a separate environment, outside the current vCenter, BUT with access to the same datastores as the current vSphere 4 cluster.

Does anyone know whether there would be any problem sharing access to the same datastores between ESX 4 and ESXi 5?

Thanks in advance

As long as the FC zoning is configured properly, you should avoid any problems. ESXi 5 will be able to see the VMFS3 datastores created by ESX 4; it just will not be able to create virtual machines of the same size as it can on a VMFS5 datastore.

-

Account shared between cubes does not receive data

Hello

I created a multicurrency application consisting of 2 cubes. The first cube has only the default dimensions; the second cube has two additional custom dimensions. Custom2 stores the members 'Amount' and 'Description'; Custom1 stores the transactions (T1, T2, etc.).

In cube 2 there is a form that uses an account shared between cubes ("Transactions") with cube 2 as the source plan type. Thus, in cube 2, data is stored for the combination of account "Transactions", 'T1' (Custom1) and 'Amount' (Custom2).

In cube 1 there is a form that shows the account "Transactions" (read only, because of the different source plan type). However, after I entered some data in the form assigned to cube 2, the form in cube 1 is not showing me any data.

I checked the Essbase outline: in cube 1 the account "Transactions" is set to Dynamic Calc and has an XREF formula generated automatically during refresh. In cube 2, this account is set to Store.

It seems I'm missing something, but I am unable to work out why the XREF function does not return results.

Hyperion gurus, can you help me?

Regards

Marcin Stawny

Silly question

You do not have these dimensions in cube 1. This means the XREF takes the total of these dimensions when it jumps from cube 1 to cube 2.

But have you aggregated cube 2 fully on these 2 dimensions?

If you do not have a value at the top of these 2 dimensions, then you will certainly see nothing in cube 1.
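The effect described in this answer can be shown with a toy model. This is a plain Python sketch, not Essbase: the dictionary stands in for cube 2, `None` stands in for a missing value, and the top-member names 'Custom1' and 'Custom2' as well as the numbers are assumptions made up for the example.

```python
# Level-0 data entered through the cube 2 form:
# (account, Custom1 member, Custom2 member) -> value
cube2 = {
    ("Transactions", "T1", "Amount"): 100.0,
    ("Transactions", "T2", "Amount"): 50.0,
}

CUSTOM1_CHILDREN = ["T1", "T2"]
CUSTOM2_CHILDREN = ["Amount"]   # assumption: 'Description' holds text and is not summed


def xref_from_cube1(cube, account):
    """Cube 1 has no custom dimensions, so the lookup lands on their top members."""
    return cube.get((account, "Custom1", "Custom2"))   # None plays the role of a missing value


def aggregate(cube, account):
    """Roll the level-0 values up to the top of both custom dimensions."""
    total = sum(
        cube.get((account, c1, c2), 0.0)
        for c1 in CUSTOM1_CHILDREN
        for c2 in CUSTOM2_CHILDREN
    )
    cube[(account, "Custom1", "Custom2")] = total


before = xref_from_cube1(cube2, "Transactions")   # nothing stored at the top yet
aggregate(cube2, "Transactions")
after = xref_from_cube1(cube2, "Transactions")    # the total is now visible
```

Before the roll-up, the lookup at the top of the two dimensions finds nothing, which matches the empty form in cube 1; after it, the total appears.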

-

APEX limitation: sharing pages between several APEX applications

I was hoping someone with a little more APEX architecture experience could comment on some of the limitations I see, and on whether I am making good decisions in working around them.

Any suggestions are welcome.

One of the limitations I have seen is in how APEX handles applications and pages. Each application is an island: it has its own set of pages, login processes, templates, CSS, JS references, etc. CSS and JavaScript can be defined in a central location, but every newly developed APEX application must still be configured to make use of your 'standard'.

More traditional web solutions expose each web page as stand-alone, and any web page can call or reference any other page and pass session information between them.

With APEX, however, this doesn't seem to be the case.

One solution would be to have one huge APEX application, where all the pages belong to that single application.

Unfortunately, we use APEX and EBS R11 (& R12) together; all APEX applications are launched from the EBS menus. I have designed an SSO solution between the two (without using Oracle SSO). However, each APEX application is distinct, more like a small module than a complete application. In other words, we tend to use APEX as an alternative to Oracle Forms: each APEX application is a standalone program.

I have always wanted to be able to apply the same login process and look and feel to every new APEX app we write.

The way I went about this was to painstakingly create a 'template' APEX application. Embedded in this template application are all our shared CSS, JS and templates that enforce the site appearance. It also contains our custom single sign-on code library and a custom debug logger (think log4j, but for APEX).

So whenever a developer begins work on a new APEX application, they start by cloning the template and automatically inherit all the functionality and site presentation.

My concern is whether this is really the best way to do it, or whether I'm missing something.

I am also concerned that, going forward, when I want to change the appearance across all of our web applications, I will need to touch every single APEX application. I'm trying to mitigate this by ensuring that almost all the programming logic is contained in PL/SQL packages, and that CSS and JavaScript are only referenced and kept external to the APEX application. But this is not always possible with templates, or with logic that is embedded in the actual APEX pages, etc.

At least APEX lets you copy pages between applications easily enough, but that just does not compare to having a single page shared between all APEX applications.

Is there a better way to go about this?

Hi attis.

I don't think you can really equate a Forms module with an APEX application. I wouldn't suggest creating an APEX application every time you would have created a Forms module in the past.

A Forms module corresponds to just a few pages of an APEX application, so you really should have more than a few pages in an APEX application. Certainly, one huge application is not the way to go either, but I would group them, for example, by logical areas such as HR, logistics, orders...

As Sc0tt already mentioned, you should use the 'Subscribe' mechanism of shared components to make it easier to update all your applications with template updates. But there is more: you should also share your login, keep it in just one place, and use the "simple APEX-based SSO" that lets all applications within a workspace share the same session. Simply set the same 'Cookie Name' in the authentication scheme of all your applications. When you link from one application to another and include a valid session id, you should not have to log in again, and you can also set session state in that other application.

So here's what I'd do

(1) Create a master application that contains

(a) your theme and the other shared components that you want to share

(b) probably also some basic navigation lists to navigate between your applications...

(c) an authentication scheme named "Redirect to main login app" of type APEX Accounts (the type doesn't matter), where you set the 'Cookie Name' to 'Cbx_missmost' and the invalid session URL to the login page of your 'main login app'. For example: f?p=9999:101:&SESSION.

(2) Create a template application which is a copy of the master application, but where you change the "subscription" of your templates, authentication scheme and shared components to point to the master application. This will serve as the starting point for your developers when they want to create a new application.

(3) Create your 'main login app' (e.g. app id 9999) by

(a) copying your new template application

(b) removing the existing authentication scheme (the new one is made just for this main login app)

(c) creating a new one of the type you want to use. But important: set the 'Cookie Name' to 'Cbx_missmost'. This ensures that all applications share the same session cookie.

(d) If you use APEX 4.1.1 you will automatically be redirected to the calling application after successful login.

(4) Start creating new applications by copying your 'template' application, which contains all the subscriptions.

(5) If you run such an application, it automatically redirects to your main login application if the current session is not valid. This avoids having the same authentication logic in each of your applications.

(6) If you need to change your templates or shared components, you just do it in the "master" application and click the "Publish" button to push the changes to all your applications.

But still, try to avoid many small applications; I think that just makes handling unnecessarily complicated. It is fine for an APEX application to have a few hundred pages. For example, our APEX App Builder (app 4000) has more than 1260 pages.

Regards

Patrick

-----------

My Blog: http://www.inside-oracle-apex.com

APEX Plug-Ins: http://apex.oracle.com/plugins

Twitter: http://www.twitter.com/patrickwolf

-

Photos - iCloud Photo Library - several users sharing a library

Hi hoping someone can point me in the right direction here as it is driving me crazy!

New Mac mini, OS X and the Photos app.

Basically, I want to share a single Photos library between two user accounts on the same Mac. Despite following all the advice online, I keep running into difficulty. I have put the library on an external SSD with permissions set so that any user can access it (but only one at a time, of course).

All well and good so far, but here comes the problem. I want iCloud Photo Library enabled on one of the accounts, with all pictures fully uploaded to iCloud. When I quit Photos, the photolibraryd process remains active. This means that when I switch accounts and try to access Photos, I can't, because photolibraryd in the other account still has the library open. To work around this, I started going into Activity Monitor and killing photolibraryd, as well as Photos itself, before logging in to the second account and opening Photos. This seems to work (but is a bit of a pain if I forget!). What is a lot more painful is that if I add some photos in the second account and then go back to the first, rather than syncing only the new photos with iCloud, Photos resynchronizes the entire 20,0000-photo library! That's bad!

Have I approached this the wrong way? Is there a better way to achieve what I want, that is, a fully shared Photos library between two users on the same Mac (with Photo Stream enabled on both accounts for those devices that do not have iCloud Photo Library enabled) and iCloud Photo Library enabled on one of the Mac accounts, without killing processes or incurring a massive sync overhead?

Grateful for any help.

Thank you very much

Rich.

It is not possible to share a Photos library between several users if the library in question is your System Photo Library. So you cannot share the library used as the iCloud Photo Library with others. If you want to share photos with others, use a separate Photos library for sharing and transfer photos from there to your iCloud library.

I found this in the documentation for PowerPhotos:

Share a library between several user accounts on a Mac

Photos is not designed with multi-user use in mind, which makes it very difficult to set up a configuration where a single Photos library is accessed from multiple accounts on your Mac. If you want to share a library in this way, the following restrictions apply (for the sake of discussion, say we have two users named A and B who want to share a library):

- Only a single user account can ever have a given library open in Photos at one time. Before you can use the shared library as user A, make sure to quit Photos as user B.

- None of the user accounts that access the library may designate it as their "System Photo Library". The system library is actually kept open at all times by OS X in the background as long as that user is logged in, so even quitting Photos does not free the library for another user to open. You must designate a different library as the system library, or completely log out user B before trying to access the library as user A. Note that this also prevents that library from syncing with iCloud.

- The library cannot be stored on your internal drive or any other drive where permissions are respected. Fighting against permissions on OS X is a losing battle. While you can play whack-a-mole trying to correct the permissions on the library before you open it, eventually something will go wrong and you will probably be unable to access part of your library. You should keep the library on an external drive and, in the Get Info window in the Finder, make sure the "Ignore ownership on this volume" checkbox is checked.

Re: How to share your photo on the same Mac without iCloud library

-

Can I view and run several executables from the same GUI?

I have two LabVIEW executables that each perform some analog and digital I/O, each using an independent USB-6008. I would like to run both of these executables from a single UI. Is this possible using subpanels? Or another method?

I found the KB about EXEs and VI Server on this subject but can't seem to get the workaround solutions implemented.

http://digital.NI.com/public.nsf/allkb/8545726A00272EB0862571DA005B896C?OpenDocument

I use LabVIEW 2009

Thank you

Dan

No. Sub-VIs still share data space. If you limit the number of instances to one (this is delicate and requires some brain twisting) you can use a template in a template, but once again, only one instance in each template. If you restructure your code so that the shared sub-VIs do not use shift registers or local storage (such as a control that is not on the connector pane), you can keep all the instance-specific stuff in the template and pass it to the sub-VIs.

Ben

-

Shared vs local datastores and DNS in 5.1

I read page 56 of the vSphere, ESXi, vCenter Server 5.1 Upgrade Guide

It addresses the issue of DNS load balancing and vCenter Server datastore naming.

I think it is discussing shared storage with multiple hosts accessing the same datastore, and saying that each host must give it the same name in vCenter, because 5.1 no longer resolves DNS names to IP addresses but now uses the DNS name for the datastore.

I have no shared storage. All my datastores (3 now, on 3 hosts) are local. I assume I therefore have no need to give all my local datastores the same name. Correct?

Here is the text directly from the page 56:

DNS Load Balancing Solutions and vCenter Server Datastore Naming

vCenter Server 5.x uses different internal identifiers for datastores than earlier versions of vCenter Server. This change affects the way that you add shared NFS datastores to hosts and can affect upgrades to vCenter Server 5.x.

vCenter Server versions before version 5.0 convert datastore host names to IP addresses. For example, if you mount an NFS datastore by the name \\nfs-datastore\folder, pre-5.0 vCenter Server versions convert the name nfs-datastore to an IP address like 10.23.121.25 before storing it. The original nfs-datastore name is lost.

This conversion of host names to IP addresses causes a problem when DNS load balancing solutions are used with vCenter Server. DNS load balancing solutions themselves replicate data and appear as a single logical datastore. The load balancing happens during the datastore host name-to-IP conversion by resolving the datastore host name to different IP addresses, depending on the load. This load balancing happens outside vCenter Server and is implemented by the DNS server. In vCenter Server versions before version 5.0, features like vMotion do not work with such DNS load balancing solutions because the load balancing causes one logical datastore to appear as several datastores. vCenter Server fails to perform vMotion because it cannot recognize that what it sees as multiple datastores are actually a single logical datastore that is shared between two hosts.

To solve this problem, vCenter Server versions 5.0 and later do not convert datastore names to IP addresses when you add datastores. This enables vCenter Server to recognize a shared datastore, but only if you add the datastore to each host by the same datastore name. For example, vCenter Server does not recognize a datastore as shared between hosts in the following cases.

- The datastore is added by IP address to host1 and by hostname to host2.

- The datastore is added by hostname to host1 and by hostname.vmware.com to host2.

For vCenter Server to recognize a datastore as shared, you must add the datastore by the same name to every host.

You are right: the section concerns shared NFS storage, where load balancing gives the same NFS server multiple IP addresses. In older versions of vCenter, the NFS server name was converted to an IP address, which could differ between hosts and caused problems with vMotion and other vCenter operations. With vCenter 5.1, the NFS host name is kept.
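The naming rule from the quoted guide can be expressed as a simple check. This is a toy Python model, not vCenter code: it assumes, as the guide's wording suggests, that 5.x compares the datastore names exactly as they were entered on each host. The function name and host labels are made up for the example.

```python
def recognized_as_shared(names_by_host):
    """True only when every host added the datastore under the identical name."""
    return len(set(names_by_host.values())) == 1


# The two failing cases listed in the guide, plus a consistent setup.
mixed = {"host1": "10.23.121.25", "host2": "nfs-datastore"}
fqdn_mismatch = {"host1": "hostname", "host2": "hostname.vmware.com"}
consistent = {"host1": "nfs-datastore", "host2": "nfs-datastore"}

results = {
    "mixed": recognized_as_shared(mixed),
    "fqdn_mismatch": recognized_as_shared(fqdn_mismatch),
    "consistent": recognized_as_shared(consistent),
}
```

Local datastores are each visible to one host only, so the rule does not come into play for them.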

-

Binding several web service data controls on the same page

Hello

JDev version: 11.1.1.6.0

I am using SOAP web services. I have the following use case:

I have a data control for the web service that saves the values entered in the form. I created the entry form using this data control (DC1).

There is an input field [T1]; on tab out, I need to populate another text field, for which the data is returned by another web service data control (DC2).

For the same input text [T1], on tab out, I need to populate the list of items in a selectOneChoice component, for which the data is returned by another web service data control (DC3).

Is it possible to bind several data controls on the same page to achieve this use case?

However, I want to avoid using a managed bean. Is it possible to do this?

Regards

Fabiola

Hello

Can I achieve the use case without using a managed bean?

Yes, although the managed bean solution seems reasonable to me.

The difficulties are

1) REQ1 and REQ2 are parameters that are common to both services 1 and 3.

Text fields that provide arguments to a method are bound to an input variable (the PageDef file has a variables iterator in the executables section). A variable can be referenced from the method arguments.

2) REQ1 is the parameter that is common to both services 2 and 3.

Same as 1. Create a variable for service 2 and map the variables to the method arguments of service 3.

3) Service 3 needs all of the REQ1, REQ2, REQ3 and REQ4 parameters in order to save the data.

As said, fields can be referenced from variables or, alternatively, from the attribute binding of the fields (as I guess it is a return value in the bound field).

There are binding problems because the same parameter is shared between several services.

That's new to me

Is it possible to solve these binding problems?

Yes. However, make sure the WS DC iterators you see in the PageDef have their Refresh property set to ifNeeded so that refreshing of the bound fields works properly.

Frank

-

Using VI Server to check whether another executable LabVIEW is running

Hello to you all, helpful people.

I need one LabVIEW executable to check whether a different LabVIEW executable is running. The simplest way seems to be to ask Windows whether the executable's name is running. A good thread is here.

However, this requires the use of a tool (tasklist) that does not exist on all the Windows operating systems I need to support (all flavors of XP, Vista and 7). In addition, even if I found an equivalent for each OS, I would need to make sure they continue to work, and update my program whenever a new OS is released.

A much better solution would seem to be to use VI Server in LabVIEW, since it is cross-platform. However, despite reading through my printed manuals, reading lots of topics in the electronic help, scanning the forums and doing some limited searching for a manual on the NI web site, I still can't understand the basics of setting up VI Server communication between two executables. It's very frustrating, because I'm sure it's a simple task, but I can't find the right instructions.

Many instructions for configuring VI Server say to go to Tools->Options->VI Server: Configuration and enable the TCP/IP option. That seems excessive if I only need communication on the local computer, but OK. However, elsewhere I have read that this sets the default settings for the main application instance (another subject I am still unclear on), so how does it apply to my existing LabVIEW project? My current project builds one executable, and I thought I would create a new build specification for the second executable. Executable A has all my existing code, while executable B would just have one VI available for a VI Server ping, so that executable A can tell whether it is running.

So I built executable B, and I have attached its VI for reference. Deciding that setting the VI Server configuration programmatically would be more understandable, I set the options that seemed logical: a unique TCP port number, TCP Listener Active = True, TCP/IP access list = my computer's IP address, and VI access list = B.vi.

In my test executable, A.vi, which I am using to get this up and running before changing my main code, I use Open Application Reference with my computer's IP, the unique port number and a short timeout. I wired it to an App property node to determine whether I get the correct connection. All I really need is to check the application name and I'm good. However, I keep getting 'LabVIEW.exe'.

If anyone can tell me what I am missing in this simple setup, I would be very grateful. Some basics on anything else I'm missing about how VI Server works, how the Tools->Options settings relate to all this, etc., would be a big bonus. Eventually I'll need a way to avoid specifying the computer's IP address (or a way to query it from LabVIEW), so I can deploy these two executables on any random PC.

Thank you in advance for your help!

-Joe

P.S. In the preview window all of my text has run together; I sincerely hope that does not happen when it is posted, particularly given its length. If so, I apologize!

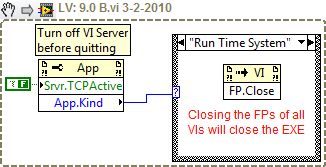

The application ini file should contain the following line:

server.tcp.enabled=True
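Once the TCP listener is enabled, one rough way to see whether the other executable is up is simply to test whether anything is listening on its port. The following is a Python sketch of that idea, not a VI Server client: `is_listening` is a hypothetical helper, the port is whatever unique port executable B was configured with, and a successful connect only proves that some process is listening there, not that it is executable B.

```python
import socket


def is_listening(port, host="127.0.0.1", timeout=0.5):
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False
```

Using 127.0.0.1 as the default host avoids having to know the computer's own IP address when both executables run on the same PC.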

Not to mention that this is a better way to see if you're inside an executable file:

Tone

-

VI object cache shared between 32 and 64 bit?

Cross-posted to LAVA: http://lavag.org/topic/16349-vi-object-cache-shared-between-32-and-64-bit/page__pid__99898

I am currently working on a major project that is to be deployed in 32-bit and 64-bit flavors. This means that I must periodically switch between installed versions of LV to build.

It *seems* that if I work in 32-bit LV, and then close and re-open my project in 64-bit LV, everything needs to recompile... even if I had changed only a few things since I last worked in 64-bit.

It seems that while LV keeps a separate VI object cache for each version of LV, it does NOT keep separate caches for the 32-bit and 64-bit versions. Is this really the case?

Hello Fabric,

LabVIEW maintains separate caches for the 32-bit and 64-bit builds of the same version, but in the same file. They are separate entries in the objFileDB. Because 32-bit and 64-bit are very different beasts, the project must be recompiled to adapt to the new platform every time you open it in the other environment. It is possible to separate compiled code from source code. According to other forum posts, this separation may carry a higher risk of VI corruption.

Best,

tannerite

-

Running several real-time executables (.rtexe)...

On a PXI (or any NI embedded controller), can several real-time executables developed in LabVIEW (.rtexe files) run simultaneously?

I'm almost certain that the answer is 'No', because the whole point of an RTOS is for the code to be "as deterministic as possible", with jitter and scheduling uncertainty reduced by removing elements (such as "OS interference") that are outside the developer's control or understanding.

You can, however, write a single RT executable that launches two routines that run "in parallel". If you have a multi-core processor, you could reserve separate cores for the two routines, which should improve their independence from each other.

We ran into a slightly different variant of this issue when migrating LabVIEW 7 code to the LabVIEW Project-oriented style of development (introduced in LabVIEW 8.x). We used to have several different LabVIEW RT programs that used different code on the PXI: when we ran the host executable, it loaded and ran the appropriate RT code (saved in an .llb). This does not work as well with RT executables.

What we developed was a system where we saved each RT executable as a separately named routine (not Startup.rtexe) and set one of them (initially) to run as startup. When the host code runs, it queries the PXI for the name of the startup routine, and if it is not the 'right' one, we change it on the PXI and make the PXI reboot.

[In fact, we don't really do it this way, since 99% of the time we run the same RT executable. What we did instead was write a separate "Set RT Startup" routine that we almost never run, unless we "know" we need to run an earlier version or something like that. This routine tells us what the PXI is currently configured to run and which RT executables can be set as startup, then makes the change, reboots the PXI and exits. The 'rule' is that if you change the RT executable, don't forget to change it back when you're done.]
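The check-and-switch logic described above can be sketched as follows. This is a minimal Python model, not LabVIEW: `FakeTarget` and its methods are hypothetical stand-ins for whatever mechanism queries and configures the PXI, and the file names are made up for the example.

```python
class FakeTarget:
    """Hypothetical stand-in for the PXI; a real system would talk to the controller."""

    def __init__(self, startup):
        self.startup = startup
        self.reboots = 0

    def get_startup_name(self):
        return self.startup

    def set_startup_name(self, name):
        self.startup = name

    def reboot(self):
        self.reboots += 1


def ensure_startup(target, wanted):
    """Switch the startup executable and reboot only if the wrong one is configured."""
    if target.get_startup_name() == wanted:
        return False                 # already correct, nothing to do
    target.set_startup_name(wanted)
    target.reboot()                  # the new startup executable runs after the reboot
    return True


pxi = FakeTarget("old_version.rtexe")
changed = ensure_startup(pxi, "experiment_a.rtexe")          # switches and reboots once
changed_again = ensure_startup(pxi, "experiment_a.rtexe")    # no change, no reboot
```

Because the reboot only happens when the name differs, the common case (the same RT executable every time) costs one query and nothing else.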

Bob Schor
