The log buffer size

Hello,

Could someone please confirm whether the following query returns the log buffer size?

select a.ksppinm name,
       b.ksppstvl value,
       a.ksppdesc description
from   x$ksppi a,
       x$ksppcv b
where  a.indx = b.indx
and    a.ksppinm = '_ksmg_granule_size';

Thank you.

Hello,

The '_ksmg_granule_size' parameter shows the granule size, not the size of the log buffer. If the total size of the SGA is 1 GB or less, the granule size is 4 MB. For an SGA greater than 1 GB, the granule size is 16 MB.
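The rule stated in this reply can be written out as a small sketch. It encodes only the two thresholds given above; other Oracle versions and larger SGAs use different granule sizes, so treat the class and method names (`GranuleSize`, `granuleBytes`) as hypothetical illustrations, not as anything Oracle provides.

```java
// Sketch of the granule-size rule as stated in the reply above.
// Assumption: only the two cases from the reply (4 MB and 16 MB) exist.
class GranuleSize {
    static final long MB = 1024L * 1024L;
    static final long GB = 1024L * MB;

    // Returns the granule size in bytes for a given total SGA size in bytes.
    static long granuleBytes(long sgaBytes) {
        return sgaBytes <= GB ? 4 * MB : 16 * MB;
    }

    public static void main(String[] args) {
        // An SGA of 2 GB falls in the "greater than 1 GB" case.
        System.out.println(granuleBytes(2 * GB)); // prints 16777216
    }
}
```

The value printed for the 2 GB case is 16 MB expressed in bytes, which is the unit the hidden parameter reports.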

What is the size of the SGA of your database?

For my database, with an SGA > 1 GB, the output of the query is 16 MB:

{code}
21:41:47 TEST > col value for a25
21:41:56 TEST > col DESCRIPTION for a30
21:42:05 TEST > select
21:42:08   2  a.ksppinm name,
21:42:12   3  b.ksppstvl value,
21:42:12   4  a.ksppdesc description
21:42:12   5  from
21:42:12   6  x$ksppi a,
21:42:12   7  x$ksppcv b
21:42:12   8  where
21:42:12   9  a.indx = b.indx
21:42:12  10  and
21:42:12  11  a.ksppinm = '_ksmg_granule_size';

NAME                 VALUE                     DESCRIPTION
-------------------- ------------------------- ------------------------------
_ksmg_granule_size   16777216                  granule size in bytes
{code}

HTH

Anand


Similar Questions

-

Can the size of the log buffer be changed, or is it managed internally by Oracle?

Can the redo log buffer size be changed, or is it managed internally by Oracle in the SGA? We are on Oracle 10.2.0.3.

The reason I ask is that our build team is doing estimates for data/memory sizing, so we wanted to know whether it can be changed or not.

Hi S2k!

The SGA memory structures are managed automatically by Oracle if the SGA_TARGET initialization parameter is set. Nevertheless, you are still able to configure the size of a memory structure yourself. Here is a good article on sizing the log buffer.

[http://www.dba-oracle.com/t_log_buffer_optimal_size.htm]

I hope this will help you along.

Yours sincerely

Florian W.

-

How to reduce the size of the log buffer with Linux HugePages

Data sheet:

Database: Oracle Standard Edition 11.2.0.4

OS: Oracle Linux 6.5

Processor: AMD Opteron

Sockets: 2

Cores / socket: 16

MEM: 252 GB

Current SGA: 122 GB, Automatic Shared Memory Management (ASMM)

Special configuration: Linux HugePages for 190 GB of memory with a page size of 2 MB.

Special configuration II: LUKS encryption on all drives.

Question:

1. How can I reduce the size of the log buffer? Currently it appears to be 208 MB. I tried setting log_buffer, and it does not change a thing. I checked that the granule size is 256 MB with the current size of the SGA.

Reason to reduce:

With the larger log buffer, log file parallel write and log file sync average over 45 ms most of the time, because a lot of redo is flushed at the same time.

Post edited by: CsharpBsharp

You have 32 processors and 252 GB of memory, so 168 private threads, so 45 MB as the public threads size is not excessive. My example came from a machine running 32-bit Oracle (as indicated by the 64 KB size of its private threads compared with the 128 KB size of your private threads) with (I think) 4 GB of RAM and 2 CPUs, so a much smaller scale.

Your instance was almost idle in the interval, so I'd probably look outside Oracle. Checking the OS stats may be informative (is something outside Oracle using a lot of CPU?), and I would ask a few questions about the encrypted filesystems (LUKS). It is always possible that there is an accounting error. I remember a version of Oracle that sometimes reported in milliseconds while claiming centiseconds, so I would try to find a way to validate the log file parallel write time.

Check the activity stats for anything relating to redo (there are many in your version).

Check the wait event histogram for log file parallel write (and for log file sync; its absence from the Top 5 looks odd given the appearance of LFPW and the number of transactions and redo generated).

Check the log writer trace file for reports of slow writes.

Try to create a controlled test that might show whether or not the reported write time can be trusted (if you can safely repeat the same operation with extended tracing enabled for the log writer, that would be a very good 30-minute test).

My first impression (which would, of course, need careful checking) is that the numbers should not be trusted.

Regards

Jonathan Lewis

-

In the API, RIM gives us the following code:

void getViaContentConnection(String url) throws IOException {
    ContentConnection c = null;
    DataInputStream dis = null;
    try {
        c = (ContentConnection)Connector.open(url);
        int len = (int)c.getLength();
        dis = c.openDataInputStream();
        if (len > 0) {
            // Length is known: read everything in one call.
            byte[] data = new byte[len];
            dis.readFully(data);
        } else {
            // Length is unknown (-1): read one byte at a time until end of stream.
            int ch;
            while ((ch = dis.read()) != -1) {
                ...
            }
        }
    } finally {
        if (dis != null) dis.close();
        if (c != null) c.close();
    }
}

My problem is that I have a file, usually about 10 KB, which I download in my application. getLength() returns -1 as the length, so the code then attempts to download the file byte by byte. It takes forever. I tried adding a fixed 1 KB buffer, but the end of the file is cut off. What can I do to keep download speeds fast and still capture the end of the file?

Thank you

Greg
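One common way to handle an unknown length is to read in fixed-size chunks and keep only the number of bytes each call actually returned. The sketch below uses only standard `java.io` classes and a hypothetical helper name (`readAll`); it is not taken from the RIM documentation. It assumes the truncation described above came from ignoring the count returned by `read(byte[])`, which is a guess about the poster's code.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;

// Sketch: read a stream of unknown length in 1 KB chunks.
class ChunkedRead {
    // Hypothetical helper: returns every byte until end of stream.
    static byte[] readAll(InputStream in) throws IOException {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] chunk = new byte[1024];
        int n;
        // read() may fill only part of the chunk; n is the count actually read.
        while ((n = in.read(chunk)) != -1) {
            out.write(chunk, 0, n); // keep only the n valid bytes, including a short final chunk
        }
        return out.toByteArray();
    }

    public static void main(String[] args) throws IOException {
        byte[] source = new byte[10000]; // stands in for the ~10 KB download
        byte[] copy = readAll(new ByteArrayInputStream(source));
        System.out.println(copy.length); // prints 10000
    }
}
```

In the original method, the same loop could replace the byte-by-byte branch, with `dis` passed in as the stream.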

-

AD7476A buffer size and device

Hi, I use the BF Analog Read AD7476A (modified) VI.

But I can't get a buffer filled to the size I want...

Somehow I think the buffer is limited to an array of 128 elements. I set it to 256, but when I display the initialized array, it says I have 128 elements, yet Array Size reports 256...

If this is a limitation of the Blackfin, please tell me the best way to build up an array from successive buffer reads...

PS - I need to sample at 10 kHz and fill an array with a good number of samples, or do the calculations in real time, but I don't know how to do it. Please help me!

Thanks in advance

Hello:

I found the problem... the size of 128 is a parameter that can be changed under 'Target Configuration - Debug Options' (ADSP-BF537 in my case)!

Thank you

-

Hello

Can someone tell me how to set the size of the local logging buffer on a PIX? I was not able to find this anywhere; any help would be really appreciated.

Thank you

Stu

Hello

Currently, the logging buffer size is fixed at 4K.

Check the following:

Overall record buffer should be configurable

http://www.Cisco.com/cgi-bin/support/Bugtool/onebug.pl?BugID=CSCdv91497&submit=search

I hope it helps

Franco Zamora

-

The public DBA buffer size has already reached the maximum size of 1703936.

This error shows up in the alert log of my 12c database.

I guess it must be linked to some hidden parameters.

_bct_public_dba_buffer_size 0 total size of all public change tracking dba buffers, in bytes
_bct_initial_private_dba_buffer_size 0 initial number of entries in the private change tracking dba buffers
_bct_public_dba_buffer_dynresize 2 allow dynamic resizing of public dba buffers, zero to disable
_bct_public_dba_buffer_maxsize 0 max buffer size permitted for public dba buffers, in bytes

But when I check, the maximum size seems to be ZERO.

Not sure where the value 1703936 comes from.

Smit

Figured it out: it is a side effect of a non-default LOG_BUFFER parameter.

Smit

-

Redo log buffer 32.8 MB, looks large?

I just took over a database (mainly used for OLTP, on 11gR1) and I see the log_buffer parameter is set to 34412032 (32.8 MB). Not sure why it is so high.

(The database has been up for 7.5 days.)

select NAME, VALUE from SYS.V_$SYSSTAT where NAME in ('redo buffer allocation retries', 'redo log space wait time');

redo buffer allocation retries 185
redo log space wait time 5180

Any advice on that? I normally try to stay below 3 MB and have not really seen it above 10 MB.

Sky13 wrote:

I just took over a database (mainly used for OLTP, on 11gR1) and I see the log_buffer parameter is set to 34412032 (32.8 MB). Not sure why it is so high.

In 11g you should not set log_buffer; just let Oracle set the default.

The value is derived from the number of CPUs and the transactions parameter, which can be derived from sessions, which can be derived from processes. In addition, Oracle will allocate at least one granule (which can be 4 MB, 8 MB, 16 MB, 64 MB, or 256 MB depending on the size of the SGA), so you are not likely to save memory by reducing the size of the log buffer.

Here is a link to a discussion that shows you how to find out what is really behind this figure.

Re: Archived redo log size more than online redo logs

Regards

Jonathan Lewis

http://jonathanlewis.WordPress.com

Author: Oracle Core

-

Hi experts,

My question is: where do the changes in the log buffer come from? From the UGA or from the database buffer cache? If from the UGA, then which part of the UGA? And what copies them into the log buffer? Is it the server process?

800978 wrote:

Hi experts, My question is: where do the changes in the log buffer come from? From the UGA or from the database buffer cache? If from the UGA, then which part of the UGA? And what copies them into the log buffer? Is it the server process?

Well, you are asking many questions that can be answered from the documentation. Please note that future posts must mention your db version (4 digits), regardless of how irrelevant you think that may be.

For your question, see this post of mine:

http://blog.aristadba.com/?p=17

Read the section on redo buffers and dirty buffers.

HTH

Aman...

-

Deleted the redo log file, but everything is OK

Hello

I delete the redo log file, insert a value into a table and commit. The commit succeeds and there is no error in the alert.log file.

I switch the logfile, it switches, and once again there is no error.

What is the reason?

Thank you

John

But when I commit, where does Oracle store the data?

First of all in the log buffer, which is flushed on commit to the CURRENT redo log by the log writer.

As stated previously, the log writer most likely opened the file before you deleted it, so it acquired a file descriptor and is still able to write to this file even though you subsequently deleted it. The log writer keeps the redo log open at least until the file becomes inactive (I think the file handles are never even closed, for performance reasons, but this should be verified).

-

Hi masters,

This seems to be very basic, but I would like to know the internal process.

We all know that LGWR writes redo entries to the online redo logs on disk. On commit, an SCN is generated and tagged to the transaction, and LGWR writes this to the online redo log files.

But my question is: how do these redo entries get into the redo log buffer? All the necessary data is read into the buffer cache by the server process. It is modified there and committed. DBWR writes this to the data files, but at what point, and by which process, is the redo entry for this transaction written into the log buffer?

Does LGWR do that? What exactly happens internally?

If you could please shed some light on the internals, I will be grateful...

Thanks and regards

VD

Vikrant,

I will keep this short because I am on a PDA. In general, this is what happens:

1. A calculation is made of how much space is required in the log buffer.

2. The server process acquires a redo copy latch to signal that some redo will be copied.

3. The redo allocation latch is acquired to allocate space.

4. The redo allocation latch is released after the space is allocated.

5. The redo copy latch is used to copy the redo into the log buffer.

6. The redo copy latch is released.

HTH

Aman

-

Question about the size of the redo log buffer

Hello

I am studying Oracle, and the book I use says that having a log buffer bigger than the default size is a bad idea.

The reasons it gives are:

>

The problem is that when a COMMIT statement is issued, part of the commit processing involves writing the contents of the log buffer to the redo log files on disk. This write occurs in real time, and while it is in progress, the session that issued the COMMIT will hang.

>

I understand that if the redo log buffer is too large, memory is wasted, and in some cases this could result in disk I/O.

What I'm not clear on is that the book makes it sound as if a larger log buffer would cause additional work or I/O. I would have thought that the amount of work or I/O would be substantially the same (if not identical), because writing the log buffer to the redo log files is driven by the commits issued and not by the size of the buffer itself (or how full it is).

Is the book's description misleading, or did I miss something important about having a larger than necessary log buffer?

Thank you for your help,

John.

Edited by: 440bx - 11gR2 on August 1st, 2010 09:05 - edited for formatting of the quote

A commit flushes everything in the redo log buffer to the redo log files.

A redo log buffer contains the modified data.

But it is not only a commit that flushes the redo log buffer to the redo log files.

LGWR becomes active whenever:

(1) a commit occurs

(2) the redo log buffer is 1/3 full

(3) 3 seconds have passed
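The three conditions in this list can be sketched as a simple predicate. This is only a toy model of the rules as listed in this reply, not Oracle's implementation; the class and method names (`LgwrTrigger`, `shouldWrite`) and the parameters are hypothetical.

```java
// Toy model of the three LGWR write conditions listed above.
// Assumption: the conditions are independent and any one of them triggers a write.
class LgwrTrigger {
    static boolean shouldWrite(boolean commitIssued, long usedBytes,
                               long bufferBytes, long millisSinceLastWrite) {
        boolean oneThirdFull = usedBytes * 3 >= bufferBytes; // buffer at least 1/3 full
        boolean timedOut = millisSinceLastWrite >= 3000;     // 3 seconds elapsed
        return commitIssued || oneThirdFull || timedOut;
    }

    public static void main(String[] args) {
        long buffer = 34412032L; // a log_buffer value quoted elsewhere on this page
        // Little redo, no commit, half a second since the last write: nothing to do.
        System.out.println(shouldWrite(false, 1024, buffer, 500));  // prints false
        // Same uncommitted redo after 3 seconds: written anyway.
        System.out.println(shouldWrite(false, 1024, buffer, 3000)); // prints true
    }
}
```

The second call shows why the size of the buffer does not by itself determine when redo reaches disk: uncommitted redo is written once the timeout passes.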

So the redo log file will not necessarily contain only committed data.

If there is no commit within 3 seconds, the redo log file is bound to contain uncommitted data.

Best,

Wissem

-

Increasing the circular buffer size and the recorder length

Hello again!

First of all:

I have a problem with the circular buffer.

With a sampling rate of 1000 Hz I used 6 circular buffers, each sized for 30 minutes. That was no problem for the PC. I do not need such a high sampling rate, but I do need a larger buffer, something between 90 and 120 minutes, with 8 buffers. So I decided to reduce the sampling rate to what I need, which is 50 Hz. Because I reduced the sampling rate to 1/20 of the original, I thought that a buffer size of 120 minutes should be possible.
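As a rough check of this reasoning, the number of samples held can be estimated as sampling rate times duration times number of buffers. This assumes (it is only an assumption here) that the memory needed scales with that sample count; the symbols below are introduced for illustration.

```latex
\begin{align*}
N &= f_s \cdot T \cdot C \\
N_{\text{old}} &= 1000\,\text{Hz} \times 1800\,\text{s} \times 6 = 10\,800\,000 \ \text{samples} \\
N_{\text{new}} &= 50\,\text{Hz} \times 7200\,\text{s} \times 8 = 2\,880\,000 \ \text{samples}
\end{align*}
```

By this count the 120-minute, 8-buffer setup at 50 Hz holds roughly 27% of the samples of the original setup, which is consistent with the expectation that it should fit.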

Now, after trying the new sampling rate, I still can't use the 8 buffers with more than 60 minutes. Any ideas why I have this problem? How can I get a larger buffer, or is there no way?

Second:

I use the recorder for display. I would like to display voltage over the long term. With the recorder, the maximum length is approximately 3.5 hours. How can I display longer periods? I need a display length measured in days. Is this possible? Perhaps with other modules or something similar?

That's it for now, thank you a lot for the useful tips.

Good day!

Good bye

The maximum time width of the chart recorder or Y/t chart is a function of the memory (RAM) on the computer, the number of channels to display and the sampling rate. The memory is allocated dynamically, up to the maximum. To avoid using up all the RAM available on the computer, DASYLab is limited to half of the computer's memory.

That being said... how do you display more time? You can reduce the number of samples using the Average or Separate modules, in effect reducing the sampling rate. Alternatively, you can consider whether a Trigger on Demand and a Relay could effectively reduce the samples for slowly changing data.

Some customers use two displays: high resolution over a shorter period and lower resolution over a longer period.

-

Buffer size and timing problems with the cDAQ-9188 and Visual Basic

Hi all, I have a cDAQ-9188 with a 9235 quarter-bridge strain gauge acquisition module.

I would appreciate help understanding how the timing and the buffer work.

I do not use LabVIEW: I'm developing in Visual Basic, Visual Studio 2010.

I developed my app from the NI AcqStrainSample example. What I found, in order, is:

-CreateStrainGageChannel

-ConfigureSampleClock

-create an AnalogMultiChannelReader

and

-Start the task

There is a timer in the VB application, started once the task has begun, that triggers the read function. This function uses:

-AnalogMultiChannelReader.ReadWaveform(-1).

I have no problem with CreateStrainGageChannel; I set 8 channels and the other settings.

Regarding ConfigureSampleClock, I have some doubts. I want a continuous acquisition, so I set the signal source to internal, the rate to 1000 and the sample mode to continuous, and I set the buffer size using the "samples per channel" parameter.

What I wonder is:

(1) Can I set any buffer size? Is it limited by the hardware of the module (9235) or of the chassis (9188)?

(2) Can I read the buffer, let's say, once per second and read all the samples stored in it?

(3) Do I have to implement my own buffer for reading the acquired data, or is that not necessary?

(4) Since I don't want to lose packets: is there a timestamp or a packet index I can use to check for this?

Thank you very much for the help

Hi Roberto-

I will address each of your questions:

(1) can I put any kind of buffer size? That the limited hardware of the module (9235) or DAQ (9188)?

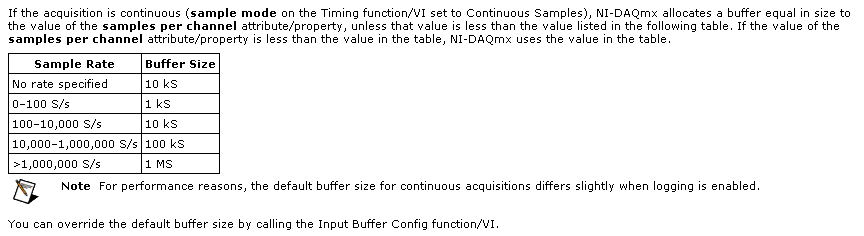

The samplesPerChannel parameter has different features according to the synchronization mode, you choose. If you choose finished samples the parameter samplesPerChannel determines how many sample clocks to generate and also determines the exact size to use. But if you use streaming samples, the samplesPerChannel and speed settings are used together to determine the size of the buffer, according to this excerpt from the reference help C DAQmx:

Note that this buffer is a buffer software host-side. There can be no impact on the material available on the cDAQ-9188 or NI 9235 buffers. These devices each have relatively small equipment pads and their firmware and the Driver NOR-DAQmx driver software transfer data device to automatically host and the most effective way possible. The buffer on the host side then holds the data until you call DAQmx Read or otherwise the input stream of service.

(2) can I read the buffer, let's say, once per second and read all samples stored in it?

Yes. You would achieve this by choosing a DAQmx Read size equal to the inverse of the sampling frequency (during 1 second data) or a multiple of that of the other playback times.

(3) do I have to implement my own buffer for playback of data acquisition, or it is not necessary?

No, you should not need to implement your own stamp. The DAQmx buffer on the host side will contain the data until you call the DAQmx Read function. If you want to read from this buffer less frequently you should consider increasing its size to avoid the overflow of this buffer. Which brings me to your next question...

(4) because I don't want to lose packets: y at - it a timestamp index or a package, I can use to check for this?

DAQmx will meet you if all packets are lost. The default behavior is to stop the flow of data and present an error if the buffer of the side host DAQmx overflows (if, for example, your application does not pick up samples of this buffer at a rate equal or faster than they are acquired, on average).

If, for any reason, you want to let DAQmx to ignore the conditions of saturation (perhaps, for example, if you want to sample continuously at a high rate but want only interested in retrieving the most recent subset of samples), you can use the DAQmxSetReadOverWrite property and set it to DAQmx_Val_OverwriteUnreadSamps.

I hope this helps.

-

I am updating an old VI (LV7.1) that produces a waveform whose amplitude and duration can be modified by the user, using Traditional DAQ. I used AO Buffer Config.vi (Traditional DAQ) to force the buffer to be the same length as the requested waveform, so that no excess data points fill extra buffer space and the requested waveform is not truncated by a short buffer. If I want to do the same in DAQmx, is there a DAQmx VI for this, or should I just use the traditional AO Buffer Config.vi? Thanks to the highly esteemed experts for any direction on how to do this.

Hi released,

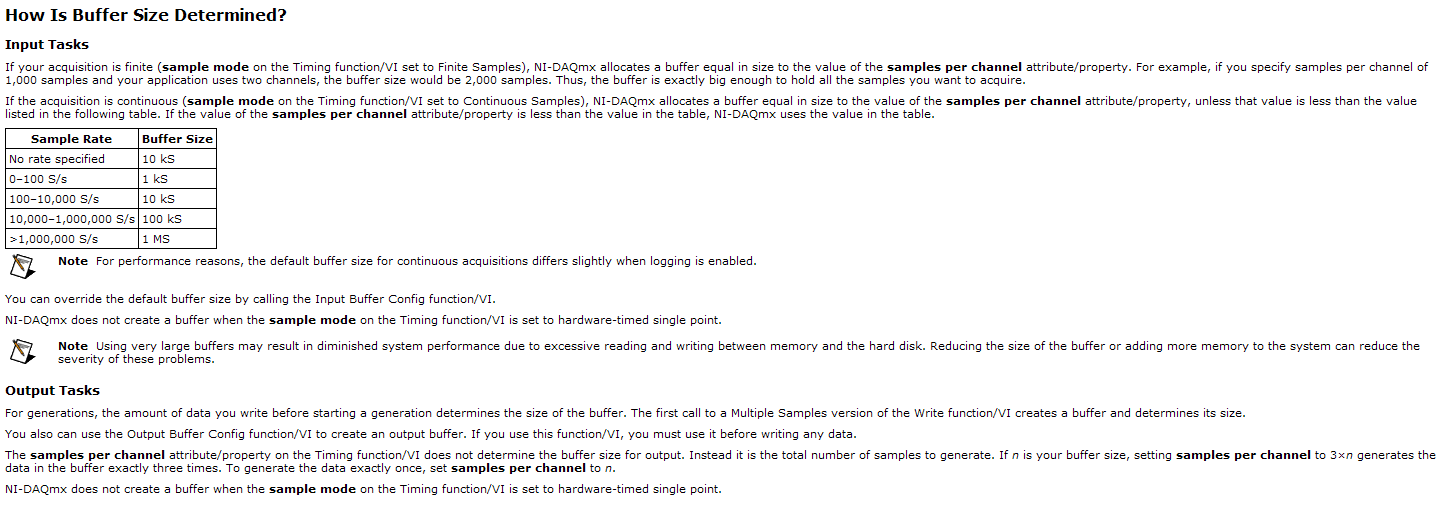

You can explicitly set the buffer using the DAQmx Configure output Buffer.vi. Alternatively, you can leave DAQmx automatically configures your buffer based on the amount of data that you write before you begin the task. You cannot mix and match functions DAQmx and traditional DAQ on the same device, so using the traditional buffer Config.vi AO is not an option if you want to use DAQmx on your Board.

Here's a screenshot of the DAQmx help that explains how the size of the buffer is determined by DAQmx:

I hope this helps!

Best regards

John
