Shift registers in LabVIEW

Hi guys,

Forgive the question, but I'm completely new to LabVIEW and recently inherited a fairly large code base that I will be working on over the coming months. The code is quite complex, so I doubt I'll be able to make full use of the available documentation while trying to figure it out.

My question is this. The former graduate student who built the original code could not figure out something that seems very simple. We use several discrete variables, and at each time step the two previous values are kept. In functional form, it might look like this:

x(t-2) = x(t-1);

x(t-1) = x(t);

x(t) = sensor(t);   (the value is read from an external sensor)

Currently, all of the current and previous values have to be explicitly wired into the data flow at each time step. Ideally, this would happen automatically, but I don't have the slightest idea how to do it. Any help here?

I am competent in VHDL, C, JavaScript, and Mathematica, if that helps at all.

Shift registers. You can drag the terminal on the left side of the loop downward to expose older values from the shift register, not just the value from the previous iteration.
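Since the original poster knows C, here is a rough C analogue of a loop whose shift register has its left terminal extended to two elements: the state is carried from one iteration to the next, and each iteration shifts it by one step. This is only a sketch for intuition; `History`, `history_push`, `run_loop`, and `read_sensor` are hypothetical names (the sensor read simply replays a fixed array), and in LabVIEW the shifting happens automatically.

```c
#include <stddef.h>

/* Values carried between loop iterations, like a shift register
   whose left-hand terminal has been extended to show older elements. */
typedef struct {
    double x_t;   /* x(t)   */
    double x_t1;  /* x(t-1) */
    double x_t2;  /* x(t-2) */
} History;

/* Shift the history by one time step and store the newest sample.
   Order matters: the oldest value is overwritten first. */
static void history_push(History *h, double sample)
{
    h->x_t2 = h->x_t1;  /* x(t-2) = x(t-1)   */
    h->x_t1 = h->x_t;   /* x(t-1) = x(t)     */
    h->x_t  = sample;   /* x(t)   = sensor(t) */
}

/* Hypothetical sensor read: replays a fixed buffer of samples. */
static double read_sensor(const double *samples, size_t i)
{
    return samples[i];
}

/* Run the "loop" over n samples, starting from zeroed history
   (like wiring constants into the shift register's left terminals). */
static History run_loop(const double *samples, size_t n)
{
    History h = {0.0, 0.0, 0.0};
    for (size_t i = 0; i < n; ++i) {
        history_push(&h, read_sensor(samples, i));
    }
    return h;
}
```

Initializing the history explicitly matters in LabVIEW too: an unwired shift register keeps its value from the previous run while the VI stays in memory.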

I recommend watching the online LabVIEW tutorials:

LabVIEW Introduction course - 3 hours

LabVIEW Introduction course - 6 hours

Similar Questions

-

Transferring results from LabVIEW to TestStand: how does it work?

Hello

Sometimes, despite the fact that my LabVIEW code module returns data with a precision of exactly two decimal places, the TestStand report shows sixteen-digit precision.

For example, the data returned (not only displayed) by the code module is 0.09 (DBL), but the TestStand report shows 0.0899999999999999.

Why is it like that?

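Because you are passing a double-precision floating-point number. The display format has nothing to do with the actual data: 0.09 cannot be represented exactly in binary, so the stored value is the nearest double, which is slightly less than 0.09.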
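A quick way to see this outside LabVIEW is to print the same double with two different formats: a short format rounds it back to "0.09", while 17 significant digits expose the stored value. The helper names below are hypothetical. Note that both strings parse back to the very same double, which is the point of the answer: rounding for display is a formatting choice on the reporting side, not a property of the data.

```c
#include <stdio.h>
#include <stdlib.h>

/* Format v with a fixed number of decimal places (what a display shows). */
static void format_decimals(double v, int decimals, char *buf, size_t len)
{
    snprintf(buf, len, "%.*f", decimals, v);
}

/* Format v with 17 significant digits: enough to reproduce the exact
   stored double, which is what a "show everything" report reveals. */
static void format_full(double v, char *buf, size_t len)
{
    snprintf(buf, len, "%.17g", v);
}

/* Returns 1 if parsing the text yields exactly the same double v. */
static int round_trips(const char *text, double v)
{
    return strtod(text, NULL) == v;
}
```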

-

Changing the registered owner and company in LabVIEW

Hello!

We outsource our IT, and my laptop recently needed a Windows reinstall. They used their company name as the owner name in Windows, and I did not change it before I installed LabVIEW. LabVIEW has now picked up that default owner, and there is no company name. It's a minor annoyance not to see my name and company on the splash screen when I launch LabVIEW. It's a bit more of a nuisance that whenever I create a new VI, build specification, or installer, the author and company fields are not auto-populated. Is there any way to change this now that LabVIEW is installed?

Thank you for your time and your input!

-

Data transfer from MATLAB to LabVIEW

I'm having a problem importing data saved in MATLAB into LabVIEW. From the NI website, I gathered the following information:

- To save a vector or matrix X in tab-delimited ASCII format, enter the following in the MATLAB® command window or in an M-file script:

>> save filename X -ascii -double -tabs

This creates a file named filename that contains the data in X in ASCII format, with tabs as delimiters.

- Import the file into LabVIEW using the Read From Spreadsheet File VI, located on the Programming » File I/O palette.

However, when I import an m × n matrix into LabVIEW, I get an m × (n + 1) matrix whose extra column is all zeros. This should have a simple answer... I know I can reshape arrays, etc., but it seems as if this could be avoided. I can't figure it out and am pressed for time. Any help would be appreciated.

How big are the files? Simply removing the last column with "Delete From Array" is probably the simplest solution.
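An all-zero extra column is consistent with each line of the file containing one more delimiter than there are values (for example, a trailing or leading tab), which a spreadsheet reader can turn into an extra empty field that becomes 0. That is an assumption; viewing the file in an editor that shows tabs would confirm it. The sketch below contrasts a naive field count with one that ignores empty fields; `count_fields_naive` and `count_values` are hypothetical helpers, not LabVIEW or MATLAB functions.

```c
#include <stddef.h>

/* Naive count: every tab starts a new field, so a trailing tab
   produces one extra, empty field. Stops at '\n' or end of string. */
static size_t count_fields_naive(const char *line)
{
    size_t fields = 1;
    for (const char *p = line; *p != '\0' && *p != '\n'; ++p) {
        if (*p == '\t') {
            ++fields;
        }
    }
    return fields;
}

/* Count only non-empty fields: leading, trailing, or repeated tabs
   and spaces are treated as separators and never create a value. */
static size_t count_values(const char *line)
{
    size_t values = 0;
    int in_field = 0;
    for (const char *p = line; *p != '\0' && *p != '\n'; ++p) {
        if (*p == '\t' || *p == ' ') {
            in_field = 0;
        } else if (!in_field) {
            in_field = 1;
            ++values;
        }
    }
    return values;
}
```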

-

Transferring eBooks registered to one Adobe ID to another?

Hello community,

I have two Adobe IDs, one for the office and another for private use.

I registered some books with my office ID and others with my private ID. Since I don't want to leave them behind at the company I work for, I would like to transfer the books registered to my office ID to my private ID at home. Is this possible? If yes, could you please tell me how?

Thanks in advance for your help.

Tanja

No. The best you can do is ask the store where you bought the books whether you can re-download the files to a new ID.

You can also change the email address associated with your office Adobe ID to something else.

-

Transferring data from an Arduino to LabVIEW

Hey.

I am doing my bachelor's thesis in LabVIEW using an Arduino Mega 2560. The Arduino reads a temperature sensor with 16 pixels.

I connected to the sensor's microcontroller over the I2C bus. The Arduino sends the data to me over USB as a 16-byte packet containing the temperature measurement.

My problem is how to convert the transferred data in LabVIEW from a text string into an array. I downloaded the LabVIEW Interface for Arduino and have a working connection between LabVIEW and the Arduino.

I have searched the LabVIEW Interface for Arduino modules but can't seem to find a VI that reads bytes.

The end result should be an intensity graph showing the temperature measurements.
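In LabVIEW, the String To Byte Array function converts the string returned by a serial read into a U8 array, which can then be reshaped into a 2D array for an intensity graph. The C sketch below shows the same unpacking step. It assumes one unsigned byte per pixel, a raw value equal to the temperature in degrees, and a 4 x 4 pixel layout; the post specifies none of these, and `unpack_packet` is a hypothetical name.

```c
#include <stddef.h>
#include <stdint.h>

#define PACKET_LEN 16  /* 16 pixels, one byte each (assumption) */
#define ROWS 4         /* assumed 4 x 4 layout for the intensity graph */
#define COLS 4

/* Unpack a 16-byte packet into a ROWS x COLS grid of temperatures.
   Returns 0 on success, -1 if the packet has the wrong length. */
static int unpack_packet(const uint8_t *packet, size_t len,
                         double grid[ROWS][COLS])
{
    if (len != PACKET_LEN) {
        return -1;  /* incomplete or oversized read: do not plot it */
    }
    for (size_t i = 0; i < PACKET_LEN; ++i) {
        /* Assumed scaling: the raw byte is the temperature itself. */
        grid[i / COLS][i % COLS] = (double)packet[i];
    }
    return 0;
}
```

Rejecting packets of the wrong length is a deliberate choice: a partial serial read would otherwise shift every pixel in the plot.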

https://decibel.NI.com/content/groups/LabVIEW-interface-for-Arduino

-

Dear community,

I have a problem transferring data between LabVIEW and my memory implementation, a library (DLL) called through a Call Library Function Node. Quick overview of the setup: two parallel while loops run the acquisition and output tasks, and I want to exchange data between them. First, the storage (a ring buffer) for this exchange is initialized. Reading and writing use array data pointers.

Write: the array data pointer (input node) is used to copy the data into the memory.

Read: a LabVIEW array data pointer (input node) is passed in, and the data from the memory is copied into it (output node).
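For reference, a ring buffer with this kind of copy-in/copy-out interface can be sketched in C as follows. The names (`RingBuffer`, `rb_init`, `rb_write`, `rb_read`, `rb_free`) are hypothetical, the element type is assumed to be double, and the sketch is deliberately single-threaded: a DLL shared by two parallel LabVIEW loops would also need a lock around these calls. Each call copies into or out of a caller-supplied array, which mirrors passing a LabVIEW array data pointer.

```c
#include <stddef.h>
#include <stdlib.h>

typedef struct {
    double *data;     /* storage allocated by rb_init */
    size_t capacity;  /* number of elements the buffer can hold */
    size_t head;      /* next write position */
    size_t tail;      /* next read position */
    size_t count;     /* elements currently stored */
} RingBuffer;

/* Allocate storage; returns 0 on success, -1 on failure or capacity 0. */
static int rb_init(RingBuffer *rb, size_t capacity)
{
    rb->head = rb->tail = rb->count = 0;
    rb->capacity = 0;
    rb->data = capacity ? malloc(capacity * sizeof *rb->data) : NULL;
    if (rb->data == NULL) {
        return -1;
    }
    rb->capacity = capacity;
    return 0;
}

static void rb_free(RingBuffer *rb)
{
    free(rb->data);
    rb->data = NULL;
    rb->capacity = rb->head = rb->tail = rb->count = 0;
}

/* Copy up to n values from src into the buffer; returns how many were
   written (fewer than n when the buffer is full). */
static size_t rb_write(RingBuffer *rb, const double *src, size_t n)
{
    size_t written = 0;
    while (written < n && rb->count < rb->capacity) {
        rb->data[rb->head] = src[written++];
        rb->head = (rb->head + 1) % rb->capacity;
        rb->count++;
    }
    return written;
}

/* Copy up to n values into dst, which the caller must have allocated
   with room for n elements; returns how many were read. */
static size_t rb_read(RingBuffer *rb, double *dst, size_t n)
{
    size_t got = 0;
    while (got < n && rb->count > 0) {
        dst[got++] = rb->data[rb->tail];
        rb->tail = (rb->tail + 1) % rb->capacity;
        rb->count--;
    }
    return got;
}
```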

A debug report revealed apparently invalid pointers such as 0x00000000 and 0xFFFFFFFF being passed. The error is somewhat hard to describe, because LabVIEW either closes instantly or reports an error, tries to debug, and then closes. The crashes are completely random: sometimes after a long measurement, sometimes when closing the tasks (not on the first call), and sometimes during initialization (not on the first call). Also, the first call to the VI never seems to crash. It looks as if the memory interferes with LabVIEW and corrupts LabVIEW's own memory. Does anyone have experience with LabVIEW's memory management and can say when a DLL would receive the pointer 0x00000000 or 0xFFFFFFFF? Do global variables and shift registers have fixed addresses, or are they managed dynamically? Is it possible that LabVIEW passes invalid array data pointers, so the DLL tries to access data through an invalid pointer?

The memory works fine without the acquisition and output tasks. Only when the tasks are added does LabVIEW crash as described.

I'm grateful for any help!

Problem solved: the struct sizes were calculated differently on the two sides. Setting 'pack = 1' fixed it.

-

Why is MathScript performance so far below MATLAB's?

Hi all

I am doing signal processing on a signal acquired with an ADC. The data rate is roughly a 10,000 x 1000 int8 matrix per second, and I'm trying to process the data at the same rate. The signal processing is done in a MATLAB ".m" file; it is much too complicated to be coded in G.

I have two choices:

1. use matlab nodes

2. using the Mathscript node.

I've implemented solution 1, but a lot of time is lost just transferring data between LabVIEW and MATLAB (a ~10 MB matrix transfer). MATLAB itself is very fast at the signal processing. A faster variant of this solution was to write the matrix to a binary file and pass the file name to MATLAB. It is faster (don't ask me why), but not fast enough.
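Writing the matrix as raw bytes avoids any text conversion, which may be part of why the file route was faster (a guess; the post did not measure it). The sketch below writes a row-major int8 matrix with a single `fwrite` call; the helper names and file name are hypothetical. Because MATLAB fills matrices in column-major order, the MATLAB side shown in the comment reads the data as cols x rows and transposes it.

```c
#include <stdint.h>
#include <stdio.h>

/* MATLAB side (for reference):
     fid = fopen('frame.bin', 'r');
     X = fread(fid, [cols, rows], 'int8=>int8').';
     fclose(fid);                                                    */

/* Write a rows x cols int8 matrix, stored row-major in m, as raw bytes
   with one fwrite call. Returns 0 on success, -1 on error. */
static int write_int8_matrix(const char *path, const int8_t *m,
                             size_t rows, size_t cols)
{
    FILE *f = fopen(path, "wb");
    if (f == NULL) {
        return -1;
    }
    size_t n = rows * cols;
    size_t written = fwrite(m, sizeof *m, n, f);
    int closed = fclose(f);
    return (written == n && closed == 0) ? 0 : -1;
}

/* Read the same raw file back into m (row-major); 0 on success. */
static int read_int8_matrix(const char *path, int8_t *m,
                            size_t rows, size_t cols)
{
    FILE *f = fopen(path, "rb");
    if (f == NULL) {
        return -1;
    }
    size_t n = rows * cols;
    size_t got = fread(m, sizeof *m, n, f);
    fclose(f);
    return got == n ? 0 : -1;
}
```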

Solution 2 sounds better: since MathScript is native to LabVIEW, no data transfer is needed. Before committing to MathScript, I ran the following test: run the same simple code with both solutions, computing the FFT of a 1024 x 1024 matrix ten times (code attached).

Result: the MATLAB node version takes 0.5 s, compared with 1.6 s for the MathScript node version.

Why is MathScript's performance so much lower than that of the MATLAB node?

If MathScript really is much slower than MATLAB, I'm afraid I'll stay with the MATLAB node. Is there a better way to transfer the data than through files?

Thanks in advance for your answers!

I don't know whether I can help you, but I found the same thing.

I have a few guesses. Are you using 64-bit MATLAB? If so, note that MathScript runs under 32-bit LabVIEW.

Another possibility is that MathScript runs integrated into the LabVIEW process, whereas with the MATLAB node, LabVIEW communicates with MATLAB running in its own process.

A third possibility is simply that MATLAB is a commercial product that is much more heavily optimized.

The honest answer is that I don't know!

-

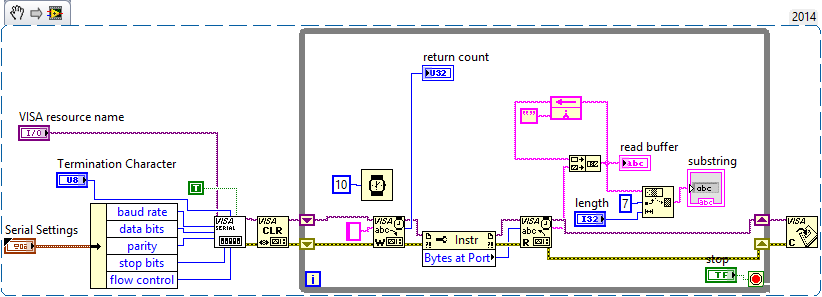

String Subset error with a serial string

Hello world

I have a simple program. I use an Arduino to send a string to LabVIEW.

In LabVIEW, I want to extract parts of it with String Subset. But it only works on the first string; the later strings are not split.

Can you help me find my error?

Thanks for reading

Here is my code and the error.

Here is my video: https://www.youtube.com/watch?v=Gm5nOrZ3iyo

Here's my Arduino code:

#include <Wire.h>
#include <LiquidCrystal_I2C.h>

volatile int i = 0;  // modified inside an interrupt handler, so declared volatile

LiquidCrystal_I2C lcd(0x27, 20, 4);  // LCD at I2C address 0x27, 20 characters x 4 lines

void setup()
{
  lcd.init();  // initialize the LCD
  Serial.begin(9600);
  attachInterrupt(0, irt1, CHANGE);  // irt1() is not shown in the post
  attachInterrupt(1, irt2, FALLING);
  // Print a message on the LCD screen.
  lcd.backlight();
  lcd.print("Hello, world!");
  Serial.print("Hello, everyone");  // no '\n' here, so this text runs into the next message
  delay(1000);
  lcd.clear();
}

void loop()
{
  while (Serial.available())
  {
    char r = (char) Serial.read();
    if (r == 't')
    {
      lcd.setCursor(0, 0);
      lcd.print("you're a robot");
      Serial.print("you robot\n");
    }
    else if (r == 'a')  // comparison (==), not assignment (=)
    {
      lcd.setCursor(0, 0);
      lcd.print("you're human");
      Serial.print("you human\n");
    }
  }
}

void irt2()
{
  // Per the Arduino attachInterrupt() reference, delay() does not work
  // inside an interrupt handler.
  delay(1500);
  i++;
  if (i % 2)
  { Serial.print("ngaysinh010071992\n"); }
  else
  { Serial.print("ngaysinh123455\n"); }
}

I went ahead and cleaned up your diagram. See if that makes sense.
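Because the Arduino terminates each message with '\n', one robust approach is to read up to the newline and only then take the substring, instead of applying fixed offsets to whatever bytes happen to have arrived. On the LabVIEW side, VISA Configure Serial Port can enable a termination character (linefeed by default), so each VISA Read returns one line. The C sketch below shows the same idea; `LineAssembler`, `feed_byte`, and `after_prefix` are hypothetical names, and the prefix handling assumes the "ngaysinh" messages from the Arduino code.

```c
#include <stddef.h>
#include <string.h>

#define LINE_MAX_LEN 64

typedef struct {
    char buf[LINE_MAX_LEN];
    size_t len;
} LineAssembler;

/* Feed one received byte. Returns 1 when a complete line (without '\n')
   is in la->buf; copy it out before feeding more bytes. Overlong lines
   are truncated to LINE_MAX_LEN - 1 characters. */
static int feed_byte(LineAssembler *la, char c)
{
    if (c == '\r') {
        return 0;                 /* ignore carriage returns */
    }
    if (c == '\n') {
        la->buf[la->len] = '\0';  /* terminate the finished line */
        la->len = 0;              /* the next byte starts a new line */
        return 1;
    }
    if (la->len < LINE_MAX_LEN - 1) {
        la->buf[la->len++] = c;
    }
    return 0;
}

/* If line starts with prefix, return a pointer to the rest of the line
   (the "subset" after the prefix); otherwise return NULL. */
static const char *after_prefix(const char *line, const char *prefix)
{
    size_t n = strlen(prefix);
    return strncmp(line, prefix, n) == 0 ? line + n : NULL;
}
```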

-

Hello, ladies and gentlemen. I'm reading a TRAK Microwave GPS clock. The clock is a GPIB instrument attached to an IEEE 488 (GPIB) card in a remote PC. The VISA address for this device is GPIB0::7::INSTR. I would like to send the command 'RQLT\r', but it fails. See the attached screenshot.

I suspect the problem is the instrument address. I have no problem accessing the clock with VEE Pro. I thought this instrument might not be registered with LabVIEW yet, but I could not find any entry for it.

If you want to use a VISA resource name, you must use the VISA functions. If you want to use the GPIB functions, you must use a plain GPIB address (e.g., '5'). I advise using VISA. You also need to enable remote VISA on the other PC.
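For the VISA route, the local resource name for primary address 7 on board GPIB0 is `GPIB0::7::INSTR`, and NI-VISA can reach an instrument attached to another PC through a remote resource name of the form `visa://host/GPIB0::7::INSTR`, once that PC is set up as a VISA server. The helper below only builds these strings (no VISA call is made); `gpib_resource_name` and the host name used with it are hypothetical.

```c
#include <stdio.h>
#include <string.h>

/* Build a VISA resource name for a GPIB instrument.
   host == NULL gives a local name such as "GPIB0::7::INSTR";
   otherwise a remote NI-VISA name such as "visa://host/GPIB0::7::INSTR".
   Returns the snprintf result, or -1 if host contains a '/'. */
static int gpib_resource_name(char *buf, size_t len, const char *host,
                              int board, int primary_address)
{
    if (host == NULL) {
        return snprintf(buf, len, "GPIB%d::%d::INSTR",
                        board, primary_address);
    }
    if (strchr(host, '/') != NULL) {
        return -1;  /* a host name cannot contain '/' */
    }
    return snprintf(buf, len, "visa://%s/GPIB%d::%d::INSTR",
                    host, board, primary_address);
}
```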

-

Decimal point problems with the Excel Easy Table VI and Excel 2007

Hello

We used the Excel Easy Table VI to transfer LabVIEW measurement data to Excel 2002. We have now upgraded to Excel 2007. Since then, something goes wrong when transferring from LabVIEW to Excel: Excel now seems to ignore the decimal point.

Hi Martin,

This is a known issue with LabVIEW, Excel, and European regional settings.

To work around the problem, do the following:

To transfer the correct data to Excel, uncheck "Use localized decimal point" in Tools » Options » Front Panel.

You then have to use "." instead of "," when entering numbers, for example "0.99" instead of "0,99".
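The symptom is what happens when the decimal separator written by one side does not match the one the other side expects: a parser expecting '.' stops reading "0,99" at the comma, and vice versa. The C sketch below makes this concrete with `strtod`, which in the default "C" locale accepts only '.'. `parse_decimal` is a hypothetical helper that accepts either separator by normalizing ',' to '.'; it does not handle thousands separators.

```c
#include <stdlib.h>
#include <string.h>

/* Parse a decimal number written with either '.' or ',' as separator.
   Assumes the default "C" locale, where strtod expects '.'.
   Returns 0 and stores the value in *out, or -1 if the text is invalid. */
static int parse_decimal(const char *text, double *out)
{
    char tmp[64];
    size_t n = strlen(text);
    if (n >= sizeof tmp) {
        return -1;
    }
    memcpy(tmp, text, n + 1);
    for (size_t i = 0; i < n; ++i) {
        if (tmp[i] == ',') {
            tmp[i] = '.';  /* European decimal comma -> decimal point */
        }
    }
    char *end = NULL;
    *out = strtod(tmp, &end);
    return (end != tmp && *end == '\0') ? 0 : -1;
}
```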

Stefan

-

Strange corrupted data when passing to a DLL

I'm working on an application that needs to communicate with a set of instrument drivers in a DLL. Mostly it works very well, and I can transfer data back and forth between LabVIEW and the DLL without any problem, except for one very frustrating bug. I created a small test DLL with only 'Set' and 'Get' functions and another function that prints a report of the values inside the DLL. This library (with source code) and a set of VIs similar to the ones I use in the main application are attached.

The device settings are stored in the DLL in a series of C structs, for which I made corresponding clusters in LabVIEW. Using the 'MoveBlock' function to pass data to and from the DLL by reference, most of the clusters/structs transfer perfectly. One of them even contains strings (char arrays in C), which are tricky to deal with, but it works very well.

Unfortunately, there is a single cluster ("General settings" in the attached VIs) that contains 13 numeric items, mostly integers, with two doubles at the end ("Acq. delay" and "Hold after"). All the integer values transfer to the DLL successfully, but the doubles are corrupted, usually appearing as gibberish such as #.#e-315. In the Visual Studio debugger, with breakpoints set to check the values inside the DLL, they generally appear as denormalized (#DEN). The corrupted values change when the LabVIEW inputs are changed; sometimes both values in the DLL change when only a single input is adjusted. In addition, when the data is passed back to LabVIEW, the correct values are retrieved, so the binary representation must be preserved in the transfer, even if it is somehow misinterpreted in the DLL. Even more curious, another cluster in the application ("Presets") also contains integers and doubles, and these values all transfer to the DLL successfully, so there doesn't seem to be anything special about the doubles.

I tried on two different computers (Win7, one 32-bit and one 64-bit) and see exactly the same thing; even the corrupted values are identical. If anyone has experience with something like this or can offer suggestions, I'll be very grateful, because I am pulling my hair out over this. I'm sorry the attached VIs are so complicated; I wanted to keep as much structure as possible from the actual application. The main VI, 'TestLIB_SetAndGet.vi', sets all the values in the DLL, has the DLL write a text file with some of them, and then copies the data back to LabVIEW. As mentioned above, the relevant parameters are "Acq. delay" and "Hold after" in the 'General Settings' cluster. Their values appear on lines 4-5 of the report. Also note that the doubles 'Preset event' and 'Preset time' in the 'Presets' cluster are transferred correctly; they appear on lines 17 and 16 of the report.

Thanks in advance for your suggestions!

It looks like an alignment problem.

Read this article: http://zone.ni.com/reference/en-XX/help/371361H-01/lvconcepts/how_labview_stores_data_in_memory/ (especially the section on clusters)

Try adding #pragma pack(1) before the struct declarations.
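To illustrate the alignment explanation: on 32-bit Windows, LabVIEW stores clusters byte-packed (as the linked article describes), while a C compiler by default aligns a double to 8 bytes. If the fields before the doubles add up to a size that is not a multiple of 8, the compiler inserts padding, and the DLL reads each double 4 bytes away from where LabVIEW wrote it. Each double the DLL sees is then assembled from halves of two neighbouring fields, which would be consistent with the denormals and with one input changing both values; a cluster whose doubles already sit on 8-byte boundaries (perhaps "Presets") would be unaffected. The structs below are a hypothetical stand-in for "General settings" (13 int32 fields, then the two doubles); `#pragma pack(push, 1)` / `pop` is a scoped form of the suggested `#pragma pack(1)`.

```c
#include <stddef.h>
#include <stdint.h>

/* Default alignment: the compiler may insert 4 bytes of padding
   so that acq_delay starts on an 8-byte boundary. */
typedef struct {
    int32_t items[13];   /* hypothetical stand-in for the integer fields */
    double acq_delay;
    double hold_after;
} GeneralSettingsAligned;

/* Byte-packed layout, matching a 1-byte-aligned LabVIEW cluster. */
#pragma pack(push, 1)
typedef struct {
    int32_t items[13];
    double acq_delay;
    double hold_after;
} GeneralSettingsPacked;
#pragma pack(pop)

/* Byte offset of the first double in each layout. */
static size_t aligned_offset(void)
{
    return offsetof(GeneralSettingsAligned, acq_delay);
}

static size_t packed_offset(void)
{
    return offsetof(GeneralSettingsPacked, acq_delay);
}
```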

Andrey.

-

CPU-accessible registers in LabVIEW FPGA (FlexRIO)

Hello, I wonder whether the following behavior is possible in LabVIEW. I suspect it is not.

System description: a CVI application that communicates with a FlexRIO SMU through controls and indicators.

Problem: the design of a CPU-FPGA interface specification that lists the "registers" as combinations of read-only and read/write bit fields.

Example:

According to the specification, there should be a 32-bit register. Bits 31:16 are read-only and bits 15:0 are read/write, from the CPU's perspective. In the LabVIEW world, I would just create a uint16 control and a uint16 indicator and be done with it.

However, to meet the traditional specification (written for microprocessor buses), a 32-bit read of an address should return the full contents of the 32-bit register at that location (implemented as flip-flops in the FPGA, with appropriate memory mapping inside the FPGA). Likewise, a 32-bit write to an address must store the values in that register (with writes to bits 31:16 properly masked inside the FPGA).
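The register semantics described above, a full 32-bit read and a write that only affects the read/write bits, amount to a masked update. A C sketch of that behavior follows; the masks come from the example bit ranges, the function names are hypothetical, and nothing here implies how LabVIEW FPGA would implement it.

```c
#include <stdint.h>

#define RW_MASK 0x0000FFFFu  /* bits 15:0, writable by the CPU */
#define RO_MASK 0xFFFF0000u  /* bits 31:16, read-only for the CPU */

/* CPU write: bits 31:16 of the written value are ignored (masked). */
static void cpu_write(uint32_t *reg, uint32_t value)
{
    *reg = (*reg & RO_MASK) | (value & RW_MASK);
}

/* CPU read: returns the full 32-bit contents, RO and RW fields together. */
static uint32_t cpu_read(const uint32_t *reg)
{
    return *reg;
}

/* FPGA-side update of the read-only status field (bits 31:16). */
static void fpga_set_status(uint32_t *reg, uint16_t status)
{
    *reg = (*reg & RW_MASK) | ((uint32_t)status << 16);
}
```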

Is it possible to have a single address (basically, a single LabVIEW block diagram component) that lets me achieve this behavior? It seems to me that the only solution is to pack my registers with bit fields that are either all read-only or all read/write, to fit the LabVIEW paradigm. That would mean the spec has to go back to be rewritten and approved again.

Thanks in advance,

-J

Thanks for the detailed explanation. I am familiar with reading and writing FPGA registers; I recently did a lot of non-LabVIEW work with an Altera FPGA. However, I haven't used the CVI interface to LabVIEW FPGA; I've only used the LabVIEW interface. I'm not sure whether your question is about the CVI interface, LabVIEW FPGA, or both.

JJMontante wrote:

So, to restate my original question: is there a mechanism using controls and indicators where both the FPGA AND the CPU can write to the same set of flip-flops in the FPGA? If I use an indicator, the FPGA can write to it but the CPU cannot. If I use a control, the CPU can write to it but the FPGA cannot. Is this correct?

In LabVIEW FPGA, a control and an indicator are essentially identical. You can write to a control, or read from an indicator, using a local variable in the FPGA code. It is common to use a single front-panel element to transfer data in either direction, and it doesn't matter whether it's a control or an indicator. For example, a common strategy uses a Boolean front-panel element for handshaking. The CPU writes a value to a numeric control and then sets the Boolean to true to indicate that new data is available. The FPGA reads the numeric value and then sets the Boolean to false, telling the processor that the value has been read. The LabVIEW FPGA interface (CPU side) also exposes all front-panel elements of the FPGA VI, whether controls or indicators; they can all be read and written.
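The Boolean handshake described above can be modelled in C with two fields standing in for the numeric and Boolean front-panel elements. This is a single-threaded sketch with hypothetical names; on real hardware each side would poll the flag in its own loop, and the scheme relies on the data being written before the flag is set.

```c
#include <stdbool.h>
#include <stdint.h>

typedef struct {
    int32_t value;    /* the numeric front-panel element */
    bool data_ready;  /* the Boolean handshake element */
} Mailbox;

/* CPU side: returns false if the FPGA has not consumed the last value. */
static bool host_send(Mailbox *mb, int32_t value)
{
    if (mb->data_ready) {
        return false;         /* previous value still pending */
    }
    mb->value = value;        /* write the data first ... */
    mb->data_ready = true;    /* ... then signal that it is available */
    return true;
}

/* FPGA side: if new data is available, copy it out and clear the flag. */
static bool fpga_poll(Mailbox *mb, int32_t *out)
{
    if (!mb->data_ready) {
        return false;
    }
    *out = mb->value;
    mb->data_ready = false;   /* tell the CPU the value has been read */
    return true;
}
```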

Does that answer your question?

-

Hello world

I want to deploy my TestStand project and get a strange error when building the image.

For many additional files with suffixes such as .tso, alias, lvlpb, exe, dll..., the deployment tool throws the error message "Unable to locate all subVIs from saved VIs because a subVI is missing or the VI is not saved in the current version of LabVIEW." (see also the attached picture).

If I exclude these files from my distribution, or set them to "Include without processing or dependencies", the build works fine.

After the error, I checked the Temp folder. The temporary LabVIEW project had not been created yet.

I used the deployment/installation the same way in a former project and had no problems like this...

I use Win7 / LV2013 SP1 & TestStand 2013 f1 (5.1.240).

Could someone help? Thank you!

Finally, I tried the build with LV2015 & TS2014: no problems. Everything works fine.

-

How to transfer a LabVIEW activation to another computer

We bought LabVIEW 8.5 for our department. It works well, but the PC has to be formatted, so we need to move the activated LabVIEW to another system. How do we transfer the activation to another system, or how do we obtain a new activation code based on the serial number and the computer ID? The vendor is not responding.

Open NI License Manager (Programs » National Instruments » NI License Manager). Then select your active LabVIEW license (shown in green), right-click it, and deactivate it. This releases the original activation. Now you can format the PC without losing the activation.
