Does Windows 10 use fewer resources than Windows 8.1?

Does Windows 10 use fewer resources than Windows 8.1 on low-end computers?

I used Windows 8.1 very briefly on my new Acer Aspire E 11 (ES1-111M-C72R), then upgraded to Windows 10, and Windows 10 seems to run a bit smoother.

When I took Windows 7 off my old Toshiba laptop (Core 2 Duo) and put Windows 10 on it, it felt like a new laptop.

This should answer your question.


Similar Questions

-

I was recently moved (forced) from Adobe SendNow to Adobe Send. Using the service to send files to customers and receive send and download receipts has proved invaluable later down the line. Is there a way to activate this feature in the new Adobe Send product?

Hi cmagroup,

Please see this FAQ: Adobe Send | Getting Started

I hope this helps. Let us know if you need further help.

Kind regards

Florence

-

The most resource-efficient OS

Guys, what is the most resource-efficient operating system you have found to run on ESXi on smaller servers, or where resources matter?

I want to use it for RDP sessions running Sage Accounts and Microsoft Outlook.

Answers gratefully received.

Knowledge is a wonderful thing.

Neonewbie

Choose the applications first, then find the OS that supports them. XP requires fewer resources than Windows 7, but if the applications do not work on XP, then you have a problem.

-

DataSocket VI on the client side just stops

Hi guys, I'm trying to send microphone-captured audio to another PC (two PCs connected by a crossover cable). I tried various options such as TCP and DataSocket, but nothing has worked. With TCP, it always shows an input buffer overflow. With DataSocket, it shows no errors, but the VI on the remote PC just stops. I was not able to understand the problem. I'm using LabVIEW 2009 (version 9.0). I have attached two VIs for testing. Help, please. Thank you. Kind regards.

Hi Rocky,

I looked at your code and made some changes. First of all, I couldn't tell why you were dividing the samples per channel by two for the sound playback function, so I disabled that. In addition, the "initial data" you fed into the first DataSocket Write VI and into the default data input of the DataSocket Read function was a single waveform cluster. Audio data sent through the DataSocket connection is an array of waveform clusters (one cluster per channel). This probably caused your DataSocket problems.

I set the sound configuration values to what I found in the "Continuous Sound Input.vi" example. I also added some graphs for visual feedback.

Note that you must have the DataSocket Server running (on Windows: Start -> Programs -> National Instruments -> DataSocket -> DataSocket Server).

If these changes don't work, I would look at network/firewall issues. Also, if you want lower latency, consider using UDP directly. It should need fewer resources than DataSocket (and with a crossover connection, I guess you won't have much to worry about regarding data loss).
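The UDP suggestion can be sketched outside LabVIEW. Below is a minimal Python illustration, assuming 16-bit signed little-endian samples and an arbitrary 256-sample chunk per datagram (both are assumptions, not values from the thread). The demo runs over loopback only; on a real link UDP gives no delivery or ordering guarantee, so a receiver must tolerate missing chunks.

```python
import socket
import struct

CHUNK = 256  # samples per datagram (assumed; keeps datagrams well under a typical MTU)


def send_chunks(sock, addr, samples):
    """Send 16-bit signed samples as datagrams of at most CHUNK samples each."""
    for start in range(0, len(samples), CHUNK):
        chunk = samples[start:start + CHUNK]
        sock.sendto(struct.pack(f"<{len(chunk)}h", *chunk), addr)


def receive_chunk(sock):
    """Receive one datagram and unpack it back into a list of samples."""
    data, _ = sock.recvfrom(65535)
    return list(struct.unpack(f"<{len(data) // 2}h", data))


if __name__ == "__main__":
    rx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    rx.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    rx.settimeout(2.0)         # fail instead of hanging if a datagram is lost
    tx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)

    samples = [i % 1000 - 500 for i in range(600)]
    send_chunks(tx, rx.getsockname(), samples)

    received = []
    while len(received) < len(samples):
        received.extend(receive_chunk(rx))
    print(received == samples)
    tx.close()
    rx.close()
```

Sending fixed-size chunks keeps each datagram small, so a lost packet costs only a few milliseconds of audio rather than stalling the stream the way a blocked TCP buffer can.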

-

MSN news feed for Vista Sidebar gadget

I was getting two identical news feed lines for each item, so I uninstalled the Sidebar news feed gadget, and now I can't get any additional gadgets to load in the Sidebar gadget gallery. What am I doing wrong? I need very specific instructions to follow. Thank you.

Hello

To add gadgets, click the + sign at the top middle of the Sidebar.

Try these steps to clean up corruption and to repair or replace missing/damaged system files.

Run Disk Cleanup: Start - All Programs - Accessories - System Tools - Disk Cleanup

Start - type command in the search box - find Command Prompt at the top - RIGHT-CLICK it - RUN AS ADMINISTRATOR

sfc /scannow

How to analyze the log file entries that the Microsoft Windows Resource Checker (SFC.exe) program generates in Windows Vista (cbs.log)

http://support.Microsoft.com/kb/928228

Then run Check Disk: schedule it to run at the next boot, click Apply/OK your way out, then restart.

How to run Check Disk at startup in Vista

http://www.Vistax64.com/tutorials/67612-check-disk-Chkdsk.html

--------------------------------------------------------------

Then proceed as follows:

How to restore Windows Sidebar

http://support.Microsoft.com/kb/963010

If you need to do it manually:

Log on as Admin - Start - type COMMAND in the search box - find Command Prompt at the top - RIGHT-CLICK it - RUN AS ADMINISTRATOR.

Then change to the Sidebar folder by copying and pasting this command:

CD C:\Program Files\Windows Sidebar

Press Enter (this assumes your Sidebar is on C:; otherwise use the letter of the drive it is on). The prompt should then read: C:\Program Files\Windows Sidebar>

Then copy/paste each of the following commands in turn, pressing Enter after each one:

regsvr32 /u sbdrop.dll

regsvr32 /u wlsrvc.dll

regsvr32 atl.dll

regsvr32 sbdrop.dll

regsvr32 wlsrvc.dll

The Sidebar should now work.

Type Sidebar in the search box - find it at the top - RIGHT-CLICK it - RUN AS ADMINISTRATOR

========================================================

Try loading this clock: Download - SAVE - go to where you saved it - right-click it - OPEN WITH - Sidebar - then click the + at the top middle of the Sidebar to add it. This clock is worth getting because it has more features and consumes fewer resources than the default Sidebar clock.

Digital Dutch Clock 3.2 (an analog dial, so it looks even better than the Windows one.)

http://Gallery.live.com/liveItemDetail.aspx?Li=a91668c2-c82c-48cb-8939-a8d20af60347&BT=1&PL=1

I hope this helps.

Rob - Bicycle - Mark Twain said it is good.

-

I am a Windows Vista user. I have noticed the gadgets changing occasionally. First, they appeared on the left side of the desktop before appearing on the right side. And now one of them (the clock) is completely missing, even though I never tried to change their configuration.

Hello

Right-click the Sidebar icon in the notification area (near the clock, bottom right of the screen) - Properties - "Sidebar is on this side of screen" - Right - click APPLY/OK. Then right-click it again - Add Gadgets (or click the + symbol at the top of the Sidebar) and add the clock back in.

This clock is worth getting because it has more features and consumes fewer resources than the default Sidebar clock.

Digital Dutch Clock 3.2 (an analog dial, so it looks even better than the Windows one.)

http://Gallery.live.com/liveItemDetail.aspx?Li=a91668c2-c82c-48cb-8939-a8d20af60347&BT=1&PL=1

I hope this helps.

Rob - Bicycle - Mark Twain said it is good.

-

We have a particular server that hosts several Synergex databases. There are also a number of flat files that get deleted/copied/added every day. According to Windows Server 2008 R2, the disk is highly fragmented. This server lives on an EqualLogic PS6000XV SAN. It is part of a RAID 50 set, where it has its own dedicated volume. The VMDK is thick-provisioned.

When it was a physical server and the data was hosted on Server 2003 with a local RAID 5, we had installed Diskeeper and could run a defrag every week. Would that be a good idea now? Looking through the forums, I've seen arguments both ways. Most are quite dated, so I wondered what the current consensus is. I see three ways to accomplish this:

1. Defragment the SAN. Is that even possible? Does anyone know if EqualLogic has a tool for that?

2. Defragment the VMDK? Again, is that voodoo?

3. Defragment the file system inside the VMDK, using tools like Diskeeper or something similar?

or

4. Just leave well enough alone, because Windows doesn't know what it's talking about.

When it was physical, we saw significant performance increases after the first defragmentation. Either way, it runs a lot better in the virtual environment, with fewer resources, than it did as a physical server. Virtual stuff never ceases to amaze me.

"We have a particular server that hosts several Synergex databases... This server lives on an EqualLogic PS6000XV... part of RAID 50, where it has its own dedicated volume. The VMDK is thick-provisioned."

The expected outcome of defragmentation is what to consider. As André said, there is no certainty about what the EqualLogic does with blocks, and in any case, even if there were, because the volume is spread over 14 spindles, what does sequential access even mean? Does the EqualLogic provide storage for anything else? Most databases tend to show a random I/O load because they touch (scattered) data tables, so how the data is physically organized just doesn't really matter.

The EqualLogic data placement system is clever enough on its own: for example, if an SSD array were added in the future, the system could migrate hot blocks to that array.

Replication is also extremely important. IIRC, EqualLogic uses block-level change tracking with a block size of 64 MB. So at the end of the replication interval, every 64 MB block that has been touched by a write will be transmitted over the WAN. Clearly a defrag will touch a massive number of blocks and could make this impractical.

Regarding "There are also a number of flat type files that get deleted/copied/added every day": this may also need to be considered. For example, you might consider running that temporary space in folders with NTFS compression enabled. If these are text files, this can be very effective at reducing the disk space required (and therefore the number of blocks sent for WAN replication). Another option would be to run these from temporary space provided by a file server on a volume that is not replicated.

Hope that helps!

-

Questions about High Throughput Math functions

Hello

I'm just trying to understand what advantages the High Throughput Math functions have, so I have a few questions.

I am always talking about being inside a single-cycle Timed Loop (SCTL).

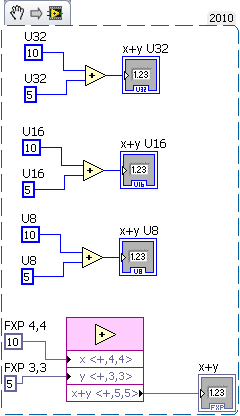

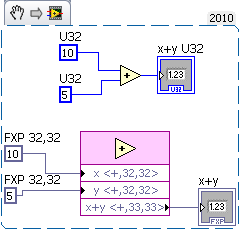

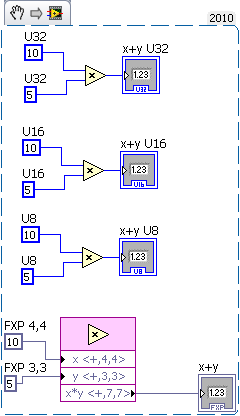

1. In the image you can see four Add functions. The U32 one must use more resources than the U16 one, which uses more than the U8 one. But does my High Throughput Math FXP version use fewer resources than the U8 version?

2. Which of these four Add functions will take the least time to execute?

3. If I add two 32-bit numbers, once with the normal Add and once with the High Throughput Add, which function uses fewer resources and which will be fastest?

4. What if I had a multiplication instead? If I understand the concept correctly, a multiplication will be done with a DSP48E. This logic block can multiply a 25-bit number by an 18-bit number. So the U32 multiply will use 2 DSP48Es and the other three functions would each use one DSP48E.

I guess the U32 version will be slower to execute?

What about the other three: will they have equal execution speed, or will the versions with smaller data types be faster?

With greetings

Westgate

@JLewis: thank you for your answer, it helped a lot in understanding the HT functions!

-

Use of shared variables with an RT project / host VI

Hi all

I have a small question which is certainly easy to answer for those who have already worked with an RT VI containing two loops (a time-critical one and a non-deterministic one) and a user interface deployed on a host PC.

Q: Is there an advantage to acquiring data in the time-critical loop, passing it through a single-process shared variable (RT FIFO enabled) to the non-deterministic loop, and only from there sending it to the host computer through a network-published shared variable (RT FIFO enabled), rather than using a network-published shared variable that passes the data directly to the host PC?

Thanks in advance,

David

Hi Kolibri,

The advantage of having the network-published variables in your non-deterministic loop, as opposed to the time-critical loop, is that it reduces the resources needed to run the time-critical loop.

Sending data to a different loop in the same program with single-process shared variables or RT FIFOs requires fewer resources than sending data over the network. This allows the high-priority loop to execute more deterministically, without having to handle network communication.

Kind regards

Stephen S.
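The split described above (the time-critical loop hands data off locally, a lower-priority loop does the network work) is a producer/consumer pattern. The sketch below is only an analogy in Python: a bounded `queue.Queue` stands in for an RT FIFO, and a plain list stands in for the network-published variable. Python threads are not deterministic, so this shows the structure, not real-time behavior; the FIFO depth of 64 is an arbitrary assumption.

```python
import queue
import threading

FIFO_DEPTH = 64  # assumed depth; the bounded queue plays the role of an RT FIFO


def acquisition_loop(fifo, n_samples, dropped):
    """High-priority loop: acquire and hand off, never touch the network."""
    for i in range(n_samples):
        sample = i * 0.5  # placeholder for a real acquisition
        try:
            fifo.put_nowait(sample)  # never block the time-critical loop
        except queue.Full:
            dropped.append(sample)   # record the overflow instead of waiting
    fifo.put(None)  # sentinel: acquisition finished (consumer is draining, so this returns)


def publishing_loop(fifo, published):
    """Low-priority loop: drain the FIFO and do the slow 'network' work."""
    while True:
        sample = fifo.get()
        if sample is None:
            break
        published.append(sample)  # stand-in for writing a network-published variable


if __name__ == "__main__":
    fifo = queue.Queue(maxsize=FIFO_DEPTH)
    published, dropped = [], []
    consumer = threading.Thread(target=publishing_loop, args=(fifo, published))
    consumer.start()
    acquisition_loop(fifo, 1000, dropped)
    consumer.join()
    print(len(published) + len(dropped))
```

The design choice mirrors the answer: the producer uses a non-blocking put, so a slow consumer costs dropped samples rather than jitter in the high-priority loop.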

-

According to this article, LabVIEW stores Boolean values as a U8 in memory:

http://zone.NI.com/reference/en-XX/help/371361J-01/lvconcepts/how_labview_stores_data_in_memory/

My question is: what happens when you compile for the FPGA? More precisely, if I make 8 lookup tables with a Boolean data type, do they take the same amount of resources as a single U8 lookup table? Or do they take fewer resources than 8 U8 lookup tables?

Here you can find out exactly how much space different things use on the FPGA target.

Normally, an X-bit logical structure consumes X bits on the FPGA; there is no such limitation (no padding to a U8) in this regard.

Shane.
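The point that an X-bit structure costs X bits can be illustrated in software: eight 1-bit Boolean flags fit exactly into the 8 bits of one U8. This Python sketch only demonstrates the bit widths; it says nothing about how the LabVIEW FPGA compiler actually maps arrays or lookup tables onto the fabric, which is what the linked resource page covers. The function names are illustrative.

```python
def pack_bools(flags):
    """Pack up to 8 Booleans into one U8 value; bit i holds flags[i]."""
    if len(flags) > 8:
        raise ValueError("a U8 holds at most 8 one-bit flags")
    value = 0
    for i, flag in enumerate(flags):
        if flag:
            value |= 1 << i
    return value


def unpack_bools(value, count=8):
    """Recover `count` Booleans from the low bits of an integer."""
    return [bool((value >> i) & 1) for i in range(count)]


if __name__ == "__main__":
    flags = [True, False, True, False, False, False, False, False]
    packed = pack_bools(flags)
    print(packed, packed.bit_length() <= 8)
    print(unpack_bools(packed) == flags)
```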

-

Calculating hardware for a database

Hi all

I'm a new Oracle DBA in the industry, without a lot of database experience. I'm confused about something. Maybe it's a stupid question, but it keeps turning over in my mind. Please help me solve it.

(1) When a new project comes in, I'm confused about how to size the cache/RAM and CPU required, although I can calculate the hard drive capacity. For example, my application users say the total TPS (transactions per second) is 1000 (200 updates, 300 deletes, 100 inserts and 200 selects). In this case, how can I calculate the hardware capacity, and how should I answer them?

(2) This is the reverse of the first question. Suppose a database is running and everything works fine. I want to calculate the TPS (transactions per second), meaning how many selects/updates/inserts/deletes run per second. How do I calculate or identify the TPS?

Thanks in advance...

Reg,

Hard
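For question (2), one common approach is to sample cumulative counters twice and divide the difference by the elapsed time. In Oracle, the `V$SYSSTAT` view exposes cumulative statistics named `user commits` and `user rollbacks`; the Python below only does the arithmetic on two snapshots you have already read, and the snapshot values are hypothetical. Note that this counts transactions, not individual SELECT/INSERT/UPDATE/DELETE statements (statement rates would need other statistics such as `execute count`), and the counters reset when the instance restarts.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """Cumulative counters read at one moment (e.g. from V$SYSSTAT)."""
    taken_at: float       # seconds on any consistent clock
    user_commits: int
    user_rollbacks: int


def transactions_per_second(first: Snapshot, second: Snapshot) -> float:
    """TPS = (change in commits + change in rollbacks) / elapsed seconds."""
    elapsed = second.taken_at - first.taken_at
    if elapsed <= 0:
        raise ValueError("the second snapshot must be taken after the first")
    transactions = ((second.user_commits - first.user_commits)
                    + (second.user_rollbacks - first.user_rollbacks))
    return transactions / elapsed


if __name__ == "__main__":
    # Hypothetical readings taken 60 seconds apart.
    t0 = Snapshot(taken_at=0.0, user_commits=1_000_000, user_rollbacks=2_000)
    t1 = Snapshot(taken_at=60.0, user_commits=1_059_400, user_rollbacks=2_600)
    print(transactions_per_second(t0, t1))
```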

Thank you all, I got my answer...

Oracle Capacity Planning

Introduction - Database management

The best way to perform Oracle capacity planning is with an Oracle capacity planning worksheet. Database management has grown over the years from the simple management of a few tables and indexes to a complex set of interlocking responsibilities, ranging from managing database objects to participating in large enterprise decisions on hardware, software and development tools.

To fully discharge these functions, the modern Oracle DBA needs a large set of skills. In the following lessons, we will discuss the skills needed, and specifically how they apply to an Oracle DBA.

System capacity planning

In a greenfield operation (one where you are there before the hardware and the database), a DBA will have a critical role to play in planning and configuring the new system. Even in existing environments, the ability to plan for new servers, new databases, or upgrades to existing databases and systems is crucial.

Essentially the DBA should be concerned with two main questions:

- Getting enough server to ensure adequate performance

- Sufficient backup and recovery capability to perform backups and restores within the required time constraints

All of this actually falls under the heading of resource and capacity planning.

Resource and capacity planning

Oracle is a resource-intensive database system. The more CPU, memory and disk resources you can provide Oracle, the better it performs. Resource planning for Oracle becomes more a game of "how much can we afford to buy" than "what is the minimum configuration". A minimally configured Oracle server won't perform effectively.

Resource specification for Oracle

When specifying resources, there are several questions that should be answered:

- How many users will use the system today and in the future?

- How much data will the system contain, both now and in the future (do we know the growth rate)?

- What response times are expected?

- What availability is planned for this system?

Why are these issues important?

- How many users will use the system today and in the future?

This question is important because it affects how much processing power is going to be necessary. The number of users will determine the number and speed of the processors, the memory size, and the related network configuration.

- How much data will the system contain, both now and in the future (do we know the growth rate)?

This question is important because it determines the disk needs: how much storage will be needed for the data we have today and how much more will be needed for growth. The answer also helps determine how much memory will be needed.

- What response times are expected?

This question is important because it drives the number, type and speed of CPU resources, as well as network issues. It will also drive disk configuration issues such as the number and speed of disks, the number and speed of controllers, and disk partitioning decisions.

- What availability is planned for this system?

This question is important because the required availability drives the RAID configuration (RAID 1, 0, 0/1, 5), the type of backup scheduled (cold, hot) and parallel server issues. Requirements differ if the system only needs to be available during business hours Monday to Friday, or if it must be available 24x7. This also drives the type of backup media: is a single tape drive all that is necessary, or is a high-speed, multichannel, tape-stacker or silo-based solution needed?

To perform capacity planning properly, system administrators, database administrators and network administrators must work together.

Step 1: Size of the Oracle database

A starting point for the whole capacity planning process is knowing how many databases, and of what size, will reside on a given server. The physical size of the tables, indexes, clusters, and LOB storage areas will play an essential role in sizing the overall database, including the shared global memory areas and the disk farm. DBAs and developers must work together to properly size the physical database files. The database design will also drive the placement and number of tablespaces and other database resources, such as the size and number of redo logs, rollback segments and their associated buffers.

Typically, the SGA database block buffers size out to between 1/100 and 1/20 of the total physical size of the database files. For example, if the physical size of the database is 20 gigabytes, the database block buffers should be about 200 megabytes to 1 gigabyte in size, depending on how the data is used. In most cases, the SGA shared pool would size out to about 20-150 megabytes maximum, depending on the usage model for shared SQL areas (see the next lesson). For a 20-gigabyte system, the redo logs would most likely run between 20 and 80 megabytes; you want them mirrored and probably no fewer than 5 groups. The log buffer to support a 50-megabyte redo log file would be a minimum of 5 megabytes and may be as large as 10 megabytes. The last major factor for the SGA is the sort area size; for this size of database, a sort area of 10 to 20 megabytes is about right (depending on the number and size of sorts). Remember that sort areas can be part of the shared pool or part of the large pool; we'll cover this in a future lesson.
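The 1/100 to 1/20 rule of thumb above is simple arithmetic; this Python sketch reproduces the 20 GB example, treating 1 GB as 1,000 MB to match the round numbers in the text. The function name is illustrative, not part of any Oracle tool.

```python
def block_buffer_range_mb(total_datafile_mb):
    """Rule of thumb from the text: block buffers = 1/100 to 1/20 of total datafile size."""
    low = total_datafile_mb / 100
    high = total_datafile_mb / 20
    return low, high


if __name__ == "__main__":
    low, high = block_buffer_range_mb(20_000)  # 20 GB database, 1 GB = 1,000 MB
    print(f"block buffers: {low:.0f} MB to {high:.0f} MB")
```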

So what have we determined on that basis? A choice of 400 megabytes for the data block buffers, 70 megabytes for the shared pool, redo log buffers of 4 to 10 MB (40 MB) and a sort area size of 10 megabytes. We are looking at a 500-600 megabyte SGA once the non-DBA factors are added in. Since you are not supposed to use more than 60% of physical memory for the SGA (depending on whom you ask), we will need at least a gigabyte of RAM. With this size of database, a single CPU probably won't give enough performance, so we are probably looking at a 4-processor machine at least. If more than one instance is installed on the server, the memory requirements will increase.
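Adding up the example's figures makes the "at least a gigabyte" conclusion explicit: 400 + 70 + 40 + 10 = 520 MB of SGA, and 520 / 0.6 ≈ 867 MB of RAM. A small Python sketch of that arithmetic, using the text's numbers; the dictionary keys are descriptive labels rather than Oracle parameter names, and the 60% ceiling is the rule of thumb quoted above.

```python
import math

# SGA component sizes in MB, as chosen in the worked example.
SGA_COMPONENTS_MB = {
    "block_buffers": 400,
    "shared_pool": 70,
    "log_buffers": 40,
    "sort_area": 10,
}
MAX_SGA_FRACTION = 0.60  # "no more than 60% of physical memory" rule of thumb


def sga_total_mb(components):
    """Sum the individual SGA components."""
    return sum(components.values())


def min_physical_ram_mb(sga_mb, max_fraction=MAX_SGA_FRACTION):
    """Smallest RAM for which the SGA stays within max_fraction of physical memory."""
    return sga_mb / max_fraction


def whole_gb(mb, mb_per_gb=1024):
    """Round a memory size up to whole gigabytes."""
    return math.ceil(mb / mb_per_gb)


if __name__ == "__main__":
    sga = sga_total_mb(SGA_COMPONENTS_MB)
    ram = min_physical_ram_mb(sga)
    print(sga, round(ram), whole_gb(ram))
```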

Step 2: Determine the number and type of users:

Of course, a single-user database will require fewer resources than a thousand-user database. Generally, you will need to look at how much memory and disk each user will need. An example would be to assume that, of an installed base of 1,000 users, only 10 percent will use the database at the same time. That leaves 100 concurrent users; perhaps another 10 percent of those will perform activity requiring sort areas, which brings the number to 10 users, each using (in our previous example) 10 megabytes of memory (100 MB). In addition, each of the 100 concurrent users needs about 200 KB of process space (depending on the activity, OS and other factors), so there is an additional load of 20 megabytes just for user process space. Finally, each user will probably require some amount of disk resource (less if they are client-server or web-based); let's give them 5 MB of disk each to start, which adds up to 5 gigabytes of disk (give or take a meg or two).
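The 1000:100:10 user arithmetic can be written out as a small Python function. The percentages, 10 MB sort area, 200 KB per process and 5 MB of disk per user are the example figures from the text, exposed as parameters so you can substitute your own; the function itself is just an illustration.

```python
def user_sizing(installed_users, concurrent_frac=0.10, sorting_frac=0.10,
                sort_area_mb=10, process_kb=200, disk_mb_per_user=5):
    """Back-of-envelope per-user resource estimate following the text's example."""
    concurrent = round(installed_users * concurrent_frac)
    sorting = round(concurrent * sorting_frac)
    return {
        "concurrent_users": concurrent,
        "sorting_users": sorting,
        "sort_memory_mb": sorting * sort_area_mb,
        "process_memory_mb": concurrent * process_kb / 1000,  # 1 MB = 1,000 KB here
        "disk_mb": installed_users * disk_mb_per_user,
    }


if __name__ == "__main__":
    for key, value in user_sizing(1000).items():
        print(key, value)
```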

Step 3: Determine the hardware requirements to meet the required response time and support the user load:

This step belongs to the system administrator and perhaps the hardware vendor. Given our 1000:100:10 user mix and any required response-time numbers, they should be able to configure a server that performs well. Usually, this will require several multichannel disk interfaces and several physically separate drive bays.

Step 4: Determine the backup hardware needed to support the required availability:

Here again, the system administrator and the hardware vendor will lend a hand in the decision. A backup solution should be developed based on the disk sizes and the maximum allowed recovery time. If there is no way to meet your availability requirements using simple backup schemes, more esoteric architectures can be specified, such as multichannel stripes, hot standby databases or even Oracle Parallel Server. Let's say we have a 24x7 availability requirement with instant failover (no recovery time, because the system is mission-critical). This type of specification would require Oracle Parallel Server in an automated failover configuration. We would also use either a double or a triple disk mirror so that we could split the mirror to perform backups without losing mirroring protection.

Let's compile what we have determined so far:

Hardware: 2-4 CPUs (the highest-speed CPUs we can get) with at least 1 gigabyte (preferably 2) of shared RAM, at least 2 disk controllers each with multiple channels, and 90 gigabytes of disk using a three-way mirror, giving us a 30-gigabyte triple-mirrored array. The systems themselves should have an internal disk subsystem sufficient to support the operating system and its swap and paging requirements. The systems must be able to share disk resources, so they should support clustering. High-speed tape backup to minimize the time the mirror stays split.

Software: Oracle Parallel Server, clustering software, network management software, and backup management software to support the backup hardware.
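The disk line and the split-mirror backup plan from Step 4 reduce to two divisions. This Python sketch (function names are illustrative) shows why 90 GB of raw disk in a three-way mirror yields 30 GB of usable space, and why a triple mirror, unlike a double one, still leaves the live data mirrored while one copy is split off for backup.

```python
def usable_capacity_gb(raw_gb, mirror_copies):
    """Usable space when every block is stored mirror_copies times."""
    if mirror_copies < 1:
        raise ValueError("need at least one copy")
    return raw_gb / mirror_copies


def copies_during_backup(mirror_copies, split_off=1):
    """Copies still protecting live data after splitting some off for backup."""
    return mirror_copies - split_off


if __name__ == "__main__":
    print(usable_capacity_gb(90, 3))  # three-way mirror from the example
    print(copies_during_backup(3))    # triple mirror: live data still mirrored
    print(copies_during_backup(2))    # double mirror: live data unprotected
```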

Capacity and resource planning is not an exact science. Basically, you are aiming at a moving target. The dual Pentium II 200 NT server with 10 GB of 2 GB SCSI disks that I bought for $5k two years ago has a modern equivalent in the Pentium III 400 with a built-in 14 GB drive that my father-in-law just bought for $1k. By the time we specify and purchase a system, it has already been superseded. You should insist on substitution options so that more powerful, less expensive components can be used if they become available during the specification and procurement phases.

Reg,

Hard...

-

Max vCPUs per guest relative to total host pCPUs?

Our hosts have a total of 8 cores (2 quad-cores). In the past I've cautioned against creating 8-vCPU guests (or at least kept them very limited), fearing the potential CPU performance impact on the whole host.

With newer software and CPU architectures, I'm reviewing whether this is still a valid concern. As we take on heavier workloads, some guests may need 8 vCPUs, so I either need to be confident that they can be supported with our current host CPU count, or consider adding physical CPUs.

I plan to run some lab tests and measurements, but does anyone have a rule of thumb, experience, white paper or advice in this regard? Is it ever a good idea to have a guest VM configured to use all the cores on a given host?

Application vendors' minimum CPU (vCPU) requirements are something everyone faces. I'm in this battle at the moment: the vendor says that if we want to run their application virtualized, we should dedicate resources to the virtual machine. I can't take the credit, but Tom gave me a great idea: give this particular virtual machine what it needs if you must, but start low, as Troy suggested. Starting low, you can see whether the system runs well with fewer resources than requested; if it doesn't, or you need to solve problems, you have the means to increase them.

-Kyle

«"RParker wrote: I guess I was mistaken, everything CAN be virtualized"»

-

Overhead of Movie Clips vs Sprites

I want to understand something, for no other reason than that I just want to understand it.

Again and again, I read that Sprites use fewer resources than MovieClips. But nobody explains why. They just say to use a Sprite if the MovieClip doesn't need a timeline, since a Sprite is less demanding on the CPU.

Let's say you want to pull an object from the library and change its size (and let's say that, in symbol-editing mode, the symbol contains only a picture):

var myMC:MovieClip = new thing();
addChild(myMC);
myMC.scaleX = 1.2;
myMC.scaleY = 1.2;

COMPARED TO

var mySprite:Sprite = new thing();
addChild(mySprite);
mySprite.scaleX = 1.2;
mySprite.scaleY = 1.2;

What happens in memory that gives the MovieClip more overhead? Does something extra get exported when the movie is published with unnecessary MovieClip instantiations rather than Sprites? Does Flash Player have to do additional work with MovieClip declarations that it wouldn't do if the same objects were declared as Sprites? Or what?

I realize the difference in overhead is probably negligible with a few of these objects. But if you have several hundred, why is a Sprite better? What happens with a MovieClip, but not with a Sprite, that increases the amount of overhead?

Thanks for any help to understand this!

The timeline, and the dynamic nature it requires. There is no timeline in a Sprite. It is 1 frame with as many layers as you like (objects on the display list).

Probably as much as the timeline and the functions needed to deal with it, MovieClip is a dynamic class, while Sprite isn't. That's huge. Anything marked dynamic is subject to expanding and shrinking on the fly, which causes excess, less predictable memory allocation. A Sprite doesn't suffer from this, because you cannot change its default shape without extending it, and therefore the compiler knows exactly how to allocate memory efficiently for it.
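Python has a rough analogue of the dynamic/sealed distinction, offered here only as an analogy (Flash Player's object model is not Python's): an ordinary class gives each instance a `__dict__` that can grow at run time, while a class with `__slots__` has a fixed attribute layout and rejects new attributes. The class names below are made up for illustration.

```python
class DynamicThing:
    """Ordinary class: each instance has a __dict__ that can grow at run time."""

    def __init__(self):
        self.scale_x = 1.0
        self.scale_y = 1.0


class SealedThing:
    """Class with __slots__: fixed attribute layout, no per-instance __dict__."""

    __slots__ = ("scale_x", "scale_y")

    def __init__(self):
        self.scale_x = 1.0
        self.scale_y = 1.0


if __name__ == "__main__":
    dynamic, sealed = DynamicThing(), SealedThing()
    dynamic.label = "added at run time"  # accepted: stored in the instance __dict__
    try:
        sealed.label = "added at run time"  # rejected: no slot named 'label'
    except AttributeError:
        print("SealedThing rejects new attributes")
    print(hasattr(dynamic, "__dict__"), hasattr(sealed, "__dict__"))
```

As with a sealed AS3 class, the fixed layout lets the runtime allocate a known, compact structure per instance instead of a growable table.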

MovieClips are usually created in the Flash IDE. A designer uses the timeline and, over a number of frames, creates whatever animation they want. The MovieClip class has to support a large number of features that a Sprite doesn't. You can't call gotoAndPlay() on a Sprite, etc.

This does not mean that a Sprite has no frames. You can attach an Event.ENTER_FRAME loop to a Sprite and see that it runs at the document frame rate, so you can animate things with code inside a Sprite just as if it were a MovieClip. You cannot create a timeline in code, so you don't need the functions that only a timeline requires.

These costly requirements (dynamic behavior and extra functionality) are overhead that degrades performance; a Sprite avoids them.

http://help.Adobe.com/en_US/FlashPlatform/reference/ActionScript/3/Flash/display/MovieClip.html

http://help.Adobe.com/en_US/FlashPlatform/reference/ActionScript/3/Flash/display/sprite.html

-

Remote XML Dataprovider for the tree control

I'm trying to make a Tree control load its XML node list from an external file called "nodes.xml" instead of from XML inside the application. I have tried editing code snippets I've found in various sources, and this is the current iteration; I'm stuck. Please advise on how to solve this problem!

Note: In the XML itself I tried both with and without the wrapping <mx:XMLList> tags; neither seems to work. Here is a link to a live demo: http://81.100.103.105/flex_dev/treeExample.html

Thank you very much.

Alex, I was able to get it working by cleaning up the XML file a little. With the XMLList and the <?xml ?> lines removed, it looks like this:

FWIW, if the XML is static (that is, it won't change at run time), you could consider using the <mx:Model> tag. It uses fewer resources than URLLoader, and it bakes the XML into the SWF at compile time.

HTH,

Matt Horn

Flex docs

-

Accessing a Time Capsule over the Internet (Mac or Windows PC)

Hi, I have a Time Capsule, and I want to create a folder and be able to access it from anywhere on the Internet (whether from a Mac or a Windows PC). I have read several posts and searched Google, but I am still unable to set up the Time Capsule. Can an experienced user explain it to me step by step?

Thank you

SGL

You need to tell us a few things.

1. Is the TC the only router in the network?

If not, the configuration is done entirely on the main modem/router combo.

Without a public IP address on the WAN interface of whatever your router is, you will not be able to access it remotely. For the most part, wireless, satellite or shared building Internet connections will not always allow remote access.

2. Double NAT will never work, so if you already have another modem/router, the TC must be in bridge mode.

3. Apple offers NO WAY to access the TC remotely from a PC/Windows. Nor from iOS, btw... the only method Apple allows is AFP from a Mac.

People do it via SMB with port mapping to the TC... It is poor and does not always work.

The iOS app FileBrowser shows how this is possible.

http://www.Stratospherix.com/support/gsw_timecapsule.php?page=6Remote

I do not recommend this, though... It opens ports in a way that seriously reduces your security.

I repeat... Apple has no PC access.

4. There is a safe way to do it... buy a VPN router and put it in front of the TC. VPN is the globally recognized method for secure remote access. It will work for any device that has a VPN client available... OpenVPN is the easiest in terms of cost, configuration and security.

5. There is another method entirely, and that is to store the files you need to reach in the cloud... The cloud is a better resource than using your own router as a pseudo-cloud.

If you drop the need for PC access, then use BTMM (Back to My Mac) to access the TC.

BTMM is the only Apple-authorized method and the only one likely to work.

Have you even tried yet?

See Tesserax doco on remote access.
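Points 1 and 2 hinge on whether the router's WAN interface has a public address. Python's standard `ipaddress` module can classify an address you read off the router's status page; this sketch does not look anything up, and the example addresses are placeholders. A private result on the TC while another router sits upstream is the double-NAT case; `100.64.0.0/10` is the shared address space used for carrier-grade NAT.

```python
import ipaddress

CGNAT = ipaddress.ip_network("100.64.0.0/10")  # RFC 6598 shared address space


def classify_wan_address(wan_ip: str) -> str:
    """Return a rough reachability class for a WAN address (IPv4 or IPv6)."""
    addr = ipaddress.ip_address(wan_ip)
    if addr.is_global:
        return "public: remote access is possible in principle"
    if addr.version == 4 and addr in CGNAT:
        return "carrier-grade NAT: inbound connections will not reach you"
    return "private/reserved: another NAT device is in front of this one"


if __name__ == "__main__":
    for ip in ("8.8.8.8", "100.64.1.1", "192.168.1.1"):
        print(ip, "->", classify_wan_address(ip))
```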

Maybe you are looking for

-

Windows 7 64-bit network controller driver for G6-2292sa

I have just rebuilt my HP G6-2292sa laptop from Windows 8 to Windows 7 64-bit, and I can't find drivers for the onboard network controller and USB; the other drivers installed OK. Can you please help?

-

Saving data to the microSD card

I just bought a Z3 and inserted a microSD card. My photos and videos are automatically saved to the phone's internal storage, and I have to transfer them to the card manually. Is there a way to have photos and videos saved to the card automatically?

-

A question pertaining to the Tablespace while performing a Transportable Tablespace migration.

Hi all, I'm in the middle of a migration, and I am currently using transportable tablespaces (TTS) for it. The tablespace I need to migrate is 3 TB in size. I've checked endianness and it's fine; both OSes are the same. No violations were found by the transport check, th

-

How to assign the value to the text field dynamically

Hello, I created a form that accepts input from the front end. As soon as I select ACCOUNT_CODE using an LOV, I would like to set a display-only field INSTALMENT_AMOUNT to a value available in another table, based on the value of the ACCOUNT_

-

Error Installation Tools (shared folder) - Ubuntu 15.04 VMware [SOLVED!]

Workstation 11.1
Guest operating system: Ubuntu 15.04
Kernel: 3.19.0-15-generic
VMwareTools-9.9.2-2496486

When I try to install VMware Tools in my Ubuntu 15.04 guest, I receive the following compile errors (the shared folder feature does not work): Initi