Activity indicator for loading data model

Hello

I'm looking into using an activity indicator to show the user that my list view is loading (it takes 2-3 seconds for the list to be filled with the data it receives from my server).

Here is my list view & data source:

ListView {
    id: listView1
    dataModel: dataModel1
    leadingVisual: [
        Container {
            id: dropDownContainer1
            topPadding: 20
            leftPadding: 20
            rightPadding: 20
            bottomPadding: 20
            background: Color.create("#212121")
            DropDown {
                id: dropDown1
                title: qsTr("Date:") + Retranslate.onLocaleOrLanguageChanged
                Option {
                    id: all
                    text: qsTr("All") + Retranslate.onLocaleOrLanguageChanged
                    selected: true
                }
                Option {
                    text: qsTr("23/06/2014")
                    value: "23/06/2014"
                }
                Option {
                    text: qsTr("24/06/2014")
                    value: "24/06/2014"
                }
                Option {
                    text: qsTr("25/06/2014")
                    value: "25/06/2014"
                }
                Option {
                    text: qsTr("26/06/2014")
                    value: "26/06/2014"
                }
                Option {
                    text: qsTr("27/06/2014")
                    value: "27/06/2014"
                }
                Option {
                    text: qsTr("28/06/2014")
                    value: "28/06/2014"
                }
                Option {
                    text: qsTr("29/06/2014")
                    value: "29/06/2014"
                }
                Option {
                    text: qsTr("30/06/2014")
                    value: "30/06/2014"
                }
                Option {
                    text: qsTr("01/07/2014")
                    value: "July 1 2014"
                }
                Option {
                    text: qsTr("02/07/2014")
                    value: "July 2 2014"
                }
                Option {
                    text: qsTr("03/07/2014")
                    value: "July 3 2014"
                }
                Option {
                    text: qsTr("04/07/2014")
                    value: "July 4 2014"
                }
                Option {
                    text: qsTr("05/07/2014")
                    value: "July 5 2014"
                }
                Option {
                    text: qsTr("06/07/2014")
                    value: "July 6 2014"
                }
                onSelectedIndexChanged: {
                    // Pass the selected date to the DataSource declared below (id: dataSource1);
                    // "All" clears the query so every fixture is loaded
                    if (selectedOption == all) {
                        dataSource1.sQuery = ""
                    } else {
                        dataSource1.sQuery = dropDown1.at(dropDown1.selectedIndex).value;
                    }
                }
            }
        }
    ]
    listItemComponents: [
        ListItemComponent {
            type: "item"
            StandardListItem {
                title: ListItemData.fixtureInfo
                description: Qt.formatTime(new Date(ListItemData.timestamp * 1))
            }
        }
    ]
    onTriggered: {
        var selectedItem = dataModel1.data(indexPath);
        var detail = fixtures.createObject();
        detail.fixtureInfo = selectedItem.fixtureInfo
        detail.dateInfo = selectedItem.dateInfo
        detail.timeInfo = selectedItem.timeInfo
        detail.timeZone = Qt.formatTime(new Date(selectedItem.timestamp * 1))
        detail.courtInfo = selectedItem.courtInfo
        detail.resultInfo = selectedItem.resultInfo
        navigationPane1.push(detail)
    }
}

GroupDataModel {
    id: dataModel1
    sortingKeys: [ "dateNumber", "id" ]
    grouping: ItemGrouping.ByFullValue
    sortedAscending: false
},

DataSource {
    id: dataSource1
    property string sQuery: ""
    onSQueryChanged: {
        dataModel1.clear()
        load()
    }
    source: "http://tundracorestudios.co.uk/wp-content/uploads/2014/06/Fixtures.json"
    type: DataSourceType.Json
    onDataLoaded: {
        // create a temporary array to hold the data
        var tempdata = new Array();
        for (var i = 0; i < data.length; i ++) {
            tempdata[i] = data[i]
            // this is where we handle the search query
            if (sQuery == "") {
                // if no query is made, we load all the data
                dataModel1.insert(tempdata[i])
            } else {
                // if the query matches any part of the fixture title (fixtureInfo), we insert that item;
                // a RegExp compares the search query to the title (case-insensitive)
                if (data[i].fixtureInfo.search(new RegExp(sQuery, "i")) != -1) {
                    dataModel1.insert(tempdata[i])
                }
                // otherwise, we do nothing and do not insert the item
            }
        }
        // the if statement below does the same as above, but handles the case where there is
        // only one result (data is then a single object rather than an array)
        if (tempdata[0] == undefined) {
            tempdata = data
            if (sQuery == "") {
                dataModel1.insert(tempdata)
            } else {
                if (data.fixtureInfo.search(new RegExp(sQuery, "i")) != -1) {
                    dataModel1.insert(tempdata)
                }
            }
        }
    }
    onError: {
        console.log(errorMessage)
    }
},

onCreationCompleted: {
    dataSource1.load()
}
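The filtering done in onDataLoaded can be checked outside QML. Below is a minimal Python sketch (the function name `filter_fixtures` is hypothetical) that normalizes the parsed JSON, which may be a list or a single object, into a list and keeps only the items whose `fixtureInfo` matches the query case-insensitively. Unlike the QML, it escapes the query with `re.escape` so characters such as `.` match literally; that is a design assumption, not something the original code does.

```python
import re
from typing import Any


def filter_fixtures(data: Any, query: str) -> list[dict]:
    """Return the fixtures that onDataLoaded would insert into the model.

    `data` is the parsed JSON: normally a list of fixture objects, but a
    single object when the file holds only one fixture.
    """
    # Normalize a lone object into a one-element list; the QML handles this
    # case separately in its `tempdata[0] == undefined` branch.
    items = data if isinstance(data, list) else [data]
    if query == "":
        return list(items)  # no query: load everything
    # Case-insensitive search anywhere in the title, like new RegExp(sQuery, "i").
    pattern = re.compile(re.escape(query), re.IGNORECASE)
    return [item for item in items if pattern.search(item.get("fixtureInfo", ""))]


fixtures = [
    {"fixtureInfo": "23/06/2014 Team A vs Team B"},
    {"fixtureInfo": "24/06/2014 Team C vs Team D"},
]
print(filter_fixtures(fixtures, "24/06/2014"))
```

Handling the single-object case by normalizing up front removes the need for the duplicated second branch.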

In another part of my application I use an activity indicator while a WebView loads, but I couldn't adapt it for the list view.

The following code works when my webView loads:

WebView {
    id: detailsView
    settings.zoomToFitEnabled: true
    settings.activeTextEnabled: true
    settings.background: Color.Transparent
    onLoadingChanged: {
        if (loadRequest.status == WebLoadStatus.Started) {
        } else if (loadRequest.status == WebLoadStatus.Succeeded) {
            webLoading.stop()
        } else if (loadRequest.status == WebLoadStatus.Failed) {
        }
    }
    settings.defaultFontSize: 16
}

Container {
    id: loadMask
    background: Color.Black
    layout: DockLayout {
    }
    verticalAlignment: VerticalAlignment.Fill
    horizontalAlignment: HorizontalAlignment.Fill
    Container {
        leftPadding: 10.0
        rightPadding: 10.0
        topPadding: 10.0
        bottomPadding: 10.0
        horizontalAlignment: HorizontalAlignment.Center
        verticalAlignment: VerticalAlignment.Center
        ActivityIndicator {
            id: webLoading
            preferredHeight: 200.0
            preferredWidth: 200.0
            horizontalAlignment: HorizontalAlignment.Center
            onStarted: {
                loadMask.setVisible(true)
            }
            onStopping: {
                loadMask.setVisible(false)
            }
        }
        Label {
            text: "Loading Content..."
            horizontalAlignment: HorizontalAlignment.Center
            textStyle.fontSize: FontSize.Large
            textStyle.fontWeight: FontWeight.W100
            textStyle.color: Color.White
        }
    }
}

onCreationCompleted: {
    webLoading.start()
}

So what I am trying to do is: show the activity indicator while the list view is loading, and hide it once loading is over. Also, if the user doesn't have an internet connection, or loses the signal while the list is being filled, would it be possible to load the data from a locally stored file instead ("asset:///JSON/Fixtures.json")?
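The requested behavior boils down to: start the indicator, try the server, fall back to the bundled file if that fails, and stop the indicator in every case. The Python sketch below shows only that control flow; `fetch_remote`, `read_local`, and the `Indicator` class are hypothetical stand-ins, not Cascades APIs. In the QML, the equivalent would presumably be handling the DataSource's onError by pointing its `source` at "asset:///JSON/Fixtures.json" and calling `load()` again, which is an untested assumption.

```python
import json
from typing import Callable


class Indicator:
    """Hypothetical stand-in for the ActivityIndicator + loadMask pair."""

    def __init__(self) -> None:
        self.running = False

    def start(self) -> None:
        self.running = True

    def stop(self) -> None:
        self.running = False


def load_fixtures(fetch_remote: Callable[[], str],
                  read_local: Callable[[], str],
                  indicator: Indicator) -> tuple[list, str]:
    """Load fixtures from the server, falling back to the local copy.

    Returns (items, origin) where origin is "remote" or "local".
    """
    indicator.start()
    try:
        try:
            data, origin = json.loads(fetch_remote()), "remote"
        except (OSError, ValueError):
            # No connection, a dropped signal, or a truncated/invalid download:
            # use the copy bundled with the app instead.
            data, origin = json.loads(read_local()), "local"
        # A file with a single fixture parses to a dict; wrap it in a list.
        items = data if isinstance(data, list) else [data]
        return items, origin
    finally:
        # Runs on success, on fallback, and on error, so the mask never sticks.
        indicator.stop()
```

Stopping the indicator in `finally`, rather than when the first item arrives, also covers an empty result or a failed load, where no item would ever be added.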

Thanks in advance

With the help of a few other developers I managed to make everything work properly.

Jeremy Duke pointed out that I needed to use the onItemAdded signal handler on my GroupDataModel:

onItemAdded: {
    myActivityIndicator.stop();
    myActivityIndicator.visible = false;
    loadMask.visible = false;
    searchingLabel.visible = false;
}

With that added, the loading indicator stops as soon as the first item has been added to the list.

Thanks for your help

Tags: BlackBerry Developers

Similar Questions

-

Several layout templates for a single data model?

Hi all

Currently I'm working on XML Publisher, and I need information on how to create multiple page layouts for a single data model using RTF.

Please provide me with information such as how we can upload several template files, how we can save them, and the process to select a specific layout based on the requirement.

Thanks in advance...

Kind regards

Gopi.CH

If you had explained a little more about the requirement, you could get better suggestions for your needs.

As it stands it is not clear what you expect, but here is my input anyway. In addition to the sub-template logic suggested by user928059, you can also create several XMLP layout templates.

These templates can use the same data source as your data model.

At runtime, you can choose the desired template on the 'Options' tab of the SRS screen. Or, if this is for a set of reports, select the custom layout created in the configuration screens.

-

How/where can I show an activity indicator when loading new records into a ListView?

Hello

I would like to display some kind of ActivityIndicator when I load more records into my list view, i.e. when atEnd is hit in the ListView's onScrollingChanged(). My question is: can I show the ActivityIndicator inside the ListView control itself, or should I show it at the bottom of the container holding the ListView?

I imagine there must be a standard way people display an indicator while additional data is being retrieved...

Thank you

In the BlackBerry World app, in the category view:

- A view at the bottom of the list becomes visible, showing a small load indicator.

But I don't like it much, because on the first load the screen is black and then all the data appears at once. A big load indicator in the center has to be added for that case, as on the home screen.

But that way you have two indicators to manage, which is not great. So I usually prefer a single big centered indicator, which also appears over the list while 'more' data is loading.

It's your call, but I'd like to hear about other approaches.
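The trade-off above (a big centered indicator for the first load versus a small footer indicator for "load more") can be reduced to a small state rule. The Python sketch below is hypothetical and not tied to any Cascades API: `PagedList` tracks whether a page request is in flight and decides which indicator to show, and `at_end` mirrors the atEnd condition from the question.

```python
from dataclasses import dataclass, field


@dataclass
class PagedList:
    """Hypothetical paging state for a list that loads more items at the end."""
    page_size: int
    items: list = field(default_factory=list)
    loading: bool = False
    exhausted: bool = False  # set when the server returns a short page

    def indicator(self) -> str:
        """Which indicator to show: 'center', 'footer', or 'none'."""
        if not self.loading:
            return "none"
        # Nothing on screen yet: a big centered indicator avoids a black screen.
        return "center" if not self.items else "footer"

    def on_scrolled(self, at_end: bool) -> bool:
        """Start a page request when the end is reached; True if one started."""
        if at_end and not self.loading and not self.exhausted:
            self.loading = True
            return True
        return False

    def on_page_loaded(self, page: list) -> None:
        self.items.extend(page)
        self.exhausted = len(page) < self.page_size
        self.loading = False
```

Preferring a single centered indicator, as the answer does, would be a one-line change in `indicator()`.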

-

Hello OTN.

I don't understand why my SQL query won't go through in the BI Publisher data model. I created a new data model, chose the data source, and set the SQL type to Standard SQL. I tried several databases and got the same error every time in BI Publisher, but the query runs fine in TOAD / SQL Developer. So I think it might be something specific to my case, and I'm reaching out to ask you to try it and let me know if you get the same result as me.

The query is:

SELECT TO_CHAR(TO_DATE('15-' || TO_CHAR(:P_MONTH) || '-' || TO_CHAR(:P_YEAR), 'DD-MONTH-YYYY') - 90, 'YYYYMM') AS yrmth FROM DUAL

Values of the variables:

:P_MONTH = APRIL

:P_YEAR = 2015
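The intended result of the query can be checked outside the database. This Python sketch mirrors TO_DATE('15-' || :P_MONTH || '-' || :P_YEAR, 'DD-MONTH-YYYY') - 90 followed by TO_CHAR(..., 'YYYYMM'); the function name `yrmth` is hypothetical, and it assumes English month names as in the question.

```python
from datetime import date, timedelta

MONTHS = ["JANUARY", "FEBRUARY", "MARCH", "APRIL", "MAY", "JUNE", "JULY",
          "AUGUST", "SEPTEMBER", "OCTOBER", "NOVEMBER", "DECEMBER"]


def yrmth(p_month: str, p_year: int) -> str:
    """Return YYYYMM for the 15th of the given month minus 90 days."""
    # Same lookup as the DECODE variant: month name -> month number.
    month_nbr = MONTHS.index(p_month.strip().upper()) + 1
    # Oracle DATE arithmetic: DATE - 90 subtracts 90 days.
    d = date(p_year, month_nbr, 15) - timedelta(days=90)
    return f"{d.year:04d}{d.month:02d}"


print(yrmth("APRIL", 2015))  # 201501 (15 Apr 2015 minus 90 days is 15 Jan 2015)
```

So for the values above, the query should return 201501.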

I tried multiple variations without much luck. Here are the other options I've tried:

WITH DATES AS
(
SELECT TO_NUMBER(DECODE(:P_MONTH, 'JANUARY', '01',
'FEBRUARY', '02',
'MARCH', '03',
'APRIL', '04',
'MAY', '05',
'JUNE', '06',
'JULY', '07',
'AUGUST', '08',
'SEPTEMBER', '09',
'OCTOBER', '10',
'NOVEMBER', '11',
'DECEMBER', '12',
'01')) AS mth_nbr
FROM DUAL
)
SELECT TO_CHAR(TO_DATE('15-' || MTH_NBR || '-' || TO_CHAR(:P_YEAR), 'DD-MM-YYYY') - 90, 'YYYYMM')
FROM DATES

SELECT TO_CHAR(TO_DATE('15-' || :P_MONTH || '-' || :P_YEAR, 'DD-MONTH-YYYY') - 90, 'YYYYMM') AS yrmth FROM DUAL

I'm running out of ideas and I don't know why it does not work. If anyone has any suggestions or ideas, please let me know. I always mark answers as correct and helpful in my threads, and I appreciate all your help.

Best regards

-Konrad

So I figured it out. It seems there is a bug/discrepancy between the prompt that appears when you enter SQL in the data model and the parameter values in the data model/data tab.

Here's how I solved my problem.

I created a new data model, first created all the parameters it requires (including the default values without quotes, i.e. APRIL instead of 'APRIL'), and then saved it.

Then I pasted my SQL query into the data model, and when I clicked OK, I entered my string values in the prompt with single quotes (i.e. 'APRIL' instead of APRIL).

After entering the string values with single quotes in the dialog box, I was able to retrieve the columns into the data model and save it.

In the Data tab, I no longer had to enter the values in single quotes; I entered them normally instead, and the query worked.

It seems that the prompt for bind-variable values that appears when you enter SQL text in a data model expects strings to be wrapped in single quotes, but nothing else does. It was a big headache for me, but I'm glad I solved it, and I hope this helps others.

See you soon.

-

How can I make OLAP skip unnecessary aggregations when loading data?

Hello

I am trying to create a relatively simple two-dimensional OLAP cube (you could call it an "OLAP square"). My current environment is 11.2 EE with AWM (Analytic Workspace Manager) for workspace management.

One dimension is date (year -> month -> day), the other is production unit, implemented as a hierarchy with individual machines at the lowest level. A fact is identified by a pair of lowest-level values of these dimensions; for example, a measure is taken once per day for each machine. I want to store these detailed facts in the cube along with the aggregates, so that users can easily drill up without querying the original fact table.

The aggregation rules are set to 'aggregation level = default' (which is the day and the machine, respectively) for both of my dimensions, the cube is mapped to the fact table with the dimension tables, the data is loaded, and everything works as expected.

The problem is the load itself: I noticed that it is too slow for my amount of sample data. After some research, I found in the cube_build_log table the query that actually loads the data:

<SQL>
<![CDATA[
SELECT /*+ bypass_recursive_check cursor_sharing_exact no_expand no_rewrite */
  T4_ID_DAY ALIAS_37,
  T1_ID_POT ALIAS_38,
  MAX(T7_TEMPERATURE) ALIAS_39,
  MAX(T7_TEMPERATURE) ALIAS_40,
  MAX(T7_METAL_HEIGHT) ALIAS_41
FROM
  (
    SELECT /*+ no_rewrite */
      T1."DATE_TRUNC" T7_DATE_TRUNC,
      T1."METAL_HEIGHT" T7_METAL_HEIGHT,
      T1."TEMPERATURE" T7_TEMPERATURE,
      T1."POT_GLOBAL_ID" T7_POT_GLOBAL_ID
    FROM
      POTS."POT_BATH" T1
  ) T7,
  (
    SELECT /*+ no_rewrite */
      T1."ID_DIM" T4_ID_DIM,
      T1."ID_DAY" T4_ID_DAY
    FROM
      RIT."DIM_DATES" T1
  ) T4,
  (
    SELECT /*+ no_rewrite */
      T1."ID_DIM" T1_ID_DIM,
      T1."ID_POT" T1_ID_POT
    FROM
      RIT."DIM_POTS" T1
  ) T1
WHERE
  ((T4_ID_DIM = T7_DATE_TRUNC)
   AND (T1_ID_DIM = T7_POT_GLOBAL_ID)
   AND ((T7_DATE_TRUNC) IN (a long list of dates for the currently processed cube partition, clipped)))
GROUP BY
  (T1_ID_POT, T4_ID_DAY)
ORDER BY
  T1_ID_POT ASC NULLS LAST,
  T4_ID_DAY ASC NULLS LAST]]>
</SQL>

Notice T4_ID_DAY and T1_ID_POT in the top-level column list: these are the lowest-level identifiers of my dimensions, which means the query does not actually aggregate anything here, because there is only one fact for each (ID_DAY, ID_POT) pair.
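The point that this GROUP BY is a no-op can be checked with a small example. In this Python sketch (the rows are made up for illustration, and `group_max`/`keys_unique` are hypothetical names), grouping facts by (ID_POT, ID_DAY) and taking MAX returns each original value unchanged whenever every pair occurs only once.

```python
from collections import defaultdict


def group_max(rows):
    """Emulate GROUP BY (id_pot, id_day) with MAX over each measure."""
    groups = defaultdict(list)
    for row in rows:
        groups[(row["id_pot"], row["id_day"])].append(row)
    return {
        key: {m: max(r[m] for r in grp) for m in ("temperature", "metal_height")}
        for key, grp in groups.items()
    }


def keys_unique(rows) -> bool:
    """True if no (id_pot, id_day) pair appears more than once."""
    keys = [(r["id_pot"], r["id_day"]) for r in rows]
    return len(keys) == len(set(keys))
```

An engine can only skip the aggregation safely if it knows the pairs are unique, for example from a declared key; with a duplicate pair, MAX really does change the result.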

What I want is to somehow load the data without these intermediate aggregations (totally useless in my case). Basically I want it to be something like:

SELECT /*+ bypass_recursive_check cursor_sharing_exact no_expand no_rewrite */
  T4_ID_DAY ALIAS_37,
  T1_ID_POT ALIAS_38,
  T7_TEMPERATURE ALIAS_39,
  T7_TEMPERATURE ALIAS_40,
  T7_METAL_HEIGHT ALIAS_41
etc...

without the aggregations. In fact, I can live with the current load query, as the amount of data isn't that large, but I want things to work the right way (more or less).

Is there any way to do this?

Thank you.

I figured it out. There was a mistake in my mapping: in the source column field of my mapping section I had specified the dim_table.dimension_id column rather than the fact_table.dimension_id column, so (I suppose) the build tried to group by the keys of the dimension tables. After fixing this, defining a primary key did the trick (a unique index alone, however, was not enough).

-

Active cursor to select data for further processing

I have included my data and a partial VI with annotated text. Briefly, my input data is a DEM, so the row (line) # represents distance in the x-direction, the column # represents distance in the y-direction, and the data is elevation. I would like to process the data sequentially, one column at a time, visually selecting the data I want to keep for further processing. The position of the data is important, so the data in the selected range should end up in the same column and rows as in the original input data. The rest of the column outside the selected range can be NaNs.
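The selection described above can be expressed independently of LabVIEW. This Python sketch (the names `keep_range` and `process_columns` are hypothetical) treats the DEM as a list of rows and, for one column, keeps the values in a selected row range at their original positions while setting the rest of that column to NaN; the other columns are left untouched.

```python
import math


def keep_range(grid: list[list[float]], col: int, start: int, stop: int) -> list[list[float]]:
    """Return a copy of `grid` where column `col` keeps rows start..stop-1
    at their original positions and every other row of that column is NaN."""
    out = [row[:] for row in grid]  # copy so the input DEM is not modified
    for r, row in enumerate(out):
        if not (start <= r < stop):
            row[col] = math.nan
    return out


def process_columns(grid, selections):
    """Apply one (start, stop) selection per column, column by column,
    like pressing 'Continue' to advance the column index."""
    result = [row[:] for row in grid]
    for col, (start, stop) in enumerate(selections):
        result = keep_range(result, col, start, stop)
    return result
```

Advancing the column index in a loop, rather than deriving it from an iteration counter, is the same idea as the shift-register suggestion in the answer.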

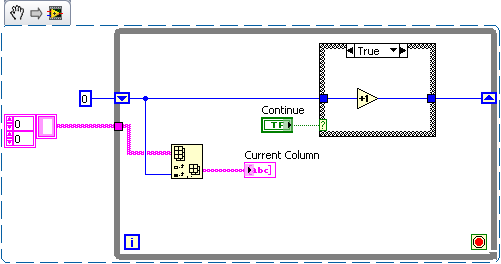

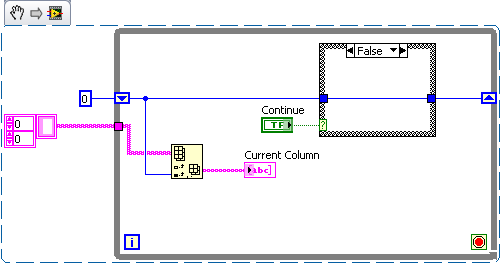

(1) It seems to pause fine when I run it. What happens when you click the pause button?

(2) This can be done better by using a shift register for the column index instead of the iteration number. Then use a Continue button to make the shift register increment. One thing to check is that the Continue button's mechanical action is set to Latch When Released (right-click, then Mechanical Action). Here is an example of this:

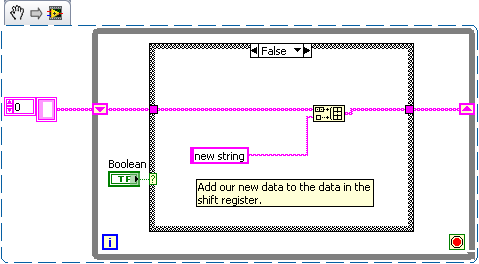

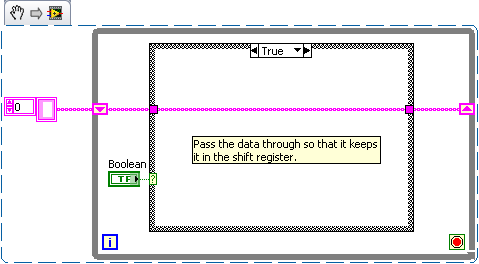

(3) For a shift register to hold the values you need to write to the file, you will need to make sure that you always pass the array you are adding to into the right side of the shift register. You'll also need to use Build Array instead of Insert Into Array. Here is an example:

(4) This should happen automatically after the previous steps.

-

Setting up an Open SQL data source for loading data

Hello

I've set up an ODBC system DSN for the Oracle database I want to interface with and tested it successfully (in ODBC), but when I try to use it in Open SQL Data Sources (it appears in the SQL data sources list), I get no data returned when I click "OK/Retrieve", just the message "Unable to establish a connection to the SQL database server. Check the log for details".

Can someone tell me where the log is, so I can get a clue as to why it fails? I assumed the Essbase or ODBC log, but I can see nothing in either.

Thank you

Robert.

Are you using the 'DataDirect Oracle Wire Protocol x.x' driver? If not, try that driver.

Do not fill in anything in the connection fields on the right side of the 'SQL Data Sources' window.

Make sure you enter a valid SQL statement without the word "select".

Check the Essbase application log.

See you soon

John

-

Need data files and rules files to build and load data for Sample.Basic

I urgently need the data files to build dimensions dynamically and also the rules files for loading data into the Sample.Basic application for Essbase 9. Kindly let me know if anyone can provide them. Otherwise, is there any link where I can get them?

Thanks in advance.

The outline and all the data (calcdat.txt) are included in the zip, so you don't need to rebuild anything with load rules.

See you soon

John

http://John-Goodwin.blogspot.com/

-

How can I stop Data Modeler from generating NNC_ constraints on tables?

Hi all

Unfortunately I have not found the answer using Google ;-)

When I generate the DDL scripts for my model, Data Modeler (version 4.0.0) automatically generates "NNC_" constraints on the columns of some tables, for example:

CREATE TABLE STG_DURCHGANGSKNOTEN
(
  ID NUMBER CONSTRAINT NNC_STG_DURCHGANGSKNOTENv1_ID NOT NULL,
  Kilometrierung VARCHAR2 (20) CONSTRAINT NNC_STG_DURCHGANGSKNOTENv1_Kilometrierung NOT NULL,
  Letzte_Aenderung DATE CONSTRAINT NNC_STG_DURCHGANGSKNOTENv1_Letzte_Aenderung NOT NULL,
  Knotentyp VARCHAR2 (100) CONSTRAINT NNC_STG_DURCHGANGSKNOTENv1_Knotentyp NOT NULL,
  Name VARCHAR2 (100),
  BZ_Bezeichner VARCHAR2 (100),
  GUI_Bezeichner VARCHAR2 (100),
  Spurplanabschnitt_ID NUMBER CONSTRAINT NNC_STG_DURCHGANGSKNOTENv1_Spurplanabschnitt_ID NOT NULL,
  XML_Document XMLTYPE
);

How can I avoid this? I would like to get just something like this:

CREATE TABLE STG_DURCHGANGSKNOTEN
(
  ID NUMBER NOT NULL,
  Kilometrierung VARCHAR2 (20) NOT NULL,
  Letzte_Aenderung DATE NOT NULL,
  Knotentyp VARCHAR2 (100) NOT NULL,
  Name VARCHAR2 (100),
  BZ_Bezeichner VARCHAR2 (100),
  GUI_Bezeichner VARCHAR2 (100),
  Spurplanabschnitt_ID NUMBER NOT NULL,
  XML_Document XMLTYPE
);

Thank you

Matthias

Hi Matthias,

The NOT NULL CONSTRAINT clause probably appears because the 'Not Null Constraint Name' property is set for the column. (It is shown on the "Default and Constraint" panel of the column properties dialog.)

To stop these from being generated, you can go to the Data Modeler/DDL page of Preferences (on the Tools menu) and set the option 'Generate short form of NOT NULL constraint'.

Note that there is now a forum specifically for Data Modeler: SQL Developer Data Modeler

David

-

SQL Developer Data Modeler - JRE or JDK?

Hello

Basically: can any of you confirm that I need the JDK (or J2SE SDK, as indicated in the error message) and not just the JRE, as indicated on the OTN download pages?

I just downloaded "SQL Developer Data Modeler" without the JRE: first because I already have JRE 1.6.0_17 installed, so downloading the bundled one is unnecessary, and second because I don't want to risk interfering with the automatic JRE updates managed by my company's IT support staff.

I might well be a complete Java novice, but shouldn't the JRE be sufficient if you just want to run Java applications?

Regards, K

Hi Hauskov,

The JRE is sufficient for Data Modeler to work, but there is a restriction in datamodeler.exe that comes from JDeveloper. If you don't want a JDK, you can use datamodeler.bat; in this case the Java bin directory must be listed in the PATH environment variable.

Philippe

-

Question on loading data with SQL*Loader into staging tables, and then into the main tables

Hello

I'm trying to load data into our main database tables using SQL*Loader. The data will be provided in pipe-separated CSV files.

I have developed a shell script to load the data, and it works fine except for one thing.

Here are the details needed to re-create the problem.

Structure of the staging tables that SQL*Loader will fill:

create table stg_cmts_data (cmts_token varchar2 (30), CMTS_IP varchar2 (20));
create table stg_link_data (dhcp_token varchar2 (30), cmts_to_add varchar2 (200));
create table stg_dhcp_data (dhcp_token varchar2 (30), DHCP_IP varchar2 (20));

Data in the CSV files:

For stg_cmts_data:

cmts_map_03092015_1.csv

WNLB-CMTS-01-1|10.15.0.1
WNLB-CMTS-02-2|10.15.16.1
WNLB-CMTS-03-3|10.15.48.1
WNLB-CMTS-04-4|10.15.80.1
WNLB-CMTS-05-5|10.15.96.1

For stg_dhcp_data:

dhcp_map_03092015_1.csv

DHCP-1-1-1|10.25.23.10, 25.26.14.01
DHCP-1-1-2|56.25.111.25, 100.25.2.01
DHCP-1-1-3|25.255.3.01, 89.20.147.258
DHCP-1-1-4|10.25.26.36, 200.32.58.69
DHCP-1-1-5|80.25.47.369, 60.258.14.10

For stg_link_data:

cmts_dhcp_link_map_0309151623_1.csv

DHCP-1-1-1|WNLB-CMTS-01-1,WNLB-CMTS-02-2
DHCP-1-1-2|WNLB-CMTS-03-3,WNLB-CMTS-04-4,WNLB-CMTS-05-5
DHCP-1-1-3|WNLB-CMTS-01-1
DHCP-1-1-4|WNLB-CMTS-05-8,WNLB-CMTS-05-6,WNLB-CMTS-05-0,WNLB-CMTS-03-3
DHCP-1-1-5|WNLB-CMTS-02-2,WNLB-CMTS-04-4,WNLB-CMTS-05-7
WNLB-DHCP-1-13|WNLB-CMTS-02-2

Now, after loading this data into the staging tables, I have to fill the main database tables:

create table subntwk (subntwk_nm varchar2 (20), subntwk_ip varchar2 (30));
create table link (link_nm varchar2 (50));

The SQL scripts I created to load the data are as follows:

SPOOL load_cmts.log
SET SERVEROUTPUT ON
DECLARE
  CURSOR c_stg_cmts IS SELECT *
    FROM stg_cmts_data;
  TYPE t_stg_cmts IS TABLE OF stg_cmts_data%ROWTYPE INDEX BY PLS_INTEGER;
  l_stg_cmts t_stg_cmts;
  l_cmts_cnt NUMBER;
  l_cnt NUMBER;
  l_cnt_1 NUMBER;
BEGIN
  OPEN c_stg_cmts;
  FETCH c_stg_cmts BULK COLLECT INTO l_stg_cmts;
  FOR i IN l_stg_cmts.FIRST .. l_stg_cmts.LAST
  LOOP
    SELECT COUNT(1)
      INTO l_cmts_cnt
      FROM subntwk
     WHERE subntwk_nm = l_stg_cmts(i).cmts_token;
    IF l_cmts_cnt < 1 THEN
      INSERT
        INTO SUBNTWK
        (
          subntwk_nm
        )
        VALUES
        (
          l_stg_cmts(i).cmts_token
        );
      DBMS_OUTPUT.PUT_LINE('token has been added: ' || l_stg_cmts(i).cmts_token);
    ELSE
      DBMS_OUTPUT.PUT_LINE('token is already present');
    END IF;
    EXIT WHEN l_stg_cmts.COUNT = 0;
  END LOOP;
  COMMIT;
EXCEPTION
  WHEN OTHERS THEN
    DBMS_OUTPUT.PUT_LINE('ERROR' || SQLERRM);
END;
/

output

For DHCP:

SPOOL load_dhcp.log
SET SERVEROUTPUT ON
DECLARE
  CURSOR c_stg_dhcp IS SELECT *
    FROM stg_dhcp_data;
  TYPE t_stg_dhcp IS TABLE OF stg_dhcp_data%ROWTYPE INDEX BY PLS_INTEGER;
  l_stg_dhcp t_stg_dhcp;
  l_dhcp_cnt NUMBER;
  l_cnt NUMBER;
  l_cnt_1 NUMBER;
BEGIN
  OPEN c_stg_dhcp;
  FETCH c_stg_dhcp BULK COLLECT INTO l_stg_dhcp;
  FOR i IN l_stg_dhcp.FIRST .. l_stg_dhcp.LAST
  LOOP
    SELECT COUNT(1)
      INTO l_dhcp_cnt
      FROM subntwk
     WHERE subntwk_nm = l_stg_dhcp(i).dhcp_token;
    IF l_dhcp_cnt < 1 THEN
      INSERT
        INTO SUBNTWK
        (
          subntwk_nm
        )
        VALUES
        (
          l_stg_dhcp(i).dhcp_token
        );
      DBMS_OUTPUT.PUT_LINE('token has been added: ' || l_stg_dhcp(i).dhcp_token);
    ELSE
      DBMS_OUTPUT.PUT_LINE('token is already present');
    END IF;
    EXIT WHEN l_stg_dhcp.COUNT = 0;
  END LOOP;
  COMMIT;
EXCEPTION
  WHEN OTHERS THEN
    DBMS_OUTPUT.PUT_LINE('ERROR' || SQLERRM);
END;
/

output

For link:

SPOOL load_link.log
SET SERVEROUTPUT ON
DECLARE
  l_cmts_1 VARCHAR2(4000 CHAR);
  l_cmts_add VARCHAR2(200 CHAR);
  l_dhcp_cnt NUMBER;
  l_cmts_cnt NUMBER;
  l_link_cnt NUMBER;
  l_add_link_nm VARCHAR2(200 CHAR);
BEGIN
  FOR r IN (
    SELECT dhcp_token, cmts_to_add || ',' cmts_add
      FROM stg_link_data
  )
  LOOP
    l_cmts_1 := r.cmts_add;
    l_cmts_add := TRIM(SUBSTR(l_cmts_1, 1, INSTR(l_cmts_1, ',') - 1));
    SELECT COUNT(1)
      INTO l_dhcp_cnt
      FROM subntwk
     WHERE subntwk_nm = r.dhcp_token;
    IF l_dhcp_cnt = 0 THEN
      DBMS_OUTPUT.PUT_LINE('device not found: ' || r.dhcp_token);
    ELSE
      WHILE l_cmts_add IS NOT NULL
      LOOP
        l_add_link_nm := r.dhcp_token || '_TO_' || l_cmts_add;
        SELECT COUNT(1)
          INTO l_cmts_cnt
          FROM subntwk
         WHERE subntwk_nm = TRIM(l_cmts_add);
        SELECT COUNT(1)
          INTO l_link_cnt
          FROM link
         WHERE link_nm = l_add_link_nm;
        IF l_cmts_cnt > 0 AND l_link_cnt = 0 THEN
          INSERT INTO link (link_nm)
          VALUES (l_add_link_nm);
          DBMS_OUTPUT.PUT_LINE(l_add_link_nm || ' has been added.');
        ELSIF l_link_cnt > 0 THEN
          DBMS_OUTPUT.PUT_LINE('link is already present: ' || l_add_link_nm);
        ELSIF l_cmts_cnt = 0 THEN
          DBMS_OUTPUT.PUT_LINE('NO CMTS FOUND for device to create the link: ' || l_cmts_add);
        END IF;
        l_cmts_1 := TRIM(SUBSTR(l_cmts_1, INSTR(l_cmts_1, ',') + 1));
        l_cmts_add := TRIM(SUBSTR(l_cmts_1, 1, INSTR(l_cmts_1, ',') - 1));
      END LOOP;
    END IF;
  END LOOP;
  COMMIT;
EXCEPTION
  WHEN OTHERS THEN
    DBMS_OUTPUT.PUT_LINE('ERROR' || SQLERRM);
END;
/

output
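To isolate the logic of load_link.sql from the database, here is a minimal Python sketch that models the subntwk and link tables as in-memory sets (an assumption for illustration; `build_links` is a hypothetical name). It applies the same checks in the same order as the PL/SQL block.

```python
def build_links(link_rows, subntwk, existing_links):
    """Mimic load_link.sql.

    link_rows: (dhcp_token, cmts_to_add) pairs from stg_link_data
    subntwk: set of names already in the subntwk table
    existing_links: set of names already in the link table (updated in place)
    Returns the log lines the PL/SQL would print.
    """
    log = []
    for dhcp_token, cmts_to_add in link_rows:
        if dhcp_token not in subntwk:
            log.append(f"device not found: {dhcp_token}")
            continue
        for cmts in cmts_to_add.split(","):
            cmts = cmts.strip(" ")  # Oracle TRIM removes spaces only
            if not cmts:
                break  # an empty token is NULL in Oracle and ends the WHILE loop
            link_nm = f"{dhcp_token}_TO_{cmts}"
            if cmts in subntwk and link_nm not in existing_links:
                existing_links.add(link_nm)
                log.append(f"{link_nm} has been added.")
            elif link_nm in existing_links:
                log.append(f"link is already present: {link_nm}")
            else:
                log.append(f"NO CMTS FOUND for device to create the link: {cmts}")
    return log
```

With the sample data above loaded cleanly, this produces 9 links, and only WNLB-CMTS-05-8, -05-6, -05-0, and -05-7 are reported as missing.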

Control files:

LOAD DATA
INFILE 'cmts_data.csv'
APPEND
INTO TABLE STG_CMTS_DATA
WHEN (cmts_token != '') AND (cmts_token != 'NULL') AND (cmts_token != 'null')
 AND (cmts_ip != '') AND (cmts_ip != 'NULL') AND (cmts_ip != 'null')
FIELDS TERMINATED BY '|' OPTIONALLY ENCLOSED BY '"'
TRAILING NULLCOLS
(cmts_token "RTRIM(LTRIM(:cmts_token))",
 cmts_ip "RTRIM(LTRIM(:cmts_ip))")

For DHCP:

LOAD DATA
INFILE 'dhcp_data.csv'
APPEND
INTO TABLE STG_DHCP_DATA
WHEN (dhcp_token != '') AND (dhcp_token != 'NULL') AND (dhcp_token != 'null')
 AND (dhcp_ip != '') AND (dhcp_ip != 'NULL') AND (dhcp_ip != 'null')
FIELDS TERMINATED BY '|' OPTIONALLY ENCLOSED BY '"'
TRAILING NULLCOLS
(dhcp_token "RTRIM(LTRIM(:dhcp_token))",
 dhcp_ip "RTRIM(LTRIM(:dhcp_ip))")

For link:

LOAD DATA
INFILE 'link_data.csv'
APPEND
INTO TABLE STG_LINK_DATA
WHEN (dhcp_token != '') AND (dhcp_token != 'NULL') AND (dhcp_token != 'null')
 AND (cmts_to_add != '') AND (cmts_to_add != 'NULL') AND (cmts_to_add != 'null')
FIELDS TERMINATED BY '|' OPTIONALLY ENCLOSED BY '"'
TRAILING NULLCOLS
(dhcp_token "RTRIM(LTRIM(:dhcp_token))",
 cmts_to_add CHAR(4000) "RTRIM(LTRIM(:cmts_to_add))")

Shell script:

if [ ! -d ./log ]
then
    mkdir log
fi
if [ ! -d ./done ]
then
    mkdir done
fi
if [ ! -d ./bad ]
then
    mkdir bad
fi

nohup time sqlldr username/password@SID CONTROL=load_cmts_data.ctl LOG=log/ldr_cmts_data.log BAD=log/ldr_cmts_data.bad DISCARD=log/ldr_cmts_data.reject ERRORS=100000 DIRECT=TRUE PARALLEL=TRUE &
nohup time sqlplus username/password@SID @load_cmts.sql

nohup time sqlldr username/password@SID CONTROL=load_dhcp_data.ctl LOG=log/ldr_dhcp_data.log BAD=log/ldr_dhcp_data.bad DISCARD=log/ldr_dhcp_data.reject ERRORS=100000 DIRECT=TRUE PARALLEL=TRUE &
time nohup sqlplus username/password@SID @load_dhcp.sql

nohup time sqlldr username/password@SID CONTROL=load_link_data.ctl LOG=log/ldr_link_data.log BAD=log/ldr_link_data.bad DISCARD=log/ldr_link_data.reject ERRORS=100000 DIRECT=TRUE PARALLEL=TRUE &
time nohup sqlplus username/password@SID @load_link.sql

mv *.log ./log

The problem I encounter is here: when loading data into the link table, I check whether the DHCP is present in the subntwk table; if it is, I continue, otherwise I write an error to the log. If the CMTS is present, I create the link, otherwise I write an error to the log.

As you can see, multiple CMTS can be associated with a single DHCP.

The links do get created in the link table, except in the last iteration of the loop: for the last of the comma-separated CMTS values in each stg_link_data row, the log says the CMTS was not found.

For example:

DHCP-1-1-1|WNLB-CMTS-01-1,WNLB-CMTS-02-2

Here, I expect DHCP-1-1-1 to be linked with WNLB-CMTS-01-1 and WNLB-CMTS-02-2.

All of these are present in the subntwk table, but the log still says WNLB-CMTS-02-2 could not be found, even though it has already been loaded into the subntwk table.

The same thing happens with every CMTS that comes last in its stg_link_data row (I think you get what I'm trying to explain).

But when I run the SQL scripts separately in SQL Developer, all the valid links are inserted into the link table.

Here, it should create 9 rows in the link table, whereas now it creates only 5.

I also use COMMIT in my script, but that alone does not help.

Please run these scripts on your machine and let me know if you get the same behavior.

And please suggest a solution; I have tried many things since yesterday, but the result is always the same.

Here is the log for the link table:

link is already present: DHCP-1-1-1_TO_WNLB-CMTS-01-1
NO CMTS FOUND for device to create the link: WNLB-CMTS-02-2
link is already present: DHCP-1-1-2_TO_WNLB-CMTS-03-3
link is already present: DHCP-1-1-2_TO_WNLB-CMTS-04-4
NO CMTS FOUND for device to create the link: WNLB-CMTS-05-5
NO CMTS FOUND for device to create the link: WNLB-CMTS-01-1
NO CMTS FOUND for device to create the link: WNLB-CMTS-05-8
NO CMTS FOUND for device to create the link: WNLB-CMTS-05-6
NO CMTS FOUND for device to create the link: WNLB-CMTS-05-0
NO CMTS FOUND for device to create the link: WNLB-CMTS-03-3
link is already present: DHCP-1-1-5_TO_WNLB-CMTS-02-2
link is already present: DHCP-1-1-5_TO_WNLB-CMTS-04-4
NO CMTS FOUND for device to create the link: WNLB-CMTS-05-7
device not found: WNLB-DHCP-1-13

If you need more information, please let me know.

Thank you

Later that night I realized that, when loading into the staging tables on the UNIX machine, every line kept an extra line-ending character from the file. That is why the last CMTS was not found. To fix this I used the dos2unix conversion, and it started to work perfectly.

It was the dos2unix error!
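Why only the last CMTS on each line failed can be reproduced in a few lines. On UNIX, SQL*Loader's default stream record terminator is the line feed, so a carriage return from a Windows-edited file stays at the end of the last field, and RTRIM/LTRIM/TRIM without a character set remove only spaces. This Python sketch (the function name `last_field_tokens` is hypothetical) splits records on LF only and shows the stray `\r` breaking the lookup until it is stripped, which is effectively what dos2unix does.

```python
def last_field_tokens(raw: str, strip_cr: bool) -> list[list[str]]:
    """Split 'DHCP|CMTS1,CMTS2' records on LF only, then split the CMTS list."""
    rows = []
    for line in raw.split("\n"):  # LF ends a record; any CR stays in the data
        if strip_cr:
            line = line.rstrip("\r")  # what dos2unix does to each line
        if not line:
            continue
        _dhcp, cmts_list = line.split("|", 1)
        # strip(" ") mirrors RTRIM/LTRIM/TRIM, which remove spaces only
        rows.append([t.strip(" ") for t in cmts_list.split(",")])
    return rows


raw = "DHCP-1-1-1|WNLB-CMTS-01-1,WNLB-CMTS-02-2\r\nDHCP-1-1-3|WNLB-CMTS-01-1\r\n"
known = {"WNLB-CMTS-01-1", "WNLB-CMTS-02-2"}
for strip in (False, True):
    tokens = [t for row in last_field_tokens(raw, strip) for t in row]
    print(strip, sum(t in known for t in tokens), "of", len(tokens), "found")
# False 1 of 3 found
# True 3 of 3 found
```

Besides converting the files with dos2unix, the control file could presumably strip the character itself, e.g. "RTRIM(LTRIM(:cmts_to_add), ' ' || CHR(13))"; that variant is an untested assumption.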

Thank you all for your interest; I am still learning new things, as I have only about 10 months of experience with PL/SQL and SQL.

-

Issue while loading data using an Essbase rules file

Hi all

I am facing a problem while loading data using a rules file. In the rules file, I rejected several members in two fields (two dimensions). Now, when I load the data using the rules file, I get errors for all members in the dataload.err file. If I reject multiple members of a single field, the data loads without logging errors in the dataload.err file.

I want to know how to reject members of several fields when loading data using an Essbase rules file. Is it possible?

OK... I think you need to set the global select/reject Boolean in the data load settings to 'Or':

-

Need a sql script loader to load data into a table

Hello

I'm new to Oracle... learning some basic things... and now I'd like the steps to load data into a table from a data file...

and the script for SQL*Loader.

Thanks in advance

Hello

You can follow these steps to load the data...

Step 1:

Create a table in Toad to load your data into...

Step 2:

Create a data file... Create your data file with column headers...

Step 3:

Create a control file... Create your control file to load the data from the data file into the table (control files have a standard structure; you can search for it on the net).

Step 4:

Move the data file and the control file to a path on the server...

Step 5:

Load the data into the staging table using sql loader.

sqlldr control =

data = connect as: username/password@instance.
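For step 3, a control file for a delimited file with a header row can be quite short. The Python sketch below generates one; the function name `make_control_file` and the file, table, and column names are hypothetical, while the clauses themselves (OPTIONS (SKIP=1), LOAD DATA, INFILE, APPEND, INTO TABLE, FIELDS TERMINATED BY ... OPTIONALLY ENCLOSED BY, TRAILING NULLCOLS) are standard SQL*Loader syntax.

```python
def make_control_file(data_file: str, table: str, columns: list[str],
                      delimiter: str = ",", has_header: bool = True) -> str:
    """Build a minimal SQL*Loader control file for a delimited data file."""
    lines = []
    if has_header:
        # Step 2 creates the data file with column headers; skip that record.
        lines.append("OPTIONS (SKIP=1)")
    lines += [
        "LOAD DATA",
        f"INFILE '{data_file}'",
        "APPEND",  # add to existing rows; the default INSERT needs an empty table
        f"INTO TABLE {table}",
        f"FIELDS TERMINATED BY '{delimiter}' OPTIONALLY ENCLOSED BY '\"'",
        "TRAILING NULLCOLS",  # missing trailing fields become NULL
        "(" + ", ".join(columns) + ")",
    ]
    return "\n".join(lines) + "\n"


print(make_control_file("emp.csv", "stg_emp", ["empno", "ename", "sal"]))
```

Saved as, say, stg_emp.ctl, it could then be run with something like sqlldr userid=username/password@instance control=stg_emp.ctl log=stg_emp.log.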

-

Invalid username/password when executing the load plan

I get the following error when I try to execute the load plan to load data from my EBS server. I don't know which username and password it is claiming are invalid, or where to change the value. Any ideas where I can find it?

ODI-1519: series step "start load Plan.

(InternalID:4923500) ' failed, because the child step "Global Variable Refresh.

(InternalID:4924500) "is a mistake.

ODI-1529: refreshment of the variable 'BIAPPS.13P_CALENDAR_ID' failed:

select CASE WHEN 'Global Variable Refresh' in (select distinct
group_code from C_PARAMETER_VALUE_FORMATTER_V where PARAM_CODE =
'13P_CALENDAR_ID')
THEN (select param_value
from C_PARAMETER_VALUE_FORMATTER_V
where PARAM_CODE = '13P_CALENDAR_ID'
and group_code = 'Global Variable Refresh'
and datasource_num_id = '#BIAPPS.WH_DATASOURCE_NUM_ID')
ELSE
(select param_value from C_GL_PARAM_VALUE_FORMATTER_V where PARAM_CODE =
'13P_CALENDAR_ID' and datasource_num_id =
'#BIAPPS.WH_DATASOURCE_NUM_ID')
END
from dual

0:72000:java.sql.SQLException: ORA-01017: invalid username/password; logon denied

java.sql.SQLException: ORA-01017: invalid username/password; logon denied
	at oracle.odi.jdbc.datasource.LoginTimeoutDatasourceAdapter.doGetConnection(LoginTimeoutDatasourceAdapter.java:133)
	at oracle.odi.jdbc.datasource.LoginTimeoutDatasourceAdapter.getConnection(LoginTimeoutDatasourceAdapter.java:62)
	at oracle.odi.core.datasource.dwgobject.support.OnConnectOnDisconnectDataSourceAdapter.getConnection(OnConnectOnDisconnectDataSourceAdapter.java:74)
	at oracle.odi.runtime.agent.loadplan.LoadPlanProcessor.executeVariableStep(LoadPlanProcessor.java:3050)
	at oracle.odi.runtime.agent.loadplan.LoadPlanProcessor.refreshVariables(LoadPlanProcessor.java:4287)
	at oracle.odi.runtime.agent.loadplan.LoadPlanProcessor.addRunnableScenarios(LoadPlanProcessor.java:2284)
	at oracle.odi.runtime.agent.loadplan.LoadPlanProcessor.addRunnableScenarios(LoadPlanProcessor.java:2307)
	at oracle.odi.runtime.agent.loadplan.LoadPlanProcessor.selectNextRunnableScenarios(LoadPlanProcessor.java:2029)
	at oracle.odi.runtime.agent.loadplan.LoadPlanProcessor.startAllScenariosFromStep(LoadPlanProcessor.java:1976)
	at oracle.odi.runtime.agent.loadplan.LoadPlanProcessor.startLPExecution(LoadPlanProcessor.java:491)
	at oracle.odi.runtime.agent.loadplan.LoadPlanProcessor.initLPInstance(LoadPlanProcessor.java:384)
	at oracle.odi.runtime.agent.loadplan.LoadPlanProcessor.startLPInstance(LoadPlanProcessor.java:147)
	at oracle.odi.runtime.agent.processor.impl.StartLoadPlanRequestProcessor.doProcessRequest(StartLoadPlanRequestProcessor.java:87)
	at oracle.odi.runtime.agent.processor.SimpleAgentRequestProcessor.process(SimpleAgentRequestProcessor.java:49)
	at oracle.odi.runtime.agent.support.DefaultRuntimeAgent.execute(DefaultRuntimeAgent.java:68)
	at oracle.odi.runtime.agent.servlet.AgentServlet.processRequest(AgentServlet.java:564)
	at oracle.odi.runtime.agent.servlet.AgentServlet.doPost(AgentServlet.java:518)
	at javax.servlet.http.HttpServlet.service(HttpServlet.java:727)
	at javax.servlet.http.HttpServlet.service(HttpServlet.java:820)
	at weblogic.servlet.internal.StubSecurityHelper$ServletServiceAction.run(StubSecurityHelper.java:227)
	at weblogic.servlet.internal.StubSecurityHelper.invokeServlet(StubSecurityHelper.java:125)
	at weblogic.servlet.internal.ServletStubImpl.execute(ServletStubImpl.java:301)
	at weblogic.servlet.internal.TailFilter.doFilter(TailFilter.java:26)
	at weblogic.servlet.internal.FilterChainImpl.doFilter(FilterChainImpl.java:56)
	at oracle.security.jps.ee.http.JpsAbsFilter$1.run(JpsAbsFilter.java:119)
	at java.security.AccessController.doPrivileged(Native Method)
	at oracle.security.jps.util.JpsSubject.doAsPrivileged(JpsSubject.java:324)
	at oracle.security.jps.ee.util.JpsPlatformUtil.runJaasMode(JpsPlatformUtil.java:460)
	at oracle.security.jps.ee.http.JpsAbsFilter.runJaasMode(JpsAbsFilter.java:103)
	at oracle.security.jps.ee.http.JpsAbsFilter.doFilter(JpsAbsFilter.java:171)
	at oracle.security.jps.ee.http.JpsFilter.doFilter(JpsFilter.java:71)
	at weblogic.servlet.internal.FilterChainImpl.doFilter(FilterChainImpl.java:56)
	at oracle.dms.servlet.DMSServletFilter.doFilter(DMSServletFilter.java:163)
	at weblogic.servlet.internal.FilterChainImpl.doFilter(FilterChainImpl.java:56)
	at weblogic.servlet.internal.WebAppServletContext$ServletInvocationAction.wrapRun(WebAppServletContext.java:3730)
	at weblogic.servlet.internal.WebAppServletContext$ServletInvocationAction.run(WebAppServletContext.java:3696)
	at weblogic.security.acl.internal.AuthenticatedSubject.doAs(AuthenticatedSubject.java:321)
	at weblogic.security.service.SecurityManager.runAs(SecurityManager.java:120)
	at weblogic.servlet.internal.WebAppServletContext.securedExecute(WebAppServletContext.java:2273)
	at weblogic.servlet.internal.WebAppServletContext.execute(WebAppServletContext.java:2179)
	at weblogic.servlet.internal.ServletRequestImpl.run(ServletRequestImpl.java:1490)
	at weblogic.work.ExecuteThread.execute(ExecuteThread.java:256)
	at weblogic.work.ExecuteThread.run(ExecuteThread.java:221)
Caused by: java.sql.SQLException: ORA-01017: invalid username/password; logon denied
	at oracle.jdbc.driver.T4CTTIoer.processError(T4CTTIoer.java:462)
	at oracle.jdbc.driver.T4CTTIoer.processError(T4CTTIoer.java:397)
	at oracle.jdbc.driver.T4CTTIoer.processError(T4CTTIoer.java:389)
	at oracle.jdbc.driver.T4CTTIfun.processError(T4CTTIfun.java:689)
	at oracle.jdbc.driver.T4CTTIoauthenticate.processError(T4CTTIoauthenticate.java:455)
	at oracle.jdbc.driver.T4CTTIfun.receive(T4CTTIfun.java:481)
	at oracle.jdbc.driver.T4CTTIfun.doRPC(T4CTTIfun.java:205)
	at oracle.jdbc.driver.T4CTTIoauthenticate.doOAUTH(T4CTTIoauthenticate.java:387)
	at oracle.jdbc.driver.T4CTTIoauthenticate.doOAUTH(T4CTTIoauthenticate.java:814)
	at oracle.jdbc.driver.T4CConnection.logon(T4CConnection.java:418)
	at oracle.jdbc.driver.PhysicalConnection.<init>(PhysicalConnection.java:678)
	at oracle.jdbc.driver.T4CConnection.<init>(T4CConnection.java:234)
	at oracle.jdbc.driver.T4CDriverExtension.getConnection(T4CDriverExtension.java:34)
	at oracle.jdbc.driver.OracleDriver.connect(OracleDriver.java:567)
	at oracle.odi.jdbc.datasource.DriverManagerDataSource.getConnectionFromDriver(DriverManagerDataSource.java:410)
	at oracle.odi.jdbc.datasource.DriverManagerDataSource.getConnectionFromDriver(DriverManagerDataSource.java:386)
	at oracle.odi.jdbc.datasource.DriverManagerDataSource.getConnectionFromDriver(DriverManagerDataSource.java:353)
	at oracle.odi.jdbc.datasource.DriverManagerDataSource.getConnection(DriverManagerDataSource.java:332)
	at oracle.odi.jdbc.datasource.LoginTimeoutDatasourceAdapter$ConnectionProcessor.run(LoginTimeoutDatasourceAdapter.java:217)
	at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:441)
	at java.util.concurrent.FutureTask$Sync.innerRun(FutureTask.java:303)
	at java.util.concurrent.FutureTask.run(FutureTask.java:138)
	at java.util.concurrent.ThreadPoolExecutor$Worker.runTask(ThreadPoolExecutor.java:886)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:908)
	at java.lang.Thread.run(Thread.java:662)

Found the answer after 4 days of research. Open ODI Studio, go to Topology, and expand Technologies > Oracle. Open BIAPPS_BIACOMP. On my system it contained "NULLBIACM_IO"; I changed the NULL to my correct prefix and it worked.

Now my data load gives me an error that the table W_LANGUAGES_G does not exist. At least I got further.

-

Dear all,

I am facing a problem loading Arabic data through the SIT API. When I import data from Excel into an intermediate table, the Arabic text shows up as question marks. If I load it through the SIT API, the same question marks appear on the SIT front-end screen.

Can you help me solve this problem?

Regards,

SAI

Edited by: sai on November 10, 2011 10:05

SAI,

If your database character set supports Arabic, then NLS_LANG must be set correctly on the client side so that the data is loaded in the correct format.
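A small Python illustration (not Oracle code) of the mechanism: when text passes through a character set that cannot represent Arabic, each unrepresentable character is replaced, typically by '?', which matches the symptom described above. The mapping from Oracle character set names to Python codecs below is my own approximation for illustration; in practice the fix is to set NLS_LANG on the client (for example AMERICAN_AMERICA.AR8MSWIN1256 or AMERICAN_AMERICA.AL32UTF8) so that it matches the encoding of the data the client actually sends.

```python
# Illustrative mapping of a few Oracle character set names to Python codecs.
ORACLE_TO_PYTHON = {
    "WE8MSWIN1252": "cp1252",  # Windows Western European: no Arabic letters
    "AR8MSWIN1256": "cp1256",  # Windows Arabic
    "AL32UTF8": "utf-8",       # Unicode
}


def through_charset(text, oracle_charset):
    """Simulate a conversion step: characters the target charset cannot hold become '?'."""
    codec = ORACLE_TO_PYTHON[oracle_charset]
    return text.encode(codec, errors="replace").decode(codec)


arabic = "مرحبا"
for charset in ORACLE_TO_PYTHON:
    print(charset, through_charset(arabic, charset))
```

Only the Western European character set turns the five Arabic letters into question marks; the Arabic and Unicode character sets preserve them.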

The correct NLS_LANG in a Windows Environment [ID 179133.1]

NLS_LANG Explained (How does Client/Server Character Conversion Work?) [ID 158577.1]

Which character set supports which language? [ID 62421.1]

Have you tried using a different tool to load the data?

Thank you

Hussein
