algorithms of data processing

I use the R client to connect to Oracle. My local computer has 1 GB of RAM, and the server is far more powerful. My data is huge, and I do not want to use the standard R functions such as lm. What I don't know is whether the data is loaded onto the client and processed there, or whether the work runs on the server. If the second is true (which is what I want), are there other algorithms available besides the R ones and the ORE algorithms?

Published by: Nasiri Mahdi on 23 February 2013 01:16

Nguyen,

Both approaches are supported. It really depends on what you're trying to do. For linear regression, you can try ore.lm or ore.odmGLM. If you build models partitioned on a large data set, you can try ore.groupApply with lm inside. If you have not yet done so, take a look at the examples in our 'Learning R Series' here: http://www.oracle.com/technetwork/database/options/advanced-analytics/r-enterprise/index.html

Denis


Similar Questions

-

AO synchronized with AI, with data processing

Hi all

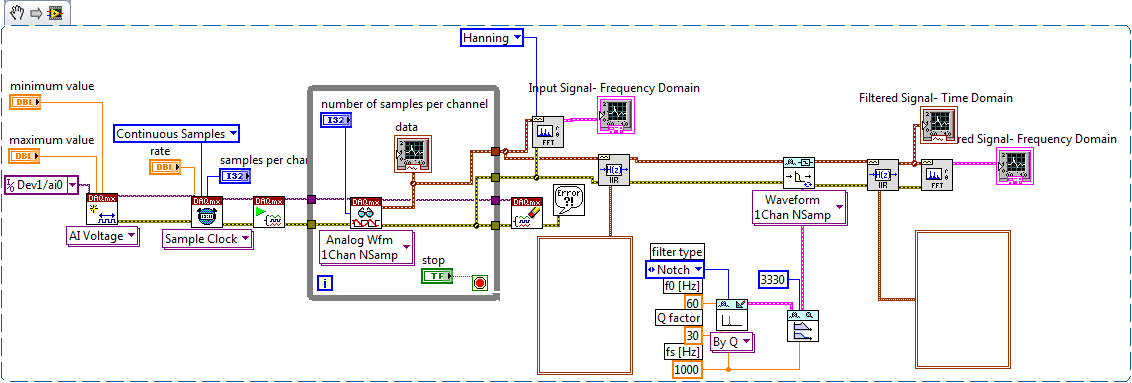

I'm building a pretty simple VI that should work in the following way:

1: Acquire data from two AI channels at a sampling rate of 2000 Hz.

2: Process the data: some time averaging, and scaling of the output signal depending on the input.

3: Write the data to both AO channels. (A button controls whether the AO output is 0 or depends on the input.)

Most important is that the delay between the actual AI and the processed AO is kept to a minimum.

Following several examples, I came up with the attached VI.

There are two problems:

1: Although the synchronization principle works without the data processing subVI in between, integrating the subVI and all the displays turns a simple test sine wave from a function generator into a signal with a lot of defects (it is not smooth). I know all the displays slow down the loop time, but they are essential for the application. Any suggestions?

2: Although it runs, it keeps giving error -209802, something about an unnamed task, which does not really make sense to me.

Thanks in advance for any help,

Mark

Mark,

I think that hardware-timed single point is appropriate for this application. However, I suspect that you will have problems running at more than tens of Hz on Windows without eventually seeing errors such as -209802, because the OS itself may decide to suspend your application for an unpredictable amount of time. I recommend that you keep the waveform graphs out of your I/O loop. I would also recommend that you slow your display loop down a little, because as it stands it will pull data from the queue and update the displays as quickly as possible. Adding logic to wait for a number of elements to be present in the queue before dequeuing and displaying the data would be reasonable. If you do this, you will also want to add a wait to that loop so that you are not polling the queue and using as much CPU as possible.
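The batching idea above can be sketched in a text language. This is only a Python analogy, not LabVIEW code: the function name `dequeue_batch` and its parameters are hypothetical, and a blocking `get` with a timeout stands in for the wait, so the display loop sleeps instead of polling.

```python
import queue


def dequeue_batch(q, batch_size, timeout):
    """Collect up to batch_size items, blocking on the queue rather than polling it.

    Returns early with whatever was collected if no new item arrives
    within `timeout` seconds.
    """
    items = []
    try:
        while len(items) < batch_size:
            # Blocks without burning CPU until an item arrives or the timeout expires.
            items.append(q.get(timeout=timeout))
    except queue.Empty:
        pass
    return items
```

The display loop would then update its graphs once per batch, which lowers the update rate without discarding any samples.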

With hardware-timed single point, DAQmx will always return the most recent sample. This means that if your loop runs slowly, you lose a few samples. If this is acceptable for your application, then receiving the -209802 error may not matter. You can use the DAQmx Real-Time property node property "Convert Late Errors to Warnings" to make it a non-fatal condition.

My final suggestion is to determine which parts of your code take the most time to execute. If you know where you spend your time, it can direct you to the places where you can optimize and remove some run time. To do this, you can try removing parts of the logic and see how that affects the loop rate you are able to maintain.

Hope that helps,

Dan

-

Action profile: help with the prepare data process

Hello

I was working with the prepare data process (in the action profile) to get the user name from Workspace. I did that successfully.

My process has many steps, in which the task is assigned to many users over its lifetime. (Image below)

I want the form to show the history of the users it passes through in the process, as below:

User 1 (initiator):

User 2:

User 3:

I do this with a set-value operation after each user submits the task (assigning the current user's value to the respective text field). But it does not work, maybe because I use the same prepare data process for each user. As a result the history is lost (maybe the form's XML data is updated each time and the old XML values are deleted). I want to fill in only the current user's details without touching the other fields.

I also tried a temporary XML variable, but it still does not work.

Please tell me how!

Thank you.

-

Afonso

Yes, since the prepare data process is short-lived, it gets the default value of the form variable (xmlPrepareData). That means the form content is empty.

The task context holds the form data, which can be used in the prepare data process.

Here is the solution:

Add a SetValue activity as the first element in the prepare data process.

Location: /process_data/xmlPrepareData

Expression: /process_data/taskContext/object/@inputDocument

Hope this works. Or you can send the LCA to [email protected]

Nith

-

Fatal error processing restoration data: 0xc000009a hiberfil.sys

Recently, I restored my Compaq Presario CQ56-219WM laptop to its original factory condition.

When I put my computer in hibernation mode and back I get a screen that says:

Status: 0xc000009a

Info: A fatal error occurred processing the restoration data

File: hiberfil.sys

I've done a search on the internet and it seems to be associated with Windows 7.

My OS is Windows 7 64-bit SP1.

Delete hiberfil.sys on drive C (it may be hidden; you may have to show hidden files using Folder Options).

After deleting it, restart the PC. After using it for a while, hibernate the PC and see whether it still generates this error when resuming.

If that does not fix it, do another factory restore.

-

Hello

I have a small question about removing the old process data gathered by the Investigation of feedback process cartridge.

I enabled the retention policies after a long period of GPI data collection, and according to https://support.quest.com/SolutionDetail.aspx?id=SOL52431 (Cause), old data will not be deleted if you enable purging after the data has already been collected, as with GPI.

I could delete the data directly from the data management dashboard, but I'm not sure whether that is safe.

Is there another, better way to do this?

Cheers!

Erik Alm

Erik,

Have you tried the 'delete expired data' wizard?

You can find it under VMware Environment - Administration tab - Review expired data.

/ Mattias

-

How to determine tables of tracking, data, processes and relationships of Table requirements

I need to determine four things from an existing APEX application:

1. the process flow

2. the necessary data

3. the tables used

4. all the relationships that exist between the tables

Can someone help me, please? I have worked on it for too long!

For #3, you can use the Database Object Dependencies report under Utilities to determine which tables and views are used by your application...

For #4, you can go into SQL Developer and generate a data model, which should show you the relationships between the tables (if they are enabled).

The first two will involve actually evaluating the application itself...

Thank you

Tony Miller

Software LuvMuffin

Ruckersville, VA

-

Custom process to update a date

Hello everyone,

I have a page with check boxes where the user can select a record and update it by pressing a button.

I have three processes, and each of them updates one field (a date field) when its specific button is pressed. The three processes are:

declare

begin

for i in 1..apex_application.g_f01.count loop

UPDATE REC_RET_ADD_RECORD

SET DATE_ADMIN_APPROVED = :P8_DATE_ADMIN_APPROVED

WHERE REC_RET_ID = APEX_APPLICATION.G_F01(i);

end loop;

commit;

end;

Condition: When you press the button (submit) Admin

The second process:

declare

begin

for i in 1..apex_application.g_f01.count loop

UPDATE REC_RET_ADD_RECORD

SET DATE_COMMITTEE_APPROVED = :P8_DATE_COMMITTEE_APPROVED

WHERE REC_RET_ID = APEX_APPLICATION.G_F01(i);

end loop;

commit;

end;

Condition: When you press the button (submit) Committee

The third process:

declare

begin

for i in 1..apex_application.g_f01.count loop

UPDATE REC_RET_ADD_RECORD

SET DATE_OHS_APPROVED = :P8_DATE_OHS_APPROVED

WHERE REC_RET_ID = APEX_APPLICATION.G_F01(i);

end loop;

commit;

end;

Condition: When you press the button (submit) OSH

Now the problem is this: when the user selects a row, picks the date for the Admin field, and presses the Admin button, the system stores the date. But if the user then selects the same row and updates the OSH date, the system clears the date in the Admin field and enters the OSH date in the OSH field, and vice versa.

I don't know why this is happening. Could you please help me. I appreciate it.

As you say, you have three buttons. Get the names of all three buttons. (I mean the button names, which are unique per page, not the labels. Do not confuse the labels with the button names.)

Go to each of your processes and remove the button condition.

Go to the Condition block and select Request = Expression 1.

In each respective process, enter the exact button name.

-

VeriStand FPGA personality "Is Late?" and latent FPGA data processing

I use a 7853 FPGA (only) in a SMU 1071 chassis with an 8135 controller and run VeriStand 2013 SP1. At the end of my test, I want to verify the integrity of the test, which includes checking that the FPGA interface was never late.

I first thought of exposing the 'Is Late?' terminal as a channel, but then I noticed it isn't really a count, it's just a flag. In addition, it seems that this flag is not latched; it is reported per iteration of the interface loop. This makes me think that I should set a VeriStand alarm on the Is Late? channel. Am I correct, and if not, how does NI intend me to use the Is Late? terminal?

Since the DMA nodes in the FPGA never time out, there is no sense in watching the Timed Out? terminals on the FPGA. But the effect of a timeout will show up in the Is Late? terminal. I'm tempted to change Is Late? to a U64 count of the number of times the late flag was set, and synchronize it to the host VI. Is there a reason not to do this?

How does VeriStand handle a late FPGA? If the RT side of the DMA buffer became fuller, data from the FPGA would be more latent, which could lead to system instability. Hopefully the VeriStand engine purges the latent data, but I don't see anything in the FPGA interface that would facilitate this.

Thanks for your help,

Steve K

Hey Steve,

If the NIVS PCL reads this flag as true, it increments the HP Count system channel.

On the question of FIFO depth: the PCL always expects to read and write a fixed number of packets each iteration (as defined by the XML), and the FPGA always reads and writes the same number of packets each comm loop iteration, and since the timeout is set to -1... orders may not be combined. The packets act as a handshake.

-

FastFormula to retrieve certain data processing component

Hello

I want to use a certain salary element/component as of a given date. For example, I want the base pay on a certain date, and this date is stored in a local variable or a database item. None of the Oracle FastFormula functions I tried achieve this. How can I do this in a FastFormula? I will then use this pay component for some other processing in the same FastFormula.

Thank you

Do not open duplicate threads:

How to use a dimension of balance in a FastFormula

As mentioned in the previous thread, you can get the values for the current month using DBIs.

Thus, a Gross_ASG_PTD, the ASGQTD, the ASGYTD will always give you data based on the current payroll run. You can get data for a given month from run_result_values... as mentioned in the code in the previous thread.

Cheers,

Vigneswar

-

How to read the data processed in a For Loop on each iteration of the loop?

Hello...

I need to display numbers such as 1, 2, 3... continuously from the loop on each iteration (i.e. outside the loop, using wires). I generated the numbers 1 2 3... using a feedback node... I can only read the final value after the last iteration of the For Loop.

I connected an indicator to the For Loop. This indicator displays the value 4 if the loop count is 4. I couldn't display 1 2 3 4 in the indicator at each iteration.

I am not able to read the value of the variable on each iteration of the loop. Please help me in this regard...

Thanks in advance...

In fact, I'm programming the multiplication of two 2D arrays.

I need to select particular code to perform this action using a Case structure, for which I need to feed the select values 1, 2, 3 to the case selector so that I can finish the task. Do you get my point?

Thanks for the reply

-

How to export a single DQ job along with its related processes and data stores

Hello

I'm new to OEDQ. Maybe this is a basic question, but I am not able to find any way. Please help.

I use OEDQ version 9, installed locally on my system. I prepared a job with a CSV file as the source and used some check transformations (data type check, length check) in my process (I have also enabled the publish-to-dashboard option for this process) to prepare the job.

Then I exported the job as a .dxi by right-clicking on the job name and selecting Package.

But when I import this .dxi package on another machine, it cannot find the related processes, so I am unable to run the job.

Is there a way in OEDQ to export a job together with its connections and related processes?

Kind regards

Samira

It is normally easiest to package the whole project, but for any object you can filter the project first and then package it. To do this for a job, right-click on the job and select Dependency Filter - Items used by selected item. Then package the filtered project; this will package up the job and all of its dependencies (data stores, data interfaces (which you won't have in 9.0), snapshots, staged data, reference data, processes, etc.). The same works for other objects such as processes.

I would note that there have been three major versions since 9.0, so you are encouraged to use a newer version if possible.

-

Import ASCII dates with different format

Hello

I need to import data from an ASCII CSV file.

The problem is the date format in this particular data file (.csv).

The date format for days < 10 and months from Jan to Sep is:

YYYY/_M/_D

(the character '_' is a single space, not a literal underscore)

The date format for days >= 10 and months from Jan to Sep is:

_M/DD/YYYY

The date format for days >= 10 and months from Oct to Dec is:

YYYY/MM/DD

The date format for days < 10 and months from Oct to Dec is:

YYYY/MM/_D

Possible solution:

I have already created a routine that recognizes the date in the header and uses one of the 4 filters (.stp) for the data processing. This works.

Next problem:

The days are split across 2 files: one file from 09:00 to 21:00 of one day, and the other from 21:00 to 09:00 of the following day. This way I lose data when the day changes from the 9th to the 10th of each month, and when the month changes from Sep to Oct and from Dec to Jan.

I just need to delete the awkward blank space before the change to 2-digit dates, but I do not know how to deal with it before the CSV is imported into DIAdem (10.2).
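One way to remove the padding before the import is to preprocess the date strings outside DIAdem. The following is a minimal sketch in Python, not DIAdem script; the function name `normalize_date` is hypothetical, and it assumes the fields are always separated by '/' and padded only with spaces. It strips the spaces and zero-pads every field to two digits, so all four variants end up in one fixed-width format that a single filter can read.

```python
def normalize_date(raw: str) -> str:
    """Strip space padding from each '/'-separated field and zero-pad it to 2 digits.

    Four-digit years are left unchanged because zfill never truncates.
    """
    parts = [part.strip() for part in raw.split("/")]
    return "/".join(part.zfill(2) for part in parts)
```

Applied to every date field of the CSV before loading, this would make the 9th-to-10th and Sep-to-Oct transitions look the same as any other day.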

My other idea is to recognize this kind of file and load it twice (once with each filter), but then I need to position the import exactly so that there is no problem with NoValue data. (Until now I have made things complicated over a simple blank-space mistake.)

I hope that you have ideas...

Thanks in advance...

I'm not sure I understand; the steps are not clear. Here's what I think you want to do:

(1) Load data from different files into DIAdem.

To do this, use a DataFileLoad("E:\Customer_Requests\caracasnet\log(111231).csv","caracasnet_log","Load") call.

You call DataFileLoad for each of the files.

(2) Concatenate the groups.

This should be no different from what you've done up to now.

(3) Store the data in a file (TDM).

To do this, call DataFileSave(...).

Let me know if you have any other questions...

-

Process synchronization strategy

Trying not to reinvent the wheel...

I started to rethink an older data acquisition/processing application using as much parallelism as possible.

UI / data acquisition / data saving / computation / data display are all handled in separate loops or VIs that communicate and exchange data via queues or notifiers. It works great so far.

Then I realized that the way I provide the processed data from the data processing (DP) task to the display (D) tasks wasn't going to work in all circumstances.

I use a notifier, so that several D tasks can access the data and there is not much memory cost (no queue) if the D tasks cannot keep up. If the data is generated faster than the D tasks can get it, they miss some but get a chance to catch up later, or at least get some data from time to time. That's what I originally had in mind.

Then I realized that sometimes I want to display all the data (for example in offline mode). This architecture will not work for that. If I keep the notifier approach, I need a way to tell the DP task to send data to the notifier only when all D tasks are done with their previous data segment.

What is the best way to achieve this? I need to be able to dynamically change the number of clients (D tasks), so I thought a Rendezvous could work. But then, looking at the example "Wait for all Notifications Demo.vi", it seemed that this might be a more natural approach, though a bit tedious to handle since notifiers would be created (and destroyed) dynamically in separate VIs...

The point is that I want DP to know how many clients it is supposed to wait for (at any time) before sending a new notification (with the new data).

Thanks for your comments.

X.

In any case, since LV 9 SP1 crashed and threw away all my work today (what about auto-save every 5 minutes? Does it not work on the 15th of each month or something?), I'll take a break and describe the solution I came up with:

- I created a process parallel to the DP VI, which I call "Consumer Response Monitor" (CRM). This process listens on a queue (waiting for messages to arrive using Dequeue, so no waste of resources) which is populated by the DP and the Ds.

- DP notifies CRM that new data has been sent (and thus that it should turn its attention to the fate of the new data).

- The Ds first register with CRM (and unregister when they quit), and then tell CRM when they have received data (and who they are).

- CRM monitors the number of registered Ds that have received the new data and tells DP when all of them have (or when there is a timeout). It does this using a different queue.

- If I am in 'live mode' and don't care whether the Ds have time to process all the data, I just ignore the traffic with CRM.
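For readers who think in text languages, the CRM idea can be sketched roughly as follows. This is a Python analogy under stated assumptions, not the LabVIEW implementation described above: the class and method names are hypothetical, and a condition variable replaces the two queues, so only the register / acknowledge / wait-with-timeout logic is illustrated.

```python
import threading


class ConsumerResponseMonitor:
    """Tracks which registered consumers (D tasks) have picked up the latest data."""

    def __init__(self):
        self._cond = threading.Condition()
        self._registered = set()
        self._pending = set()

    def register(self, name):
        with self._cond:
            self._registered.add(name)

    def unregister(self, name):
        with self._cond:
            self._registered.discard(name)
            self._pending.discard(name)  # a consumer that left cannot block the producer
            self._cond.notify_all()

    def new_data_sent(self):
        # Called by the producer (DP): every registered consumer now owes an acknowledgement.
        with self._cond:
            self._pending = set(self._registered)

    def acknowledge(self, name):
        # Called by a consumer once it has received the new data.
        with self._cond:
            self._pending.discard(name)
            self._cond.notify_all()

    def wait_all(self, timeout=None):
        # Returns True when all consumers acknowledged, False if the timeout expired first.
        with self._cond:
            return self._cond.wait_for(lambda: not self._pending, timeout)
```

In this sketch the producer would call `new_data_sent()` right after publishing and `wait_all(timeout)` before publishing again; in live mode it would simply skip both calls.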

-

Continuous data acquisition and real-time analysis

Hi all

This is a VI for the continuous acquisition of an ECG signal. As far as I understand, the DAQmx Read VI (analog) must be placed inside a while loop so that it can acquire data continuously. I need to perform filtering and wave analysis in real time. The way I implemented the block diagram means that the data stays in the while loop, and AFAIK the data is passed out through the data tunnels only once the loop finishes executing, so this clearly isn't real-time data processing.

The only way I can think of to fix this is to place another loop around the filtering-stage VIs and use some sort of shift register to pass the data into the second while loop. My question is whether or not this would introduce some sort of delay, and whether or not it would count as real-time processing. Would it be better to place all the VIs (acquisition and filtering) inside one while loop, or is that bad programming practice? Another feature I need is saving the data to a file, but only when the user wants to.

Any advice would be appreciated.

You have two options:

- A. As you said, you can place the processing code inside your current while loop. If you're smart about it, you won't need to put another while loop inside your existing one (nested loops). But it depends entirely on the type of processing that you do.

- B. Create a second, parallel loop to perform the processing. This separates the two processes so that the processing does not hinder your acquisition. For more information, see here .

Your choice really depends on the processing you plan to perform. If it is processor-intensive, it could introduce delays, as you said.

I would recommend that you start by putting everything in the first loop and see if your DAQ buffer overruns (you can monitor the buffer backlog during operation). If it does, you should decouple the processing into separate loops.
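Option B is the classic producer/consumer pattern. As a rough text-language illustration (Python rather than LabVIEW; the function names, the `None` end marker, and the averaging step are all assumptions made for the example), the acquisition loop only enqueues blocks while a second loop dequeues and processes them:

```python
import queue
import threading


def acquire(out_q, blocks):
    """Producer loop: hands each acquired block to the queue and does nothing else."""
    for block in blocks:
        out_q.put(block)
    out_q.put(None)  # sentinel: tells the consumer that acquisition has stopped


def process(in_q, results):
    """Consumer loop: waits for blocks and processes them (here, a simple mean)."""
    while True:
        block = in_q.get()
        if block is None:
            break
        results.append(sum(block) / len(block))


def run_pipeline(blocks):
    q = queue.Queue()
    results = []
    consumer = threading.Thread(target=process, args=(q, results))
    consumer.start()
    acquire(q, blocks)  # acquisition never waits for the processing to finish
    consumer.join()
    return results
```

The queue absorbs short bursts where processing is slower than acquisition; if it keeps growing, the processing is too slow for the data rate regardless of how the loops are arranged.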

As for whether or not 'it would be considered real-time processing', that is a tricky question. Most people on these forums will say that your system is NEVER real time, because you're using a desktop PC to perform the processing (note: I assume the code runs on a laptop or desktop?). It is not a deterministic system, and your data is already "old" by the time it leaves your DAQ buffer. But the answer to your question really depends on how you define "real-time processing". Many people would define it as the processing of 'live' data... but what is "live data"?

-

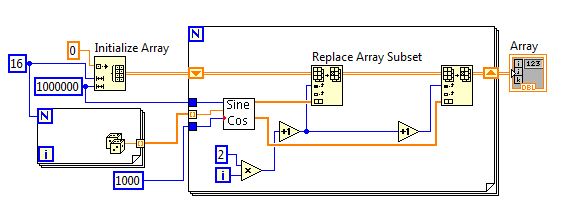

Optimize the creation of a 2D array for memory use and parallel processing

I have a data processing application that requires generating a large 2D array of sin and cos values that must be updated constantly. It is the bottleneck of my program in terms of speed and memory use, and I want to optimize it as much as possible. This is my current code:

I tried to parallelize the For Loop iterations to take advantage of the multi-core processor, but with shift registers I can't. Also, I read that with In Place Element structures I can reduce memory usage, but I don't know how to do this with a 2D array. Can this code be optimized to improve memory use and speed?
