Average pace calculation

How is the average pace calculated for outdoor workouts?

I used my watch for the third time on my walk. I walked a total of 34 minutes and 1.34 miles. The average pace it shows is 25'25", which is completely wrong.

For another walk, 1 mile in 20 minutes, it shows an average pace of 22'08".

Same for my indoor run; the pace never matches my treadmill.

I don't understand how this calculation is done. Can someone please explain?

Thank you

Hello

"Same for my indoor run; the pace never matches my treadmill.

I don't understand how this calculation is done. Can someone please explain?"

Get the most accurate measurements

Your Apple Watch estimates your measurements during workouts based on the information that you entered during setup, but you can improve the accuracy with these tips:

- Wear your Apple Watch regularly to improve the accuracy of the Workout and Activity apps.

- Choose the workout that best matches what you are doing. For example, if you run on a treadmill, choose Indoor Run.

- For GPS accuracy when you walk, run, or cycle outdoors, select the matching workout and bring your iPhone along. For indoor and outdoor walks and runs, this also helps calibrate the accelerometer in Apple Watch for times when you don't have GPS.

- To get the most accurate heart rate measurement when you use the Workout app, make sure your Apple Watch fits snugly on top of your wrist. The heart rate sensor should stay close to your skin.

- You can also use another heart rate monitor, such as a chest strap. Learn how to pair a heart rate monitor with your Apple Watch.

- Learn more about the Apple Watch heart rate sensor, its accuracy, and its limitations.

For better GPS accuracy, you can also calibrate your Apple Watch.
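The thread never spells out the arithmetic, so here is a minimal sketch, assuming average pace is simply elapsed time divided by distance. The function name `average_pace` is made up for illustration, and the watch's internal algorithm is not documented in this thread.

```python
def average_pace(minutes: float, miles: float) -> str:
    """Return average pace per mile as M'SS" (elapsed time / distance)."""
    seconds_per_mile = round(minutes * 60 / miles)
    whole_minutes, seconds = divmod(seconds_per_mile, 60)
    return f"{whole_minutes}'{seconds:02d}\""


print(average_pace(34, 1.34))  # 25'22"
print(average_pace(20, 1.0))   # 20'00"
```

By that definition the first walk works out to about 25'22" per mile, close to the 25'25" the watch showed. The second would be 20'00", so a reading of 22'08" suggests the watch recorded a different distance or elapsed time than the figures quoted.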

Use the Workout app on your Apple Watch

Tags: Apple Watch

Similar Questions

-

Create a single average of a calculated date-difference column

Hello

I am creating a single field at the bottom of a report to calculate the average number of days, based on the difference between two date fields, as in the example below. I understand that I need a subquery, but I can't seem to build the SQL correctly. The table below is an example of what I'm trying to build, where AVERAGE = 7.5. The SQL that I use to create the cycle-time field that I'm trying to average is: ROUND(GLOGOWNER.INVOICE.DATE_RECEIVED - GLOGOWNER.SHIPMENT_STOP.ACTUAL_DEPARTURE, 0) AS "Cycle Time in Days".

SELECT ROUND(GLOGOWNER.INVOICE.DATE_RECEIVED - GLOGOWNER.SHIPMENT_STOP.ACTUAL_DEPARTURE, 0) AS "Cycle Time in Days"
FROM GLOGOWNER.SHIPMENT
INNER JOIN GLOGOWNER.INVOICE_SHIPMENT
  ON GLOGOWNER.SHIPMENT.SHIPMENT_GID = GLOGOWNER.INVOICE_SHIPMENT.SHIPMENT_GID
INNER JOIN GLOGOWNER.INVOICE
  ON GLOGOWNER.INVOICE.INVOICE_GID = GLOGOWNER.INVOICE_SHIPMENT.INVOICE_GID
INNER JOIN GLOGOWNER.SHIPMENT_STOP
  ON GLOGOWNER.SHIPMENT.SHIPMENT_GID = GLOGOWNER.SHIPMENT_STOP.SHIPMENT_GID
INNER JOIN GLOGOWNER.SHIPMENT_STOP SHIPMENT_STOP1
  ON GLOGOWNER.SHIPMENT.SHIPMENT_GID = SHIPMENT_STOP1.SHIPMENT_GID
WHERE GLOGOWNER.SHIPMENT.DOMAIN_NAME LIKE 'UPS/CP/%B'
  AND GLOGOWNER.SHIPMENT_STOP.STOP_NUM = 1
  AND SHIPMENT_STOP1.STOP_NUM > 1

ACTUAL_DEPARTURE  DATE_RECEIVED  Cycle Time in Days
01/07/2014        14/07/2014     13
04/07/2014        06/07/2014     2
01/08/2014        11/08/2014     10
02/08/2014        07/08/2014     5
AVERAGE                          7.5

Thank you very much for the help.

Kind regards

-Adam

Add the ROLLUP at the end of the GROUP BY, NOT in the SELECT list.

SELECT Glogowner.Shipment.Domain_Name AS Domain
     , SUBSTR(Glogowner.Shipment.Servprov_Gid, -4) AS Scac
     , Glogowner.Shipment.Transport_Mode_Gid AS "Mode"
     , CASE
         WHEN Ir.Insert_Date <= I.Insert_Date THEN ...
         WHEN I.Invoice_Source = 'I' THEN 'EDI'
         WHEN I.Invoice_Source = 'G' THEN 'debit'
         WHEN I.Invoice_Source = 'M' THEN 'manual'
       END AS Source
     , Glogowner.Shipment.Shipment_Xid AS "Shipment ID"
     , Glogowner.Shipment_Stop.Actual_Departure AS "Delivery Date"
     , Shipment_Stop1.Actual_Arrival AS "Delivery Date"
     , I.Invoice_Number AS "Invoice Number"
     , I.Invoice_Date AS "Invoice Date"
     , LEAST(I.Insert_Date, Ir.Insert_Date) AS "Invoice Receipt Date"
     , ROUND(I.Date_Received - Glogowner.Shipment_Stop.Actual_Departure, 0) AS "Cycle Time in Days"
FROM Invoice I
INNER JOIN Invoice_Refnum Ir ON I.Invoice_Gid = Ir.Invoice_Gid
INNER JOIN Glogowner.Invoice_Shipment
  ON I.Invoice_Gid = Glogowner.Invoice_Shipment.Invoice_Gid
INNER JOIN Glogowner.Shipment
  ON Glogowner.Shipment.Shipment_Gid = Glogowner.Invoice_Shipment.Shipment_Gid
INNER JOIN Glogowner.Shipment_Stop
  ON Glogowner.Shipment.Shipment_Gid = Glogowner.Shipment_Stop.Shipment_Gid
INNER JOIN Glogowner.Shipment_Stop Shipment_Stop1
  ON Glogowner.Shipment.Shipment_Gid = Shipment_Stop1.Shipment_Gid
WHERE Glogowner.Shipment_Stop.Actual_Departure IS NOT NULL
  AND Shipment_Stop1.Actual_Arrival IS NOT NULL
  AND Ir.Invoice_Refnum_Value = 'ACS'
  AND Ir.Invoice_Refnum_Qual_Gid = 'SOURCE'
  AND I.Invoice_Type = 'I'
  AND Glogowner.Shipment_Stop.Stop_Num = 1
  AND Shipment_Stop1.Stop_Num > 1
GROUP BY ROLLUP (AVG(ROUND(I.Date_Received - Glogowner.Shipment_Stop.Actual_Departure, 0)))
ORDER BY I.Invoice_Gid;
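Independently of the SQL, the figure the report should end up with can be checked by hand. The sketch below uses the four sample rows from the question, reading the dates as dd/mm/yyyy (an assumption based on the value 14/07/2014), and reproduces the expected average of 7.5 days.

```python
from datetime import date

# (ACTUAL_DEPARTURE, DATE_RECEIVED) pairs from the sample table, read as dd/mm/yyyy
rows = [
    (date(2014, 7, 1), date(2014, 7, 14)),
    (date(2014, 7, 4), date(2014, 7, 6)),
    (date(2014, 8, 1), date(2014, 8, 11)),
    (date(2014, 8, 2), date(2014, 8, 7)),
]

# Cycle time per row, then a single average over all rows
cycle_days = [(received - departed).days for departed, received in rows]
average = sum(cycle_days) / len(cycle_days)

print(cycle_days, average)  # [13, 2, 10, 5] 7.5
```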

-

Monthly average of daily figures

I have a basic cube with the following dimension configuration:

Period (time dimension, with month and day members)

Year (members are the year numbers).

Datafacts (accounts dimension) that includes only one member.

How can I make the Period dimension average the daily figures rather than sum them?

Example (M - month, D - Day):

M1 - D1 = 10

M1 - D2 = 20

I need the M1 figure to be 15 instead of 30.

Also, I guess that because I have my years as a separate dimension, I have to do something fancy with that as well?

In the outline editor, make sure that your accounts dimension is tagged as type Accounts. Then you can select the member and edit its properties. There will be an option for Time Balance. Set it to Average. You can also select whether to skip missing and/or zero values in the average.

For this to work, your Period dimension must be tagged as a Time dimension.
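To make the effect of the Average setting concrete, here is a minimal sketch of the arithmetic only; it is not Essbase code. The function name and flags are invented for illustration, and treating a missing value as 0 when it is not skipped is an assumption of this sketch.

```python
def time_balance_average(daily_values, skip_missing=True, skip_zero=False):
    """Average the child (daily) values of a period instead of summing them.

    None stands for a missing value. When missing values are not skipped,
    this sketch counts them as 0 (an assumption, not documented behaviour).
    """
    kept = []
    for value in daily_values:
        if value is None and skip_missing:
            continue
        if value == 0 and skip_zero:
            continue
        kept.append(0 if value is None else value)
    return sum(kept) / len(kept) if kept else None


print(time_balance_average([10, 20]))        # 15.0 (M1 from the example)
print(time_balance_average([10, None, 20]))  # 15.0 (missing day skipped)
```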

-

Excluding a value from an average calculation

Hello Adobe people,

I am trying to exclude the "NA" value from the 10 drop-downs that are used to fill an average value (an average-rating text box) on a form I created. I used the script from another thread on this exact topic, but modified it to fit my needs. I think this is a very quick fix. For some reason, I don't get the average of all 10 drop-downs in my text box. Can someone check this script and see what I might be missing? The text box displays '0' because of the line event.value = 0;

What am I missing?

Here is the code:

// Average of the non-NA values
var aFieldNames = new Array("Dropdown1", "Dropdown2", "Dropdown3", "Dropdown4", "Dropdown5", "Dropdown6", "Dropdown 7", "Dropdown8", "Dropdown9", "Dropdown10");
// counter for the non-NA values
var nCount = 0;
// sum of the non-NA values
var nSum = 0;
// default result if no average is calculated
event.value = 0;
// process the array of field names
for (var i = 0; i < aFieldNames.length; i++) {
  if (this.getField(aFieldNames[i]).valueAsString != "NA") {
    // field does not have a value of "NA"
    nCount++; // increment the counter
    nSum += Number(this.getField(aFieldNames[i]).value); // add the value to the sum
  } // end of value not NA
} // end of loop processing one field
// calculate the average
if (nCount != 0) {
  // non-zero divisor, so we can calculate the average
  event.value = nSum / nCount;
}

I noticed that there is 'Dropdown 7' as opposed to 'Dropdown7', which is the pattern the other field names follow. Could this be it?

-

How to calculate the daily average time from the Start_Time and End_Time fields

I am using Oracle 11g on Red Hat Linux. I have to calculate the daily average time of multiple transactions for each day. The following query shows the difference between the start_time and end_time fields.

SELECT TRIP_LOAD_STARTED AS START_TIME, TRIP_LOAD_ENDED AS END_TIME,
       CEIL((TRIP_LOAD_EDDT - TRIP_LOAD_STDT) * 1440) "Time Diff bw Start and End"
FROM GATE2GATE_ACTIVITY_VW
WHERE TRIP_LOAD_STARTED >= '01-FEB-14'
  AND TRIP_LOAD_ENDED < '03-FEB-14'
ORDER BY TRIP_LOAD_STARTED;

The query above gives me the following result:

START_TIME  END_TIME   Time Diff bw Start and End
01-FEB-14   01-FEB-14  18
01-FEB-14   01-FEB-14  18
01-FEB-14   01-FEB-14  18
01-FEB-14   01-FEB-14  18
01-FEB-14   01-FEB-14  18
01-FEB-14   01-FEB-14  18
01-FEB-14   01-FEB-14  12
02-FEB-14   02-FEB-14  29
02-FEB-14   02-FEB-14  29
02-FEB-14   02-FEB-14  29
02-FEB-14   02-FEB-14  29
02-FEB-14   02-FEB-14  19
02-FEB-14   02-FEB-14  19
02-FEB-14   02-FEB-14  19

It shows two dates, and I want the average duration in minutes for each date, grouped by the start date.

I use the following query, which gives me a single average over the two days in the WHERE clause:

SELECT FLOOR(AVG((TRIP_LOAD_STARTED - TRIP_LOAD_ENDED) * 1440)) "Average Time in Minutes"
FROM gate2gate_activity_vw
WHERE TRIP_LOAD_STDT >= '01-FEB-14'
  AND TRIP_LOAD_STDT < '03-FEB-14';

Average Time in Minutes
29

Can someone help me calculate a daily average of the time between start and end?

Kind regards

mhamidch

Is this what you want?

SELECT TRUNC(TRIP_LOAD_STARTED), TRUNC(TRIP_LOAD_ENDED),
       FLOOR(AVG(CEIL((TRIP_LOAD_ENDED - TRIP_LOAD_STARTED) * 1440))) Diff
FROM GATE2GATE_ACTIVITY_VW
WHERE TRIP_LOAD_STARTED >= to_date('01/FEB/2014','dd/MON/YYYY')
AND TRIP_LOAD_ENDED<>
GROUP BY TRUNC(TRIP_LOAD_STARTED), TRUNC(TRIP_LOAD_ENDED)

Always post example CREATE TABLE and INSERT statements so the results can be verified, and use the to_date function with an explicit date format.
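As a cross-check of what a per-day grouping should return, here is a minimal sketch that groups the minute differences from the question's output by start date and takes the floor of each day's mean. The ISO date strings are just labels for the two days shown.

```python
from collections import defaultdict
from math import floor

# (start date, difference in minutes) taken from the result listed in the question
rows = (
    [("2014-02-01", 18)] * 6
    + [("2014-02-01", 12)]
    + [("2014-02-02", 29)] * 4
    + [("2014-02-02", 19)] * 3
)

# Group by day, then average each group (the equivalent of GROUP BY TRUNC(start))
by_day = defaultdict(list)
for day, minutes in rows:
    by_day[day].append(minutes)

daily_average = {day: floor(sum(values) / len(values)) for day, values in by_day.items()}
print(daily_average)  # {'2014-02-01': 17, '2014-02-02': 24}
```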

Post edited by: Elya

-

[8i] how to calculate a moving average?

I am trying to calculate a moving average over a specific period of time, but I can't quite figure out how to do this. For this particular problem, I am also stuck on an 8i database.

Some examples of data for the problem:

CREATE TABLE my_data
( order_no    CHAR(10)
, seq_nbr     CHAR(4)
, area_id     CHAR(4)
, start_date  DATE
, unit_time   NUMBER(7,2)
);

INSERT INTO my_data VALUES ('0000567890','0010','A001',TO_DATE('05/01/2000','mm/dd/yyyy'),0.34);
INSERT INTO my_data VALUES ('0000567890','0020','A001',TO_DATE('05/02/2000','mm/dd/yyyy'),0.78);
INSERT INTO my_data VALUES ('0000567890','0030','A002',TO_DATE('05/03/2000','mm/dd/yyyy'),0.91);
INSERT INTO my_data VALUES ('0000567890','0040','A003',TO_DATE('05/03/2000','mm/dd/yyyy'),0.27);
INSERT INTO my_data VALUES ('0000123456','0010','A001',TO_DATE('04/01/2001','mm/dd/yyyy'),0.39);
INSERT INTO my_data VALUES ('0000123456','0020','A001',TO_DATE('04/02/2001','mm/dd/yyyy'),0.98);
INSERT INTO my_data VALUES ('0000123456','0030','A002',TO_DATE('04/03/2001','mm/dd/yyyy'),0.77);
INSERT INTO my_data VALUES ('0000123456','0040','A003',TO_DATE('04/03/2001','mm/dd/yyyy'),0.28);
INSERT INTO my_data VALUES ('0000123123','0010','A001',TO_DATE('12/01/2001','mm/dd/yyyy'),0.31);
INSERT INTO my_data VALUES ('0000123123','0020','A001',TO_DATE('12/02/2001','mm/dd/yyyy'),0.86);
INSERT INTO my_data VALUES ('0000123123','0030','A002',TO_DATE('12/03/2001','mm/dd/yyyy'),0.82);
INSERT INTO my_data VALUES ('0000123123','0040','A003',TO_DATE('12/03/2001','mm/dd/yyyy'),0.23);
INSERT INTO my_data VALUES ('0000111111','0010','A001',TO_DATE('06/01/2002','mm/dd/yyyy'),0.29);
INSERT INTO my_data VALUES ('0000111111','0020','A001',TO_DATE('06/02/2002','mm/dd/yyyy'),0.84);
INSERT INTO my_data VALUES ('0000111111','0030','A002',TO_DATE('06/03/2002','mm/dd/yyyy'),0.78);
INSERT INTO my_data VALUES ('0000111111','0040','A003',TO_DATE('06/03/2002','mm/dd/yyyy'),0.26);
INSERT INTO my_data VALUES ('0000654321','0010','A001',TO_DATE('05/01/2003','mm/dd/yyyy'),0.28);
INSERT INTO my_data VALUES ('0000654321','0020','A001',TO_DATE('05/02/2003','mm/dd/yyyy'),0.88);
INSERT INTO my_data VALUES ('0000654321','0030','A002',TO_DATE('05/03/2003','mm/dd/yyyy'),0.75);
INSERT INTO my_data VALUES ('0000654321','0040','A003',TO_DATE('05/03/2003','mm/dd/yyyy'),0.25);

This is a sample of the actual data, which has around 50K rows and is the result of a query, not a table. In addition, the actual data also has a part number attached to each order, and the end result will be partitioned by part number and area. This sample basically represents what you might see for a single part number. Also, the time spacing of these records is not predictable. You might have a single year that has 20 orders, and another year (or years) that has none.

Here is where I am now with the problem. For example, suppose I want to calculate a moving average over 2 years (the average for the 2 years preceding the date of the current row). For my actual data, I will probably want to be able to change the period of time (in separate queries) over which the moving average is calculated.

I thought that using the analytic function AVG with a windowing clause might be the way to go, but I don't know exactly how to use the windowing clause. I may even be completely misunderstanding what it is for, but hopefully this still shows what I'm after.

SELECT area_id
,      AVG(tot_area_unit_hrs) OVER ( PARTITION BY area_id
                                     ORDER BY min_start
                                     ROWS BETWEEN --2 years prior to min_start of current row
                                          AND CURRENT ROW
                                   )
,      --something to indicate the time period the average is over
       AS time_period
FROM   ( SELECT order_no
         ,      area_id
         ,      MIN(start_date) AS min_start
         ,      SUM(unit_time)  AS tot_area_unit_hrs
         FROM   my_data
         GROUP BY order_no
         ,        area_id
       )
ORDER BY area_id
,        time_period

As you can see from the query above, I want to calculate the sum of unit_time for each order/area_id combination, then take a moving average of that total time. (I want the average total time that a product spends in each area_id per order.)

I want to see results along these lines. I don't really care how the time period is identified, as long as I can sort by it chronologically and it tells me what period of time it covers. The period_start and period_end columns in the sample results below are just one way I thought of doing this.

area_id  period_start  period_end  avg_unit_time
----------------------------------------------------
A001     4/30/1998     5/1/2000    1.120
A001     3/31/1999     4/1/2001    1.245
A001     11/30/1999    12/1/2001   1.220
A001     5/31/2000     6/1/2002    1.223
A001     4/30/2001     5/1/2003    1.153
A002     5/2/1998      5/3/2000    0.910
A002     4/2/1999      4/3/2001    0.840
A002     12/2/1999     12/3/2001   0.833
A002     6/2/2000      6/3/2002    0.790
A002     5/2/2001      5/3/2003    0.783
A003     5/2/1998      5/3/2000    0.270
A003     4/2/1999      4/3/2001    0.275
A003     12/2/1999     12/3/2001   0.260
A003     6/2/2000      6/3/2002    0.257
A003     5/2/2001      5/3/2003    0.247

Any suggestions?

Hello

You're pretty close. You want a window based on RANGE, not ROWS.

ROWS BETWEEN ... means that you know exactly how many rows will be in the window, and you don't care what range of values that represents.

RANGE BETWEEN ... means that you know the exact range of ORDER BY values to include in the window, which could mean any number of rows.

When you say "RANGE BETWEEN x PRECEDING ..." and the ORDER BY expression is a DATE, x is taken as a number of days. (This is consistent with Oracle's DATE arithmetic.)

Try this:

SELECT area_id
,      min_start - 730  AS period_start
,      min_start        AS period_end
,      AVG(tot_area_unit_hrs) OVER ( PARTITION BY area_id
                                     ORDER BY min_start
                                     RANGE BETWEEN 365 * 2 PRECEDING
                                           AND CURRENT ROW
                                   )  AS avg_unit_time
FROM   ( SELECT order_no
         ,      area_id
         ,      MIN(start_date) AS min_start
         ,      SUM(unit_time)  AS tot_area_unit_hrs
         FROM   my_data
         GROUP BY order_no
         ,        area_id
       )
ORDER BY area_id
,        period_end
;

Output from your sample data:

AREA PERIOD_STA PERIOD_END AVG_UNIT_TIME
---- ---------- ---------- -------------
A001 5/2/1998   5/1/2000           1.120
A001 4/2/1999   4/1/2001           1.245
A001 12/2/1999  12/1/2001          1.220
A001 6/1/2000   6/1/2002           1.223
A001 5/1/2001   5/1/2003           1.153
A002 5/4/1998   5/3/2000            .910
A002 4/4/1999   4/3/2001            .840
A002 12/4/1999  12/3/2001           .833
A002 6/3/2000   6/3/2002            .790
A002 5/3/2001   5/3/2003            .783
A003 5/4/1998   5/3/2000            .270
A003 4/4/1999   4/3/2001            .275
A003 12/4/1999  12/3/2001           .260
A003 6/3/2000   6/3/2002            .257
A003 5/3/2001   5/3/2003            .247

The period_starts are not quite what you posted, but, if I understand you correctly, you don't mind that.

There should be no problem partitioning by an additional column.
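The RANGE-style window can also be sketched outside the database. The example below is only an illustration of the idea, using the per-order totals for area A001 from the sample data; the helper name `trailing_average` is made up. Each row averages every value dated within the preceding 730 days, however many rows that turns out to be.

```python
from datetime import date, timedelta

# (min_start, total unit time per order) for area A001, summed from the sample rows
a001_orders = [
    (date(2000, 5, 1), 0.34 + 0.78),
    (date(2001, 4, 1), 0.39 + 0.98),
    (date(2001, 12, 1), 0.31 + 0.86),
    (date(2002, 6, 1), 0.29 + 0.84),
    (date(2003, 5, 1), 0.28 + 0.88),
]


def trailing_average(points, days):
    """For each (date, value), average all values dated within `days` before it (inclusive)."""
    results = []
    for current, _ in points:
        window_start = current - timedelta(days=days)
        window = [value for day, value in points if window_start <= day <= current]
        results.append((current, round(sum(window) / len(window), 3)))
    return results


for period_end, avg_unit_time in trailing_average(a001_orders, 365 * 2):
    print(period_end, avg_unit_time)
```

The window is defined by the date values, not by a row count, which is why the number of rows averaged varies from one line to the next.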

Onboard processing on the PXI-6132

Hello

This is my first post, so please be gentle.

I have been asked to develop a LabVIEW VI for data acquisition. I must acquire and average a number of exponential decays (like the discharge of a capacitor through a resistor) to calculate the decay constant. My boss has already bought a PXI-1073 chassis with a PXI-6132 to acquire the data. My question is this: since the sampling rate I must use is very high, I want to minimize the amount of data transferred from the PXI system to the computer. With my system (Windows 7, LV 2011, NI-DAQmx?), is it possible to "tell" the PXI-6132 to isolate the data belonging to the exponential decay, average several decays, and then send the data to the host?

Thanks in advance for your help

The counter channel in the example I made for you is the counter you use to generate the clock signal: a finite pulse train with 30 pulses if you want to acquire 30 samples.

This channel can be configured as retriggerable, and in the 'Source' control you must select the PFI line where the trigger signal is wired. That depends on how you wired it.

The clock signal is routed internally, which means that you don't need any cabling; simply select the right source for your AI sample clock (for example, Ctr0InternalOutput if you selected counter 0).

So the only external signals will be the ones you want to measure with the AI and the trigger for the clock-generation (counter) signal.

In MAX, you can right-click the device and see its pinout.

-

I need help with a simple query in an ORACLE database. I tried a lot of things that I found on the internet, but none of them worked for me.

The following query retrieves four rows:

SELECT sampled_date AS value1, result AS value2, 0 AS value3
FROM asw_lab
WHERE template_result = 'A' AND analysis = 'B' AND ROWNUM < 5
ORDER BY sampled_date DESC;

I would like to calculate a moving average of the last four values, together with the sample date of the latest row.

For example, I have this result for the query above:

VALUE1          VALUE2  VALUE3
01/04/14 16:00  1       0
01/04/14 15:00  2       0
01/04/14 14:00  3       0
01/04/14 13:00  4       0

I want my query to return the average below:

VALUE1          VALUE2  VALUE3
01/04/14 16:00  2.5     0

Can you help me create a query that does this?

Thank you

Maybe this:

SELECT MAX(value1) value1, AVG(value2) value2, AVG(value3) value3
FROM
(
SELECT sampled_date AS value1, result AS value2, 0 AS value3
FROM asw_lab

WHERE template_result = 'A' AND analysis = 'B' AND ROWNUM<>

)
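The intended result can be sketched in a few lines: sort by sample date, keep the latest four rows, then report the newest date together with the mean of those four values. One thing to keep in mind is that in Oracle, ROWNUM is assigned before ORDER BY is applied within the same query block, so the sort has to happen before the row limit for "last four" to mean the latest four. The fifth, older row below is hypothetical and only there to show that ordering matters; the date order (day/month) is also an assumption.

```python
from datetime import datetime
from statistics import mean

# Sample rows from the question, plus one hypothetical older row (12:00)
samples = [
    (datetime(2014, 4, 1, 12, 0), 9),  # hypothetical, not in the original post
    (datetime(2014, 4, 1, 13, 0), 4),
    (datetime(2014, 4, 1, 14, 0), 3),
    (datetime(2014, 4, 1, 15, 0), 2),
    (datetime(2014, 4, 1, 16, 0), 1),
]

# Sort newest first, THEN limit to four rows
latest_four = sorted(samples, key=lambda row: row[0], reverse=True)[:4]

value1 = max(sampled for sampled, _ in latest_four)
value2 = mean(result for _, result in latest_four)
print(value1, value2)  # 2014-04-01 16:00:00 2.5
```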

-

Last Thursday, I downsized some VMs according to the oversized report, which included the vCOps VMs. Since then my efficiency has not changed, and the reports now show odd numbers for configured vCPU: some machines that had 2 vCPUs last week show 1.9 vCPUs on the report now, when they have only 1 vCPU, and so on. Any ideas what's happening? Is taking the vCOps VMs down to 1 vCPU a problem?

Oversized reports work on average values calculated over a time interval. This interval is configured in the Configuration section for non-trend views. So even when you make changes, it takes time for them to be reflected in the result. That said, a change of vCPU configuration should be reflected immediately if you chose 'last known capacity' for the capacity determination under Configuration. Please check this setting and make sure it's not set to average.

-

Can I change the sampling time of the real-time performance charts?

I am preparing a demonstration and want to display resource allocation in real time using the vSphere performance charts. Unfortunately, the default sampling rate and averaging periods are not optimal for showing resource allocation in our demonstration. Can someone tell me if there is an easy way to change these defaults?

The sampling rate for the real-time charts is 20 seconds, which is probably OK, but I would like to change it to 10 seconds. However, my main concern is the number of samples over which the average is calculated. This value seems to be very high (I think I saw 300 in a document). Because this number is so high, the allocation changes I make, say, every minute (for demonstration purposes) don't show up very well in the vSphere performance charts. For example:

If my host has had 10 GB of active memory during the last 5 minutes and my demo suddenly releases a very large part of this memory, the performance chart averages the sudden deallocation with the last 10 minutes of data and shows a gradual decline in memory allocation.

This behavior is probably what you want in a monitoring system, BUT for my demonstration I want to show a more realistic view of resource allocation using the vSphere performance charts. This could be accomplished if the sampling rate and averaging periods were configurable.

Any help is greatly appreciated.

On the other hand, I think the real-time settings cannot be changed. It's good enough for my purpose.

I don't know about the slow response to memory alloc/dealloc.

BTW, if you think the answer above resolved your problem, you might mark it as the answer.

-

I have an AC.

http://img412.imageshack.us/img412/6863/averagescore.jpg

In the image, you can see two data blocks.

This is a master/detail form.

In the lower right corner, you can see a text item. It is placed in the detail block, and so it produces 5 rows of text boxes.

I was told that I can change something in the text item's properties so that it shows only once (just like in the picture), and also a property of the data block so that it can calculate the average score (total score in the detail block, divided by the total number of detail records).

How can I do this?

Hello

To do this, you must add 2 summary items: one to store the sum of the score and the other for the total number of records.

Set the properties of the first item: Calculation Mode = Summary, Summary Function = Sum, Summarized Block = detail block, and Summarized Item = Score.

Set the properties of the second item: Calculation Mode = Summary, Summary Function = Count, Summarized Block = detail block, and Summarized Item = SID.

And set the properties of the average item: Calculation Mode = Formula, Formula =

= /.

Kind regards

Manu.

If my answer or another person's answer has been helpful or correct, please mark it accordingly.

-

Calculating the average of binned data and drawing a polar plot

Hello

I made a VI that calculates the average value of the rotor speed / wind speed ratio in 30-degree sectors of wind direction. It also draws a polar plot of the calculated data. Everything works, but I would like to make the calculations and plots more detailed by increasing the resolution to 1 degree or so.

The problem is that the VI I made does not scale easily. At the moment, I have 12 parallel case structures to calculate the average value, and a Build Array function to collect the calculated data so the Polar Plot can draw the picture in real time. I know it's probably the worst way to do it, but since I have only done a few things with LV, it was the only way I managed to do what I wanted.

Now, if I continue in the same way to reach my goal, I will have to create 360 parallel case structures, which is crazy.

Something like calculating the average from an array or matrix inside a loop (zero/empty values should not be included in the average) would probably be the best solution.

So, drawing the polar plot is not a problem, but creating a reasonable averaging system is. Any ideas?

I would also like to rotate the plot so that north (0 deg) is at the top and the degrees increase clockwise on the polar plot dial (as on a compass).

The VI is attached (a simplified version of the complete system).

I have these signals:

-Wind speed

-Wind direction

-Rotor speed

I want to:

-calculate the average value of the rotor speed / wind speed ratio in sectors (5 degrees, 1 degree)

-draw a polar plot of the averaged rotor speed / wind speed ratio as a function of wind direction

I'm using LabVIEW 2009

Thank you very much.

Is this closer to what you're looking for?
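The averaging the question describes (one running average per direction sector, with the sector width as a parameter instead of one case structure per sector) can be sketched in text form as follows. This is not LabVIEW code, only an outline of the logic; the function name is invented, and the ratio is assumed to be rotor speed divided by wind speed.

```python
from collections import defaultdict


def binned_ratio_average(samples, bin_width_deg):
    """Average the rotor/wind speed ratio per wind-direction sector.

    samples: iterable of (wind_direction_deg, wind_speed, rotor_speed).
    Returns {sector start angle: mean ratio}; empty sectors are simply absent.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for direction, wind_speed, rotor_speed in samples:
        if wind_speed == 0:
            continue  # ratio undefined, so leave the sample out of the average
        sector = int(direction % 360 // bin_width_deg)
        sums[sector] += rotor_speed / wind_speed
        counts[sector] += 1
    return {sector * bin_width_deg: sums[sector] / counts[sector] for sector in sorted(sums)}


readings = [(10, 5.0, 10.0), (20, 4.0, 12.0), (45, 2.0, 2.0), (359.5, 1.0, 3.0), (100, 0.0, 5.0)]
print(binned_ratio_average(readings, 30))  # {0: 2.5, 30: 1.0, 330: 3.0}
print(binned_ratio_average(readings, 1))   # {10: 2.0, 20: 3.0, 45: 1.0, 359: 3.0}
```

Changing the resolution is then a matter of changing `bin_width_deg`. For a compass-style plot (0 degrees at the top, increasing clockwise), a bearing b corresponds to the mathematical angle (90 - b) mod 360.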

-

What is this: "Reliability analysis metrics calculation executable has stopped working and was closed"

Hi Mary,

· Did you make any changes to the computer?

· When do you get this message?

Follow the steps mentioned below:

How to troubleshoot a problem by performing a clean boot in Windows Vista or in Windows 7

http://support.Microsoft.com/kb/929135

Note: when you have finished troubleshooting with the clean boot, follow step 7 to return the computer to its usual startup mode.

Start your computer in safe mode

http://Windows.Microsoft.com/en-us/Windows7/start-your-computer-in-safe-mode

If none of the options work, perform a system restore.

What is system restore?

http://Windows.Microsoft.com/en-us/Windows-Vista/what-is-system-restore

Reply back with the results

-

Calculation of pace for a running log

In a Google Docs spreadsheet I have these working:

Distance (km)  HH  MM  SS  Time (s)  Pace (min/km)  Speed (km/hr)
5.00           0   30  56  1856      0:06:11        9.7

Min/km

=IFERROR(TIME(F5,G5,H5)/E5, 0)

Km/hr

=IFERROR(E5/(I5/3600), 0)

In Apple Numbers, I can't get these formulas to work.

I even changed it to just take the time 30:56 divided by 5.00 and still get an error (for example, =TIME(30.56)/5.00).

Can we not divide time by distance, or time by an integer/decimal number?

Hi cwallbank,

In Numbers, TIME is a moment in time (in fact, a date & time) counted from midnight at the start of January 1, 1904.

Use the duration format.

Enter the distance in A2 and the duration in B2 as 0:30:56

Formula in C2 (fill down)

=DUR2MINUTES(B2)÷A2

The division sign looks like a plus sign at this small size. Type it as a slash '/' and Numbers will change it to ÷.

Formula in D2 (fill down)

=A2÷DUR2HOURS(B2)

Kind regards

Ian.
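The same duration-based arithmetic can be checked outside a spreadsheet. This is a minimal sketch using the figures from the question (5.00 km in 0:30:56); the function name is made up. It treats the elapsed time as a duration rather than a clock time, which is the point of the answer above.

```python
from datetime import timedelta


def pace_and_speed(distance_km, duration):
    """Return (pace per km as a timedelta, speed in km/h rounded to 0.1)."""
    seconds = duration.total_seconds()
    pace = timedelta(seconds=round(seconds / distance_km))
    speed_kmh = distance_km / (seconds / 3600)
    return pace, round(speed_kmh, 1)


pace, speed = pace_and_speed(5.0, timedelta(minutes=30, seconds=56))
print(pace, speed)  # 0:06:11 9.7
```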

-

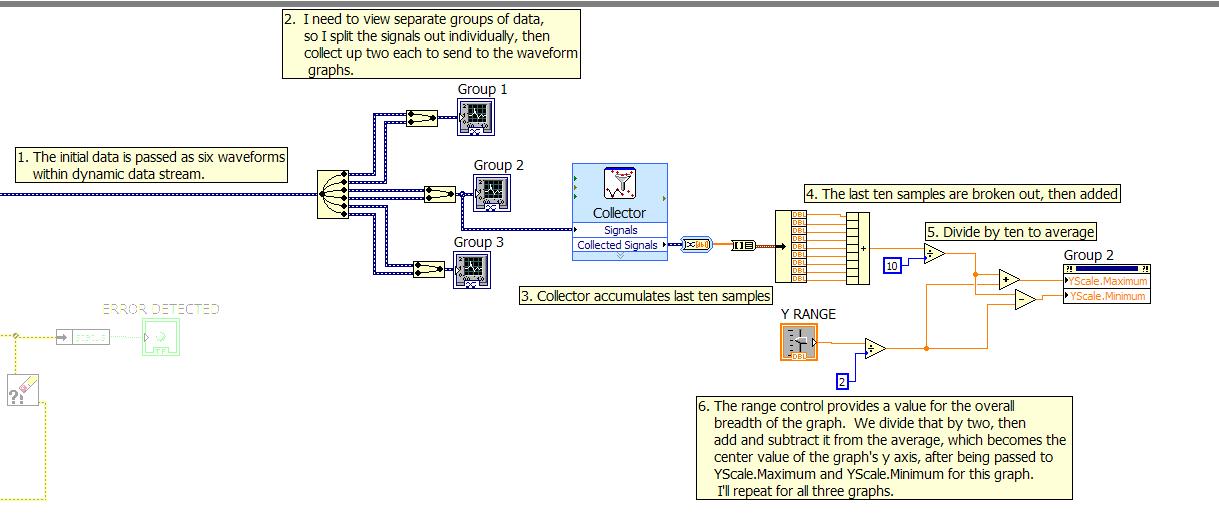

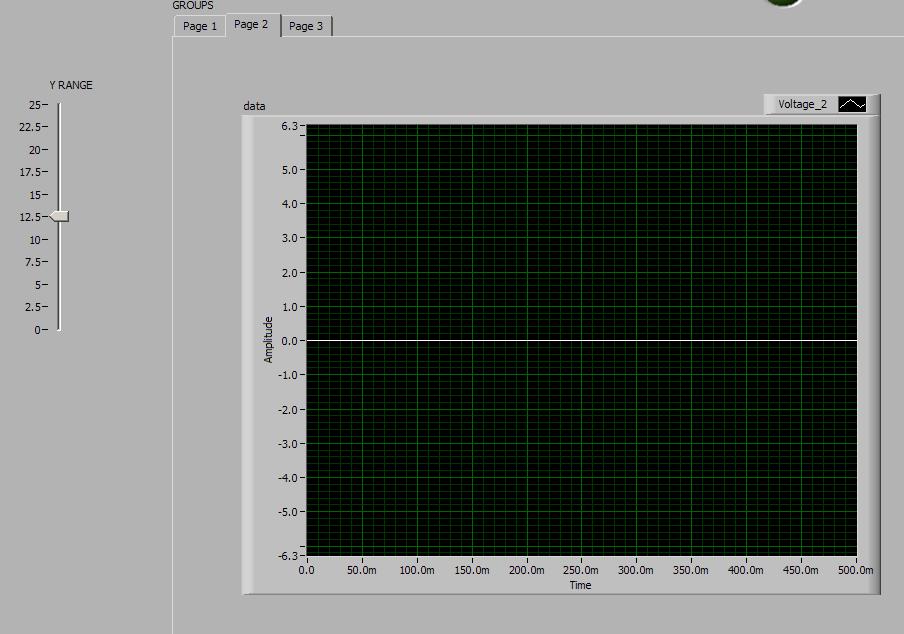

Centering a chart axis using a Collector to compute an average

Hello

I was looking for a way to use a waveform chart with the added ability to set the width of the displayed y-axis range, while still letting the chart follow the data a little. This is because the chart's autoscale feature is choppy and does not allow users to zoom in or out on the plot (which my colleagues have asked for).

I'm posting my current solution here because I think I finally got it right, but also because I know you guys will tell me ten ways to do it better. :%^)

Note that the data here is slow; I think it would get really awkward with quickly changing data, although you could probably "Collect" more data and average it all in the same way to set the center of the chart's y-axis.

The front panel: I placed the three charts in a tab control. (Of course, there were no signals on the data acquisition module when I did this, but at least the auto-centering settles on zero and the range control works.)

I guess I'm mostly looking for your comments, and I was wondering if I missed an obvious way to simplify this process.

Thank you

Danielle

Hey Danielle,

Given that this is a long-term test, are you interested in displaying all the data from your test or only the most recent set? The waveform chart can store and display historical information because it has a buffer associated with it. From your code, it seems that the Collector is only used to store information so that you can average the last few data points. The only thing I see standing in the way of simply averaging your data is that you seem to display two signals on the chart. So which signal do you want to be centered? If there is one signal you want to average to get the center of your chart, you can wire that signal into a Convert from Dynamic Data Express VI. When you first place it on the block diagram, a configuration window appears. You can then select "1D array of scalars" as the type to convert to. The output of this VI will then be an array of data points. You can then use the Mean VI to find the mean of this 1D array.
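The centering idea discussed in this thread (average the last few points, then set the y-axis limits around that mean) can be outlined in text form. This is not LabVIEW code, just a sketch of the logic; the class name, the history length, and the half-range parameter are all hypothetical.

```python
from collections import deque


class AxisCenterer:
    """Keep the last `history` samples and center a fixed-width y-axis on their mean."""

    def __init__(self, history, half_range):
        self.samples = deque(maxlen=history)  # old samples fall off automatically
        self.half_range = half_range

    def update(self, value):
        """Add one sample and return the (y_min, y_max) limits to apply to the chart."""
        self.samples.append(value)
        center = sum(self.samples) / len(self.samples)
        return center - self.half_range, center + self.half_range


axis = AxisCenterer(history=3, half_range=1.0)
for reading in (10.0, 12.0, 14.0, 16.0):
    print(axis.update(reading))
```

A longer history makes the axis follow the data more slowly, which is the trade-off mentioned above for fast-changing signals.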
