VMware load balancing with Nexus 1000v

With the VMware vDS or vSS, I see many designs use an active/passive approach for uplink NIC teaming, i.e. one vmnic is active and goes to fabric A, and one vmnic is passive and goes to fabric B. This setting is configured in vSphere.

See this article: http://bradhedlund.com/2010/09/15/vmware-10ge-qos-designs-cisco-ucs-nexus/

Is it correct that we cannot put such a scheme in place with the 1000V? All the network configuration is on the 1000V, and as far as I know, we can only configure the uplinks in these 3 modes:

1. LACP

2. vPC-Host Mode

3. vPC-Host Mode MAC pinning

and they are all 'active' based.

Post edited by: Atle Dale

Yes. All uplinks are used. Each VM virtual interface is pinned to one of the uplinks. If one uplink goes down, all interfaces pinned to it are dynamically re-pinned to the remaining uplinks. A MAC address will only be seen on a single interface at a time. This is how MAC pinning prevents STP loops.

Robert
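The pinning behaviour described above can be illustrated with a small Python model. This is only a sketch: the round-robin assignment, the function names and the interface names are assumptions made for illustration, not the actual Nexus 1000V algorithm.

```python
from typing import Dict, List


def pin_round_robin(veths: List[str], uplinks: List[str]) -> Dict[str, str]:
    """Pin each virtual interface to exactly one uplink (assumed round robin)."""
    if not uplinks:
        raise ValueError("no uplinks available")
    return {veth: uplinks[i % len(uplinks)] for i, veth in enumerate(veths)}


def fail_uplink(pinning: Dict[str, str], failed: str, uplinks: List[str]) -> Dict[str, str]:
    """Re-pin only the interfaces that were pinned to the failed uplink."""
    survivors = [u for u in uplinks if u != failed]
    if not survivors:
        raise ValueError("no surviving uplinks")
    result = dict(pinning)
    orphans = [veth for veth, uplink in pinning.items() if uplink == failed]
    for i, veth in enumerate(orphans):
        result[veth] = survivors[i % len(survivors)]
    return result


# Hypothetical interface names, for illustration only.
uplinks = ["vmnic1", "vmnic2"]
pinning = pin_round_robin(["veth1", "veth2", "veth3", "veth4"], uplinks)
after_failure = fail_uplink(pinning, "vmnic1", uplinks)
```

At any moment each virtual interface (and therefore its MAC address) maps to a single uplink, which is the property that keeps a MAC from appearing on two uplinks at once.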

Tags: Cisco DataCenter

Similar Questions

-

iSCSI MPIO (Multipath) with Nexus 1000v

Has anyone out there got iSCSI MPIO working successfully with the Nexus 1000v? I followed Cisco's guide to the best of my knowledge and tried a number of other configurations without success - vSphere always displays the same number of paths as it shows targets.

The Cisco document reads as follows:

Before you begin the procedures in this section, you must know or do the following.

• You have already configured the host with one port channel that includes two or more physical NICs.

• You have already created the VMware kernel NICs to access the external SAN storage.

• A VMware kernel NIC can be pinned or assigned to one physical NIC.

• A physical NIC may have several VMware kernel NICs pinned or assigned to it.

What does 'a VMware kernel NIC can be pinned or assigned to a physical NIC' mean with regard to the Nexus 1000v? I know how to pin a physical NIC with a standard vDS, but how does it work with the 1000v? The only thing related to "pinning" I could find in the 1000v was port channel sub-groups. I tried creating a port channel with manual sub-groups, assigning sub-group-id values to each uplink, then assigning a pinning id to my two VMkernel port profiles (and directly to the vEthernet ports as well). But that doesn't seem to work for me.

I can ping both iSCSI VMkernel ports from the upstream switch and from inside the VSM, so I know Layer 3 connectivity is there. One strange thing, however, is that I see only one of the two VMkernel MAC addresses on the upstream switch. Both addresses show up as expected inside the VSM.

What am I missing here?

Just to close the loop in case someone stumbles across this thread.

In fact, this is a bug in the Cisco Nexus 1000v. The bug only affects ESX hosts that have been patched to 4.0u2 (and now 4.1). The short-term workaround is to rev back to 4.0u1. The medium-term fix will be integrated into a maintenance release for the Nexus 1000V.

Our code implementation for getting the iSCSI multipath information was incorrect, but it was tolerated in 4.0U1. 4.0U2 no longer tolerates our poor implementation.

For iSCSI multipath with the N1KV, stay on 4.0U1 until we have a maintenance release for the Nexus 1000V.

-

UCS environment vSphere 5.1 upgrade to vSphere 6 with Nexus 1000v

Hi, I've been struggling to get help from TAC and from internet searches on how to upgrade our UCS environment, and as a last resort I thought I would try a post on the forum.

My environment is a UCS chassis environment with dual fabric interconnects and Nexus 1110s with an HA pair of 1000v, running vSphere 5.1. We have upgraded all our equipment (blades, C-Series and UCS Manager) to the firmware versions supported by Cisco for vSphere 6.

We have even upgraded our Nexus 1000v to 5.2(1)SV3(1.5a), which is a supported version for vSphere 6.

To save us some processing and licensing costs, and because our previous vCenter server's performance was lacking and unreliable, I looked at migrating to the vCenter 6 virtual appliance. I cannot find information anywhere that advises how to begin the process of upgrading VMware on NGC when the 1000v is involved. I would have thought that, since it is a virtual machine, the upgrade would go smoothly once everything has been upgraded to versions supported for vSphere 6.

A response I got from TAC was that we had to move all of our VMs to a standard switch to upgrade vCenter, but given that we are already on a 1000v version supported for vSphere 6, that left me confused. Nor would I get the opportunity to rework our environment, this being a hospital, with that kind of downtime and outage windows for more than 200 machines.

Can anyone provide some tips, or has anyone else tried a similar upgrade path?

Greetings.

It seems that you have already upgraded your N1k components (are the VEMs upgraded to match?).

Is your question more about how to upgrade/migrate from one vCenter server to another?

If you import your vCenter database into your new vCenter, there shouldn't be much downtime, as the VSM/VEM will still see the vCenter and the N1k dVS. If you change the vCenter server name/IP but import the old vCenter DB, there are a few steps you will need to take to ensure that the VSM SVS connection points to the new vCenter IP address.

If you try to build a new, additional vCenter in parallel, you will have downtime problems, because the port-profile/network programming the guest VMs currently have will lose its 'backing' info if you attempt to migrate, so you would have to move the NICs to a standard vSwitch or generic dVS before moving the hosts to the new vCenter.

If you are already on vCenter 6, I believe you can vMotion from one host to another and change the vSwitch/dVS/port profile used.

We really need more detail on how you plan to migrate from your vCenter to the VCA 6.0.

Thank you

Kirk...

-

Nexus 1000V loop prevention

Hello

I wonder if there is a mechanism I can use to protect a network against an L2 loop created on the virtual server side in a VMware environment with Nexus 1000V.

I know the Nexus 1000V can prevent loops on the external links, but I cannot find any information on whether there are features that prevent a loop caused by a bridge set up in the OS of a VMware virtual server.

Thank you in advance for an answer.

Regards

Lukas

Hi Lukas.

To avoid loops, the N1KV does not pass traffic between physical NICs, and it also silently drops traffic between vNICs that are bridged by the operating system.

http://www.Cisco.com/en/us/prod/collateral/switches/ps9441/ps9902/guide_c07-556626.html#wp9000156

No explicit configuration is needed on the N1KV.

Padma

-

Migrating hosts/guests from 4.1 to 5.1, from Nexus 1000V to VDS, using PowerCLI

Are there scripts out there that will migrate a host, and the guests on that host, from an ESXi 4.1 vCenter with a Nexus 1000v switch to a new 5.1 vCenter with a VMware VDS?

I don't want to upgrade the hosts or the guests to 5.1; I only want to move them from the 4.1 to the 5.1 vCenter and upgrade at a later date.

Maybe Gabe's post on migrating a distributed vSwitch to a new vCenter can help?

-

[Nexus 1000v] VEM cannot be added to VSM

Hi all

In my lab, I have a problem with the Nexus 1000V: the VEM cannot be added to the VSM.

+ The VSM is already installed on ESX 1 (standalone or HA), and you can see:

Cisco_N1KV# show module

Mod  Ports  Module-Type                       Model               Status

---  -----  --------------------------------  ------------------  ------------

1    0      Virtual Supervisor Module         Nexus1000V          active *

Mod  Sw                Hw

---  ----------------  ------------------------------------------------

1    4.2(1)SV1(4a)     0.0

Mod  MAC-Address(es)                         Serial-Num

---  --------------------------------------  ----------

1    00-19-07-6c-5a-a8 to 00-19-07-6c-62-a8  NA

Mod  Server-IP        Server-UUID                           Server-Name

---  ---------------  ------------------------------------  -------------------

1    10.4.110.123     NA                                    NA

+ The VEM is installed on ESX2:

[[email protected] ~]# vem status

VEM modules are loaded

Switch Name    Num Ports   Used Ports  Configured Ports  MTU     Uplinks

vSwitch0       128         3           128               1500    vmnic0

VEM Agent (vemdpa) is running

[[email protected] ~]#

Any advice on how to get this working?

Thank you very much

Doan,

I need more information.

Was the host added to the 1000v DVS via vCenter successfully?

If so, there is probably a problem with your control VLAN communication between the VSM and the VEM. Start there, and ensure that the VLAN has been created on all intermediate switches and that it is allowed on every trunk end to end.

If you're still stuck, paste the running config of your VSM.

Kind regards

Robert

-

Hey guys,

Hope this is the right place to post.

I'm currently working on a design for an ESXi 5 with Nexus 1000v solution with a Cisco UCS 5180 back end.

I have a few questions about what people do in the real world with this type of setup:

In order to use the Nexus 1000v, do I need vCenter? The reason I ask is that none was included on the initial kit list. I would want vCenter for HA and vMotion, but the client wants to know if we can make do with what we have for now and license and implement vCenter at a later date.

I've done some reading about the Nexus 1000v, as I've never designed a solution with it before. Cisco recommends that 2 VSMs be deployed for redundancy. Is this correct? Do I need a license for each VSM? I also assume that to meet this best practice I need HA and vMotion and, therefore, vCenter?

Can the virtual machines for the VSMs sit inside the cluster?

Thanks in advance for any help.

In order to use the Nexus 1000v, do I need vCenter?

Yes - the Nexus 1000v is a type of distributed virtual switch and it requires vCenter.

I've done some reading about the Nexus 1000v, as I've never designed a solution with it before. Cisco recommends that 2 VSMs be deployed for redundancy. Is this correct? Do I need a license for each VSM? I also assume that to meet this best practice I need HA and vMotion and, therefore, vCenter?

I'm not sure about the VSMs, but yes, HA and vMotion are required as part of best practices.

Can the virtual machines for the VSMs sit inside the cluster?

Yes, they can exist within the cluster.

-

Nexus 1000v, UCS, and Microsoft Network Load Balancing

Hi all

I have a client that is implementing a new Exchange 2010 environment. They have a requirement to configure load balancing for the Client Access servers. The environment consists of VMware vSphere running on top of Cisco UCS blades with the Nexus 1000v dvSwitch.

Everything I've read so far indicates that I must do the following:

1. Configure MS NLB in multicast mode (selecting the IGMP option).

2. Create a static ARP entry for the virtual cluster address on the router for the server subnet.

3. (maybe) Configure a static MAC table entry on the router for the server subnet.

4. (maybe) Disable IGMP snooping on the appropriate VLAN in the Nexus 1000v.

My questions are:

1. Is anyone successfully running a similar configuration?

2. Are there steps missing from the list above, or steps I shouldn't do?

3. If I disable IGMP snooping on the Nexus 1000v, should I also disable it on the UCS fabric interconnects and the router?

Thanks a lot for your time,

Aaron

Aaron,

The steps you list above are correct; you need steps 1-4 for it to operate correctly. Normally people create a separate VLAN for their NLB interfaces/subnet, to prevent unnecessary flooding of mcast frames within the network.

To answer your questions:

(1) I have seen multiple customers run this configuration.

(2) The steps you list are correct.

(3) You can't toggle IGMP snooping on UCS. It is enabled by default and is not a configurable option. There is no need to change anything within UCS regarding MS NLB with the above procedure. FYI - the ability to disable/enable IGMP snooping on UCS is scheduled for an upcoming release, 2.1.

This is the correct method until we have the option of configuring static multicast MAC entries on the Nexus 1000v. If this is a feature you'd like, please open a TAC case and request that bug CSCtb93725 be linked to your SR. This will give more "push" to our development team to prioritize this request.

Hopefully some other customers can share their experience.

Regards,

Robert
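For the static ARP entry in step 2, it helps to know which cluster MAC address NLB will use. The following Python sketch derives it from the cluster IP using the MAC prefixes commonly documented for NLB (02-BF for unicast, 03-BF for multicast, 01-00-5E-7F for IGMP multicast). The function name and the example IP are assumptions for illustration; verify the result against the MAC shown in the NLB cluster properties before configuring the router.

```python
import ipaddress


def nlb_cluster_mac(cluster_ip: str, mode: str) -> str:
    """Derive the NLB cluster MAC from the cluster IPv4 address and mode."""
    octets = ipaddress.IPv4Address(cluster_ip).packed
    if mode == "unicast":
        mac = bytes([0x02, 0xBF]) + octets
    elif mode == "multicast":
        mac = bytes([0x03, 0xBF]) + octets
    elif mode == "igmp":
        # IGMP multicast mode uses only the last two octets of the IP.
        mac = bytes([0x01, 0x00, 0x5E, 0x7F]) + octets[2:]
    else:
        raise ValueError(f"unknown NLB mode: {mode}")
    return "-".join(f"{b:02x}" for b in mac)


# Hypothetical cluster address, for illustration only.
example_mac = nlb_cluster_mac("10.1.2.3", "igmp")
```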

-

Change the maximum number of ports on Nexus 1000v vDS online with no disruption?

Hello

Can I change the maximum number of ports on the Nexus 1000v vDS online with no disruption?

I'm not sure whether that is what the link

VMware KB: Increasing the maximum number of vNetwork Distributed Switch (vDS) ports in vSphere 4.x

is saying.

I have ESXi and vCenter 5.1.

Thank you

Saami

There is no downtime when you change the "vmware max-ports" value on a port profile. It can be done in production.

You can also create a new port profile with a test virtual machine and change "vmware max-ports" there if you want some reassurance first.

-

Upgrading vCenter 4.0 with Cisco Nexus 1000v installed

Hi all

We have vCenter 4.0 and ESX 4.0 servers and we want to upgrade to version 4.1. We also have the Nexus 1000v installed on the ESX servers and vCenter. I found a VMware KB at http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1024641 . But only the ESX server upgrade is explained in this KB; what about vCenter? Our vCenter 4.0 is installed on 64-bit Windows 2003 with 64-bit SQL 2005.

Can we upgrade vCenter in place, with the Nexus 1000v plugin installed, without problems? And how should we proceed? What effect does the installed Nexus 1000v plugin have on the vCenter server during the update?

Nexus 1000v 4.0(4)SV1(3a) has been installed on the ESX servers.

Regards

Mecyon,

When upgrading vSphere 4.0 -> 4.1 you must also update the VEM software (.vib). The plugin for vCenter won't change, and nothing will change on your VSM(s). The only thing you need to update is the VEM on each host. See the matrix posted previously for the .vib to install once your host has been updated.

Note: after this upgrade, you will be able to update either your vSphere host software or the 1000v independently (without having to update the other). The 1000v VEM dependencies have been removed since vSphere 4.0 Update 2.

Kind regards

Robert

-

VXLAN on UCS: IGMP with Catalyst 3750, Nexus 5548, Nexus 1000V

Hello team,

My lab consists of a Catalyst 3750 with SVIs acting as the router, Nexus 5548s in a vPC setup, UCS in end-host mode, and a Nexus 1000V with the segmentation feature (VXLAN) enabled.

I have two different VLANs for VXLAN (140, 141) to demonstrate connectivity across L3.

Hosts with a VMkernel on VLAN 140 join the multicast group fine.

Hosts with a VMkernel on VLAN 141 do not join the multicast group. As a result, VMs on these hosts cannot ping VMs on the hosts on VLAN 140, and they can't even ping each other.

I turned on debug ip igmp on the L3 switch, and the output indicates a timeout while it waits for a report from VLAN 141:

Oct 15 08:57:34.201: IGMP(0): Send v2 general Query on Vlan140

Oct 15 08:57:34.201: IGMP(0): Set report delay time to 3.6 seconds for 224.0.1.40 on Vlan140

Oct 15 08:57:36.886: IGMP(0): Received v2 Report on Vlan140 from 172.16.66.2 for 239.1.1.1

Oct 15 08:57:36.886: IGMP(0): Received Group record for group 239.1.1.1, mode 2 from 172.16.66.2 for 0 sources

Oct 15 08:57:36.886: IGMP(0): Updating EXCLUDE group timer for 239.1.1.1

Oct 15 08:57:36.886: IGMP(0): MRT Add/Update Vlan140 for (*,239.1.1.1) by 0

Oct 15 08:57:38.270: IGMP(0): Send v2 Report for 224.0.1.40 on Vlan140

Oct 15 08:57:38.270: IGMP(0): Received v2 Report on Vlan140 from 172.16.66.1 for 224.0.1.40

Oct 15 08:57:38.270: IGMP(0): Received Group record for group 224.0.1.40, mode 2 from 172.16.66.1 for 0 sources

Oct 15 08:57:38.270: IGMP(0): Updating EXCLUDE group timer for 224.0.1.40

Oct 15 08:57:38.270: IGMP(0): MRT Add/Update Vlan140 for (*,224.0.1.40) by 0

Oct 15 08:57:51.464: IGMP(0): Send v2 general Query on Vlan141 <----- it just hangs here until timeout and goes back to

Oct 15 08:58:35.107: IGMP(0): Send v2 general Query on Vlan140

Oct 15 08:58:35.107: IGMP(0): Set report delay time to 0.3 seconds for 224.0.1.40 on Vlan140

Oct 15 08:58:35.686: IGMP(0): Received v2 Report on Vlan140 from 172.16.66.2 for 239.1.1.1

Oct 15 08:58:35.686: IGMP(0): Received Group record for group 239.1.1.1, mode 2 from 172.16.66.2 for 0 sources

Oct 15 08:58:35.686: IGMP(0): Updating EXCLUDE group timer for 239.1.1.1

Oct 15 08:58:35.686: IGMP(0): MRT Add/Update Vlan140 for (*,239.1.1.1) by 0

If I do a show ip igmp interface, the output shows that there are no joins for VLAN 141:

Vlan140 is up, line protocol is up

Internet address is 172.16.66.1/26

IGMP is enabled on interface

Current IGMP host version is 2

Current IGMP router version is 2

IGMP query interval is 60 seconds

IGMP configured query interval is 60 seconds

IGMP querier timeout is 120 seconds

IGMP configured querier timeout is 120 seconds

IGMP max query response time is 10 seconds

Last member query count is 2

Last member query response interval is 1000 ms

Inbound IGMP access group is not set

IGMP activity: 2 joins, 0 leaves

Multicast routing is enabled on interface

Multicast TTL threshold is 0

Multicast designated router (DR) is 172.16.66.1 (this system)

IGMP querying router is 172.16.66.1 (this system)

Multicast groups joined by this system (number of users):

224.0.1.40(1)

Vlan141 is up, line protocol is up

Internet address is 172.16.66.65/26

IGMP is enabled on interface

Current IGMP host version is 2

Current IGMP router version is 2

IGMP query interval is 60 seconds

IGMP configured query interval is 60 seconds

IGMP querier timeout is 120 seconds

IGMP configured querier timeout is 120 seconds

IGMP max query response time is 10 seconds

Last member query count is 2

Last member query response interval is 1000 ms

Inbound IGMP access group is not set

IGMP activity: 0 joins, 0 leaves

Multicast routing is enabled on interface

Multicast TTL threshold is 0

Multicast designated router (DR) is 172.16.66.65 (this system)

IGMP querying router is 172.16.66.65 (this system)

No multicast groups joined by this system

Is there a way to check why the hosts on VLAN 141 are not joining successfully? The port-profile configuration on the 1000V for the VLAN 140 and VLAN 141 uplinks and vmkernels is identical, except for the different VLAN numbers.

Thank you

Trevor

Hi Trevor,

One quick thing to check would be the IGMP config for both VLANs.

Where did you configure the querier for VLANs 140 and 141?

Does the VXLAN transport cross routers? If so, you would need multicast routing enabled.

Thank you!

. / Afonso
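When comparing several VLANs, the join counts can be pulled out of the show ip igmp interface output with a short script. The Python below is a minimal sketch: the sample text is an abbreviated copy of the output quoted in this thread, the function names are made up for illustration, and the regular expressions assume the standard "IGMP activity: N joins, M leaves" line.

```python
import re
from typing import Dict, List

# Abbreviated sample based on the output quoted in the thread.
SAMPLE = """\
Vlan140 is up, line protocol is up
  Internet address is 172.16.66.1/26
  IGMP activity: 2 joins, 0 leaves
Vlan141 is up, line protocol is up
  Internet address is 172.16.66.65/26
  IGMP activity: 0 joins, 0 leaves
"""


def igmp_joins_per_interface(output: str) -> Dict[str, int]:
    """Map each interface name to the join count on its 'IGMP activity' line."""
    joins: Dict[str, int] = {}
    current = None
    for line in output.splitlines():
        header = re.match(r"^(\S+) is .+?, line protocol is", line)
        if header:
            current = header.group(1)
            continue
        activity = re.search(r"IGMP activity:\s*(\d+)\s+joins", line)
        if activity and current is not None:
            joins[current] = int(activity.group(1))
    return joins


def interfaces_without_joins(output: str) -> List[str]:
    """Return interfaces that have seen no IGMP joins at all."""
    return [name for name, count in igmp_joins_per_interface(output).items() if count == 0]


silent = interfaces_without_joins(SAMPLE)
```

An interface that stays in the "no joins" list while its hosts are configured for VXLAN is the one whose querier and snooping configuration deserves a closer look.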

-

Nexus 1000v VSM compatibility with older versions of VEM?

Hello everyone.

I would like to upgrade our Nexus 1000v VSM from 4.2(1)SV1(5.1) to 4.2(1)SV2(2.1a) because we are moving from ESXi 5.0 Update 3 to ESXi 5.5 in the near future. I was not able to find a compatibility list for the new version when it comes to VEM versions, so I was wondering whether the new VSM supports the older VEM versions we are running, so that I don't have to upgrade everything at once. I know that it supports both of our ESXi versions.

Best regards

Pete

As you found in the documentation, upgrading from 1.5 to the latest code is supported from a VSM perspective. What is not documented is the bit about the VEMs. In general, the VSM is backward compatible with older VEMs (to a degree, and that degree is not published). Although it is not documented (AFAIK), the verbal understanding is that the VEMs can be a version or two behind, but you should try to minimize the time you run in this configuration.

If you plan to run mixed VEM versions while getting your hosts upgraded (totally fine, that's how I do mine), it is best to move to the upgraded VEM version as you upgrade the hypervisor. Since you are going from ESXi 5.0 to 5.5, you can create an ISO that contains the Cisco VIBs, your favorite async drivers (if any), and the ESXi 5.5 image all bundled together, so the upgrade for a given host happens all at once. You probably already have this technique down cold, but the links generated by the Cisco tool below will show you how to proceed. It also gives some handy URLs to share with anyone performing tasks in this upgrade. Here is the link:

Nexus 1000V and ESX upgrade utility

PS - the new thing is to take offline clones of your VSMs. Even though they are fairly easy to recover, having a true clean clone will preserve some secret sauce that you might otherwise lose in a failure scenario. Just power off one VSM, then right-click and clone it. Power that VSM back on and fail over the HA pair, then power off the other one and take a clone of it. So as a safety measure for this upgrade, take clones of the current 1.5 VSMs now, then some time after your upgrade take offline clones of the new version.

-

I'm working on deploying the Cisco Nexus 1000v to our ESX cluster. I have read the Cisco "Getting Started Guide" and the "Installation Guide", but the guides are written for a general environment and obviously do not answer my questions, which are based on our architecture.

This comment in the Cisco "Getting Started Guide" makes it sound like you can't uplink to several switches from an individual ESX host:

"The server administrator must not assign more than one uplink on the same VLAN without port channels. Assigning more than one uplink on the same host is not supported for the following:

A profile without port channels.

Port profiles that share one or more VLANs."

Given this comment, is it possible to port-channel 2 physical NICs on one side of the link (the ESX host side) and have each go to a separate upstream switch? I am trying to create redundancy for the ESX host using 2 switches, but this comment makes it sound like I need a port channel on the ESX side to associate the VLAN with both interfaces. How do you then handle each link on the upstream switch side? I don't think you can add them to a port channel on that side of the uplink, as the port channel protocol will not negotiate properly and will show one side down on the ESX/VEM side.

Am I overcomplicating this? Thank you.

Don't port-channel on the upstream side; it is possible to port-channel to different switches from the N1KV using vPC MAC pinning mode. On the upstream switches, make sure that the ports are configured identically: same speed, switchport config, VLANs, etc. (but no port channel).

On the VSM, create a single eth-type port profile with the following channel-group command:

port-profile type ethernet Uplink-VPC

vmware port-group

switchport mode trunk

channel-group auto mode on mac-pinning

no shutdown

system vlan 2,10

state enabled

What that will do is create a port channel on the N1KV only. Your ESX host gets redundancy, but your load-balancing algorithm will be a simple round robin of the VMs. If you want to pin specific traffic to a particular uplink, you can add the "pinning id" command to your veth-type port profiles.

To see the pinning, you can run

module vem x execute vemcmd show port

n1000v-MV# module vem 5 execute vemcmd show port

LTL   VSM Port  Admin  Link  State  PC-LTL  SGID  Vem Port

18    Eth5/2    UP     UP    FWD    305     1     vmnic1

19    Eth5/3    UP     UP    FWD    305     2     vmnic2

49    Veth1     UP     UP    FWD    0       1     vm1-3.eth0

50    Veth3     UP     UP    FWD    0       2     linux-4.eth0

305   Po5       UP     UP    FWD    0

The key is the SGID column. vmnic1 is SGID 1 and vmnic2 is SGID 2. The VM vm1-3 is pinned to SGID 1 and linux-4 is pinned to SGID 2.

You can kill a link and the traffic should fail over.

Louis
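To read the pinning at a glance on a host with many ports, the table can be grouped by SGID with a few lines of Python. This is a sketch only: the sample is a reconstruction of the output quoted above, the function name is made up, and the column order (LTL, VSM Port, Admin, Link, State, PC-LTL, SGID, Vem Port) is an assumption that may differ between VEM releases.

```python
from collections import defaultdict
from typing import Dict, List

# Reconstructed sample of the vemcmd show port output from this thread.
SAMPLE = """\
LTL   VSM Port  Admin  Link  State  PC-LTL  SGID  Vem Port
18    Eth5/2    UP     UP    FWD    305     1     vmnic1
19    Eth5/3    UP     UP    FWD    305     2     vmnic2
49    Veth1     UP     UP    FWD    0       1     vm1-3.eth0
50    Veth3     UP     UP    FWD    0       2     linux-4.eth0
305   Po5       UP     UP    FWD    0
"""


def group_by_sgid(output: str) -> Dict[int, List[str]]:
    """Group VEM port names by the sub-group ID (SGID) they are pinned to."""
    groups: Dict[int, List[str]] = defaultdict(list)
    for line in output.splitlines():
        fields = line.split()
        # Rows carrying a pinning have 8 fields and start with a numeric LTL;
        # the header and the port-channel row are skipped.
        if len(fields) != 8 or not fields[0].isdigit():
            continue
        groups[int(fields[6])].append(fields[7])
    return dict(groups)


pinning = group_by_sgid(SAMPLE)
```

Each SGID then lists one physical uplink followed by the virtual ports riding on it, which makes it easy to confirm that traffic moved after an uplink was shut.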

-

What does the Nexus 1000v version number mean

Can anybody explain the long Nexus 1000v version number, for example 5.2(1)SV3(1.15)?

And what does SV mean in the version number?

Thank you

SV is the abbreviation of "Switched VMware"

See below for a detailed explanation:

http://www.Cisco.com/c/en/us/about/Security-Center/iOS-NX-OS-reference-g...

Cisco NX-OS Software numbering

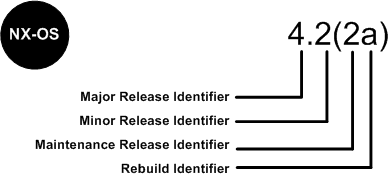

Cisco NX-OS Software is a data-center-class operating system that provides high availability through a modular design. Cisco NX-OS Software is based on Cisco MDS 9000 SAN-OS software, and it supports the Cisco Nexus series switches and the Cisco MDS 9000 Series multilayer switches. Cisco NX-OS Software contains a kickstart image and a system image; both images contain a major version identifier, a minor version identifier and a maintenance release identifier, and they may also contain a rebuild identifier, which may also be referred to as a support patch. (See Figure 6.)

Cisco NX-OS Software for the Cisco Nexus 7000 Series and MDS 9000 Series switches uses the numbering scheme that is illustrated in Figure 6.

Figure 6. Cisco NX-OS Software numbering for Cisco Nexus 7000 and MDS 9000 Series switches

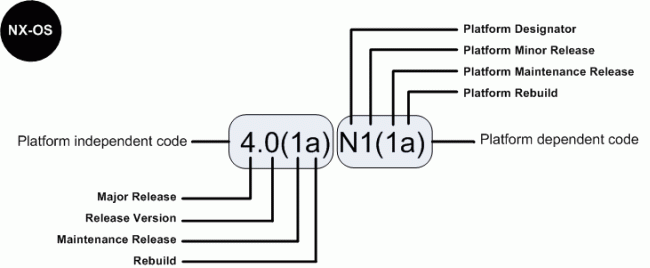

For the other members of the family, Cisco NX-OS Software uses a combination of platform-independent and platform-dependent schemes, as shown in Figure 6a.

Figure 6a. Cisco NX-OS Software numbering for the Nexus 4000 and 5000 Series and Nexus 1000 virtual switches

The platform designator is N for the Nexus 5000 Series switches, E for the Nexus 4000 Series switches, and S for the Nexus 1000 Series switches. In addition, the Nexus 1000 virtual switch uses a two-letter platform designation where the second letter indicates the hypervisor vendor that the virtual switch is compatible with, for example V for VMware. Features and fixes present in the platform-independent code are also present in the platform-dependent release. In Figure 6a above, bug fixes in Cisco NX-OS Software release 4.0(1a) are present in release 4.0(1a)N1(1a).
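The numbering scheme described above can be made concrete by splitting a version string into its parts. The Python below is a minimal sketch: the field names (in particular platform_major and platform_rev) are labels chosen here for illustration, not Cisco's official terms, and the pattern only covers strings shaped like the examples in this thread.

```python
import re
from typing import NamedTuple


class NxosVersion(NamedTuple):
    major: int            # platform-independent major version
    minor: int            # platform-independent minor version
    maintenance: str      # maintenance release, e.g. "1" or "1a"
    platform: str         # platform designator, e.g. "N" or "SV"
    platform_major: int   # number following the designator (assumed label)
    platform_rev: str     # value in the trailing parentheses (assumed label)


_PATTERN = re.compile(
    r"^(\d+)\.(\d+)\(([0-9a-z]+)\)\s*([A-Z]{1,2})(\d+)\s*\(([0-9a-z.]+)\)$"
)


def parse_nxos_version(version: str) -> NxosVersion:
    """Split a string such as 5.2(1)SV3(1.15) into its components."""
    match = _PATTERN.match(version.strip())
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return NxosVersion(
        int(match.group(1)),
        int(match.group(2)),
        match.group(3),
        match.group(4),
        int(match.group(5)),
        match.group(6),
    )


example = parse_nxos_version("5.2(1)SV3(1.15)")
```

For the example from the question, the platform field comes out as "SV": S for the Nexus 1000 family and V for VMware.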

-

Cisco Nexus 1000V Series Virtual Supervisor Module placement in the Cisco Unified Computing System

Hi all

I read a Cisco article entitled "Best Practices in Deploying Cisco Nexus 1000V Series Switches on Cisco UCS B and C Series Cisco UCS Manager Servers" http://www.cisco.com/en/US/prod/collateral/switches/ps9441/ps9902/white_paper_c11-558242.html

A lot of excellent information, but the section that intrigues me has to do with the placement of the VSM in the UCS. The article lists 4 options in order of preference, but does not provide details or the reasons underlying the recommendations. The options are the following:

============================================================

Option 1: VSM external to the Cisco Unified Computing System on the Cisco Nexus 1010

In this scenario, management operations of the virtual environment are accomplished in a method identical to existing non-virtualized environments. With multiple VSM instances on the Nexus 1010, multiple vCenter data centers can be supported.

============================================================

Option 2: VSM outside the Cisco Unified Computing System on the Cisco Nexus 1000V Series VEM

This model allows for centralized management of the virtual infrastructure, and has proven to be very stable...

============================================================

Option 3: VSM outside the Cisco Unified Computing System on the VMware vSwitch

This model allows isolation of the managed devices, and it migrates well to the Cisco Nexus 1010 Virtual Services Appliance model. A possible concern here is the management and operational model of the networking links between the VSM and VEM devices.

============================================================

Option 4: VSM inside the Cisco Unified Computing System on the VMware vSwitch

This model was also stable in test deployments. A possible concern here is the management and operational model of the networking links between the VSM and VEM devices, and having duplicate switching infrastructures within your Cisco Unified Computing System.

============================================================

As a beginner with both the Nexus 1000V and UCS, I hope someone can help me understand the configuration of these options and, equally important, provide a more detailed explanation of each option and the reasoning behind the preferences (pros and cons).

Thank you

Pradeep

No, they are different products. vASA will be a virtual version of our ASA device.

The ASA is a full-featured firewall.
