Deploy only a TestSequence

Hi, I have developed a TestStand 4.2.1 test sequence on my development PC. Now I have to deploy it on the client machine. The target computer has only the TestStand runtime installed, but all the software packages are already installed (LLBs, VIs, INI and DLL files, etc.) because another test sequence is already running on this machine.

The customer asked me to install my test under the following constraints:

- Don't change any folder or path on the target computer.

- Don't overwrite the code modules that are already installed.

I should install only my test sequence! I have never done an installation like this. Usually, I create a workspace that includes everything.

Do you know if this is possible?

Thank you very much for your support.

Hi, maybe I did not explain my problem very well; it certainly was not clear, not even to me. In my mind, the target machine was a clean machine with no NI software installed. I thought I would run the installer of the test sequence and nothing more (it also installs the LabVIEW runtime). This solution works (and I use it) if you add to the installer the software modules that the code uses. It creates all the internal links and a support folder where all the software modules are installed. But this isn't what I was looking for, because I must not change the software environment.

The solution is much easier. I installed the TestStand development system and the BT on the target machine. I configured TestStand to use the LabVIEW runtime and let the evaluation license expire. This way, the sequence did not need to be redeployed with all the software modules, so I can change a VI or the test sequence without changing anything else.

Thank you all.

Tags: NI Software

Similar Questions

-

Not able to deploy all libraries

I'm using LabVIEW 8.6.1, but was using 8.6 until today. I had not used LabVIEW for a few months until last week, and before last week everything worked very well. I loaded a project, ran it, and got an error. I checked my Distributed System Manager and noticed that this process was not running, not even the System process. The System process is always running, even when I'm not using LabVIEW. So something went wrong and I need recommendations. In Services, I can see that all of the appropriate services are running, including the Shared Variable Engine. But since projects no longer autodeploy and my System process is gone, I'm sure something is broken. Any ideas? Should I reinstall the SVE? How do you do that? Thank you.

So I have no way of knowing exactly what went wrong and why, but I uninstalled LabVIEW today and reinstalled it. My first test was to try a known-good project that had not worked since the ghosts in the machine took over last week. I received an error message indicating that the Shared Variable Engine had stopped working. What a relief: a different error message. Then I restarted the project and ran my VI, and LabVIEW told me that this version of Windows (Vista) cannot start and stop services programmatically, and what I should do about it. So I started the SVE, and as if by magic, all my projects work and my Distributed System Manager no longer acts up. So I guess it just needed a full-on kick in the pants. Still, it would have been nice if there was some quick way to reinstall a corrupted SVE instead of a 40-minute LabVIEW uninstall and reinstall.

-

SubVI references to deployed model VIs

Hi all

We use about 50 test sequences with TestStand 4.1.1 and LabVIEW 8.5. Development is done with LabVIEW and TestStand; production uses the TestStand runtime.

Our VIs reference vi.lib VIs, for example "C:\Program Files (x86)\National Instruments\LabVIEW 8.5\vi.lib\Utility\error.llb\Clear Errors.vi". After development, we deploy our test sequences to a network share, so that the production PCs can run them through the TestStand runtime. The references into "Program Files" are deployed to a .../SupportVIs folder so that the production PCs can find them, since they do not have the folder "C:\Program Files (x86)\National 8.5\vi.lib".

Some VIs are reused in new test sequences and copied into the test sequence's /NI_Labview folder. To check for errors before deploying, I run Mass Compile (LabVIEW > Tools > Advanced > Mass Compile...) on the /NI_Labview folder of the current test sequence. The mass compile output contains odd messages:

# Mass compile started: Thu, April 28, 2016 09:07:24

Directory: "C:\Stefsvn\TESTSET55\NI_Labview".

C:\Stefsvn\TESTSET55\NI_Labview\TESTSET55.llb\ReadConfig_DUT_parameters.VI

- VI expected to be at "S:\Teststand models\SupportVIs\Read key (U32).vi" was loaded from "C:\Program Files (x86)\National Instruments\LabVIEW 8.5\vi.lib\Utility\config.llb\Read key (U32).vi".

. VI C:\Stefsvn\TESTSET55\NI_Labview\TESTSET55 (Teststand)

- VI expected to be at "C:\Program Files (x86)\National 8.5\vi.lib\Utility\error.llb\Clear Errors.vi" was loaded from "S:\Teststand models\SupportVIs\Clear Errors.vi".

# Mass compile finished: Thu, April 28, 2016 09:07:27

LabVIEW searches for subVI references in our deployed TestStand files!

By running Mass Compile, I broke a deployed TestStand model VI. I want to avoid this scenario so that compiling and deploying a new test sequence does not affect production.

If I look at LabVIEW > Tools > Options > Paths > VI Search Path, I get the following:

\* \* \* \* C:\Program Files (x86)\National Instruments\LabVIEW 8.5\resource

Why does LabVIEW prefer the reference to the deployed model VI instead of the VI in the | vilib | folder? Is it because of 'S:\Teststand models\' in

? Can I prevent LabVIEW from searching the deployed VIs? Can I edit/clear

? Is there a built-in option to view all subVIs and their paths, or do I need to build it myself? I found this VI, http://www.ni.com/example/27094/en/, which looks like a good start.

Thanks in advance,

Stef

Hi Stef,

Your search paths are used top-down. The first two entries in your list are searched first, including subfolders, and vilib is searched only after these two. On my installation, I even deleted the list, since it caused me trouble on a regular basis. What I don't understand: do you want to change the deployed VIs, or are you working on a development project?

See you soon

Oli
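Oli's point that the VI search path is walked top-down, with a trailing asterisk meaning "include subfolders", can be modelled as a first-match lookup. The Python sketch below is only that model, not LabVIEW's actual loader; `resolve_vi` and the folder layout are hypothetical. It shows why a copy under a deployed folder listed early in the search path wins over the vi.lib copy the caller expected.

```python
import tempfile
from pathlib import Path


def resolve_vi(name, search_paths):
    """Return the first file named `name` found by walking `search_paths` in order.

    Each entry is searched recursively (like an entry ending in * in the VI
    search path), so an earlier entry always wins over a later one.
    Returns None when no entry contains the file.
    """
    for root in search_paths:
        for candidate in sorted(Path(root).rglob(name)):
            if candidate.is_file():
                return candidate
    return None


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Hypothetical layout: a deployed copy and a vi.lib copy of the same VI.
        deployed = Path(tmp, "Teststand models", "SupportVIs")
        vilib = Path(tmp, "vi.lib", "Utility")
        for folder in (deployed, vilib):
            folder.mkdir(parents=True)
            (folder / "Clear Errors.vi").write_text("stub")

        order = [Path(tmp, "Teststand models"), Path(tmp, "vi.lib")]
        # The deployed folder is listed first, so its copy is the one found.
        print(resolve_vi("Clear Errors.vi", order))
```

Reordering the list, or removing the deployed share from it, changes which copy is found, which is the setting Oli is pointing at.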

-

All,

I have a cRIO-9068 that I am trying to use in Scan Mode. I have installed all the latest drivers and software as explained. However, when I put my chassis in Scan Mode and then select Deploy All, I get this error on my chassis and all my modules:

"The current module settings require NI Scan Engine support on the controller. You can use Measurement & Automation Explorer (MAX) to install a recommended NI-RIO software set with NI Scan Engine support on the controller. If you installed LabVIEW FPGA, you can use this module with LabVIEW FPGA by adding an FPGA Target item under the chassis and dragging and dropping the module onto the FPGA Target item."

Does anyone know about this, or why LabVIEW does not recognize that the software is installed on my cRIO? Or is it not installed correctly?

AGJ,

Thanks for the image. I saw a green arrow beside all my chip icons, and it seemed to mean that the software wasn't actually being installed. I formatted my cRIO and did a custom install. My problem was that I had both LabVIEW 2013 and 2014 installed, and the cRIO got conflicting versions of the software. After doing a custom installation and choosing only the 2014 versions, my screen now looks like yours!

-

How do I develop on one cRIO and deploy on another?

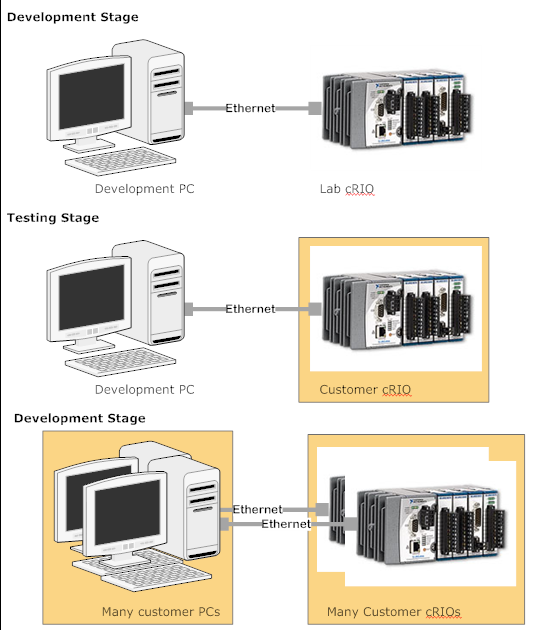

I have developed a cRIO application and tested it on another cRIO before deploying it on many other cRIOs (see the image).

I used to do this with real-time systems: develop in our facilities with our hardware, then test on the client's development system on site. But with the cRIO, things are a little different. In the Project Explorer window, I have the host (my computer) and the target (Lab cRIO). I tried the following:

- Connection: disconnect the Lab cRIO (via the Ethernet connection), connect the customer's cRIO, and then discover the new cRIO in Project Explorer. This works.

- Copy VIs and variables: in Project Explorer, I copied the same files from the Lab cRIO structure to the new cRIO. I did this by adding a VI and a shared variable library, the same ones as on the Lab cRIO. It seems to work.

- Running: the problem is when I try to run it, because it looks for the shared variables on the old cRIO.

System specs: LabVIEW 8.6.1, cRIO-9074 with various modules, configured in Scan Mode and to be deployed as an executable.

What is the best way to copy a project from one cRIO to another?

Hello

First off, I will say that this is the most nicely presented and informative problem description I have seen.

Secondly, you should be able to do the following:

- Instead of adding both targets to your project, just go to the target's properties (right-click the target, select Properties) and change the IP address to the one you are testing on. That is the simplest and cleanest method.

- Otherwise, with your current configuration, when you port code from one cRIO to the other, don't forget to right-click the target and select Deploy All. You must rebuild your application as well.

Please let me know how these suggestions work out for you.

Kind regards

Anna K.

-

Deploying local shared variables on a real-time target

Hello again, everyone.

I have read more posts and knowledge base articles about this topic than I can count at this point, and I'm afraid I'm still not clear on exactly how it works. I hope someone can clear it up for me, if only to earn themselves some more laurels.

I have a project with a real-time side and a Windows side. They communicate via network-published shared variables. The real-time side also uses single-process shared variables to communicate between loops. I intend for all 3 shared variable libraries (Windows->RT, RT->Windows, and RT Local) to be hosted on the RT target for reliability. The real-time executable must launch at startup and run even if the Windows side is not started (the Windows side is optional).

I realized that a real-time executable will not start the Shared Variable Engine and/or deploy the shared variables itself. I also read that I can't deploy the shared variables programmatically from the RT side. This leaves only two options that I know of:

(1) deploying them programmatically in the Windows-side program;

(2) deploying the shared variables on the RT target manually via the project in the LabVIEW development environment.

About option 1: as I said, running the Windows side is supposed to be optional, so having to run a program on the Windows side before the RT side will work is highly undesirable. Moreover, even if I write a small "Deploy shared variables" application that runs at Windows startup, I can't guarantee that it will finish before the RT executable starts. In that case, will the RT executable fail because the Shared Variable Engine is not running? If so, and the Windows side then starts the engine and deploys the shared variables, will the RT side begin to work automatically? If not, is it possible to trigger this restart from the Windows-side startup application?

Also, I read up on and tried the build option to deploy shared variables in the Windows-side application. Not only was my RT Local shared variable library not listed as an option (since the Windows-side application does not reference it at all, for obvious reasons), but when the application deployed the other two libraries at startup, the RT-side program (which was running in the development environment) stopped. I'm not positive that would happen if it were running as a real executable, but it is certainly enough to make me nervous. I assume the unlisted library issue could be resolved by including a network-published variable in the RT Local library and referencing it in the Windows-side app.

About option 2: I don't understand how I'm supposed to deploy my shared variable libraries without stopping the startup application running on the real-time target. Once I do, the only way to restart the RT application is to reboot the RT target, correct? In that case, haven't I just undone the whole point of deploying the shared variable libraries? Unless the libraries remain deployed and the Shared Variable Engine keeps running even after rebooting the RT target, which would solve the problem, I guess. I would certainly like to know if this is the case.

However, option 2 is complicated by the fact that when I manually right-click any of my shared variable libraries and select Deploy or Deploy All, the libraries still do not appear in the Distributed System Manager, even after clicking Refresh several times, on either the local system or the target system. The only thing that shows up, on both sides, is the 'System' group, with FieldPoint, etc. in it. The same is true when I run my real-time application in the development environment, even though the shared variables are clearly working, as I mentioned earlier.

So, if you have made it this far through this mammoth post, thanks! I have three main questions:

(1) Are all my descriptions above correct regarding how shared variables work?

(2) What is the best way to meet the requirements I described above for my project?

(3) Why are my shared variable libraries not appearing in my Distributed System Manager?

Thanks for any help you can give on any of these three questions!

-Joe

1. Yes. As soon as you deploy the project, the NSV is transactional. The SVE is loaded by MAX when you configure the RT target, and it starts as part of the boot sequence.

2. Can you see anything on your RT target in the DSM?

3. Yes, NSVs and the SVE persist across resets.

-

"Authentication failed" when trying to deploy an app to the device

Out of the blue, in the middle of the day yesterday, I can no longer deploy any apps to my Z30.

The message (I tried both Momentics and the blackberry-deploy command line) simply says "User authentication failed."

Note that this is different from the message you get when you specify the wrong password, which is "error: authentication failed. Attempt 1 of 5", so I know my password is correct.

I am able to SSH to the device successfully after using the blackberry-connect command, but I cannot upload a debug token or deploy applications.

That would do it. You must complete this setup before the device is fully functional over USB. Running sys.firstlaunch manually resets this flag.

-

Windows does not activate after deployment

I have 3 networks that all deploy Windows 7. Two networks use VMware and one uses WDS. When the machines are deployed, they report that Windows will try to activate in 3 days, but it never does. I can get a machine to activate either by pressing Activate Now or by running the command slmgr /ato from an administrator-level command prompt. Is it possible to automate this?

You will probably get a better answer if you post on the Windows 7 IT Pro forum: http://social.technet.microsoft.com/Forums/windows/en-US/home?forum=w7itproinstall

J W Stuart: http://www.pagestart.com
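As for automating the slmgr /ato step that the poster runs by hand, one option is to have whatever first-boot script the deployment already runs call the same command. A minimal Python sketch, assuming Python is present on the deployed image and the script runs with administrator rights (neither is stated in the thread); `activation_command` and `activate_windows` are hypothetical helper names. slmgr.vbs is run under cscript so its messages go to the console instead of message boxes.

```python
import os
import subprocess
import sys


def activation_command(system_root=None):
    """Build the command line for `slmgr /ato` (attempt activation now)."""
    root = system_root or os.environ.get("SystemRoot", r"C:\Windows")
    slmgr = root + r"\System32\slmgr.vbs"
    # cscript prints slmgr's output to the console; //NoLogo hides the banner.
    return ["cscript", "//NoLogo", slmgr, "/ato"]


def activate_windows():
    """Run the activation command and return its exit code (Windows only)."""
    if sys.platform != "win32":
        raise OSError("slmgr.vbs is only available on Windows")
    # Assumes the caller already has administrator rights.
    return subprocess.run(activation_command(), check=False).returncode
```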

-

Multiple ADF applications and deployment

Hi guys

I am a new JDeveloper user, coming from the IntelliJ and Eclipse world, and I'm having a little trouble setting up the project structure in JDeveloper.

I'm trying to set up an ADF project; the configuration is something like this:

- Pillar1

- Pillar2

- Pillar3

- PillarN

- PillarDeploy

Now, all the pillars are ADF applications with a model-view-controller project structure, and each of them deploys JAR files (i.e. Pillar1-model and Pillar1-view).

PillarDeploy should contain all the JAR files generated from Pillar1 to PillarN, packaged as a WAR file.

I've tried several things, but I keep ending up with a solution where I have to open each pillar and select Deploy, then copy the JAR file into the WEB-INF/lib folder of PillarDeploy, then deploy PillarDeploy. That seems very cumbersome...
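The manual copy step described above is easy to script while a proper build is being set up. A minimal Python sketch, assuming each pillar writes its JARs to its own deploy folder; the folder names and `collect_pillar_jars` are hypothetical, and packaging the WAR itself is still left to JDeveloper or the build tool.

```python
import shutil
from pathlib import Path


def collect_pillar_jars(pillar_deploy_dirs, web_inf_lib):
    """Copy every *.jar from each pillar's deploy folder into WEB-INF/lib.

    Existing JARs with the same name are overwritten, so re-running the
    script after rebuilding a pillar refreshes its copy. Returns the sorted
    list of copied file names.
    """
    target = Path(web_inf_lib)
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for deploy_dir in pillar_deploy_dirs:
        # Only JAR files are copied; other build output is ignored.
        for jar in sorted(Path(deploy_dir).glob("*.jar")):
            shutil.copy2(jar, target / jar.name)
            copied.append(jar.name)
    return sorted(copied)
```

If two pillars produce a JAR with the same file name, the later one silently overwrites the earlier one, so unique names per pillar (as in Pillar1-model, Pillar1-view) matter here.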

I would like to be able to deploy PillarDeploy as easily as possible, both in JDeveloper (by selecting deploy to WAR/IntegratedServer) and by running Maven, with or without an Ant script. Of course, I would like to do this the 'right' way, or follow best practice, so that I don't run into problems later.

Is it possible to include all the pillars in PillarDeploy in JDeveloper, so that when I press Deploy, all pillars are compiled and packaged into a WAR file?

Is it usually better to go with a Maven/Ant script? And how do I then deploy to JDeveloper's integrated server?

Thank you

You should look at using the ojdeploy utility for that, from Ant or Maven. https://pinboard.in/search/u:OracleADF?query=Ojdeploy
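As a rough illustration of driving ojdeploy per pillar from a script, here is a Python sketch that builds one ojdeploy call per pillar and runs them in order, stopping at the first failure. It assumes the ojdeploy options `-profile`, `-workspace` and `-project`; check them against the ojdeploy help for your JDeveloper version. The workspace, project and profile names are hypothetical, and in practice Ant or Maven would play this role.

```python
import subprocess


def ojdeploy_command(workspace, project, profile, ojdeploy="ojdeploy"):
    """Build one ojdeploy invocation (option names assumed, see lead-in)."""
    return [ojdeploy, "-profile", profile, "-workspace", workspace, "-project", project]


def deploy_pillars(pillars, run=subprocess.run):
    """Run ojdeploy for each (workspace, project, profile) tuple in order.

    check=True makes the default runner raise on the first failing build.
    `run` is injectable so the sequence can be checked without JDeveloper.
    """
    commands = [ojdeploy_command(*pillar) for pillar in pillars]
    for command in commands:
        run(command, check=True)
    return commands
```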

-

Hyperion 11.1.2.3: deploying server components on Linux and Windows

Hello

I'm new and this is perhaps a tricky question. I am trying to wrap my head around this idea: is it possible to install Hyperion Planning correctly on Linux? The Hyperion Java web applications, and especially the Foundation Services, can be installed on Linux.

However, some server components, such as the EPMA Dimension Server, can only be deployed on Windows. Does this mean it is best to install Hyperion Planning only on Windows servers, to keep all components on a single server? Are there examples of deployments where some parts of Hyperion were deployed on Linux AND others on Windows? There was a small section on page 151 of the EPM 11.1.2.3 installation guide, but we didn't understand how Windows and Linux would work together.

Let me know if I need to clear up any confusion about my question.

Thank you!

Hyperion works in a mixed-OS deployment. For the web applications, you must deploy them all on Windows or all on Linux.

Page 151 is about HFM. If you have HFM as the source for FR or Web Analysis reports, you must install and configure HFM and FR/Web Analysis on Windows to create the new WebLogic domain.

If you do not have HFM in your deployment, you need not worry.

Kind regards

Santy

-

Hello

I am trying to deploy a BP in the 9.12 environment and get the following error message:

"uDesigner has been updated to a newer version since this business process was last imported. In order to import it again, the referenced BPs must also be updated. Your uDesigner administrator must open the properties of the referenced BP in uDesigner (Edit Studio window), click OK, and re-import."

I tried to deploy all the referenced BPs again, but it still gives me this error.

Does anyone have a solution?

This often happens when uDesigner is updated to a new version. Mark all the BPs referenced by the BP you want to deploy as draft, open their properties, and change something in the description. Mark them as complete and deploy. Then do the same with the original BP.

-

Hi, can someone please help me? I've been trying to deploy the application to the remote server for the last two days, but I don't know what's wrong.

[17:39:12] ---- Deployment started. ----

[17:39:12] Target platform is (Weblogic 10.3).

[17:39:13] Retrieving existing application information

[17:39:13] Running dependency analysis...

[17:39:13] Building...

[17:39:14] Deploying 4 profiles...

[17:39:15] Wrote Web Application Module to C:\JDeveloper\mywork\KNM\All Approvals\APPViewController\deploy\All Approvals_APPViewController_webapp.war

[17:39:15] Moving WEB-INF/adfc-config.xml to META-INF/adfc-config.xml

[17:39:15] Wrote Archive Module to C:\JDeveloper\mywork\KNM\All Approvals\APPViewController\deploy\AdfLIBallApproval.jar

[17:39:15] Wrote Archive Module to C:\JDeveloper\mywork\KNM\All Approvals\APPModel\deploy\All Approvals_APPModel_adflib.jar

[17:39:16] Wrote Enterprise Application Module to C:\JDeveloper\mywork\KNM\All Approvals\deploy\ApprovalEARDeploy.ear

[17:39:16] Deploying Application...

[17:39:20] [Deployer:149193] Operation "deploy" on application "ApprovalEARDeploy" has failed on "AdminServer".

[17:39:20] [Deployer:149034] An exception occurred for task [Deployer:149026] deploy application ApprovalEARDeploy on AdminServer.: Failed to load webapp: 'All-Approvals-APPViewController-context-root'.

[17:39:20] WebLogic Server Exception: weblogic.application.ModuleException: Failed to load webapp: 'All-Approvals-APPViewController-context-root'.

[17:39:20] Caused by: weblogic.management.DeploymentException: Error: Unresolved Webapp Library references for "ServletContext@47003767[app:ApprovalEARDeploy module:all-approvals-APPViewController-context-root path:/All-Approvals-APPViewController-context-root spec-version:2.5]", defined in weblogic.xml [Extension-Name: jsf, Specification-Version: 2, exact-match: false]

[17:39:20] See the server logs or the server console for more details.

[17:39:20] weblogic.application.ModuleException: Failed to load webapp: 'All-Approvals-APPViewController-context-root'.

[17:39:20] Undeploying...

[17:39:20] ---- Deployment incomplete ----

[17:39:20] Remote deployment failed (oracle.jdevimpl.deploy.common.Jsr88RemoteDeployer)

Caused by: weblogic.management.DeploymentException: Error: Unresolved Webapp Library references for "ServletContext@47003767[app:ApprovalEARDeploy module:all-approvals-APPViewController-context-root path:/All-Approvals-APPViewController-context-root spec-version:2.5]", defined in weblogic.xml [Extension-Name: jsf, Specification-Version: 2, exact-match: false]

This indicates that the remote server does not have the right ADF runtime installed. Check whether the target server has an ADF runtime installed that matches the JDeveloper version you used to develop the application. See "JDeveloper Versions vs WebLogic Server Versions" on JDev & ADF Goodies for the matching versions.

Timo
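The bracketed part of the error, [Extension-Name: jsf, Specification-Version: 2, exact-match: false], is a library reference from weblogic.xml that no library deployed on the server satisfied. Below is a simplified Python model of that check, assuming that with exact-match false a deployed library of the same name with a specification version of at least 2 qualifies, and that the highest such version is picked. It is an approximation for reasoning about the error, not WebLogic's implementation; `resolve_library` and `Library` are hypothetical.

```python
from dataclasses import dataclass


def parse_version(text):
    """Turn "2" or "2.0.1" into a comparable tuple; trailing zeros are dropped so "2" == "2.0"."""
    parts = [int(p) for p in text.split(".")]
    while len(parts) > 1 and parts[-1] == 0:
        parts.pop()
    return tuple(parts)


@dataclass(frozen=True)
class Library:
    name: str
    spec_version: str


def resolve_library(name, spec_version, exact_match, deployed):
    """Pick the deployed library that satisfies a reference, or None if unresolved."""
    wanted = parse_version(spec_version)
    candidates = []
    for lib in deployed:
        if lib.name != name:
            continue
        have = parse_version(lib.spec_version)
        ok = have == wanted if exact_match else have >= wanted
        if ok:
            candidates.append(lib)
    # Assumption: among matching libraries, the highest specification version wins.
    return max(candidates, key=lambda lib: parse_version(lib.spec_version), default=None)
```

A `None` result corresponds to the "Unresolved Webapp Library references" error: no suitable jsf library is deployed on the server, which fits Timo's point about the missing ADF runtime.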

-

Deploy an OVF to a resource pool

Hello

I've read the PDF and have tried several different ways, but I couldn't figure it out. I was wondering if someone could give me an example of the syntax for deploying an OVF into a resource pool on the target.

Thanks for the help.

Ray

Hello, naabers-

According to the OVF Tool documentation (at http://www.vmware.com/support/developer/ovf/ovf20/ovftool_201_userguide.pdf), it seems that you should be able to deploy an OVF to a resource pool by using something like:

ovftool.exe -ds=myDatastore01 testAppliance.ovf vi://myVCenterServer/TestDatacenter/host/esx-host1.example.com/Resources/SmallResourcePool

You would substitute the correct values for the vCenter server, datacenter name, host name and resource pool name. The other keywords, 'host' and 'Resources', need to stay, because they are static components of the path. The document covers the resource pool part of the path on page 19.

And, through my testing, I found that if you deploy directly to a host (vs. through vCenter), the syntax would be something like:

ovftool.exe -ds=myDatastore01 testAppliance.ovf vi://esxiHostName.example.com/myResPool0

which leaves out the path to the resource pool and just uses the resource pool's name, probably due to the different 'context' when connected directly to a host.

How does that work for you?
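The two locator forms in this reply differ only in which path segments are present. A small Python sketch that assembles them, assuming the layouts given above (through vCenter: datacenter/host/HOST/Resources/POOL; directly on an ESXi host: just the pool name) and that special characters such as spaces must be percent-escaped in the locator. The function names are hypothetical, and the code only builds strings; it does not call OVF Tool.

```python
from urllib.parse import quote


def _escape(segment):
    # Percent-escape spaces and other special characters in one path segment.
    return quote(segment, safe="")


def vcenter_pool_locator(vcenter, datacenter, host, pool_path):
    """vi:// locator for a resource pool reached through vCenter.

    'host' and 'Resources' are fixed path segments; nested pools are given as
    "Parent/Child" and each level is escaped separately.
    """
    segments = [datacenter, "host", host, "Resources", *pool_path.split("/")]
    return f"vi://{vcenter}/" + "/".join(_escape(s) for s in segments)


def direct_host_pool_locator(esxi_host, pool_name):
    """vi:// locator for a resource pool when connecting straight to an ESXi host."""
    return f"vi://{esxi_host}/{_escape(pool_name)}"


def ovftool_args(datastore, source, locator):
    """Argument list in the same shape as the commands above: ovftool -ds=DATASTORE SOURCE TARGET."""
    return ["ovftool", f"-ds={datastore}", source, locator]
```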

-

PowerCLI to deploy virtual machines at the same time

I can deploy multiple VMs using PowerCLI, but they are created one after another, and with an error. It almost seems easier to deploy them manually so that they are created at the same time rather than one after the other. I also want static IPs but don't know how.

For now, I use an OS customization specification that specifies a static IP for the guest. But it never works: it deploys the VMs, then throws an error stating 'A specified parameter was not correct. nicsettings:adapter:ip'. I want to increment the IP addresses of the VMs as they are deployed.

This is the content of my basic .ps1 file:

New-VM -VMHost vm04a.domain.com -Name w2k8sp2s64qa14-vm -Template Template_w2k8sp2s64qa -Datastore vm04a_storage -OSCustomizationSpec w2k8sp2s64

Can someone help me?

1. Assign static IP addresses

2. Deploy all virtual machines at the same time
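For the first item, the plan itself (which VM gets which address) can be computed before any VM is created. A minimal Python sketch using the standard ipaddress module; the naming scheme and `plan_static_ips` are hypothetical. Feeding each pair into PowerCLI is not shown; one route worth checking in the PowerCLI reference is a per-VM customization spec adjusted with Set-OSCustomizationNicMapping and its static IP mode.

```python
import ipaddress


def plan_static_ips(base_name, first_ip, count, network):
    """Return (vm_name, ip) pairs with the IP incremented by one per VM.

    Raises ValueError if an address would fall outside the usable host range
    of `network` (the network and broadcast addresses are excluded).
    """
    net = ipaddress.IPv4Network(network)
    first = ipaddress.IPv4Address(first_ip)
    plan = []
    for i in range(count):
        ip = first + i
        if ip not in net or ip in (net.network_address, net.broadcast_address):
            raise ValueError(f"{ip} is not a usable host address in {net}")
        # Two-digit suffix: qa-vm01, qa-vm02, ...
        plan.append((f"{base_name}{i + 1:02d}", str(ip)))
    return plan
```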

...

Use the -RunAsync parameter on the New-VM cmdlet.

The script will continue with the line after New-VM without waiting for the New-VM cmdlet to finish.
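The -RunAsync pattern is "start everything, then wait": PowerCLI hands back task objects that can be waited on afterwards (for example with Wait-Task). The same shape in Python with concurrent.futures, where `deploy_vm` is a hypothetical stand-in for one New-VM call that only sleeps:

```python
import time
from concurrent.futures import ThreadPoolExecutor


def deploy_vm(name):
    """Hypothetical stand-in for one New-VM call; sleeps to simulate the work."""
    time.sleep(0.2)
    return f"{name}: deployed"


def deploy_all(names, max_parallel=4):
    """Start every deployment first, then collect the results in submission order."""
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        # submit() returns at once, like New-VM -RunAsync returning a task.
        futures = [pool.submit(deploy_vm, name) for name in names]
        # result() blocks until that deployment finishes, like waiting on the task.
        return [future.result() for future in futures]
```

With four names and max_parallel=4, the batch takes roughly one deployment's time instead of four. Keeping a cap like max_parallel is a deliberate choice, so that a large batch does not start every clone at once.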

-

Deploying a large Oracle BPM application with several user interface projects

Hello all

I'm working on a large application with Oracle BPM 11.1.1.5.0.

I use JDeveloper 11.1.1.5.0 to build the application.

The application has many user tasks, with a lot of UIs (one for each user task).

My problem is that whenever I make a small change in the project, I need to deploy everything, which includes a master project and five or six UI projects (for now) for the user tasks.

As the application grows, I'm sure this will become a problem.

So my question is: am I doing this the right way, or is there a more effective way?

Thanks in advance

Published by: luke on April 17, 2012 12:16 AM

Hi Luke

1. As a general rule, there is NO need to have a UI project for each individual task. Say you have about 10 tasks (.task files), such as Initiator, Approver, Reviewer, LegalApprover, etc. You can have just a single TaskForms UI project and generate a taskDetails.jspx for each of these tasks. This is the appropriate approach.

2. Any workflow application should have only 2 deployments. One is for the real workflow stuff: the main BPEL or BPM process, human tasks, etc., basically everything that goes into the workflow project. The other deployment covers the REST of things: the TaskForms UI project and other supporting projects such as utility projects, web services, and EJB projects (if you have web services). All of this will be deployed as a SINGLE EAR.

Let's take an example from the JDeveloper IDE point of view.

1. Application name: SalesOrderApp

2. Workflow project name: SalesProcess (a JDeveloper project of SOA or BPM project type that has all the human tasks, BPELs, BPM processes, rules, etc.).

3. User interface project: SalesTaskForms (generated automatically for the first task you choose. Then, for the rest of the tasks, you can use this same project to generate the .jspx for all the other .task files. See the online documentation on how to do it. I'll see if I can compile a list of URLs for you).

4. Supporting EJB project: MyEJBProject1 (an EJB project that connects to your specific back-end database)

5. Another supporting service EJB project: MyExtServiceProject1 (another EJB project that uses data from an external source)

6. Some web service project: SalesCreditCardValidationWebService (a web service project, deployed as a .WAR file, that performs a service). Try to apply SOA concepts to each service your application needs. Instead of putting all the EJBs and everything into one giant project, try to split them logically by business need and generate separate projects, so that they can be maintained as they are and reused by other projects and applications.

Anyway, coming back to the example above, you should have just 2 deployments: one is the SCA JAR file for the workflow and the other is the complete EAR file.

(a) For the workflow project, right-click and create a deployment profile that deploys only the workflow, NOT the other UI projects.

(b) For each individual project, such as MyEJBProj1, MyEJBProj2, MyWebService1, etc., generate a deployment profile.

(c) For the TaskForms project, generate a deployment profile such as SalesTaskForms. It is a WAR file.

(d) At the application level, create a new deployment profile of the EAR type and assemble all the projects into this EAR, except the workflow project.

First, deploy the workflow SCA JAR file. You need to redeploy it only when you make changes to the workflow, human tasks, etc.

Then deploy the full-blown EAR file that has all the stuff (EJB JARs, TaskForm WARs, web service WARs, etc.). Whenever you change your taskforms, Java, or EJB code, redeploy the EAR file.

You should have one JAR and one EAR to deploy at the end of the day. In fact, this is how it should go into UAT and production. They may not want many JARs and multiple WARs deployed. The reason we separate out the workflow SCA JAR file is that the workflow does not change frequently. Also, older versions of SOA/BPM (before 11.5 FP) have a huge disadvantage: whenever we redeploy a workflow project, existing process instances become STALE, unless you deploy a different version. This isn't a problem anymore in 11.5 + FP (Feature Pack applied). Therefore, be careful when you redeploy the workflow project.
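The rule of thumb in this answer (redeploy the SCA JAR only for workflow changes, the EAR for everything else) can be written down as a small lookup. A Python sketch using the project names from the SalesOrderApp example; `artifacts_to_redeploy` and the artifact labels are hypothetical.

```python
# Project names from the SalesOrderApp example; the artifact labels are illustrative.
WORKFLOW_PROJECTS = {"SalesProcess"}
EAR_PROJECTS = {
    "SalesTaskForms",
    "MyEJBProject1",
    "MyExtServiceProject1",
    "SalesCreditCardValidationWebService",
}


def artifacts_to_redeploy(changed_projects):
    """Map changed projects to the deployments that need to be redone."""
    changed = set(changed_projects)
    unknown = changed - WORKFLOW_PROJECTS - EAR_PROJECTS
    if unknown:
        raise ValueError(f"unknown projects: {sorted(unknown)}")
    artifacts = []
    if changed & WORKFLOW_PROJECTS:
        # Redeploy with care on versions before the Feature Pack (stale instances).
        artifacts.append("workflow SCA JAR")
    if changed & EAR_PROJECTS:
        artifacts.append("application EAR")
    return artifacts
```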

Thank you

Ravi Jegga
