Error when running flat file reconciliation

Hi all,

I tried to reconcile a flat file using the GTC connector. The format of my flat file (PPSLocal.txt) is as follows:

*##*

Name of the account. Full name | Field | Last login Timestamp | Description | GUID | Mail | Employee ID | First name | Family name

PPS\SophosSAUPPS010 | SophosSAUPPS010 | PPS.LOCAL | Used to download Sophos updates | EED86D86-750C-404A-9326-044A4DB07477 |

PPS\GBPPL-SI08$ | GBPPL-SI08$ | PPS.LOCAL | 79677F4D-8959-493E-9CF9-CDDDB175E40B |

PPS\S6Services | Series 6 Services | PPS.LOCAL | Series 6 Services | B4F41EE2-6744-4064-95F6-74E017D0B9AF |

I created a GTC connector named "CurrentDomain" with the following configuration:

Staging directory (parent identity data): /home/GTC

Archive directory: /home/CWG/archive

File prefix: PPS

Specified delimiter.

File encoding: UTF8

Source date format: YYYY/MM/DD hh:mm:z

Reconcile deletion of multivalued attribute data: unchecked

Reconciliation type: Full

After that I completed the connector's mapping configuration and ran the corresponding scheduled task, which displays the following error message on the console:

Class/Method: SharedDriveReconTransportProvider/getFirstPage - Before calling: getAllData

DEBUG, August 20, 2009 09:08:49,202, [XELLERATE.GC.PROVIDER.RECONCILIATIONTRANSPORT], Class/Method: SharedDriveReconTransportProvider/getAllData entered.

DEBUG, August 20, 2009 09:08:49,202, [XELLERATE.GC.PROVIDER.RECONCILIATIONTRANSPORT], Class/Method: SharedDriveReconTransportProvider/getReconFileList entered.

DEBUG, August 20, 2009 09:08:49,202, [XELLERATE.GC.PROVIDER.RECONCILIATIONTRANSPORT], Class/Method: SharedDriveReconTransportProvider.EndsWithFilter/accept entered.

INFO, August 20, 2009 09:08:49,202, [XELLERATE.GC.PROVIDER.RECONCILIATIONTRANSPORT], Staging dir->/home/CWG

INFO, August 20, 2009 09:08:49,202, [XELLERATE.GC.PROVIDER.RECONCILIATIONTRANSPORT], Number of files available->0

DEBUG, August 20, 2009 09:08:49,202, [XELLERATE.GC.PROVIDER.RECONCILIATIONTRANSPORT], Class/Method: SharedDriveReconTransportProvider/getReconFileList left.

ERROR, August 20, 2009 09:08:49,202, [XELLERATE.GC.PROVIDER.RECONCILIATIONTRANSPORT], Problem encountered in reconciling first page of data

com.thortech.xl.gc.exception.ReconciliationTransportException: No parent files in staging directory or file access permissions (READ) missing

at com.thortech.xl.gc.impl.recon.SharedDriveReconTransportProvider.getAllData(Unknown Source)

at com.thortech.xl.gc.impl.recon.SharedDriveReconTransportProvider.getFirstPage(Unknown Source)

at com.thortech.xl.gc.runtime.GCScheduleTask.execute(Unknown Source)

at com.thortech.xl.scheduler.tasks.SchedulerBaseTask.run(Unknown Source)

at com.thortech.xl.scheduler.core.quartz.QuartzWrapper$TaskExecutionAction.run(Unknown Source)

at weblogic.security.acl.internal.AuthenticatedSubject.doAs(AuthenticatedSubject.java:321)

at weblogic.security.service.SecurityManager.runAs(Unknown Source)

at weblogic.security.Security.runAs(Security.java:41)

at Thor.API.Security.LoginHandler.weblogicLoginSession.runAs(Unknown Source)

at com.thortech.xl.scheduler.core.quartz.QuartzWrapper.execute(Unknown Source)

at org.quartz.core.JobRunShell.run(JobRunShell.java:178)

at org.quartz.simpl.SimpleThreadPool$WorkerThread.run(SimpleThreadPool.java:477)

ERROR, August 20, 2009 09:08:49,202, [XELLERATE.GC.FRAMEWORKRECONCILIATION], Reconciliation encountered error:

com.thortech.xl.gc.exception.ProviderException: No parent files in staging directory or file access permissions (READ) missing

at com.thortech.xl.gc.impl.recon.SharedDriveReconTransportProvider.getFirstPage(Unknown Source)

at com.thortech.xl.gc.runtime.GCScheduleTask.execute(Unknown Source)

at com.thortech.xl.scheduler.tasks.SchedulerBaseTask.run(Unknown Source)

at com.thortech.xl.scheduler.core.quartz.QuartzWrapper$TaskExecutionAction.run(Unknown Source)

at weblogic.security.acl.internal.AuthenticatedSubject.doAs(AuthenticatedSubject.java:321)

at weblogic.security.service.SecurityManager.runAs(Unknown Source)

at weblogic.security.Security.runAs(Security.java:41)

at Thor.API.Security.LoginHandler.weblogicLoginSession.runAs(Unknown Source)

at com.thortech.xl.scheduler.core.quartz.QuartzWrapper.execute(Unknown Source)

at org.quartz.core.JobRunShell.run(JobRunShell.java:178)

at org.quartz.simpl.SimpleThreadPool$WorkerThread.run(SimpleThreadPool.java:477)

Caused by: com.thortech.xl.gc.exception.ReconciliationTransportException: No parent files in staging directory or file access permissions (READ) missing

at com.thortech.xl.gc.impl.recon.SharedDriveReconTransportProvider.getAllData(Unknown Source)

... 11 more

WARN, August 20, 2009 09:08:49,203, [XELLERATE.GC.FRAMEWORKRECONCILIATION], Though the reconciliation scheduled task has encountered an error, the reconciliation transport providers have been "ended" smoothly. Any provider operation that occurs during that "end" or "clean-up" phase would have been executed, e.g. data archival. In case you want that data to be part of the next reconciliation run, restore it from staging. The provider logs should contain details about the entities that were archived.

DEBUG, August 20, 2009 09:08:49,203, [XELLERATE.GC.PROVIDER.RECONCILIATIONTRANSPORT], Class/Method: SharedDriveReconTransportProvider/end entered.

DEBUG, August 20, 2009 09:08:49,203, [XELLERATE.GC.PROVIDER.RECONCILIATIONTRANSPORT], Class/Method: SharedDriveReconTransportProvider/end - After calling: re-setting instance variables

DEBUG, August 20, 2009 09:08:49,203, [XELLERATE.GC.PROVIDER.RECONCILIATIONTRANSPORT], Class/Method: SharedDriveReconTransportProvider/end - After calling: re-setting instance variables

DEBUG, August 20, 2009 09:08:49,203, [XELLERATE.GC.PROVIDER.RECONCILIATIONTRANSPORT], Class/Method: SharedDriveReconTransportProvider/end left.

DEBUG, August 20, 2009 09:08:49,203, [XELLERATE.SCHEDULER.TASK], Class/Method: SchedulerBaseTask/run left.

DEBUG, August 20, 2009 09:08:49,203, [XELLERATE.SCHEDULER.TASK], Class/Method: SchedulerBaseTask/isSuccess entered.

DEBUG, August 20, 2009 09:08:49,203, [XELLERATE.SCHEDULER.TASK], Class/Method: SchedulerBaseTask/isSuccess left.

DEBUG, August 20, 2009 09:08:49,203, [XELLERATE.SERVER], Class/Method: SchedulerTaskLocater/removeLocalTask entered.

DEBUG, August 20, 2009 09:08:49,203, [XELLERATE.SERVER], Class/Method: SchedulerTaskLocater/removeLocalTask left.

DEBUG, August 20, 2009 09:08:49,203, [XELLERATE.SERVER], Class/Method: QuartzWrapper/updateStatusToInactive entered.

DEBUG, August 20, 2009 09:08:49,207, [XELLERATE.SERVER], Class/Method: QuartzWrapper/updateStatusToInactive left.

DEBUG, August 20, 2009 09:08:49,207, [XELLERATE.SERVER], Class/Method: QuartzWrapper/updateTaskHistory entered.

DEBUG, August 20, 2009 09:08:49,208, [XELLERATE.SERVER], Class/Method: QuartzWrapper/updateTaskHistory left.

DEBUG, August 20, 2009 09:08:49,209, [XELLERATE.SERVER], Clearing the security associations with the scheduled task thread

DEBUG, August 20, 2009 09:08:49,210, [XELLERATE.SERVER], Class/Method: QuartzWrapper/run left.

DEBUG, August 20, 2009 09:08:49,210, [XELLERATE.SERVER], Class/Method: QuartzWrapper/execute left.

However, to my surprise, reconciliation events for all users are created in the OIM Design Console.

I also tried changing the permissions of the /home/GTC directory to 777, but it did not help.

If anyone has any idea on this subject, kindly help.

Cheers,

Sunny

Caused by: com.thortech.xl.gc.exception.ReconciliationTransportException: No parent files in staging directory or file access permissions (READ) missing

Just check the folder where you put the flat file. The error shows that the file is not there at the moment; also check the permissions on it.

Please check and let me know.
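The check suggested above can be sketched in a few lines of Python. This is only an illustration, not part of OIM or the GTC: the function name `find_recon_files` is hypothetical, and the script must be run as the same OS user that runs the application server, otherwise the permission test says nothing about what the scheduled task can read. It is also worth comparing the directory passed in with the "Staging dir" value printed in the log, since the log reports 0 files available in the directory it actually scanned.

```python
import os
import tempfile


def find_recon_files(staging_dir, prefix):
    """Return the readable regular files in staging_dir whose names start with prefix."""
    if not os.path.isdir(staging_dir):
        raise FileNotFoundError(f"staging directory not found: {staging_dir}")
    matches = []
    for name in sorted(os.listdir(staging_dir)):
        path = os.path.join(staging_dir, name)
        # The file must match the configured prefix, be a regular file,
        # and be readable by the current OS user.
        if name.startswith(prefix) and os.path.isfile(path) and os.access(path, os.R_OK):
            matches.append(name)
    return matches


if __name__ == "__main__":
    # Demo against a throwaway directory; replace it with the real staging path.
    with tempfile.TemporaryDirectory() as staging:
        open(os.path.join(staging, "PPSLocal.txt"), "w").close()
        open(os.path.join(staging, "other.txt"), "w").close()
        print(find_recon_files(staging, "PPS"))
```

An empty result for the real staging path and the prefix "PPS" would be consistent with the "Number of files available->0" line in the log.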

Tags: Fusion Middleware

Similar Questions

-

OIM: trusted flat file reconciliation

Hello

I am doing a trusted flat file reconciliation, but no user is imported.

The mapping and the GTC connector settings look OK. The file is moved from the staging directory to the archive directory, but no user is created.

Everything is done as described in this OBE (http://www.oracle.com/technology/obe/fusion_middleware/im1014/oim/obe12_using_gtc_for_reconciliation/using_the_gtc.htm).

Is there a way to enable tracing or logging to see what is actually happening?

Thanks in advance.

Published by: user5037528 on December 4, 2008 02:03

Hello

Do you still get the same error message? Are any required fields missing a value?

The required fields are:

User login

First name

Last name

Email (?) -> I am not sure it is required, but you provide it, so it is not the problem

Password (?) -> the GTC generates the password during table reconciliation, so it should do the same with files

User type

Employee type

Organization

+ all required UDFs.

It is important that the values provided for Organization, User type and Employee type already exist in your OIM before the reconciliation runs.

If you have all these fields defined in your GTC and you still get this error, I guess the problem might be the mapping change you made.
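As a quick way to test the "no value in a required field" theory before running the recon, the file can be scanned outside OIM. The sketch below is an assumption-laden illustration: the column names in `REQUIRED` are hypothetical and must be replaced with the header names actually used in the file, the delimiter is assumed to be "|", and the text passed in is assumed to start with the header row (strip any leading metadata line first).

```python
import csv
import io

# Hypothetical header names; use the ones from your own flat file.
REQUIRED = ["User Login", "First Name", "Last Name",
            "User Type", "Employee Type", "Organization"]


def missing_required(text, delimiter="|", required=REQUIRED):
    """Return {line_number: [names of empty required fields]} for bad records."""
    reader = csv.DictReader(io.StringIO(text), delimiter=delimiter)
    problems = {}
    for row in reader:
        # A field counts as missing when it is absent or blank.
        missing = [f for f in required if not (row.get(f) or "").strip()]
        if missing:
            problems[reader.line_num] = missing
    return problems


if __name__ == "__main__":
    sample = (
        "User Login|First Name|Last Name|User Type|Employee Type|Organization\n"
        "jdoe|John|Doe|End-User|Full-Time|Xellerate Users\n"
        "asmith|Anna|Smith|End-User|Full-Time|\n"
    )
    print(missing_required(sample))
```

This only checks that the values are present; whether the Organization, User type and Employee type values exist in OIM still has to be verified there.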

Kind regards

-

GTC - fixed-length flat file reconciliation

Hello

I have a .txt file consisting of a series of numbers such as 12345678912345678912345678912345678 (35 characters in total, as described in the OIM 9.1.0.1 Administrator's Guide), one per line:

12345678912345678912345678912345UIA

87132653912345678912345678912345DIC

12345678912345678912345678954321UIC

.......

These series of numbers provide values for various UDFs I created in OIM, for example:

Characters 1-5 represent the postal code

Characters 6-15 represent the contact info.

I tried the GTC with a fixed-length flat file to reconcile all of these values into OIM for a particular user.

But here's the problem:

In the mapping schema it only shows me the first record, that is, the first line (the first set of numbers) in the file. Other than that, it doesn't show me any records at all.

Can someone give me more ideas on how to configure this particular GTC?

Thanks in advance!
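To make the intended layout concrete, here is a minimal Python sketch of how such a fixed-length record splits into fields. It does not configure the GTC; it only illustrates the positions described in the post. The field names and the `remainder` slice for characters 16-35 are assumptions, since the post only defines the first two ranges.

```python
# (name, start, end) using 0-based, end-exclusive offsets:
# characters 1-5 -> [0:5], characters 6-15 -> [5:15].
FIELDS = [
    ("postal_code", 0, 5),
    ("contact_info", 5, 15),
    ("remainder", 15, 35),  # assumption: the post does not define this range
]


def parse_fixed_width(line, fields=FIELDS):
    """Split one fixed-length record into a dict of named fields."""
    return {name: line[start:end] for name, start, end in fields}


def parse_records(text):
    """Parse every non-blank line; each line is one record."""
    return [parse_fixed_width(line) for line in text.splitlines() if line.strip()]


if __name__ == "__main__":
    sample = (
        "12345678912345678912345678912345UIA\n"
        "87132653912345678912345678912345DIC\n"
    )
    for record in parse_records(sample):
        print(record)
```

Each line yields its own record, which is the behaviour the post expects from the reconciliation run as opposed to the single sample row shown in the mapping screen.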

-

oidm. So the first time, users are created successfully. But the next time, on update, it gives the error "Connection ID is duplicate" as stated above. It takes the hardcoded value again and again.

Just to answer that: you have created the user in OIM. Now you have made some changes to the FF user's attributes, such as mobile number, city, zip, etc.

Now you say that when the recon runs again, it takes the hardcoded user ID.

It will do exactly that, because this is how an update is treated: the user name in the FF is considered updated as well. Just think about the scenario: you created the user in OIM from the FF with SSN as the unique attribute. Now you change the FF user's attributes: zip, phone number, and user ID as well.

So, according to you, what should it do?

It should update the user name. Am I wrong?

That is what is happening in your case.

To fix this, simply remove the user ID field from the FF recon and put your code in the before-insert step, then let me know what happens.

Let me know if you still have doubts.

Published by: Arnaud

-

GTC flat file reconciliation error

I am trying to bring users into OIM using a flat file recon.

Here is my error...

<13 February 2013 10:54:50 EST> <Error> <org.quartz.impl.jdbcjobstore.JobStoreCMT> <BEA-000000> <MisfireHandler: Error handling misfires: Unexpected runtime exception: null

org.quartz.JobPersistenceException: Unexpected runtime exception: null [See nested exception: java.lang.NullPointerException]

at org.quartz.impl.jdbcjobstore.JobStoreSupport.doRecoverMisfires(JobStoreSupport.java:3042)

at org.quartz.impl.jdbcjobstore.JobStoreSupport$MisfireHandler.manage(JobStoreSupport.java:3789)

at org.quartz.impl.jdbcjobstore.JobStoreSupport$MisfireHandler.run(JobStoreSupport.java:3809)

Caused by: java.lang.NullPointerException

at org.quartz.SimpleTrigger.computeNumTimesFiredBetween(SimpleTrigger.java:800)

at org.quartz.SimpleTrigger.updateAfterMisfire(SimpleTrigger.java:514)

at org.quartz.impl.jdbcjobstore.JobStoreSupport.doUpdateOfMisfiredTrigger(JobStoreSupport.java:944)

at org.quartz.impl.jdbcjobstore.JobStoreSupport.recoverMisfiredJobs(JobStoreSupport.java:898)

at org.quartz.impl.jdbcjobstore.JobStoreSupport.doRecoverMisfires(JobStoreSupport.java:3029)

at org.quartz.impl.jdbcjobstore.JobStoreSupport$MisfireHandler.manage(JobStoreSupport.java:3789)

at org.quartz.impl.jdbcjobstore.JobStoreSupport$MisfireHandler.run(JobStoreSupport.java:3809)

>

<13 February 2013 10:54:52 EST> <Error> <oracle.iam.platform.utils> <BEA-000000> <Cannot apply DMSMethodInterceptor on oracle.iam.reconciliation.impl.ActionEngine>

<13 February 2013 10:54:52 EST> <Warning> <XELLERATE.GC.PROVIDER.RECONCILIATIONTRANSPORT> <BEA-000000> <FILE SUCCESSFULLY ARCHIVED: /home/oracle/Desktop/GTCinput/identities.txt~>

Any help is greatly appreciated!

Select the Advanced tab, click Search Reconciliation Events, and then search for the events.

Check whether the events are generated or not. If they are generated, check whether you see errors on the events.

If Yes, then please paste the error message.

-

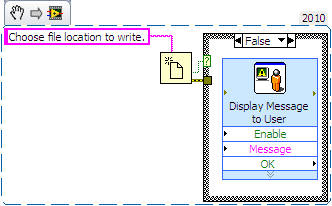

Error with the "Open/Create/Replace File" function when the Cancel button is clicked

I have attached a very simple VI that shows what I want to do with my open-file function. I just want to stop the rest of my program (which would sit inside the Case Structure) from running if the user chooses not to specify a file location.

However, if you click the Cancel button, an error is raised before the rest of the program runs. If you ignore the error and continue, the "cancelled" variable is correctly set to true and the Case Structure runs correctly. I just want to suppress the error message that LabVIEW gives me.

Any ideas on why or how?

Read the LabVIEW help on automatic error handling.

In your case, you can just wire the error cluster output to your Case Structure.

-

DB not starting due to ORA-00205: error in identifying control file, on 11g RAC

Hello

I have configured 11g RAC (11.2.0.1.0) on OEL5. When I try to start the database, either with srvctl or manually, I get the error below.

PRCR-1079 : Failed to start resource ora.rac.db

ORA-00205: error in identifying control file, check alert log for more info

CRS-2674: Start of 'ora.rac.db' on 'ractwo' failed

CRS-2632: There are no more servers to try to place resource 'ora.rac.db' on that would satisfy its placement policy

ORA-00205: error in identifying control file, check alert log for more info

CRS-2674: Start of 'ora.rac.db' on 'racone' failed

The contents of the alert log are below.

ORA-00210: cannot open the specified control file

ORA-00202: control file: '+RACDG/rac/controlfiles/control02.ctl'

ORA-17503: ksfdopn:2 Failed to open file +RACDG/rac/controlfiles/control02.ctl

ORA-15001: diskgroup "RACDG" does not exist or is not mounted

ORA-15055: unable to connect to ASM instance

ORA-27140: attach to post/wait facility failed

ORA-27300: OS system dependent operation:invalid_egid failed with status: 1

ORA-27301: OS failure message: Operation not permitted

ORA-27302: failure occurred at: skgpwinit6

ORA-27303: additional information: startup egid = 500 (oinstall), current egid = 503 (asmadmin)

ORA-00210: cannot open the specified control file

ORA-00202: control file: '+RACDG/rac/controlfiles/control01.ctl'

ORA-17503: ksfdopn:2 Failed to open file +RACDG/rac/controlfiles/control01.ctl

ORA-15001: diskgroup "RACDG" does not exist or is not mounted

ORA-15055: unable to connect to ASM instance

ORA-27140: attach to post/wait facility failed

ORA-27300: OS system dependent operation:invalid_egid failed with status: 1

ORA-27301: OS failure message: Operation not permitted

ORA-27302: failure occurred at: skgpwinit6

ORA-27303: additional information: startup egid = 500 (oinstall), current egid = 503 (asmadmin)

But my ASM instance is running and I am able to see the controlfiles.

State    Type    Rebal  Sector  Block  AU       Total_MB  Free_MB  Req_mir_free_MB  Usable_file_MB  Offline_disks  Voting_files  Name

MOUNTED  EXTERN  N      512     4096   1048576  20472     13772    0                13772           0              N             RACDG/

I don't know whether there is a permission problem. Oracle Clusterware and the DB are running as user 'oracle'.

Has anybody faced this problem before? Let me know where to look for this error.

Kind regards

007

Hello

Check the permissions of the oracle binary; the correct permissions must be "-rwsr-s--x".

Stop the CRS.

Change the permissions of the file GI_HOME/bin/oracle to "-rwsr-s--x":

$ su - grid

$ cd GI_HOME/bin

$ chmod 6751 oracle

$ ls -l oracle

Start the CRS.

Start your database.

Thank you

Sundar
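For readers unsure how the octal mode 6751 relates to the string "-rwsr-s--x", the mapping can be verified with Python's standard `stat` module. This is just a small verification aid, not part of the fix; the helper names are hypothetical.

```python
import os
import stat


def mode_string(octal_mode):
    """Render permission bits for a regular file the way `ls -l` shows them."""
    return stat.filemode(stat.S_IFREG | octal_mode)


def file_mode_string(path):
    """Return the `ls -l` style mode string of an existing file."""
    return stat.filemode(os.stat(path).st_mode)


if __name__ == "__main__":
    # 6 = setuid + setgid, 7 = rwx (owner), 5 = r-x (group), 1 = --x (other)
    print(mode_string(0o6751))  # prints -rwsr-s--x
```

Calling `file_mode_string` on the oracle binary under the Grid Infrastructure home should show the same string after the chmod.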

-

Print the information in a flat file

Is there a way to, say, create a string array and print it to a flat file using Flex?

Thank you

Bill

In Flex, you cannot write to a file, but with an AIR application, you can. Otherwise, you may need to use HTTPService to transfer the data to the server and then write the file on the server using PHP, Python, Java, etc.

If this post has answered your question or helped, please mark it as such.

-

NULL values in the numeric columns in flat files

Some of my sources are flat files that sometimes have NULL values mixed with numbers in numeric columns that are part of the key. This of course causes mismatches during the insert/update integration process. What, if any, is the best 'ODI' way to change NULL values to zeroes before the integration process?

Thank you!

It is hard to create constraints or checks on flat file models.

So the way we do it is to load the flat files into raw tables that look 100% like the flat files, and then perform these transformations or checks on them.
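The transformation itself is simple. The sketch below shows it in plain Python on delimited rows, purely to illustrate the rule (NULL or empty in a numeric key column becomes 0); it is not ODI code, and in the approach described above the same rule would be applied to the raw tables. The delimiter, the column positions and the function name are assumptions.

```python
def null_to_zero(line, numeric_columns, delimiter="|", null_tokens=("", "NULL")):
    """Replace empty or NULL values with "0" in the given 0-based columns."""
    fields = line.rstrip("\n").split(delimiter)
    for i in numeric_columns:
        # Only touch columns that exist and hold a recognised null marker.
        if i < len(fields) and fields[i].strip().upper() in null_tokens:
            fields[i] = "0"
    return delimiter.join(fields)


if __name__ == "__main__":
    for row in ["A|NULL|3", "B||", "C|12|7"]:
        print(null_to_zero(row, numeric_columns=[1, 2]))
```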

After that, they are loaded into the target system.

-

Library exported to external hard drive, error when trying to link to the files on the hard drive

Hello

I recently started using Lightroom and stored the files on the local drive of my MacBook Pro (current operating system) until I ran out of space. I exported the catalog to an external HD, then deleted the files from the local disk. Then I tried to import the exported .lrcat file from the external hard drive and received this error message:

"Cannot import this catalog at this time. The catalog is in use by another copy of Lightroom or was not closed properly. If it was not closed properly, please consider running an integrity check when opening it."

I'm not running LR on any other computer, so I'm thinking that Lightroom was closed improperly. However, I cannot figure out what to do, and technical support will not help me without the serial number, which I can't get because I'm travelling. Any advice would be sincerely appreciated.

Jordan

If you did indeed export the catalog to the external hard drive, you don't need to import it. Just double-click the copy of the catalog that is located on your external drive.

Also, your serial number is available under Help > System Info.

HAL

-

PSE9: Suddenly getting an error when processing camera RAW files

I am running PSE9 and went to process several RAW files today, as I have done several times in the past. However, today, even though the settings are still set to JPEG Max Quality, I was suddenly prompted to save each file as a Photoshop file. Then it started to process all files automatically as in the past, but now I get the following error for each individual image file.

Start the processing of multiple files

File: 'C:\Users\desktop1\Desktop\2011_08_20_Early_Morning_Garden\_MG_0992.CR2'

Error: The parameters for command "Save" are not currently valid. (-25923)

I made no changes to PSE9 and, as I said, have done this several times previously.

Any help would be greatly appreciated.

Thank you

You might have set Camera Raw to open the files in 16 bits/channel.

Open a raw file and, at the bottom of the Camera Raw dialog box, choose 8 bits/channel under the bit depth and click OK, and then try Process Multiple Files again.

MTSTUNER

-

create or replace procedure Dynamic_Table AS
  iVal     VARCHAR2(32);
  iTemp    VARCHAR2(200) := '';
  sql_stmt VARCHAR2(200);
  l_file1  UTL_FILE.FILE_TYPE;
  l_file   UTL_FILE.FILE_TYPE;
BEGIN
  -- 'TEST' is the Oracle directory object that holds dinput.txt
  l_file1 := UTL_FILE.FOPEN('TEST', 'dinput.txt', 'R');
  EXECUTE IMMEDIATE 'CREATE TABLE baseline (Item_ID varchar2(32))';
  LOOP
    BEGIN
      -- read one line and insert it; GET_LINE raises NO_DATA_FOUND at end of file
      UTL_FILE.GET_LINE(l_file1, iVal);
      EXECUTE IMMEDIATE 'insert into baseline values (:ival)' USING iVal;
    EXCEPTION
      WHEN NO_DATA_FOUND THEN EXIT;
      WHEN OTHERS THEN dbms_output.put_line(SQLERRM);
    END;
  END LOOP;
  -- close the file handle once all lines have been read
  UTL_FILE.FCLOSE(l_file1);
END;

You are approaching this the wrong way. Create an external table based on the file. External tables expose CSV or fixed-width file data as a queryable table.

You will need to create an Oracle directory object for the location of the file (MY_ORA_DIR); I leave that for you to do. Then run the following...

create table BASELINE (
  ITEM_ID varchar2(32)
)
ORGANIZATION EXTERNAL
(
  TYPE ORACLE_LOADER
  DEFAULT DIRECTORY MY_ORA_DIR
  ACCESS PARAMETERS
  (
    RECORDS DELIMITED BY NEWLINE
    LOGFILE 'dinput.log'
    BADFILE 'dinput.bad'
    NODISCARDFILE
    FIELDS
    (
      ITEM_ID
    )
  )
  LOCATION ('dinput.txt')
)
REJECT LIMIT UNLIMITED
/

Any rejected records will appear in dinput.bad, and dinput.log will give you the details.

External tables are read-only, so once your file is set up, you can create an editable copy as a normal table:

create table ITABLE_EDITABLE as
select * from BASELINE
/

Job done, in a few lines of code.

-

Windows 7 backup error "the backup completed, but some files were skipped - 0x8100002F."

Original title: error on backup windows 7 files ignored

Have used the backup of windows 7 with no problems for several years.

Get this error now: "the backup completed, but some files were skipped - 0x8100002F"

Log shows:

Backup encountered a problem while backing up file C:\Users\HOME\Pictures\2013-08-17. Error: (The system cannot find the file specified. (0x80070002))

Backup encountered a problem while backing up file C:\Users\HOME\Pictures\2013-08-17-dup. Error: (The system cannot find the file specified. (0x80070002))

I checked my PC's hard drive with Windows Explorer and the files above do not exist.

I tried changing my backup settings so that the Pictures folder of the HOME account is not backed up, and I still get the error. I store all of my photos on another account (the admin account), so there is no need to back up photos on the HOME account.

Please help me get rid of this annoying error.

Hello

This problem occurs when Windows Backup skips certain files because it cannot find them. Windows Backup backs up Documents, Pictures, Music and Videos through the user libraries. So if the path of a folder has changed, the libraries do not recognize the change until you open the corresponding library in Windows Explorer.

Please try the steps in the Microsoft KB article and check whether it helps.

Error code 0x8100002F and/or error code 0x80070002 when you back up files in Windows 7.

http://support.Microsoft.com/kb/979281

I hope this helps. If the problem persists, please reply and we will be happy to help you.

-

Error when reading a flat file through an external table... "ORA-01849: hour must be between 1 and 12"

My question is: is it possible for me to fix this error at the level of the external table definition? Please advise.

Here is the data file I am trying to load...

KSEA|2015-08-10-17.00.00|83.000000|32.000000|5.800000

KBFI|2015-08-06-15.00.00|78.000000|35.000000|0.000000

KSEA|2015-08-10-11.00.00|73.000000|55.000000|5.800000

KSEA|2015-08-08-05.00.00|61.000000|90.000000|5.800000

KBFI|2015-08-06-16.00.00|78.000000|36.000000|5.800000

KSEA|2015-08-07-18.00.00|82.000000|31.000000|10.400000

KSEA|2015-08-10-00.00.00|65.000000|61.000000|4.600000

KBFI|2015-08-08-07.00.00|63.000000|84.000000|4.600000

KSEA|2015-08-10-15.00.00|81.000000|34.000000|8.100000

This is the external table script:

CREATE TABLE MWATCH.WEATHER_EXT
(
  LOCATION_SAN     VARCHAR2(120 BYTE),
  WEATHER_DATETIME DATE,
  TEMP             NUMBER(16),
  MOISTURE         NUMBER(16),
  WIND_SPEED       NUMBER(16)
)
ORGANIZATION EXTERNAL
( TYPE ORACLE_LOADER
  DEFAULT DIRECTORY METERWATCH
  ACCESS PARAMETERS
  ( records delimited by newline
    badfile METERWATCH:'weather_bad' logfile METERWATCH:'weather_log'
    fields terminated by '|' missing field values are null
    ( location_san, WEATHER_DATETIME char date_format DATE mask "YYYY-mm-dd-hh.mi.ss", TEMP, MOISTURE, wind_speed )
  )
  LOCATION (METERWATCH:'weather.dat')
)
REJECT LIMIT UNLIMITED
PARALLEL ( DEGREE 5 INSTANCES 1 )
NOMONITORING;

Here are the errors that are written to the generated file...

error processing column WEATHER_DATETIME in row 1 for datafile /export/home/camsdocd/meterwatch/weather.dat

ORA-01849: hour must be between 1 and 12

error processing column WEATHER_DATETIME in row 2 for datafile /export/home/camsdocd/meterwatch/weather.dat

ORA-01849: hour must be between 1 and 12

error processing column WEATHER_DATETIME in row 5 for datafile /export/home/camsdocd/meterwatch/weather.dat

ORA-01849: hour must be between 1 and 12

error processing column WEATHER_DATETIME in row 6 for datafile /export/home/camsdocd/meterwatch/weather.dat

ORA-01849: hour must be between 1 and 12

error processing column WEATHER_DATETIME in row 7 for datafile /export/home/camsdocd/meterwatch/weather.dat

ORA-01849: hour must be between 1 and 12

error processing column WEATHER_DATETIME in row 9 for datafile /export/home/camsdocd/meterwatch/weather.dat

ORA-01849: hour must be between 1 and 12

My question is: is it possible for me to fix this error at the level of the external table definition? Please advise.

Yes, it is possible. The problem is your date mask. Your mask is for the 12-hour format while your data is in 24-hour format. Change your date mask to "YYYY-mm-dd-hh24.mi.ss". Note the change: hh becomes hh24.
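The same 12-hour versus 24-hour distinction exists in most date parsers, so the failure is easy to reproduce outside Oracle. The sketch below uses Python's `datetime.strptime` as an analogy only: `%I` plays the role of Oracle's `hh` and `%H` the role of `hh24`. The sample values are hours taken from the data file above; the helper name is hypothetical.

```python
from datetime import datetime

FMT_12H = "%Y-%m-%d-%I.%M.%S"  # analogous to the mask YYYY-mm-dd-hh.mi.ss
FMT_24H = "%Y-%m-%d-%H.%M.%S"  # analogous to the mask YYYY-mm-dd-hh24.mi.ss


def try_parse(value, fmt):
    """Return the parsed datetime, or None when the value does not fit the format."""
    try:
        return datetime.strptime(value, fmt)
    except ValueError:
        return None


if __name__ == "__main__":
    for value in ["2015-08-10-17.00.00", "2015-08-10-11.00.00", "2015-08-10-00.00.00"]:
        print(value, try_parse(value, FMT_12H), try_parse(value, FMT_24H))
```

With the 12-hour format, only the 11.00.00 value parses; hours 17 and 00 are rejected, which matches the pattern of rejected rows in the error output. With the 24-hour format, all three parse.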

-

Facing an issue when writing data to a flat file with UTL_FILE

Hi gurus,

We have a procedure that writes the data from a table to a flat file. RAC is implemented on this database.

While writing data, if the current instance changes, this procedure creates two copies of the file, each holding part of the data.

Can anybody help me solve this problem?

Thanks in advance...

I also asked this question, but it seems there is no definitive solution...

In any case, here are two possibilities:

(1) share the directory for the file among all nodes

(2) run your procedure on one specific node

-

I noticed that in the latest versions the flat VMDK files have disappeared and we're back to the old way of doing things, with just the VMDK. In which version did this change back? I have not found much info about it on the web.

Can you please clarify your question? There are always two files for each virtual disk, a descriptor/header file and a data file (flat/delta), although only the one without flat or delta in its name appears in the datastore browser.

André
