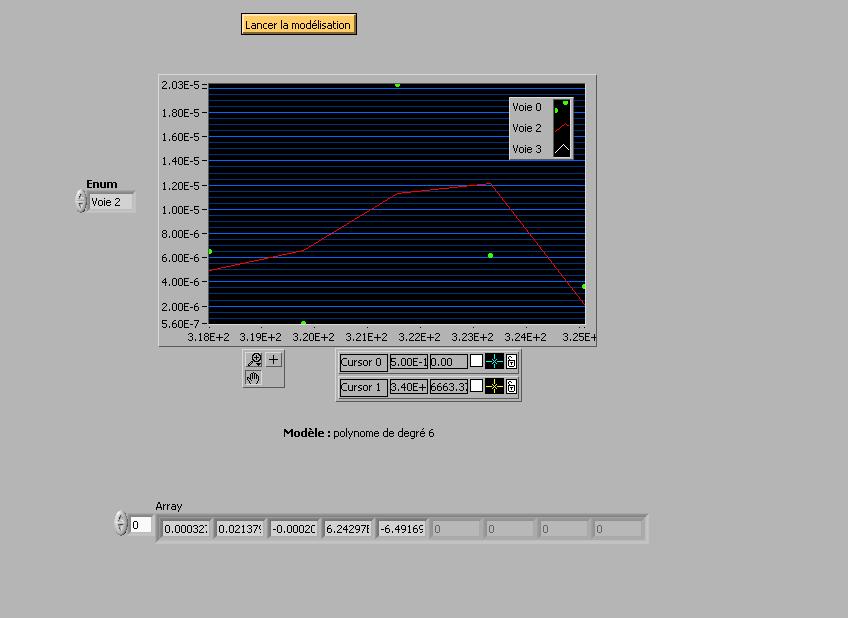

General Polynomial Fit: SVD method produces obviously wrong fits

I am using LabVIEW 2009 SP1.

For orders 0-3, it seems to work. Orders 4 and above produce results that are so clearly not a better fit that I can't imagine how it's possible. I've seen other posts about it, but nobody seems to fully recognize that this is clearly a bug. Other methods work well, at least by eye, and agree completely with each other on the least-squares fit.

Can someone explain this? Is there a bug report for it? Does 2010 still have this problem?

So you can play with the fits and watch the nonsense, I have attached a VI with my data set and a loop.

Floating-point numbers are stored as mantissa * 2^(exponent), with a certain number of bits to store each. Let's say that you use m bits to store the mantissa and that the exponent is n. As a general rule, the first bit of the mantissa is assumed to be one, so you effectively have m + 1 bits. The smallest increment you can represent is (1/2^(m+1)) * 2^n, or 2^(n-m-1). As the number grows, the increment grows as well, which is why we normally say you have x significant digits for a given representation. Absolute accuracy, as expressed by the size of this increment, depends on the magnitude of the number.
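Java's `Math.ulp` makes the same point concretely. This sketch assumes IEEE 754 double precision (52 stored mantissa bits plus the implicit leading one, so m = 52 in the notation above, up to the normalization convention); `UlpDemo` is a hypothetical helper name.

```java
/** Shows that the gap between adjacent doubles (one "ulp") grows with magnitude. */
final class UlpDemo {
    private UlpDemo() {}

    /** Gap between x and the next double of larger magnitude. */
    static double spacing(double x) {
        return Math.ulp(x);
    }

    /** Relative gap: stays between 2^-53 and 2^-52 for normal doubles. */
    static double relativeSpacing(double x) {
        return Math.ulp(x) / Math.abs(x);
    }
}
```

For example, `spacing(1.0)` is about 2.2e-16 while `spacing(1e12)` is about 1.2e-4, yet `relativeSpacing` stays within the same narrow band for both.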

What's my point? Scaling X works in this case because the small X values do not fully exploit all the bits at their disposal. Dividing by, say, 10^4 does not affect the precision of the small numbers, but it helps considerably with the large X values raised to high powers. At some point you start to lose on the small X values, and further scaling no longer helps. Then you either declare victory, change algorithms, change the representation, or reformulate (for example, shift) the problem.
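To see why shifting and scaling X helps, one can compare how far apart the magnitudes of the power columns 1, t, t^2, ... are before and after the transform t = (x - shift) / scale. This Java sketch uses a simple max-over-min ratio of column magnitudes as a rough warning sign; that ratio is an illustrative proxy assumed here, not the matrix condition number, and `PowerSpread` is a hypothetical name.

```java
/** Compares the magnitude spread of the power columns 1, t, t^2, ..., t^order. */
final class PowerSpread {
    private PowerSpread() {}

    /**
     * Ratio between the largest and smallest column maxima of the matrix with
     * entries t_i^k, where t_i = (x_i - shift) / scale. A huge ratio hints at an
     * ill-conditioned least-squares problem.
     */
    static double spread(double[] x, int order, double shift, double scale) {
        double largest = 0.0;
        double smallest = Double.POSITIVE_INFINITY;
        for (int k = 0; k <= order; k++) {
            double colMax = 0.0;
            for (double xi : x) {
                double t = (xi - shift) / scale;
                colMax = Math.max(colMax, Math.abs(Math.pow(t, k)));
            }
            largest = Math.max(largest, colMax);
            smallest = Math.min(smallest, colMax);
        }
        return largest / smallest;
    }
}
```

With x values between -6460 and -6440 and order 4, the raw ratio is about 1.7e15, while shift = -6450 and scale = 10 bring it down to 1.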

The Givens and Householder (I always use Householder) transformations are inherently well suited to this type of problem (it's no coincidence that they are popular). Most algorithms also include scaling of the data to improve numerical stability.
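A least-squares polynomial fit via Householder QR can be sketched in Java as below. This is a from-scratch illustration under stated assumptions (ascending coefficient order, no weighting, no column scaling), not LabVIEW's implementation; `HouseholderPolyFit` is a hypothetical name. Working on the design matrix directly avoids forming the normal equations, whose condition number is the square of the design matrix's; for data far from zero, shifting and scaling x first is still worthwhile.

```java
/** Least-squares polynomial fit using Householder QR (illustrative sketch). */
final class HouseholderPolyFit {
    private HouseholderPolyFit() {}

    /** Returns c[0..order] (ascending powers) minimizing sum_i (y_i - sum_k c_k x_i^k)^2. */
    static double[] fit(double[] x, double[] y, int order) {
        int m = x.length;
        int n = order + 1;
        if (y.length != m || m < n) {
            throw new IllegalArgumentException("need matching arrays with at least order+1 points");
        }
        double[][] a = new double[m][n];   // design (Vandermonde) matrix, overwritten by R
        double[] b = y.clone();            // right-hand side, overwritten by Q^T y
        for (int i = 0; i < m; i++) {
            double p = 1.0;
            for (int k = 0; k < n; k++) {
                a[i][k] = p;
                p *= x[i];
            }
        }
        double[] v = new double[m];
        for (int k = 0; k < n; k++) {
            double norm = 0.0;
            for (int i = k; i < m; i++) norm += a[i][k] * a[i][k];
            norm = Math.sqrt(norm);
            if (norm == 0.0) throw new ArithmeticException("rank-deficient design matrix");
            // Reflect column k onto alpha * e_k; the sign choice avoids cancellation.
            double alpha = a[k][k] > 0 ? -norm : norm;
            for (int i = k; i < m; i++) v[i] = a[i][k];
            v[k] -= alpha;
            double vv = 0.0;
            for (int i = k; i < m; i++) vv += v[i] * v[i];
            // Apply H = I - 2 v v^T / (v^T v) to the remaining columns and to b.
            for (int j = k; j < n; j++) {
                double dot = 0.0;
                for (int i = k; i < m; i++) dot += v[i] * a[i][j];
                double f = 2.0 * dot / vv;
                for (int i = k; i < m; i++) a[i][j] -= f * v[i];
            }
            double dotB = 0.0;
            for (int i = k; i < m; i++) dotB += v[i] * b[i];
            double fb = 2.0 * dotB / vv;
            for (int i = k; i < m; i++) b[i] -= fb * v[i];
        }
        // Back substitution on the upper-triangular R.
        double[] coef = new double[n];
        for (int k = n - 1; k >= 0; k--) {
            double s = b[k];
            for (int j = k + 1; j < n; j++) s -= a[k][j] * coef[j];
            coef[k] = s / a[k][k];
        }
        return coef;
    }
}
```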

Tags: NI Software

Similar Questions

-

Polynomial trendline does not fit the points

Hello.

I need to create a calibration curve by fitting the experimental points with a 3rd-order polynomial. I tried General Polynomial Fit.vi (see attachment). The strange thing is that the curve does not go through the points. Please take a look at my code; maybe I missed something?

smercurio_fc wrote:

In addition, there is no need to use the formula node at all.

You also don't need to compute the polynomial explicitly. Just use Polynomial Evaluation from the Polynomial palette. (See the image.)

The nice thing is that no code changes are necessary if you later decide to fit a higher (or lower) order instead. This solution is much more scalable.

Make the polynomial order a control and you can change it on the fly.
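The order-independence described above comes from evaluating whatever coefficient array the fit returns. A Java sketch of the same idea using Horner's rule follows; it assumes ascending order (a0 + a1*x + a2*x^2 + ...), and `PolyEval` is a hypothetical name, not the LabVIEW Polynomial Evaluation VI.

```java
/** Evaluates a polynomial with coefficients in ascending order (a0, a1, a2, ...). */
final class PolyEval {
    private PolyEval() {}

    /** Horner's rule: works for any array length, i.e. any polynomial order. */
    static double eval(double[] coef, double x) {
        double result = 0.0;
        for (int k = coef.length - 1; k >= 0; k--) {
            result = result * x + coef[k];
        }
        return result;
    }

    /** Evaluates the polynomial at every point of xs. */
    static double[] evalAll(double[] coef, double[] xs) {
        double[] out = new double[xs.length];
        for (int i = 0; i < xs.length; i++) out[i] = eval(coef, xs[i]);
        return out;
    }
}
```

Changing the fit order only changes the length of `coef`; the evaluation code stays the same.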

-

"Residue" in the General Polynomial Fit function

Do any of you statistics fans understand how LabVIEW calculates the "residue" output of the General Polynomial Fit function? How is it related to the R^2 value of an Excel polynomial trendline?

I've tried to figure this out for hours, but the LabVIEW help files do not seem to have the info I'm looking for, and I can't find a good explanation online.

Thank you

FB

I figured it out. I was looking at the TOTAL error of all the elements, rather than the average error.
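Going by the conclusion above that the residue is an average rather than a total error, its relationship to Excel's R^2 can be sketched as below. Assumptions (not confirmed in the thread): all weights are 1, so the residue is SSE/n, and R^2 uses the usual definition 1 - SSE/SST for a trendline with a free intercept. Under those assumptions, R^2 = 1 - n * residue / SST. `FitStats` is a hypothetical name.

```java
/** Goodness-of-fit measures for fitted values yFit against data y. */
final class FitStats {
    private FitStats() {}

    /** Sum of squared residuals (the "total" error). */
    static double sse(double[] y, double[] yFit) {
        double s = 0.0;
        for (int i = 0; i < y.length; i++) {
            double r = y[i] - yFit[i];
            s += r * r;
        }
        return s;
    }

    /** Mean squared error: SSE divided by the number of points (unweighted). */
    static double meanSquaredError(double[] y, double[] yFit) {
        return sse(y, yFit) / y.length;
    }

    /** Coefficient of determination R^2 = 1 - SSE / SST. */
    static double rSquared(double[] y, double[] yFit) {
        double mean = 0.0;
        for (double v : y) mean += v;
        mean /= y.length;
        double sst = 0.0;
        for (double v : y) sst += (v - mean) * (v - mean);
        return 1.0 - sse(y, yFit) / sst;
    }
}
```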

-

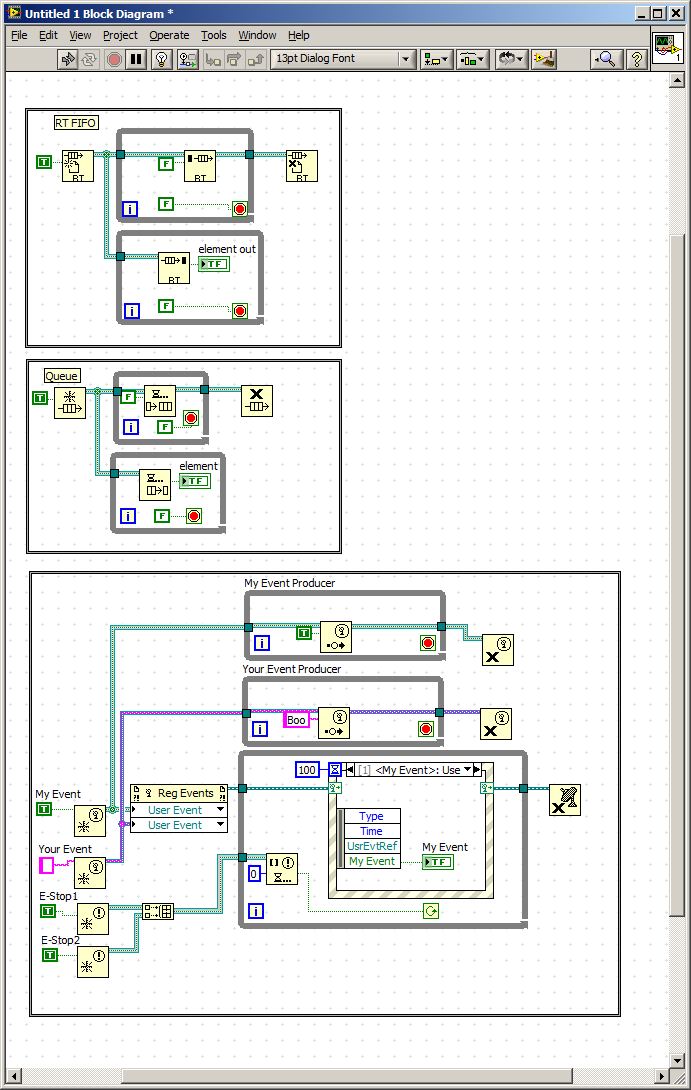

What is the fastest producer-consumer method: queue, RT-FIFO, or event?

Hi all

Another question for the pros:

I recently inherited LabVIEW code that uses an RT-FIFO as the transfer mechanism in a producer-consumer architecture.

The code was first written 3-4 years ago and is currently in LV 8.6. It is possible that the reasons for that architectural decision no longer apply.

I am experienced with a queued producer-consumer architecture.

I understand the RT-FIFO architecture.

I have started using an architecture based on user events.

(I have attached samples of each.)

I also see that a priority queue exists.

Each method has its own strengths and weaknesses; that said, does anyone know the relative performance of each method?

(Assuming it is the only process.)

I would expect RT-FIFO to be faster; it seems to be a low-level version of a standard queue.

What is the performance hit of using a more user-friendly queue?

The RT-FIFO description talks about communication between time-critical and lower-priority threads.

Until today, I thought that queues had the same capability.

I've included an event-based method that I commonly use, for peer review and to help other users...

It allows:

1. several producers with different data types

2. processing in production order

3. asynchronous checking of functional notifiers such as stop, start and abort

4. on a non-real-time system, front panel interactions to be included

What I do not understand about it is what overhead or thread priority changes may occur with this architecture (it solves a lot of problems for me).

Thanks in advance,

Hi Timmar,

Here's a KB article on some frequently asked questions about the RT FIFO: http://digital.ni.com/public.nsf/allkb/7AE7075AF1B2C58486256AED006A029F?OpenDocument

The most relevant question is #4, which I posted below.

What is the difference between RT FIFOs and queues?

Functionally, RT FIFOs and LabVIEW queues are both first-in, first-out buffers. However, here are the main differences between them:

- RT FIFOs execute deterministically in LabVIEW time-critical code, while queues do not. This comes from the fact that queues use blocking calls when reading/writing the shared resource, while RT FIFOs use non-blocking calls.

- RT FIFOs are fixed-size, while queues grow as elements are added to them.

- RT FIFOs will execute even if there are input errors. They can (and will) generate new errors and propagate existing errors.

- Queues work with any data type, while the data types that can be used with RT FIFOs are limited. Generally, any data type that involves extra memory allocation cannot be used with RT FIFOs, in order to preserve determinism.
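The first two differences (fixed size, non-blocking access) can be illustrated outside LabVIEW with a preallocated ring buffer. The Java class below is a rough analogue assumed for illustration, not how RT FIFOs are implemented: it is safe only for one producer thread and one consumer thread, never allocates after construction, and returns immediately instead of waiting when full or empty. By contrast, `java.util.concurrent.LinkedBlockingQueue` allocates a node per element and its `put`/`take` calls block, which is closer to an ordinary queue. `SpscDoubleFifo` is a hypothetical name.

```java
import java.util.concurrent.atomic.AtomicLong;

/** Fixed-size, preallocated, non-blocking FIFO for one producer and one consumer thread. */
final class SpscDoubleFifo {
    private final double[] buffer;                    // allocated once, never resized
    private final AtomicLong head = new AtomicLong(); // index of the next slot to read
    private final AtomicLong tail = new AtomicLong(); // index of the next slot to write

    SpscDoubleFifo(int capacity) {
        if (capacity <= 0) throw new IllegalArgumentException("capacity must be positive");
        buffer = new double[capacity];
    }

    /** Non-blocking write: returns false instead of waiting when the FIFO is full. */
    boolean offer(double value) {
        long t = tail.get();
        if (t - head.get() == buffer.length) return false;
        buffer[(int) (t % buffer.length)] = value;
        tail.set(t + 1); // volatile write publishes the slot to the consumer
        return true;
    }

    /** Non-blocking read: returns NaN when empty (a sketch convention; NaN data is ambiguous). */
    double poll() {
        long h = head.get();
        if (h == tail.get()) return Double.NaN;
        double value = buffer[(int) (h % buffer.length)];
        head.set(h + 1); // frees the slot for the producer
        return value;
    }

    int size() {
        return (int) (tail.get() - head.get());
    }
}
```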

Let us know if you have any further questions!

Ryan

-

General Polynomial Fit of a curve with 5 points

Hi, I'm trying to fit a curve to only 5 points with the General Polynomial Fit function, but it doesn't seem to work. I tried all the algorithms and orders up to 5 (the number of points). Do you have a solution that gives the wonderful fit we get with Excel?

I think you're using the coefficients in the wrong order. If the coefficients are a, the formula is:

y = a0 + a1*x + a2*x^2 + ...

Or, using your labels, element 0 is e, element 1 is d, ...
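To make the ordering issue concrete: if element 0 holds the constant term (as in the formula above), evaluating the same array as if it were in descending order (the way Excel displays a trendline, a*x^2 + b*x + c) gives a different polynomial. A hypothetical Java sketch (`CoefOrder` is an illustrative name):

```java
/** Coefficient-order helpers: ascending = {a0, a1, a2, ...}, descending = {..., a2, a1, a0}. */
final class CoefOrder {
    private CoefOrder() {}

    /** Returns a reversed copy (ascending <-> descending). */
    static double[] reversed(double[] coef) {
        double[] out = new double[coef.length];
        for (int i = 0; i < coef.length; i++) out[i] = coef[coef.length - 1 - i];
        return out;
    }

    /** Evaluates ascending coefficients: a0 + a1*x + a2*x^2 + ... (Horner from the top). */
    static double evalAscending(double[] a, double x) {
        double r = 0.0;
        for (int k = a.length - 1; k >= 0; k--) r = r * x + a[k];
        return r;
    }

    /** Evaluates descending coefficients, e.g. a*x^2 + b*x + c stored as {a, b, c}. */
    static double evalDescending(double[] d, double x) {
        double r = 0.0;
        for (double c : d) r = r * x + c;
        return r;
    }
}
```

Feeding {1, 2, 3} (meaning 1 + 2x + 3x^2) to the descending evaluator at x = 2 gives 11 instead of 17, which is the kind of mismatch that makes a correct fit look wrong.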

Hope this helps,

Daniel

-

Calculating a 2nd-order polynomial curve fit through 3 points

Hi all

I wonder if there is a simple way to calculate the coefficients a, b and c of a 2nd-order polynomial equation of the form

y = ax^2 + bx + c

given 3 known points.

Is there a LabVIEW function that can do this automatically?

Thank you!

-FB

I think you're looking for General Polynomial Fit.
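With exactly 3 points and distinct x values, a 2nd-order fit passes through them exactly, so the coefficients can also be computed in closed form. A Java sketch using divided differences (a method choice made here for illustration; `QuadraticThroughThreePoints` is a hypothetical name):

```java
/** Exact quadratic y = a*x^2 + b*x + c through three points with distinct x values. */
final class QuadraticThroughThreePoints {
    private QuadraticThroughThreePoints() {}

    /** Returns {a, b, c}, computed from the Newton (divided-difference) form. */
    static double[] solve(double x1, double y1, double x2, double y2, double x3, double y3) {
        if (x1 == x2 || x2 == x3 || x1 == x3) {
            throw new IllegalArgumentException("x values must be distinct");
        }
        double s12 = (y2 - y1) / (x2 - x1);   // first divided differences
        double s23 = (y3 - y2) / (x3 - x2);
        double a = (s23 - s12) / (x3 - x1);   // second divided difference
        double b = s12 - a * (x1 + x2);
        double c = y1 - a * x1 * x1 - b * x1;
        return new double[] {a, b, c};
    }
}
```

For (0, 1), (1, 6), (2, 17) it returns {3, 2, 1}, i.e. y = 3x^2 + 2x + 1.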

-

Using the General Polynomial Fit VI with a waveform graph

Hello gurus,

I would like to ask how to use the General Polynomial Fit VI with a waveform graph, not an XY graph.

The waveform graph gives me an array of Y values, not X and Y. What do I do?

Thanks in advance

Hi Omar,

I modified the built-in LabVIEW example to use the General Polynomial Fit VI with a waveform graph.

But why don't you use an XY graph?
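A waveform graph plots its Y array against an implicit X axis x_i = x0 + i*dx (the waveform's t0 and dt). If a fit needs explicit X values, they can be rebuilt from those two numbers. A minimal Java sketch, with `WaveformAxis` as a hypothetical helper:

```java
/** Rebuilds the implicit X axis of a waveform: x_i = x0 + i * dx. */
final class WaveformAxis {
    private WaveformAxis() {}

    static double[] xValues(double x0, double dx, int n) {
        double[] x = new double[n];
        for (int i = 0; i < n; i++) {
            x[i] = x0 + i * dx;   // multiply instead of accumulating, to avoid rounding drift
        }
        return x;
    }
}
```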

-

How do I calculate the equation of a dataset using the fitting VIs (nonlinear or polynomial fit)?

I have the datasheet of an NTC (negative temperature coefficient thermistor) and I want to calculate the equation that best approximates its behavior. The table has values of temperature and resistance, since the resistance of the NTC varies with the temperature it senses. I tried the following:

- Excel: polynomial fit

- Maple: polynomial fit

- LabVIEW: Polynomial Fit.vi

I've been getting equations whose parameters do not approximate the data very well. I suspect that polynomial fitting is not the best method for this type of data/equation (it being a nonlinear equation).

So, has anyone used the Nonlinear Fit.vi to match the behavior of a dataset? If so, can you explain how this VI works? I would really appreciate it.

Thanks in advance; I look forward to your reply.
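One common alternative to a high-order polynomial for NTC data is to linearize a physical model. The sketch below assumes the simple "beta" model R = R0 * exp(B * (1/T - 1/T0)) with T in kelvin (an assumption about the thermistor; the more detailed Steinhart-Hart equation is also linear in its coefficients and can be handled similarly), so ln(R) is a straight line in 1/T and ordinary linear regression gives B and R0. Least squares on ln(R) weights the errors differently than a nonlinear fit on R itself would. `NtcBetaFit` is a hypothetical name.

```java
/** Fits R = R0 * exp(B * (1/T - 1/T0)) (T in kelvin) by linear regression of ln(R) on 1/T. */
final class NtcBetaFit {
    private NtcBetaFit() {}

    /** Returns {B, R0}, where R0 is the resistance at the reference temperature t0Kelvin. */
    static double[] fit(double[] tempKelvin, double[] resistance, double t0Kelvin) {
        int n = tempKelvin.length;
        if (n < 2 || resistance.length != n) {
            throw new IllegalArgumentException("need at least 2 paired points");
        }
        double[] u = new double[n];   // predictor: 1/T
        double[] v = new double[n];   // response: ln(R)
        double meanU = 0.0, meanV = 0.0;
        for (int i = 0; i < n; i++) {
            u[i] = 1.0 / tempKelvin[i];
            v[i] = Math.log(resistance[i]);
            meanU += u[i];
            meanV += v[i];
        }
        meanU /= n;
        meanV /= n;
        // Centered sums avoid cancellation, since all 1/T values are close together.
        double suu = 0.0, suv = 0.0;
        for (int i = 0; i < n; i++) {
            double du = u[i] - meanU;
            suu += du * du;
            suv += du * (v[i] - meanV);
        }
        double b = suv / suu;                  // slope = B
        double intercept = meanV - b * meanU;  // = ln(R0) - B / T0
        return new double[] {b, Math.exp(intercept + b / t0Kelvin)};
    }

    /** Evaluates the model at tempKelvin. */
    static double resistance(double b, double r0, double t0Kelvin, double tempKelvin) {
        return r0 * Math.exp(b * (1.0 / tempKelvin - 1.0 / t0Kelvin));
    }
}
```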

-

Hello Forum,

This is a good forum. I see that many users have the same problems as me. I am using Captivate 3.

This is almost a double post (I hope no one will notice).

I have not yet found a good way (or the best way, if one exists) to record audio for a Captivate project.

I tried recording the screen captures and all the audio at the same time (not good at all), using two people: a narrator and an operator.

I recorded the screenshots following a script and guessed the approximate timings. Then I recorded the audio using Audacity and/or SoundBooth, imported it, and adjusted the slides on the timeline, etc.

This method is not too bad and works fine for small projects, but as they grow it can be very time-consuming.

The last method I tried is to have a narrator read the script while an operator follows it and performs the actions for the screen captures (normally run in demo mode, using Captivate 3.0).

Then I record the audio slide by slide (using Captivate's audio recording), sometimes recording 3, 4 or more slides at a time. This still requires some juggling on the project timeline.

How do others do this? One last idea I have is to capture the project with its timing set by the narrator reading the script while the operator performs the captures, then select Audio > Record > Project from the menu and record the narration while the project runs.

Are there other methods out there?

Thanks again,

eholz1

Hi eholz1,

Basically, I use your 3rd method. I record all my screenshots and actions without worrying about timing. I then record the audio (using Captivate and a Logitech microphone, nothing fancy) slide by slide. Finally, I adjust the timeline so that everything matches. Yes, it takes a lot of time, but I've found that it gives me the best final product.

Whatever method you use to record audio, I recommend recording it all at the same time and in the same place. If you split up your recording sessions, small differences in your voice or environment may be very noticeable.

Hope this helps,

Peter

-

Hello!

Using the line profile, I got a curve representing the pixel trend along an image. Now I want to fit a polynomial to this curve to get a more linear trend, eliminating the disturbances; but before that I have to select (interpolate?) some points on this curve (these points will be the input to my polynomial). How can I do this?

Once again, the VI you posted contains no data.

You can use "Polynomial Fit" to obtain the coefficients and then use "Polynomial Evaluation" to calculate the function for an arbitrary x ramp.
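For the "select (interpolate?) some points" step, linear interpolation of the profile at chosen x positions is one simple option. The Java sketch below assumes strictly increasing x samples and clamps queries outside the sampled range (a choice made here); `ProfileInterp` is a hypothetical name, not a LabVIEW VI.

```java
/** Linear interpolation of a sampled profile y(x) at arbitrary query positions. */
final class ProfileInterp {
    private ProfileInterp() {}

    /** xs must be strictly increasing; queries outside [xs[0], xs[last]] are clamped. */
    static double at(double[] xs, double[] ys, double q) {
        int n = xs.length;
        if (q <= xs[0]) return ys[0];
        if (q >= xs[n - 1]) return ys[n - 1];
        int lo = 0, hi = n - 1;   // binary search so that xs[lo] <= q < xs[hi]
        while (hi - lo > 1) {
            int mid = (lo + hi) >>> 1;
            if (xs[mid] <= q) lo = mid; else hi = mid;
        }
        double t = (q - xs[lo]) / (xs[hi] - xs[lo]);
        return ys[lo] + t * (ys[hi] - ys[lo]);
    }

    /** Interpolates at each query position. */
    static double[] atAll(double[] xs, double[] ys, double[] queries) {
        double[] out = new double[queries.length];
        for (int i = 0; i < queries.length; i++) out[i] = at(xs, ys, queries[i]);
        return out;
    }
}
```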

-

Best practices for calling PL/SQL code and application module methods

In my application I am experiencing problems with the connection pool: I seem to use a lot of connections when only a few users are using the system. As part of our application, we need to call database procedures for the business logic.

Our backing beans call application module methods, which in turn call a database procedure. For example, in the backing bean we have the following code to call the application module method:

    // Create the root application module used to generate a new test.
    CIGAppModuleImpl appMod = (CIGAppModuleImpl) Configuration.createRootApplicationModule(
            "ky.gov.exam.model.CIGAppModule", "CIGAppModuleLocal");
    try {
        String testId = String.valueOf(appMod.createTest(username, examId, centerId));
        AdfFacesContext.getCurrentInstance().getPageFlowScope().put("tid", testId);
    } finally {
        // Release the root application module on every path, including exceptions.
        System.out.println("Calling releaseRootApplicationModule");
        Configuration.releaseRootApplicationModule(appMod, true);
        System.out.println("Completed releaseRootApplicationModule");
    }
    return returnResult;
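Whichever way the method is called, anything checked out from a pool (a root application module, a connection) has to be returned on every path, including exceptions, or the pool slowly fills up. The toy Java class below is hypothetical and contains nothing ADF-specific; it only counts checked-out resources to show the difference a `finally` block makes.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical pool that only counts checked-out resources (not ADF). */
final class ToyPool {
    private final AtomicInteger checkedOut = new AtomicInteger();

    void acquire() { checkedOut.incrementAndGet(); }

    void release() { checkedOut.decrementAndGet(); }

    int inUse() { return checkedOut.get(); }

    /** Releases only on the success path: an exception in work leaks one resource. */
    void callWithoutFinally(Runnable work) {
        acquire();
        work.run();
        release();
    }

    /** Releases on every path, including when work throws. */
    void callWithFinally(Runnable work) {
        acquire();
        try {
            work.run();
        } finally {
            release();
        }
    }
}
```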

In the application module method, we have the following code:

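    // Imports needed in CIGAppModuleImpl.java:
    import java.sql.CallableStatement;
    import java.sql.SQLException;
    import java.sql.Types;
    import oracle.jbo.JboException;

    public int createTest(String p_userId, String p_examId, String p_centerId) {
        System.out.println("CIGAppModuleImpl: calling the database and using the value from the iterator");
        int returnResult = 0;
        CallableStatement cs = null;
        try {
            // PL/SQL anonymous block: the function result is bound to the first parameter.
            cs = getDBTransaction().createCallableStatement(
                    "begin ? := macilap.user_admin.new_test_init(?,?,?); end;", 0);
            cs.registerOutParameter(1, Types.NUMERIC);
            cs.setString(2, p_userId);
            cs.setString(3, p_examId);
            cs.setString(4, p_centerId);
            cs.executeUpdate();
            returnResult = cs.getInt(1);
            System.out.println("CIGAppModuleImpl.createTest: return result is " + returnResult);
        } catch (SQLException se) {
            // Wrap in an unchecked ADF BC exception (JboException assumed).
            throw new JboException(se);
        } finally {
            if (cs != null) {
                try {
                    cs.close();
                } catch (SQLException s) {
                    throw new JboException(s);
                }
            }
        }
        return returnResult;
    }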

I read in one of Steve Muench's presentations (Oracle Fusion Applications team best practices) that calling the createRootApplicationModule method is a bad idea, and that you should call the method through the binding interface instead.

I guess that calling createRootApplicationModule uses many more resources and database connections than calling the method through the binding interface, such as:

    BindingContainer bindings = getBindings();
    OperationBinding ob = bindings.getOperationBinding("customMethod");
    Object result = ob.execute();

Is this the case? Also, is getDBTransaction().createCallableStatement the best way to call database procedures? Would it not be better to expose the PL/SQL packages as web services and then call them from the application module? Is that more efficient?

Regards,

Orlando

It should show them.

But to work around the problem, try this: drag the method from the data control onto your page and drop it as a button.

Then go to the source view of the JSPX and remove the button there; the binding should remain in your pagedef.

-

Problem with 'Less?' function in LabVIEW 2015

Hi guys,

I am creating a VI that will let me record data at time intervals of .1, .2, .3, .4, .5, .6, .7, .8, .9, 1, 2, 3, 4 seconds, etc., or 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 20, 30 seconds, etc., depending on the input from my main VI.

In the VI I attached, I have a hold-down case and a toggle case. I stepped through the hold-down case with highlight execution, and it seems to be OK. My problems begin when I use the toggle case. If I set the Stress Time control to 0, it increments by .1, .2, .3, .4, .5, .6, .7, .8, .9 and 1 as expected. However, when it moves from adding .1 to the case where it is supposed to start adding 1, it ends up adding .1 and 1 the first time, and then continues to add 1 as expected. When I go back into the code with highlight execution on, a 1 coming from the shift register enters the 'Less?' function in the bottom case structure, and it outputs True when it should output False. My top case structure also outputs a zero when it should output 1.

Can someone please run this code and see whether the problem exists for them, to preserve my sanity? Or am I just being a fool and missing something obvious because of a crossed wire or something?

Thanks for your help

Basically, you have several choices:

- Make sure the floats hold 'exact' (integer) values, for example by multiplying by 10 and converting the result to I32. The last step is necessary because only the integer representation allows exact numeric comparisons.

- Convert the floats to strings with a defined precision and compare those.

- Write (and use) an "almost equal" function: subtract the value you are testing from the float, take the absolute value, and see whether it is < 0.0001, or whatever precision you need.

Of these, the last is likely to be (by far) the fastest, and it will remind you that decimal values are generally 'approximations' (you can represent 0.25, 0.5, 0.125, etc. exactly, but not 0.1, for example).
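The three options above, plus the underlying 0.1 problem, can be sketched in Java (`double` here stands in for LabVIEW's DBL, an assumption about the data type in the VI; `FloatCompare` is a hypothetical name). Adding 0.1 ten times lands just below 1.0, which is exactly the situation in which a 'Less?' comparison against 1 returns True.

```java
import java.util.Locale;

/** Three ways to compare floating-point values, sketched for doubles. */
final class FloatCompare {
    private FloatCompare() {}

    /** Option 1: scale to an integer count of tenths, then compare integers exactly. */
    static long toTenths(double seconds) {
        return Math.round(seconds * 10.0);
    }

    /** Option 2: compare strings formatted with a fixed number of decimals. */
    static boolean equalAsStrings(double a, double b, int decimals) {
        String fmt = "%." + decimals + "f";
        return String.format(Locale.ROOT, fmt, a).equals(String.format(Locale.ROOT, fmt, b));
    }

    /** Option 3: "almost equal" within an absolute tolerance. */
    static boolean almostEqual(double a, double b, double tolerance) {
        return Math.abs(a - b) < tolerance;
    }

    /** Adds 0.1 ten times, as a loop with a shift register would. */
    static double sumOfTenTenths() {
        double sum = 0.0;
        for (int i = 0; i < 10; i++) sum += 0.1;
        return sum;
    }
}
```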

Bob Schor

-

Equium A60-157: HELP with CardBus/PCMCIA wireless network card!

Hi all!

This problem has been frustrating me for at least 2 months.

OK, I recently bought a Belkin Wireless G Notebook Card (Wi-Fi card).

The first time I installed the Wi-Fi card it worked perfectly. I powered off my laptop (after I had finished working on it). The next day, when I turned the laptop on, you guessed it, the Wi-Fi card was not working. I did not remove the Wi-Fi card between powering off and powering on.

When I went into Device Manager, a yellow exclamation point was shown on the Wi-Fi card. When I looked at the Wi-Fi card's properties, I found the following message:

This device cannot find enough free resources that it can use. (Code 12)

If you want to use this device, you will need to disable one of the other devices on this system.

Click Troubleshoot to start the troubleshooter for this device.

I tried uninstalling and reinstalling the Wi-Fi card. I tried the latest Belkin drivers for the card. Sometimes it works, but once I power the laptop off and on again, it stops working.

One way I got the card to work was by removing and reinserting it several times until it found resources and finally worked. I don't want to keep using this method, because I might damage the CardBus slot and the card. In the meantime I've been using a Belkin Wireless G USB network adapter. I have had no problems with the USB adapter, but I prefer the Wi-Fi card, because most of it is housed inside the laptop, so it is not easily knocked out. The USB adapter is great for wireless networking indoors, but too risky for roaming outside.

I had this problem from BIOS version 1.70 to 1.80, and I still have it with revision 1.90. I intend to return my Wi-Fi card to the store for an exchange, but I have a really bad feeling that the same thing will happen with the replacement card. I'm not convinced the current card is defective, because it works perfectly when it gets the resources.

I tried to configure the device resources manually, but I have a major problem: Windows seems to be LOCKED into the automatic settings. The automatic settings check box is completely grayed out, so it is impossible for me to clear it. I did not find anything in the BIOS that could help me solve this problem.

I noticed that many devices are running on IRQ (interrupt request) 19 when I view resources by type in Device Manager. These devices are:

Standard Enhanced PCI to USB Host Controller

Standard OpenHCD USB Host Controller x 2 (two entries)

Texas Instruments OHCI Compliant IEEE 1394 Host Controller

Texas Instruments PCI-1410 CardBus Controller

When the wireless card works, it also runs on IRQ 19, but as I said before, Windows is locked into the automatic settings and won't let me configure the hardware resources manually.

The Equium A60-157 does not have onboard Wi-Fi, but it has provision for it: a Wi-Fi LED on the front panel, a place for a Wi-Fi switch on the side panel, and a wireless icon on F8. Does anyone know whether Toshiba offers an upgrade service, or can I install onboard Wi-Fi myself?

ANYONE, PLEASE HELP ME WITH THESE QUESTIONS!

Thanks for your time!

Hello

I found this for you:

Two devices have been assigned the same input/output (I/O) ports, the same interrupt, or the same Direct Memory Access channel (either by the BIOS, the operating system, or a combination of both). This error message may also appear if the BIOS did not allocate enough resources to the device (for example, if a universal serial bus (USB) controller does not get an interrupt from the BIOS because of a corrupt Multiprocessor System (MPS) table).

You can use Device Manager to determine where the conflict is and disable the conflicting device. On the General tab of the device properties, click Troubleshoot to start the troubleshooting wizard.

Try disabling the conflicting devices. It will be interesting to know whether the problem occurs again.

In my opinion, you should contact the service partner and ask them whether the unit is prepared for the Wi-Fi module. Sorry, but I'm not sure about that and I don't want to give you bad information.

-

function with a variable number of cubic curves

Hello

I have a data set: one set representing the independent variable and one set representing the dependent variable. I need to find the minimum number of cubic curves (and their coefficients) that bring the mean squared error below a given tolerance. If meeting the tolerance would require more than 17 segments, I need to raise an error.

Do you have an idea which function blocks I need?

I could use the General Polynomial Fit VI with order 3 and its default method, and check the residue. If the residue is greater than the given tolerance, I could try two cubic curves and test them with different start and end points until the residue is less than the given tolerance. If the tolerance is still not met, I add another curve and test the assembly again as described above, iterating until the residue is less than the given tolerance or until I would need to add an 18th cubic curve.

If something ready-made exists, I would be grateful.

Thank you.

Fabiobonelli wrote:

Please, can you test your VI by adding another point to the four present?

X = -6453

Y = -266

Check the residue.

Thank you.

Did you even read my response? You have a serious conditioning problem because the data lies in a narrow band far from zero. For example, -6500^3 is less than -2E11, i.e. a value that is many (many!) orders of magnitude different from that of the constant term (1). The resulting linear algebra problem is very ill-conditioned, and blindly flooring the accelerator pedal is bad advice here.

This isn't a problem with the LabVIEW implementation, but a fundamental flaw that you would also encounter (or worse) if you did your own math. There is no way around it. (See also)

My recommendation is to subtract the X offset and add it back later. Then things work correctly. (Note that a second-order polynomial fit still works without this trick.)
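The offset trick can be sketched in Java: fit in the variable t = (x - x0) / s with x0 near the middle of the data, and apply the same x0 and s when evaluating. This is a minimal illustration under stated assumptions (normal equations solved by Gaussian elimination with partial pivoting, the mean of x as x0, a user-chosen scale s, and "residue" taken as the mean squared residual), not the LabVIEW implementation; `ShiftedPolyFit` is a hypothetical name.

```java
/** Low-order least-squares polynomial fit in the shifted, scaled variable t = (x - x0) / s. */
final class ShiftedPolyFit {
    private ShiftedPolyFit() {}

    static double mean(double[] x) {
        double sum = 0.0;
        for (double v : x) sum += v;
        return sum / x.length;
    }

    /** Returns ascending coefficients c with y ~ sum_k c[k] * ((x - x0) / s)^k. */
    static double[] fit(double[] x, double[] y, int order, double x0, double s) {
        int n = order + 1;
        double[][] m = new double[n][n + 1];  // augmented normal equations [A^T A | A^T y]
        double[] p = new double[n];
        for (int i = 0; i < x.length; i++) {
            double t = (x[i] - x0) / s;
            p[0] = 1.0;
            for (int k = 1; k < n; k++) p[k] = p[k - 1] * t;
            for (int r = 0; r < n; r++) {
                for (int c = 0; c < n; c++) m[r][c] += p[r] * p[c];
                m[r][n] += p[r] * y[i];
            }
        }
        // Gaussian elimination with partial pivoting.
        for (int col = 0; col < n; col++) {
            int piv = col;
            for (int r = col + 1; r < n; r++) {
                if (Math.abs(m[r][col]) > Math.abs(m[piv][col])) piv = r;
            }
            double[] tmp = m[col]; m[col] = m[piv]; m[piv] = tmp;
            if (m[col][col] == 0.0) throw new ArithmeticException("singular normal equations");
            for (int r = col + 1; r < n; r++) {
                double f = m[r][col] / m[col][col];
                for (int c = col; c <= n; c++) m[r][c] -= f * m[col][c];
            }
        }
        // Back substitution.
        double[] coef = new double[n];
        for (int r = n - 1; r >= 0; r--) {
            double sum = m[r][n];
            for (int c = r + 1; c < n; c++) sum -= m[r][c] * coef[c];
            coef[r] = sum / m[r][r];
        }
        return coef;
    }

    /** Evaluates the fitted polynomial; it must use the same x0 and s as the fit. */
    static double eval(double[] coef, double x0, double s, double x) {
        double t = (x - x0) / s;
        double r = 0.0;
        for (int k = coef.length - 1; k >= 0; k--) r = r * t + coef[k];
        return r;
    }

    /** Mean squared residual over the data points. */
    static double residue(double[] x, double[] y, double[] coef, double x0, double s) {
        double sum = 0.0;
        for (int i = 0; i < x.length; i++) {
            double d = y[i] - eval(coef, x0, s, x[i]);
            sum += d * d;
        }
        return sum / x.length;
    }
}
```

Expanding the fitted polynomial back into plain powers of x reintroduces the bad conditioning, so it is usually better to keep x0 and s alongside the coefficients.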

Try it and see for yourself.

-

2D fit: a question for Mr. altenbach

Dear Altenbach,

I started looking at all your posts on 2D fitting (the oldest is from 2004) and I downloaded all the files: 2Dquadratic, planefit, 2Dscatterfit2, 3Dpolyfit, XuFit_004. I also read this: https://decibel.ni.com/content/docs/DOC-30394

OK... I'm completely lost.

I would like to ask which of your files I should start with. I want to fit the function z = a + bx + cx^2 + dy + ey^2 + fxy (a second-order polynomial) to a set of experimental data (x, y, z are the experimental data; they are all 1D vectors of the same size, and they are very large, with over 100000 entries each).

I did not understand:

(1) what the difference is between gridded and scattered data.

(2) whether you are suggesting the polynomial form (building the H matrix) or Levenberg-Marquardt.

I would also like to ask whether there are more recent VIs, because the "old" ones are very, very difficult to understand.

Thanks in advance

Use the most recent one. Some of the old examples use homemade, pre-LabVIEW 8.0 subroutines. The fitting tools received a major overhaul in LabVIEW 8.0 and can be used out of the box.

gnappo wrote:

I would like to ask which of your files I should start with. I want to fit the function z = a + bx + cx^2 + dy + ey^2 + fxy (a second-order polynomial) to a set of experimental data (x, y, z are the experimental data; they are all 1D vectors of the same size, and they are very large, with over 100000 entries each).

Because your problem is linear in the coefficients, you should use General Linear Fit, building the H matrix from the terms. There is no need for iterative procedures such as Levenberg-Marquardt. Create your H matrix with the various terms for each xy pair.

Gridded data means that the x and y values form a rectangular grid with no missing values, i.e. the 2D data can be represented as a simple 2D array. Scattered data means that there is a list of (x, y, z) points and x and y are not evenly spaced. You can run this in both modes to immediately see the difference.
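Building the H matrix for this model means one row of six terms per (x, y) point. A Java sketch (hypothetical `QuadSurfaceDesign`); the resulting matrix and the z vector would then go to a linear least-squares solver such as General Linear Fit, which is not reproduced here. For 100000 points, H holds 600000 doubles, about 4.8 MB.

```java
/** Builds the design ("H") matrix for z = a + b*x + c*x^2 + d*y + e*y^2 + f*x*y. */
final class QuadSurfaceDesign {
    private QuadSurfaceDesign() {}

    /** One row per (x, y) point; columns are ordered like the coefficients a..f. */
    static double[][] buildH(double[] x, double[] y) {
        if (x.length != y.length) throw new IllegalArgumentException("x and y must have the same length");
        double[][] h = new double[x.length][];
        for (int i = 0; i < x.length; i++) {
            double xi = x[i], yi = y[i];
            h[i] = new double[] {1.0, xi, xi * xi, yi, yi * yi, xi * yi};
        }
        return h;
    }

    /** Evaluates the model for coefficients {a, b, c, d, e, f} at one point. */
    static double eval(double[] coef, double x, double y) {
        return coef[0] + coef[1] * x + coef[2] * x * x + coef[3] * y + coef[4] * y * y + coef[5] * x * y;
    }
}
```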

Maybe you are looking for

-

Equium M50-216 - keyboard replacement

Hi all, my name is Michael and I have a question about a 2nd-hand laptop I bought in a charity shop. It has a problem with several keys that do not work. I guess a little liquid has been spilled on the keyboard. My question is: is it w

-

Hello, I have a new Lenovo Yoga 900S. Everything worked fine until Windows automatically installed the Anniversary Update last night. Now the battery won't charge. Fortunately, the laptop seems to run on AC, given that the battery does not drain. B

-

Hello. I am using the latest MAX 4.6.1 and I get the following error: "database file is corrupted". It says to go to the following link to fix the problem: http://digital.ni.com/public.nsf/allkb/2C7480E856987FFF862573AE005AB0D9. When I try to follow the s

-

Why are my desktop settings changed at the next logon?

I'm on a domain. Our PCs use Office XP SP3. My laptop was recently reinstalled with the same OS. I'm supposed to have some local privileges on my laptop, but I don't know how to check this. My network administrator says we do not have a grou

-

Hello, I am looking for two low-priced 10 Gb switches, without high-end performance, to create an NSX demo environment. VMware NSX has only one hard requirement, plus one option for complete integration. A Dell 10 Gb switch x 4012