Increase video exposure gradually over time

So, I made a timelapse in which the photos receive plenty of light at the start and get darker toward the end of the day, because the natural light conditions changed. I couldn't change the shutter speed, ISO, or aperture during the shoot, because they were set to manual. Is it possible to add an exposure effect that changes the exposure gradually over time, say from 0 to +5, to compensate as the video slowly gets darker? I hope you get the idea. Or do I have to manually brighten the darker source images before making the timelapse video? Thanks in advance for answering.

Add an adjustment layer with your compensating effect, then keyframe it:

Adding, navigating to, and setting keyframes (Premiere Pro Help)

http://help.Adobe.com/en_US/PremierePro/CS/using/WS1c9bc5c2e465a58a91cf0b1038518aef7-7e63a.html

Adobe Premiere Pro help / adjustment layers

http://helpx.Adobe.com/Premiere-Pro/using/help-tutorials-adjustment-layers.html
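Two keyframes on the exposure parameter, one at the first frame (0) and one at the last (+5), with linear interpolation between them, give a steady ramp. As a rough illustration, assuming linear interpolation and keyframes exactly on the first and last frames (the real curve depends on the interpolation you choose in Premiere), the per-frame value works out like this. The function names are hypothetical:

```python
def exposure_at_frame(frame, total_frames, start_ev=0.0, end_ev=5.0):
    """Exposure offset at a given frame, linearly interpolated between a
    keyframe on the first frame (start_ev) and one on the last (end_ev)."""
    if total_frames < 2:
        return start_ev
    frame = max(0, min(frame, total_frames - 1))  # hold the end values outside the clip
    return start_ev + (end_ev - start_ev) * frame / (total_frames - 1)


def exposure_schedule(total_frames, start_ev=0.0, end_ev=5.0):
    """One exposure offset per frame, from start_ev up to end_ev."""
    return [exposure_at_frame(i, total_frames, start_ev, end_ev)
            for i in range(total_frames)]


if __name__ == "__main__":
    # Hypothetical 6-frame clip, just to show the shape of the ramp.
    print(exposure_schedule(6))  # [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
```

The same per-frame schedule could also be applied to the source photos before assembling the timelapse, which is the alternative the question mentions.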

Tags: Premiere

Similar Questions

-

My WRT110 slows down gradually over time. Why?

I noticed that my internet download speed had become slow. I ran the Speakeasy and Comcast speed tests and found that my upload and download speeds were both under 2 Mbps. For the tests, I disconnected my WRT110 RangePlus Wireless Router and connected directly to the Comcast RCA modem. The speeds went up to 21 Mbps down and 4.4 Mbps up on two speed-test sites. I then reset the WRT110 and reconnected it. I ran the speed tests again and they returned the same 21 Mbps down and 4.4 Mbps up. When I came home a day later and tested, my download speed was 8 Mbps. Today it was 5 Mbps. I connected directly to the Comcast modem and got 22 Mbps down again. I then reset the WRT110 and reconnected it, and the speed was back at 22 Mbps. I have run this test twice over a period of days and seen the same degradation of speed over time. What could cause this?

You could try upgrading (re-flashing) the firmware on your router.

Connect the computer to the router with an Ethernet cable. Download the latest firmware from the Linksys website. Open the router's configuration page and update the firmware.

After upgrading the firmware, it is recommended that you reset the router and reconfigure it. Press and hold the reset button on the router for 30 seconds. Release the reset button and wait 30 seconds. Power cycle the router and reconfigure it.

-

Game slows down gradually over time. What should I check?

Hey, I have a fully functional game put together, using the structure of Toni Westbrook's excellent tutorial. I'm very happy with it! But not everything is right.

This is a scroller, and I wrote it so that a skilled player can keep going and going, powering up rather than dying and getting another life. As a result, tens or hundreds of items get added to the Vector and then removed with vector.removeElementAt().

I think the gradual slowdown is due to the number of (dead) objects, but I'm not sure. In any case, I discovered vector.trimToSize() and thought, "That's it!" But it didn't cure the problem.

Can anyone shed light on CPU and memory usage in a case with lots of objects that have mostly been deleted? Or suggest something else I should look at as a cause of the slowdown?

The scrolling background shouldn't be relevant, since it is just a graphics object and a bitmap two screens in size that I keep drawing, moving, and copying.

I'm using OS 5 on a Curve 8900.

I recommend using the Profiler.

It will show you the number of live objects, and the time it takes for each method to execute.

E.
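One possible thing to look for in the profiler output: removing elements one at a time from an array-backed list such as Java's Vector shifts every later element on each removal, and trimToSize() only shrinks the capacity, so it does not make removals cheaper. A common alternative is to compact the list once per frame. The sketch below is in Python for illustration only (the game itself is Java), and the Entity class and its alive flag are hypothetical; whether this is the actual cause of the slowdown is not established, and the profiler is the way to find out.

```python
from dataclasses import dataclass


@dataclass
class Entity:
    """Hypothetical game object with a flag set when it dies."""
    name: str
    alive: bool = True


def compact_alive(entities):
    """Drop dead entities in a single O(n) pass, preserving order.

    Deleting from the middle of an array-backed list one element at a time
    shifts all later elements each time, which can add up to O(n^2) work
    per frame when many objects die at once.
    """
    write = 0
    for e in entities:
        if e.alive:
            entities[write] = e  # move each survivor down to the next free slot
            write += 1
    del entities[write:]  # truncate the leftover tail in one operation
    return entities
```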

-

Memory consumption increases over time

Greetings,

I have an MCC DAS1000 card and a USB-3102. When I check the PC's memory consumption, I see it grow over time until at some point the PC freezes and I have to restart.

What could cause this problem? Any help is greatly appreciated! (PC: XP SP3, 1 GB RAM, AMD64 X2 CPU) PS: The code is attached.

Best regards, Ferhat

Move all your channel creation to BEFORE your loop. You are causing memory leaks because you keep creating new references without closing the old ones.
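The pattern the answer describes, sketched in Python with a hypothetical Channel class standing in for a DAQ channel/task reference (it is not the MCC or NI API). The leaky version opens a new reference on every iteration and never closes it; the fixed version opens one before the loop and closes it afterwards. The open_count counter stands in for driver-side resources that are not freed until close() is called.

```python
class Channel:
    """Hypothetical stand-in for a DAQ channel/task reference."""
    open_count = 0  # number of references opened but not yet closed

    def __init__(self, name):
        self.name = name
        self.closed = False
        Channel.open_count += 1

    def read(self):
        return 0.0  # placeholder sample

    def close(self):
        if not self.closed:
            self.closed = True
            Channel.open_count -= 1


def leaky_loop(iterations):
    for _ in range(iterations):
        ch = Channel("ai0")  # new reference every iteration, never closed
        ch.read()


def fixed_loop(iterations):
    ch = Channel("ai0")  # create once, before the loop
    try:
        for _ in range(iterations):
            ch.read()
    finally:
        ch.close()  # release once the loop stops
```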

-

Ramping up an AO signal over time

Greetings,

I'm new to LabVIEW and am trying to write a few simple programs.

I want a voltage signal that rises from 0 to 5 V in 20 s.

For my first program, I added a slider and connected it to the DAQ Assistant, so that I could manually control the voltage between 0 and 5 V.

Could someone help me with this problem? Thank you

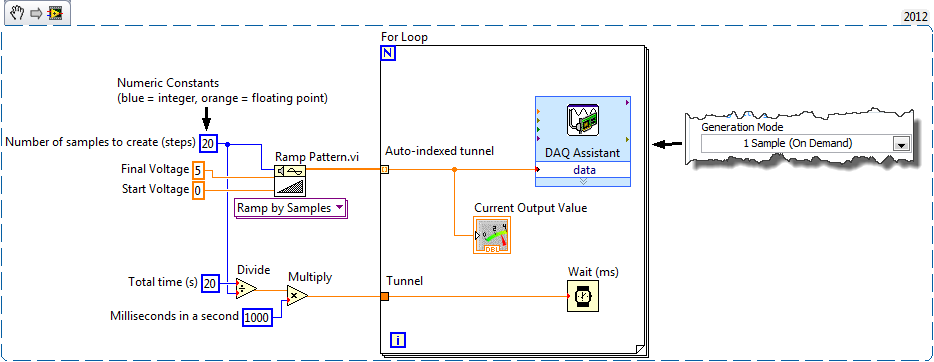

Here is a picture of what Dennis described; maybe this will help.

I have labeled everything on the diagram so you can ask for more specific help if you need it.

We also have a few basic tutorials available here: http://www.ni.com/gettingstarted/labviewbasics/ and training here: http://ni.com/self-paced-training
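The diagram in the image is not reproduced here, but the values such a loop writes are easy to state: for a ramp from 0 to 5 V over 20 s, the voltage at time t is 5 V × t / 20 s. A sketch that precomputes those values for a chosen update interval (0.1 s is an assumption; the function name is hypothetical). In LabVIEW each value would be written once per loop iteration, and a software-timed loop only approximates the interval.

```python
def linear_ramp(v_start, v_end, duration_s, step_s):
    """(time, voltage) pairs for a linear ramp, one pair per output update."""
    n_steps = round(duration_s / step_s)
    if n_steps < 1:
        raise ValueError("duration_s must be at least one step_s long")
    return [(i * duration_s / n_steps,
             v_start + (v_end - v_start) * i / n_steps)
            for i in range(n_steps + 1)]


if __name__ == "__main__":
    ramp = linear_ramp(0.0, 5.0, 20.0, 0.1)
    print(len(ramp), ramp[0], ramp[-1])  # 201 (0.0, 0.0) (20.0, 5.0)
```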

-

I want to increase the speed of scaling over time, but I have no idea how to do it.

I don't know how to explain it, but as the title says, I want a clip to scale up or down faster and faster over time. I've checked everything but couldn't get it to work. Any help would be appreciated.

Never mind, everyone! I found it myself. This is what I was looking for: https://www.youtube.com/watch?v=vbI-qmTTGww
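The linked video is not summarized here, but "scaling faster over time" corresponds to an ease-in curve: the scale changes slowly at first and faster later. A minimal sketch assuming a power curve (power=2, quadratic) between a start and end scale in percent; the function names and the power value are assumptions. In an editor this would correspond to keyframes with accelerating (ease-in) interpolation rather than linear.

```python
def ease_in_scale(t, duration, start_scale, end_scale, power=2.0):
    """Scale at time t on an ease-in curve: slow change first, faster later."""
    if duration <= 0:
        raise ValueError("duration must be positive")
    u = max(0.0, min(t / duration, 1.0))  # normalized time in [0, 1]
    return start_scale + (end_scale - start_scale) * u ** power


def scale_keys(duration, start_scale, end_scale, n_frames, power=2.0):
    """Per-frame scale values from t=0 to t=duration inclusive."""
    if n_frames < 2:
        raise ValueError("need at least two frames")
    return [ease_in_scale(duration * i / (n_frames - 1), duration,
                          start_scale, end_scale, power)
            for i in range(n_frames)]


if __name__ == "__main__":
    # Scaling up from 100% to 200%; the per-frame change keeps growing.
    print(scale_keys(1.0, 100.0, 200.0, 5))
```

Passing an end scale smaller than the start scale gives the same accelerating behavior when scaling down.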

-

How to create a bulleted list that is revealed over time (like PowerPoint)

Hi all. I'm trying to figure out how to create a bulleted list for a training video in which the points appear one at a time as the voice-over reaches each point, without having to make a separate title for each point. Even if I create the bullets, I'm not sure how to align them and get the right spacing between the lines. Basically I want it to be like an animated PowerPoint slide! Can someone advise me on how to do this so that all my text/bullet points are aligned and evenly spaced? Thank you!

Each line is revealed over time:

Title

- Point 1

- Point 2

- Point 3

Do it in the Titler, and start with the complete bulleted list.

Then choose "New Title Based on Current Title" and remove the bottom line.

Repeat until only one line is left.
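The Titler procedure above, written out as a small sketch to show which title copies it produces and the order in which to place them on the timeline. Because every copy is derived from the full list, the remaining lines keep exactly the same position and spacing, which is what keeps the bullets aligned as they appear. The function names are illustrative only.

```python
def titler_copies(title, points):
    """Title copies in creation order: full list first, then one line fewer each time."""
    current = [title] + [f"- {p}" for p in points]
    copies = []
    while True:
        copies.append(current)
        if len(current) == 1:  # only the heading is left
            break
        # "New Title Based on Current Title", then delete the bottom line
        current = current[:-1]
    return copies


def playback_order(copies):
    """Timeline order: fewest lines first, one copy per voice-over cue."""
    return list(reversed(copies))


if __name__ == "__main__":
    for lines in playback_order(titler_copies("Title", ["Point 1", "Point 2", "Point 3"])):
        print(lines)
```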

-

Generating an output voltage ramp over time while measuring 2 analog inputs on a USB-6008

Hello

I want to produce an analog output voltage that increases over time with a certain slope, which I'll send to a potentiostat. At the same time I want to read the voltage and current (both represented by a voltage signal), log them, and ultimately plot one against the other. For this, I have a USB-6008 DAQ device at my disposal.

I managed to create the analog output with a linear ramp using a while loop and a delay time (see attachment). What matters here is that I can set the slope of the linear ramp (for example, 10 mV/s) and the step size, to get a smooth increase. However, when I try to measure an analog input signal, it goes badly.

To reduce the influence of noise, I want to measure, for example, 10 values within 0.1 s and average them (this reading should be as fast as or faster than the step caused by the slope and the ramp step size). Example: a slope of 10 mV/s is set with 10 steps per second, so the analog output increases by 1 mV every 0.1 s. Within that 0.1 s, I want to read 10 analog input values.

Because I use a timer to create the linear ramp and the analog input is in the same loop, the delay time also affects the analog input, and I get an error every time. Separately, in different VIs (analog input and analog output), they work fine, but not combined. I searched this forum for another way to create the ramp, but because I'm not an experienced LabVIEW user I couldn't find one.

To make it a bit more complicated: I want to measure 2 analog input signals (one for the potentiostat voltage and one for the current, also represented by a voltage signal), and they should be measured faster than the analog output steps. I haven't started on that yet, because I couldn't get reading from a single channel to work.

I hope someone can help me with this problem.

Use Index Array. You want to index out the column for a single channel.
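The arithmetic from the question and the indexing the answer describes, sketched in Python: with a slope of 10 mV/s and 1 mV steps, the output changes every 0.1 s, so the 10 input samples per channel have to fit in that window. The block layout (one row per sample, one column per channel, matching the answer's "column" wording) is an assumption, since the actual layout depends on the read function and format you use; the function names are hypothetical.

```python
def step_period_s(slope_v_per_s, step_v):
    """Time between output updates for a staircase ramp with the given slope."""
    if slope_v_per_s <= 0 or step_v <= 0:
        raise ValueError("slope and step must be positive")
    return step_v / slope_v_per_s


def channel_column(block, channel):
    """One channel's readings from a 2D block (rows = samples, columns = channels)."""
    return [row[channel] for row in block]


def channel_means(block):
    """Average of each channel over all samples in the block (noise reduction)."""
    n_channels = len(block[0])
    return [sum(channel_column(block, c)) / len(block) for c in range(n_channels)]


if __name__ == "__main__":
    print(step_period_s(0.010, 0.001))              # about 0.1 s per 1 mV step
    print(channel_means([[1.0, 2.0], [3.0, 4.0]]))  # [2.0, 3.0]
```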

-

Measuring a change of color over time

Hi all

I am new to LabVIEW and to this forum, so I hope I'm in the right subforum. If not, it would be nice if a moderator could move this thread.

My problem is the following:

Let's say I have a white spot that I want to observe with a camera. The spot turns green over time, and I want to acquire images and also track how the intensity of the green increases over time. I don't know which functions I need to use to get there. Can someone point me in the right direction? I think it should at least be possible in LabVIEW.

Thank you all for your help.

Best regards

Tresdin

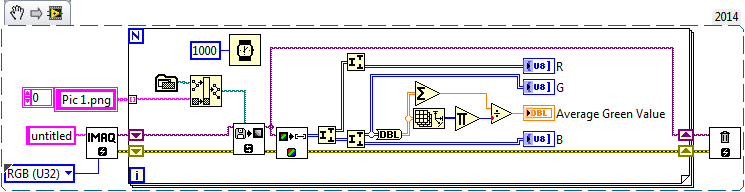

I don't know what the best resource for IMAQdx drivers is, but I think the examples that NI provides are pretty useful. Here's a simple VI that will tell you the average green value of all the pixels in your image.
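The VI in the answer is not reproduced here; a plain-Python sketch of the same idea takes an RGB image (rows of (r, g, b) tuples, 8-bit values assumed) and averages the green channel. One caveat: a white pixel already has a full green value (255, 255, 255), so the mean green alone may barely change as white turns green. The second function is an assumed alternative metric, green minus the average of red and blue, which is 0 for white and grey and rises as the spot turns green.

```python
def mean_green(image):
    """Average green channel over all pixels; image is rows of (r, g, b)."""
    pixels = [px for row in image for px in row]
    return sum(g for _, g, _ in pixels) / len(pixels)


def mean_greenness(image):
    """Average of g - (r + b) / 2 over all pixels.

    0 for white or grey, 255 for pure green; negative for pixels with
    less green than the average of red and blue.
    """
    pixels = [px for row in image for px in row]
    return sum(g - (r + b) / 2 for r, g, b in pixels) / len(pixels)
```

Logging one of these values per acquired frame, together with its timestamp, gives the intensity-over-time curve the question asks for.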

-

I2C communication slows down over time using USB-8451

Hi all!

I'm trying to communicate with an I2C slave device using a USB-8451, and I noticed that after a while the communication slows way down.

I can send and receive data fine, and my system appears to work. The problem is that the data transfer speed slows down over time, so much that if I leave it running overnight it has slowed to a crawl by morning. My ultimate goal is to acquire, plot, and save data every second, which is easily achievable at the start, but after 10-15 hours I can no longer collect data as fast as I need. I'm not sure whether this is a problem with my LabVIEW code, the NI USB-8451, or the slave device. But if I stop the LabVIEW program and start it again, everything returns to normal.

The slave device is a custom board with a Microchip PIC that acts as an I2C slave and returns data at the master's request. I can't expect anyone to diagnose that part directly, but if we can rule out the other two (the LabVIEW code or the NI USB-8451) as the source of the problem, then I'll know it's my slave device.

I've greatly simplified the LabVIEW code I use to collect data over I2C, and I still see this gradual slowdown. Attached are the very simplified VI and the data file it produced. I only ran it for about 45 minutes, but from beginning to end you can again see the execution time rising.

I hope I'm just doing something stupid, thanks in advance!

-Aaron

Aaron,

I don't have the add-on module for these functions, but I'm fairly sure I know what's going on. It looks like each time through the loop, 'NI-845x I2C Create Configuration Reference.vi' creates a new reference. After a while this starts to slow things down. It's better to open the reference before the while loop starts and pass just the reference into the loop. Don't forget to close the reference after the loop stops.
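To confirm that a change such as moving the reference creation out of the loop actually removes the slowdown, one option is to log each iteration's duration (as the attached data file apparently does) and fit a straight line to it: a slope near zero means no drift. A plain least-squares sketch; the function name and the use of seconds for both axes are assumptions.

```python
def linear_trend(times_s, durations_s):
    """Least-squares slope of iteration duration versus elapsed time.

    A clearly positive slope means each iteration takes longer than the
    last, which is the symptom described in the question.
    """
    n = len(times_s)
    if n < 2 or n != len(durations_s):
        raise ValueError("need two equal-length series with at least 2 points")
    mean_t = sum(times_s) / n
    mean_d = sum(durations_s) / n
    sxx = sum((t - mean_t) ** 2 for t in times_s)
    if sxx == 0:
        raise ValueError("times must not all be equal")
    sxy = sum((t - mean_t) * (d - mean_d) for t, d in zip(times_s, durations_s))
    return sxy / sxx
```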

-

How to adjust audio speed/duration over time?

I watched a video on how to change a clip's speed and duration over time using time remapping and keyframes, but for some reason it doesn't affect the audio at all. Is it possible to change the speed/duration of the audio over time, as you can with the video?

No, you need After Effects (AE) for this.

-

Poor memory management: rendering slows over time

I noticed a problem with my renders involving image sequences: not purely animation of layers and the like, but compositions that use image sequences as assets. Initially I have plenty of free RAM, with no other applications open. When I start rendering, my CPU usage is very high, and AE takes memory and spawns a number of aeselflink processes. Over time, however, in iStat Menus I can see my 'active' RAM go down and my 'inactive' RAM go up, while at the same time my CPU usage drops and my render gradually grinds to a halt. It's as if, once it loads one of my images into RAM for rendering, it can't fully let go of it when it's done, so it gradually eats all my RAM and my render slows down. If I stop the render, do nothing but run an application to purge all the 'inactive' memory, and restart immediately, things run at their normal fast speed. It then gradually slows down again. Does anyone know what is happening here? All the images are on external eSATA drives and are rendered to external eSATA drives. Here are my specs for reference:

AE CC 2014 (v 13.0.0.214)

Mac Pro (early 2008) 3.1 8-core

18 GB of RAM

Memory & Multiprocessing:

18 GB installed RAM

RAM to leave for other applications: 5 GB

CPUs reserved for other applications: 2

RAM allocation per background CPU: 3 GB

Actual CPUs that will be used: 3

Any ideas would be greatly appreciated. Thank you!!!

Robert

What is the state of the "Reduce cache size when system is low on memory" preference?

I describe this preference here:

-

Since I installed it on my Windows 7 64-bit system, Firefox has steadily gotten slower as the hours pass; it hangs for 10-15 seconds at a time and just gets worse over time, with updates and all. It was fast when I first installed it, but over the last six months it has slowed to a crawl.

Upgrade your browser to Firefox 8 and try again.

-

Can I create a spreadsheet to track events over time? If so, how?

Is it possible to use Numbers to track events over time? Dates would be the only numbers I'd use.

Can you give more details on what you are looking to do? Surely you don't want only a column of dates...

SG

-

Plotting the amplitude of a spectral peak over time

Hello

I am building a VI that continuously acquires data from a DAQ, plots it, and saves a waveform at millisecond intervals (that part is already done). It then takes this waveform, finds a specific peak (probably the first), and plots the amplitude of that peak over time (30+ minutes, one point per acquired scan). Essentially, I have a fast detector attached to a chromatograph, and I want to select a single ion and monitor its amplitude. I can plot the waveforms together in post-processing, but what I want is a 'slow' plot that updates continuously as time goes on. The attached VI is "IMS software V1.3.vi", and the relevant part is the "GC" mode. It seems I should use "peak detect.vi", but I'm not familiar with it, so I don't know how to extract the amplitudes and show a continuously updated plot. Thank you for your help,


Eric-WSU wrote:

I get an amplitude-versus-time plot, but it does not appear until after all the iterations have stopped

I haven't looked at your VI (because I'm on an earlier version of LabVIEW), but it's probably because your graph is outside the loop.

Here's how you can get a graph of the peaks (all peaks, per iteration):

Or if you only want a certain peak (and how that peak changes with the iteration number):

Maybe you are looking for
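The key point of the answer is that the amplitude history has to be updated inside the acquisition loop, one point per scan, so the 'slow' plot grows while the VI runs. A text-only sketch of that loop structure: the simple local-maximum search is a stand-in for the peak detection VI, not a reimplementation of it, and the threshold and function names are hypothetical.

```python
def first_peak_amplitude(waveform, threshold):
    """Amplitude of the first local maximum at or above threshold, or None."""
    for i in range(1, len(waveform) - 1):
        y = waveform[i]
        if y >= threshold and y >= waveform[i - 1] and y > waveform[i + 1]:
            return y
    return None


def track_peak(scans, threshold):
    """Peak amplitude per scan, appended inside the loop (one point per scan)."""
    history = []
    for scan in scans:  # each iteration corresponds to one acquired waveform
        history.append(first_peak_amplitude(scan, threshold))
        # In a live VI, the 'slow' chart would be updated here, inside the loop.
    return history
```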

-

"Back" button does not return me to where I left off in the search results list

I use Firefox 7.0.1 on a laptop with XP SP3. All known updates have been applied. I use Google to search for a topic and get a list of results. After selecting one of the results and clicking the Back button, I went back to the li

-

After upgrading to Firefox 6, the Organize Bookmarks command is missing. How can I get it back?

In the old version of Firefox, there was a way to move and organize bookmarks by clicking the "Organize Bookmarks" link in the Bookmarks menu.

-

ReadyCloud shows ReadyNAS 204 as offline

Hi all, yesterday I started receiving a notification from the ReadyCloud client on my PC that my RN204 was offline. This started after I was away over the weekend of the 4th and tried to connect from my laptop. As you can see, it recognizes the name of the NAS, but s

-

I got this laptop a few months ago, at the end of September, and recently it has been shutting itself off. I play games like Counter-Strike and Aion, but after twenty to thirty minutes it just turns off. I thought it was an overheating issue, so I tried

-

"System Disabled" code 52741028

The BIOS menu asks me to enter the administrator password. I did, and after three unsuccessful attempts it says "System Disabled 52741028". How can I get around this?