Measuring the block-level disk data delta to calculate replication bandwidth

Hi community

I hope this question isn't too stupid, or maybe I'm overcomplicating it.

I want to measure the block-level disk data delta between time A and time B in order to calculate the bandwidth needed for replication.

How do you experts out there approach such a calculation? Is my common-sense approach right?

Please shed some light on this subject.

Thank you, Peter

If you PM me, I have a worksheet that may be useful.
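The thread doesn't spell out the arithmetic, so here is a minimal sketch of the common-sense approach. It assumes you can already obtain the number of changed blocks between time A and time B (for example from a changed-block report; collecting that number is outside this sketch). The function names, the overhead factor and the compression ratio are hypothetical parameters, not part of any VMware tool: changed blocks × block size gives the delta, and the delta in bits divided by the replication window gives the bandwidth.

```javascript
// Minimal sketch: estimate replication bandwidth from a block-level change delta.
// Assumptions (not from the thread): the changed-block count between time A and
// time B is already known, and protocol overhead and compression are modelled as
// simple multipliers. All names here are hypothetical.

function deltaBytes(changedBlocks, blockSizeBytes) {
  // Data to ship = number of changed blocks x block size.
  return changedBlocks * blockSizeBytes;
}

function requiredMbps(bytes, windowSeconds, { overhead = 1.0, compressionRatio = 1.0 } = {}) {
  if (windowSeconds <= 0) throw new RangeError("windowSeconds must be positive");
  if (compressionRatio <= 0) throw new RangeError("compressionRatio must be positive");
  // Bytes -> bits, inflate by protocol overhead, shrink by compression.
  const bits = (bytes * 8 * overhead) / compressionRatio;
  return bits / windowSeconds / 1e6; // decimal megabits per second
}

function peakMbps(bytesPerInterval, intervalSeconds, options = {}) {
  // Size the link for the busiest interval, not the average one.
  return Math.max(...bytesPerInterval.map((b) => requiredMbps(b, intervalSeconds, options)));
}

// Made-up numbers: 1,000 changed 1 MB blocks that must replicate within 800 s.
console.log(requiredMbps(deltaBytes(1000, 1e6), 800)); // 10
// Three invented hourly deltas (bytes); the 5 GB hour drives the sizing.
console.log(peakMbps([2e9, 5e9, 1e9], 3600).toFixed(1)); // 11.1
```

Sizing for the peak interval rather than the daily average is a deliberate choice: an average can hide a burst (a nightly batch job, say) that would overrun the replication window.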

Tags: VMware

Similar Questions

-

KEY-COMMIT trigger in two data blocks of a form module

Hello

I would like a clear idea of how the KEY-COMMIT trigger works at the data block level.

I have a module that contains two data blocks, BLOCK1 and BLOCK2, and on each block I have defined a KEY-COMMIT trigger, as follows:

KEY-COMMIT trigger on BLOCK1:

    BEGIN
      -- stop the commit if BLOCK1's check fails
      IF condition1 THEN
        RAISE FORM_TRIGGER_FAILURE;
      END IF;
      COMMIT_FORM;
    END;

KEY-COMMIT trigger on BLOCK2:

    BEGIN
      -- stop the commit if BLOCK2's check fails
      IF condition2 THEN
        RAISE FORM_TRIGGER_FAILURE;
      END IF;
      COMMIT_FORM;
    END;

My question is:

When I make changes in both blocks (BLOCK1 and BLOCK2) and press the Save button, only one of the block-level triggers fires, so one of the checks (condition1 or condition2) is skipped, depending on where the cursor is.

Wouldn't it be better to use only a module-level KEY-COMMIT trigger instead? And if so, why does Oracle Forms let us define KEY-COMMIT at the data block level?

Thank you for your advice.

The module-level trigger is the best option in this case; test both of your conditions in it before calling COMMIT_FORM.

Generally, you will find a KEY-COMMIT trigger only at the module level, but there are times when it makes sense to put the trigger at the block level. Also, depending on the trigger's Execution Hierarchy property, Oracle Forms can run the block-level trigger first and then the module-level trigger of the same name. It really depends on the developer and how they want to organize their code; the tool simply lets you put the code where it best fits your needs.

Hope this helps,

Craig B-)

-

Is it a block-level copy when we expand the disk size during P2V?

During P2V, if we reduce the disk size, Converter uses a file-level copy, which is slower than a block-level copy.

Will it be a block-level copy if we expand the disk size during P2V? If it always uses a file-level copy, would it be better not to increase the disk size and just extend the volume with DISKPART after the conversion?

Thank you

It will be block level for NTFS volumes and file level for FAT volumes.

HTH

Plamen

-

VMware Converter Standalone copies sometimes at the block level and sometimes at the file level

Hi team,

I use VMware Converter Standalone for P2V and V2V migrations. I noticed that some drives are cloned with a block-level copy and others with a file-level copy. Can anyone explain why? On what basis does the converter decide between block-level and file-level copying? Is it the disk size, the sector size, or the disk type? Any suggestion will be highly appreciated.

Thanks in advance

Kind regards

Rohit

Screenshot attached.

RohitGoel wrote:

Is there a resizing threshold that decides whether VMware Converter uses file-level cloning? I have attached a screenshot. I'm resizing the D: drive (from 50 GB to 80 GB) for the target VM, yet VMware Converter used block-based cloning for it, while E:, which is also resized on the target disk, is converted with file-based cloning. Is there something else that decides between file-level and block-level cloning when resizing?

There is no resizing threshold.

To resize D: you are extending it, and extending always uses block-level cloning.

What are you doing to E:? Shrinking it?
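The rules of thumb from these two threads can be condensed into a small lookup. This is only a summary of the answers above, assuming the source and target sizes refer to the volume being converted; the hypothetical expectedCloneMode function is not VMware Converter's actual decision logic, and treating an unchanged size as block-level is an assumption, because the threads only discuss shrinking and extending.

```javascript
// Rule-of-thumb summary of the answers above, NOT VMware Converter's real
// decision logic (which may weigh other factors). Sizes are in GB.
function expectedCloneMode(fileSystem, sourceGB, targetGB) {
  const fs = String(fileSystem).toUpperCase();
  if (fs === "FAT" || fs === "FAT32") return "file"; // FAT volumes: file-level copy
  if (fs !== "NTFS") return "unknown";               // not covered by these threads
  // NTFS: shrinking forces a file-level copy; extending stays block-level.
  // Keeping the same size is assumed to stay block-level as well.
  return targetGB < sourceGB ? "file" : "block";
}

// Rohit's D: drive, extended from 50 GB to 80 GB:
console.log(expectedCloneMode("NTFS", 50, 80)); // block
// A hypothetical E: drive shrunk from 100 GB to 60 GB:
console.log(expectedCloneMode("NTFS", 100, 60)); // file
```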

-

Data-level security issue

Hello gurus:

I'm having a problem with data-level security.

I have copied my production RPD and Web Catalog to Test and changed the connection pool DSN and username/password.

Now the problem is that a user who has the same rights in Prod and Test sees the data correctly in Production but sees nothing in Test.

I use an initialization block from the Siebel CRM application. Customers are assigned to users based on their responsibilities in S_RESP and S_USER.

On this basis, users can see their list of customers. Authentication is through the same LDAP server for both Production and Test.

A user sees the correctly assigned list in Production, but not in Test. I don't know how to solve this. I searched the query logs and other places but cannot find anything.

Please advise how I should investigate this issue.

Thank you.

Vinay

Hi Vinay,

I suspect your problem is due to the ORGS session variable. Can you open the repository and test the initialization block that populates it? I expect it returns no value in Test.

About the logging level: it would be very convenient to have a user with log level 2 or higher so you can check the physical SQL. But you use the same LDAP source for Test and Production, which makes things a little more difficult. So I would advise adding an LDAP variable to populate the LOGLEVEL session variable. Create a test user with LOGLEVEL 2 or higher, and set LOGLEVEL to 0 for all other users.

Kind regards

Stijn -

Shading every other record in a multi-record tabular data block

I have a multi-record tabular data block and would like every other record in the block to have a shaded background color. How would I accomplish this? (I attached an Excel worksheet that shows what I'm trying to do with an Oracle Forms data block.)

I know how to control the background color of the current record, but that is NOT what I'm asking.

If you populate your block using EXECUTE_QUERY, you can do the following:

- Create a visual attribute with the desired colors and name it VA_BACKGROUND.

- Create a parameter of type Number named P_BANDING.

- Put the following code in your PRE-QUERY trigger:

    :PARAMETER.P_BANDING := 1;

- Put the following code in your POST-QUERY trigger:

    IF :PARAMETER.P_BANDING = 1 THEN
      DISPLAY_ITEM('YOUR_ITEM_NAME_HERE', 'VA_BACKGROUND');
    END IF;
    -- flip between 1 and 0 so that every other fetched record is shaded
    :PARAMETER.P_BANDING := 1 - :PARAMETER.P_BANDING;

You need one DISPLAY_ITEM call for each item that you want to color.

-

Can't add data merge text placeholders to all text frames in the document

I'm trying to put together a script that loops through all pages in an InDesign file, finds all text frames labeled caption1, caption2, caption3... (on each new page, the label numbering starts again from 1) and adds a data merge text placeholder: <<caption1>>, <<caption2>>, <<caption3>>... but this time, when you get to a new page, the numbering continues: <<caption4>>, <<caption5>> and so on.

Page 1 - text frame label = caption1 > data merge placeholder = <<caption1>>

Page 1 - text frame label = caption2 > data merge placeholder = <<caption2>>

Page 1 - text frame label = caption3 > data merge placeholder = <<caption3>>

Page 2 - text frame label = caption1 > data merge placeholder = <<caption4>>

Page 2 - text frame label = caption2 > data merge placeholder = <<caption5>>

Currently, the script adds data merge text placeholders only to the first two text frames (out of 6) on page one (of about 8 pages) and only to the first text frame on page two (out of 3).

Any help will be greatly appreciated

Here's the script:

    var myDocument = app.activeDocument;

    // DATA MERGE SOURCE
    main();

    function main() {
        var myDataSource = File.openDialog("Please select a data merge source", "Text files: *.txt");
        if (myDataSource != null) {
            myDocument.dataMergeProperties.selectDataSource(myDataSource);
            myDocument.dataMergeProperties.dataMergePreferences.recordsPerPage = RecordsPerPage.MULTIPLE_RECORD;
        }
    }

    // DATA MERGE FIELD LOOKUP
    function get_field(captionString, myDocument) {
        var fields = myDocument.dataMergeProperties.dataMergeFields;
        for (var f = 0, l = fields.length; f < l; f++) {
            if (fields[f].fieldName == captionString) {
                return fields[f];
            }
        }
        alert("Error: did not find a field with the name " + captionString);
        return null;
    }

    // TEXT FRAMES - ADD DATA MERGE TEXT PLACEHOLDERS
    var countFrames = 1;
    for (var i = 0; i < myDocument.pages.length; i++) { // every page in the document
        var capPerPage = 1;
        for (var x = 0; x < myDocument.pages[i].textFrames.length; x++) { // every text frame on the page
            var myTextFrame = myDocument.pages[i].textFrames[x];
            if (myTextFrame.label.indexOf("caption") < 0) {
                alert("Cannot find any image caption");
            } else if (myTextFrame.label == "caption" + capPerPage) { // label is the next expected caption on this page
                var captionString = "caption" + countFrames;
                var myStory = myTextFrame.parentStory;
                var myStoryOffset = myStory.insertionPoints[-1];
                // Add the data merge text placeholder at the end of the frame's story
                myDocument.dataMergeTextPlaceholders.add(myStory, myStoryOffset, get_field(captionString, myDocument));
                countFrames++; // continue the placeholder numbering on the next page
                capPerPage++;  // next expected label on this page
            }
        }
    }

Hello

Try this change (replace the for... loop):

var countFrames= 0, currLabel, capPerPage; for (i=0; i

Notice countFrames starts at 0.

Jarek
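Jarek's replacement loop is cut off above, so the following is not his code. One plausible reason the original script stops early is that its else if only matches when the next frame in the page's textFrames collection happens to carry the next expected label (caption1, then caption2, ...); when the collection order differs from the label order, frames are skipped. The sketch below uses a hypothetical assignPlaceholders helper to compute the running numbering independently of collection order. It is plain modern JavaScript so it can run outside InDesign; ExtendScript is ES3-based, so inside InDesign you would rewrite it with var and function expressions and then call dataMergeTextPlaceholders.add for each returned frame.

```javascript
// Hypothetical helper in plain modern JavaScript (not ExtendScript, not Jarek's code).
// pages: one array per page holding each text frame's label, in collection order.
// Returns, for every frame labelled captionN, the data merge field it should get.
function assignPlaceholders(pages) {
  const result = [];
  let running = 0; // numbering that continues across pages, like countFrames
  pages.forEach((labels, pageIndex) => {
    const captions = [];
    labels.forEach((label, frameIndex) => {
      const match = /^caption(\d+)$/.exec(label);
      if (match) captions.push({ frameIndex, perPage: Number(match[1]) });
    });
    // Sort by the per-page caption number instead of trusting collection order.
    captions.sort((a, b) => a.perPage - b.perPage);
    for (const { frameIndex } of captions) {
      running += 1;
      result.push({ page: pageIndex + 1, frameIndex, label: labels[frameIndex], field: "caption" + running });
    }
  });
  return result;
}

// Page 1 frames come back out of label order; page 2 has an unlabelled frame.
const plan = assignPlaceholders([
  ["caption2", "caption1", "caption3"],
  ["caption1", "", "caption2"],
]);
for (const p of plan) console.log(`page ${p.page}: ${p.label} -> <<${p.field}>>`);
// page 1: caption1 -> <<caption1>>
// page 1: caption2 -> <<caption2>>
// page 1: caption3 -> <<caption3>>
// page 2: caption1 -> <<caption4>>
// page 2: caption2 -> <<caption5>>
```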

-

How to implement data-level security

How do I implement data-level security in BI Publisher? I use OBIEE Enterprise Edition and BI Publisher. My requirement is to show data based on the user's relationship to a region:

A - the user belongs to the Eastern region

B - the user belongs to the Southern region

So if user A logs in, they must see only the Eastern region in the report. If user B logs in, they should see only the Southern region. I use direct SQL against my Oracle database as the data source.

I appreciate your help.

Hello,

You can use :xdo_user_name in your query.

Regards

Rainer -

Hi friends,

I have a small question. Does a VM get block-level access to the disk when the disk is assigned from VMFS, or only with RDM? Or do both datastore types give block-level access?

Thanks in advance.

ZoOmbie

(1) VMDK: the guest OS accesses a block > a block in the VMDK file

(2) RDM: the guest OS accesses a block > a block on the LUN

---

MCITP: SA+VA, VMware vExpert, VCP 3/4

-

When I "manually export level-zero data", I get some upper-level members

I found that level-zero export behaves differently over time. In our OSI 11.1.1.3 cube, on Feb. 4 EAS exported only level-zero data when I used "manually export level-zero data". On Feb. 25, EAS exported stored members with formulas as well as upper-level members such as the salary and compensation totals (when I ran the same manual level-zero export). Why is this?

In BSO, you export level-zero blocks, i.e. blocks at level zero in all sparse dimensions. The export may still contain upper-level dense dimension members.

Why it seems to change over time, I'm not sure. Maybe it has to do with whether the data had been calculated when the export was run?
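To make the answer concrete, here is a toy model of what a level-zero block export selects: blocks whose sparse members are all level 0, with every non-missing dense cell in them, totals included. It is plain JavaScript, not the Essbase API, and the members East, West, All Regions, Salary, Bonus and Total Compensation are invented for illustration. It also illustrates the suggested explanation for the change over time: if the dense total is still #Missing when you export, it doesn't appear; after a calculation fills it in, it does.

```javascript
// Toy model of a BSO level-0 export; member names and data are hypothetical and
// this is not the Essbase API. Each block is one sparse-member combination and
// stores every dense cell, upper-level dense members (totals) included.
function levelZeroExport(blocks, sparseLevel) {
  const rows = [];
  for (const block of blocks) {
    // Keep only blocks whose sparse members are all level 0.
    if (!block.sparse.every((member) => sparseLevel[member] === 0)) continue;
    for (const [denseMember, value] of Object.entries(block.cells)) {
      if (value === null) continue; // null stands in for #Missing: nothing to export
      rows.push([...block.sparse, denseMember, value]);
    }
  }
  return rows;
}

const sparseLevel = { East: 0, West: 0, "All Regions": 1 };
const beforeCalc = [
  { sparse: ["East"], cells: { Salary: 100, Bonus: 20, "Total Compensation": null } },
  { sparse: ["All Regions"], cells: { Salary: null, Bonus: null, "Total Compensation": null } },
];
// The same blocks after an aggregation has filled in the dense total.
const afterCalc = [
  { sparse: ["East"], cells: { Salary: 100, Bonus: 20, "Total Compensation": 120 } },
  { sparse: ["All Regions"], cells: { Salary: 100, Bonus: 20, "Total Compensation": 120 } },
];

console.log(levelZeroExport(beforeCalc, sparseLevel).length); // 2
console.log(levelZeroExport(afterCalc, sparseLevel).length);  // 3 (now includes Total Compensation)
```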

-

How do I set up steps in the Health app?

What do you mean by "set up steps"? If you want steps to be displayed on the Health dashboard, tap Health Data at the bottom of the screen, tap Fitness, tap Steps, and turn "Show on Dashboard" on.

The Health app is really more of a storage and synchronization app. You can't do things like set targets for a particular metric. If you want to set a step goal and track it, use one of the many tracking apps that can pull data from Health, such as Map My Fitness, MyFitnessPal, Jawbone UP and Fitbit (the last two can be used with just the phone if you have an iPhone 5 or later). If you like to compete with others, the activity clubs are fun.

-

I can't get the voltage that the voltmeter measures

Hello

When I use the VI "Cont Acq & voltage graph - write data to the file (PDM) .vi" to read the voltage on an NI PCI-6251, it shows approximately -5 V. However, the voltage I measure with a voltmeter on the PCI-6251 channels is about 0.3 V. When I use another VI to show the voltage, it is also approximately -5 V. I don't know what the problem is. Thank you very much.

-

Measurement of the current rail voltage > 10V

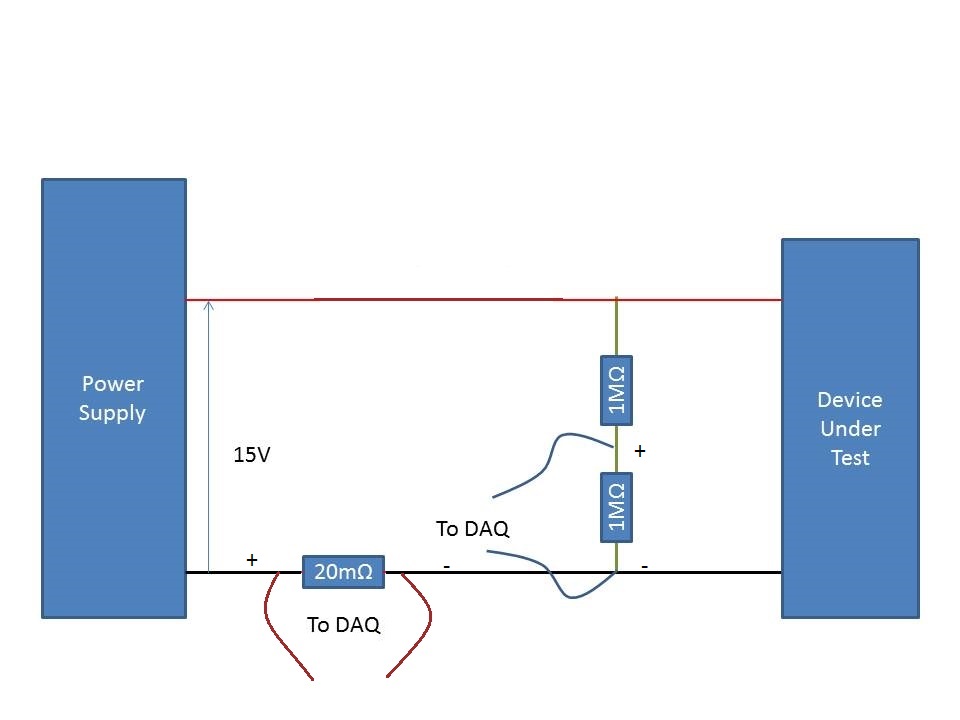

Who am I to measure the current and voltage on a 15V supply rail. (Here's a copy of my circuit attatched) I use an acquisition of data USB-6225 and Lab View,

I have my camera to test connected to a power source. I connected two differential channels to the acquisition of data, one for the current and the other for the voltage.

I understand that data acquisition is unable to manage more than 10V so I use a voltage halfway through the voltage divider. When I run the action, the indicated current is incorrect. It shows the 10A when it should be about 500 my.

When I reduce the tension on the power supply 10V, it everything works fine, so I think that its got something to do with the limit of 10V.

Please can you advise how I can connect that places correctly?

Do it this way.
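The reply presumably pointed to a diagram that didn't survive. As a hedged illustration of one common cause of this symptom (an assumption, since the schematic isn't available): if the current is measured across a high-side shunt on the 15 V rail, both inputs of that differential channel sit near 15 V even though the difference between them is tiny, and the device only reads correctly while each input stays within its allowed range. The sketch assumes a 10 V limit on each terminal relative to AI GND (check the USB-6225 specifications for the actual figure), a 0.1 Ω shunt and an equal-resistor divider; all values and function names are hypothetical.

```javascript
// Hypothetical component values; the poster's schematic is not available.
const LIMIT_V = 10; // assumed max |V| on any analog input terminal relative to AI GND

function dividerOutput(vin, rTop, rBottom) {
  // Unloaded divider: Vout = Vin * Rbottom / (Rtop + Rbottom)
  return (vin * rBottom) / (rTop + rBottom);
}

function shuntCurrent(vShunt, rShunt) {
  return vShunt / rShunt; // Ohm's law: I = V / R
}

function channelInRange(vPlus, vMinus, limit = LIMIT_V) {
  // A differential channel reads correctly only if BOTH terminals stay in
  // range, not just the difference between them.
  return Math.abs(vPlus) <= limit && Math.abs(vMinus) <= limit;
}

const rail = 15, iLoad = 0.5, rShunt = 0.1; // 15 V rail, ~500 mA, 0.1 ohm shunt
const vDrop = iLoad * rShunt;               // 0.05 V across the shunt

// Voltage channel on the divider midpoint (equal resistors): 7.5 V, in range.
console.log(channelInRange(dividerOutput(rail, 10e3, 10e3), 0)); // true
// High-side shunt on the 15 V rail: both terminals sit near 15 V.
console.log(channelInRange(rail, rail - vDrop)); // false
// Same shunt on a 10 V rail, or moved to the low side (ground return):
console.log(channelInRange(10, 10 - vDrop)); // true
console.log(channelInRange(vDrop, 0));       // true
console.log(shuntCurrent(vDrop, rShunt));    // 0.5
```

On these assumptions the 10 V supply passes the check and the 15 V supply doesn't, which matches what the poster saw. Moving the shunt to the low side or using a current-sense amplifier would keep the current channel's inputs in range; which fix suits depends on the actual circuit.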

-

Measurement accuracy of the SCXI-1100 module

Dear team,

I'm calculating the measurement accuracy of SCXI-1100 (http://digital.ni.com/manuals.nsf/websearch/0790D3C92CF4B16E8625665E00712865) devices.

It isn't described in the standard table; the specifications give separate input-stage and output-stage figures (should they be added?). I can't reproduce the accuracy that the accuracy calculator gives. If you have any equations or examples, please post them.

Regards

Michal

Hi Michal,

I'm not sure I understand your question. Are you trying to calculate the accuracy of the SCXI-1100 module but don't have the specifications needed for the calculation, or do you not know how to calculate the accuracy?

If you need specifications, you can consult the SCXI-1100 data sheet. If you want to calculate the accuracy of your device, I recommend the following KB: How can I calculate absolute accuracy or system accuracy?

I hope that this is the info you are looking for.

Kind regards

D. Barna

National Instruments
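On the question of whether input-stage and output-stage errors should be added: a common convention for an amplifier stage is to refer the output offset to the input by dividing it by the gain, then add it to the input offset and to the gain error times the reading. The thread doesn't say whether NI's calculator then combines the module and DAQ-device errors by straight sum or by root-sum-square, so the sketch below, whose function names are hypothetical, computes both. All numbers are placeholders, not SCXI-1100 specifications.

```javascript
// Error-budget sketch. Every number passed in below is a PLACEHOLDER, not an
// SCXI-1100 or DAQ-device specification; take real values from the data sheets.
function stageErrorV({ reading, gain, gainErrorFraction, inputOffsetV, outputOffsetV }) {
  // Output-stage offset divided by the gain = the same error referred to the input.
  const offsetRti = inputOffsetV + outputOffsetV / gain;
  return Math.abs(reading) * gainErrorFraction + offsetRti;
}

function combineWorstCase(errors) {
  return errors.reduce((sum, e) => sum + e, 0); // straight sum: most pessimistic
}

function combineRss(errors) {
  return Math.sqrt(errors.reduce((sum, e) => sum + e * e, 0)); // root-sum-square
}

const moduleError = stageErrorV({
  reading: 0.1, gain: 100, gainErrorFraction: 0.001, inputOffsetV: 10e-6, outputOffsetV: 1e-3,
});
const daqErrorReferredToInput = 5e-6; // hypothetical DAQ-device error divided by the module gain
console.log(combineWorstCase([moduleError, daqErrorReferredToInput]));
console.log(combineRss([moduleError, daqErrorReferredToInput]));
```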

-

Noise in frequency measurement using a counter

Hi all

I use a 6602 card to measure frequency in buffered "Large Range with 2 Counters" mode. The signal of interest is a TTL signal from an ODA; however, I get the signal shown in the attachment. The noise level seems to vary with the signal level. Is there a good way to eliminate this noise? I tried the digital filter, but it throws error 200774. It seems that the source of the selected counter is set to the default 'No Filter'. I also thought about using a band-pass filter, but because the signal frequency changes (~300 Hz to ~300 kHz), the upper and lower limits are not easy to define.

Thanks in advance!

Best,

L.
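The thread has no answer. As one software-side option (a swapped-in technique, not something suggested in the thread), a sliding median filter over the buffered readings removes isolated glitches while still following genuine frequency changes, which sidesteps choosing fixed band-pass limits for a 300 Hz to 300 kHz signal. The sketch assumes the large-range method divides the input by a divisor and counts timebase ticks over one period of the divided signal; the 80 MHz timebase, the divisor of 10 and the counts are illustrative assumptions. In LabVIEW, DAQmx normally returns frequency directly, so only the filter step would be needed.

```javascript
// Hypothetical sketch: timebase, divisor and counts are illustrative only.
function countsToFrequency(counts, timebaseHz, divisor) {
  // Large-range idea: the input is divided by `divisor`, and the second counter
  // counts timebase ticks during one period of the divided signal.
  return counts.map((c) => (divisor * timebaseHz) / c);
}

function medianFilter(values, windowSize) {
  if (!Number.isInteger(windowSize) || windowSize < 1 || windowSize % 2 === 0) {
    throw new RangeError("windowSize must be a positive odd integer");
  }
  const half = (windowSize - 1) / 2;
  return values.map((_, i) => {
    // The window is truncated at the ends of the buffer.
    const win = values.slice(Math.max(0, i - half), i + half + 1).sort((a, b) => a - b);
    const mid = Math.floor(win.length / 2);
    return win.length % 2 === 1 ? win[mid] : (win[mid - 1] + win[mid]) / 2;
  });
}

// Five readings at 10 kHz, except one glitch that reads as 100 kHz.
const freqs = countsToFrequency([80000, 80000, 8000, 80000, 80000], 80e6, 10);
console.log(freqs);
console.log(medianFilter(freqs, 3)); // the 100 kHz spike is replaced by 10000
```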
