Incorrect GRAND TOTAL (with COUNT DISTINCT)

Hello,

I get an incorrect result in the GRAND TOTAL of a COUNT DISTINCT measure column.

I have 5 distinct customers in Paris and 10 distinct customers in New York. I want the grand total to be the sum of the two, which is 15.

But OBIEE counts distinct customers across all cities, so if some customers appear in both Paris and New York, the result is wrong.

This is the result I get:

City            Number_Distinct_Customers
-------------------------------------------
Paris           5
NEW YORK CITY   10
GRAND TOTAL     12

12 is the number of distinct customers across all cities.

The correct GRAND TOTAL is expected to be 5 + 10 = 15.

Thank you

Regards

I guess that COUNT(DISTINCT ...) is being used as the default aggregation rule in Oracle BI Answers.

To get around this, change the aggregation rule under fx (the column formula in the Criteria tab of Answers) to 'Sum'.
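To see why the two totals differ, here is a minimal Python sketch. The rows are hypothetical, chosen only so that the numbers match the ones in the question (5, 10, 12 and 15); it is not how OBIEE computes anything internally.

```python
from collections import defaultdict

# Hypothetical data: customers c3, c4 and c5 buy in both cities.
rows = [("Paris", f"c{i}") for i in range(1, 6)] + \
       [("New York", f"c{i}") for i in range(3, 13)]

def distinct_per_city(rows):
    """Count distinct customers separately for each city."""
    seen = defaultdict(set)
    for city, customer in rows:
        seen[city].add(customer)
    return {city: len(customers) for city, customers in seen.items()}

per_city = distinct_per_city(rows)

# A COUNT DISTINCT grand total de-duplicates across all cities.
overall_distinct = len({customer for _, customer in rows})

# A 'Sum' grand total simply adds up the rows already shown in the report.
sum_of_rows = sum(per_city.values())

print(per_city, overall_distinct, sum_of_rows)
```

The shared customers are counted once in the distinct total but once per city in the summed total, which is the gap between 12 and 15.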

Let me know if that solves your problem.

-bifacts :-)

http://www.obinotes.com

Tags: Business Intelligence

Similar Questions

-

IR problem with COUNT(*) OVER () AS apxws_row_cnt

Hello everyone,

I have the same problem that Marco 1975 raised in the forum discussion "IR problem with COUNT(*) OVER () AS apxws_row_cnt". I'm developing on APEX 5.0.0.0.31 with an Oracle 12c database. An IR returns 4648 rows for only 100 records. The SQL is fine, but the COUNT(*) OVER () AS apxws_row_cnt does some witchery on my report. Does anyone have an idea how to solve this problem in APEX 5?

My query:

SELECT PK_EMPLOYEE,
       DATE_HIRE,
       DATE_QUIT,
       DBMS_LOB.GETLENGTH("FOTO") "FOTO",
       NAME,
       CPF
FROM TB_EMPLOYEE

With help from third-party experts (CITS Brasília) I found a workaround that uses nested selects to avoid the Cartesian product my query produced in the IR. It was a Cartesian product of the FOTO ("PHOTO") column against a master FK column from another table.

In any case, here is the solution. I'm sorry for the Portuguese terms, but I believe it will still be understandable:

SELECT DISTINCT NULL AS apxws_row_pk, "PK_EMPREGADO", "CPF", "NOME", "FOTO", "DATA_CONTRATACAO", "DATA_DISPENSA", COUNT(*) OVER () AS apxws_row_cnt
FROM (SELECT * FROM (
SELECT
b."PK_EMPREGADO",
----------------------------------------------------------
--> Cartesian product: N PHOTOS versus 01 Posto Trabalho (work post)
-- "FK_POSTOS_TRABALHO"
-------------------------------------------------------------
-- nvl(L1."DISPLAY_VALUE", b."FK_POSTOS_TRABALHO") AS "FK_POSTOS_TRABALHO",
------------------------------------------------------------
b."CPF", b."NOME", b."FOTO", b."DATA_CONTRATACAO", b."DATA_DISPENSA"
FROM (SELECT * FROM (SELECT DISTINCT emp.PK_EMPREGADO, emp.FK_POSTOS_TRABALHO, emp.CPF, emp.DATA_CONTRATACAO, emp.DATA_DISPENSA, emp.NOME, dbms_lob.getlength(emp.FOTO) "FOTO"
FROM TB_EMPREGADO emp
RIGHT JOIN TB_POSTOS_TRABALHO_HOM pt ON (pt.PK_POSTOS_TRABALHO = emp.FK_POSTOS_TRABALHO)
RIGHT JOIN TB_EXECUTORES ex ON :APP_USER IS NOT NULL AND :APP_USER = ex.MATRICULA AND pt.FK_CONTRATADA = ex.FK_CONTRATADA WHERE emp.cpf IS NOT NULL
) ) b,
(SELECT NUM_CONTRATO || ' - ' || DESCRIPTION AS display_value, FK_POSTOS_TRABALHO AS return_value
FROM terceirizado.TB_CONTRATADA cont, terceirizado.TB_POSTOS_TRABALHO_HOM post, terceirizado.TB_TIPO_HOM tipo, terceirizado.TB_EMPREGADO emp
WHERE post.TIPO = tipo.PK_TIPO AND cont.PK_CONTRATADA = post.FK_CONTRATADA AND emp.FK_POSTOS_TRABALHO = post.PK_POSTOS_TRABALHO
ORDER BY 1) L1))

I hope that Oracle will provide a consistent solution for the COUNT(*) OVER () AS apxws_row_cnt issue.

-

I'm counting how many people have enrolled in and COMPLETED a SPECIFIC set of units

They must have completed both units on the line:

Business - UNITS = SRI and DSW

Medicine - UNITS = DFC and XFG

Accounting - UNITS = DF and FGR

ID 1 is enrolled in both business units and both medicine units, so I would count this ID for each of those lines.

ID 2 took only 1 unit of each line, so I won't count this ID. I only want to count those who have completed BOTH units of a line.

ID 3 is enrolled in both business units, so count it.

ID 4 was enrolled in both business units but dropped one, so don't count it, since it isn't BOTH.

CREATE TABLE DAN_PATH
(ID VARCHAR2(12),
UNIT VARCHAR2(12),
STATUS VARCHAR2(12),
LINE VARCHAR2(12));

insert into DAN_PATH (id, UNIT, STATUS, LINE) values ('1', 'SRI', 'Registered', 'BUSINESS');
insert into DAN_PATH (id, UNIT, STATUS, LINE) values ('1', 'WA', 'Registered', 'ACCOUNTING');
insert into DAN_PATH (id, UNIT, STATUS, LINE) values ('1', 'DSW', 'Registered', 'BUSINESS');
insert into DAN_PATH (id, UNIT, STATUS, LINE) values ('1', 'DFC', 'Registered', 'MEDICINE');
insert into DAN_PATH (id, UNIT, STATUS, LINE) values ('1', 'XCV', 'Registered', 'ENGINEERING');
insert into DAN_PATH (id, UNIT, STATUS, LINE) values ('1', 'XFG', 'Registered', 'MEDICINE');
insert into DAN_PATH (id, UNIT, STATUS, LINE) values ('2', 'SRI', 'Registered', 'BUSINESS');
insert into DAN_PATH (id, UNIT, STATUS, LINE) values ('2', 'FD', 'Registered', 'ENGINEERING');
insert into DAN_PATH (id, UNIT, STATUS, LINE) values ('2', 'FGR', 'Registered', 'ACCOUNTING');
insert into DAN_PATH (id, UNIT, STATUS, LINE) values ('3', 'SRI', 'Registered', 'BUSINESS');
insert into DAN_PATH (id, UNIT, STATUS, LINE) values ('3', 'DSW', 'Registered', 'BUSINESS');
insert into DAN_PATH (id, UNIT, STATUS, LINE) values ('4', 'DF', 'Registered', 'ACCOUNTING');
insert into DAN_PATH (id, UNIT, STATUS, LINE) values ('4', 'SRI', 'Dropped', 'BUSINESS');
insert into DAN_PATH (id, UNIT, STATUS, LINE) values ('4', 'DSW', 'Registered', 'BUSINESS');

GIVES:

ID  UNIT  STATUS      LINE
1   SRI   Registered  BUSINESS
1   DRF   Dropped     ACCOUNTING
1   DSW   Registered  BUSINESS
1   DFC   Dropped     MEDICINE
1   XCV   Registered  ENGINEERING
1   XFG   Registered  MEDICINE
2   SRI   Registered  BUSINESS
2   FDS   Registered  ENGINEERING
2   FGR   Registered  ACCOUNTING
3   SRI   Registered  BUSINESS
3   DSW   Registered  BUSINESS
4   DF    Registered  ACCOUNTING
4   SRI   Dropped     BUSINESS
4   DSW   Registered  BUSINESS

I would like 2 queries: one that gives me the line and the count of IDs, and another that gives me the IDs.

LINE         STUDENTS
BUSINESS     2
MEDICINE     1
ENGINEERING  0
ACCOUNTING   0

LINE      ID
BUSINESS  1
BUSINESS  3
MEDICINE  1

Hello,

Let's start with query 2; it's a little easier, and a solution for query 1 can be built on it:

WITH units AS
(
    SELECT 'SRI' AS unit, 'BUSINESS' AS line FROM dual UNION ALL
    SELECT 'DSW', 'BUSINESS' FROM dual UNION ALL
    SELECT 'DFC', 'MEDICINE' FROM dual UNION ALL
    SELECT 'XFG', 'MEDICINE' FROM dual UNION ALL
    SELECT 'DF', 'ACCOUNTING' FROM dual UNION ALL
    SELECT 'FGR', 'ACCOUNTING' FROM dual UNION ALL
    SELECT 'FUBAR', 'ENGINEERING' FROM dual
)
SELECT u.line
, d.id
FROM units u
JOIN dan_path d ON d.unit = u.unit
AND d.line = u.line
GROUP BY u.line
, d.id
HAVING COUNT (DISTINCT CASE WHEN d.status = 'Registered' THEN d.unit END) = 2
AND COUNT (CASE WHEN d.status = 'Dropped' THEN d.unit END) = 0
ORDER BY u.line
, d.id
;

You can create a regular, permanent table for units rather than using a WITH clause.

You can get the results you want for query 1 by doing a GROUP BY on the results of query 2, with a couple of exceptions.

Query 1 must include all lines, even if no students completed all their units. To get those 0 counts, we can do an OUTER JOIN.

In addition, the HAVING clause of query 2 eliminates rows from the result set. We do not want to eliminate rows that fail those conditions; we just want to know whether they pass or not. To do this, we put the same conditions inside a CASE expression rather than a HAVING clause:

WITH units AS
(
    SELECT 'SRI' AS unit, 'BUSINESS' AS line FROM dual UNION ALL
    SELECT 'DSW', 'BUSINESS' FROM dual UNION ALL
    SELECT 'DFC', 'MEDICINE' FROM dual UNION ALL
    SELECT 'XFG', 'MEDICINE' FROM dual UNION ALL
    SELECT 'DF', 'ACCOUNTING' FROM dual UNION ALL
    SELECT 'FGR', 'ACCOUNTING' FROM dual UNION ALL
    SELECT 'FUBAR', 'ENGINEERING' FROM dual
)
, query_2_results AS
(
    SELECT u.line
    , d.id
    , CASE
          WHEN COUNT (DISTINCT CASE WHEN d.status = 'Registered' THEN d.unit END) = 2
          AND COUNT (CASE WHEN d.status = 'Dropped' THEN d.unit END) = 0
          THEN 1
      END AS completed
    FROM units u
    LEFT OUTER JOIN dan_path d ON d.unit = u.unit
    AND d.line = u.line
    GROUP BY u.line
    , d.id
)
SELECT line
, COUNT (completed) AS students
FROM query_2_results
GROUP BY line
;

I had to guess at some of your requirements.
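If you want to try the CASE-inside-COUNT idea without an Oracle instance, here is a small sketch that runs the same pattern in SQLite from Python. The table contents are a cut-down, hypothetical subset of the data above, the units CTE is replaced by a real table, and SQLite needs no dual; treat it as an illustration of the technique, not as the exact query for your schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE units (unit TEXT, line TEXT);
INSERT INTO units VALUES
  ('SRI', 'BUSINESS'), ('DSW', 'BUSINESS'),
  ('DFC', 'MEDICINE'), ('XFG', 'MEDICINE'),
  ('FUBAR', 'ENGINEERING');

CREATE TABLE dan_path (id TEXT, unit TEXT, status TEXT, line TEXT);
INSERT INTO dan_path VALUES
  ('1', 'SRI', 'Registered', 'BUSINESS'),
  ('1', 'DSW', 'Registered', 'BUSINESS'),
  ('1', 'DFC', 'Registered', 'MEDICINE'),
  ('1', 'XFG', 'Registered', 'MEDICINE'),
  ('3', 'SRI', 'Registered', 'BUSINESS'),
  ('3', 'DSW', 'Registered', 'BUSINESS'),
  ('4', 'SRI', 'Dropped',    'BUSINESS'),
  ('4', 'DSW', 'Registered', 'BUSINESS');
""")

# The inner query flags each (line, id) pair that has both units registered
# and none dropped; COUNT(completed) then ignores the NULL flags.
rows = conn.execute("""
SELECT line, COUNT(completed) AS students
FROM (
    SELECT u.line, d.id,
           CASE
               WHEN COUNT(DISTINCT CASE WHEN d.status = 'Registered' THEN d.unit END) = 2
                AND COUNT(CASE WHEN d.status = 'Dropped' THEN d.unit END) = 0
               THEN 1
           END AS completed
    FROM units u
    LEFT JOIN dan_path d ON d.unit = u.unit AND d.line = u.line
    GROUP BY u.line, d.id
)
GROUP BY line
ORDER BY line
""").fetchall()

print(rows)
```

ENGINEERING still shows up with a count of 0 because the LEFT JOIN keeps the line even though nobody took its unit.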

-

Any alternative to LAST_VALUE with COUNT(*) and ROWS BETWEEN UNBOUNDED PRECEDI

I'm working on finding the active coverage count as of COV_CHG_EFF_DATE for a given policy ID and term (the sample data is for a single policy). The rules for counting active coverages are listed below.

I ran into a limitation using LAST_VALUE together with count(distinct COVERAGE_NUMBER) and the ROWS BETWEEN UNBOUNDED PRECEDING AND 1 PRECEDING clause. Is there a better approach or analytic function that can calculate an aggregate value based on this set of rules? The table and sample data are below in case anyone can find a solution. Thank you.

Transaction Type  Count
01, 02            +1
06, 07            +1
09, 10            -1
03                0

COVERAGE_NUMBER  TRANSACTION_TYPE  COV_CHG_EFF_DATE  Active_Cover_Count
01               01                22/OCT/2011       2
02               01                22/OCT/2011       2
04               02                23/OCT/2011       3
05               02                28/OCT/2011       4
05               09                30/OCT/2011       2
02               09                30/OCT/2011       2
05               06                03/NOV/2011       3
05               03                03/NOV/2011       3
05               09                05/NOV/2011       0
01               09                05/NOV/2011       0
04               09                05/NOV/2011       0

----------------------------------------------------------------------------------------------------------------------------------------

Published by: 966820 on October 30, 2012 20:30

drop table PC_COVKEY_PD;
create table PC_COVKEY_PD (PC_COVKEY varchar(30), POLICY_NUMBER varchar(30), TERM_IDENT varchar(3), COVERAGE_NUMBER varchar(3), TRANSACTION_TYPE varchar(3), COV_CHG_EFF_DATE date, TIMESTAMP_ENTERED timestamp );
delete from PC_COVKEY_PD;
commit;
insert into PC_COVKEY_PD values ('10695337P3021MC0020012', '10695337P3', '021', '002', '02', to_date('22/OCT/2011','DD/MM/YYYY'), cast('22/SEP/2011 03:10:12.523408 AM' as timestamp));
insert into PC_COVKEY_PD values ('10695337P3021MC0010012', '10695337P3', '021', '001', '02', to_date('22/OCT/2011','DD/MM/YYYY'), cast('22/SEP/2011 03:10:12.523508 AM' as timestamp));
insert into PC_COVKEY_PD values ('10695337P3021MC0040012', '10695337P3', '021', '004', '02', to_date('22/OCT/2011','DD/MM/YYYY'), cast('22/SEP/2011 03:10:12.864153 AM' as timestamp));
insert into PC_COVKEY_PD values ('10695337P3021MC0040032', '10695337P3', '021', '004', '03', to_date('22/OCT/2011','DD/MM/YYYY'), cast('22/SEP/2011 03:10:12.865153 AM' as timestamp));
insert into PC_COVKEY_PD values ('10695337P3021MC0050012', '10695337P3', '021', '005', '02', to_date('22/OCT/2011','DD/MM/YYYY'), cast('22/SEP/2011 03:10:12.976483 AM' as timestamp));
insert into PC_COVKEY_PD values ('10695337P3021MC0020042', '10695337P3', '021', '002', '09', to_date('22/NOV/2011','DD/MM/YYYY'), cast('16/FEB/2012 02:26:00.006435 PM' as timestamp));
insert into PC_COVKEY_PD values ('10695337P3021MC0040042', '10695337P3', '021', '004', '03', to_date('22/NOV/2011','DD/MM/YYYY'), cast('16/FEB/2012 02:26:00.135059 PM' as timestamp));
insert into PC_COVKEY_PD values ('10695337P3021MC0050042', '10695337P3', '021', '004', '09', to_date('22/NOV/2011','DD/MM/YYYY'), cast('16/FEB/2012 02:26:00.253340 PM' as timestamp));
insert into PC_COVKEY_PD values ('10695337P3021MC0020042', '10695337P3', '021', '004', '03', to_date('22/NOV/2011','DD/MM/YYYY'), cast('16/FEB/2012 02:26:00.363340 PM' as timestamp));
insert into PC_COVKEY_PD values ('10695337P3021MC0040032', '10695337P3', '021', '005', '09', to_date('29/NOV/2011','DD/MM/YYYY'), cast('16/FEB/2012 02:26:00.463340 PM' as timestamp));
insert into PC_COVKEY_PD values ('10695337P3021MC0050032', '10695337P3', '021', '005', '03', to_date('29/DEC/2011','DD/MM/YYYY'), cast('16/FEB/2012 02:26:00.563340 PM' as timestamp));
insert into PC_COVKEY_PD values ('10695337P3021MC0020012', '10695337P3', '021', '002', '03', to_date('30/DEC/2011','DD/MM/YYYY'), cast('16/FEB/2012 02:26:00.663340 PM' as timestamp));
commit;
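One possible reading of the rule table, sketched in Python rather than SQL: treat each transaction type as a +1, -1 or 0 delta and report, for each COV_CHG_EFF_DATE, the running sum of deltas through the end of that date. This is an assumption about the intended rule, not a confirmed answer; it uses the rows of the sample result table above (not the insert statements) and it does reproduce the Active_Cover_Count column shown there. The function and variable names are made up for the illustration.

```python
from datetime import date
from itertools import groupby

# Delta per transaction type, taken from the rule table in the question.
DELTA = {"01": 1, "02": 1, "06": 1, "07": 1, "09": -1, "10": -1, "03": 0}

# (coverage_number, transaction_type, cov_chg_eff_date) from the sample table.
sample = [
    ("01", "01", date(2011, 10, 22)), ("02", "01", date(2011, 10, 22)),
    ("04", "02", date(2011, 10, 23)), ("05", "02", date(2011, 10, 28)),
    ("05", "09", date(2011, 10, 30)), ("02", "09", date(2011, 10, 30)),
    ("05", "06", date(2011, 11, 3)),  ("05", "03", date(2011, 11, 3)),
    ("05", "09", date(2011, 11, 5)),  ("01", "09", date(2011, 11, 5)),
    ("04", "09", date(2011, 11, 5)),
]

def active_cover_counts(rows):
    """Return [(effective_date, active_count)] with one entry per date."""
    ordered = sorted(rows, key=lambda r: r[2])
    running, result = 0, []
    for eff_date, group in groupby(ordered, key=lambda r: r[2]):
        # All transactions sharing a date are applied before reporting it.
        running += sum(DELTA[tx_type] for _, tx_type, _ in group)
        result.append((eff_date, running))
    return result

counts = active_cover_counts(sample)
for eff_date, count in counts:
    print(eff_date, count)
```

If this reading is right, the SQL analogue would probably be an analytic SUM over a CASE expression that maps TRANSACTION_TYPE to its delta, ordered by COV_CHG_EFF_DATE, but I have not tested that against your table.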

-

Count distinct users logged in

Hello

I have a table that contains users and their login and logout times. I need to know the maximum number of distinct users logged in during any 15-minute period, for each day. The 15-minute periods start at 00:00 and end at 23:45.

WITH user_data AS
(SELECT 'USER1' user_id, TO_DATE('01-DEC-2010 11:57', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('01-DEC-2010 12:34', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('01-DEC-2010 12:18', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('01-DEC-2010 12:23', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('01-DEC-2010 12:41', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('01-DEC-2010 13:20', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('01-DEC-2010 13:22', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('01-DEC-2010 15:12', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('01-DEC-2010 13:25', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('01-DEC-2010 13:26', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER2' user_id, TO_DATE('01-DEC-2010 13:27', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('01-DEC-2010 13:30', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('01-DEC-2010 13:34', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('01-DEC-2010 15:08', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('01-DEC-2010 13:53', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('01-DEC-2010 16:38', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER3' user_id, TO_DATE('01-DEC-2010 14:00', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('22-FEB-2011 14:18', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('01-DEC-2010 14:14', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('01-DEC-2010 15:20', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('01-DEC-2010 14:15', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('01-DEC-2010 15:21', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('01-DEC-2010 14:23', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('01-DEC-2010 15:29', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('01-DEC-2010 14:30', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('01-DEC-2010 19:12', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('01-DEC-2010 14:36', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('01-DEC-2010 15:46', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('01-DEC-2010 14:39', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('01-DEC-2010 16:40', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('01-DEC-2010 14:44', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('01-DEC-2010 16:08', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('02-DEC-2010 09:10', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('02-DEC-2010 12:25', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('02-DEC-2010 09:52', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('02-DEC-2010 13:01', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('02-DEC-2010 10:03', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('02-DEC-2010 13:08', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('02-DEC-2010 10:37', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('02-DEC-2010 11:53', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER2' user_id, TO_DATE('02-DEC-2010 10:40', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('02-DEC-2010 12:01', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('02-DEC-2010 10:54', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('02-DEC-2010 10:59', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('02-DEC-2010 10:55', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('02-DEC-2010 11:02', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('02-DEC-2010 10:59', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('02-DEC-2010 11:00', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER2' user_id, TO_DATE('02-DEC-2010 11:00', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('02-DEC-2010 12:58', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('02-DEC-2010 11:20', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('02-DEC-2010 13:12', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('02-DEC-2010 11:45', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('02-DEC-2010 14:18', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('02-DEC-2010 11:53', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('02-DEC-2010 13:10', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('02-DEC-2010 11:57', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('02-DEC-2010 12:54', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('02-DEC-2010 12:01', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('02-DEC-2010 12:54', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('02-DEC-2010 12:09', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('02-DEC-2010 13:37', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER3' user_id, TO_DATE('02-DEC-2010 12:12', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('02-DEC-2010 12:13', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('02-DEC-2010 12:54', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('02-DEC-2010 15:58', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('02-DEC-2010 13:12', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('02-DEC-2010 13:19', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('02-DEC-2010 13:13', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('02-DEC-2010 15:11', 'DD-MON-YYYY HH24:MI') logout_date FROM dual UNION ALL
SELECT 'USER1' user_id, TO_DATE('02-DEC-2010 13:22', 'DD-MON-YYYY HH24:MI') login_date, TO_DATE('02-DEC-2010 13:50', 'DD-MON-YYYY HH24:MI') logout_date FROM dual),
pivs AS
(SELECT 0 piv_num FROM dual UNION ALL SELECT 1 piv_num FROM dual UNION ALL SELECT 2 piv_num FROM dual UNION ALL SELECT 3 piv_num FROM dual UNION ALL SELECT 4 piv_num FROM dual UNION ALL
SELECT 5 piv_num FROM dual UNION ALL SELECT 6 piv_num FROM dual UNION ALL SELECT 7 piv_num FROM dual UNION ALL SELECT 8 piv_num FROM dual UNION ALL SELECT 9 piv_num FROM dual UNION ALL
SELECT 10 piv_num FROM dual UNION ALL SELECT 11 piv_num FROM dual UNION ALL SELECT 12 piv_num FROM dual UNION ALL SELECT 13 piv_num FROM dual UNION ALL SELECT 14 piv_num FROM dual UNION ALL
SELECT 15 piv_num FROM dual UNION ALL SELECT 16 piv_num FROM dual UNION ALL SELECT 17 piv_num FROM dual UNION ALL SELECT 18 piv_num FROM dual UNION ALL SELECT 19 piv_num FROM dual UNION ALL
SELECT 20 piv_num FROM dual UNION ALL SELECT 21 piv_num FROM dual UNION ALL SELECT 22 piv_num FROM dual UNION ALL SELECT 23 piv_num FROM dual UNION ALL SELECT 24 piv_num FROM dual UNION ALL
SELECT 25 piv_num FROM dual UNION ALL SELECT 26 piv_num FROM dual UNION ALL SELECT 27 piv_num FROM dual UNION ALL SELECT 28 piv_num FROM dual UNION ALL SELECT 29 piv_num FROM dual UNION ALL
SELECT 30 piv_num FROM dual UNION ALL SELECT 31 piv_num FROM dual UNION ALL SELECT 32 piv_num FROM dual UNION ALL SELECT 33 piv_num FROM dual UNION ALL SELECT 34 piv_num FROM dual UNION ALL
SELECT 35 piv_num FROM dual UNION ALL SELECT 36 piv_num FROM dual UNION ALL SELECT 37 piv_num FROM dual UNION ALL SELECT 38 piv_num FROM dual UNION ALL SELECT 39 piv_num FROM dual UNION ALL
SELECT 40 piv_num FROM dual UNION ALL SELECT 41 piv_num FROM dual UNION ALL SELECT 42 piv_num FROM dual UNION ALL SELECT 43 piv_num FROM dual UNION ALL SELECT 44 piv_num FROM dual UNION ALL
SELECT 45 piv_num FROM dual UNION ALL SELECT 46 piv_num FROM dual UNION ALL SELECT 47 piv_num FROM dual UNION ALL SELECT 48 piv_num FROM dual UNION ALL SELECT 49 piv_num FROM dual UNION ALL
SELECT 50 piv_num FROM dual UNION ALL SELECT 51 piv_num FROM dual UNION ALL SELECT 52 piv_num FROM dual UNION ALL SELECT 53 piv_num FROM dual UNION ALL SELECT 54 piv_num FROM dual UNION ALL
SELECT 55 piv_num FROM dual UNION ALL SELECT 56 piv_num FROM dual UNION ALL SELECT 57 piv_num FROM dual UNION ALL SELECT 58 piv_num FROM dual UNION ALL SELECT 59 piv_num FROM dual UNION ALL
SELECT 60 piv_num FROM dual UNION ALL SELECT 61 piv_num FROM dual UNION ALL SELECT 62 piv_num FROM dual UNION ALL SELECT 63 piv_num FROM dual UNION ALL SELECT 64 piv_num FROM dual UNION ALL
SELECT 65 piv_num FROM dual UNION ALL SELECT 66 piv_num FROM dual UNION ALL SELECT 67 piv_num FROM dual UNION ALL SELECT 68 piv_num FROM dual UNION ALL SELECT 69 piv_num FROM dual UNION ALL
SELECT 70 piv_num FROM dual UNION ALL SELECT 71 piv_num FROM dual UNION ALL SELECT 72 piv_num FROM dual UNION ALL SELECT 73 piv_num FROM dual UNION ALL SELECT 74 piv_num FROM dual UNION ALL
SELECT 75 piv_num FROM dual UNION ALL SELECT 76 piv_num FROM dual UNION ALL SELECT 77 piv_num FROM dual UNION ALL SELECT 78 piv_num FROM dual UNION ALL SELECT 79 piv_num FROM dual UNION ALL
SELECT 80 piv_num FROM dual UNION ALL SELECT 81 piv_num FROM dual UNION ALL SELECT 82 piv_num FROM dual UNION ALL SELECT 83 piv_num FROM dual UNION ALL SELECT 84 piv_num FROM dual UNION ALL
SELECT 85 piv_num FROM dual UNION ALL SELECT 86 piv_num FROM dual UNION ALL SELECT 87 piv_num FROM dual UNION ALL SELECT 88 piv_num FROM dual UNION ALL SELECT 89 piv_num FROM dual UNION ALL
SELECT 90 piv_num FROM dual UNION ALL SELECT 91 piv_num FROM dual UNION ALL SELECT 92 piv_num FROM dual UNION ALL SELECT 93 piv_num FROM dual UNION ALL SELECT 94 piv_num FROM dual UNION ALL
SELECT 95 piv_num FROM dual UNION ALL SELECT 96 piv_num FROM dual UNION ALL SELECT 97 piv_num FROM dual UNION ALL SELECT 98 piv_num FROM dual UNION ALL SELECT 99 piv_num FROM dual)
SELECT s2.cdate, MAX(s2.user_count)
FROM (SELECT TO_CHAR(t.cdate, 'DD-MON-YYYY') cdate, SUM(LEAST(t.user_count, 1)) user_count
      FROM (SELECT TO_DATE('01-DEC-2010', 'DD-MON-YYYY') + p1.piv_num cdate,
                   TO_CHAR(TO_DATE((p2.piv_num * 15) * 60, 'SSSSS'), 'HH24:MI') time,
                   SUM(CASE WHEN TRUNC((TO_DATE('01-DEC-2010', 'DD-MON-YYYY') + p1.piv_num) + (p2.piv_num * 15)/1440, 'MI') BETWEEN s.login_date and s.logout_date THEN 1 ELSE 0 END) user_count
            FROM pivs p1, pivs p2, (SELECT user_id, login_date, logout_date FROM user_data) s
            WHERE p1.piv_num < 2 -- number of days I want to look at i.e. 01-DEC-2010 and 02-DEC-2010
            AND p2.piv_num < 96 -- number of 15 minute periods in a day i.e. 00:00 to 23:45
            GROUP BY p1.piv_num, p2.piv_num, s.user_id
            ORDER BY p1.piv_num, p2.piv_num) t
      GROUP BY t.cdate, t.time) s2
GROUP BY s2.cdate
ORDER BY TO_DATE(s2.cdate)
/

For the above test data, I expect to see:

Date        Users
----------- -----
01-DEC-2010 2
02-DEC-2010 3

The code above works, but I'm sure there must be a better way to do it; I just can't see how...

Lee

Hello

Here's one way:

WITH parameters AS
(
    SELECT TO_DATE ( '01-Dec-2010'   -- Starting time (included in output)
                   , 'DD-Mon-YYYY'
                   ) AS start_dt
    ,      TO_DATE ( '03-Dec-2010'   -- Ending time (NOT included in output)
                   , 'DD-Mon-YYYY'
                   ) AS end_dt
    ,      24 * 4 AS periods_per_day
    FROM   dual
)
, periods AS
(
    SELECT start_dt + ((LEVEL - 1) / periods_per_day) AS period_start
    ,      start_dt + ( LEVEL      / periods_per_day) AS period_end
    FROM   parameters
    CONNECT BY LEVEL <= (end_dt - start_dt) * periods_per_day
)
, got_cnt_per_period AS
(
    SELECT   p.period_start
    ,        COUNT (DISTINCT user_id) AS cnt
    ,        ROW_NUMBER () OVER ( PARTITION BY TRUNC (period_start)
                                  ORDER BY COUNT (DISTINCT user_id) DESC
                                  ,        period_start
                                ) AS rnk
    FROM     periods p
    JOIN     user_data u ON p.period_start <= u.logout_date
                        AND p.period_end   >  u.login_date
    GROUP BY p.period_start
)
SELECT   period_start
,        cnt
FROM     got_cnt_per_period
WHERE    rnk = 1
ORDER BY period_start
;

You will notice that it does not use the pivs table. Generating the time periods with a CONNECT BY query is likely to be more efficient, and you don't have to worry about having enough rows in the pivs table.

This way also avoids all conversions between DATEs and strings (except for entering the start_dt and end_dt parameters), which should save some time.

If you actually hard-code the parameters in the query, the above version will be easier to use and maintain, because each parameter only has to be entered once, in the first sub-query.

The output includes the start time of the period that had the highest count on each day. If there is a tie (as in this sample data, where there were 3 periods on December 1 that had a count of 2), then the period shown is the earliest one that day. If you want to see all of them, replace ROW_NUMBER with RANK in the last sub-query, got_cnt_per_period. Of course, you don't have to display the time if you don't want to.
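For anyone who wants to check the overlap logic outside the database, here is a rough Python sketch of the same idea: step through 15-minute periods, collect the distinct users whose session overlaps each period, and keep the earliest period with the highest count per day. The function name and the four sessions are only an illustration (the sessions are a small subset of the test data in the question), and the overlap test copies the join condition of the query above.

```python
from datetime import datetime, timedelta

def peak_distinct_users(sessions, start, days=1, minutes=15):
    """Return {date: (period_start, distinct_users)} for the busiest period of each day."""
    step = timedelta(minutes=minutes)
    end = start + timedelta(days=days)
    best = {}
    period_start = start
    while period_start < end:
        period_end = period_start + step
        # Same overlap test as the join: period_start <= logout AND period_end > login.
        users = {user for user, login, logout in sessions
                 if period_start <= logout and period_end > login}
        day = period_start.date()
        # Strict '>' keeps the earliest period when several periods tie.
        if users and (day not in best or len(users) > best[day][1]):
            best[day] = (period_start, len(users))
        period_start = period_end
    return best

sessions = [
    ("USER1", datetime(2010, 12, 1, 11, 57), datetime(2010, 12, 1, 12, 34)),
    ("USER1", datetime(2010, 12, 1, 12, 18), datetime(2010, 12, 1, 12, 23)),
    ("USER1", datetime(2010, 12, 1, 13, 22), datetime(2010, 12, 1, 15, 12)),
    ("USER2", datetime(2010, 12, 1, 13, 27), datetime(2010, 12, 1, 13, 30)),
]

peaks = peak_distinct_users(sessions, datetime(2010, 12, 1))
print(peaks)
```

Because the users are held in a set, several overlapping sessions by the same user count only once, which is what COUNT (DISTINCT user_id) does in the query.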

-

10g - how to get subtotals and a grand total

I need to generate a sales report by product and company with 3 columns of running totals. The columns are the gross sales amounts, the commissions earned and the net premiums (gross sales - commissions earned). As each item in the order is purchased, a record (transaction.transaction_id) is created and also inserted into the transaction_link table as an FK (transaction_link.trans_settle_id). When transaction_link has all the items needed to complete the order, the seller receives his commission and the totals are added to the report.

screwdrivers      gross premium   commission   net premium (gross - commission)
philips           1000            300          700
sears             500             200          300
                  ===             ===          ===
screwdriver ttl   1500            500          1000
power drills
dewalt            1000            300          700
makita            600             200          400
                  ===             ===          ===
power drill ttl   1600            500          1100
grand total       3100            1000         2100

Here are the tables:

provider - prov_id (pk)

PRODUCT_TYPE - prod_type_id (pk)

transaction - transaction_id (pk), amount, dealer_commission

transaction_link - trans_settle_id, trans_trade_id, trans_type

I'm using the WITH clause, which as I understand it is very efficient, but any other tips are welcome:

WITH getSettlementTransaction AS
(SELECT transaction_id
 FROM transaction trans
 WHERE trans.trans_type_id = 9
 AND trans.PROV_ID = 25
 AND trans.TRADE_DATE BETWEEN '1-SEP-05' AND TRUNC(SYSDATE)+1
),
getWireTransaction AS
(SELECT trans_trade_id, trans_settle_id, trans.prov_id, prov.prov_name, trans.amount, trans.dealer_commission, trans.prod_type, prod.product_type
 FROM transaction_link, transaction trans, provider prov, product_type prod
 WHERE trans_link_type_id = 3
 AND trans.transaction_id = transaction_link.trans_trade_id
 AND prov.PROV_ID = trans.PROV_ID
 AND trans.prod_type = prod.product_type_id
 AND trans.TRADE_DATE BETWEEN '1-SEP-05' AND TRUNC(SYSDATE)+1
)
SELECT prov_name, product_type, SUM(amount) gross_premium, SUM(dealer_commission) commission_earned, SUM(amount) - SUM(dealer_commission)
FROM getWireTransaction, getSettlementTransaction
WHERE trans_settle_id = transaction_id
GROUP BY prov_name, product_type;

          gross premium   commission   net premium (gross - commission)
Philips   1000            700          300
Sears     500             200          300

But it doesn't give me the totals for each vendor, and it doesn't include the totals I need.

Any guidance would be appreciated. Thank you.

Hello

Achtung wrote:

It's great! Is it possible to format it like this?

DEPTNO:10
ENAME           SAL
--------------- ----------
CLARK           2450
KING            5000
MILLER          1300
Dept. Total     8750
DEPTNO:20
ADAMS           1100
FORD            3000
JONES           2975
SCOTT           3000
SMITH           800
Dept. Total     10875
DEPTNO:30
ALLEN           1600
BLAKE           2850
JAMES           950
MARTIN          1250
TURNER          1500
WARD            1250
Dept. Total     9400
Grand Total     29025

I don't know how to put a row like "DEPTNO:10" before the header. Maybe that was just a typo.

GROUP BY ROLLUP (or GROUP BY GROUPING SETS) will produce one super-aggregate row (such as 'Dept. Total 9400') for each distinct value of the rolled-up column. It looks like you want two such rows: one at the beginning of each department ("DEPTNO:30") and the other at the end ("Dept. Total 9400"). I don't know if you can get that in a single sub-query with GROUP BY. You can certainly get it with UNION.

WITH union_results AS
(
    -- This branch of the UNION produces the header row for each department
    -- (and also the blank row before the grand total)
    --
    SELECT deptno AS raw_deptno
    ,      CASE
               WHEN GROUPING (deptno) = 0
               THEN 'DEPTNO: ' || deptno
           END AS display_deptno
    ,      NULL AS ename
    ,      NULL AS sal
    ,      1 AS group_num
    FROM   scott.emp
    GROUP BY ROLLUP (deptno)
    --
    UNION ALL
    --
    -- This branch of the UNION produces the rows for each employee, and the "Total" rows
    --
    SELECT deptno AS raw_deptno
    ,      NULL AS display_deptno
    ,      CASE
               WHEN GROUPING (deptno) = 1 THEN ' Grand Total'
               WHEN GROUPING (ename) = 1 THEN ' Dept. Total'
               ELSE ename
           END AS ename
    ,      SUM (sal) AS sal
    ,      CASE
               WHEN GROUPING (ename) = 0 THEN 2
               ELSE 3
           END AS group_num
    FROM   scott.emp
    GROUP BY ROLLUP (deptno, ename)
)
SELECT display_deptno AS deptno
,      ename
,      sal
--,    group_num
FROM   union_results
ORDER BY raw_deptno
,        group_num
,        ename
;

Results:

DEPTNO       ENAME           SAL
------------ --------------- ----------
DEPTNO: 10
             CLARK           2450
             KING            5000
             MILLER          1300
              Dept. Total    8750
DEPTNO: 20
             ADAMS           1100
             FORD            3000
             JONES           2975
             SCOTT           3000
             SMITH           800
              Dept. Total    10875
DEPTNO: 30
             ALLEN           1600
             BLAKE           2850
             JAMES           950
             MARTIN          1250
             TURNER          1500
             WARD            1250
              Dept. Total    9400

              Grand Total    29025

The sub-query is needed only to hide some of the ORDER BY columns.

You can uncomment the group_num column in the SELECT clause to see how it works.

-

How to count distinct while excluding a value in the business layer?

Hi all

I have a column that has a lot of values. I need to create a measure with the Count Distinct aggregation rule, but it should not count 0 in the column. How can I do that? If I try to use any kind of condition, the aggregation option is disabled. Please help.

Thank you

Look at this example:

I created a measure in the BMM SALES table called:

Count_Distinct_Prod_Id_Exclude_Prod_Id_144

It counts distinct PRODUCTS.PROD_ID, but excludes PROD_ID = 144 when counting.
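The trick behind this measure is that COUNT(DISTINCT ...) ignores NULLs, so mapping the unwanted value to NULL removes it from the count. Here is a small sketch of that behaviour using SQLite from Python; the products rows are made up for the illustration and have nothing to do with the real SH schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE products (prod_id INTEGER, prod_category_id INTEGER);
INSERT INTO products VALUES
  (143, 1), (144, 1), (145, 1),
  (144, 2), (146, 2), (146, 2);
""")

# PROD_ID 144 becomes NULL inside the CASE, and COUNT(DISTINCT ...) skips NULLs.
rows = conn.execute("""
SELECT prod_category_id,
       COUNT(DISTINCT CASE WHEN prod_id = 144 THEN NULL ELSE prod_id END) AS cnt
FROM products
GROUP BY prod_category_id
ORDER BY prod_category_id
""").fetchall()

print(rows)
```

The same CASE expression works for excluding 0: replace 144 with 0 in the condition.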

Create this measure as follows:

1. Create a new logical column.

2. Go to the Data Type tab and click EDIT on the logical table source.

3. In the General tab, add the joined table (in my case PRODUCTS).

4. Go to the Column Mapping tab and show the unmapped columns.

5. For the new column (in my case Count_Distinct_Prod_Id_Exclude_Prod_Id_144) write code similar to:

CASE WHEN "orcl".""."SH"."PRODUCTS"."PROD_ID" = 144 THEN NULL ELSE "orcl".""."SH"."PRODUCTS"."PROD_ID" END

6. Click OK and close the logical table source.

7. In the logical column window, go to the Aggregation tab and choose COUNT DISTINCT.

8. Move the Count_Distinct_Prod_Id_Exclude_Prod_Id_144 measure to the presentation layer.

9. Test in Answers (the report contains the following columns):

PROD_CATEGORY_ID

Count_Distinct_Prod_Id_Exclude_Prod_Id_144

And the result in NQQuery.log is:

select T21473.PROD_CATEGORY_ID as c1,
count(distinct case when T21473.PROD_ID = 144 then NULL else T21473.PROD_ID end) as c2
from
PRODUCTS T21473
group by T21473.PROD_CATEGORY_ID
order by c1

Regards

Goran

http://108obiee.blogspot.com

-

COUNT (DISTINCT <column_name>)

I would like to display the COUNT (DISTINCT <company>) at the end of the report. I use a SQL query as the data set / data model. Thanks in advance.

NEW YORK CITY

JANUARY 1, 2008

Company  ID  DIFF
ABC      1   -1
XYZ      2   2
ABC      3   -1

FEBRUARY 1, 2008

Company  ID  DIFF
MNO      1   -1
XYZ      2   2
MNO      3   -1

Total number of companies: 3

Use this

-

I have a short 3D video with a separate black/white alpha matte pass. How do I use the alpha pass as a mask? I want to add a heat-wave distortion to the entire video, but not in the alpha pass area. How do I set this up?

Matte:

Start here to learn After Effects:

-

min() with count equal to or greater than 2

I would like to find the IDs that had a load of *2 or more* units during their FIRST term.

A unit is *determined by combining the SUB and SUBNO columns (SUB || SUBNO)*,

and the STAT must be *Present*.

Expected result:

ID 1 has 3 units and its only term is 1101, which is also the first term (min), so it would be selected.

ID 2 has 2 units, so it passes that criterion, but one status is Deleted, so it would not be selected.

ID 3 has 3 units, but only 1 unit was in the first term, so it would not be selected, as the requirement is 2 or more units in the FIRST term.

Using: Oracle Database 10g Enterprise Edition Release 10.2.0.5.0 - 64bi

CREATE TABLE DAN_SSK (ID VARCHAR2(8), SUB VARCHAR2(8), SUBNO VARCHAR2(8), TERM VARCHAR2(8), STAT VARCHAR2(8));
INSERT INTO DAN_SSK (ID, SUB, SUBNO, TERM, STAT) VALUES (1,'LOP','11','1101','Present');
INSERT INTO DAN_SSK (ID, SUB, SUBNO, TERM, STAT) VALUES (1,'KIL','20','1101','Present');
INSERT INTO DAN_SSK (ID, SUB, SUBNO, TERM, STAT) VALUES (1,'OPP','58','1101','Present');
INSERT INTO DAN_SSK (ID, SUB, SUBNO, TERM, STAT) VALUES (2,'PPP','78','1102','Present');
INSERT INTO DAN_SSK (ID, SUB, SUBNO, TERM, STAT) VALUES (2,'UIN','99','1102','Deleted');
INSERT INTO DAN_SSK (ID, SUB, SUBNO, TERM, STAT) VALUES (3,'SSS','32','1106','Present');
INSERT INTO DAN_SSK (ID, SUB, SUBNO, TERM, STAT) VALUES (3,'PIP','88','1112','Present');
INSERT INTO DAN_SSK (ID, SUB, SUBNO, TERM, STAT) VALUES (3,'KIL','41','1112','Present');

Thanks for any help.

SELECT ID, COUNT(DISTINCT SUB || SUBNO)
FROM dan_ssk
WHERE (ID, TERM) IN (SELECT ID, TERM
                     FROM (SELECT ID, MIN(term) TERM
                           FROM dan_ssk
                           GROUP BY ID))
AND stat = 'Present'
GROUP BY ID
HAVING COUNT(DISTINCT SUB || SUBNO) > 1;

ID       COUNT(DISTINCTSUB||SUBNO)
-------- -------------------------
1                                3

-
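The query above can be tried without an Oracle instance. The following is only a sketch that ports the same idea to Python's built-in sqlite3 module: it joins each ID to its minimum TERM instead of using the tuple IN form, and the function name and the min_units parameter are illustrative assumptions, not part of the original answer. Like the original query, it filters out non-Present rows rather than rejecting an ID outright for having a Deleted row; on this sample data both readings give the same result.

```python
import sqlite3

# Same sample rows as in the post: (id, sub, subno, term, stat)
ROWS = [
    ("1", "LOP", "11", "1101", "Present"),
    ("1", "KIL", "20", "1101", "Present"),
    ("1", "OPP", "58", "1101", "Present"),
    ("2", "PPP", "78", "1102", "Present"),
    ("2", "UIN", "99", "1102", "Deleted"),
    ("3", "SSS", "32", "1106", "Present"),
    ("3", "PIP", "88", "1112", "Present"),
    ("3", "KIL", "41", "1112", "Present"),
]


def ids_with_units_in_first_term(rows, min_units=2):
    """Return (id, unit_count) for IDs with at least min_units Present units
    in their first (minimum) term."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE dan_ssk (id TEXT, sub TEXT, subno TEXT, term TEXT, stat TEXT)"
    )
    conn.executemany("INSERT INTO dan_ssk VALUES (?, ?, ?, ?, ?)", rows)
    query = """
        SELECT d.id, COUNT(DISTINCT d.sub || d.subno) AS units
        FROM dan_ssk d
        JOIN (SELECT id, MIN(term) AS first_term
              FROM dan_ssk
              GROUP BY id) f
          ON f.id = d.id AND f.first_term = d.term
        WHERE d.stat = 'Present'
        GROUP BY d.id
        HAVING COUNT(DISTINCT d.sub || d.subno) >= ?
        ORDER BY d.id
    """
    result = conn.execute(query, (min_units,)).fetchall()
    conn.close()
    return result


print(ids_with_units_in_first_term(ROWS))
```

Only ID 1 qualifies: ID 2 has a single Present unit in its first term, and ID 3 has a single unit in term 1106.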

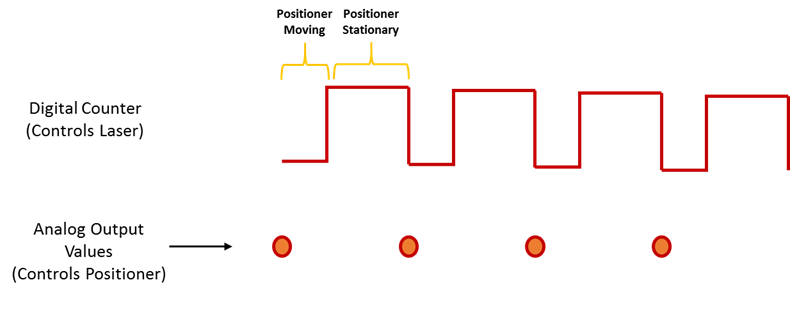

Analog output with counter Falling Edge

Hi all

Here's the image that describes what I want to accomplish. I would like to trigger the AO output on the falling edge of the counter.

I have set the counter as the sample clock for my AO.

The analog output should be updated whenever the counter's digital signal falls.

SAMPLE_SIZE = 80
SAMPLING_RATE = 40  # Samples are written every 25 milliseconds
TIME = float((SAMPLE_SIZE) / (SAMPLING_RATE))

# CREATE TASKS
# CREATE AO CHANNELS
# CONFIGURE THE TIMING OF THE CHANNELS
DAQmxCfgSampClkTiming(taskHandleAO, "PFI12", SAMPLING_RATE, DAQmx_Val_Falling, DAQmx_Val_FiniteSamps, SAMPLE_SIZE)

# CREATE TASKS
# CREATE A COUNTER CHANNEL
# High time + low time equals 25 milliseconds, matching the sampling rate
DAQmxCreateCOPulseChanTime(taskHandleD, "DAQ/ctr0", "", DAQmx_Val_Seconds, DAQmx_Val_Low, 0.00, 0.005, 0.020)

# DAQmx write the voltage values
DAQmxWriteAnalogF64(taskHandleAO, SAMPLE_SIZE, 0, 10.0, DAQmx_Val_GroupByChannel, Voltage, None, None)

# DAQmx AO task start
DAQmxStartTask(taskHandleAO)

# DAQmx counter task start
DAQmxStartTask(taskHandleD)

# TIME is equal to the total time for writing the samples
DAQmxWaitUntilTaskDone(taskHandleD, 2 * TIME)

I get an error every time I run the task:

DAQError: Finite acquisition or generation has been stopped before the requested number of samples were acquired or generated.

in function DAQmxStopTask

That is because my AO task is stopped for some reason.

Is there an obvious problem with the code? Can it be structured differently?

best regards,

Ravi

I do all my programming in LabVIEW, so I'm pretty limited in how much I can help with text-based programming syntax. That being said, here's what I *think* I see:

Your AO task makes a call to DAQmxCfgSampClkTiming, but your counter task does not. This probably leaves you with a counter task that generates only a single pulse, which causes only a single AO D/A conversion. In LabVIEW, when I need a pulse train, I call a similar timing function with the clock mode set to 'Implicit'.

Hope this helps you get started. I don't know enough to give you the specific syntax in a text language.

-Kevin P

-

Display total records with the last record

Hi all

I have the following tables:

create table TRY_date_detail
(
item_date date,
item_order number
);

create table TRY_date_master
(
master_date date,
date_amount number
);

insert all
into TRY_date_detail values (to_date('01-01-2011','DD-MM-YYYY'), 1)
into TRY_date_detail values (to_date('01-01-2011','DD-MM-YYYY'), 2)
into TRY_date_detail values (to_date('01-01-2011','DD-MM-YYYY'), 3)
into TRY_date_detail values (to_date('01-01-2011','DD-MM-YYYY'), 4)
into TRY_date_detail values (to_date('01-01-2011','DD-MM-YYYY'), 5)
into TRY_date_master values (to_date('01-01-2011','DD-MM-YYYY'), 6432)
into TRY_date_master values (to_date('01-01-2011','DD-MM-YYYY'), 1111)
into TRY_date_detail values (to_date('11-08-2012','DD-MM-YYYY'), 11)
into TRY_date_detail values (to_date('11-08-2012','DD-MM-YYYY'), 12)
into TRY_date_detail values (to_date('11-08-2012','DD-MM-YYYY'), 13)
into TRY_date_detail values (to_date('11-08-2012','DD-MM-YYYY'), 14)
into TRY_date_master values (to_date('11-08-2012','DD-MM-YYYY'), 8913)
into TRY_date_detail values (to_date('09-03-2014','DD-MM-YYYY'), 21)
into TRY_date_detail values (to_date('09-03-2014','DD-MM-YYYY'), 22)
into TRY_date_detail values (to_date('09-03-2014','DD-MM-YYYY'), 23)
into TRY_date_master values (to_date('09-03-2014','DD-MM-YYYY'), 1234)
into TRY_date_detail values (to_date('11-03-2014','DD-MM-YYYY'), 33)
select * from dual;

-- My query is:

SELECT ITEM_DATE, ITEM_ORDER, NULL DATE_AMOUNT
FROM TRY_date_detail
WHERE ITEM_DATE IN (SELECT DISTINCT master_date FROM TRY_date_master)
UNION
SELECT MASTER_DATE, NULL, SUM(DATE_AMOUNT)
FROM TRY_DATE_MASTER
GROUP BY MASTER_DATE;

-- My query result:

ITEM_DATE                  ITEM_ORDER  DATE_AMOUNT
January 1, 11 12:00:00 AM  1           (null)
January 1, 11 12:00:00 AM  2           (null)
January 1, 11 12:00:00 AM  3           (null)
January 1, 11 12:00:00 AM  4           (null)
January 1, 11 12:00:00 AM  5           (null)
January 1, 11 12:00:00 AM  (null)      7543
11 Aug 12 12:00:00 AM      11          (null)
11 Aug 12 12:00:00 AM      12          (null)
11 Aug 12 12:00:00 AM      13          (null)
11 Aug 12 12:00:00 AM      14          (null)
11 Aug 12 12:00:00 AM      (null)      8913
9 March 14 12:00:00 AM     21          (null)
9 March 14 12:00:00 AM     22          (null)
9 March 14 12:00:00 AM     23          (null)
9 March 14 12:00:00 AM     (null)      1234

-- What I need is to display DATE_AMOUNT on the last row of each date, as follows:

ITEM_DATE                  ITEM_ORDER  DATE_AMOUNT
January 1, 11 12:00:00 AM  1           (null)
January 1, 11 12:00:00 AM  2           (null)
January 1, 11 12:00:00 AM  3           (null)
January 1, 11 12:00:00 AM  4           (null)
January 1, 11 12:00:00 AM  5           7543
11 Aug 12 12:00:00 AM      11          (null)
11 Aug 12 12:00:00 AM      12          (null)
11 Aug 12 12:00:00 AM      13          (null)
11 Aug 12 12:00:00 AM      14          8913
9 March 14 12:00:00 AM     21          (null)
9 March 14 12:00:00 AM     22          (null)
9 March 14 12:00:00 AM     23          1234

Thank you

Ferro

You have to JOIN the two tables:

select item_date
     , item_order
     , decode(rno, cnt, date_amount) date_amount
  from (
        select item_date
             , item_order
             , date_amount
             , row_number() over(partition by item_date order by item_order) rno
             , count(*) over(partition by item_date) cnt
          from try_date_detail
          join (
                select master_date, sum(date_amount) date_amount
                  from try_date_master
                 group
                    by master_date
               )
            on item_date = master_date
       );

ITEM_DATE           ITEM_ORDER DATE_AMOUNT
------------------- ---------- -----------
01/01/2011 00:00:00          1
01/01/2011 00:00:00          2
01/01/2011 00:00:00          3
01/01/2011 00:00:00          4
01/01/2011 00:00:00          5        7543
11/08/2012 00:00:00         11
11/08/2012 00:00:00         12
11/08/2012 00:00:00         13
11/08/2012 00:00:00         14        8913
09/03/2014 00:00:00         21
09/03/2014 00:00:00         22
09/03/2014 00:00:00         23        1234

12 rows selected.
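The same row_number()/count(*) comparison can be sketched outside Oracle. The example below is an approximation in Python's built-in sqlite3 module, which assumes the bundled SQLite is version 3.25 or later (needed for window functions). It replaces Oracle's decode with a CASE expression, stores dates as ISO text, and adds an explicit ORDER BY; the function name is hypothetical.

```python
import sqlite3

# Sample data from the post, with dates stored as ISO text for SQLite.
DETAIL = (
    [("2011-01-01", n) for n in (1, 2, 3, 4, 5)]
    + [("2012-08-11", n) for n in (11, 12, 13, 14)]
    + [("2014-03-09", n) for n in (21, 22, 23)]
    + [("2014-03-11", 33)]
)
MASTER = [
    ("2011-01-01", 6432),
    ("2011-01-01", 1111),
    ("2012-08-11", 8913),
    ("2014-03-09", 1234),
]


def detail_with_total_on_last_row(detail, master):
    """Return detail rows; only the last row of each date carries the total."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE try_date_detail (item_date TEXT, item_order INTEGER)")
    conn.execute("CREATE TABLE try_date_master (master_date TEXT, date_amount INTEGER)")
    conn.executemany("INSERT INTO try_date_detail VALUES (?, ?)", detail)
    conn.executemany("INSERT INTO try_date_master VALUES (?, ?)", master)
    query = """
        SELECT item_date, item_order,
               CASE WHEN rno = cnt THEN date_amount END AS date_amount
        FROM (
            SELECT d.item_date AS item_date,
                   d.item_order AS item_order,
                   m.date_amount AS date_amount,
                   ROW_NUMBER() OVER (PARTITION BY d.item_date
                                      ORDER BY d.item_order) AS rno,
                   COUNT(*) OVER (PARTITION BY d.item_date) AS cnt
            FROM try_date_detail d
            JOIN (SELECT master_date, SUM(date_amount) AS date_amount
                  FROM try_date_master
                  GROUP BY master_date) m
              ON d.item_date = m.master_date
        )
        ORDER BY item_date, item_order
    """
    result = conn.execute(query).fetchall()
    conn.close()
    return result


for row in detail_with_total_on_last_row(DETAIL, MASTER):
    print(row)
```

The row for 11-03-2014 drops out because the inner join finds no matching master date, which matches the 12 rows returned above.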

-

Hello world

I read an article about a stickiness metric (http://www.seomoz.org/blog/tracking-browse-rate-a-cool-stickiness-metric). It's basically a way to measure how well you attract people to your Web site.

Stickiness for the last 7 days: (count of distinct users today) / (count of distinct users for the last 7 days)

Stickiness for the last 30 days: (count of distinct users today) / (count of distinct users for the last 30 days)

I have a table called WC_WEB_VISITS_F that is at the grain of a user's visit to one of my web sites.

Is there a way I can use OBIEE to calculate the values above?

I can get the numerator. I create a logical column called Count of Distinct Daily Users, set its aggregation to Count Distinct on USER_ID, and then pivot my report by visit date.

I can't figure out how to do a Count Distinct over the last X days. If it were a distinct count for the current month, I could just copy the Count of Distinct Daily Users column and set its content level to Month; however, because it is a rolling range, I can't do that.

Does anyone have a clever idea for this?

Thank you!

-Joe

11g has the PERIODROLLING function, which is the answer to all these requirements. The PERIODROLLING function lets you perform an aggregation across a specified set of query grain periods, rather than within a fixed time series grain. The most common use is to create rolling averages, such as a "13-week rolling average." With 10g, all we get is limited functionality through workarounds...
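To make the arithmetic of the metric concrete, here is a small sketch in plain Python. It is not OBIEE syntax and does not use PERIODROLLING; the function name, the (visit_date, user_id) tuple layout, and the sample visits are all assumptions for illustration. It also shows why a rolling distinct count cannot be obtained by adding up daily distinct counts: a user who visits on several days must be counted once for the whole window.

```python
from datetime import date, timedelta


def stickiness(visits, day, window_days):
    """Distinct users on `day` divided by distinct users in the window of
    `window_days` days ending on `day` (inclusive).

    visits: list of (visit_date, user_id) tuples, one per visit.
    Returns None when nobody visited during the window.
    """
    window_start = day - timedelta(days=window_days - 1)
    users_today = {user for visit_date, user in visits if visit_date == day}
    users_in_window = {
        user for visit_date, user in visits if window_start <= visit_date <= day
    }
    if not users_in_window:
        return None
    return len(users_today) / len(users_in_window)


# Hypothetical visit rows
VISITS = [
    (date(2024, 1, 1), "a"),
    (date(2024, 1, 1), "b"),
    (date(2024, 1, 3), "a"),
    (date(2024, 1, 7), "a"),
    (date(2024, 1, 7), "c"),
    (date(2024, 1, 7), "c"),
]

print(stickiness(VISITS, date(2024, 1, 7), 7))
```

Here 2 distinct users visited on the last day and 3 distinct users visited during the 7-day window, so the ratio is 2/3, even though the daily distinct counts sum to 5.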

-

Updated to Firefox 18.0 on a MacBook running Mac OS 10.6. Non-static areas of the window (URL and search boxes, main window area, etc.) now display incorrectly, similar to what happens when a program thinks an image is (for example) 500 pixels wide when it is actually 512 pixels wide. Each line after the first begins with what should still be part of the line above, resulting in an image that appears slanted, with what should be the right side of the image progressively appearing further to the left. No other programs (including Safari) seem to have this problem, and it appeared immediately after the upgrade. Minimizing the window to the Dock and then reopening it temporarily solves the problem. As soon as I scroll the window, the problem reappears.

Have you tried disabling hardware acceleration?

- Firefox > Preferences > Advanced > General > Browsing: "Use hardware acceleration when available"

- https://support.Mozilla.org/KB/troubleshooting+extensions+and+themes

-

Determining direction HALL with counter

Hello

I need to acquire two signals from a HALL sensor on a test system where a motor is running. The sensor outputs two square-wave signals. I need to determine two things:

1.) the number of pulses (angle counting)

2.) the direction of rotation.

Counting pulses is very simple with two counter inputs, for example with a PXI-6602. But what is the right way to determine the direction of rotation (phase shift between the two signals: +90° = CW; -90° = CCW)?

Any help is welcome!

You're essentially talking about a rotary encoder. DAQmx has a counter input angular encoder option that already performs the decoding for you. The two sensor signals (A and B) are wired to the inputs of a counter, and the pulses are counted and then scaled. In addition, the CI encoder count goes up or down depending on whether A leads B or vice versa.

http://www.NI.com/gettingstarted/setuphardware/dataacquisition/quadratureencoders.htm

https://decibel.NI.com/content/docs/doc-17346

If you are using an FPGA device instead, there are a number of examples that make it easy to write your own decoder from a pair of digital inputs (plus a Z reference if you wish).
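For readers who want to see what such a decoder does, here is a minimal software sketch of quadrature (X4) decoding in Python. It is not DAQmx or FPGA code; the function name, the lookup table layout, and the choice that "A leads B" counts upward are assumptions, and whether the positive direction corresponds to CW or CCW depends on how the sensor is mounted and wired.

```python
# Lookup table indexed by (previous_state << 2) | current_state,
# where state = (A << 1) | B.
# +1: one step in the "A leads B" direction, -1: one step the other way,
#  0: no change, or an invalid jump where both signals changed at once.
_STEP = [
    0, -1, +1, 0,   # previous state 00
    +1, 0, 0, -1,   # previous state 01
    -1, 0, 0, +1,   # previous state 10
    0, +1, -1, 0,   # previous state 11
]


def decode_quadrature(samples):
    """Return the signed edge count for a sequence of (A, B) samples (0 or 1).

    Every valid edge on A or B contributes one count, so a full signal
    period yields 4 counts. The sign of the result gives the direction.
    """
    count = 0
    previous = None
    for a, b in samples:
        state = (a << 1) | b
        if previous is not None:
            count += _STEP[(previous << 2) | state]
        previous = state
    return count


# One full period with A leading B, then the same period played backwards.
FORWARD = [(0, 0), (1, 0), (1, 1), (0, 1), (0, 0)]
BACKWARD = list(reversed(FORWARD))

print(decode_quadrature(FORWARD), decode_quadrature(BACKWARD))
```

The pulse count is the absolute value of the result, and the direction is its sign, which covers both requirements in the question with a single counter.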
