Write-behind batching behavior with EntryProcessor partition-level transactions

Hello

We use EntryProcessors to perform updates on several entities stored in the same cache partition. According to the documentation, Coherence manages all updates in a 'sandbox' and then atomically commits them to the backing map.

The question is: when using write-behind, does Coherence guarantee that all entries updated in the same "partition-level transaction" will be present in the same storeAll() operation?

Once again, according to the documentation, the behavior of the write-behind thread is this:

- The thread waits for a queued entry to become ripe.

- When an entry becomes ripe, the thread dequeues all ripe and soft-ripe entries in the queue.

- The thread then writes all ripe and soft-ripe entries via store() (if there is only a single ripe entry) or storeAll() (if there are multiple ripe/soft-ripe entries).

- The thread then repeats from step (1).

If all the entries updated in the same partition-level transaction become ripe or soft-ripe at the same time, they will all be present in the storeAll() operation. If they do not become ripe/soft-ripe at the same time, they may not all be present.

So everything depends on how the partition-level transaction is committed: if all its entries are timestamped identically for the update, they will all become ripe at the same time.
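The dequeue rule quoted above can be sketched as a small simulation. This is plain Python, not Coherence code: the names `QueuedEntry`, `drain` and `write`, and the idea of modelling "soft-ripe" as "ripe within `soft_window` seconds", are assumptions made only for illustration.

```python
from dataclasses import dataclass


@dataclass
class QueuedEntry:
    key: str
    ripe_at: float  # time at which the entry becomes ripe (hypothetical model)


def drain(queue, now, soft_window):
    """Remove and return the entries that one write-behind pass would write.

    Nothing is dequeued until at least one entry is ripe. Once one is, all
    ripe entries and all soft-ripe entries (those that would become ripe
    within `soft_window` seconds) are dequeued together.
    """
    if not any(e.ripe_at <= now for e in queue):
        return []
    batch = [e for e in queue if e.ripe_at - soft_window <= now]
    for e in batch:
        queue.remove(e)
    return batch


def write(batch, store, store_all):
    """Call store() for a single entry and store_all() for several."""
    if len(batch) == 1:
        store(batch[0].key)
    elif batch:
        store_all([e.key for e in batch])
```

In this model, two entries queued with the same `ripe_at` always land in the same batch, while an entry whose `ripe_at` falls outside the soft-ripe window is left for a later pass. That is exactly the dependency on commit timestamps described in the question.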

Does anyone know what behavior we can expect in this regard?

Thank you.

Hello

This comment applies to 3.7.1. I expect the same holds for 12.1, but I have not checked yet.

There is no contract between "transactions level partition" and cache store.

In practice:

- In the write-through case, storeAll() is never called; updated entries are passed to store() one by one.

- In the write-behind case, it works as documented (as you cited), without any regard for "partition-level transaction" boundaries.

Kind regards

Alexey

Tags: Fusion Middleware

Similar Questions

-

Confirming write-behind batching behavior

The scenario is as follows. The cache is configured with a write-behind delay of 10 seconds. An entry is inserted into the cache. For some reason the DB is slow, and when the cache store tries to flush this entry to disk it takes 1 minute. During this period, the same entry is updated in the cache twice, and these updates are more than 10 seconds apart.

Do these two later updates get coalesced into a single store operation in the cache store? Or is the cache store asked to store both versions of the entry, because the updates were further apart than the write-behind delay?

Thank you

Hi user628574.

Consecutive puts will indeed be gathered in a single 'store' operation.
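A toy model of that coalescing, as a rough sketch only: it is plain Python rather than Coherence code, the class and method names are hypothetical, and it ignores timing and the in-flight store entirely. It shows only that a pending key keeps its latest value, so the store sees one write.

```python
class WriteBehindQueue:
    """Hypothetical model: at most one pending value per key."""

    def __init__(self):
        self._pending = {}  # key -> latest value not yet written

    def put(self, key, value):
        # A later put on a key that is still pending replaces the older value.
        self._pending[key] = value

    def flush(self, store):
        """Write every pending entry once and return how many were written."""
        written = list(self._pending.items())
        self._pending.clear()
        for key, value in written:
            store(key, value)
        return len(written)
```

With two puts on the same key before a flush, `store` is called once with the second value.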

Kind regards

Gene

-

Access a view of Table with DAL | Documaker 12.2 - ODBC

Hello

We want to access a view using DAL during batch printing to retrieve entity information. This information would go on a separator page in batch (paper) print.

1. Is this (accessing a VIEW) even possible?

2. We see DB functions in the documentation. I think that we need an ODBC connection and a DB handler for the view.

We would be grateful if someone could guide us through the required process.

Thank you!

Hello

Yes, it is possible, provided the machines where you run Documaker have access to the database and the necessary drivers are configured correctly. You don't mention which version of Documaker you run or on which platform. DAL documentation is available for different versions:

The process is generally the same across Documaker Standard Edition versions:

(1) Define a DBHandler in the INI settings

(2) Implement the DAL functions for the DB operations

The doc for 11.5 begins on page 43 of the above link and describes creating a DBHandler for different types of files and databases. You are not limited to ODBC, so check the documentation for your version of Documaker; some options are DB2, ODBC, SQL Server, Excel files, etc. Once you have created the DBHandler entry, you must define the DBTable that describes the VIEW you will access. The DBTable has, as one of its options, the name of the DBHandler that must be used for access. Then you create a DFD that defines the structure of the view (or table): columns, data types and lengths. Most of this is detailed in the same document, although information on creating a DFD is located in other documents. You probably already have examples of DFD files; otherwise they are available in the documentation for the Documaker version you are using. For 11.5, check the Documaker Studio guide.

After you have defined the DBHandler and the DBTable with its associated DFD, you must then implement the code. You will probably want to open and close the connection at the beginning and end of the run, or at the batch level, so that you do not adversely affect performance. You will implement the query at the same level as the separator page (which means that if you build separator pages in a PostTransactionDAL, you will do the query there as well).

Example configuration

< DBTable:MYVIEW >

DBHandler = MYHANDLER

DFD = c:\deflib\view.dfd

< DBHandler:MYHANDLER >

Install = SQW32->SQInstallHandler

Server = myserver

User = documaker

Passwd = ~ENCRYPTED xxxx

Note: remember to create the appropriate DFD!

Code example - open/close

* Open the connection to the database. Do this at batch or run level, before you process transactions.

* Open the DBTable called MYVIEW using the DBHandler set in the DBTable for MYVIEW. Optionally, you can specify a particular handler in the DBOpen call below.

rc = DBOpen("MYVIEW",,"c:\fap\mstrres\run\deflib\view.dfd","READ");

* manage return codes...

* Close the connection when finished. This should be done at the end of all transaction processing.

rc = DBClose("MYVIEW");

Code example - query

* Prepare the query variables. Variables are prefixed with "REC".

rc = DBPrepVars("MYVIEW","REC");

* manage the return code...

* Find the value and use it.

* Query parameters are column/value pairs [,...], shown here as COL1 = Value1, COL2 = Value2. Replace these with the actual column names and values.

* Note that DBFind accesses the FIRST record that matches the specified criteria.

rc = DBFind("MYVIEW","REC","COL1","Value1","COL2","Value2");

* You can substitute DAL variables here, so if you have a DAL variable containing an entity name or similar:

* rc = DBFind("MYVIEW","REC","PERSON_NAME",entityName);

* manage return codes...

* Data access

* Use the variable prefix created in DBPrepVars (in this case, "REC"). The column names are those defined in the DFD. Example:

print_it(REC.SOMECOLUMNNAME);

Other Options

You can also loop through the records by using the DBFirstRec and DBNextRec functions and evaluate each record to determine whether it meets your criteria. However, it is recommended to use DBFind and get the exact record you need (and let the database do the work of locating the right record for you).

Example:

rc = DBPrepVars("MYVIEW", "REC");

rc = DBFirstRec("MYVIEW", "REC");

WHILE (rc = 1)

* evaluate the data using REC.columnname

* for example IF (REC.Col1 = 'Value1')...

* some code...

* If we have located the record that we need, drop out of the loop

BREAK;

* bottom of loop.

rc = DBNextRec("MYVIEW", "REC");

WEND

* more code...

Hope this helps,

Andy

-

Hello

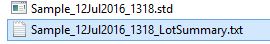

How can I add a lot ID (which is entered in Configure Lot Settings) to the names of the lot summary and STDF report files generated by the semiconductor test module?

Currently the default name is shown in the excerpt below

Thank you

Rovi

Hi Rovi,

Have you tried the ConfigureLotSettings callback, or some of the steps listed in "Customizing Behavior for Editing Lot Settings"?

Kind regards

John Gentile

Applications Engineering

National Instruments

-

SSD partitioning and Wear levelling

Hello

I just installed a Pro 480 GB drive in an old laptop and partitioned the disk 78/368 for OS and data respectively.

Will partitioning the disk reduce the SSD's ability to perform wear levelling (that is, is it done per partition), or is wear levelling done across the whole drive regardless of partitions?

Cheers - Andy

Wear leveling is done at a lower level than the partition table, so partitioning should have no effect on wear leveling.

-

Table-level lock while truncating a partition?

Below is the Oracle version I use.

Oracle Database 11g Enterprise Edition Release 11.2.0.2.0 - 64bit Production

PL/SQL Release 11.2.0.2.0 - Production

CORE 11.2.0.2.0 Production

TNS for Linux: Version 11.2.0.2.0 - Production

NLSRTL Version 11.2.0.2.0 - Production

I have a script to truncate a partition, as below. Is there a table-level lock while truncating the partition? Any input is appreciated.

ALTER TABLE TEMP_RESPONSE_TIME TRUNCATE PARTITION part1

>

Is there a table-level lock while truncating the partition?

>

No, only the partition being truncated is locked. Is there a global index on the table? If so, it will be marked UNUSABLE and must be rebuilt.

See the VLDB and partitioning Guide

http://Oracle.Su/docs/11g/server.112/e10837/part_oltp.htm

>

Impact of a Partition Maintenance Operation on a Partitioned Table with Local Indexes

Whenever a partition maintenance operation takes place, Oracle locks the affected table partitions for any DML operation. Data in the affected partitions, except for a DROP or TRUNCATE operation, is still fully available for any SELECT operation. Because local indexes are logically coupled with the table (data) partitions, only the local index partitions of the affected table partitions have to be maintained as part of a partition maintenance operation, which enables optimal processing for the index maintenance.

For example, when you move an older partition from a high-end storage tier to a low-cost storage tier, the data and the index are always available for SELECT operations. The necessary index maintenance is either to update the existing index partition to reflect the new physical location of the data or, more commonly, to move and rebuild the index partition on a low-cost storage tier as well. If you drop an older partition once you have collected it, then its local index partitions are dropped too, allowing a split-second partition maintenance operation that affects only the data dictionary.

Impact of a Partition Maintenance Operation on Global Indexes

Whenever a global index is defined on a partitioned or nonpartitioned table, there is no correlation between a distinct table partition and the index. Therefore, any partition maintenance operation affects all global indexes or index partitions. As for tables containing local indexes, the affected partitions are locked to prevent DML operations against the affected table partitions. However, unlike the index maintenance for local indexes, any global index remains fully available for DML operations and does not affect the online availability of the OLTP system. Conceptually and technically, the index maintenance for global indexes during a partition maintenance operation is comparable to the index maintenance that would become necessary for a semantically identical DML operation.

For example, dropping an old partition is semantically equivalent to deleting the records of the old partition using the SQL DELETE statement. In both cases, all index entries of the deleted data set must be removed from any global index as a normal index maintenance operation, which does not affect the availability of an index for SELECT and DML operations. In this scenario, a drop operation represents the optimal approach: data is removed without the expense of a conventional DELETE operation and the indexes are maintained in a non-intrusive way.

-

Programmatically change the taskflow transaction behavior

Hi all

Is it possible to programmatically change a task flow's transaction behavior from "Always Begin New Transaction" to "Use Existing Transaction if Possible"? I have a task flow which must be consumed by different teams. Some teams want me to commit my transaction independently of their own, while others would like to commit my changes along with theirs once control returns from my task flow to the calling task flow.

Any suggestions on this?

Thank you

Srini

I think that my answer was based on the semantics of your question. You asked about changing the transaction option programmatically, as distinct from changing the task flow's transaction behavior. Indeed, Jobinesh's posts provide such a mechanism; of course, also be aware of the data control scope.

Taking one step back, however: why doesn't the Use Existing Transaction if Possible option work for you? If the caller wants you to have a separate transaction, they just wrap your call in an Always Begin New Transaction BTF. This way, you don't have to worry about the transaction behavior; it is the caller's concern, as it should be.

DMI

-

Subpartition-level statistics

How do you set statistics at the subpartition level?

We are running Oracle 11g R2 and want to set statistics to simulate different data distributions among the subpartitions.

Essentially, we want to control/change NUM_ROWS to fool the optimizer into thinking that there are more or fewer rows.

We went through the DBMS_STATS subprograms

DBMS_STATS.GET_TABLE_STATS

DBMS_STATS.SET_TABLE_STATS

Neither of these subprograms appears to reach down to the subpartition level.

We can read the statistics (NUM_ROWS) for subpartitions by querying the NUM_ROWS column of DBA_TAB_SUBPARTITIONS after the table has been analyzed.

But we have not found a way to SET the statistics at the subpartition level.

We work in a VLDB and use RANGE-LIST partitioning for our big tables.

Published by: user10260925 on February 20, 2011 09:02

user10260925 wrote:

But we have not found a way to SET the statistics at the subpartition level.

How did you use the set_table_stats procedure? It should do what you want, provided you use the "partname" parameter to supply a subpartition name when you want to override the stats of a particular subpartition.

for example

begin
  dbms_stats.set_table_stats(
    ownname  => user,
    tabname  => 'PT_RL',
    partname => 'P_2002_FEB_CA',
    numrows  => 1000,
    numblks  => 10,
    avgrlen  => 80
  );
end;
/

In the example above, p_2002_feb_ca is the CA subpartition of partition p_2002_feb of a range/list partitioned table.

Regards

Jonathan Lewis

http://jonathanlewis.WordPress.com

http://www.jlcomp.demon.co.UK

A general reminder about "Forum Etiquette / Reward Points": http://forums.oracle.com/forums/ann.jspa?annID=718

If you never mark your questions as answered, people will eventually decide that it's not worth trying to answer you, because they will never find out whether or not their answer has been of any use, or whether you even bothered to read it.

It is also important to mark answers that you thought helpful. It lets other people know that you appreciate their help, and it also acts as a pointer for other people when they are researching the same question. It also means that when you mark a bad or wrong answer as helpful, someone may be prompted to tell you (and the rest of the forum) what is so bad or wrong about the answer you found helpful.

-

iTunes no longer syncs iPhoto to iOS devices

I got a new iPad Air 2 last week and had to update iTunes to 12.3.1.23 to sync it with my iMac running Yosemite 10.10.2. iTunes no longer syncs my iPhoto Albums and Events to my iPhone and iPad. Images, Faces, Events etc. in the albums on iOS are frozen at what they were before the iTunes update.

Because Photos for OS X does not yet support batch updates, Events, 5 levels of favorites, title display etc., I don't want to move to El Capitan or OS X Photos until it does.

Is there a solution without moving to Photos and losing these features?

Make sure that iCloud Photo Library is turned off everywhere on your system.

Because Photos for OS X does not yet support batch updates

For this

Events,

Never, so change your workflow or find a new application, as iPhoto is no longer developed and someday a version of the operating system will come along on which it won't work. You can imitate a version of Events with albums.

5 levels of favorites,

I guess you mean star ratings here? You can mimic those with keywords, and you can even assign keyboard shortcuts to them. This has the advantage of conforming to standards, which star ratings did not.

title display etc.

I don't know exactly what you mean by that, but you can display titles under photos.

-

Red spots on the skin when printing with the HP PhotoSmart 8400 printer

I am trying to print a JPEG image that is very high resolution (3000 x 2000) and the quality is superb on my monitor.

However, when I try to print on my HP PhotoSmart 8400 printer, a strange red 'sand' pattern (pixelation) appears on the skin of most of the subjects. I printed the test page and the sample page, which look OK, but they do not use the same colors and do not contain any skin tones (the sample is a picture of an orchid).

I tried this on glossy paper and regular paper, and it looks pretty much the same, with the effect more pronounced on glossy paper. I also tried changing the image in several different ways, including lowering the resolution, changing colors and adding a Gaussian blur, but I cannot get rid of the strange grainy effect.

Can someone please let me know if this is a common problem with the device, or if my photo is somehow at fault? If so, where can I get a reference picture for testing skin tone printing, or how can I solve the problem?

Thank you!

Update: I tried with another photo on the web and I see the same effect. Given that this printer is a few years old I start thinking that it's just a physical limitation of the printer.

Found the problem: I replaced my 99 cartridge and that fixed it.

I didn't expect this type of behavior from a low ink level, but I guess there is a first time for everything :)

-

Insertion in Postgres via ODBC gateway

Hi, I did a few tests, here are the questions and results:

Postgres:

CREATE TABLE t_dump ( str character varying(100) )

Oracle: [ORADB: db link to Postgres]

INSERT INTO "t_dump"@ORADB VALUES ('test');

Works very well, both from SQL and PL/SQL.

However:

Declare
  m_str Varchar2 (10) := 'test';
Begin
  INSERT INTO "t_dump"@ORADB VALUES (m_str);
End;

Gives me an error:

Error report:
ORA-00604: error occurred at recursive SQL level 1
ORA-02067: transaction or savepoint rollback required
ORA-28511: lost RPC connection to heterogeneous remote agent using SID=ORA-28511: lost RPC connection to heterogeneous remote agent using SID=(DESCRIPTION=(ADDRESS=(PROTOCOL=TCP)(HOST=DB)(PORT=1521))(CONNECT_DATA=(SID=pg)))
ORA-02055: distributed update operation failed; rollback required
ORA-02063: preceding lines from ORADB
ORA-06512: at line 8
00604. 00000 - "error occurred at recursive SQL level %s"
*Cause: An error occurred while processing a recursive SQL statement (a statement applying to internal dictionary tables).
*Action: If the situation described in the next error on the stack can be corrected, do so; otherwise contact Oracle Support.

Dynamic SQL gives the exact same error:

Declare
  m_str Varchar2 (10) := 'test';
Begin
  EXECUTE IMMEDIATE 'INSERT INTO "t_dump"@ORADB VALUES (:v0)' Using m_str;
End;

Is it possible that I could use variables in queries?

One more issue:

I created a partitioned table in Postgres, and a simple INSERT statement works well [Oracle SQL]; however, the same statement wrapped in begin/end [PL/SQL] needs about 60 seconds to complete. Is this normal behavior? Is there a config/pragma I can use to speed it up?

Regards

Bart Dabr

Is this the complete trace, or did you cut a few lines at the end?

Looking at it, I see a column is bound without precision (LONGVARCHAR-1 Y 0 0 0 / 0 0 0 220 stringvalue). Does this also happen when using the insert without a bind variable?

Published by: kgronau on October 5, 2011 09:09

I've created a demo table in a Postgres database:

CREATE TABLE information_schema."VarBin"

(

col1 integer,

col2 character varying (100)

)

WITHOUT OIDS;

And with my gateway, inserting using bind variables works:

SQL> declare

2 m_nmbr Number(10) := 1;

3 m_str Varchar2(10) := 'test';

4 Begin

5 INSERT INTO "information_schema"."VarBin"@postgres_dg4dd61_emgtw_1123_db values (m_nmbr, m_str);

6 End;

7 /

PL/SQL procedure successfully completed.

SQL> select * from "information_schema"."VarBin"@postgres_dg4dd61_emgtw_1123_db;

col1 col2

----------------------------- ---------------------------------------------------

1 test

What version of the ODBC driver are you using, and does the test using my script work for you?

-

An unexpected serious error occurred in JDeveloper

Hello

I use JDeveloper 11.1.1.3.0 Studio. I get the error "an unexpected serious error occurred in JDeveloper" whenever I try to create a new file in Jdeveloper. Is this a bug in JDeveloper?

Here is the trace of the exception.

Calling command: paste [of oracle.ide.ceditor.CodeEditor]

Run the Save action [of oracle.ide.ceditor.CodeEditor]

Call to order: [from oracle.ide.ceditor.CodeEditor]

Launched CommandProcessor transaction: Auto Save Actions on AWT-EventQueue-0 thread at batch level 1

Finished CommandProcessor transaction on AWT-EventQueue-0 thread at the batch 0 level

Calling command: delete [oracle.ide.ceditor.CodeEditor] Next

Calling command: paste [of oracle.ide.ceditor.CodeEditor]

Run the Save action [of oracle.ide.ceditor.CodeEditor]

Call to order: [from oracle.ide.ceditor.CodeEditor]

Launched CommandProcessor transaction: Auto Save Actions on AWT-EventQueue-0 thread at batch level 1

Finished CommandProcessor transaction on AWT-EventQueue-0 thread at the batch 0 level

Execution of action Project Properties... [from oracle.ide.navigator.ProjectNavigatorWindow]

Run the find action... [from oracle.ide.ceditor.CodeEditor]

Execution of action Run [of oracle.ide.navigator.ProjectNavigatorWindow]

Execution of action Run [of oracle.ide.navigator.ProjectNavigatorWindow]

Calling command: paste [of oracle.ide.ceditor.CodeEditor]

Run the Save action [of oracle.ide.ceditor.CodeEditor]

Call to order: [from oracle.ide.ceditor.CodeEditor]

Launched CommandProcessor transaction: Auto Save Actions on AWT-EventQueue-0 thread at batch level 1

Finished CommandProcessor transaction on AWT-EventQueue-0 thread at the batch 0 level

Execution of action Run [of oracle.ide.navigator.ProjectNavigatorWindow]

Perform the action cancel paste [of oracle.ide.ceditor.CodeEditor]

Run the Save action [of oracle.ide.ceditor.CodeEditor]

Call to order: [from oracle.ide.ceditor.CodeEditor]

Launched CommandProcessor transaction: Auto Save Actions on AWT-EventQueue-0 thread at batch level 1

Finished CommandProcessor transaction on AWT-EventQueue-0 thread at the batch 0 level

Calling command: paste [of oracle.ide.ceditor.CodeEditor]

Calling command: paste [of oracle.ide.ceditor.CodeEditor]

Calling command: paste [of oracle.ide.ceditor.CodeEditor]

Calling command: paste [of oracle.ide.ceditor.CodeEditor]

Run the Save action [of oracle.ide.ceditor.CodeEditor]

Call to order: [from oracle.ide.ceditor.CodeEditor]

Launched CommandProcessor transaction: Auto Save Actions on AWT-EventQueue-0 thread at batch level 1

Finished CommandProcessor transaction on AWT-EventQueue-0 thread at the batch 0 level

Execution of action range of resources [of oracle.ide.ceditor.CodeEditor]

Execution of action range of resources [of oracle.ide.ceditor.CodeEditor]

Execution of new action... [from oracle.ide.navigator.ProjectNavigatorWindow]

Uncaught exception

java.lang.NullPointerException

o.j.webservices.model.WebServiceProxyNode.openImpl(WebServiceProxyNode.java:367)

o.i.model.Node.open(Node.java:974)

o.i.model.Node.open(Node.java:922)

o.i.model.DefaultContainer.getChildren(DefaultContainer.java:76)

o.ii.explorer.ExplorerNode.getChildNodes(ExplorerNode.java:297)

o.ii.explorer.BaseTreeExplorer.addChildren(BaseTreeExplorer.java:371)

o.ii.explorer.BaseTreeExplorer.open(BaseTreeExplorer.java:1048)

o.ii.explorer.BaseTreeExplorer.findNodeDepthFirst(BaseTreeExplorer.java:1635)

o.ii.explorer.BaseTreeExplorer.findNodeDepthFirst(BaseTreeExplorer.java:1648)

o.ii.explorer.BaseTreeExplorer.findNodeDepthFirst(BaseTreeExplorer.java:1648)

o.ii.explorer.BaseTreeExplorer.findNodeDepthFirst(BaseTreeExplorer.java:1648)

o.ii.explorer.BaseTreeExplorer.findTNode(BaseTreeExplorer.java:215)

o.i.navigator.ProjectNavigatorWindow.searchInEntireApp(ProjectNavigatorWindow.java:707)

o.i.navigator.ProjectNavigatorWindow.openContext(ProjectNavigatorWindow.java:699)

o.i.navigator.ApplicationNavigatorWindow.openContext(ApplicationNavigatorWindow.java:520)

o.i.navigator.NavigatorWindow$1.run(NavigatorWindow.java:188)

j.a.event.InvocationEvent.dispatch(InvocationEvent.java:209)

j.a.EventQueue.dispatchEvent(EventQueue.java:597)

j.a.EventDispatchThread.pumpOneEventForFilters(EventDispatchThread.java:269)

j.a.EventDispatchThread.pumpEventsForFilter(EventDispatchThread.java:184)

j.a.EventDispatchThread.pumpEventsForHierarchy(EventDispatchThread.java:174)

j.a.EventDispatchThread.pumpEvents(EventDispatchThread.java:169)

j.a.EventDispatchThread.pumpEvents(EventDispatchThread.java:161)

j.a.EventDispatchThread.run(EventDispatchThread.java:122)

Thank you

Dembele.

Please post your question in the appropriate forum.

JDeveloper and ADF

Thank you

Hussein

-

Satellite A200 28H: F6 floppy will not work when installing XP

Hello

I am trying to install Windows XP Pro on my new laptop (Satellite A200 28H), and since it needs a SATA driver, I downloaded the driver for my laptop, put it on a floppy disk for use with a USB floppy drive (which works fine), pressed "F6" when appropriate and everything... But it simply doesn't work. I trained as a technician a few years ago, so even though that is no longer my job and I might be a little rusty, I think I still know at least a little of what I'm doing... Well, apparently I'm rustier than I thought!

I've tried several things, and this is what I get:

* If I don't use any floppy disk, Windows XP will not find the hard drive. That was expected, no problem here, except for the fact that it is completely useless to me :)

* If I force the driver from the floppy disk, despite Windows telling me there is already a driver that can be used, then within 10 seconds I get a BSOD with stop message 0x7B (inaccessible boot device).

* If I use the floppy, but do not force the driver and instead listen to Windows ("Oh, you already have a driver? OK then, use it, and never mind that diskette"), I can actually finish the installation process. Windows copies all the necessary files to the disk, and even restarts correctly afterwards and carries on. But when the installation is complete and the computer restarts, I see the loading screen for a second, and then BSOD stop 0x7B again.

I'm sure I downloaded the right driver for my computer. In fact, I also tried a couple of wrong drivers, just to be safe (i.e. I use Intel Matrix for ICH8M, but I also tried the ICH7M to be sure). I tried drivers downloaded from the Toshiba site, from Intel's site and from whatnot-other-website-for-newbies-or-powerusers, and I still get the same behavior as described above.

I tried partitioning/formatting during installation, and I also tried leaving the file system intact. If I do the latter, the only difference is that I am offered 2 possible OSes to boot from, both being Windows XP and both failing miserably.

It's probably worth mentioning that if I use the recovery DVDs and start over clean with Vista, I have no problem whatsoever, except for the gazillion processes loaded during startup, half the RAM already used, and the hundred useless applications I don't give a darn about... And the fact that some applications I need for work just cannot be installed on Vista.

If anyone has any idea how to solve this problem, I would be very grateful!

Hello

The procedure for including the SATA driver in Win XP using an external USB floppy drive is very easy.

First you need to download the Storage Manager and put it on the diskette. During the installation of Win XP you must press F6 to include the driver from the external floppy drive, and then follow the instructions on the screen.

Note:

It is very important that your Win XP CD contains SP2!

Without SP2 a BSOD can appear and abort the installation! Don't format or create any partitions after loading the SATA driver. This can erase the SATA driver that was loaded for the HDD.

Last but not least, some external floppy drives are not supported. I read that some users had problems with an external FDD drive; they tested another drive and it finally worked.

Check these recommendations; they might be useful.

Good bye

-

EliteBook 8760w: HP Recovery Manager missing on EliteBook 8760w

Hello

My laptop is brand new and has no "HP Recovery Manager" installed. Is this normal behavior?

What are the HP_RECOVERY and HP_TOOLS partitions? (At least 2 GB of space is occupied in each partition.)

I will appreciate your support

Thanks in advance

Some useful information:

Name of the operating system: Microsoft Windows 7 Professional

Version: 6.1.7601 Service Pack 1 Build 7601

Model: HP EliteBook 8760w

Type of system: PC x 64

Processor: Intel Core i7-2820QM CPU @ 2.30 GHz, 2301 MHz, 4 cores, 8 logical processors

BIOS Version/Date: Hewlett-Packard 68SAD Ver. F.29, 01/07/2013

SMBIOS Version: 2.6

Hey @amaazaoui,

Start by pressing F11 while the laptop is starting up.

Thank you.

-

ORA-01008: not all variables bound... but only in an environment

Running Oracle 11.2.0.3.15 on Solaris.

Two test environments, each with identical spfiles (give or take the database names, etc.).

Here is my code in both cases:

create or replace procedure HJR_TEST

as

v_daykey_from number(10) := 13164;

v_text varchar2(3000);

begin

select cd.daykey || cd.calendardate into v_text

from cds.cdsday cd,

cds.snapshot vsp

where vsp.daykey = cd.daykey

and cd.daykey >= v_daykey_from

and rownum < 2;

dbms_output.put_line (v_text);

end;

Works in one environment:

SQL> begin

hjr_test;

end;

PL/SQL procedure successfully completed.

1318001/FEB/16

Run it in the other environment:

Error starting at line 18 in command:

begin

hjr_test;

end;

Error report -

ORA-01008: not all variables bound

ORA-06512: at "JRH.HJR_TEST", line 7

ORA-06512: at line 2

01008. 00000 - "not all variables bound"

*Cause:

*Action:

Same code; same init.ora parameters; same database version; same o/s; different results.

Any clues as to where I should be looking for the trouble, please?

Regards

JRH

Thought I had better update the forum on this one.

This is a confirmed bug, although the bug report is not published.

There are cases where the optimizer calls kkpap to do partition pruning at compile time. Sometimes partition pruning is done by running subqueries against the table. If bind variable values are required to run these subqueries, then we cannot do the pruning at compile time.

The fix for bug 14458214 addressed this problem in the case where the subquery was used to prune at the partition level. However, it is possible that we use another method at the partition level, and then use subquery pruning at the subpartition level; this case was not addressed by the fix for 14458214.

The bug mentioned has a patch available in 11.2.0.4 and doesn't occur in 12c. For some reason, I was also pointed to bug 17258090, but I see no content in that bug report. :-(

A possible workaround in 11.2.0.3 is alter session set "_subquery_pruning_enabled" = false; ... but since X$KSPPI lists _subquery_pruning_enabled as a hidden parameter, I guess you can also set it instance-wide, although obviously the consequences for other queries would then have to be evaluated very carefully.
