Default 15 Hz execution rate of the Communication Send Loop

Hi NIVS experts!

As I understand the VS engine, the default execution rate of the communication loop is set to 15 Hz. I have not found a way to change this default in VS. This Communication Send Loop transmits the channel values to the VeriStand Gateway, from which the Workspace gets the channel tables. The data flow, as I understand it, looks like this:

Channel data ---> Communication Send Loop (15 Hz) ---> VeriStand Gateway ---> Workspace (data logging control (100 Hz))

My question is: if the execution rate of the communication loop is only 15 Hz, does it make sense for the data logging control on the Workspace to record data at a much higher rate (say, a 100 Hz target rate)? Or how can I change the default execution rate of the communication loop?

Thank you

Pen

We have used the 'logging' and 'streaming' APIs of VeriStand, and I can confirm that the stream runs at the rate of the real-time data loop. The 15 Hz data source is only used to serve the slower "read channel" API.

I assume that the data logging control uses the 'logging' API and is therefore not subject to the 15 Hz caching.

++

Similar Questions

-

How to get the maximum sampling rate of the NI 9263 and other cards?

Hello!

I'm using an NI 9263 card and a cDAQ-9172 chassis in my project, and I'm programming with CVI 8.0. I'm generating sine and square waves to run some tests on a radio.

I want my program to work with all cards of this type, and we know that most cards have different specifications, for example the maximum sampling rate; in this case the NI 9263 works at 100 kS/s maximum. I'm generating the waves based on the sampling rate.

If my program is to be compatible with most cards, it needs to obtain the maximum sampling rate using a specific function from NIDAQmx.h.

Do you know if there's a function or attribute that can return this value?

I tried this function with different attributes, with no results:

DAQmxGetTimingAttribute(taskHandle, DAQmx_SampQuant_SampPerChan, &MaxSamp);

DAQmxGetTimingAttribute(taskHandle, DAQmx_SampClk_Rate, &MaxSamp);

DAQmxGetTimingAttribute(taskHandle, DAQmx_SampQuant_SampPerChan, &MaxSamp);

DAQmxGetTimingAttribute(taskHandle, DAQmx_SampClk_TimebaseDiv, &MaxSamp);

DAQmxGetTimingAttribute(taskHandle, DAQmx_SampClk_Timebase_Rate, &MaxSamp);

The first three attributes give me the actual sample rate, which is 1 kS/s (as far as I can tell, this is the default sample rate set for all cards before the user initializes them), but they do not give me the maximum sample rate (100 kS/s).

The remaining attributes only give me the clock rate, which is 20 MHz, and the clock divisor (20000). I also tried with a 9264 card (maximum sample rate 25 kS/s) and the function returns the same results.

Any idea?

Thank you!!

Hey Areg22,

I think I've found the function you're looking for:

http://zone.NI.com/reference/en-XX/help/370471W-01/mxcprop/func22c8/

This link gives just the syntax for the function, but the following gives you more information about the function:

http://zone.NI.com/reference/en-XX/help/370471W-01/mxcprop/attr22c8/

When I used the property node for this function, the output was 100,000 for the NI 9263, which is consistent with the datasheet. Let me know if it works for you.

Thank you

-KP

-

Unable to see the disk rate on VMs using NFS

I think I know the root of the problem: vCenter does not track disk rates for VMDKs on NFS with the standard 'Disk Rate' metric; it uses 'Virtual Disk Rate' instead. But it seems that all the default counters in vFoglight look at 'Disk Rate' rather than 'Virtual Disk Rate', so all my virtual machines show no data available when I try to determine their disk rate.

Is there any way to change this behavior?

There are some requirements for obtaining NFS metrics:

- vSphere 4.1 or later

- vFoglight 6.6 and the FGLAM-based VMware agent

- NFS metrics do not appear on the dashboards by default; they are only exposed in custom dashboards built by drag and drop.

- This will change in a future release.

I don't have any NFS datastores in my lab, so I can't show you an example dashboard with NFS metrics. If I get my hands on a lab with NFS, I'll post a few screenshots here.

/ Mattias

-

When using Google Maps in FF, the language defaults to Hebrew. This does not happen in IE or Chrome. Where is the setting to control this in FF?

Try clearing the cache and cookies for Google.com, then reload the page.

-

Can I call the US from Sri Lanka, and what are the rates?

I'm taking my computer with me to Sri Lanka. Can I call the USA from there, and what are the rates?

Beachbum wrote:

I'm taking my computer with me to Sri Lanka. Can I call the USA from there, and what are the rates?

With Skype, your location is unimportant. Calls to (or within) the United States cost 2.3 cents per minute or part thereof, wherever you are.

You can easily find the information yourself with a little research here:

http://www.Skype.com/intl/en-us/prices/PAYG-rates/

-

NI WSN 1.2 security on the 9792: initial configuration? Default username and password?

Hello

With NI WSN 1.1, using a 9792, I could click the Set Permissions button, and if none had been defined it would simply ask me for a new administrator password. Now with NI WSN 1.2, when I click the Set Permissions button it takes me to the web interface of the 9792 and says "no one is logged in or you don't have permission to view/edit users, groups, and permissions." When I click the "log in" button, a box opens and asks me for a username and password. I have scoured the release notes, the help files, and the internet, and it seems there is NO documentation on how to set up the initial username/password combination, or on what the default username/password combination might be. I also tried a brand new 9792 straight out of the box, loaded 1.2 onto it, and it has the same problem. Is there something obvious that I'm missing? How does a person initially configure security on the 9792?

Hello Garrett,

Thanks for your post!

It looks like you need information on how to find the login information for your 9792. NI-WSN 1.1 allowed users to define the initial password, and this is still the case for the 9791. The 9792 is both an NI WSN gateway and a LabVIEW Real-Time target. Its default login information can be found in a KnowledgeBase (KB) article, in the LabVIEW Help, or in the MAX Help. Are you using LabVIEW 2009 SP1 or LabVIEW 2010?

The default user name and password are as follows:

user name: admin

password:

KB

Default username and password for logging in to a real-time controller

http://digital.NI.com/public.nsf/allkb/14D8257A7724BE85862577F90071B73F

LabVIEW Help

Start > All Programs > National Instruments > LabVIEW xx > LabVIEW Help

Then go to... Fundamentals > Working with Projects and Targets > How-To > control and configure a remote device from a Web browser

MAX Help

Open MAX > Help > MAX Help (you can also press F1 if MAX is open)

Then go to... MAX Remote Systems Help > LabVIEW Real-Time Target Configuration > Device Configuration > Logging into Your System

The LabVIEW Help topic above is referenced under Related Documentation in the 9792 getting started guide, which directs you to the LabVIEW Help.

NI Wireless Sensor Network Getting Started Guide

http://www.NI.com/PDF/manuals/372781c.PDF

Is this the information you were looking for? Please let us know if there is anything else we can do.

See you soon

Corby

WSN PES OF R & D

-

Hello

I'm trying to slow down my Simulink (DLL) model running in VeriStand. The model was compiled at a sampling rate of 100 Hz, and I have been able to slow the model down in VeriStand by reducing the rate of the Primary Control Loop, although it won't go any lower than 10 Hz. My goal is to run the model 100x slower than real time. Is this possible? If so, how could I do that?

Thank you

Hello claw,

A basic rule concerning simulation models in VeriStand 2009 and 2010 is to run the PCL as slowly as possible. Considering only the models, that is the lowest rate that is divisible by all the model rates. (Other parts of the system may require the PCL to run faster.)

For example, say model A was compiled at 25 Hz, model B at 75 Hz, and model C at 100 Hz. The lowest rate that is divisible by all the model rates is 300 Hz, so that is the rate to run the PCL at. The next step, and maybe the answer to your question, is to set the integer "Decimation" for each model in System Explorer. In System Explorer, decimation is always relative to the PCL. A decimation of 1 means 'at the PCL rate'. A decimation of 2 means 'every other iteration of the PCL', or 'half the PCL rate'. So the decimation is 12 for model A, 4 for model B, and 3 for model C.

The reason for the basic rule is that in VeriStand 2009 and 2010, decimated models are required to execute one time step within one iteration of the PCL to be 'on time'. In the previous case, models A, B and C each have 3.3 ms to execute one time step in parallel mode; otherwise they are late.
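The arithmetic in the example above can be sketched in C. This is only an illustration of the rule as described in this reply, not VeriStand code: the function names `pcl_rate_hz` and `decimation` are hypothetical, and the sketch assumes every model rate is a nonzero integer number of hertz.

```c
/* Greatest common divisor (Euclid's algorithm). */
static unsigned gcd_u(unsigned a, unsigned b)
{
    while (b != 0) {
        unsigned t = a % b;
        a = b;
        b = t;
    }
    return a;
}

/* Least common multiple of two nonzero rates in Hz. */
static unsigned lcm_u(unsigned a, unsigned b)
{
    return a / gcd_u(a, b) * b;
}

/* Hypothetical helper: lowest PCL rate (Hz) that is divisible by
 * every model rate. Assumes all rates are nonzero. */
unsigned pcl_rate_hz(const unsigned *model_rates_hz, int count)
{
    unsigned rate = 1;
    for (int i = 0; i < count; ++i)
        rate = lcm_u(rate, model_rates_hz[i]);
    return rate;
}

/* Hypothetical helper: decimation is the number of PCL iterations
 * per model time step. */
unsigned decimation(unsigned pcl_hz, unsigned model_hz)
{
    return pcl_hz / model_hz;
}
```

Calling `pcl_rate_hz` with the rates 25, 75 and 100 gives 300, and `decimation` then gives 12, 4 and 3, matching the figures above. The per-iteration time budget is simply 1/300 s, about 3.3 ms.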

Steve K

-

Make the Abort Execution icon invisible

Is there a way to make the Abort Execution icon invisible? When I deploy an application, I want the user to use only the STOP button I provide, without the option to stop the program with the Abort Execution button at the top.

If you go to File -> VI Properties, you can go to Window Appearance and choose Dialog, or any other custom configuration that you like.

-

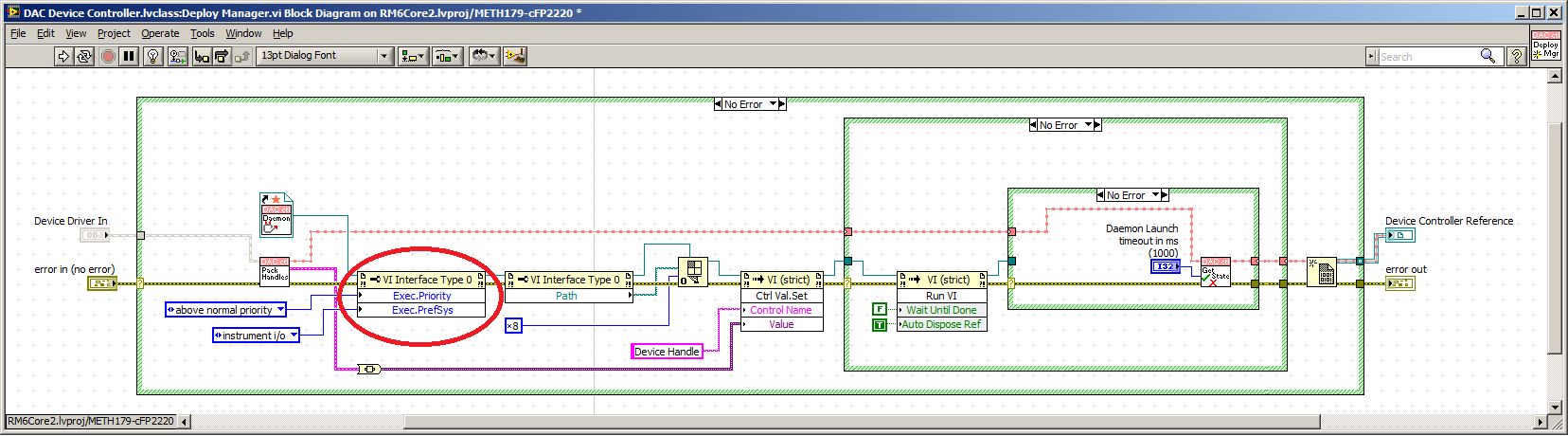

(How) can I change the execution priority of a VI at run time?

Hi all

I use daemons (free-running VIs) and I communicate with them through queues.

They are part of my device driver architecture and use a producer architecture (for acquisition) or a consumer architecture (for control).

I have a single daemon VI to which I deploy a 'device object' using a polymorphic class implementation.

This implementation has a subtle shortcoming:

I'm not able to change the execution priority at launch.

There is a property node that suggests it is possible, but the help (and the run-time error message) says it is not available while running.

Anyone know of another method?

Here's what I thought about so far:

1. Have 5 different daemons with different priorities [distasteful for code maintenance]

2. Make it low priority and ensure that at least 1 VI in the driver has a higher priority [not sure if this works; masks the implementation]

Kind regards

Tim L.

You might think about putting a timed loop or timed sequence in your daemon and then passing a numeric priority value to your daemon. This is the best solution I can think of.

-

Is there a state chart or flowchart describing the execution order of the process model?

One of my clients asked me this question...

I have a problem with a change in the order of execution in TestStand 4.1.1. [He has upgraded from 3.1 to 4.1.1.] It seems that ProcessModelPreStep runs earlier in 4.1.1 than in 3.1. This causes our model to error because some required variables have not been set when it executes. I wish there was a big flow chart or state diagram for TestStand. Maybe there is and I don't know where to look?

The callback that you describe is an engine callback and is independent of the process model. There is a list of all actions and their order in the TestStand Reference Manual, in Chapter 3, in the section called "Step Execution". You will see that action 11 is the pre-step engine callback.

P. Allen

NI

-

Data acquisition timing - how to calculate the analog output rate

I want to calculate an acceptable analog output rate, one that is supported by the hardware (PCIe-6353), without the rate being changed by the DAQmx Timing (Sample Clock) VI. The final objective is to have an analog output rate that is an integer multiple of the analog input rate for precise frequency, since the AO sinusoid drives amplifiers, which ring when AO updates occur.

According to KnowledgeBase 27R8Q3YF: how the actual scanning speed is determined when I specify the rate of scanning to My d..., the rate is adjusted as needed by calculating board clock / requested rate, rounding the resulting divisor both down and up, dividing each into the board clock, and using the result that is closest to the requested rate.

If 'Onboard Clock' is selected, what is the resulting "board clock"? The DAQmx Timing node's sample clock timebase property, SampClk.Timebase.Rate, says 100 MHz. However, for a resulting update rate of 2.38095 MS/s, the timebase divisor property, SampClk.TimebaseDiv, gives a value of 42. 42 x 2.38095 M = 99,999,900, where it should be 100 MHz.
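The rounding procedure quoted from the KnowledgeBase can be sketched in C as follows. This is a rough illustration under stated assumptions, not NI's implementation: the function names `nearest_divisor` and `achievable_rate_hz` are hypothetical, the timebase is assumed to be the 100 MHz value reported above, and any hardware limits on the divisor range are ignored.

```c
/* Absolute value without pulling in the math library. */
static double abs_d(double x)
{
    return x < 0.0 ? -x : x;
}

/* Hypothetical helper: integer timebase divisor whose resulting rate is
 * closest to the requested rate. Tries the divisor rounded down and
 * rounded up, as the KnowledgeBase description suggests. Assumes both
 * arguments are positive. */
unsigned long nearest_divisor(double timebase_hz, double requested_hz)
{
    /* Truncation equals floor for positive values. */
    unsigned long lo = (unsigned long)(timebase_hz / requested_hz);
    if (lo < 1)
        lo = 1;
    unsigned long hi = lo + 1;

    double err_lo = abs_d(timebase_hz / (double)lo - requested_hz);
    double err_hi = abs_d(timebase_hz / (double)hi - requested_hz);
    return (err_lo <= err_hi) ? lo : hi;
}

/* Hypothetical helper: rate the hardware would actually run at. */
double achievable_rate_hz(double timebase_hz, double requested_hz)
{
    return timebase_hz /
           (double)nearest_divisor(timebase_hz, requested_hz);
}
```

With a 100 MHz timebase and a request of 2.38095 MS/s, the sketch picks a divisor of 42, and 100 MHz / 42 is about 2,380,952.38 S/s. Under these assumptions, the mismatch in the multiplication above would come from the displayed rate being rounded to six digits rather than from the divisor.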

How do I calculate an acceptable analog output rate that is supported by the hardware? I have other boards as well, so a general method would be appreciated.

I haven't worked all the details yet but noticed a few things that may be relevant.

The requested AI rate isn't an integer divisor of 1E8. It is used to determine the AO rate.

There is no check to ensure that the AO rate is an integer division.

It seems that you have the right idea, but the implementation is not there yet.

Lynn

-

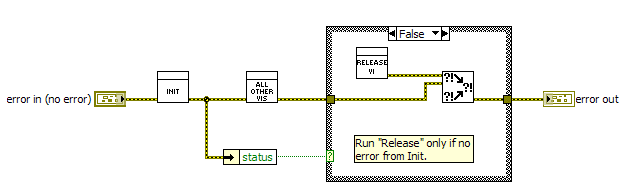

Controlling the order of execution of Init/Release

I have a small program that I am writing in LabVIEW. It has an API (a set of VIs wrapping DLL functions to control a device).

Other such programs in our shop make liberal use of sequence structures. I understand that sequence structures are not usually recommended in LabVIEW. I want to write my program in the best LabVIEW style (as far as I understand it; I'm still a relative novice in LabVIEW).

I found that I could wire together the error in / error out terminals to create a data flow that controls the order of execution, and it works beautifully.

However, there are some cases where it is not enough.

Here is an example. I hope that the answer to this will answer my other questions. If this is not the case, perhaps that I'll post more.

One of the first VIs I call is an Init function. One of the last VIs is the Release function.

The Release function must be called at the end, after the rest of the program has executed (in this case, after the user requests the stop). It should naturally be at the end (or nearly) of the error in / error out chain (as it currently is).

However, the error it receives, which determines whether or not it will run, should be the output of Init. Release should run even if something else has failed.

I enclose a picture showing the problem, with most of the code snipped out (as exactly what is happening in the middle is not relevant).

What is the elegant way to handle this in LabVIEW? Is this really a case for a sequence structure, or is there a nicer or better way? How would you recommend handling it?

Thank you very much.

BP

I agree with what pincpanter said; in addition, you will need to use the status of the 'Init' function.

Note that you can ignore the error and do the merge inside the Release VI if you wish.

Steve

-

How can I improve the acquisition rate with DAQmx and the USB-6008?

Hello

I am trying to acquire analog voltage data with a USB-6008. I'm running LabVIEW 8.5 Student Edition on an HP laptop with a 1.33 GHz CPU and 736 MB RAM, apparently. I tried using the DAQ Assistant and the low-level DAQmx functions. My best results come with a task set up in MAX for my analog input, and using the 'DAQmx Read' function with the 'single sample 1D double' value in a while loop. I insert the returned values into an array that is built in the while loop, and then when I'm done, I check the number of samples in the array. In the attached test VI, I also use Get Time before and after the whole loop. The best sampling rate I achieved using this method is around 40 samples/second. I have attached a VI below that illustrates this concept. In my actual application, the data acquisition code runs in a timed while loop with a 1 ms period, in parallel with other code that controls the device I'm collecting data from. The sampling rate is roughly the same for my test VI below and my application program.

The 6008 datasheet gives a maximum sampling rate of 10 kHz. I'd be happy with 2 to 2.5 kHz, or as fast as possible; I'm sure that I can achieve a little more than 40 Hz. My first idea was hardware timing, but the 6008 cannot do hardware-timed acquisitions.

My question is: how can I implement faster sampling of analog voltages with a USB-6008 in LabVIEW? If I can't do it, is there another way I can sample the data more quickly?

Thank you

-SK-

To the best of my knowledge, the USB-6008 can do hardware-timed acquisition. Don't forget that this is a multiplexed device, so if you add 8 channels, the maximum rate you can set is 10 k/8 per channel.

If you are new to LabVIEW, I suggest that you try this example program first:

\examples\DAQmx\Analog In\Measure Voltage.llb\Acq&Graph Voltage-Int Clk.vi

Amit
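The multiplexing point made in this reply comes down to one division. A minimal C sketch, assuming the 10 kS/s aggregate figure from the datasheet quoted in the question and using a hypothetical function name:

```c
/* Hypothetical helper: per-channel rate limit when a single ADC is
 * multiplexed across several channels. Returns 0 for zero channels. */
double max_rate_per_channel_hz(double aggregate_max_hz,
                               unsigned channel_count)
{
    if (channel_count == 0)
        return 0.0;
    return aggregate_max_hz / (double)channel_count;
}
```

For an aggregate limit of 10,000 S/s, 8 channels leave 1,250 S/s per channel, while 4 channels leave 2,500 S/s, which is the upper end of the 2 to 2.5 kHz range asked for in the question.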

-

Hello

I run a Windows Server 2008 R2 64-bit terminal server for 35 users, and we have recently updated our ERP software.

Since then, we have experienced a few software crashes.

When we talked with the software vendor, they told us to add several of the software's files to DEP (Data Execution Prevention).

When I tried to add them, I got this message: "this program must run with enabled data execution protection."

How can I add these files to DEP?

(I tried on another server that is running the software and had no problem.)

b.r

Ronen malka

Hello

Your Windows question is more complex than what is typically answered in the Microsoft Answers forums. It is better suited to the IT Pro audience on TechNet. Please post your question in the TechNet Windows forums. Here is the link:

http://social.technet.Microsoft.com/forums/en-us/winservergen/threads

-

Change the frequency of the signal during execution

Hi, I'm working on a VI to calculate the excess of a signal. I think the VI seems to be good (not sure), but now my problem is changing the frequency during execution, which I am not able to do; the frequency changes only when I stop and rerun the VI. Could someone please help me? I tried putting it in an event, but to no avail. Maybe I am missing something; please help.

I noticed that you have a while loop around the entire block diagram; I'm not entirely sure why. The way you have written your VI, the two inner loops will never exit unless there is an error, because the 'stop' buttons are hidden. Thus, the outer loop does nothing for you.

Once you take it out, it is easy to see why you cannot change the frequency. It is set once, outside of the loops, and so never gets checked again. The best way to resolve this, in your case, is to use shift registers on the upper loop and check whether the frequency value has changed since the last iteration. If so, generate a new waveform and feed it to DAQmx Write instead.

See the attached version of your main VI for what I mean. (I also replaced the stop controls with a single one that is visible and stops both loops without your having to hit the Abort button.) I also get an error on the DAQmx Read in the bottom loop, but I'm sure it will work fine on your hardware.
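For readers who think in text-based code, the shift-register check described in this reply can be sketched in C. LabVIEW is graphical, so this is only an analogy: the struct stands in for the shift register, and the names `FrequencyTracker` and `frequency_changed` are hypothetical.

```c
#include <stdbool.h>

/* State carried from one loop iteration to the next, playing the role
 * of a LabVIEW shift register. */
typedef struct {
    double last_frequency_hz;
    bool   initialized;
} FrequencyTracker;

/* Returns true when the frequency differs from the previous iteration
 * (or on the very first call), meaning a new waveform should be
 * generated and written. Always stores the current value for the
 * next iteration. */
bool frequency_changed(FrequencyTracker *t, double current_hz)
{
    bool changed = !t->initialized ||
                   current_hz != t->last_frequency_hz;
    t->last_frequency_hz = current_hz;
    t->initialized = true;
    return changed;
}
```

Inside the generation loop, the front-panel value would be passed to `frequency_changed` on every iteration, and the waveform would be regenerated only when it returns true, so the output is not rebuilt needlessly.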

See you soon,

Michael
