NI VBAI: GigE cameras losing packets

We run VBAI on a fast PC with an Intel Pro/1000 card and a jumbo-frame GigE switch connected to a large number of Basler ace GigE cameras.

Understandably, multiple cameras cannot all transfer complete images to the PC at the full 1000 Mb/s rate simultaneously, because the link between the PC and the switch has limited bandwidth.

One way around this is to throttle each camera's maximum data rate so that the sum does not exceed 1000 Mb/s.

However, this means image transfers always take longer, even when only a single camera is active, which is the case much of the time.
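To put rough numbers on that trade-off, here is a minimal Python sketch. It assumes each camera's limit is simply scaled in proportion so the total fits a 1000 Mb/s link, and it counts payload only (packet headers and resends also eat into the budget, so leave some headroom in practice). The function names are hypothetical and are not part of VBAI or NI MAX.

```python
def throttle_rates(requested_mbps, link_mbps=1000.0):
    """Scale per-camera rate limits proportionally so their sum fits the link."""
    total = sum(requested_mbps)
    if total <= link_mbps:
        return list(requested_mbps)  # already fits, nothing to throttle
    scale = link_mbps / total
    return [rate * scale for rate in requested_mbps]


def transfer_time_s(image_bytes, rate_mbps):
    """Time to move one image at a given (throttled) rate, payload only."""
    return image_bytes * 8 / (rate_mbps * 1e6)


# Four cameras that each want the full link end up with 250 Mb/s apiece.
limits = throttle_rates([1000.0] * 4)
print(limits)

# The cost: a hypothetical 5 MB image now takes 4x longer,
# even when it is the only camera sending.
print(transfer_time_s(5_000_000, 1000.0), transfer_time_s(5_000_000, 250.0))
```

The proportional rule keeps the cameras' relative priorities; giving urgent cameras a larger share, as the solution below does, is just a different choice of the requested rates.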

Is this a fundamental limitation of GigE Vision, or are GigE Vision cameras smart enough to transfer as fast as possible and recover the occasional lost packet through packet resends?

Nelson

We have found a solution.

The problem is that the cameras, switch, network card and Vision Builder cannot handle every case where more than one camera sends images to Vision Builder at once, unless the total data rate of those cameras never exceeds the 1 Gb/s bandwidth of the network card.

(It would be nice if someone made a network switch with a bit more packet buffer memory, 100 MB perhaps, so that the occasional collision between image bursts did not cause lost packets, while still letting most captures run at full speed.)
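A rough way to size that buffer is a fluid model: if all cameras start sending an image at the same instant into one shared uplink, the switch must hold whatever arrives faster than the uplink drains. The Python sketch below assumes constant sending rates, ignores packet headers and Ethernet flow control, and uses a hypothetical 5 MB image; it is an estimate, not a model of any particular switch.

```python
def peak_backlog_bytes(bursts, uplink_mbps=1000.0):
    """Peak switch queue, in bytes, when every burst starts at t = 0.

    bursts: list of (image_bytes, camera_rate_mbps) pairs.
    Fluid model: camera i sends at camera_rate_mbps until its image is done,
    and the switch drains the shared uplink at uplink_mbps. The cumulative
    arrival curve is concave in time, so the backlog peaks at one of the
    burst end times.
    """
    def bytes_per_s(mbps):
        return mbps * 1e6 / 8

    drain = bytes_per_s(uplink_mbps)
    end_times = [size / bytes_per_s(rate) for size, rate in bursts]
    peak = 0.0
    for t in end_times:
        arrived = sum(min(bytes_per_s(rate) * t, size) for size, rate in bursts)
        peak = max(peak, arrived - drain * t)
    return peak


# Hypothetical 5 MB images, each camera on its own 1 Gb/s link into the switch.
print(peak_backlog_bytes([(5_000_000, 1000.0)] * 2))   # two cameras at full rate
print(peak_backlog_bytes([(5_000_000, 1000.0)] * 20))  # twenty cameras at full rate
print(peak_backlog_bytes([(5_000_000, 500.0)] * 2))    # two cameras throttled to 500 Mb/s
```

Under these assumptions, two cameras bursting together at full rate need about 5 MB of buffer, twenty need about 95 MB (the same order as the 100 MB wished for above), and throttling two cameras to 500 Mb/s each removes the backlog entirely.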

Solution:

(1) We added 3 additional 1 Gb network ports to our vision PC.

(2) To spread the camera traffic across more than one network port, we put each network adapter and its cameras on a different subnet.

(3) We lowered the data rates of the less urgent cameras.
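Steps (1) and (2) amount to balancing cameras across ports so that no single 1 Gb/s port is oversubscribed. The Python sketch below shows one way to plan that; the greedy least-loaded heuristic, the camera names and rates, and the 192.168.x.0/24 addressing plan are all hypothetical and are not necessarily what was done here.

```python
import ipaddress


def assign_cameras(camera_rates_mbps, n_ports, port_mbps=1000.0):
    """Greedily place each camera on the least-loaded NIC port.

    camera_rates_mbps: dict of camera name -> configured rate limit (Mb/s).
    Returns (ports, loads, overloaded): camera names per port, the summed
    rate per port, and the indices of ports whose sum exceeds port_mbps.
    """
    ports = [[] for _ in range(n_ports)]
    loads = [0.0] * n_ports
    # Place the fastest cameras first; this usually balances better.
    by_rate = sorted(camera_rates_mbps.items(), key=lambda kv: kv[1], reverse=True)
    for name, rate in by_rate:
        target = min(range(n_ports), key=lambda p: loads[p])
        ports[target].append(name)
        loads[target] += rate
    overloaded = [p for p, load in enumerate(loads) if load > port_mbps]
    return ports, loads, overloaded


def port_subnets(n_ports, base="192.168.0.0/16"):
    """Hypothetical addressing plan: one distinct /24 subnet per NIC port."""
    subnets = ipaddress.ip_network(base).subnets(new_prefix=24)
    return [str(next(subnets)) for _ in range(n_ports)]


rates = {"cam1": 400, "cam2": 400, "cam3": 300, "cam4": 300, "cam5": 200}  # hypothetical Mb/s
ports, loads, overloaded = assign_cameras(rates, n_ports=2)
for subnet, cams, load in zip(port_subnets(2), ports, loads):
    print(subnet, cams, load)
print("overloaded ports:", overloaded)
```

Each port then gets its own subnet, and every camera on that port is given an address in the same subnet, so the operating system routes its traffic through that adapter.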

Even after lowering the data rates of several cameras in NI MAX to as little as 200 Mb/s, at which point lost packets should no longer have been possible, we still saw them.

After a detailed examination, we found that Vision Builder's image acquisition steps ignore the data rates assigned in NI MAX and always default to the maximum rate of 1000 Mb/s, which saturates the link and causes lost packets.

The solution turned out to be explicitly setting the desired data rate in each Vision Builder image acquisition step, using the Attributes tab. While you're there, you should also check that other critical parameters, such as the packet size, are correct, and update them if not.

After explicitly setting the data rates in every image acquisition step, we ran an image-capture stress test that drove the whole vision process (several programs running at the same time) about 10 times faster than required, and observed no lost packets at all.

Problem solved.

Tags: NI Hardware

Similar Questions

-

Cisco Jabber CDR analysis and reporting: lost packets and other call information show "Null"

Hello

I recently set up Cisco Jabber and made a video call between a Mac and a Windows laptop. In CDR reporting, I can find my call details when I search after the call ends. However, lost packets, jitter and latency all show as Null.

I searched the support forums and made all the required configuration changes, such as enabling call diagnostics in the service parameters. I also unchecked the "Load CDR Only" box, but I still see no information other than the IP address and the VideoCap bandwidth details.

Am I missing something?

No, CMR is not implemented in any current version of Jabber; the next version of Jabber will be the first to have *some* CMR capability.

What you are seeing at the moment is the expected behavior.

-

Hi all

My Prosilica camera failed, so I have just borrowed a Matrix Vision mvBlueCOUGAR-S123c GigE camera.

It does not show up in MAX!

I tried all the usual things: checking the network connections, and connecting the camera both as a standalone device and as part of a network bridge. Windows XP gives it an IP address on the same subnet as the system.

Since I could not find any other threads about this brand of camera, my first question is: is it supported by NI?

If it is supported, any ideas on what to try next would be greatly appreciated.

Thank you very much

Darren

Hey Darren,

As far as I know, this camera is not supported by National Instruments software, and from what I can tell, they have no drivers available on their website. I would recommend using our industrial camera search tool to find compatible cameras.

Industrial Camera Advisor:

-

Frame rate drops when starting to save AVI with Basler GigE cameras

Hi all

I use 2 Basler ace GigE cameras at 60 frames per second to capture animal locomotion, and I randomly run into the following problem. When I start saving videos to the HDD with a start/stop button, the frame rate sometimes drops to 14-20 fps and 2 or 3 frames are skipped. After that, the rate stabilizes at 60 frames per second.

Here are the parameters and specifications of the overall program:

-I use a producer/consumer loop (I have never seen the queue fill up, even when the frame-rate drop occurs)

-I changed the bandwidth setting of the Intel GigE card from 100 to 500 for each camera

-Packet size = 8000

-Firewall disabled

-VI runs at a higher execution priority

I have observed that the frame-rate drop occurs when the VI has been running for a while without any operator interaction (the operator configures other settings on another computer, so the VI only acquires video without saving it).

I have attached the VI.

Thank you for your help

Alex

Hi Eric and Isaac

In the end, the problem was similar to the one Peter Westwood described in his post 'lost GigE frames/buffers'. I found that the problem occurs with LabVIEW 2012; indeed, when I tried LabVIEW 2011, I had no drops at all. So I did the same thing as Peter: "I changed the program so that it keeps a count of the buffer number (incrementing the buffer number on each loop iteration) and passes that buffer number to the Grab VI. It works well, even on the occasions when the acquisition loop is momentarily delayed for up to a second or more, I suspect by Windows flushing the disk buffer to disk or doing some other housekeeping."

So even when the fps drops, the program acquires the right frames, and I do not see any skipped images in the video.
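The buffer-number approach can be illustrated with a small pure-Python simulation. `SimulatedRing` is a hypothetical stand-in for the driver's ring of image buffers, not the IMAQdx API: frames get consecutive buffer numbers, and the consumer asks for each number in turn rather than for "the latest image", so a stall only loses frames if it outlasts the ring's depth, and any loss shows up explicitly as None instead of a silent skip.

```python
class SimulatedRing:
    """Hypothetical stand-in for a driver's ring of `size` image buffers.

    Frames get consecutive cumulative buffer numbers; once the ring wraps,
    the oldest buffer is overwritten. This is not the IMAQdx API.
    """

    def __init__(self, size):
        self.size = size
        self.slots = {}   # cumulative buffer number -> frame
        self.latest = -1  # highest buffer number acquired so far

    def produce(self, frame):
        self.latest += 1
        self.slots[self.latest] = frame
        self.slots.pop(self.latest - self.size, None)  # oldest buffer is reused

    def get_buffer(self, number):
        """Return the frame for `number`, or None if it was overwritten."""
        return self.slots.get(number)


def drain_by_number(ring, next_number):
    """Fetch every buffer from next_number up to the newest one, in order.

    Returns the frames (None marks a buffer that was genuinely lost) and the
    buffer number to request on the next loop iteration.
    """
    frames = []
    while next_number <= ring.latest:
        frames.append(ring.get_buffer(next_number))
        next_number += 1
    return frames, next_number


ring = SimulatedRing(size=16)
for i in range(6):
    ring.produce(f"frame{i}")
frames, n = drain_by_number(ring, 0)      # all six frames; n is now 6
# The loop stalls (e.g. during a disk flush) while 10 more frames arrive.
for i in range(6, 16):
    ring.produce(f"frame{i}")
frames, n = drain_by_number(ring, n)      # frame6 ... frame15, nothing skipped
print(frames)
```

With a ring of 16 buffers, a stall covering 10 frames loses nothing; with only 8 buffers, the two oldest frames of the stall come back as None, which the program can log or count.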

Best regards

Alex

-

Triggering a GigE camera with a hardware trigger

Hello

Here's an overview of what I want to accomplish:

-LabVIEW program starts and waits for frames from the GigE camera

-A hardware trigger fires the GigE camera, and the image is displayed

-A few simple calculations are performed on each image to compute the average pixel value; this average is plotted for each frame

-Repeat the three steps above

Please see the attached VI. I have successfully configured my camera in MAX to wait for an external hardware trigger. However, IMAQdx Grab2.vi inside the While loop only outputs a single image (even though I set the acquisition mode in MAX to Multi-Frame, 255 images).

Any help would be appreciated!

Thank you.

-

External triggering of a GigE camera

Hi, I'm planning to use the camera's trigger input to start capturing images, but I'm not sure what I need to do in my C# application when a trigger fires.

-If the trigger comes from a PLC, should I also send a trigger signal to the PC and hold it active until the PC has acquired the image?

-Do I still need to use the Snap or Grab functions in IMAQdx to get the image?

-If the camera is connected directly to the network card, does external triggering still have major benefits? The camera is less than 5 meters from the PC.

Thank you

Cliff


Hi riscoh,

When you work with a GigE camera, nothing on the computer does the triggering itself; only the camera does. When the camera is configured for triggering, it sits and waits for a trigger. When it receives the signal, it acquires an image and sends the data to the computer. Once you have set up the acquisition on the computer, it waits for images and brings them into the computer as they become available. The example I'm pointing you to shows how to set up the camera for triggering programmatically; it looks like it may be available only in Visual C. As for documentation, the following Developer Zone article briefly describes acquiring with a GigE camera: Acquiring from GigE Vision Cameras with Vision Acquisition Software - Part II

-

Hello

I have two Aviiva IRLS GigE cameras (4K) that I am trying to control from a PC through a dual-port Ethernet card (Intel Pro 1000 PT). The problem is that I cannot always see both cameras in MAX. When I do see both cameras, I am only able to configure one of them; when I try to access the second camera, it says "camera already in use". Often, only a single camera appears in MAX under NI-IMAQdx Devices.

I have also written a snap VI to capture an image from each camera at the same time, and I get a similar error message after the first image is acquired. It seems that LabVIEW is having trouble seeing the two cameras as separate entities or assigning them different IP addresses. Has anyone had this problem, or can anyone suggest a solution?

I use the standard GigE drivers and have tried setting the IP addresses both manually and automatically, all to no avail.

Thanks, Simon

Solved by using only one of the ports on the dual Ethernet card, with an Ethernet switch routing both cameras through that single port. It worked the first time.

-

How to set the FPS value programmatically using NI-IMAQ with a GigE camera?

Hi all

I'm (slowly) learning how LabVIEW works with the GigE cameras I just bought.

At this point, I'm trying to understand how to set the camera's fps value programmatically. So far I have been using the NI-IMAQdx examples to control the cameras, but I am open to trying anything. Does anyone have a good example of how I can change the frame rate on the fly?

My next task will be to find a way to change the size of the image on the screen programmatically, so if anyone has ideas for me on that front, I'd be glad to hear them too.

Thank you very much

FB

I figured it out:

1. Use the IMAQdx Open Camera VI to open the camera.

2. Use "List Attributes" from the NI-IMAQdx palette to see all available attributes.

3. Using step 2, I found the attribute "AcquisitionFrameRateAbs".

4. Create a property node on the output of the Open Camera VI, choose "Camera Attributes", and select "Active Attribute".

5. Set the property node to "Change All to Write".

6. Wire a string constant containing "AcquisitionFrameRateAbs" into the property node's Active Attribute input.

7. Create another "Camera Attributes" property node, this time selecting "Value" and setting the type to DBL.

8. Change All to Write.

9. Create a numeric control for the frame rate.

10. Wire the numeric control to the "ValueDBL" property node.

Presto! You're done.

-

How to achieve high line rates with a DALSA GigE camera

Hello everyone

I have a DALSA Spyder3 GigE line-scan camera, which is specified as a 36 kHz line-scan device. But when I run this camera at its maximum line rate in LabVIEW and display the data using a While loop, I do not reach the specified 36 kHz line rate. To capture all frames, do I have to use a frame grabber, or is there a way to achieve this line rate using another technique in LabVIEW? Please help.

Regards

Charles

Hi Charles,

I realize that this is a line-scan camera. However, the Spyder3 can buffer multiple lines into a single image. This is very important with GigE Vision because there is a certain amount of per-image overhead, which would be very significant at the rates you want. By default the camera should give you more than one line per image, so you must have manually configured it for a smaller number. If you have configured it for one line per image, then you are probably dropping frames in the acquisition or in your processing loop, both of which would struggle to keep up with 36 kHz rates. However, if you acquire a hundred or a thousand lines per image, the overhead becomes trivial and you should have no problem reaching your desired line rate.
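The per-image overhead argument is easy to check with arithmetic. In this Python sketch the 100 microsecond fixed cost per image is a hypothetical figure chosen only for illustration; the 36 kHz line rate comes from the question.

```python
def images_per_second(line_rate_hz, lines_per_image):
    """How many images per second the camera delivers at a given line rate."""
    return line_rate_hz / lines_per_image


def time_per_image_s(line_rate_hz, lines_per_image):
    """How long the camera takes to fill one image at the given line rate."""
    return lines_per_image / line_rate_hz


def overhead_fraction(line_rate_hz, lines_per_image, per_image_overhead_s):
    """Share of each image period consumed by a fixed per-image cost.

    Values >= 1.0 mean the acquisition/processing loop cannot keep up.
    """
    return per_image_overhead_s / time_per_image_s(line_rate_hz, lines_per_image)


# Hypothetical 100 microseconds of fixed cost per image (driver + loop iteration).
for lines in (1, 100, 1000):
    print(lines, images_per_second(36_000, lines),
          round(overhead_fraction(36_000, lines, 100e-6), 4))
```

With one line per image there is only 1/36,000 s (about 28 microseconds) per image, so the assumed fixed cost alone is 3.6 times the time available; at 1,000 lines per image the same cost is under 0.4% of each image period.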

Eric

-

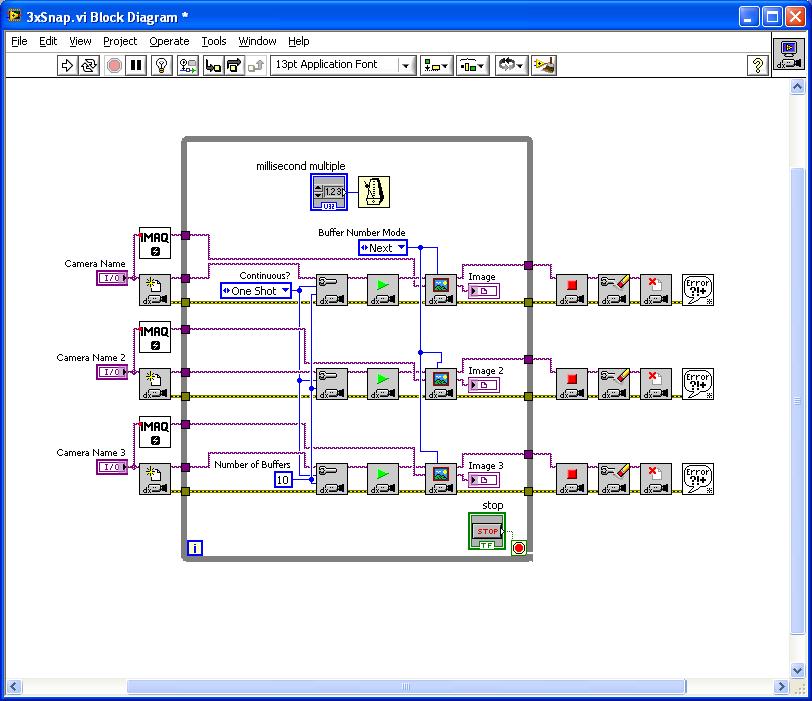

Hi all,

I am using LV 8.6 and a PCIe-8235 card with 3 GigE cameras (Prosilica 780c) on a PC running XP SP3 with the latest vision drivers. My application requires the PC to receive an external trigger from a sensor through a PCIe-6320 card; the trigger is then used to enter a loop. In the loop, an image is captured and processed before the loop exits to check again for a trigger. The main point of the loop is that the image must be acquired as soon as possible after the trigger is received, and consistently so.

When I used the "continuous" configuration with "get image" set to next at a frame rate of 60 Hz, the image could be taken up to 1/60 s after the trigger. Using 'one shot' mode worked perfectly with a single camera but failed with 3: when running 3 cameras with a simple VI, a certain number of images would be acquired before the VI randomly hit a delay of exactly 5 seconds in "get image". I have worked around this by placing 'configure acquisition' outside the loop with the mode set to "continuous". I played with many of the device settings in MAX, including bandwidth, exposure times, packet sizes, etc.; nothing worked.

Does anyone have ideas as to why this happens, and is my solution OK?

Thanks

Darren

Darren,

The default Timeout attribute in IMAQdx is 5000 milliseconds, which matches the exact 5-second delay you are seeing in "get image".

Are you triggering the cameras with a hardware trigger? If so, you must make sure the triggers are synchronized with when you configure and start the cameras.

Eric

-

GPS chip that can locate a lost or stolen camera?

Help me find my camera. Is there a GPS tracker in EOS cameras that can locate a lost or stolen device?

Hi raziel2003!

Thanks for the post.

While some newer models have built-in GPS, it cannot be activated remotely if the camera is lost or stolen.

Didn't this answer your question? Contact us for more help.

-

Acquiring GigE camera data in LabVIEW with a CIN or a DLL call

I am trying to acquire data from a Basler runner line-scan CCD camera (GigE).

Because the NI Vision Development Module is not free, and the camera comes with C++ and C APIs as well as some examples, I plan to use a CIN or a DLL call in LabVIEW to do this. Is this possible?

I tried to generate a DLL from the camera vendor's example code but ran into difficulties:

I have a little background in C++ but am not very familiar with it. The camera's C++ example consists of a source code file and a .cproj file, which depends on other files in the camera API directory.

If I build the project directly, it creates a Windows application, not a DLL. I don't know how to convert it into a DLL project, given that the .cproj file contains other information besides the source code, such as dependencies.

Can someone help me with this?

Don't forget that to acquire from a GigE camera you only need the Vision Acquisition Software, not the entire Vision Development Module. Vision Acquisition is much lower priced and is also included free with current NI Vision hardware (for example, when you purchase a PCIe-8231 GigE Vision card). You only need the Vision Development Module if you want to use its pre-built image processing functions. If you are just displaying images, saving them to disk, or processing them with your own code (for example, by manipulating the image pixels as an array), you can do so with Vision Acquisition alone.

While it is certainly possible to call DLL functions from LabVIEW using a Call Library Function Node, it would be quite a lot of work unless you are very familiar with C/C++. Since their driver interface is C++, you would need to write C wrapper functions in a DLL of your own. Depending on how many functions you want to expose, this could be a fair amount of work.

Eric

-

I know that we can now acquire from multiple cameras in VBAI (thanks!). However, in my view, doing so leads to very complex control sequences. It doesn't look like VBAI has fully embraced parallelism for anything other than FTP transfers. What should I do if I have 5 GigE cameras that each need to be triggered, and two of them may be triggered at the same time? Can I run multiple instances of VBAI on a single PC (hey, it's multi-core)? I predict the current answer is to use LabVIEW, but VBAI is essentially LabVIEW... right?

I want to keep only simple, single-camera inspections on the PC. Running multiple instances of VBAI would let me dedicate one instance to each camera without worrying about what's happening on the other 4 (or more).

No, I have not tried it yet. I am currently out of town and away from my vision lab. I thought that if this is possible, it is worth mentioning to the public, and if it is not, it is worth noting for R&D. The system I'm imagining would be a future version of a system we just finished, where we had 4 NI Smart Cameras and one GigE camera. The question is what to do if all 5 are GigE cameras. Don't worry, we still love the Smart Cameras!

On a validation streak,

Dan Press

Hey Dan,

Good question! VBAI supports acquiring from several cameras, even if they are triggered at the same time. The acquisition step has a flag that lets you specify whether to wait for the next image or acquire immediately. So, in the simple case of two cameras triggered at the same time, you would have an initial state that tries to acquire from camera 1; if it times out, it loops back and tries again with the wait-for-next-image flag. Once an image is acquired, you know that the other camera was also triggered, so you transition to another state that acquires from camera 2 with the immediate flag, since you know the image is already there. You can use the Select Image step to switch between the two images, so it is possible, but not necessarily the cleanest approach. Even if you ran two VBAIs simultaneously, synchronizing them is not trivial: you would need to make sure you weren't inspecting imageN from camera1 with imageN+1 from camera2, though perhaps this would not be a concern for your system. In any case, we don't currently support multiple VBAI inspections running simultaneously on one system, but it is certainly a feature we are aware of.
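The two-state approach described above can be sketched as a tiny simulation. `SimCamera` and the state names are hypothetical, not VBAI steps; `acquire()` returning None stands in for a timeout in wait-for-next-image mode, and the frame IDs make it easy to check that camera 1 and camera 2 images from the same trigger stay paired.

```python
from collections import deque


class SimCamera:
    """Hypothetical triggered camera; not a VBAI or IMAQdx API.

    acquire() returns the oldest image waiting in the driver, or None to
    model a timeout when nothing has arrived yet.
    """

    def __init__(self, name):
        self.name = name
        self.pending = deque()

    def trigger(self, frame_id):
        self.pending.append((self.name, frame_id))

    def acquire(self):
        return self.pending.popleft() if self.pending else None


def run_state_machine(cam1, cam2, iterations):
    """Two-state loop: wait on camera 1, then grab camera 2 immediately."""
    state = "WAIT_CAM1"
    img1 = None
    pairs = []
    for _ in range(iterations):
        if state == "WAIT_CAM1":
            img1 = cam1.acquire()      # 'wait for next image'; None = timeout, loop back
            if img1 is not None:
                state = "GRAB_CAM2"     # camera 2 fired on the same trigger
        else:
            img2 = cam2.acquire()      # 'immediate': image should already be there
            pairs.append((img1, img2))  # None here would mean camera 2 never fired
            state = "WAIT_CAM1"
    return pairs


cam1, cam2 = SimCamera("cam1"), SimCamera("cam2")
for frame_id in range(2):              # each shared trigger fires both cameras
    cam1.trigger(frame_id)
    cam2.trigger(frame_id)
pairs = run_state_machine(cam1, cam2, iterations=6)
# A pair whose frame IDs differ would mean imageN from camera 1 was
# inspected with imageN+1 from camera 2.
print(all(a[1] == b[1] for a, b in pairs))
```

A stale image left in camera 2's queue would break the pairing, which is the synchronization risk mentioned above; checking frame IDs (or timestamps) is one way to catch it.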

Thanks for your validation streak,

Brad

-

Several cameras simultaneously in LabVIEW

Hello

I'm new to LabVIEW programming, so it would really help if you could help me...

I have an Embedded Vision System (EVS) and 2 GigE cameras, and I have developed an inspection program using Vision Builder that works perfectly for what I need. The thing is, I have to run both cameras at the same time, as two simultaneous tasks, and I learned (from support) that this is impossible to do with Vision Builder. So I now have to do all this work in LabVIEW...

At the moment I can't use the EVS, so I'm testing with a CVS... I am using a scA640-70fm and a Basler A631f, both IEEE 1394 cameras. So here is my question:

To make both cameras work at the same time, is it enough to create two simple While loops and put each camera's Vision Acquisition function inside them? Is it supposed to work this way?

Because when I do this and run it, I don't see the two cameras running at the same time on the LabVIEW front panel! It seems odd: one camera acquires images and then the other, and images sometimes disappear... it looks like it does not process the images at the same time.

Am I doing something wrong? Should I use another approach to make it work the way I need?

Will I have problems when I do this with my GigE cameras on the EVS?

Thank you so much in advance...

You are correct that Vision Builder AI is sequential in how it runs the individual steps. The algorithms within those steps can take advantage of multiple cores to run faster on machines with more than one core, so in that sense some steps may use multiple cores, but several steps cannot run at the same time. This does not prevent several images acquired from the same trigger from being processed. The acquisition steps support an immediate mode, which means that if two cameras were triggered at the same time, even though the steps run in order, the images they return can be from the same moment in time, and you can use the Select Image step to switch to the image you want to process. It would be worth benchmarking your inspection in VBAI on the EVS (which is much faster than the CVS) before moving to a more complicated parallel architecture in LabVIEW with several loops executing simultaneously.

One reason your earlier test with several loops may not have worked is that you may have given the images you created the same name. That means the two acquisition loops would be overwriting each other's images. Make sure that when you use IMAQ Create, you provide a unique name for each image. Another suggestion for making sure LabVIEW schedules each loop on a separate processor is to put the loops in two subVIs configured for different execution systems. You can set this by going to File > VI Properties > Execution > Preferred Execution System on each VI and selecting different systems.
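The image-name collision can be modeled in a few lines of Python. This is a hypothetical model of the behavior described above (reusing a name gives you the same image buffer), not NI Vision code; the dictionary plays the role of the named-image store.

```python
def create_image(registry, name):
    """Hypothetical model of a named image store: the same name returns the
    same buffer, which is how two loops can end up sharing one image."""
    return registry.setdefault(name, {"name": name, "pixels": None})


def acquire_both(registry, name_cam1, name_cam2):
    """Each 'loop' writes its camera's frame, then camera 1's image is read back."""
    img1 = create_image(registry, name_cam1)
    img2 = create_image(registry, name_cam2)
    img1["pixels"] = "frame from camera 1"
    img2["pixels"] = "frame from camera 2"  # clobbers img1 if the names match
    return img1["pixels"]


print(acquire_both({}, "Image", "Image"))                  # frame from camera 2
print(acquire_both({}, "Camera1 Image", "Camera2 Image"))  # frame from camera 1
```

With a shared name, camera 1's loop reads back camera 2's frame, which matches the symptom of images "disappearing" or appearing to come from the wrong camera.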

Certainly, you should be able to run several loops in parallel in LabVIEW, but before jumping to this approach it may be worth benchmarking the VBAI solution first, because it makes changes to the inspection easier and it provides high-level steps that don't require you to worry about things like unique image names.

The comment above about For Loop parallelism is useful if you want the same code to run in parallel, which you can't do here because you're acquiring from different cameras. It would also make handling the results from the different cameras, and distinguishing which result goes with which camera, more difficult. That's why I recommended running subVIs in different execution systems as a more flexible way to make sure you take advantage of both processors.

Hope this helps,

Brad

-

I have an early 2011 MacBook Pro running El Capitan 10.11.4, and some of the apps that came with it were lost when my hard drive crashed. Now Apple wants to charge me to reinstall them.

Even if it's not a lot of money, it's the principle that matters. I think a company worth billions of dollars should be able to provide a free download of an application that originally came with the computer, instead of charging you for it.

Does anyone have suggestions on how I can recover the apps for free?

Help, please.

What steps have you taken to try to reinstall these applications?
