Configuring archive log destinations in a RAC environment

I enabled archiving when I installed RAC, so the archive logs are currently kept in the ASM disk group +DATA.

I need to change the archive log destination to a local drive on each node.

RAC1 should archive its redo to its own local disk, and RAC2 likewise.

What procedure should I follow?

SQL> show parameter log_archive_dest_state_1

NAME                                 TYPE        VALUE
------------------------------------ ----------- ------------------------------
log_archive_dest_state_1             string      enable

SQL> alter system set log_archive_dest_1='LOCATION=/RAC1' sid='RAC1';

SQL> alter system set log_archive_dest_1='LOCATION=/RAC2' sid='RAC2';

Then:

SQL> alter system archive log current;

Then check for the archived log files under /RAC1/* on node RAC1 and /RAC2/* on node RAC2.

That said, you should keep the archive log files on shared storage; that makes backing up and recovering the database easier.
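To confirm the change took effect, a quick check along these lines may help. This is a minimal sketch rather than part of the original reply: it assumes your user can query GV$PARAMETER and V$ARCHIVED_LOG, and the one-hour window is an arbitrary choice.

```sql
-- Effective value of LOG_ARCHIVE_DEST_1 on every running instance.
SELECT inst_id, value AS log_archive_dest_1
FROM   gv$parameter
WHERE  name = 'log_archive_dest_1'
ORDER  BY inst_id;

-- Archived logs completed in the last hour (window is an assumption),
-- listed per redo thread with the file name each one was written to.
SELECT thread#, sequence#, name, completion_time
FROM   v$archived_log
WHERE  completion_time > SYSDATE - 1/24
ORDER  BY thread#, sequence#;
```

Each thread's NAME column should show a path under that node's own local directory.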

Similar Questions

-

Archive logging basics for RAC on ASM

Hello

I have a question about archive logging for an ASM-based database on RAC. I created a database, orcl, which has instance orcl1 on node1 and orcl2 on node2. To back up this database, I put it in archivelog mode.

After a few transactions and backups, I noticed that each node has its own set of archive logs in the $ORACLE_HOME/dbs folder. On node 1 the files start with arch1_*, and on node 2 with arch2_*.

Why does it create archive logs on local disks, when ideally it should create them on the ASM disks that are shared between the nodes? My backup application fails with an "archive log not found" error, because it looks for archive logs that are on the other node.

Any input on this would be helpful.

Amith

Hello,

I believe an archive destination is missing from your database configuration, so Oracle is using the default location (i.e. your "$ORACLE_HOME/dbs").

The archive logs must go to a shared location.

You need to configure the parameters below:

SQL> show parameter db_recovery_file

NAME                                 TYPE        VALUE
------------------------------------ ----------- ------------------------------
db_recovery_file_dest                string
db_recovery_file_dest_size           big integer

Or the default location setting:

SQL> show parameter log_archive_dest

NAME                                 TYPE        VALUE
------------------------------------ ----------- ------------------------------
log_archive_dest                     string

Why does it create archive logs on local disks, when ideally it should create them on the ASM disks that are shared between the nodes? My backup application fails with an "archive log not found" error, because it looks for archive logs that are on the other node.

To resolve this problem see this example:

SQL> show parameter recover

NAME                                 TYPE        VALUE
------------------------------------ ----------- ------------------------------
db_recovery_file_dest                string
db_recovery_file_dest_size           big integer 1

SQL> archive log list;
Database log mode              Archive Mode
Automatic archival             Enabled
Archive destination            /u01/app/oracle/product/10.2.0/db_1/dbs/
Oldest online log sequence     2
Next log sequence to archive   3
Current log sequence           3
SQL>
SQL> alter system set db_recovery_file_dest_size=20G scope=both sid='*';

System altered.

SQL> alter system set db_recovery_file_dest='+FRA' scope=both sid='*';

System altered.

SQL> archive log list;
Database log mode              Archive Mode
Automatic archival             Enabled
Archive destination            USE_DB_RECOVERY_FILE_DEST
Oldest online log sequence     5
Next log sequence to archive   6
Current log sequence           6
SQL>

With RMAN

RMAN> CONFIGURE CHANNEL 1 DEVICE TYPE DISK CONNECT 'sys/oracle@db10g1';
new RMAN configuration parameters:
CONFIGURE CHANNEL 1 DEVICE TYPE DISK CONNECT '*';
new RMAN configuration parameters are successfully stored
starting full resync of recovery catalog
full resync complete

RMAN> CONFIGURE CHANNEL 2 DEVICE TYPE DISK CONNECT 'sys/oracle@db10g2';
new RMAN configuration parameters:
CONFIGURE CHANNEL 2 DEVICE TYPE DISK CONNECT '*';
new RMAN configuration parameters are successfully stored
starting full resync of recovery catalog
full resync complete

RMAN> list archivelog all;
using target database control file instead of recovery catalog

List of Archived Log Copies
Key     Thrd Seq     S Low Time  Name
------- ---- ------- - --------- ----
1       1    3       A 28-FEB-11 /u01/app/oracle/product/10.2.0/db_1/dbs/arch1_3_744216789.dbf
2       2    2       A 27-FEB-11 /u01/app/oracle/product/10.2.0/db_1/dbs/arch2_2_744216789.dbf

RMAN> crosscheck archivelog all;
allocated channel: ORA_DISK_1
channel ORA_DISK_1: sid=127 instance=db10g1 devtype=DISK
allocated channel: ORA_DISK_2
channel ORA_DISK_2: sid=135 instance=db10g2 devtype=DISK
validation succeeded for archived log
archive log filename=/u01/app/oracle/product/10.2.0/db_1/dbs/arch1_3_744216789.dbf recid=1 stamp=744292116
Crosschecked 1 objects

validation succeeded for archived log
archive log filename=/u01/app/oracle/product/10.2.0/db_1/dbs/arch2_2_744216789.dbf recid=2 stamp=743939327
Crosschecked 1 objects

RMAN> backup archivelog all delete input;
Starting backup at 28-FEB-11
current log archived
using channel ORA_DISK_1
using channel ORA_DISK_2
channel ORA_DISK_1: starting archive log backupset
channel ORA_DISK_1: specifying archive log(s) in backup set
input archive log thread=1 sequence=3 recid=1 stamp=744292116
channel ORA_DISK_1: starting piece 1 at 28-FEB-11
channel ORA_DISK_2: starting archive log backupset
channel ORA_DISK_2: specifying archive log(s) in backup set
input archive log thread=2 sequence=2 recid=2 stamp=743939327
channel ORA_DISK_2: starting piece 1 at 24-FEB-11
channel ORA_DISK_1: finished piece 1 at 28-FEB-11
piece handle=+FRA/db10g/backupset/2011_02_28/annnf0_tag20110228t120354_0.265.744293037 tag=TAG20110228T120354 comment=NONE
channel ORA_DISK_1: backup set complete, elapsed time: 00:00:02
channel ORA_DISK_1: deleting archive log(s)
archive log filename=/u01/app/oracle/product/10.2.0/db_1/dbs/arch1_3_744216789.dbf recid=1 stamp=744292116
channel ORA_DISK_2: finished piece 1 at 24-FEB-11
piece handle=+FRA/db10g/backupset/2011_02_24/annnf0_tag20110228t120354_0.266.743940249 tag=TAG20110228T120354 comment=NONE
channel ORA_DISK_2: backup set complete, elapsed time: 00:00:03
channel ORA_DISK_2: deleting archive log(s)
archive log filename=/u01/app/oracle/product/10.2.0/db_1/dbs/arch2_2_744216789.dbf recid=2 stamp=743939327
channel ORA_DISK_1: starting archive log backupset
channel ORA_DISK_1: specifying archive log(s) in backup set
input archive log thread=1 sequence=4 recid=4 stamp=744293023
input archive log thread=2 sequence=3 recid=3 stamp=743940232
channel ORA_DISK_1: starting piece 1 at 28-FEB-11
channel ORA_DISK_1: finished piece 1 at 28-FEB-11
piece handle=+FRA/db10g/backupset/2011_02_28/annnf0_tag20110228t120354_0.267.744293039 tag=TAG20110228T120354 comment=NONE
channel ORA_DISK_1: backup set complete, elapsed time: 00:00:02
channel ORA_DISK_1: deleting archive log(s)
archive log filename=+FRA/db10g/archivelog/2011_02_28/thread_1_seq_4.264.744293023 recid=4 stamp=744293023
archive log filename=+FRA/db10g/archivelog/2011_02_24/thread_2_seq_3.263.743940231 recid=3 stamp=743940232
Finished backup at 28-FEB-11

Starting Control File and SPFILE Autobackup at 28-FEB-11
piece handle=+FRA/db10g/autobackup/2011_02_28/s_744293039.263.744293039 comment=NONE
Finished Control File and SPFILE Autobackup at 28-FEB-11

SQL> alter system archive log current;

System altered.

RMAN> list archivelog all;
using target database control file instead of recovery catalog

List of Archived Log Copies
Key     Thrd Seq     S Low Time  Name
------- ---- ------- - --------- ----
5       1    5       A 28-FEB-11 +FRA/db10g/archivelog/2011_02_28/thread_1_seq_5.264.744293089
6       2    4       A 24-FEB-11 +FRA/db10g/archivelog/2011_02_24/thread_2_seq_4.268.743940307

RMAN> CONFIGURE CHANNEL 1 DEVICE TYPE DISK CLEAR;
old RMAN configuration parameters:
CONFIGURE CHANNEL 1 DEVICE TYPE DISK CONNECT '*';
old RMAN configuration parameters are successfully deleted

RMAN> CONFIGURE CHANNEL 2 DEVICE TYPE DISK CLEAR;
old RMAN configuration parameters:
CONFIGURE CHANNEL 2 DEVICE TYPE DISK CONNECT '*';
old RMAN configuration parameters are successfully deleted

RMAN> exit

Recovery Manager complete.

Kind regards

Levi Pereira

Published by: Levi Pereira on February 28, 2011 12:16

-

Purging archive logs on the primary and standby with RMAN in Data Guard

Hi, I have seen a couple of commands for purging archive logs in a Data Guard configuration:

CONFIGURE ARCHIVELOG DELETION POLICY TO SHIPPED TO ALL STANDBY;

CONFIGURE ARCHIVELOG DELETION POLICY TO APPLIED ON ALL STANDBY;

Q1. Do the above remove archive logs only on the primary, or on both the primary and the standby?

Q2. If the above delete archive logs on the primary, are the logs actually removed immediately, or are they left for the FRA to delete when space is needed?

I also saw

CONFIGURE ARCHIVELOG DELETION POLICY TO SAVED;

Q3. What does the above do, and again, is it something you set on the primary side?

I saw the following advice in the Data Guard Concepts and Administration manual:

Configure the DB_UNIQUE_NAME in RMAN for each database (primary and standby) so that RMAN can connect to it remotely.

Q4. Why would I want my primary to connect to the RMAN repository (I use the local control file) of the standby? (Is this so that I can set RMAN configuration settings for the standby?)

Q5. Should I work only with the RMAN repository on the primary, or should I also set things in the RMAN repositories (i.e. control files) of the standby?

Q6. If I have a physical standby (usually mounted but not open), am I able to connect to its own local RMAN repository (i.e. control file based) while the standby is only mounted?

Q7. Similarly, if I have a logical standby (i.e. effectively read-only), can I connect to the standby's local RMAN repository?

Q8. What is the most common way to schedule an RMAN backup in such an environment, e.g. cron, the OEM scheduler, or DBMS_SCHEDULER? My instinct is a cron script, since the OEM scheduler requires the OEM service to be running and DBMS_SCHEDULER requires the database to be running.

Any idea greatly appreciated,

Jim

Do the above remove archive logs only on the primary, or on both the primary and the standby?

When you CONFIGURE a deletion policy, the configuration applies to all archiving destinations, including the flash recovery area. BACKUP ... DELETE INPUT and DELETE ... ARCHIVELOG obey this configuration, as does the flash recovery area.

You can also CONFIGURE an archived redo log deletion policy so that logs are eligible for deletion only after being applied on, or transferred to, standby database destinations.

If the above delete archive logs on the primary, are the logs actually removed immediately, or are they left for the FRA to delete when space is needed?

It's a configuration; it will not delete anything by itself.

If you want to use the FRA for automatic removal of archive logs on a physical standby database, do this first:

1. Make sure DB_RECOVERY_FILE_DEST is set to the FRA (show parameter DB_RECOVERY_FILE_DEST) and that DB_RECOVERY_FILE_DEST_SIZE is set.

2. Set the RMAN policy on both the primary and the standby: CONFIGURE ARCHIVELOG DELETION POLICY TO APPLIED ON ALL STANDBY;

If you want to keep archive logs longer, you can control how long they are kept by adjusting the size of the FRA.
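To see how much room the FRA currently has, and which RMAN settings are in force, something like the following could be run on each database. It is only a sketch: it assumes access to V$RECOVERY_FILE_DEST and V$RMAN_CONFIGURATION, and as far as I know the second view lists only settings that were explicitly changed from their defaults.

```sql
-- FRA quota, current usage, and space that Oracle may reclaim when needed.
SELECT name,
       ROUND(space_limit       / 1024 / 1024 / 1024, 1) AS limit_gb,
       ROUND(space_used        / 1024 / 1024 / 1024, 1) AS used_gb,
       ROUND(space_reclaimable / 1024 / 1024 / 1024, 1) AS reclaimable_gb,
       number_of_files
FROM   v$recovery_file_dest;

-- Persistent RMAN settings stored in the control file,
-- including any archivelog deletion policy that has been configured.
SELECT name, value
FROM   v$rman_configuration;
```

Archive logs that the deletion policy considers eligible count towards the reclaimable figure, so a larger limit means they stay around longer.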

Great example:

http://emrebaransel.blogspot.com/2009/03/delete-applied-archivelogs-on-standby.html

Everything Oracle-related there is worth a look:

http://emrebaransel.blogspot.com/

What does the above do, and again, is it something you set on the primary side?

I would never use it. I have always set it this way:

CONFIGURE ARCHIVELOG DELETION POLICY TO APPLIED ON ALL STANDBY;

I would only use 'BACKED UP' for the Data Guard system. Usually it tells Oracle how many times a log must be backed up before it can be removed.

Why would I want my primary to connect to the RMAN repository?

Because if you fail over, you want to be able to run backups there, too.

Also, if it stays in that role for a while, you will want to.

Should I work only with the RMAN repository on the primary, or should I also set things in the RMAN repositories (i.e. control files) of the standby?

Always use an RMAN catalog database with Data Guard.

If I have a physical standby (usually mounted but not open), am I able to connect to its own local RMAN repository (i.e. control file based) while the standby is only mounted?

Same answer as 5, use a catalog database.

Similarly, if I have a logical standby (i.e. effectively read-only), can I connect to the standby's local RMAN repository?

Same answer as 5, use a catalog database.

What is the most common way to schedule an RMAN backup in such an environment, e.g. cron, the OEM scheduler, or DBMS_SCHEDULER? My instinct is a cron script, since the OEM scheduler requires the OEM service to be running and DBMS_SCHEDULER requires the database to be running.

I think cron is still the most common, but they all work. I like cron because even when the database has a problem, the job still runs and reports its results.

Best regards

mseberg

Summary

Always use a catalog database with RMAN.

Always use the FRA with RMAN.

Always set the deletion policy to «APPLIED ON ALL STANDBY».

DB_RECOVERY_FILE_DEST_SIZE determines how long to keep the archives with this configuration.

Post edited by: mseberg

-

Hi Oracle Community,

I have a few databases (11.2.0.4 POWER 2 block and 12.1.0.2) with Data Guard. I back up these databases with RMAN to tape, only on the primaries.

During the backup, I sometimes get RMAN-08137: WARNING: archived log not deleted, needed for standby or upstream capture process

Because of this warning, the overall backup status is "COMPLETED WITH WARNINGS". Is it possible to suppress this warning, or to change the RMAN configuration so that it does not appear?

Regards

J

What is the problem with these warnings? If you do not want to see them, why not simply delete the archive logs in a separate step? For example:

RMAN> backup database plus archivelog;

RMAN> delete noprompt archivelog all completed before 'sysdate-1';
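Since RMAN-08137 means the standby still needs some of those logs, it may be worth checking how far apply has progressed before deleting anything. The query below is a rough sketch to run on the primary; it assumes the standby destination is recorded in V$ARCHIVED_LOG with STANDBY_DEST = 'YES', and the APPLIED column on the primary can lag behind the real state of the standby.

```sql
-- Run on the primary: highest sequence per thread that has been
-- shipped to a standby destination and is reported as applied there.
SELECT thread#, MAX(sequence#) AS last_applied_seq
FROM   v$archived_log
WHERE  standby_dest = 'YES'
AND    applied = 'YES'
GROUP  BY thread#
ORDER  BY thread#;
```

Logs with a sequence above the reported value for their thread are the ones RMAN is likely to refuse to delete.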

-

Need help designing 2 EBS environments with RAC

Hi all

We currently have 4 servers, each with 256 GB of RAM, a 1 TB HDD and 8 cores. Our requirement is to configure two EBS 12.1.3 environments with RAC databases. We want to use these 4 servers to host both EBS environments, since the server specification (2 application servers with HA and 2 DB servers for RAC) is quite high and the concurrent users on each EBS are no more than 200.

So our problem is as below:

Say we have these 4 servers: A, B, C and D. We have created 1 multi-node instance, with the DB on servers A & B (RAC) and the application on servers C & D. Now, if we want to install the second instance with the same architecture on the same servers, what should we do?

(1) Use the same grid infrastructure and create the DB for the 2nd instance in the same cluster. (How?)

(2) Install another grid infrastructure and create a completely separate cluster for this instance.

You can do the following for one EBS instance, and perform similar steps for the 2nd on the same grid. Check the link below; it has detailed information on setting up EBS on RAC.

Install EBS R12 on 11g RAC Oracle ASM

High level steps are:

- Install and configure Grid Infrastructure on the 2-node cluster.

- Create the ASM disk groups DATA and FRA with sufficient size.

- Install the database software home on this cluster.

- Install EBS using Rapid Install on database node 1 (out-of-the-box non-RAC installation on one node).

- Install EBS using Rapid Install on the application nodes.

- Convert the 11gR1 non-RAC database to a RAC database.

-

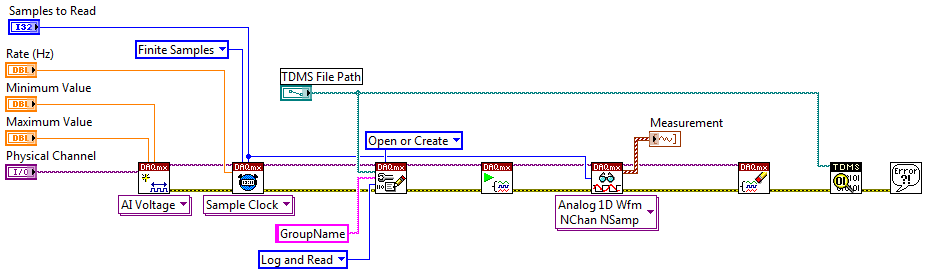

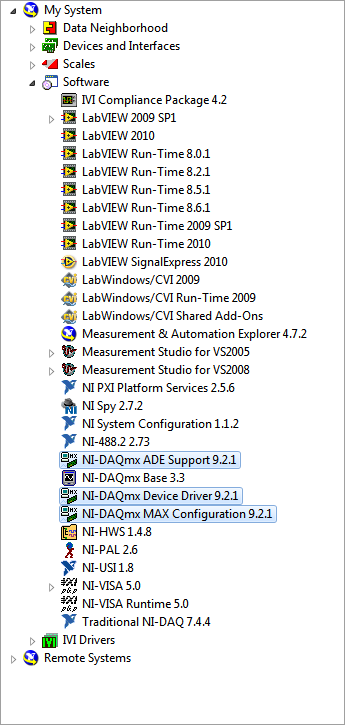

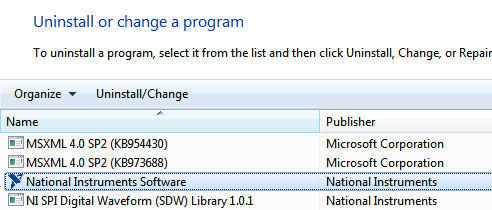

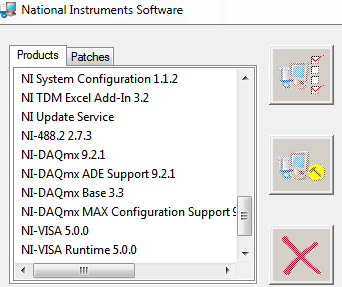

"DAQmx Configure Logging" found in Labview 2009

Dear Sir or Madam

I have LabVIEW version 9.0f3 installed, and Measurement & Automation Explorer version 4.60f1 installed.

Do I need to update anything to run the attached VI?

Problem:

As mentioned in this article: http://zone.ni.com/devzone/cda/tut/p/id/9574

"NI-DAQmx 9.0 installs a new DAQmx Configure Logging VI". However, I can't find this VI in my palette, and the icon appears as a question mark.

There are two ways to check your version of DAQmx:

(1) Using Measurement & Automation Explorer

(2) Using Add/Remove Programs

My guess is that you are using DAQmx 8.9.5 and need to upgrade to 9.0 or later.

Best regards

-

Linear encoder and Configure Logging

Hi all

I am currently using LV2010 with an M Series PCI-6280 board.

I have a linear encoder in X4 mode connected to counter 0, and I was wondering whether it is possible to stream the counter values, as the counter tracks the distance, to a TDMS file using 'DAQmx Configure Logging'. I tried to set this up using MAX, but it doesn't seem to work, so I wonder whether it is possible at all, since I couldn't find anything online about it.

Thank you

Lester

Hi Lester,

My apologies, I tried it on a different board. It may work with yours as well. There is an example of a buffered counter task that should let you save the data by adding the Configure Logging VI as we did before.

Open LabVIEW and select Help > Find Examples... to open the Example Finder. In the Example Finder, expand the folders to Hardware Input and Output > DAQmx > Counter Measurements > Count Digital Events > Count Digital Events-Buffered-Continuous-Ext Clk.vi

Enter the correct counter channel and PFI channel in the controls, then run the VI to see what it does. You can then modify the block diagram to add the Configure Logging VI (just as before) and rerun the VI. You will end up with a TDMS log saved to the path you wire into the Configure Logging VI.

I hope this helps.

Kind regards

Daniel H.

-

Delete logs archive > 1 day

Hi all

9i

RHEL5

I posted a thread here on how to remove archive logs older than 1 day on both the PRIMARY and standby databases.

But I can't find it anymore.

Is it possible to search all the content of my threads using the keyword "delete archive logs"?

Thank you all,

JC

Hello;

Your old thread:

Remove the archivelogs and old backups

Best regards

mseberg

-

Major differences in database, listener, and processes between Exadata and a normal RAC environment?

I would like input on the major differences in the database, listener, and processes on Exadata compared with a normal RAC environment.

I now know that Exadata has not only SCAN listeners but many other listeners. Can an expert here provide clarification?

Thank you

All the right questions... Welcome to the world of Exadata.

These are questions that could get into a lot more detail and discussion than a forum post. At a high level, you certainly don't want to drop all indexes on Exadata. However, your indexing needs and indexing strategy will change on Exadata. After you move a database from a non-Exadata environment to Exadata, you are probably over-indexed. Indexes used for true OLTP transactions, looking up one or a few rows among many, will usually still be faster with an index. Indexes used to skip a percentage of the records while still returning a large number of records can often be dropped. Which indexes to keep depends on the nature of your workload and your application. If you have control over the indexes, test your queries and DML with the index(es) invisible. Check your execution plan, the io_cell_offload columns in v$sql, and the smart scan wait events to make sure you are getting smart scans, and see whether the smart scan is faster than using the index(es). Real-Time SQL Monitoring is an excellent tool to help with this; use dbms_sqltune or Grid/Cloud Control.
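The invisible-index test described above could look roughly like this. The index name orders_status_ix is hypothetical, and the sketch assumes an 11g or later database where V$SQL exposes the io_cell_offload columns.

```sql
-- Hypothetical index name: hide it from the optimizer without dropping it.
ALTER INDEX orders_status_ix INVISIBLE;

-- After rerunning the workload, look for statements that were eligible
-- for cell offload (smart scan) and compare their elapsed times.
SELECT sql_id,
       io_cell_offload_eligible_bytes,
       io_interconnect_bytes,
       elapsed_time
FROM   v$sql
WHERE  io_cell_offload_eligible_bytes > 0
ORDER  BY elapsed_time DESC;

-- Restore the index if the smart scan turns out to be slower.
ALTER INDEX orders_status_ix VISIBLE;
```

Making the index invisible keeps it maintained by DML, so switching back is immediate.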

Parallelism is a great tool to further speed up queries and direct path load operations, and it can help prompt smart scans. However, the use of parallelism really depends on your workload and must be controlled using DBRM and the parallel init parameters, possibly with parallel statement queuing, so that it does not overwhelm your system and cause concurrency problems.

If you have a mixed-workload environment or consolidate databases on Exadata, then in my opinion IORM plans should definitely be implemented.

-

Question: We have configured vSphere Replication in our environment, and whenever replication is triggered according to the RPO we start seeing the issues below on the virtual machines that are configured for replication.

ESXi version: 5.1, patch level 7

vCenter version: 5.1

Source of storage - IBM v7000

Destination storage - Hitachi

Questions:

Events of breach RPO

Consolidation for the VM disk failure

Delta abandoned events

Time change events

NTFS error and warning (event ID: 137, 57)

RDP disconnect and ping does matter

Standby error

Volsnap error

Stop the server and offline issue

VMware Tools install is triggered automatically

Well, in this case, we need to disable quiescing for the replication, so that triggering replication does not take a quiesced snapshot.

Then test whether you still get these errors.

-

Undo tablespace concept in a RAC environment

Why do we need a separate undo tablespace for each instance in a RAC environment? What are the internal mechanisms behind it? An explanation of the concept would be a great help. What would happen if two instances used the same undo tablespace?

Thanks in advance.

Hello

To reduce contention and the complexity of the software. Also, because Oracle instances do not share undo tablespaces, using separate ones increases the availability of the undo data files.

Note: all instances can still read all undo blocks for consistent-read purposes. In addition, any instance can update any undo tablespace during transaction recovery, as long as that undo tablespace is not currently being used by another instance for undo generation or transaction recovery.
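To see the one-undo-tablespace-per-instance arrangement on a running cluster, a query like the one below may help. It is a sketch only: the tablespace name UNDOTBS2 and the instance name RAC2 are hypothetical, and the ALTER SYSTEM assumes the instances share an spfile.

```sql
-- Which undo tablespace each running instance is currently using.
SELECT inst_id, value AS undo_tablespace
FROM   gv$parameter
WHERE  name = 'undo_tablespace'
ORDER  BY inst_id;

-- Hypothetical names: give instance RAC2 its own undo tablespace.
ALTER SYSTEM SET undo_tablespace = 'UNDOTBS2' SCOPE = BOTH SID = 'RAC2';
```

Each row of the first query should show a different tablespace name.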

HTH

Tobi

-

Regarding archive log apply after moving data files

Hi all

I moved the data files on the primary server from the D: drive to the E: drive using the command line.

Will the redo for the move operation be applied on the standby database?

C:\>Move <source_path> <destination_path>

In addition, what happens if the data files are moved manually on the primary database (i.e. without using the command prompt)?

Thank you

Madhu

See this doc. Search for the keyword "Renaming a Datafile in the Primary Database":

http://docs.Oracle.com/CD/B28359_01/server.111/b28294/manage_ps.htm#i1034172

Also, you need to update the primary database control file whenever a file has been moved.
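On the primary, the control file update usually goes along these lines. This is a sketch with a hypothetical tablespace name and hypothetical paths; it applies to an ordinary tablespace that can be taken offline, not to SYSTEM. As far as I recall from the linked manual, a rename on the primary is not propagated to the standby, so the equivalent change has to be made there separately.

```sql
-- Hypothetical tablespace and paths, run on the primary.
ALTER TABLESPACE users OFFLINE;

-- Move the file at the operating system level while the tablespace
-- is offline, then record the new location in the control file.
ALTER TABLESPACE users
  RENAME DATAFILE 'D:\oradata\orcl\users01.dbf'
               TO 'E:\oradata\orcl\users01.dbf';

ALTER TABLESPACE users ONLINE;

-- Confirm the control file now points at the new path.
SELECT file#, name
FROM   v$datafile
ORDER  BY file#;
```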

And please also close this thread:

Reg apply log archiving after the transfer of data files

That would help keep the forum clean.

-

I have 5 redo log files, each 4 GB. The transaction rate is very high (1800-2000 per second), so we get 5-6 log switches every hour. That is 20 to 24 GB of archive logs per hour, and about 480 GB per day.

I had planned to take a level 0 incremental backup weekly and a level 1 backup daily, with the option to delete archive logs older than 'sysdate-1'.

But we have little storage, so it is impossible to hold 480 GB per day and also keep backups of those 480 GB for 7 days: (480 * 7) GB = 3360 GB, roughly 3.3 TB. How can I correct this situation? Help, please.

1. Disk is cheap.

2. The onus is on those who developed the application.

3. You can use LogMiner to check what is happening.

You need to address this with the application developers and/or customers, and/or buy disk.

There are no other solutions.

-----------

Sybrand Bakker

Senior Oracle DBA

Published by: sybrand_b on July 16, 2012 11:11
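Before raising this with the developers, it may help to confirm the switch rate quoted in the question. The query below is a minimal sketch that counts log switches per hour from V$LOG_HISTORY; the one-day window is an arbitrary choice.

```sql
-- Log switches per redo thread per hour over the last day.
SELECT thread#,
       TRUNC(first_time, 'HH24') AS switch_hour,
       COUNT(*)                  AS switches
FROM   v$log_history
WHERE  first_time > SYSDATE - 1
GROUP  BY thread#, TRUNC(first_time, 'HH24')
ORDER  BY switch_hour, thread#;
```

With 4 GB logs, each switch is at most about 4 GB of archived redo, so 5-6 switches an hour matches the 20 to 24 GB figure above.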

-

Dear experts,

I have to test the archive log size generated by each transaction. The following steps need to be done for this test:

1. Run the DML scripts in bulk.

2. Find the size of the archive log files created in the archive location.

Based on that, we have to report how much archived redo a particular transaction generated. Can you please provide a script to do this? Thanks in advance.

Let's assume that you have loaded data for 1 day or 1 hour; then you can check as below:

alter session set nls_date_format = 'YYYY-MM-DD HH24';

select trunc(COMPLETION_TIME,'HH24') TIME,
       SUM(BLOCKS * BLOCK_SIZE)/1024/1024 SIZE_MB
from V$ARCHIVED_LOG
group by trunc(COMPLETION_TIME,'HH24')
order by 1;

-

Changing the apply instance on a standby in a RAC environment

In 11g, using DGMGRL, how can the apply instance be changed at the standby site in a RAC environment? We have a 4-node RAC 11.2.0.1 at the standby site, and 1 instance is being used for recovery. We want to move recovery to another instance for maintenance reasons. How can I do that?

Thank you

Shashi

It's in the manual:

http://download.Oracle.com/docs/CD/E11882_01/server.112/e17023/dbresource.htm#i1029540

Larry
