DataPlugin for DOL files

I need to open DOL files in DIAdem. The DOL file is produced by the Turbolab dynamics software and can be opened in the Turbolab signal analysis software. I have attached a DOL file and the DLL plugin used by the Turbolab signal analysis software.

Can someone help me make a plugin for this file format, and explain how to attach the .DOL, .DLL and .inf files?

Hi Germain,

We have finished developing the TurboLab_DOL DataPlugin, which reads TurboLab dynamics DOL files.

You can download it here: http://zone.ni.com/devzone/cda/epd/p/id/6345

A big thank you to CORRSYS - DATRON Sensorsysteme GmbH for their support.

Stefan

PS: Please visit ni.com/dataplugins for a list of all supported file formats / DataPlugins

Tags: NI Software

Similar Questions

-

Reorganizing the data files of a tablespace

Hi all,

Is there a way to reorganize a tablespace (USERS in particular) that uses 10 data files into one single data file?

Is there a way to reorganize the database during the working day so that the high-water mark goes down, without making a copy of the tablespace?

Thank you for considering my question.

Regards

Hello,

If I understand you correctly, a Data Pump import with REMAP_TABLESPACE followed by a tablespace rename could help you.

That is:

(1) Create a new tablespace with a single data file large enough to hold all your objects. You may need to calculate the size of the data file.

(2) Using Data Pump, export all the objects, or better, the schema itself, from the current tablespace; call it SOURCE.

(3) Using Data Pump import, import the dump into the new tablespace, call it TARGET, with the option REMAP_TABLESPACE=SOURCE:TARGET.

(4) Once that has completed successfully, drop the SOURCE tablespace.

(5) Rename TARGET to SOURCE.

You would end up with a tablespace that has one data file and all the objects inside.

HTH

Aman....

-

Writing data to file during a long, high-sample-rate acquisition

These are the tasks I have to perform to take noise measurements:

(1) Acquire data continuously from a USB-6281 at a sample rate of 500 kS/s (50 k samples at a time).

(2) Save the data continuously for 3 to 6 hours (any format is OK, but I need to save to a series of files rather than a single file). I want to start writing a new file every 2 min.

I have attached my VI and pictures of my task setup. I can measure and write data to file continuously for 15 minutes. After that, I see these errors:

(1) The hardware acquisition can't keep up with the software (something like that, along with a suggestion to increase the buffer size...)

(2) Memory is full.

Please help me make my VI efficient and correct. You may suggest removing "Write To Measurement File" from the consumer loop, because it takes a long time to open and close a file in a loop. You may suggest opening the file outside the loop and writing inside the loop. But I want to save my data to a new file every 2 min or every so many samples. If this can be done efficiently without using Write To Measurement File, please let me know.

Thank you in advance.

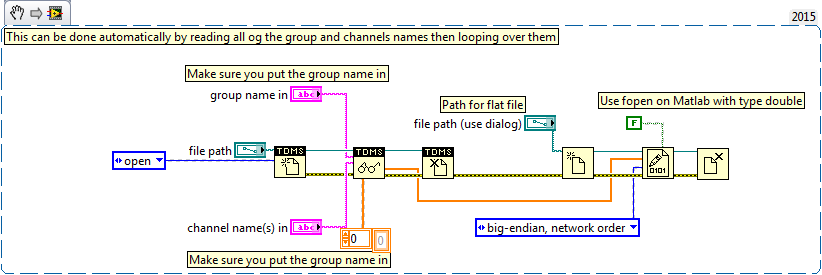

This example is for a single file and a single channel; you should be able to loop over it to handle the rest automatically. The comment should be the channel name, not the group name; wire the channel name into the control.
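The "new file every 2 minutes" requirement can be sketched outside LabVIEW as well. The Python below is only an illustration of the idea, not the attached VI: the file stays open between writes and is closed and replaced only when the period has elapsed. The class name `RotatingWriter`, the `noise_NNNN.bin` naming, and the injectable `clock` parameter are all assumptions made for the example.

```python
import os
import time


class RotatingWriter:
    """Write binary chunks to a series of files, starting a new file every period_s seconds."""

    def __init__(self, directory, period_s=120.0, clock=time.monotonic):
        self.directory = directory
        self.period_s = period_s
        self.clock = clock          # injectable so the rotation can be tested without waiting
        self.index = 0              # sequence number of the next file
        self.file = None
        self.opened_at = None

    def write(self, chunk: bytes):
        now = self.clock()
        # Open the first file, or rotate once the current file is old enough.
        if self.file is None or now - self.opened_at >= self.period_s:
            self._rotate(now)
        self.file.write(chunk)

    def _rotate(self, now):
        if self.file is not None:
            self.file.close()
        # Hypothetical naming scheme: noise_0000.bin, noise_0001.bin, ...
        path = os.path.join(self.directory, f"noise_{self.index:04d}.bin")
        self.file = open(path, "wb")
        self.opened_at = now
        self.index += 1

    def close(self):
        if self.file is not None:
            self.file.close()
            self.file = None
```

The consumer loop would call `write()` once per dequeued block of samples and `close()` when acquisition stops, so the cost of opening a file is paid once per period rather than once per block.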

-

LabVIEW: send wireless node data to an Excel file

Hi all,

I followed the basic tutorial for the NI wireless sensor network: http://www.ni.com/tutorial/8890/en/. I completed it with a single node. The next phase of my project is to send the data from the node, along with the date and time, to an Excel file every hour. Is there any advice on how to at least send the date and the data to the Excel file? I'm using LabVIEW 2012.

Edit: I switched to a text file instead of an Excel file.

You cannot wire a scalar to an input that requires an array. One solution would be to insert a Build Array before the Write To Spreadsheet File.

-

Delayed write failed: unable to save all the data for the file $Mft

I have a frustrating problem; help is greatly appreciated. I learned the hard way and lost an important dwg file, which was unrecoverable. I bought a new Seagate external hard drive. I could not back up the entire system with the pre-installed software, so I downloaded Acronis True Image Home 2011 and tried to back up the system. When trying to back up I receive these error messages: 'Delayed write failed'; 'Reading of the sector'; 'Windows was unable to save all the data for the file $Mft. The data has been lost.' I have tried many fixes. I am unable to disable write caching (the option is grayed out and unclickable). I ran regedit, but 'EnableOplocks' is not listed. I attempted to run a Microsoft 'Fix it' and got the blue screen of death. I am pulling my hair out. Suggestions appreciated.

I don't know where Microsoft 'technical support engineers' get their information.

Write caching without a doubt does apply to external hard drives, but it is usually disabled to prevent the sort of problem you are experiencing.

I've seen several posts reporting the same problem you have. All of them involved SATA drives. Is your Seagate an eSATA drive?

Is the disk recognized as an external drive in Device Manager? In Device Manager, open the drive's Properties dialog box and click the Policies tab. An external drive should have two options: "Optimize for quick removal" and "Optimize for performance". An internal hard drive shows the options grayed out (with 'performance' selected), but there should also be a checkbox "Enable write caching on the disk" under the second option.

What does your Policies tab show?

If I understand correctly, "write-back" or "write-behind" caching is implemented by the disk hardware or its driver. If the option is not available on the drive's Properties > Policies tab, I would suggest contacting Seagate support.

-

Cannot get author and title metadata for PDF files to display in Windows 7 Explorer

How can I get Windows 7 Explorer to display the author and title information for PDF files? I can add columns for author and title, but they are empty, and when I click one I get the message "not specified." I use Acrobat to create and read PDF files. The PDF files I create include author and title information in the metadata, so I know the information is there. When I used Windows XP, I could hover over a PDF file and the metadata would pop up with the author and title, but Windows 7 displays nothing in the pop-up beyond type/size/date modified, even when the author and title metadata have been entered.

nothing to see here...

-

Dynamic action for date validation with the notification message plugin

Hi all,

Could someone please help me with a dynamic action for date validation using the message notification plugin? I have a form with two date picker items and the message notification plugin.

The requirement: the user first selects the exam completion date and then selects a second date. If the second date is later than the exam completion date + 2 years, the message notification plugin should fire. I created a dynamic action on the second date picker that triggers the notification message, but I want to make it conditional, that is, display the message only if the selected date is more than 2 years after the exam completion date.

In simple terms, the notification is displayed only if the selected date is greater than (exam completion date + 2 years).

I use Oracle APEX 4.0 and an Oracle 10g R2 database. I reproduced the same requirement in my personal workspace. Here are the details; please take a look.

Workspace: raghu_workspace

username: orton607

password: orton607

APP # 72193

PG # 1

Any help is appreciated.

Thanks in advance.

Orton.

You can get the value of a date picker item with:

$(ele).datepicker('getDate');

So add a function such as:

function validateNotification(d1, d2) {
  // d1 and d2 are item IDs, so prefix them with '#' to build the selectors
  var date1 = $('#' + d1).datepicker('getDate');
  var date2 = $('#' + d2).datepicker('getDate');
  if (date1 && date2) {
    // difference in days, compared against two 365-day years
    return ((date2.getTime() - date1.getTime()) / (1000 * 24 * 60 * 60)) > (365 * 2);
  } else {
    return false;
  }
}

The exact logic is up to you (I counted two years as two 365-day years).

Then, in the dynamic action, specify a JavaScript expression condition such as:

validateNotification('P2_REVIEW_COMPLETED', this.triggeringElement.id)

Refer to page 2 for example.
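Counting two years as 730 days drifts by a day whenever a leap day falls inside the interval. As a rough illustration of a calendar-based alternative (written in Python purely to show the date arithmetic, not as APEX code), the comparison can be made against "same day and month, two years later". The helper names `add_years` and `should_notify` are hypothetical.

```python
from datetime import date


def add_years(d: date, years: int) -> date:
    """Return the same calendar day `years` later."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        # 29 February with a non-leap target year: fall back to 28 February.
        return d.replace(year=d.year + years, day=28)


def should_notify(exam_completed: date, selected: date) -> bool:
    """True only when `selected` is later than exam_completed + 2 calendar years."""
    return selected > add_years(exam_completed, 2)
```

For an exam completed on 2011-03-01 and a selected date of 2013-03-01, the two dates are 731 days apart, so the 730-day rule fires, while the calendar rule does not because the selected date is exactly two years later, not more.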

-

Saving waveform graph data

Hello,

I'm trying to collect temperature data with the attached VI. Everything works fine until I open the exported file, which contains only the last point of the chart. I have had this problem before and I think it is quite common, but I can't find out how to solve it.

Thank you very much for your help!

Here's what you do. The middle loop is where you acquire several waveforms. You want to write each waveform to the output file, which means you want the write-waveform-to-spreadsheet VI inside the middle loop. Look at its inputs and outputs, and put three of them (File Path/New Path, Append to File?, and Header?) on shift registers. Initialize the file path (that is, wire to the shift register from outside the While loop) to "data\test" (as you've already done), and wire New Path to the "output" shift register terminal. Wire False (the default) to Append to File? and True (if you want headers) to Header?. On the "output" side of these two shift registers, wire True to Append to File? and False to Header?.

The first time the middle loop runs, a new file is opened (since Append to File? is False) and a header is written if requested. All subsequent calls append data to the same file (because you wired True to Append to File? on the output terminal), with no header.

Also, get rid of the unnecessary frame at the end; data flow will take care of the sequencing.
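For readers who think more easily in text code, the shift-register wiring described above corresponds roughly to the loop below. This is a Python analogy rather than LabVIEW: the two variables `append` and `header` play the role of the shift registers, and the column names `time_s` and `temperature` are made up for the example.

```python
def write_waveform(path, samples, append, header):
    """Write one waveform; overwrite or append, with or without a header line."""
    mode = "a" if append else "w"
    with open(path, mode, newline="") as f:
        if header:
            f.write("time_s\ttemperature\n")
        for t, value in samples:
            f.write(f"{t}\t{value}\n")


def log_waveforms(path, waveforms):
    # Initial "shift register" values: new file, header wanted.
    append, header = False, True
    for samples in waveforms:
        write_waveform(path, samples, append, header)
        # Values fed back for every later iteration: append, no header.
        append, header = True, False
```

Writing with `append=False` on every iteration is what leaves only the last point in the file; flipping the flag after the first write is the whole fix.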

Bob Schor

-

I have tried, in vain, to write data to a CSV file with the column headers labeled for each channel, as we do in LabVIEW (see attached CSV). I know developers must be doing the same thing in .NET. Can anyone provide a code snippet to help me get started? Also, is there perhaps a completely different way to do the same thing instead of writing directly to the CSV file? (In fact, I really need to fill an array with data and append it to the CSV every couple of seconds.) I already have the arrays coded for each channel, but I'm still stuck on how to get them into the CSV file. I'm coding in VB.NET using Visual Studio 2012 and Measurement Studio 2013 Standard. Any help would be greatly appreciated. Thank you.

A CSV file is nothing more than a text file.

There are many examples of how to write a text file using .NET.
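To make "a CSV file is just a text file" concrete, here is a minimal sketch in Python rather than VB.NET (the same steps carry over: open for append, write a header once, write one line per sample). It assumes the per-channel arrays all have the same length; the function name `append_channels` and the channel names used with it are illustrative only.

```python
import csv
import os


def append_channels(path, channels):
    """Append samples to a CSV file, one column per channel.

    channels maps a channel name to a list of samples; all lists are
    assumed to have the same length. The header row is written only
    when the file does not exist yet or is empty.
    """
    names = list(channels)
    is_new = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="") as f:
        writer = csv.writer(f)
        if is_new:
            writer.writerow(names)
        # zip(*columns) turns per-channel arrays into per-sample rows.
        writer.writerows(zip(*(channels[name] for name in names)))
```

Calling this every couple of seconds with the newest block of samples keeps appending rows under the same header.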

-

Appending data to a binary file as a concatenated array

Hello,

I have a problem that may have been discussed several times, but I have not found a clear answer.

Normally I have devices that produce 2D image arrays. I send them to a collection loop through a queue, index them into a 3D array, and at the end save a binary file.

It works very well. But I start to run into memory problems when the number of images exceeds about 2000.

So I am trying to take advantage of a fast SSD drive and save the images in batches (e.g. 300) to the binary file.

In the attached diagram I simulate the camera by reading some files beforehand. The program works well, but when I try to open the new file in the second diagram, I see only the first 300 images (in this case).

I read on the forum that I have to set the count to -1 in Read from Binary File, and then I can read the data as a cluster of arrays. That is not very good for me, because I need to work with the data in Matlab and I would like to have the same format as before (for example a 3D array, 320 x 240 x 4000). Is it possible to append a 3D array to the existing file as a concatenated array?

I hope this makes sense :-)

Thank you

Honza

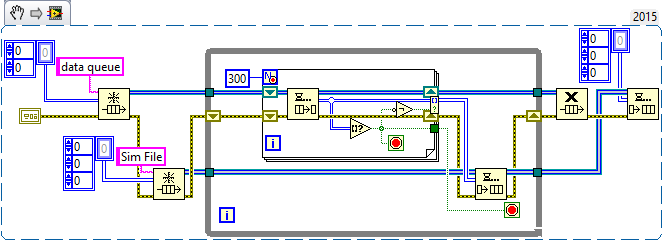

- Good idea to simulate creating the image with a 2D array of random numbers! It is always good to model the real problem (file I/O) without the "complicating details" (handling the camera).

- Good use of producer/consumer in LT_Save. Do you know about sentinels? You only need a single queue, the data queue, which sends an array of data to the consumer. When the producer quits (because the Stop button is pushed), it enqueues an empty array (you can just right-click the element input and choose "Create Constant"). In the consumer, when you dequeue, test whether you have an empty array. If you do, stop the consumer loop and release the queue (since you know the producer has already stopped, and you have stopped, too).

- I'm not sure what you're trying to do in the File_Read_3D routine, but I can tell you it's wrong. So, let's analyze the situation. In a sense, your two routines form a producer/consumer "pair": LT_Save "produces" a file of 3D arrays (mostly 300 pages each, except possibly the final batch of data) and File_Read_3D "consumes" them and "does something", still somewhat ill-defined. You could (and perhaps should) merge these two routines into a single "simulator". Here's what I mean:

This is taken directly from your code. I replaced the "stop" queue with sentinel code (which I won't show), and added a "tail", a Sim File queue, to simulate writing these data to a file (it also uses a sentinel).

Your existing producer code puts single 2D arrays on the data queue. This routine dequeues them and "builds up" to 300 of them at a time before "doing something with them": in your code, writing them to a file; here, simulating that by enqueuing a 3D array on the Sim File queue. Let's look at the "easy" case first, where we get all 300 elements. The For loop ends, outputting a 3D array composed of 300 2D arrays, which we simply enqueue on the Sim File queue, our simulated file. You may notice that there is an Empty Array? function (which, in this case, is never True, always False) whose value is negated (so it is always True) and wired to the conditional terminal of the indexing tunnel. The reason for this strange logic will become clear in the next paragraph.

Now consider what happens when you press the Stop button and your producer (not shown) exits. Since we use sentinels, it enqueues an empty 2D array. We dequeue it and detect it with the Empty Array? function, which lets us do three things: stop the For loop early, keep the empty array from being added at the indexing output tunnel by means of the conditional terminal (Empty Array? = True, Not changes it to False, so the empty array is not added to the output), and make the outer loop exit as well. What happens when we exit the outer loop? We are done with the data queue, so we release it. We also know that we have enqueued the last "good" data on the Sim File queue, so we create a sentinel (an empty 3D array) and enqueue it for the yet-to-be-written Sim File consumer loop.

Now, here is where you come in. Write this final consumer loop. It should be pretty simple: you dequeue, and if you don't have an empty 3D array, you do the following:

- Your array consists of N 2D images (up to 300). In a For loop, extract each image and do what you want with it (display it, save it to file, etc.). Note that if you write a sub-VI called, say, "Process an Image", which takes a 2D array and does something with it, you will "declutter" your code by "hiding the details".

- If you did get an empty array, you simply exit the While loop and release the Sim File queue.
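The sentinel pattern described in this reply can be written out in text form. The sketch below is a Python analogy of the two loops, not a transcription of the LabVIEW snippet: `None` stands in for the empty-array sentinel, and the function names `batcher` and `consumer` are invented for the example.

```python
import queue

SENTINEL = None  # plays the role of the empty array


def batcher(data_q, batch_q, batch_size=300):
    """Collect frames into batches of up to batch_size and pass them on.

    Stops when the producer's sentinel arrives, forwards any partial
    batch, then enqueues its own sentinel for the downstream consumer.
    """
    done = False
    while not done:
        batch = []
        while len(batch) < batch_size:
            frame = data_q.get()
            if frame is SENTINEL:
                done = True      # leave both loops; the sentinel is not stored
                break
            batch.append(frame)
        if batch:
            batch_q.put(batch)
    batch_q.put(SENTINEL)


def consumer(batch_q, process):
    """Dequeue batches until the sentinel, calling process() on each frame."""
    while True:
        batch = batch_q.get()
        if batch is SENTINEL:
            break
        for frame in batch:
            process(frame)
```

In a real program the producer, `batcher`, and `consumer` would each run in their own thread; the queues do the synchronisation, and no separate "stop" queue is needed.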

OK, now translate this to files. You're off to a good start in writing your file with the array size headers, which means that if you read the file as a 3D array, you will get a 3D array (just as you did in the Sim File consumer) and can perform the same processing as above. All you have to worry about is the sentinel: how do you know when you have reached the end of the file? I'm sure you can figure this out, if you don't already know...
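One way to get a file that reads back as a single concatenated array, sketched here in Python as an alternative layout and not as what the posted VI does, is to append raw frames with no size header at all. The number of frames then follows from the file size, which also answers the end-of-file question. The element type (`"H"`, unsigned short, 2 bytes on common platforms, native byte order) and the function names are assumptions for the example.

```python
import os
from array import array

TYPECODE = "H"                          # assumed pixel type: unsigned short
ITEMSIZE = array(TYPECODE).itemsize     # bytes per pixel on this platform


def append_frames(path, frames):
    """Append 2D frames (lists of rows) to the file as raw pixels, row by row."""
    with open(path, "ab") as f:
        for frame in frames:
            for row in frame:
                array(TYPECODE, row).tofile(f)


def frame_count(path, rows, cols):
    """Number of complete frames in the file, derived from its size."""
    return os.path.getsize(path) // (rows * cols * ITEMSIZE)


def read_frame(path, index, rows, cols):
    """Read one frame back by seeking to its offset."""
    flat = array(TYPECODE)
    with open(path, "rb") as f:
        f.seek(index * rows * cols * ITEMSIZE)
        flat.fromfile(f, rows * cols)
    return [list(flat[r * cols:(r + 1) * cols]) for r in range(rows)]
```

Because every batch is appended in the same raw layout, a file written as 300 + 300 + ... frames is indistinguishable from one written in a single pass; whatever reads it only needs to know the frame dimensions, element type, and byte order.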

Bob Schor

PS - you should know that the code snippet I posted did not come out "right" the first time. In fact I pasted about 6 versions here, as I kept finding errors while writing the description for you (like forgetting the Not function on the conditional terminal). This illustrates the virtue of writing documentation: it slows you down, makes you examine your code, and makes you say "Oops, I forgot to..."

-

Tool to manipulate data in a DMP file

Friends,

We currently have this process:

- Export production data using expdp

- gzip the .dmp file and move it via FTP to the test servers

- Import the data on the test servers

We are trying to create a process in which we can remove all the "sensitive data" from test, because our data is highly confidential.

We have already designed a procedure that can remove these data after the import.

BUT my question is: is there some kind of Oracle tool that would let us modify the data in the .dmp file before we even start the import?

Environment (Test and Production): Oracle 10g R2 running on Linux

Thank you

You can protect your sensitive data after importing into the test environment by using the data masking features of Grid Control.

Another solution I have sometimes used is to create an insert trigger in the test environment that changes the sensitive data, but the import is slower.

HTH

Antonio NAVARRO

-

Sample code for the LDAP crawler plug-in is missing

I downloaded and installed SES version 10.1.8, but the sample code for the LDAP crawler plug-in is nowhere to be found. The "sample" directory is missing. Has the sample code been removed for some reason, or has it been replaced by something else? We need to be able to search a v3-compatible LDAP directory for our new dashboard application.

The "Secure Connector Software Development Kit" document for SES version 10.1.6 says the following:

- Oracle ships three sample plug-ins that support file crawling, database table crawling, and LDAP directory crawling.

- Oracle Secure Enterprise Search comes with three sample plug-ins under $OH/search/sample/agent.

Yes, the sample crawlers were removed in 10.1.8 because they had not been sufficiently tested, or were not considered good enough to ship.

However, I have the LDAP crawler and I'll be happy to send it to you (STRICTLY no guarantee, of course!) if you send me an email at roger dot ford at oracle dot com.

-

ORA-01839: date not valid for month specified

Hello,

I don't know if this is the right place to post this... but I got the following errors in my alert log file (10.2.0.1):

Errors in file .../bdump/xxx_j000_23928.trc:

ORA-12012: error on auto execute of job 8888

ORA-01839: date not valid for month specified

In the trace file:

ACTION NAME:(AUTO_SPACE_ADVISOR_JOB) 2009-03-30 22:00:02.313

MODULE NAME:(DBMS_SCHEDULER) 2009-03-30 22:00:02.313

SERVICE NAME:(SYS$USERS) 2009-03-30 22:00:02.313

SESSION ID:(194.87) 2009-03-30 22:00:02.313

2009-03-30 22:00:02.313

ORA-12012: error on auto execute of job 8888

ORA-01839: date not valid for month specified

The problem is that the date is not valid for the specified month; my question is how to solve this. This is a newly created database.

I have only 2 jobs (selected from dba_jobs) and neither of them is 8888.

The 2 jobs I have are:

JOB  INTERVAL                     WHAT

1    sysdate + 1/240              unlock;

2    trunc(sysdate) + 7 + 3/24    dbms_stats.gather_schema_stats('db_name');

Please help. Thank you.

Published by: user10427867 on March 31, 2009 12:00

The job is probably assigned to a window group, probably MAINTENANCE_WINDOW_GROUP. The job will run in that window. Try these queries.

select schedule_name from dba_scheduler_jobs where job_name = 'AUTO_SPACE_ADVISOR_JOB';

select next_start_date from dba_scheduler_window_groups where window_group_name = 'MAINTENANCE_WINDOW_GROUP'; -- or the value returned by the first query if different

Since it is an Oracle-supplied job, I recommend you contact Oracle Support. Does the trace file contain any other useful information?

-

Hello,

When AutoConfig runs, it uses the template files in the $FND_TOP/admin/template directory...

But what is the use of the template files under

IAS_ORACLE_HOME/appsutil/template/Apache/Apache/conf

Thank you

AutoConfig reads the template files in $FND_TOP/admin/template and generates the instance-specific template for IAS_ORACLE_HOME in $IAS_ORACLE_HOME/appsutil/templates/Apache/Apache/conf with some instance-specific values. AutoConfig then uses this file and replaces the variables with the instance information from the context file.

For example, AutoConfig uses the template apps_ux.conf or apps_nt.conf (under $FND_TOP/admin/template) and generates the apps.conf file (in $IAS_ORACLE_HOME/appsutil/templates/Apache/Apache/conf). The generated apps.conf has the correct format, but with variables for (some of) the environment-specific values. AutoConfig reads the data in the context file and substitutes it into this file to generate the apps.conf file in the $IAS_ORACLE_HOME/Apache/Apache/conf directory.
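The general idea of template instantiation (a template with placeholders plus a context of instance values yields the final configuration file) can be illustrated with a few lines of Python. This is a toy model, not AutoConfig's implementation: the `%s_name%` placeholder syntax, the variable names `s_webport` and `s_webhost`, and the function name `instantiate` are all assumptions chosen for the example.

```python
import re

# Assumed placeholder form for this sketch: %s_name%
PLACEHOLDER = re.compile(r"%(s_\w+)%")


def instantiate(template_text, context):
    """Replace every %s_name% placeholder with its value from the context.

    A placeholder with no value in the context raises KeyError rather
    than being written out unresolved.
    """
    def substitute(match):
        return str(context[match.group(1)])

    return PLACEHOLDER.sub(substitute, template_text)
```

With a hypothetical context of `{"s_webport": 8000, "s_webhost": "host.example.com"}`, the template text `Listen %s_webport%` becomes `Listen 8000`.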

-

Need a visual cue for the status of Plugins

Need a visual cue for the status of plugins:

. . . "Ask to Activate" - these are light gray - that's fine

. . . "Always Activate" - should be light green

. . . "Never Activate" - should be light red - currently it just goes to a slightly darker shade of gray

Also, be able to sort them by state.

The above would make it easier to verify that they are set the way you want.

There is only a true/false "active" attribute to work with, so you cannot distinguish between Always Activate and Ask to Activate.

.addon-view[type="plugin"][active="false"]{box-shadow: inset 0pt 0pt 100px rgba(255,144,144,1.0)}

Maybe you are looking for

-

Portege S100: Impossible to install Toshiba SD Host to use Toshiba Bluetooth SD Card2

I am trying to install the Toshiba Bluetooth SD Card2 on my Portege S100, but I can't make it work. I get the error "Cannot open the Bluetooth ACPI (tosrfec.sys) driver. (Try again)?", which suggests that the SD slot is not reading the card. Then, I wen

-

Adding a sequential number to the scan file name

On my HP OJ 4500 G510g-m, when scanning using the HP Solution Center, it always adds a 4-digit number to the end of the base file name, which I can change. What I don't seem to be able to do is get it to STOP adding the 4-d

-

Redirected page 'Protect your kids with parental controls' when you try to sign in to Family Safety

I'm unable to access my Family Safety account to manage my children's accounts. I keep getting redirected to the 'Protect your kids with parental controls' page. What happened, and what should I do about it?

-

Scanning a document with a Dell All In One Printer A960

I would like to know the steps to scan a document on a Dell All In One Printer A960. I have a Windows Vista operating system. Here are the steps I take from the manual. 1. I put the paper on the glass. 2. Select the place I want the paper to go. 3. I

-

Can I use a Creative Cloud account on different computers?

I would like to know if I can use CC on two computers (one in my office and one at home) or more? Thank you. Aline.