Problem inserting data

I created a nested table type and used it as the type of a table column. Now I want to insert data into the table, but when I execute the insert I get a "missing comma" error. The insert statement looks like this:

INSERT INTO TAB_EMPLOYEE_DTL
(EMP_ID,
EMP_CODE,
EMP_NAME,
SEX,
Date of birth
NT_EMP_ADDRESS (TY_EMP_ADDRESS (ADD_LINE_1,
ADD_LINE_2,
DISTRICE,
PIN_NUBER,
STATE)))
VALUES
(1,
'a01',
'Patricia',
'.
TO_DATE('10-mar-87', 'dd-MON-rr'),
NT_EMP_ADDRESS (TY_EMP_ADDRESS ('a', 'sss', 'wer', 561234, 'UP')));

You must provide a column name for «*», and also delete

NT_EMP_ADDRESS(TY_EMP_ADDRESS(ADD_LINE_1,ADD_LINE_2,DISTRICE,PIN_NUBER,STATE))

and replace it with the name of the nested table column.
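
A minimal sketch of what the corrected statement might look like, assuming the date column is named DOB and the nested table column is named EMP_ADDRESS. Both names are hypothetical, as is the 'F' value for SEX, because the original post does not show them. The column list names only the table's own columns, and the type constructors appear only in the VALUES clause:

```sql
-- DOB and EMP_ADDRESS are assumed column names; substitute the real ones.
INSERT INTO TAB_EMPLOYEE_DTL
  (EMP_ID, EMP_CODE, EMP_NAME, SEX, DOB, EMP_ADDRESS)
VALUES
  (1,
   'a01',
   'Patricia',
   'F',                                   -- assumed value, not in the original post
   TO_DATE('10-mar-87', 'dd-MON-rr'),
   NT_EMP_ADDRESS(                        -- nested table (collection) constructor
     TY_EMP_ADDRESS('a', 'sss', 'wer', 561234, 'UP')  -- one object element
   ));
```

The attribute names (ADD_LINE_1, ADD_LINE_2 and so on) belong to the object type, so they are supplied positionally through the TY_EMP_ADDRESS constructor rather than listed as columns.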

Published by: bluefrog August 22, 2011 17:02

Tags: Database

Similar Questions

-

How to find which table the data was inserted into

Hi all

We have Oracle 10g and need a query that returns the name of the table into which data was last inserted.

Any help with this query is appreciated.

Thank you

Is my understanding correct?

1. A salesman inserts customer details.

2. This information is stored in a DB with 200+ tables.

3. The sales manager needs a report to review and approve the customer details entered by the salesman.

4. You have been asked to prepare this report.

5. You have no idea which tables to look at to get the information.

If this is true, the correct way is to look in your application's design document.

-

I'm using a master-detail form, and I have turned off sup_rec_no in the master table because I'm using a trigger in the database back end,

but the problem is that when I click the Insert button, the detail data cannot be inserted.

You need to control the order in which your rows are posted. After inserting the master row, you need to get the master row's PK and set that value on the detail rows.

Read the doc at http://docs.oracle.com/cd/E23943_01/web.1111/b31974/bcadveo.htm#ADFFD1149 , which describes how to do this.

Timo

-

Third issue of the day, I know I'm breaking all records.

I'm trying to insert the date below into the DB:

to_date('2010/05/27 4:52:02 PM', 'yyyy/mm/dd hh12:mi:ss'))

I get the following error:

ORA-01830: date format picture ends before converting entire input string

I also tried changing hh12 to hh24, with no luck.

I followed this tutorial:

http://www.techonthenet.com/oracle/questions/insert_date.php

I left for the day but will come back tomorrow and check this.

Thank you so much as always.

The string has PM in it, so you must use the appropriate format, i.e.:

to_date('2010/05/27 4:52:02 PM', 'yyyy/mm/dd hh:mi:ss am') -
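
To check the corrected mask, a quick query against DUAL can echo the parsed value back in 24-hour form. This is only an illustrative sketch; the TO_CHAR output mask and the column alias are my own choices, not part of the original answer:

```sql
-- The AM element in the mask accepts either AM or PM in the input string.
SELECT TO_CHAR(
         TO_DATE('2010/05/27 4:52:02 PM', 'yyyy/mm/dd hh:mi:ss am'),
         'YYYY-MM-DD HH24:MI:SS') AS parsed
FROM   dual;
```

With an English date language setting this should come back as 2010-05-27 16:52:02. The original mask failed because it had no element to consume the trailing "PM", which is what ORA-01830 reports.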

Hi Experts,

I am new to Oracle. I am asking for your help to fix a performance problem with an insert query.

I have an insert query that fetches records from a partitioned table.

Background: the user says that the query ran in 30 minutes on 10g. The database was upgraded to 12c by one of my colleagues. Now the query runs continuously for hours without producing a result. I checked the settings: SGA is 9 GB, Windows - 4 GB. DB block size is 8192, DB file multiblock read count is 128. PGA aggregate target is 2457M.

The parameters are given below

NAME TYPE VALUE

------------------------------------ ----------- ----------

DBFIPS_140 boolean FALSE

O7_DICTIONARY_ACCESSIBILITY boolean FALSE

active_instance_count integer

aq_tm_processes integer 1

ARCHIVE_LAG_TARGET integer 0

asm_diskgroups string

asm_diskstring string

asm_power_limit integer 1

asm_preferred_read_failure_groups string

audit_file_dest string C:\APP\ADM

audit_sys_operations boolean TRUE

audit_trail string DB

awr_snapshot_time_offset integer 0

background_core_dump string partial

background_dump_dest string C:\APP\PRO

\RDBMS\TRA

BACKUP_TAPE_IO_SLAVES boolean FALSE

bitmap_merge_area_size integer 1048576

blank_trimming boolean FALSE

buffer_pool_keep string

buffer_pool_recycle string

cell_offload_compaction string ADAPTIVE

cell_offload_decryption boolean TRUE

cell_offload_parameters string

cell_offload_plan_display string AUTO

cell_offload_processing boolean TRUE

cell_offloadgroup_name string

circuits integer

client_result_cache_lag big integer 3000

client_result_cache_size big integer 0

clonedb boolean FALSE

cluster_database boolean FALSE

cluster_database_instances integer 1

cluster_interconnects string

commit_logging string

commit_point_strength integer 1

commit_wait string

commit_write string

common_user_prefix string C##

compatible string 12.1.0.2.0

connection_brokers string ((TYPE = DED

((TYPE = EM

control_file_record_keep_time integer 7

control_files string G:\ORACLE\TROL01. CTL

FAST_RECOV

NTROL02. CT

control_management_pack_access string diagnostic

core_dump_dest string C:\app\dia

bal12\cdum

cpu_count integer 4

create_bitmap_area_size integer 8388608

create_stored_outlines string

cursor_bind_capture_destination string memory+disk

cursor_sharing string EXACT

cursor_space_for_time boolean FALSE

db_16k_cache_size big integer 0

db_2k_cache_size big integer 0

db_32k_cache_size big integer 0

db_4k_cache_size big integer 0

db_8k_cache_size big integer 0

db_big_table_cache_percent_target string 0

db_block_buffers integer 0

db_block_checking string FALSE

db_block_checksum string TYPICAL

db_block_size integer 8192

db_cache_advice string ON

db_cache_size big integer 0

db_create_file_dest string

db_create_online_log_dest_1 string

db_create_online_log_dest_2 string

db_create_online_log_dest_3 string

db_create_online_log_dest_4 string

db_create_online_log_dest_5 string

db_domain string

db_file_multiblock_read_count integer 128

db_file_name_convert string

db_files integer 200

db_flash_cache_file string

db_flash_cache_size big integer 0

db_flashback_retention_target integer 1440

db_index_compression_inheritance string NONE

db_keep_cache_size big integer 0

db_lost_write_protect string NONE

db_name string ORCL

db_performance_profile string

db_recovery_file_dest string G:\Oracle\

y_Area

db_recovery_file_dest_size big integer 12840M

db_recycle_cache_size big integer 0

db_securefile string PREFERRED

db_ultra_safe string

db_unique_name string ORCL

db_unrecoverable_scn_tracking Boolean TRUE

db_writer_processes integer 1

dbwr_io_slaves integer 0

DDL_LOCK_TIMEOUT integer 0

deferred_segment_creation Boolean TRUE

dg_broker_config_file1 string C:\APP\PRO

\DATABASE\

dg_broker_config_file2 string C:\APP\PRO

\DATABASE\

dg_broker_start boolean FALSE

diagnostic_dest string directory

disk_asynch_io boolean TRUE

dispatchers string (PROTOCOL =

12XDB)

distributed_lock_timeout integer 60

dml_locks integer 2076

dnfs_batch_size integer 4096

dst_upgrade_insert_conv boolean TRUE

enable_ddl_logging boolean FALSE

enable_goldengate_replication boolean FALSE

enable_pluggable_database boolean FALSE

event string

exclude_seed_cdb_view Boolean TRUE

fal_client string

fal_server string

fast_start_io_target integer 0

fast_start_mttr_target integer 0

fast_start_parallel_rollback string LOW

file_mapping boolean FALSE

fileio_network_adapters string

filesystemio_options string

fixed_date string

gcs_server_processes integer 0

global_context_pool_size string

global_names boolean FALSE

global_txn_processes integer 1

hash_area_size integer 131072

heat_map string

hi_shared_memory_address integer 0

hs_autoregister boolean TRUE

ifile file

inmemory_clause_default string

inmemory_force string DEFAULT

inmemory_max_populate_servers integer 0

inmemory_query string ENABLE

inmemory_size big integer 0

inmemory_trickle_repopulate_servers_ integer 1

percent

instance_groups string

instance_name string ORCL

instance_number integer 0

instance_type string RDBMS

instant_restore boolean FALSE

java_jit_enabled Boolean TRUE

java_max_sessionspace_size integer 0

java_pool_size big integer 0

java_restrict string no

java_soft_sessionspace_limit integer 0

job_queue_processes integer 1000

large_pool_size big integer 0

ldap_directory_access string NONE

ldap_directory_sysauth string no

license_max_sessions integer 0

license_max_users integer 0

license_sessions_warning integer 0

listener_networks string

local_listener string (ADDRESS =

= i184borac

(NET) (PORT =

lock_name_space string

lock_sga boolean FALSE

log_archive_config string

log_archive_dest string

log_archive_dest_1 string

LOG_ARCHIVE_DEST_10 string

log_archive_dest_11 string

log_archive_dest_12 string

log_archive_dest_13 string

log_archive_dest_14 string

log_archive_dest_15 string

log_archive_dest_16 string

log_archive_dest_17 string

log_archive_dest_18 string

log_archive_dest_19 string

LOG_ARCHIVE_DEST_2 string

log_archive_dest_20 string

log_archive_dest_21 string

log_archive_dest_22 string

log_archive_dest_23 string

log_archive_dest_24 string

log_archive_dest_25 string

log_archive_dest_26 string

log_archive_dest_27 string

log_archive_dest_28 string

log_archive_dest_29 string

log_archive_dest_3 string

log_archive_dest_30 string

log_archive_dest_31 string

log_archive_dest_4 string

log_archive_dest_5 string

log_archive_dest_6 string

log_archive_dest_7 string

log_archive_dest_8 string

log_archive_dest_9 string

log_archive_dest_state_1 string enable

log_archive_dest_state_10 string enable

log_archive_dest_state_11 string enable

log_archive_dest_state_12 string enable

log_archive_dest_state_13 string enable

log_archive_dest_state_14 string enable

log_archive_dest_state_15 string enable

log_archive_dest_state_16 string enable

log_archive_dest_state_17 string enable

log_archive_dest_state_18 string enable

log_archive_dest_state_19 string enable

log_archive_dest_state_2 string enable

log_archive_dest_state_20 string enable

log_archive_dest_state_21 string enable

log_archive_dest_state_22 string enable

log_archive_dest_state_23 string enable

log_archive_dest_state_24 string enable

log_archive_dest_state_25 string enable

log_archive_dest_state_26 string enable

log_archive_dest_state_27 string enable

log_archive_dest_state_28 string enable

log_archive_dest_state_29 string enable

log_archive_dest_state_3 string enable

log_archive_dest_state_30 string enable

log_archive_dest_state_31 string enable

log_archive_dest_state_4 string enable

log_archive_dest_state_5 string enable

log_archive_dest_state_6 string enable

log_archive_dest_state_7 string enable

log_archive_dest_state_8 string enable

log_archive_dest_state_9 string enable

log_archive_duplex_dest string

log_archive_format string ARC%S_%R.%

log_archive_max_processes integer 4

log_archive_min_succeed_dest integer 1

log_archive_start boolean TRUE

log_archive_trace integer 0

log_buffer big integer 28784K

log_checkpoint_interval integer 0

log_checkpoint_timeout integer 1800

log_checkpoints_to_alert boolean FALSE

log_file_name_convert string

max_dispatchers integer

max_dump_file_size string unlimited

max_enabled_roles integer 150

max_shared_servers integer

max_string_size string STANDARD

memory_max_target big integer 0

memory_target big integer 0

nls_calendar string GREGORIAN

nls_comp string BINARY

nls_currency string u

nls_date_format string DD-MON-RR

nls_date_language string ENGLISH

nls_dual_currency string C

nls_iso_currency string UNITED KIN

nls_language string ENGLISH

nls_length_semantics string BYTE

nls_nchar_conv_excp string FALSE

nls_numeric_characters string .,

nls_sort string BINARY

nls_territory string UNITED KIN

nls_time_format string HH24.MI.SS

nls_time_tz_format string HH24.MI.SS

nls_timestamp_format string DD-MON-RR

NLS_TIMESTAMP_TZ_FORMAT string DD-MON-RR

noncdb_compatible boolean FALSE

object_cache_max_size_percent integer 10

object_cache_optimal_size integer 102400

olap_page_pool_size big integer 0

open_cursors integer 300

Open_links integer 4

open_links_per_instance integer 4

optimizer_adaptive_features Boolean TRUE

optimizer_adaptive_reporting_only boolean FALSE

OPTIMIZER_CAPTURE_SQL_PLAN_BASELINES boolean FALSE

optimizer_dynamic_sampling integer 2

optimizer_features_enable string 12.1.0.2

optimizer_index_caching integer 0

optimizer_index_cost_adj integer 100

optimizer_inmemory_aware boolean TRUE

optimizer_mode string ALL_ROWS

optimizer_secure_view_merging Boolean TRUE

optimizer_use_invisible_indexes boolean FALSE

optimizer_use_pending_statistics boolean FALSE

optimizer_use_sql_plan_baselines Boolean TRUE

os_authent_prefix string OPS$

OS_ROLES boolean FALSE

parallel_adaptive_multi_user Boolean TRUE

parallel_automatic_tuning boolean FALSE

parallel_degree_level integer 100

parallel_degree_limit string CPU

parallel_degree_policy string MANUAL

parallel_execution_message_size integer 16384

parallel_force_local boolean FALSE

parallel_instance_group string

parallel_io_cap_enabled boolean FALSE

PARALLEL_MAX_SERVERS integer 160

parallel_min_percent integer 0

parallel_min_servers integer 16

parallel_min_time_threshold string AUTO

parallel_server boolean FALSE

parallel_server_instances integer 1

parallel_servers_target integer 64

parallel_threads_per_cpu integer 2

pdb_file_name_convert string

pdb_lockdown string

pdb_os_credential string

permit_92_wrap_format Boolean TRUE

pga_aggregate_limit big integer 4914M

pga_aggregate_target big integer 2457M

plscope_settings string IDENTIFIER

plsql_ccflags string

plsql_code_type string INTERPRETED

plsql_debug boolean FALSE

plsql_optimize_level integer 2

plsql_v2_compatibility boolean FALSE

plsql_warnings string DISABLE:AL

pre_page_sga boolean TRUE

processes integer 300

processor_group_name string

query_rewrite_enabled string TRUE

query_rewrite_integrity string enforced

rdbms_server_dn string

read_only_open_delayed boolean FALSE

recovery_parallelism integer 0

Recyclebin string on

redo_transport_user string

remote_dependencies_mode string TIMESTAMP

remote_listener string

remote_login_passwordfile string EXCLUSIVE

remote_os_authent boolean FALSE

remote_os_roles boolean FALSE

replication_dependency_tracking boolean TRUE

resource_limit boolean TRUE

resource_manager_cpu_allocation integer 4

resource_manager_plan string

result_cache_max_result integer 5

result_cache_max_size big integer 46208K

result_cache_mode string MANUAL

result_cache_remote_expiration integer 0

resumable_timeout integer 0

rollback_segments string

sec_case_sensitive_logon boolean TRUE

sec_max_failed_login_attempts integer 3

sec_protocol_error_further_action string (DROP,3)

sec_protocol_error_trace_action string TRACE

sec_return_server_release_banner boolean FALSE

serial_reuse string disable

service_names string ORCL

session_cached_cursors integer 50

session_max_open_files integer 10

sessions integer 472

sga_max_size big integer 9024M

sga_target big integer 9024M

shadow_core_dump string no

shared_memory_address integer 0

shared_pool_reserved_size big integer 70464307

shared_pool_size big integer 0

shared_server_sessions integer

SHARED_SERVERS integer 1

skip_unusable_indexes Boolean TRUE

smtp_out_server string

sort_area_retained_size integer 0

sort_area_size integer 65536

spatial_vector_acceleration boolean FALSE

SPFile string C:\APP\PRO

\DATABASE\

sql92_security boolean FALSE

SQL_Trace boolean FALSE

sqltune_category string DEFAULT

standby_archive_dest string %ORACLE_HO

standby_file_management string MANUAL

star_transformation_enabled string TRUE

statistics_level string TYPICAL

STREAMS_POOL_SIZE big integer 0

tape_asynch_io boolean TRUE

temp_undo_enabled boolean FALSE

thread integer 0

threaded_execution boolean FALSE

timed_os_statistics integer 0

TIMED_STATISTICS Boolean TRUE

trace_enabled Boolean TRUE

tracefile_identifier string

transactions integer 519

transactions_per_rollback_segment integer 5

UNDO_MANAGEMENT string AUTO

undo_retention integer 900

undo_tablespace string UNDOTBS1

unified_audit_sga_queue_size integer 1048576

use_dedicated_broker boolean FALSE

use_indirect_data_buffers boolean FALSE

use_large_pages string TRUE

user_dump_dest string C:\APP\PRO

\RDBMS\TRA

utl_file_dir string

workarea_size_policy string AUTO

xml_db_events string enable

Thanks in advance

Firstly, thank you for posting the 10g execution plan, which was one of the key things that we were missing.

Second, you realize that you have completely different execution plans, so you can expect different behavior on each system.

Your 10g plan has a total cost of 23,959 while your 12c plan has a cost of 95,373, which is almost 4 times more. All things being equal, cost is supposed to relate directly to elapsed time, so I would expect the 12c plan to take much longer to run.

From what I can see, the 10g plan begins with a full table scan on DEALERS, then a full scan on the SCARF_VEHICLE_EXCLUSIONS table, then a full scan on the CBX_tlemsani_2000tje table, and then a full scan on the CLAIM_FACTS table. The first three of these table scans have a very low cost (2 each), while the last has a huge cost of 172K. Likewise, the first three scans produce very few rows in 10g, fewer than 1,000 rows each, while the last table scan produces 454K rows.

It also looks as though something has gone wrong in the 10g optimizer plan, maybe a bug, which I believe Jonathan Lewis has commented on. Despite the full table scan having a cost of 172K, the NESTED LOOPS it is part of has a cost of only 23,949, or 24K. So the arithmetic does not add up in 10g. In other words, maybe it is not really the optimal plan, because the 10g optimizer may have got its sums wrong and 12c may be getting them right. But luckily this 'imperfect' 10g plan happens to run fairly fast for one reason or another.

The 12c plan starts with similar table scans but in a different order. The main difference is that instead of a full table scan on CLAIM_FACTS, it does an index range scan on CLAIM_FACTS_AK9 at a cost of 95,366. This is the single main component of the final total cost of 95,373.

Suggestions for what to do? It is difficult, because there is clearly an anomaly in the 10g system for it to have produced the particular execution plan that it uses. And there is other information that you have not provided - see later.

You can try to force a full table scan on CLAIM_FACTS by adding a suitable hint, e.g. "select /*+ full(cf) */ cf.vehicle_chass_no ...". However, hints are very difficult to use and do not guarantee that you will get the desired end result. So be careful. As a test on 12c, it may be worth trying just to see what happens and what the resulting execution plan looks like. But I would not use such a simple, single hint in a production system for a variety of reasons. For testing only, it might help to see whether you can force the full table scan on CLAIM_FACTS as in 10g, and whether the resulting performance is the same.
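
As an illustration of the hint syntax only, a sketch like the following could be used on the 12c test system to compare plans. The original query was not posted in full, so everything except the cf alias, the vehicle_chass_no column and the CLAIM_FACTS table is a placeholder:

```sql
-- Test-only sketch: ask the optimizer for a full scan of CLAIM_FACTS.
-- When the table has an alias, the hint must name the alias (cf), not the table.
EXPLAIN PLAN FOR
SELECT /*+ FULL(cf) */
       cf.vehicle_chass_no
FROM   claim_facts cf;      -- the real query's joins and filters would go here

-- Display the plan that was just explained, to check whether the hint was obeyed.
SELECT * FROM TABLE(DBMS_XPLAN.DISPLAY);
```

If the displayed plan still shows the index range scan on CLAIM_FACTS_AK9, the hint was not applied, most often because the alias in the hint does not match the one in the query.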

Both plans are parallel ones, which means that the query is broken down into separate, independent steps and several steps are executed at the same time, i.e. several CPUs will be used, and there will be several disk reads at the same time. (This is a simplification of how parallel query works.) If the 10g and 12c systems do not have the SAME hardware configuration, then you would naturally expect different elapsed times for the same parallel queries. See the end of this answer for the additional information that you could provide.

But I would be very suspicious of the hardware configuration of the two systems. Maybe the 10g system has 16 CPU cores or more and hundreds of disks in a big disk array, and maybe the 12c system has only 4 CPU cores and 4 disks. That would explain a lot about why the 12c system takes hours to run when the 10g system takes only 30 minutes.

Remember what I said in my last reply:

"Without any information to the contrary, I guess the filter conditions are very weak, the optimizer believes it needs most of the data in the table, and that a full table scan or even a limited index scan is the "best" way to run this SQL. In other words, your query takes a long time simply because your tables are big and your query needs most of the data in those tables."

When dealing with very large tables and doing parallel full table scans on them, the most important factor is the amount of raw hardware you throw at it. A system with twice the number of CPUs and twice the number of disks will run the same parallel query in half the time, at least. That could be the main reason the 12c system is so much slower than the 10g system, rather than the execution plan itself.

You could also provide us with the following information, which would allow a better analysis:

- Row counts for each table referenced in the query, and whether any of them are partitioned.

- Hardware configurations for both systems, the 10g one and the 12c one: number of CPUs, their model and speed, physical memory, number of disks.

- The disks are very important. Do the 10g and 12c systems have similar disk subsystems? Are you using plain old disks, or do you have a SAN, or some sort of disk array? Are the disk arrays identical in both systems? How are they connected? Fast Fibre Channel, or something else? Maybe even network storage?

- What is the size of the SGA in both systems, i.e. the values of MEMORY_TARGET and SGA_TARGET?

- Whether the CLAIM_FACTS_AK9 index exists on the 10g system. I guess it does, but I would like that confirmed to be safe.

John Brady

-

Insert problem using a SELECT on a table with an index on a TRUNC function

I came across this problem when trying to insert from a select query: the select returns the correct results, but when I insert the results into a table, the results are different. I found a workaround by forcing an order on the select, but surely this is a bug in Oracle, as how can the values from the select statement differ from what is inserted?

Platform: Windows Server 2008 R2

Oracle 11.2.3 Enterprise Edition

(I have not tried to reproduce this on other versions)

Here are the scripts to create the two tables and the source data:

CREATE TABLE source_data ( ID NUMBER(2), COUNT_DATE DATE );
CREATE INDEX IN_SOURCE_DATA ON SOURCE_DATA (TRUNC(count_date, 'MM'));
INSERT INTO source_data VALUES (1, TO_DATE('20120101', 'YYYYMMDD'));
INSERT INTO source_data VALUES (1, TO_DATE('20120102', 'YYYYMMDD'));
INSERT INTO source_data VALUES (1, TO_DATE('20120103', 'YYYYMMDD'));
INSERT INTO source_data VALUES (1, TO_DATE('20120201', 'YYYYMMDD'));
INSERT INTO source_data VALUES (1, TO_DATE('20120202', 'YYYYMMDD'));
INSERT INTO source_data VALUES (1, TO_DATE('20120203', 'YYYYMMDD'));
INSERT INTO source_data VALUES (1, TO_DATE('20120301', 'YYYYMMDD'));
INSERT INTO source_data VALUES (1, TO_DATE('20120302', 'YYYYMMDD'));
INSERT INTO source_data VALUES (1, TO_DATE('20120303', 'YYYYMMDD'));
CREATE TABLE result_data ( ID NUMBER(2), COUNT_DATE DATE );

Now, execute the select statement:

SELECT id, TRUNC(count_date, 'MM') FROM source_data GROUP BY id, TRUNC(count_date, 'MM')

You should get the following:

1 2012/02/01
1 2012/03/01
1 2012/01/01

Now insert into the results table:

INSERT INTO result_data SELECT id, TRUNC(count_date, 'MM') FROM source_data GROUP BY id, TRUNC(count_date, 'MM');

Select from the table, and you get:

1 2012/03/01
1 2012/03/01
1 2012/03/01

The most recent month is repeated for each row.

Truncate your table and run the following insert statement, and the results should now be correct:

INSERT INTO result_data SELECT id, TRUNC(count_date, 'MM') FROM source_data GROUP BY id, TRUNC(count_date, 'MM') ORDER BY 1, 2;

If anyone has encountered this problem before, could you please let me know. I don't see where I am making a mistake: since the select results are correct, they should not differ from what is inserted.

Published by: user11285442 on May 13, 2013 05:16

Published by: user11285442 on May 13, 2013 06:15

Most likely a bug in 11.2.0.3. I can reproduce it on Red Hat Linux and AIX.

You can search MOS to see whether this is a known bug (very likely); if not, you have a pretty simple test case to open an SR with.

John

-

Pre-insert trigger problem

Hi guys,

I am facing a strange problem, or maybe I am going wrong somewhere, but I can't find my mistake. I hope I'll get the solution to my problem here.

I'm updating my quantities in the table MN_ITEM_DETAILS.

SQL> DESC MN_ITEM_DETAILS
Name              Null?    Type
----------------- -------- --------------
SI_SHORT_CODE              NUMBER(10)
SI_CODE                    NUMBER(15)
ITEM_DESCP                 VARCHAR2(200)
ITEM_U_M                   VARCHAR2(6)
ITEM_QTY                   NUMBER(10)
ITEM_REMARKS               VARCHAR2(100)

I have the master-detail tables below, on which the pre-insert block trigger works very well.

SQL> DESC MN_MDV_MASTER
Name              Null?    Type
----------------- -------- --------------
MDV_NO            NOT NULL NUMBER(15)
MDV_DATE                   DATE
WHSE_LOC                   VARCHAR2(15)
PROJ_WHSE                  VARCHAR2(30)
ACTIVITY_LOC               VARCHAR2(30)
MRF_NO                     VARCHAR2(30)
CLIENT                     VARCHAR2(30)
CLIENT_PO#                 VARCHAR2(15)
CLIENT_PO_DATE             DATE
WHSE_INCHG                 VARCHAR2(30)
WHSE_DATE                  DATE
RECD_BY                    VARCHAR2(30)
INSPECTED_BY               VARCHAR2(30)
DRIVER_NAME                VARCHAR2(30)
REMARKS                    VARCHAR2(200)
RECD_BY_DATE               DATE
INSPECTED_DATE             DATE
DRIVER_NAME_DATE           DATE
CST_CENTER                 VARCHAR2(15)

SQL> DESC MN_MDV_DETAILS
Name              Null?    Type
----------------- -------- --------------
MDV_NO                     NUMBER(15)
ITEM_CODE                  NUMBER(15)
ITEM_DESCP                 VARCHAR2(150)
ITEM_U_M                   VARCHAR2(6)
ITEM_QTY                   NUMBER(6)
ITEM_BALANCE               NUMBER(10)
PROJECT                    VARCHAR2(15)
ACTIVITY                   VARCHAR2(15)
LOCATION                   VARCHAR2(15)

All the block-level PRE-INSERT and POST-INSERT triggers:

PRE-INSERT -- on details block level
UPDATE MN_ITEM_DETAILS
SET ITEM_QTY = NVL(ITEM_QTY,0) - NVL(:MN_MDV_DETAILS.ITEM_QTY,0)
WHERE SI_CODE = :MN_MDV_DETAILS.ITEM_CODE;

POST-INSERT -- master block level trigger
INSERT INTO MN_MRBV_MASTER( MDV# , MDV_DATE , WHSE_LOC , CST_CENTER )
VALUES (:MN_MDV_MASTER.MDV_NO , :MN_MDV_MASTER.MDV_DATE , :MN_MDV_MASTER.WHSE_LOC , :MN_MDV_MASTER.CST_CENTER);

POST-INSERT -- on details block level
INSERT INTO MN_MRBV_DETAILS( MDV# , ITEM_CODE , ITEM_DESCP , ITEM_U_M , QTY , ITEM_BALANCE , PROJECT , ACTIVITY , LOCATION )
VALUES (:MN_MDV_DETAILS.MDV_NO , :MN_MDV_DETAILS.ITEM_CODE , :MN_MDV_DETAILS.ITEM_DESCP , :MN_MDV_DETAILS.ITEM_U_M , :MN_MDV_DETAILS.ITEM_QTY , :MN_MDV_DETAILS.ITEM_BALANCE, :MN_MDV_DETAILS.PROJECT , :MN_MDV_DETAILS.ACTIVITY , :MN_MDV_DETAILS.LOCATION );

Up to this point everything works fine and MN_ITEM_DETAILS.ITEM_QTY is updated correctly,

but when I use the same approach on the master-detail tables below, ITEM_QTY in MN_ITEM_DETAILS is not updated.

SQL> DESC MN_MRBV_MASTER
Name              Null?    Type
----------------- -------- --------------
MDV#              NOT NULL NUMBER(15)
MDV_DATE                   DATE
WHSE_LOC                   VARCHAR2(15)
RET_FRM_PROJECT            VARCHAR2(1)
RET_FRM_CLIENT             VARCHAR2(1)
CST_CENTER                 VARCHAR2(15)
WHSE_INCHG                 VARCHAR2(30)
WHSE_DATE                  DATE
RETURN_BY                  VARCHAR2(30)
INSPECTED_BY               VARCHAR2(30)
RETURN_BY_DATE             DATE
INSPECTED_BY_DATE          DATE
DRIVER_NAME                VARCHAR2(30)
DRIVER_DATE                DATE
REMARKS                    VARCHAR2(250)

SQL> DESC MN_MRBV_DETAILS
Name              Null?    Type
----------------- -------- --------------
MDV#                       NUMBER(15)
ITEM_CODE                  NUMBER(15)
ITEM_DESCP                 VARCHAR2(150)
ITEM_U_M                   VARCHAR2(6)
QTY                        NUMBER(6)
ITEM_BALANCE               NUMBER(10)
PROJECT                    VARCHAR2(15)
ACTIVITY                   VARCHAR2(15)
LOCATION                   VARCHAR2(15)

PRE-INSERT --> here it is not updating MN_ITEM_DETAILS.ITEM_QTY. Any suggestions please, why is it not updating? (MDV_DETAILS)
UPDATE MN_ITEM_DETAILS
SET ITEM_QTY = NVL(ITEM_QTY,0) + NVL(:MN_MRBV_DETAILS.QTY,0)
WHERE SI_CODE = :MN_MRBV_DETAILS.ITEM_CODE;

Regards

Houda

Published by: houda Shareef on January 8, 2011 02:19

Try writing your code in a before-update trigger.

-

I have an insert query that I have not changed, but for some reason it won't insert anything into the database.

The data is entered through an Ajax form submission and goes to insert.php

if (isset($_POST['message_wall'])) {
    /* The database connection */
    include('config.php');
    /* Remove the HTML tag to prevent query injection */
    $message = mysql_real_escape_string($_POST['message_wall']);
    $to = mysql_real_escape_string($_POST['profile_to']);
    $sql = "INSERT INTO wall (message) VALUES ('" . $message . "')";
    mysql_query($sql);
}

I want to be able to add a user_id to the database too.

The ajax code:

$(document).ready(function () {
    $("form#submit_wall").submit(function () {
        var message_wall = $('#message_wall').attr('value');
        $.ajax({
            type: 'POST',
            url: "insert.php",
            data: "message_wall=" + message_wall,
            success: function () {
                $("ul#wall").prepend("<li style='display: none'>" + message_wall + "</li><br><hr>");
                $("ul#wall li:first").fadeIn();
            }
        });
        return false;
    });
});

Hello

As it is an ajax form post, the form data should be inserted into your database by the insert.php script. Everything in the jQuery ajax form is passed to the processing script, so if you process it in the insert script it should work OK.

You must then return a text response using a simple echo statement in your insert script; this should include everything that you want to appear on your page.

The PHP in your insert script would be similar to the following.

At the beginning of the script:

$date = $_POST['msg_date'];

At the bottom of the script:

if ($success) {
    echo "Inserted on $date";
} else {
    echo "There was a problem processing your information.";
}

The jQuery code to achieve this would be:

// Perform post-submit tasks
function showResponse(responseText, statusText) {
    $('.response').text(responseText);
}

Plus:

success: showResponse,

in the processing options of your ajax form.

And just include an element with the class "response" where you want the response displayed in your HTML code.

PZ

-

Tecra S1 - Vodafone 3G data card - error 797 problem

I installed a 3G data card and the Vodafone software on a Tecra S1 running Windows XP. When I click "Connect" in the Vodafone software, it returns the message "Error 797: the modem could not be found".

In the network settings, the 3G connection that the software creates has a red cross through it and says "device not found", even though the modem is listed under Modems in the Control Panel and can be queried.

I have re-installed the card and the software several times. The card works on every other laptop I try, but not this one. Does anyone know if this is a known issue with the Tecra S1? More importantly, is there a fix? It is starting to become really annoying.

Thanks in advance.

Hello

As far as I know, laptops with HTT processors have problems with Vodafone cards, but your Tecra S1 has no HTT processor. Have you already tried disabling the Toshiba modem and using only the Vodafone card? I assume that you have installed the card properly and are using it with the Vodafone dashboard software.

-

Satellite M100-JG2 HotKey and date and time problem

Hi all

I'm not very good with computers, and I've had some problems for a while now. My laptop is almost three years old; I don't know why I'm only writing this after so long, but anyway. I think they are called "hotkeys" or "launch keys" (hopefully you know what I mean), and they no longer work. They worked for a while, but they do not work now. I went into the Control Panel, opened the Toshiba Controls and tried to change what each button does, but they still do not work. Does anyone know how to fix this?

Also, another problem that I have had since I first got the laptop is that my date and time are screwed up. If I set the time to 15:30, it works perfectly until around 16:15, when it goes back to 15:30, runs up to 16:15 again, and continues in this cycle until I manually change the time. I had a few friends (not professionals) look at it, but they could not figure it out. When I start my laptop I go into the settings from the startup screen and try to change it from there, but it keeps going through the cycle. If I click on the time, go to the "Internet Time" tab and click "Update Now", it updates and the time then works normally, but only until I turn off my laptop. The next time I turn it on, it goes through this 45-minute cycle again. Has anyone had this problem, or does anyone know how to fix it?

I'm not very good at explaining, but I hope you guys can help! I really appreciate it :)

> I think they are called "hotkeys" or "launch keys" (hopefully you know what I mean), and they no longer work. They worked for a while, but they do not work now. I went into the Control Panel, opened the Toshiba Controls and tried to change what each button does, but they still do not work. Does anyone know how to fix this?

The Toshiba Satellite M100-JG2 seems to be a Canadian-series laptop. I recommend visiting the Toshiba Canada driver page and downloading and reinstalling the tools called Toshiba Controls, Toshiba HotKey Utility, and Touch and Launch.

http://support.Toshiba.ca/support/download/ln_byModel.asp

> Also, another problem that I have had since I first got the laptop is that my date and time are screwed up. If I set the time to 15:30, it works perfectly until around 16:15, when it goes back to 15:30, runs up to 16:15 again, and continues in this cycle until I manually change the time. I had a few friends (not professionals) look at it, but they could not figure it out. When I start my laptop I go into the settings from the startup screen and try to change it from there, but it keeps going through the cycle. If I click on the time, go to the "Internet Time" tab and click "Update Now", it updates and the time then works normally, but only until I turn off my laptop. The next time I turn it on, it goes through this 45-minute cycle again. Has anyone had this problem, or does anyone know how to fix it?

I assume you have already set the date and time in the BIOS. Is that right?

If not, change it there and don't forget to save the changes. Then, in Control Panel > Date and Time > Internet Time tab, please uncheck the automatic synchronization with an Internet time server.

On the other tab, called Time Zone, please choose the correct time zone and tick the option "Automatically adjust clock for daylight saving changes".

Bye

-

Waveform chart export data problem

Hi all!

I have a problem with my waveform chart:

I set the chart history length to 5 x 24 x 60 x 60 = 432,000 samples. I plot a new point every minute, so that I can view the last 5 days. The problem is that when I export the chart data to Excel, the data are not in time order (if I export the data to the clipboard, the result is the same). The data start with the second day. And yes, before I export the data, the x-axis is set to autoscale, so the curves for all 5 days are displayed together. But after the export, in the table that opens in Excel (Office 2010, LabVIEW 2011, Silver chart), the data are really mixed up...

Anyway, the workaround is simple: 2 clicks in Excel put the data in order, but I'm curious to know why this happens. I guess I'm doing something wrong when building the waveform arrays?

Is this a bug?

Thanks in advance!

PS: I have attached a JPG export of the chart showing what I see, the Excel table with the "mixed up" data sequence, and the subVI that I use to generate and send the three chart points every minute.

Hey,

As far as I know, there was a change in the ActiveX interface from Office 2007 to Office 2010.

You wouldn't have this bug in Excel 2007.

Try using the 'Export Waveforms to Spreadsheet File' VI, or use a TDM file instead.

Oxford

Sebastian

-

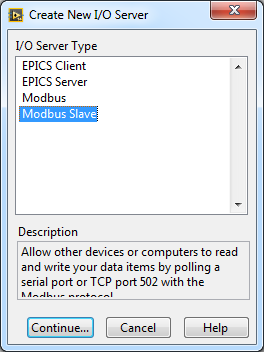

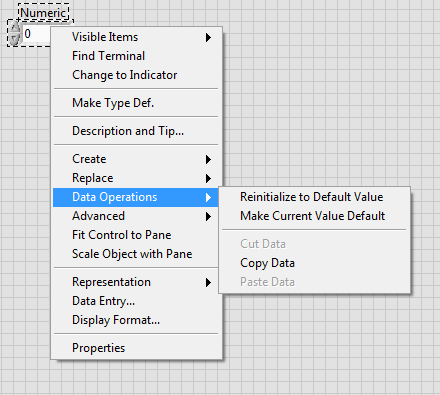

Creating an OPC I/O server and front panel DataSocket problem

Hi all!

I installed the OPC server from NI. I don't see the 'OPC Client' option when I try to create a new I/O server in a LabVIEW project.

Is some software missing?

Another question: I tried to connect to the OPC server with front panel DataSocket, but the problem is the same. When I click on the numeric control and open the "Operate" menu, there is no DataSocket connection option.

I don't know what the problem is.

I have attached two screenshots of my problem.

Dear vajasgeri1,

Do you have the LabVIEW DSC module installed? Without it you won't have the OPC client functionality.

And to configure a DataSocket connection, you must go to the Data Binding tab in the properties of the control.

BR,

-

BlackBerry Smartphones: unable to restore AutoText entries on a 9900

I upgraded from a 9700 to a 9900. I have 922 AutoText entries that I am unable to restore to the 9900.

Thank you

If you have a backup of the 9700, you can go to Desktop Software > Device > Restore > select the appropriate 9700 backup file, select data (not a full restore) > select ONLY the AutoText database.

-

Greetings,

version: 11.2.0.2

Test case-

create table TEST_S

(req_id number,

comment_id number,

comments varchar2(2000));

insert into TEST_S values (1, 20, 'this is comment #1, id #1');

insert into TEST_S values (2, 10, 'this is comment #1, id #2');

insert into TEST_S values (2, 20, 'this is comment #2, id #2');

insert into TEST_S values (2, 100, 'this is comment #3, id #2');

insert into TEST_S values (3, 15, 'this is comment #1, id #3');

insert into TEST_S values (3, 25, 'this is comment #2, id #3');

insert into TEST_S values (3, 16, 'this is comment #3, id #3');

insert into TEST_S values (3, 25, 'this is comment #4, id #3'); -- req_id can have same comment_id

insert into TEST_S values (8, 9, 'this is comment #1, id #8');

commit;

I need to pivot the data of this table TEST_S and insert it into TEST_D:

create table TEST_D

(req_id number,

comment1 varchar2(2000),

comment2 varchar2(2000),

comment3 varchar2(2000),

comment4 varchar2(2000),

comment5 varchar2(2000));

...

and once pivoted, the result should be:

| req_id | comment1 | comment2 | comment3 | comment4 | comment5 |
|---|---|---|---|---|---|
| 1 | this is comment #1, id #1 | | | | |
| 2 | this is comment #1, id #2 | this is comment #2, id #2 | this is comment #3, id #2 | | |
| 3 | this is comment #1, id #3 | this is comment #2, id #3 | this is comment #3, id #3 | this is comment #4, id #3 | |
| 8 | this is comment #1, id #8 | | | | |

... and my attempt:

DECLARE

CURSOR c2

IS

SELECT req_id, comment_id, comments

FROM test_s

ORDER BY 1, 2;

id1 c2%ROWTYPE;

id2 c2%ROWTYPE;

id3 c2%ROWTYPE;

id4 c2%ROWTYPE;

id5 c2%ROWTYPE;

BEGIN

FOR id IN

(SELECT DISTINCT req_id FROM test_s ORDER BY req_id

)

LOOP

OPEN c2;

FETCH c2 INTO id1;

FETCH c2 INTO id2;

FETCH c2 INTO id3;

FETCH c2 INTO id4;

FETCH c2 INTO id5;

CLOSE c2;

INSERT INTO test_d

SELECT id.req_id,

MAX(CASE WHEN comment_id = id1.comment_id THEN comments END) AS comment1,

MAX(CASE WHEN comment_id = id2.comment_id THEN comments END) AS comment2,

MAX(CASE WHEN comment_id = id3.comment_id THEN comments END) AS comment3,

MAX(CASE WHEN comment_id = id4.comment_id THEN comments END) AS comment4,

MAX(CASE WHEN comment_id = id5.comment_id THEN comments END) AS comment5

FROM test_s

WHERE req_id = id.req_id

GROUP BY id.req_id;

END LOOP;

END;

... but the result is:

| REQ_ID | COMMENT1 | COMMENT2 | COMMENT3 | COMMENT4 | COMMENT5 |
|---|---|---|---|---|---|
| 1 | this is comment #1, id #1 | | this is comment #1, id #1 | | |
| 2 | this is comment #2, id #2 | this is comment #1, id #2 | this is comment #2, id #2 | this is comment #3, id #2 | |
| 3 | | | | | this is comment #1, id #3 |
| 8 | | | | | |

What am I doing wrong? Or is there a better approach?

In reality, 'TEST_S' has 453K rows and 178K distinct 'req_id' values, and each 'req_id' has between 1 and 24 'comment_id' values.

'TEST_S' can contain up to 16 comments / 'comment_id'. If there are more than 16 'comment_id' values for a 'req_id', then the rest of the 'comment_id' values go to another table (that is another story).

Thanks for your time,

T

Hello

Here's one way:

INSERT INTO test_d (req_id, comment1, comment2, comment3, comment4, comment5

-- ..., comment16 -- as needed

)

WITH got_r_num AS

(

SELECT req_id, comments

, ROW_NUMBER () OVER ( PARTITION BY req_id

ORDER BY comments -- or whatever you want

) AS r_num

FROM test_s

)

SELECT *

FROM got_r_num

PIVOT ( MIN (comments)

FOR r_num IN ( 1 AS comment1

, 2 AS comment2

, 3 AS comment3

, 4 AS comment4

, 5 AS comment5

-- ..., 16 AS comment16 -- as needed

)

)

;

You can add as many more comment columns as you like. Just add them to the column list at the beginning and to the PIVOT clause at the end; nothing else in the statement needs to change.

If you have more than 5 comments (or 16, or whatever the limit is) for the same req_id, then the extras will simply be ignored; no error is raised.
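If the ignored extras need to be kept somewhere (the question mentions that comments beyond the limit go to another table), the same ROW_NUMBER numbering can be reused to pick them out. This is only a sketch: the overflow table name test_s_overflow is hypothetical and is assumed to have the same three columns as test_s, and the limit of 5 should be changed to match the number of comment columns in test_d. The ORDER BY has to be the same as in the pivot statement so that both statements number the rows the same way.

```sql
-- Hypothetical overflow table (an assumption, not from the thread):
--   test_s_overflow (req_id NUMBER, comment_id NUMBER, comments VARCHAR2(2000))
INSERT INTO test_s_overflow (req_id, comment_id, comments)
WITH got_r_num AS
(
    SELECT  req_id, comment_id, comments
    ,       ROW_NUMBER () OVER ( PARTITION BY req_id
                                 ORDER BY     comments  -- same ordering as the pivot
                               ) AS r_num
    FROM    test_s
)
SELECT  req_id, comment_id, comments
FROM    got_r_num
WHERE   r_num > 5   -- rows the pivot leaves out; use 16 for comment1..comment16
;
```

Note that if two rows of the same req_id have identical comments, ROW_NUMBER may number them in either order, so an ordering that is unique within each req_id is safer when two separate statements must agree.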

I assume you are copying the data like this for display only, as in a data warehouse. For most purposes, including any where you might need to search the comments, the structure of the test_s table is much better than that of test_d.
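As for what goes wrong in the original PL/SQL attempt: cursor c2 is not restricted to the current req_id, so every pass through the loop reopens it and fetches the same first five rows of the whole table. With the test data, id1 to id5 therefore always hold comment_id 20, 10, 20, 100 and 15, and each req_id's comments land in whichever columns happen to match those values. The query below, a sketch that uses only the test_s table from the question, shows the five rows that c2 returns on every iteration:

```sql
-- The first five rows of the cursor's result set, regardless of
-- which req_id the outer loop is currently processing
SELECT  *
FROM    (
            SELECT    req_id, comment_id, comments
            FROM      test_s
            ORDER BY  req_id, comment_id
        )
WHERE   ROWNUM <= 5;
```

Restricting the cursor to one req_id (for example with a cursor parameter) would repair the loop, but the single INSERT with PIVOT avoids the row-by-row processing altogether.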

-

Palm Pre for Sprint data back up problem

I'm having problems backing up data with my Palm Pre for Sprint (Palm webOS 1.4.1.1).

I have Backup set to "On" so that my data is backed up to my Palm profile automatically every day; however, it has not backed up automatically since June 5. I only noticed today (June 17) and tried to back up the data manually by tapping the "Back Up Data Now" button, but the screen just hangs indefinitely with a note saying "Preparing" and never backs up the data.

On a related note, between June 6 and June 10 I took a trip to Canada, where my phone was roaming, so I can understand why it might not have backed up in those circumstances. But I returned to the United States on June 10, and it has not backed up automatically since then, and it does not appear to be able to back up manually either.

Anyone had a similar issue or know what I could do to remedy this?

Sure enough, it seems that a simple restart solved my problem.

Here is the link to the post that helped:

Thank you.

Maybe you are looking for

-

Simultaneous single-ended and differential measurements on a USB-6009 device?

-

lines on the screen of the computer

horizontal lines through my monitor

-

Vista slow system and displaying the message "Windows has a problem.

I feel like a complete NEWB. I'm going on my computer today after two days. My computer starts slowly. More slowly than usual. The first thing I see is this grey screen with a white cursor that is HUGE on this subject. At the same time, I see that it

-

60XL color cartridge won't print on my C4650

Have had this printer for about a year and no problems. Just changed the color cartridge and no color prints, just black. I tried to diagnose the problem with the HP Solution Center, shows the cartridge as full, clean the cartridge 3 x still no color

-

I am trying to download Windows 10 using the ISO method. Everything worked so far, but now I'm up to the point where you are prompted to enter your product key. I entered my correct product key, and it came up saying that it was an invalid key. I retr