Trimming channel data at a trigger on a channel

Hello

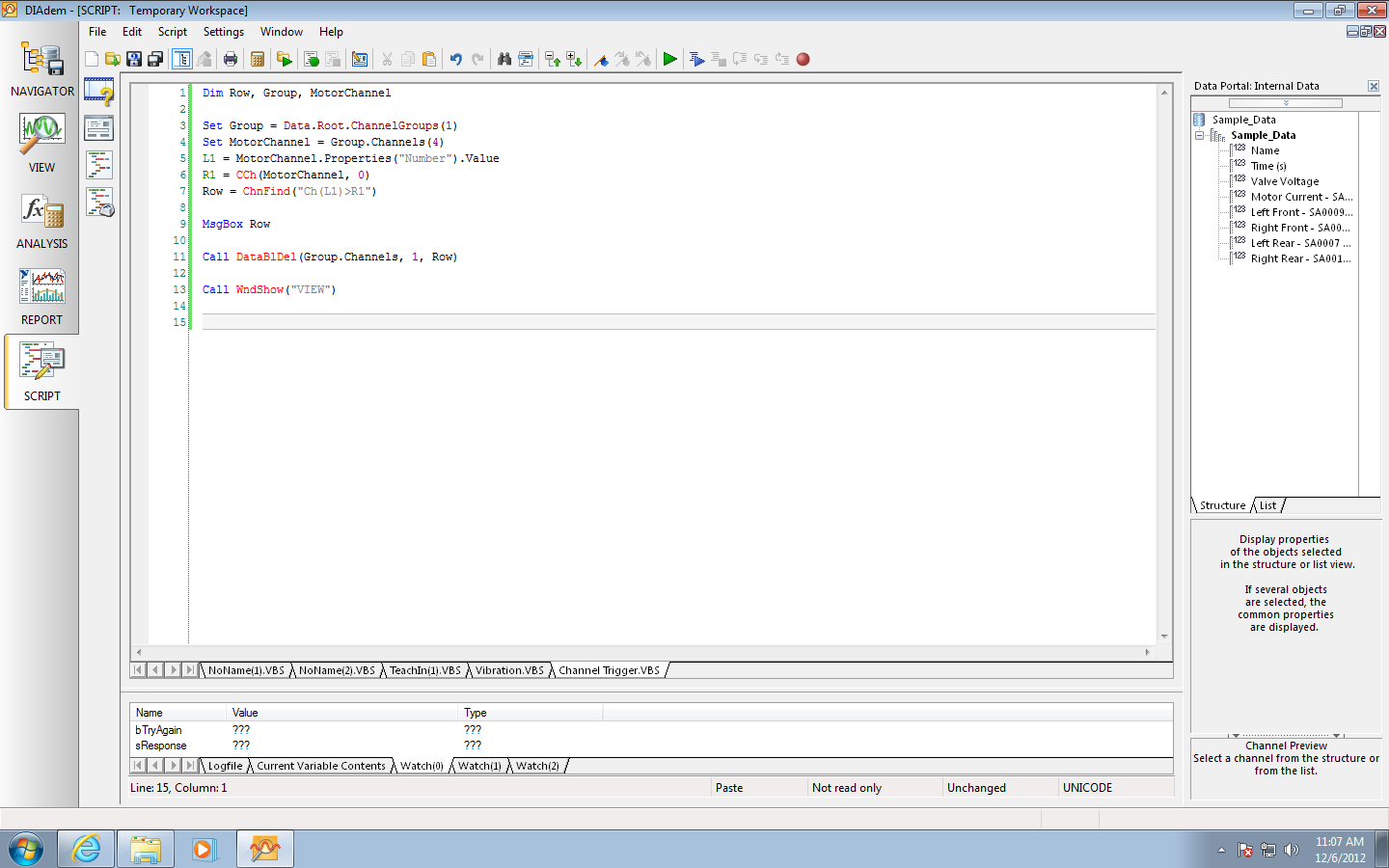

I am interested in developing a DIAdem script that can look for a trigger value on a specific channel in a file and delete all data on all channels before the trigger index.

For example, the data file below contains a trace of a pump activation. I would like to trigger at the moment where the engine power channel begins to increase from zero (so that this point becomes t = 0 for all waveforms).

This could be done in the LabVIEW software that acquires the data, but I'd rather do it in DIAdem when post-processing the data.

I searched the discussion forums and the code examples for something similar, but found nothing. If there is a similar script that I can base mine on, that would be great.

Any input would be appreciated.

Thank you

Hi Andreas,

I just had Brad Turpin of NI look at this, and he created this script in about 10 seconds. I'll have to tweak it a bit, but it seems good.

I'm sure yours works too, and I'll take a look at it when I get back to work.

Thanks for the help.
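
The script mentioned above is not shown in the thread. As a rough illustration of the idea only, here is a minimal Python sketch (not DIAdem code). The channel names, the sample data, and the rule "first sample above a threshold" are all assumptions made for the example.

```python
def trim_at_trigger(time, channels, trigger_name, threshold=0.0):
    """Drop every sample before the trigger and shift time so the trigger is t = 0.

    Assumption: the trigger is the first sample where the trigger channel
    exceeds `threshold`; all channels share the same time axis.
    """
    trigger = channels[trigger_name]
    # Index of the first sample above the threshold, or None if never reached.
    idx = next((i for i, v in enumerate(trigger) if v > threshold), None)
    if idx is None:
        raise ValueError("trigger condition never met")
    t0 = time[idx]
    new_time = [t - t0 for t in time[idx:]]
    # Cut every channel at the same index so the waveforms stay aligned.
    new_channels = {name: values[idx:] for name, values in channels.items()}
    return idx, new_time, new_channels


# Hypothetical data: engine power stays at zero for three samples, then rises.
time = [0.0, 0.1, 0.2, 0.3, 0.4]
channels = {
    "engine_power": [0.0, 0.0, 0.0, 1.5, 3.0],
    "pressure": [10, 11, 12, 13, 14],
}
idx, trimmed_time, trimmed = trim_at_trigger(time, channels, "engine_power")
```

If the last zero sample should be kept as the t = 0 point instead, subtracting one from the index (when it is greater than zero) would be the only change needed.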

Tags: NI Software

Similar Questions

-

How to exchange data between 2 while loops in real time

Hello

I have 2 while loops:

the 1st loop contains the data acquisition program

the 2nd loop contains the control program

My question is how to exchange data between the 2 while loops in real time.

I tried a local variable and direct wiring between the 2 while loops.

It does not work (there is a delay).

-

Fact with multiple date columns joined to the time dimension

Hello everyone

First, here is my scenario:

I have an order table with these columns

order_no (int),

create_date (date),

approve_date (date)

close_Date (date)

----

I have a time hierarchy dimension: time

I want to see, along the time hierarchy, how many orders were created, approved and closed for each time level (year, quarter, month, ...), as below:

Date | created | approved | closed
--------|---------|----------|-------
2007-Q1 | 50 | 40 | 30
2007-Q2 | 60 | 20 | 10
2007-Q3 | 10 | 14 | 11
2007-Q4 | 67 | 28 | 22
2008-Q1 | 20 | 13 | 8
2008-Q2 | 55 | 25 | 20
2008-Q3 | 75 | 35 | 20
2008-Q4 | 90 | 20 | 2

…

My solution:

Physical layer:

1. I created an alias f_order as the fact table for the order table.

2. I joined f_order with d_time (the time alias) on f_order.created_Date = d_time.day_id

3. I added 2 logical columns with these measure formulas:

is_approved (IF approve_date IS NULL THEN 0 ELSE 1), with Sum aggregation

is_closed (IF closed_date IS NULL THEN 0 ELSE 1), with Sum aggregation

order_no (used for the created measure, with Count aggregation)

When I create the report in Analytics, the generated query uses created_date, and this isn't what I expected!

What is the best solution?

1- Should I create 3 fact aliases of the order table (f_order_created, f_order_approved, f_order_closed) and join each of them to d_time on these columns?

f_order_created.created_Date = d_time.day_id

f_order_approved.approved_Date = d_time.day_id

f_order_closed.closed_Date = d_time.day_id

2- Did I create my measures correctly?

Hello anonymous user,

The approach with three fact aliases, which you then use in three separate logical table sources for your logical fact, is the right one. This way, you keep the time dimension canonical (defined once) and let the fact do the role-playing.

So you won't need 3 facts in the logical layer, as you ask above, but only 3 LTS. Physically, you need 3 aliases joined to the time dimension with the join specifications you mention, of course.

PS: Jeff wrote about this a few years ago, if you want to take a look.
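
To make the role-playing idea concrete outside the BI tool, here is a small Python sketch. It only illustrates the counting logic: the same order rows are bucketed three times, once per date column, against a single quarter calendar. The sample orders and the `quarter` helper are made up for the example.

```python
from collections import Counter
from datetime import date


def quarter(d):
    """Label a date with its year and quarter, e.g. 2007-Q1."""
    return f"{d.year}-Q{(d.month - 1) // 3 + 1}"


# Hypothetical order rows; None means the order is not approved/closed yet.
orders = [
    {"order_no": 1, "create_date": date(2007, 1, 15),
     "approve_date": date(2007, 2, 1), "close_date": date(2007, 5, 3)},
    {"order_no": 2, "create_date": date(2007, 3, 20),
     "approve_date": date(2007, 4, 2), "close_date": None},
    {"order_no": 3, "create_date": date(2007, 4, 10),
     "approve_date": None, "close_date": None},
]


def counts_by_quarter(rows):
    """Count orders per quarter three times, once per date role."""
    roles = (("created", "create_date"),
             ("approved", "approve_date"),
             ("closed", "close_date"))
    result = {}
    for measure, column in roles:
        # Each role joins to the calendar through its own date column.
        per_quarter = Counter(quarter(r[column]) for r in rows if r[column] is not None)
        for q, n in per_quarter.items():
            result.setdefault(q, {"created": 0, "approved": 0, "closed": 0})[measure] = n
    return result


report = counts_by_quarter(orders)
```

Each pass over the rows plays the part of one alias (one logical table source), which is why a single join on created_date cannot produce the approved and closed columns.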

-

Table does not fetch all the data of the VO at once?

Hello everyone

My version of JDev is 11.1.2.3.0.

I developed an ADF page that has a single table that should show all the data in the VO at the same time.

The table has vertical and horizontal scroll bars, and the VO has more than 900 records.

The issue is that when the page loads, the table shows 20-25 records; when I then scroll down, the data is fetched at that moment (with a "retrieving data" message), so it takes a long time to see the data because it is fetched as I scroll.

How can I avoid this?

How can I retrieve all the data from the VO (all 900 records) so that no fetching happens when I scroll?

Also, the size of the table increases based on the data when I scroll down.

How can I avoid this?

How can we fix the size of the table regardless of the content?

All suggestions will be really useful...

Thank you.

The message "Retrieving data..." is issued by the client-side rich table UI component when it fetches rows from the server-side model component into its local row buffer. Even if the server-side model component has fetched all the rows from the database (e.g. the VO has fetched all the rows from the database into the VO cache), the client-side table UI component does not download all of them locally; it downloads them as needed, in portions whose size is controlled by the 'fetchSize' attribute of the table. By default, the 'fetchSize' attribute is set to the value of the 'rangeSize' property of the corresponding ADF iterator binding (whose default value is 25). So if you scroll the UI table past the 25th row, the table downloads the next set of 25 rows from its server-side model component (and displays the message "Retrieving data..." while doing so), and so on.

If you want to avoid frequent downloading of the next rowset from the server-side model component to the client-side table UI component, you can set the 'fetchSize' attribute of the table to a larger number, for example 1000.

In this way, the client-side UI table would download 1000 rows locally (if that many rows exist, of course), and it won't download more rows until you scroll the table past the 1000th row (when it would download the next set of 1000 rows).

Be careful when setting the 'fetchSize' of the table to a large number: the larger the 'fetchSize', the larger the HTTP response, the longer the response time, and the more memory the client consumes for the table buffer. Note that -1 (i.e. "fetch all") is not a valid value for the 'fetchSize' attribute. If you set it to -1, it will default to 25.
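
The trade-off can be put into rough numbers. The Python sketch below is a simplified model, not ADF code: it assumes every fetch returns exactly `fetch_size` rows until the data runs out, and the 900-row figure is taken from the question above.

```python
import math


def fetch_round_trips(total_rows, fetch_size):
    """Number of client-to-server fetches needed to scroll through all rows.

    Simplified model: each fetch returns up to `fetch_size` rows.
    """
    return math.ceil(total_rows / fetch_size)


trips_default = fetch_round_trips(900, 25)    # default rangeSize of 25
trips_large = fetch_round_trips(900, 1000)    # fetchSize raised to 1000
```

With the default of 25 the table would need 36 fetches to reach the last of 900 rows, against a single (much larger) response with a fetch size of 1000.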

Dimitar

-

Key events don't trigger all the time

Hi all

In my application, I added a key listener, but it doesn't trigger all the time. For example, right after I log in, it does not trigger. But when I go to another window and come back using Alt+Tab, it works. The key event also doesn't trigger when there is no alert on the page.

Kind regards

Roman.

Listen on systemManager.stage, not on the application.

-

Data logging control: time channel NI_DataType

I'm trying to replicate some features of the Data Logging Control's property selection from the VeriStand Workspace in a LabVIEW application (I wish I could just get the VI for the Workspace control). In the Data Logging Control's properties -> Channel Data category, the time channel can be selected. When I select absolute time, it records the system path = 'targets, controller, channels, Absolute System Time', and I get a TDMS property NI_DataType = 68. If I select the channel manually (Add Channels), I get NI_DataType = 10. The difference seems to be that NI_DataType = 68 has the nicely formatted time (m/d/Y/min), while NI_DataType = 10 stores the time as a large number that represents the number of seconds. The NI_DataType property is read-only. How can I get the nicely formatted NI_DataType = 68 to be logged? Is this done in post-processing? If so, how?

Thank you!

I have found that the TDMS Channel Group type def (NI_VS Data Logging API.lvlib) that gets wired to New Data Logging Specification.vi contains a Time Channels Options type def, which allows different time channels to be configured and named.

-

Can't see the rise time on a USB-6212 BNC channel

Hello

I use an NI USB-6212 BNC data acquisition device to monitor rise time. One channel (AO0) is used to set a voltage, which is monitored by another channel (AI1). The problem I have is that I'm not able to see the rise time on AI1, only a jump to the specified voltage.

I started by editing one of the hardware examples. Initially, I connected the two channels with a BNC-to-BNC cable, but thought I would do better with a simple circuit. I set up a resistor on a breadboard and connected my channels to it with crocodile clips. Unfortunately, the result remained the same: all I see is a jump from the previous voltage setting (usually 0) to the new one I entered, with an infinite slope (a vertical line).

I am fairly new to DAQ and plan to work with it for a while. The simple circuit will be expanded considerably for filter tests and other applications, but for now I have to get the basics right. I would appreciate any help you can offer; currently, my only thoughts are whether this could be a sampling problem (I raised the rate to 400000) and whether I should add a cap to slow things down. I want to get this to work, however, so I can build on it by looking at filter roll-off rates and sweeps.

I enclose my VIs in the hope that they will help you decipher the problem. I have tried different combinations on DAQ Read and Write with single or multiple channels and 1DB/Waveform, without success, although I'm pretty green on how they work.

Hoping to hear from you,

Yusif Nurizade

Yusif Nurizade,

What type of signal are you generating on the AO line? The default value in the output array is a single zero element. That doesn't exactly have a rise time!

To measure rise times, the sampling rate on the AI line should be fast enough to get several samples over the course of the rising part of the measured waveform.

The signal on the AO line will always move in steps from one value to another. This is the way D/A converters work. The 6212 specifications indicate a slew rate of 5 V/µs. If two successive samples were 0 and 10 V, it would take the output 2 µs (10 V ÷ 5 V/µs) to move from one value to the other. Even sampling the AI line at 400 kS/s, you would get no more than one intermediate value and would not be able to measure the rise time.

Try putting an R-C circuit with a time constant on the order of milliseconds between the AO and AI connections. You should be able to measure that.
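
To put numbers on this advice, here is a short Python sketch. The 5 V/µs slew rate and the 400 kS/s rate come from the reply above; the 10 kΩ and 0.1 µF values are only an example chosen to give a 1 ms time constant, and the 10 % to 90 % rise time of an ideal first-order RC circuit is taken as ln(9)·RC (about 2.2·RC).

```python
import math


def slew_time_us(step_v, slew_v_per_us):
    """Time in microseconds for the output to slew across a voltage step."""
    return step_v / slew_v_per_us


def rc_rise_time_s(r_ohm, c_farad):
    """10 % to 90 % rise time of an ideal first-order RC low-pass, in seconds."""
    return math.log(9) * r_ohm * c_farad


def samples_during(duration_s, rate_hz):
    """Whole sample intervals that fit into a duration at a given sample rate."""
    return int(duration_s * rate_hz)


rate_hz = 400_000                       # AI sample rate mentioned in the thread
bare_step_us = slew_time_us(10.0, 5.0)  # 0 V to 10 V step with no RC circuit
bare_samples = samples_during(bare_step_us * 1e-6, rate_hz)

# Example values only: 10 kOhm and 0.1 uF give a 1 ms time constant.
rise_s = rc_rise_time_s(10e3, 0.1e-6)
rc_samples = samples_during(rise_s, rate_hz)
```

The bare step is over in 2 µs, shorter than one 2.5 µs sample interval, while the 1 ms RC circuit stretches the rise over several hundred samples.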

Lynn

-

CQL join with 2 application-timestamped channels

Hello

I am trying to join two channels that are application-timestamped; both are total-ordered. Each of these 2 channels retrieves its data from a table source.

The EPN is something like this:

Table A -> Processor A -> Channel A ->

JoinProcessor -> Channel C

Table B -> Processor B -> Channel B ->

My question is, how is time "simulated" in the join processor? Is the timestamp of the first event that arrives on either channel treated as the starting point? Or does processing block until all channels have published their first event?

Channel A

--------------

timestamp

1000

6000

Channel B

-------------

timestamp

4000

12000

Both Channel B events arrive in the system a little later, due to the performance of the db.

I would like to know whether the following query will work, given that I would like to match the elements of channels A and B that fall within a 10-second window and output each match once in a stream.

Is the following query correct?

SELECT a.*

FROM A [NOW] AS a, B [RANGE 10 SECONDS SLIDE 10 SECONDS] AS b

WHERE a.property = b.property

I'm having a hard time getting the join to produce results, even though I checked the application timestamps on each stream and they are correct.

What could possibly cause this?

Thank you!

Published by: Jarell March 17, 2011 03:19

Hi Jarrell,

I went through the project that you had sent to Andy.

In my opinion, the following query should meet your needs:

ISTREAM (select t.aparty, t.bparty from TrafficaSender [range 15 seconds] t, CatSender [range 15 seconds] c where t.aparty = c.aparty AND t.bparty = c.bparty)

I used the following test data for TrafficaSender and CatSender (I show only the aparty and bparty fields, and only the last 4 characters of each)

TrafficaSender (aparty, bparty)

At t = 3 seconds, (9201, 9900)

At t = 14 seconds, (9200, 9909)

At t = 28 seconds, (9202, 9901)

CatSender (aparty, bparty)

At t = 16 seconds, (9200, 9909)

At t = 29 seconds, (9202, 9901)

For query q1 = ISTREAM (select t.aparty, t.bparty from TrafficaSender [range 15 seconds] t, CatSender [range 15 seconds] c where t.aparty = c.aparty AND t.bparty = c.bparty)

the results were:

At t = 16 seconds, (9200, 9909)

At t = 29 seconds, (9202, 9901)

and that seems OK to me.

Please note the following:

(1) In the query above I do NOT require an "abs1(t.ELEMENT_TIME - c.ELEMENT_TIME) <= 15000" in the where clause. This is because of the way the CQL model correlates 2 (or more) relations (in a join). Time is implicit in this correlation -- here is a more detailed explanation.

In the above query, let W1 denote the window TrafficaSender [range 15] and W2 denote the window CatSender [range 15].

Both W1 and W2 evaluate to CQL relations, since in the CQL model a WINDOW operation over a stream yields a relation.

Let us look at the states of these two relations over time.

W1(0) = W2(0) = {} /* empty */

... both stay empty up to t = 2

W1(3) = {(9201, 9900)}, W2(3) = {}

same content for W1 and W2 until t = 13

W1(14) = {(9201, 9900), (9200, 9909)}, W2(14) = {}

same content at t = 15

W1(16) = {(9201, 9900), (9200, 9909)}, W2(16) = {(9200, 9909)}

same content at t = 17

W1(18) = {(9200, 9909)}, W2(18) = {(9200, 9909)}

same content up to t = 27

W1(28) = {(9200, 9909), (9202, 9901)}, W2(28) = {(9200, 9909)}

W1(29) = {(9202, 9901)}, W2(29) = {(9200, 9909), (9202, 9901)}

Now, the result.

Let R = select t.aparty, t.bparty from TrafficaSender [range 15 seconds] t, CatSender [range 15 seconds] c where t.aparty = c.aparty AND t.bparty = c.bparty

This is the part of the query without the ISTREAM. R corresponds to a relation, since a JOIN of 2 relations (W1 and W2 in this case) yields a relation according to the CQL model.

Now, here is the most important point about correlation in JOINs and the implicit role of time: R(t) is obtained by joining W1(t) and W2(t). Using this, we can work out the content of R over time.

R(0) = JOIN of W1(0), W2(0) = {}

the same content up to t = 15, since W2 is empty up to W2(15)

R(16) = JOIN of W1(16), W2(16) = {(9200, 9909)}

same content up to t = 28; even though W1 changes at t = 18 and t = 28, these changes do not influence the result of the JOIN

R(29) = JOIN of W1(29), W2(29) = {(9202, 9901)}

Now the actual query is ISTREAM(R). As Alex explained in the previous post, ISTREAM yields a stream where each member of the difference R(t) - R(t-1) is associated with the timestamp t. Applying this to R above, we get

R(t) - R(t-1) is empty up to t = 15

R(16) - R(16 seconds - 1 nanosecond) = {(9200, 9909)}, associated with timestamp = 16 seconds

R(t) - R(t-1) is again empty until t = 29

R(29) - R(29 seconds - 1 nanosecond) = {(9202, 9901)}, associated with timestamp = 29 seconds

This explains the output.
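
The walkthrough above can be replayed with a few lines of Python. This is only a simulation of the reasoning in this post, not CQL or the CQL engine: it steps time in whole seconds, and it assumes a tuple with timestamp ts belongs to a [range 15 seconds] window for ts <= t < ts + 15, which is the behaviour implied by the W1 and W2 states listed above.

```python
def window(events, t, range_s):
    """Relation produced by a range window at time t.

    Assumption: a tuple stamped ts is visible while ts <= t < ts + range_s.
    """
    return {tup for ts, tup in events if ts <= t < ts + range_s}


def istream_join(left, right, range_s, horizon):
    """Emit (t, tuple) for each tuple that enters the join result at time t."""
    out = []
    previous = set()
    for t in range(horizon + 1):
        # Joining on both fields being equal is a set intersection here.
        current = window(left, t, range_s) & window(right, t, range_s)
        # ISTREAM: only tuples in R(t) that were not in R(t-1).
        for tup in sorted(current - previous):
            out.append((t, tup))
        previous = current
    return out


# Test data from the post: (timestamp in seconds, (aparty, bparty)).
traffica = [(3, (9201, 9900)), (14, (9200, 9909)), (28, (9202, 9901))]
cat = [(16, (9200, 9909)), (29, (9202, 9901))]

result = istream_join(traffica, cat, 15, 30)
```

The simulation yields one output at t = 16 and one at t = 29, matching the results reported above, without any explicit comparison of the two timestamps in the join condition.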

-

How can I get Thunderbird to display the date sent instead of just the time?

New messages only. It seems to have started with the change to 2015.

By default, Thunderbird shows the time for the messages today. It takes for granted that you know what day it is. The date is added at midnight.

Is that what you see?

-

How can I make the date of the message appear with time for unread messages?

Other mail systems I have used show the date and time with the messages. I need this displayed to determine the age and importance of received mail. It also helps when archiving or saving old mail, so that I can file mail without duplicating archived messages.

Thunderbird displays the time and date for all messages except today's messages. Only the time is displayed for today's messages, and the date is added at midnight.

-

Time-shift recording of decrypted channels on 42WL863G

I have had problems with time-shift recording on the 42WL863G for (at least) a few months.

When starting a time-shift recording of a decrypted satellite channel (decoded by a SMiT Irdeto CI+ CAM with an ORF HD card), everything seems OK at first.

But after a few seconds (about 6), the recording stops by itself.

When you then start playback, 2 or 3 seconds are played, and then it's over. When you try the same thing with unencrypted channels, everything is OK (as described in the manual).

Scheduled or one-touch recording of decrypted channels also works very well.

Is there a solution for this problem?

FW is the newest (... (15).

It could be a problem with the SMiT Irdeto CAM.

Would be interesting to check another CAM.

What do you think?

Do you have this problem with all encrypted channels, or are only some specific channels affected?

-

Should cellular data be on all the time?

Should cellular data in Settings be on all the time?

No, not unless you need a data connection and there is no Wi-Fi available.

Without Wi-Fi or cellular data, your iPhone is just a basic cell phone.

-

Restoring my data to a new SSD with Time Machine

I'm going to buy a new SSD, and I have backed up all my data with Time Machine on an external hard drive. Is it possible to restore all my data through Time Machine alone? I have a bootable USB key with El Capitan on it, so I'm not worried about the operating system. Wouldn't Time Machine recognize my computer as a completely different machine? Is that a problem?

If it is possible, how would I go about it?

hugosixtynine wrote:

I'm going to buy a new SSD hard drive, and I backed up all my data in Time Machine on an external hard drive. Is it possible to restore all my data only through Time Machine?

Your new SSD must be formatted as Mac OS Extended (Journaled) with a GUID partition map using Disk Utility. Boot into Recovery (Command-R).

Install OS X and use the Time machine to restore all your data.

-

Restore the data in the external drive using Time Machine

Hi y'all!

I store my photos on an external hard drive because there is not enough space on my internal drive. This is actually my second external hard drive, as my first one (Adata HE720) failed at the beginning of last year. Unfortunately, my WD Elements has now stopped working and I can't restore its data using Time Machine, as the drive is not found in Time Machine under Devices, even though I'm sure that I restored my photo library and many other files from my old external drive using Time Machine.

Can someone tell me how to recover my old files? I have a lot of other important data on it, including my photos, and I don't want to lose them. I'm fairly positive that Time Machine should let me get the data back, as that is the point of it.

Thank you in advance!

P.S. If you guys have any recommendation for a reliable external drive, please do tell me!

Hey guys, I found the problem.

It turns out that I never told Time Machine to stop excluding my external drive from the backup; therefore, absolutely no copy of it was made when Time Machine ran. I really don't understand why Apple made this the default behavior, but it is what it is.

So, people, don't forget to go to Time Machine preferences and make sure that you remove the external hard drive that you want to back up from the exclusion list under Options. Once you do, you can enter Time Machine and your drive will appear under Devices.

See you soon.

-

Since getting my Mac Pro in early November, my monthly data consumption has increased to 3 times what it was with my Windows-based machine. My average for the 3 months before getting the Mac was 3.5 GB/mo. Last month was 13 GB, and halfway through this month I am approaching 6 GB. What could be causing the data usage to jump so radically?

Are you syncing to any online services, for example Google Docs, Dropbox, or iCloud sharing?

Are you updating a large iTunes library?

Are you sharing with a phone, such as calendar or Photos?

Are you making online backups?

Maybe you are looking for

-

Apparently installed an unwanted application SmartShop.

The SmartShopper app installs links on different pages and automatically displays unwanted videos. How can I get rid of him?

-

Pavilion G7 stops completely and can be restarted, once, but stops then down immediately

Not really a deadlock, as the machine stops completely, no lights. It can be restarted, but just once and only for a few moments. After that, it will not start for several minutes. It happens by itself or can be caused by hand pressure or lower right

-

Pavilion DV7 start error message - unable to start BTVSTACK. EXE, BTBIP. Missing DLL

I have a Pavilion Dv7 (A3F83AV) running windows 7, 64 bit and recently to get the error message at startup telling BTVSTACK. EXE cannot start as long as BTBIP. DLL is missing. I found that this has to do with the bluetooth, but I'm not sure which is

-

'Fn & F5' only works part of the time

Hi, I have trouble with the Fn & F5 - combination on my X 60-portable: When I press this combination of key it opens the black menu window, but it shows that the "Wireless" tab where you can enable WLAN & BT enabled or disabled. The "Location profile

-

Aironet 1142N-A-K9 quick setup for security

Hi all! We recently bought this access point for a 'fast' proof of concept for Wireless N in our hotel. I bought the only model in the hope that it would be a quick installation to test with stand. I am familiar with the Routing and switching with