First bare-metal hypervisor: best practices

Hi, I'm building a Supermicro SuperServer 7046T-NTR+ with 2 x Xeon E5620 CPUs, 48 GB of DDR3-1333 ECC registered RAM (6 x 8 GB), and 8 x 1 TB 7200 RPM SATA drives with 32 MB cache. I intend to install the free version of ESXi. I need to run as many Windows XP VMs on it as the hardware allows. I can expand to 144 GB of RAM if necessary. The Windows XP VMs run with 512 MB or 1024 MB of RAM and 8 GB virtual drives. The VMs average a light load, but there are bursts of moderate activity. I am about to start installing ESXi and realized that I still have questions.

With 8 x 1 TB drives, should I build two RAID 10 arrays, install ESXi on one, and use both for the VMs? Or should I mirror 2 drives for ESXi and 2 for swap files, and then put the VMs on RAID 10?

With 48 GB of RAM and 1024 MB per VM, how many Windows XP VMs can I expect to run?

How many people find SATA drives to be the bottleneck?

Is this statement true?

The free version of ESXi will support 2 quad-core processors, but moving to 6- or 8-core processors requires a new ESXi license.

Thank you guys

The more spindles you have, the better performance you will get. Also, make sure you do disk alignment. Also, do not format the VMFS datastore during installation; create it in the vSphere Client so that your VMFS is aligned. See this link for more information; misalignment is something that can really eat into your disk performance: http://www.yellow-bricks.com/2010/04/08/aligning-your-VMS-virtual-harddisks/
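As a rough illustration of the alignment check, the Python sketch below tests whether a partition's starting offset falls on a chosen boundary. The 64 KB boundary and the 512-byte sector size are assumptions for illustration; sector 63 is the classic Windows XP default partition start.

```python
SECTOR_BYTES = 512  # assumed logical sector size


def is_aligned(start_sector: int, boundary_bytes: int = 64 * 1024) -> bool:
    """Return True if the partition start lies on a multiple of boundary_bytes."""
    return (start_sector * SECTOR_BYTES) % boundary_bytes == 0


if __name__ == "__main__":
    # Sector 63 is the old XP default; 128 and 2048 are common aligned starts.
    for sector in (63, 128, 2048):
        print(f"start sector {sector}: aligned={is_aligned(sector)}")
```

A partition that starts at sector 63 begins 32256 bytes into the disk, so it is not on a 64 KB boundary.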

Cheers,

Chad King

VCP-410. Server +.

Twitter: http://twitter.com/cwjking

If you find this or any other answer useful please consider awarding points marking the answer correct or useful

Tags: VMware

Similar Questions

-

Received my first laptop as a gift today (Pavilion TouchSmart 15-n287cl). I need to know: 1. How long should I charge it the first time, or the first several times? 2. Do I need to fully discharge and recharge it between uses, and how long should an average charge take before it can be used again? 3. Since there are no instructions: is it a good idea to leave it plugged in whenever I use it, or should the battery be removed to extend its life?

Thank you in advance. It has taken me a long time to migrate from the desktop, and I want it to be a positive experience.

Hello

Modern batteries and chargers are now smarter than you.

1. You can plug in and use the machine immediately; the machine will stop charging when the battery is fully charged.

2. No, you don't need to discharge completely between uses, for the same reason as above. The recommendation is to occasionally run the machine on battery until it is down to about 10%.

3. When running on AC, leaving the battery in the laptop protects the unit if the power suddenly fails.

Please read the following article:

http://BatteryUniversity.com/learn/article/how_to_prolong_lithium_based_batteries

Kind regards.

-

What is the best practice for block sizes across several layers: hardware, hypervisor, and VM OS?

The example below is not a real setup I work with, but it should get the point across. Here are the layers I'm using as a reference:

(Layer 1) Hardware: the hardware RAID controller

- 1 TB volume configured with a 4 KB block size (raw?)

(Layer 2) Hypervisor: ESXi datastore

- 1 TB from the RAID controller, formatted with VMFS5 at a 1 MB block size

(Layer 3) VM OS: Server 2008 R2 w/ SQL

- 100 GB virtual HD using NTFS at a 4 KB block size for the OS

- 900 GB virtual HD using NTFS at a 64 KB block size to store the SQL database

It seems that VMFS5 is limited to a block size of 1 MB. Would it be preferable for all or some of the block sizes to match across the layers, and why or why not? How do different block sizes on the other layers affect performance? Could you suggest a better alternative or best practices for the sample configuration above?

If a SAN were involved instead of a hardware RAID controller in the host, would it be better to store the OS VMDK on the VMFS5 datastore and create a separate iSCSI LUN formatted with a 64 KB block size, then attach it with the iSCSI initiator in the guest operating system and format it at 64 KB? Does matching block sizes across layers increase performance, or is it even advisable? Any help answering and/or explaining best practices is greatly appreciated.

itsolution,

Thanks for the helpful response points. I wrote a blog about this which I hope will help:

Partition alignment and block size in VMware 5 | blog.jgriffiths.org

To answer your questions here:

I have about 1 TB of space and create two virtual drives.

Virtual Drive 1 - 10 GB - used for the hypervisor OS files

Virtual Drive 2 - 990 GB - used for the VMFS datastore / VM storage

The default stripe element size on the PERC 6/i is 64 KB, but it can be 8, 16, 32, 64, 128, 256, 512, or 1024 KB.

What block size would you use for array 1, which is where the hypervisor itself will be installed?

-> If you have two arrays, I would set the block size on the hypervisor array to 8 KB.

What block size would you use for array 2, which will be used as the VM datastore in ESXi?

-> I'd go with 1024 KB to match the VMFS 5 size.

- Do you want 1024 KB to match the VMFS block size that will eventually be formatted on top of it?

-> Yes

* Consider that this datastore would eventually contain several virtual hard drives for each OS, SQL database, and SQL logs, formatted with NTFS at the recommended block sizes: 4 KB, 8 KB, 64 KB.

-> The problem here is that VMFS will use 1 MB no matter what you do, so carving it smaller at the RAID level will cause no problems but does not help either. You have 4 KB sectors on the disk, 1 MB at the RAID level, 1 MB at VMFS, and 4 KB, 8 KB, or 64 KB in the guest. Really, the gains from 64 KB are somewhat lost when the back-end storage is 1 MB.

If the RAID stripe element size is set to 1024 KB so that it matches the 1 MB VMFS block size, would that be best practice, or does it not matter?

-> Whether it's 1024 KB or 4 KB chunks, it doesn't really matter.

What effect does this have on the OS/virtual HDs and their respective block sizes, installed on top of the stripe element size and the VMFS block size?

-> The effect on performance is minimal, but it exists. It would be a lie to say that it didn't.
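To see why the effect is small but real, a Python sketch like the following counts how many RAID stripe elements a single guest I/O touches. The function name and the example offsets are hypothetical, and the model only counts boundary crossings; it ignores controller cache and anything VMFS does in between.

```python
KB = 1024


def elements_touched(offset: int, length: int, element_size: int) -> int:
    """Count the stripe elements covered by an I/O of `length` bytes at `offset`."""
    if length <= 0:
        return 0
    first = offset // element_size
    last = (offset + length - 1) // element_size
    return last - first + 1


if __name__ == "__main__":
    # (label, byte offset, I/O length, stripe element size) - illustrative values
    cases = [
        ("aligned 64 KB I/O, 64 KB elements", 0, 64 * KB, 64 * KB),
        ("same I/O shifted by 63 sectors", 63 * 512, 64 * KB, 64 * KB),
        ("aligned 64 KB I/O, 1024 KB elements", 0, 64 * KB, 1024 * KB),
    ]
    for label, offset, length, element in cases:
        print(f"{label}: {elements_touched(offset, length, element)} element(s)")
```

An I/O that straddles an element boundary costs an extra element access, which is the kind of minor penalty described above.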

I could be completely overthinking the overall situation, but to me it seems that there must be some kind of correlation between the three different "layers", as I call them, and a best practice to follow.

Hope that helps. I'll tell you I have run virtualized SQL and Exchange for a long time without any block size problems and without changing anything in the operating system. I just stuck with the standard Microsoft sizes. I'd be much more concerned about the performance of the RAID controller in your server. They keep making these things cheaper and cheaper with less and less cache. If performance is the primary concern, then I would consider an array or a RAID 5/6 solution, or at least look at the amount of cache on your RAID controller (read cache is normally critical for databases).

Just my two cents.

Let me know if you have any additional questions.

Thank you

J

-

What is the best practice for rolling ApEx out to production?

Hello

My first ApEx application :) What is the best practice to deploy an ApEx application to production?

Also, I created end-user accounts and use those accounts to connect to ApEx as an end user via a URL (http://xxx.xxx.xxx:8080/apex/f?p=111:1). However, why is it sometimes still in development mode (i.e. the Home | Application # | Edit Page # | Create | Session | ... toolbar appears at the bottom), but sometimes not?

Thanks a lot :)

Helen

When you set up your users, make sure that the radio buttons for workspace administrator and developer are set to No. That should make them "end users", and they should not see the links. Only developers and workspace administrators can see them.

-

TestStand code/sequence sharing best practices?

I am the architect for a project that uses TestStand, Switch Executive, and LabVIEW code modules to control automated testing on a number of UUTs that we make.

It's my first time using TestStand, and I want to adopt software best practices that allow a lot of code sharing among my other software engineers, each of whom will be responsible for creating TestStand scripts for one of the UUTs. I've identified some 'functions' that will be common across all UUTs, such as connecting two points on our switching matrix and then taking a voltage measurement with our EMS to check whether it meets the limits.

The gist of my question: what is the TestStand equivalent of a LabVIEW library for sequence calls?

Right now, what I have done is create these common/generic sequences and place them in their own sequence file called "Common Functions.seq" as a pseudo-library. This "Common Functions.seq" file is never intended to be run as a script itself; rather, the sequences inside are called by another top-level sequence that is unique to one of our UUTs.

Is this a good practice, or is there a better way to compartmentalize the common sequence calls?

It seems that you are doing it correctly. I always remove MainSequence from such files too, so it will trigger an error if someone tries to run it with a model. You can also go into the sequence file properties and disassociate it from any model.

I always equate a sequence to a VI and a sequence file to an lvlib. In that analogy, a step is a node on the diagram and local variables are wires.

They just need to include this library sequence file in their build (and all of its dependencies).

Hope this helps,

-

TDMS & DIAdem best practices: what if my signal has breaks/gaps?

I created an LV2011 datalogging application that stores a lot of data in TDMS files. The basic architecture is like this:

Each channel has these properties:

t0 = start time

dt = sampling interval

Channel values:

1D array of DBL values

After datalogging starts, I just keep appending to the channel values. And if the TDMS file size goes beyond 1 GB, I create a new file and carry on. The application runs continuously for days/weeks, so I get a lot of TDMS files.

It works very well. But now I need to change my system to allow data acquisition to be paused and resumed. In other words, there will be gaps in the signal (probably from 30 seconds to 10 minutes). I had originally considered storing two values for each data point, like an XY chart (value & timestamp). But I am against this in principle because, as I see it, it fills the hard drive unnecessarily (twice as much disk footprint for the same data?).

Also, I've never used DIAdem, but I want to ensure that my data can be easily opened and analyzed using DIAdem.

My question: are there some best practices for storing signals that pause/break like this? I would just start a new record with a new start time (t0) and have DIAdem somehow "link" these signals, i.e. know that it is a continuation of the same signal.

Of course, I should install DIAdem and play with it. But I thought I would ask the experts about best practices first, as I have no knowledge of DIAdem.

Hi josborne;

Do you plan to create a new TDMS file whenever the acquisition stops and starts, or do you want to store multiple acquisition sections in the same TDMS file? The best way to manage the date/time offset is to store one waveform per channel per acquisition section and use the "wf_start_time" channel property that comes along with TDMS waveform data, whether you are wiring an orange floating-point array or a brown waveform to the TDMS Write.vi. DIAdem 2011 has the ability to easily access the time offset when it is stored in this channel property (assuming that it is stored as a date/time and not as a DBL or a string). If you have only one acquisition section per TDMS file, I would certainly also add a 'DateTime' property at the file level. If you want to store several acquisition sections in a single TDMS file, I would recommend using a separate group for each section. Make sure that you store the following channel properties in the TDMS file if you want the information to flow naturally into DIAdem:

'wf_xname'

'wf_xunit_string'

'wf_start_time'

'wf_start_offset'

'wf_increment'

Brad Turpin

DIAdem Product Support Engineer

National Instruments
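The idea of one waveform per section can be sketched in Python without any TDMS library: each section carries its own start time and increment, and absolute timestamps are rebuilt from them, so no per-sample timestamp has to be stored. The `Section` class and the sample values are hypothetical; only the property names `wf_start_time` and `wf_increment` come from the reply above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Tuple


@dataclass
class Section:
    """One uninterrupted stretch of acquisition (hypothetical container)."""
    wf_start_time: datetime  # absolute start of this section
    wf_increment: float      # sampling interval in seconds
    values: List[float]


def timestamps(section: Section) -> List[datetime]:
    """Rebuild per-sample timestamps from start time and increment."""
    return [
        section.wf_start_time + timedelta(seconds=i * section.wf_increment)
        for i in range(len(section.values))
    ]


def merged(sections: List[Section]) -> List[Tuple[datetime, float]]:
    """Join sections in time order; gaps simply show up as timestamp jumps."""
    out: List[Tuple[datetime, float]] = []
    for section in sorted(sections, key=lambda s: s.wf_start_time):
        out.extend(zip(timestamps(section), section.values))
    return out


if __name__ == "__main__":
    first = Section(datetime(2012, 1, 1, 12, 0, 0), 1.0, [0.1, 0.2, 0.3])
    # Acquisition resumed five minutes later.
    second = Section(datetime(2012, 1, 1, 12, 5, 0), 1.0, [0.4, 0.5])
    for stamp, value in merged([second, first]):
        print(stamp.isoformat(), value)
```

The storage cost per section is two extra properties rather than one timestamp per sample.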

-

Best practices for reading an .ini file

Hello LabViewers

I have a pretty big application that does a lot of hardware communication with various devices. I created an executable, because the software runs at multiple sites. Some settings are currently hardcoded; others, such as the camera focus, I put in an .ini file. The thought process was that these kinds of parameters may vary from site to site and can be set by a user in the .ini file.

I would now like to extend the application to support two different versions of the key hardware device (an atomic force microscope). I think it makes sense to do so using two versions of the .ini file. I intend to create two different .ini files, and a trained user could still adjust settings there, such as the camera focus, if necessary. The other settings they should not touch. I also aim to force the user to select an .ini file when starting the executable, using a file dialog, unlike now, where the (only) .ini file is read in automatically. If no .ini file is specified, the application would stop. Does this use of the .ini file make sense?

My real question revolves around how to handle reading the .ini file. My estimate is that 20-30 settings will be stored in the .ini file. I see two possibilities, but I don't know which is the better choice or whether I'm missing a third:

(1) (current solution) I created a reading VI in which I write all the .ini values to global variables of the project. All other VIs only read the values of the global variables (no other writes), which avoids race conditions.

(2) I pass the path of the .ini file into the subVIs and read the values from the .ini file as needed. I can open it read-only.

What is the best practice? What is more scalable? Advantages/disadvantages?

Thank you very much

1. I recommend using just one configuration file. Just have a key that says which type of device is actually in use. This will make things easier for the users, because they will not have to keep selecting the right file.

2. I would use the globals. There is no need to constantly open a file, get values, and close it when the data is the same everywhere. And since it's a one-time read at startup, globals are perfect for this.
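The thread is about LabVIEW, but the same pattern (one file, a key naming the device type, a single read at startup into values nobody writes to afterwards) can be sketched in Python as an analogy. The section names, key names, and values below are hypothetical.

```python
import configparser
from dataclasses import dataclass

# Hypothetical file contents; a real application would read this from disk.
EXAMPLE_INI = """
[device]
type = afm_v2

[camera]
focus = 12.5
"""


@dataclass(frozen=True)
class Settings:
    """Read-only settings, playing the role of the write-once globals."""
    device_type: str
    camera_focus: float


def load_settings(text: str) -> Settings:
    """Parse the .ini text once and return an immutable settings object."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    return Settings(
        device_type=parser.get("device", "type"),
        camera_focus=parser.getfloat("camera", "focus"),
    )


# Loaded once at startup; every other module only reads from it.
SETTINGS = load_settings(EXAMPLE_INI)

if __name__ == "__main__":
    print(SETTINGS)
```

Because the dataclass is frozen, any later attempt to assign to a field raises an error, which mirrors the "one writer, many readers" rule from option (1).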

-

Best practices - distributing dynamically called VIs with LV2011

I'm distributing code that consists of a main program that calls existing (and future) VIs dynamically, but one at a time. The dynamically called VIs have no input or output terminals. They run one at a time, in a subpanel in the main program. The main program must maintain a reference to the dynamically loaded VI so it can be sure that the VI has stopped completely before unloading it and calling a replacement VI. These VIs do not use shared or global variables, but they may have a few subVIs in common with the main program (it would be OK to duplicate these in the distributed version of the VIs).

In this context, what are the best practices these days for distributing dynamically loaded VIs (and their dependencies)?

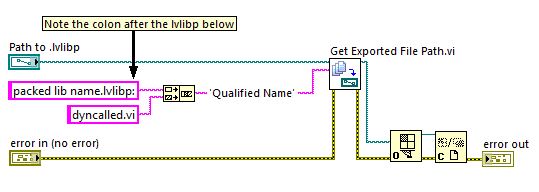

If I use a project library (.lvlib), it seems that I first have to build an .exe that contains the top-level VIs (the ones that are dynamically loaded), so that a separate .lvlib can be generated that includes their dependencies. The content of this .lvlib and an .lvlib containing the top-level VIs can be merged to create a single .lvlib, and then a packed library can be generated for distribution with the main .exe.

This seems much too involved (but necessary?).

My goal is to have an .exe for the main program and another structure containing the dynamically called VIs and their dependencies. It seemed so straightforward when an .exe was really an .llb a few years ago.

Thanks in advance for your comments.

Continue the conversation with me

Here is the solution:

Runs like a champ. All dependencies are contained in the packed library and the dynamic call works fine.

-

HP Stream 11-d008tu: best practices for migrating to Windows 10?

Hey there, I have an HP Stream 11-d0008tu and received the notification that I can upgrade to Windows 10. I want to do a clean install from a downloaded ISO, but I understand that it is better to upgrade first so that activation is done.

Can someone give me some ideas on best practices for the upgrade? The Stream has only a 30 GB drive, so I have no recovery disk; I'll just create recovery media later on USB or SD card. When I do the upgrade, can I deselect or delete the recovery option?

Also, any other suggestions are appreciated, especially ones that help make efficient use of the small drive.

Cheers

Hi, I posted a procedure for a fresh Windows 10 installation on the HP Stream tablet here. I also have a Stream 11 laptop but have not done that one yet. I think it must be the same, because they both have the same 32 GB Hynix memory drive. One change: you would probably want to use the 64-bit instead of the 32-bit Windows 10 ISO file; the tablet has only 1 GB of RAM.

-

Connecting a Dell MD3620i to VMware - best practices

Hello community,

I bought a Dell MD3620i with 2 x 10GBase-T Ethernet ports on each controller (2 controllers).

My VMware environment consists of 2 x ESXi hosts (each with 2 x 1GBase-T ports) and an HP LeftHand storage array (also 1GBase-T). The switches I have are Cisco 3750s, which have only 1GBase-T Ethernet.

I'll replace the HP storage with the Dell storage.

As I have never worked with Dell storage, I need your help answering my questions:

1. What are the best practices for connecting VMware hosts to the Dell MD3620i?

2. What is the process to create a LUN?

3. Can I create multiple LUNs on a single disk group, or is it best practice to create one LUN per group?

4. How do I configure iSCSI 10GBase-T to work on the 1 Gbit/s switch ports?

5. Is it best practice to connect the Dell MD3620i directly to the VMware hosts without a switch?

6. The old iSCSI on the HP storage is in another network. Can I use vMotion to move all the VMs from one iSCSI network to the other, and then change the iSCSI IP addresses on the VMware hosts without interrupting the virtual machines?

7. Can I combine the two iSCSI ports into one 2 Gbps interface to connect to the switch? I use two switches, so I want to connect each controller to each switch, limiting their interfaces to 2 Gbps. My question is: would the controller fail over to the other controller if the Ethernet link on the switch goes down (for example, when a single switch reboots)?

Thanks in advance!

Basics of TCP/IP: a computer cannot connect to 2 different (isolated) networks (e.g. 2 directly attached cables between the server and the SAN's iSCSI ports) that share the same subnet.

Data corruption is very unlikely if you share the same VLAN for iSCSI; however, performance and overall reliability would be affected.
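The one-subnet-per-NIC rule can be checked mechanically. The Python sketch below uses the standard `ipaddress` module to flag a host whose iSCSI NICs share a subnet; the /24 prefix length is an assumption, since the post only gives the addresses.

```python
import ipaddress
from collections import Counter
from typing import List


def duplicate_subnets(nics: List[str]) -> List[str]:
    """Return subnets that appear on more than one NIC of the same host.

    Each entry in `nics` is an address with prefix length, e.g. "192.168.130.110/24".
    """
    counts = Counter(str(ipaddress.ip_interface(nic).network) for nic in nics)
    return sorted(net for net, count in counts.items() if count > 1)


if __name__ == "__main__":
    good = ["192.168.130.110/24", "192.168.131.110/24"]
    bad = ["192.168.130.110/24", "192.168.130.111/24"]
    print("good plan duplicates:", duplicate_subnets(good))
    print("bad plan duplicates:", duplicate_subnets(bad))
```

An empty result means every iSCSI NIC on the host sits in its own subnet.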

With an MD3620i, here are some configuration scenarios using the factory default subnets (for DAS configurations I have added 4 additional subnets):

Single switch (not recommended because the switch becomes your single point of failure):

Controller 0:

iSCSI port 0: 192.168.130.101

iSCSI port 1: 192.168.131.101

iSCSI port 2: 192.168.132.101

iSCSI port 4: 192.168.133.101

Controller 1:

iSCSI port 0: 192.168.130.102

iSCSI port 1: 192.168.131.102

iSCSI port 2: 192.168.132.102

iSCSI port 4: 192.168.133.102

Server 1:

iSCSI NIC 0: 192.168.130.110

iSCSI NIC 1: 192.168.131.110

iSCSI NIC 2: 192.168.132.110

iSCSI NIC 3: 192.168.133.110

Server 2:

All ports plug into the one switch (obviously).

If you only want to use 2 NICs for iSCSI, have server 1 use subnets 130 and 131 and server 2 use 132 and 133; server 3 then uses 130 and 131 again. This distributes the I/O load across the iSCSI ports on the SAN.
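The rotation described above (server 1 on 130/131, server 2 on 132/133, server 3 back on 130/131) can be written as a small Python helper. The function names and the `host_octet` parameter are hypothetical; the subnet numbers are the third octets from the example.

```python
from typing import List, Tuple

# Third octets of the factory default iSCSI subnets, grouped in pairs.
SUBNET_PAIRS: List[Tuple[int, int]] = [(130, 131), (132, 133)]


def subnets_for_server(server_number: int) -> Tuple[int, int]:
    """Hand out subnet pairs in rotation; server numbers start at 1."""
    if server_number < 1:
        raise ValueError("server numbers start at 1")
    return SUBNET_PAIRS[(server_number - 1) % len(SUBNET_PAIRS)]


def nic_addresses(server_number: int, host_octet: int) -> List[str]:
    """Build the two iSCSI NIC addresses for a server (host_octet is assumed)."""
    return [f"192.168.{subnet}.{host_octet}" for subnet in subnets_for_server(server_number)]


if __name__ == "__main__":
    for number in (1, 2, 3):
        print(f"server {number}: subnets {subnets_for_server(number)}")
```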

Two switches (one VLAN for all iSCSI ports on each switch):

NOTE: Do NOT link the switches together. This keeps problems that occur on one switch from affecting the other switch.

Controller 0:

iSCSI port 0: 192.168.130.101 -> switch 1

iSCSI port 1: 192.168.131.101 -> switch 2

iSCSI port 2: 192.168.132.101 -> switch 1

iSCSI port 4: 192.168.133.101 -> switch 2

Controller 1:

iSCSI port 0: 192.168.130.102 -> switch 1

iSCSI port 1: 192.168.131.102 -> switch 2

iSCSI port 2: 192.168.132.102 -> switch 1

iSCSI port 4: 192.168.133.102 -> switch 2

Server 1:

iSCSI NIC 0: 192.168.130.110 -> switch 1

iSCSI NIC 1: 192.168.131.110 -> switch 2

iSCSI NIC 2: 192.168.132.110 -> switch 1

iSCSI NIC 3: 192.168.133.110 -> switch 2

Server 2:

The same note applies about using only 2 NICs per server for iSCSI. In this configuration each server will always use both switches, so a switch failure should not take down your server's iSCSI connectivity.

Quad switches (or 2 VLANs on each of the 2 switches above):

Controller 0:

iSCSI port 0: 192.168.130.101 -> switch 1

iSCSI port 1: 192.168.131.101 -> switch 2

iSCSI port 2: 192.168.132.101 -> switch 3

iSCSI port 4: 192.168.133.101 -> switch 4

Controller 1:

iSCSI port 0: 192.168.130.102 -> switch 1

iSCSI port 1: 192.168.131.102 -> switch 2

iSCSI port 2: 192.168.132.102 -> switch 3

iSCSI port 4: 192.168.133.102 -> switch 4

Server 1:

iSCSI NIC 0: 192.168.130.110 -> switch 1

iSCSI NIC 1: 192.168.131.110 -> switch 2

iSCSI NIC 2: 192.168.132.110 -> switch 3

iSCSI NIC 3: 192.168.133.110 -> switch 4

Server 2:

In this case, when using 2 NICs per server, the first server uses the first 2 switches and the second server uses the second pair of switches.

Direct attach:

Controller 0:

iSCSI port 0: 192.168.130.101 -> server iSCSI NIC 1 (example IP 192.168.130.110)

iSCSI port 1: 192.168.131.101 -> server iSCSI NIC 2 (example IP 192.168.131.110)

iSCSI port 2: 192.168.132.101 -> server iSCSI NIC 3 (example IP 192.168.132.110)

iSCSI port 4: 192.168.133.101 -> server iSCSI NIC 4 (example IP 192.168.133.110)

Controller 1:

iSCSI port 0: 192.168.134.102 -> server iSCSI NIC 5 (example IP 192.168.134.110)

iSCSI port 1: 192.168.135.102 -> server iSCSI NIC 6 (example IP 192.168.135.110)

iSCSI port 2: 192.168.136.102 -> server iSCSI NIC 7 (example IP 192.168.136.110)

iSCSI port 4: 192.168.137.102 -> server iSCSI NIC 8 (example IP 192.168.137.110)

I just left the 4 controller 1 subnets on the '102' IPs to make future changes easier.

-

Best practices to configure NLB for Secure Gateway and Web access

Hi team,

I'm installing vWorkspace and looking for guidance on best practices for NLB with Web Access and Secure Gateway. My hosted environment is Hyper-V 2012 R2.

My first question is whether NLB must be configured first and the role set up afterwards, or vice versa.

Do we have any best-practice document for configuring NLB with a 2-node Web Access server?

Hello

This video series was created for 7.5 and 2008 R2 but should still be valid for what you are doing today:

https://support.software.Dell.com/vWorkspace/KB/87780

Thank you, Andrew.

-

Hi all

I recently finished my first Cascades app, and now I'm aiming to create a more feature-rich version that I can then sell for a reasonable price. However, my question is how to manage the code base for both applications. If anyone has any "best practices", I would like to hear your opinion.

Do you use a revision control system? That should be a prerequisite...

How different will the versions of the application be?

Generally, if you have two versions that differ only in having a handful of features disabled in the free version, you should use exactly the same code base. You could even make the packaging (build command) the only difference, for example by adding an environment variable in one of them that is checked at startup to turn on the paid options.
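A minimal sketch of the environment-variable approach, written in Python purely for illustration (a Cascades app would do the equivalent in its own language). The variable name `APP_PAID_FEATURES` and the feature names are hypothetical.

```python
import os
from typing import List, Mapping, Optional

PAID_FLAG = "APP_PAID_FEATURES"  # hypothetical variable set only in the paid package


def paid_features_enabled(env: Optional[Mapping[str, str]] = None) -> bool:
    """Check the flag once at startup; anything other than "1" means free."""
    env = os.environ if env is None else env
    return env.get(PAID_FLAG, "0") == "1"


def available_features(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Same code base for both editions; the flag only widens the feature list."""
    features = ["basic_view"]
    if paid_features_enabled(env):
        features.append("export")
    return features


if __name__ == "__main__":
    print("features in this build:", available_features())
```

Both editions are built from the same sources, and only the packaging step decides whether the flag is present.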

-

Deny attacker inline best practices

We recently deployed an inline IPS solution using software 5.1(7)E1. We want to apply deny-attacker-victim-pair-inline for a few signatures on a particular subnet of the network, but not for the rest.

To implement this correctly, I think that we must use Action SigEvent filters on the sensor and use the commands

> for all subnets agree that we want to allow deny actions for. I have seen that in the configuration of the sensor, you can implement in the section

> a > statement. My understanding is that does more for fleeing rather than deny solutions online. Am I right about that?

Could someone on the list please post the best-practice solution for denying attackers inline?

Create 2 event action filters.

The first event action filter will match the signatures and subnets you want to deny on and will not subtract any actions. Make sure that you set it to "stop on match".

The second will match the same signatures but the address range 0.0.0.0-255.255.255.255, and removes the appropriate actions.

The net result is that the first event action filter applies when it matches, and the second applies when the first doesn't.
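The ordering logic of the two filters can be modelled in a few lines of Python. This is only a model of first-match evaluation with a stop flag, not sensor configuration; the class, the signature ID 2004, and the 10.1.1.0/24 subnet are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Set

DENY = "deny-attacker-victim-pair-inline"


@dataclass(frozen=True)
class EventActionFilter:
    """Simplified stand-in for an event action filter."""
    name: str
    signature_ids: FrozenSet[int]
    attacker_net: ipaddress.IPv4Network
    actions_to_remove: FrozenSet[str]
    stop_on_match: bool = True


def apply_filters(sig_id: int, attacker: str, actions: Iterable[str],
                  filters: List[EventActionFilter]) -> Set[str]:
    """Walk the filters in order and subtract actions from matching ones."""
    remaining = set(actions)
    address = ipaddress.ip_address(attacker)
    for flt in filters:
        if sig_id in flt.signature_ids and address in flt.attacker_net:
            remaining -= flt.actions_to_remove
            if flt.stop_on_match:
                break
    return remaining


FILTERS = [
    # First filter: the subnet where deny should stay; subtracts nothing.
    EventActionFilter("keep-deny", frozenset({2004}),
                      ipaddress.ip_network("10.1.1.0/24"), frozenset()),
    # Second filter: everyone else; strips the deny action.
    EventActionFilter("strip-deny", frozenset({2004}),
                      ipaddress.ip_network("0.0.0.0/0"), frozenset({DENY})),
]

if __name__ == "__main__":
    for source in ("10.1.1.5", "172.16.0.9"):
        print(source, sorted(apply_filters(2004, source, {DENY, "produce-alert"}, FILTERS)))
```

Because the first filter stops on match, traffic from the chosen subnet never reaches the catch-all filter that removes the deny action.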

-

Best practices for the configuration of virtual drive on NGC

Hello

I have two C210 M2 servers with LSI MegaRAID 9261-8i 6G cards, each with 10 x 135 GB HDDs. When I tried the automatic RAID configuration selection, the system created one virtual drive with RAID 6. My question is: what is the best practice for configuring the virtual drives? Is it RAID 1 and RAID 5, or everything in a single drive with RAID 6? Any help will be appreciated.

Thank you.

Since you've decided to run the UC apps on the server, the voice applications have specified their recommendations here.

http://docwiki.Cisco.com/wiki/Tested_Reference_Configurations_%28TRC%29

I think that your C210 server specification might correspond to TRC #1, where you need to have

RAID 1 - first two drives for VMware

RAID 5 - 8 hard drives for the datastore for virtual machines (CUCM and CUC)
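For comparing the two layouts by space, the usual usable-capacity rules (RAID 1 keeps one drive's worth, RAID 5 loses one drive to parity, RAID 6 loses two) can be put into a short Python sketch. The function name is hypothetical, and the figures ignore formatting and controller overhead.

```python
def usable_gb(level: int, drives: int, drive_gb: float) -> float:
    """Usable capacity for a single RAID 1, 5, or 6 group of equal drives."""
    if level == 1:
        if drives != 2:
            raise ValueError("this sketch treats RAID 1 as a two-drive mirror")
        return drive_gb
    if level == 5:
        if drives < 3:
            raise ValueError("RAID 5 needs at least 3 drives")
        return (drives - 1) * drive_gb
    if level == 6:
        if drives < 4:
            raise ValueError("RAID 6 needs at least 4 drives")
        return (drives - 2) * drive_gb
    raise ValueError(f"unsupported RAID level: {level}")


if __name__ == "__main__":
    drive_gb = 135  # drive size quoted in the question
    split = usable_gb(1, 2, drive_gb) + usable_gb(5, 8, drive_gb)
    single = usable_gb(6, 10, drive_gb)
    print(f"RAID 1 (2 drives) + RAID 5 (8 drives): {split} GB")
    print(f"RAID 6 (10 drives): {single} GB")
```

With ten equal drives both layouts give the same usable space, so the choice is about separating the hypervisor from the datastore and about fault tolerance rather than capacity.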

HTH

Padma

-

Best practices for restarting after patching

Does Microsoft have a documented best practice for how soon after patching a reboot must be done, when one is required?

You won't get anything more precise than: restart. Part of installing the patch is restarting the computer. Until you restart, it is not installed. This means that whatever problem it is supposed to fix is still broken. Or, if it's a security patch, you are not protected until you restart.

In other words, any justification you have for the installation of the patch in the first place, is also your justification to restart - because rebooting is part of this installation.
