Display serial data on a gauge and chart, and log it

Can anyone point me to an example project that reads 3 data values from a serial stream and displays them on a gauge and a chart?

Logging would be nice as well, but the gauge and chart are more important.

My data is in the format below, but I have complete control over it.

R: 0, P:-210.15, F:173.191

R: 0, P:-210.65, F:173.191

R: 0, P:-210.78, F:173.191

R: 0, P:-210.67, F:173.191

I'm very short on time for this one, and trying to understand the example files has left my head spinning.

I may be interested in paying for an expert's help if it's not trivial.

Any help gratefully received!

Duncan

Read the data one line at a time and use Scan From String to break each line into the 3 values.
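The same line-by-line parsing, sketched in Python as a stand-in for Scan From String: one regular expression per line extracts R, P and F. The pattern is an assumption based only on the four sample lines above; since the poster controls the format, keeping it fixed like this makes parsing easy.

```python
import re
from typing import Optional, Tuple

# Mirrors the sample lines, e.g. "R: 0, P:-210.15, F:173.191".
# \s* tolerates optional spaces after each colon and comma.
LINE_RE = re.compile(
    r"R:\s*(?P<r>-?\d+),\s*P:\s*(?P<p>-?\d+(?:\.\d+)?),\s*F:\s*(?P<f>-?\d+(?:\.\d+)?)"
)


def parse_line(line: str) -> Optional[Tuple[int, float, float]]:
    """Split one 'R: .., P: .., F: ..' line into its three numeric values.

    Returns None for a partial or malformed line so the caller can skip it
    instead of pushing garbage to the gauge or chart.
    """
    m = LINE_RE.search(line)
    if m is None:
        return None
    return int(m["r"]), float(m["p"]), float(m["f"])


if __name__ == "__main__":
    for line in ["R: 0, P:-210.15, F:173.191", "R: 0, P:-210.65, F:173.191", "R: 0, P:-21"]:
        print(parse_line(line))
```

Each parsed tuple can then feed the gauge (latest value) and the chart (appended point); a truncated line such as the last one is simply skipped.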

Tags: NI Software

Similar Questions

-

Charting and logging data from multiple channels (and tasks)

I have two modules in a CompactDAQ chassis:

- NI 9213 (16-channel thermocouple)

- NI 9203 (8-channel current)

I'm using LabVIEW 11 and can acquire data from both modules without problems. However, I'm having trouble charting and logging the data. Is there a way to combine the 1D arrays of waveforms from the two tasks so that a waveform chart will accept them? Likewise, can I write them to the same PDM or LVM file?

The tasks use the same timing settings, so that shouldn't be a problem. The rate is 1 to 10 Hz, so I have no major performance concerns.

I'm new to the forum, so I apologize for any missteps in etiquette. Thanks in advance for the help.

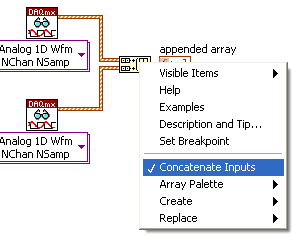

I might be misunderstanding your question, but why can't you just use the Build Array function to combine your two arrays of waveforms? Right-click Build Array and select 'Concatenate Inputs' to append one array to the other.
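The difference between the two Build Array modes, roughly modeled with Python lists. The channel names and sample values are hypothetical stand-ins for the waveforms returned by the two DAQmx tasks.

```python
# Hypothetical stand-ins for the 1D arrays of waveforms returned by the two
# DAQmx tasks; each "waveform" is reduced to a (channel name, samples) pair.
tc_task = [("TC0", [21.5, 21.6]), ("TC1", [22.0, 22.1])]
current_task = [("AI0", [0.004, 0.005])]

# Build Array without "Concatenate Inputs": the inputs become rows of a
# higher-dimensional result, one row per task.
nested = [tc_task, current_task]

# Build Array with "Concatenate Inputs": one flat 1D array holding every
# channel, which is the shape a single chart or log file wants.
combined = tc_task + current_task

print(len(nested), len(combined))  # 2 3
```

With the concatenated form, every channel is one element of a single array, so one chart and one file write can handle both tasks.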

-

Acquire, analyze, and log data using the state machine technique

I'm learning the state machine technique to develop data acquisition and logging code. I think I wrote the correct code for this purpose, but I couldn't get the following to work:

All warning values (> 0) should appear in a 1D array on the front panel. The code writes only the first value.

All values must be logged using Write To Spreadsheet File and written to the Excel sheet. The code only writes the first value.

What is the error? The code I created is attached; I would be grateful if someone could fix it and post it in reply.

Thank you in advance for your help

See you soon

Hi kwaris,

"What is the error": well, you use a lot of unnecessary conversions. Really a lot...

Why do you convert a scalar to dynamic data, then convert it to an array, then index an element of the array? Why convert to dynamic data when all you need is a simple Build Array node?

OK, I've added a shift register in which you store your array. It's just a simple 'how to', not the best solution for every case, but it should give you a clue...
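The shift-register idea, sketched in Python: the list of warnings is carried from one iteration to the next instead of being rebuilt each time, and every warning is appended to the log rather than only the first. The input readings are hypothetical, and a CSV file (which Excel opens) stands in for Write To Spreadsheet File.

```python
import csv
import io


def acquire_and_log(readings, out):
    """Accumulate every warning value (> 0) across iterations and log them all.

    `warnings` plays the role of the shift register: it is initialized once
    before the loop and carried from one iteration to the next.
    """
    warnings = []                        # shift register, initialized empty
    writer = csv.writer(out)
    for i, value in enumerate(readings):
        if value > 0:
            warnings.append(value)       # like Build Array on the shift register
            writer.writerow([i, value])  # one row per warning, not just the first
    return warnings


if __name__ == "__main__":
    buf = io.StringIO()
    print(acquire_and_log([0.0, 1.5, -2.0, 3.25], buf))
    print(buf.getvalue())
```

In a real application `out` would be a file opened once before the loop and closed after it.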

-

Performance gain from separate drives for SQL Server data and log files (VM)?

Hello

It is recommended to put SQL Server 2012 data and log files on different drives in a VM. Is there a performance gain if both reside on the same logical unit number (LUN) as separate VMDK partitions? Or should the drives be on different LUNs?

Thank you

The general mantra in the physical world goes as follows:

For optimal performance, you need to separate the transaction logs and the database onto different physical disk stacks (spindles).

This general concept applies equally to virtual workloads. It essentially translates to:

For performance, you should put the database and transaction log files on separate VMDKs residing on different LUNs, or use separate RDMs. You should also use a separate vSCSI controller for each VMDK/RDM, as each controller has its own I/O queue.

There is one more important point that is often neglected, in the physical world and in the virtual world in particular:

Ideally, you should make sure that the physical LUNs for the logs and the database do not share a common disk group, i.e. the same stack of physical disks. Sharing one violates the general concept above.

-

DB buffer cache, redo log buffer, DBWR, LGWR, and log and data files

Hi all Experts,

I'm very sorry for taking your time with such a basic question. I'm confused about the DB buffer cache and the log buffer cache.

My understanding is that the DB buffer cache holds three types of buffers: dirty, pinned and free. The dirty buffers hold all the changed data and are ready to be flushed by DBWR, which then writes them to the data files.

But the redo buffers also come into play: all the changed data is temporarily cached in the redo log buffer, and LGWR writes it to the log files, whether or not the data is committed. And when a log switch occurs, the committed data is written to the data files.

My question is: if a log file can contain both committed and uncommitted data, and at a log switch only the committed data is transferred to the data files, then where does the uncommitted data go?

If dirty buffers also contain modified data, then what is the difference between the dirty buffers and the data in the log buffer?

I know this may sound funny, but I may have the concept wrong. Please correct my understanding.

Thank you very much

Kind regards

user12024849 wrote:

Hi all Experts, I'm very sorry for taking your time with such a basic question. I'm confused about the DB buffer cache and the log buffer cache.

First of all, we don't call it the log buffer cache; it's called the redo log buffer. It won't make a difference even if you call it that, but you should stick with the term normally used.

My understanding is that the DB buffer cache holds three types of buffers: dirty, pinned and free.

Correction: these are the states of a buffer, not types. A buffer can be in any of these three states. Also note that even when you only select a buffer, it is pinned first before it can be given to you. Apart from these, there is one more state, the snapshot buffer, aka CR (consistent read) buffer, which is created when a select query needs a read-consistent version of a buffer.

The dirty buffers hold all the changed data and are ready to be flushed by DBWR, which then writes them to the data files.

Yes, that's correct.

But the redo buffers also come into play: all the changed data is temporarily cached in the redo log buffer, and LGWR writes it to the log files, whether or not the data is committed.

That is partially right. What DBWR writes to the data file is the full buffer, whereas the entire block is not copied into the log buffer. What Oracle puts into the log buffer is called a change vector: the internal representation of the change you made to the data block, which is much smaller. Accordingly, the log buffer is much smaller than the buffer cache. What you said about redo data being written whether committed or not is correct. It is a transactional change that needs to be protected, and therefore it is almost always written to the redo log file by LGWR.

And when a log switch occurs, the committed data is written to the data files.

Wrong! At a log switch, a checkpoint is triggered; unless I'm mistaken, it's called a thread checkpoint. This causes DBWR to write the dirty buffers of this thread to the data files regardless of whether or not they are committed.

My question is: if a log file can contain both committed and uncommitted data, and at a log switch only the committed data is transferred to the data files, then where does the uncommitted data go?

As I explained, it is not only committed data that is written to the data files; both committed and uncommitted data are written.
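A toy Python model of the two write paths described above: an update dirties the whole buffer but appends only a small change vector to the redo log, and a checkpoint writes every dirty buffer as a full block whether or not its transaction committed. This is a simplified illustration of write-ahead logging, not Oracle's actual implementation; the class, block size and data layout are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

BLOCK_SIZE = 8192  # illustrative block size in bytes


@dataclass
class ToyInstance:
    """Toy model of buffer cache + redo log; NOT Oracle's actual internals."""
    buffers: Dict[int, bytearray] = field(default_factory=dict)  # buffer cache
    dirty: Set[int] = field(default_factory=set)                 # dirty block ids
    redo_log: List[Tuple[str, int, int, bytes]] = field(default_factory=list)
    datafile: Dict[int, bytes] = field(default_factory=dict)     # blocks on "disk"

    def update(self, txn: str, block_id: int, offset: int, data: bytes) -> None:
        """Change a few bytes of a block: the whole buffer becomes dirty,
        but only a small change vector is appended to the redo log."""
        block = self.buffers.setdefault(block_id, bytearray(BLOCK_SIZE))
        block[offset:offset + len(data)] = data
        self.dirty.add(block_id)
        self.redo_log.append((txn, block_id, offset, bytes(data)))

    def checkpoint(self) -> None:
        """Write every dirty buffer as a full block, committed or not."""
        for block_id in sorted(self.dirty):
            self.datafile[block_id] = bytes(self.buffers[block_id])
        self.dirty.clear()


if __name__ == "__main__":
    db = ToyInstance()
    db.update("T1", block_id=1, offset=0, data=b"abc")  # no commit recorded
    db.update("T2", block_id=2, offset=10, data=b"xy")  # no commit recorded
    db.checkpoint()
    print(sorted(db.datafile), [len(cv[3]) for cv in db.redo_log])
```

The model has no notion of commit at all, which is the point: the checkpoint does not look at it.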

If dirty buffers also contain modified data, then what is the difference between the dirty buffers and the data in the log buffer?

Read my response above, where I explained the difference.

I know this may sound funny, but I may have the concept wrong. Please correct my understanding.

No, it's not funny, but make sure you don't mix in anything else and that you understand the concept as explained to you. Making assumptions before understanding the concepts clearly can cause serious disasters.

Thank you very much

HTH

Aman...

-

Get the old locations of the data files and redo logs

Version: 11.2

Platform: Solaris 10

When we manage hundreds of DBs, we don't know the locations of all the files of these DBs. Say a DB goes down and you have all the required RMAN backups.

When you restore the DB to a new path on a new server, you must run the commands below for the data files and online redo logs (ORLs). But how do we know:

A. the old location of the data files, and

B. the old location of the online redo logs, so that I can run the following?

    alter database rename file 'oldPath_of_OnlineRedoLogs' to 'newPath_of_OnlineRedoLogs'; --- Without this command, the restored control file will still reflect the old control file location

    run {
    set newname for datafile 1 to '/u04/oradata/lmnprod/lmnprod_system01.dbf';
    set newname for datafile 2 to '/u04/oradata/lmnprod/lmnprod_sysaux01.dbf';
    set newname for datafile 3 to '/u04/oradata/lmnprod/lmnprod_undotbs101.dbf';
    set newname for datafile 4 to '/u04/oradata/lmnprod/lmnprod_audit_ts01.dbf';
    set newname for datafile 5 to '/u04/oradata/lmnprod/lmnprod_quest_ts01.dbf';
    set newname for datafile 6 to '/u04/oradata/lmnprod/lmnprod_yelxr_ts01.dbf';
    . . . . .
    }

Hello

With Oracle 11.2, you can use the SET NEWNAME FOR DATABASE feature with OMF.

    RUN {
      SET NEWNAME FOR DATABASE TO '/oradata/%U';
      RESTORE DATABASE;
      SWITCH DATAFILE ALL;
      SWITCH TEMPFILE ALL;
      RECOVER DATABASE;
    }

After restoring and recovering the database (i.e. before OPEN RESETLOGS), you can rename the redo logs. Just query the MEMBER column of V$LOGFILE and issue alter database rename file 'oldPath_of_OnlineRedoLogs' to 'newPath_of_OnlineRedoLogs';

When we use ASM, it is much easier to use OMF, because Oracle automatically creates the directory structure.

But on a file system, OMF is rarely used, because DBAs dislike system-generated file names on the file system. If you don't like OMF on a file system, you can use the script in the thread below to help you restore using readable names for data files, temp files, and redo logs.

{message: id = 9866752}
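Separately from the script in the linked thread, here is a minimal Python sketch of generating readable SET NEWNAME lines: each data file keeps its base name and is moved under a new directory. The function name is hypothetical, and the input list of (file number, old path) pairs is assumed to come from somewhere like V$DATAFILE (FILE#, NAME) once the restored control file is mounted.

```python
import posixpath
from typing import Iterable, Tuple


def set_newname_script(datafiles: Iterable[Tuple[int, str]], new_dir: str) -> str:
    """Build an RMAN RUN block that keeps each data file's base name
    but relocates it under new_dir.

    datafiles: (file number, old path) pairs, e.g. FILE# and NAME as
    reported by V$DATAFILE once the restored control file is mounted.
    """
    lines = ["run {"]
    for file_no, old_path in sorted(datafiles):
        # posixpath keeps '/' separators even if the script runs on Windows.
        new_path = posixpath.join(new_dir, posixpath.basename(old_path))
        lines.append(f"  set newname for datafile {file_no} to '{new_path}';")
    lines += ["  restore database;", "  switch datafile all;", "}"]
    return "\n".join(lines)


if __name__ == "__main__":
    old = [(1, "/u01/oradata/lmnprod/system01.dbf"), (2, "/u01/oradata/lmnprod/sysaux01.dbf")]
    print(set_newname_script(old, "/u04/oradata/lmnprod"))
```

The same approach works for the redo log members from V$LOGFILE, emitting alter database rename file statements instead.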

Kind regards

Levi Pereira

-

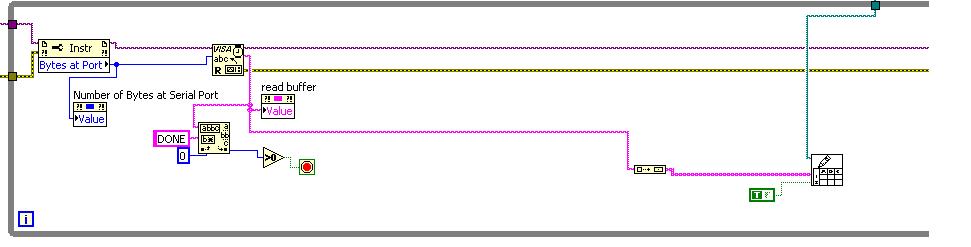

Reading the serial port continuously and updating the string buffer

Hi guys,

I am having a problem reading serial data in LabVIEW.

I have an unknown amount of data to read over RS-232 (serial). Using the read write serial.vi example, I read the data continuously and monitor the buffer for the string DONE, but the string indicator that displays the output does not update.

The string should be:

1 2 3 4

5 6 7 8

I don't see a single character on the indicator.

This is a screenshot of the VI.

Is there any method which will help me to do this?
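One common way to structure this is to append every chunk to a single buffer and refresh the display inside the read loop, stopping only when the terminator appears. A Python sketch under stated assumptions: `read_chunk` is a hypothetical wrapper around a non-blocking serial read, and `on_update` stands in for writing the string indicator.

```python
import time
from typing import Callable


def read_until_done(
    read_chunk: Callable[[], bytes],
    on_update: Callable[[str], None],
    terminator: str = "DONE",
    poll_s: float = 0.01,
) -> str:
    """Append incoming chunks to one growing buffer until `terminator` appears.

    read_chunk: hypothetical wrapper around a non-blocking serial read that
    returns whatever bytes are waiting (b"" when nothing has arrived).
    on_update: called with the whole buffer after every non-empty chunk, so the
    display refreshes while data arrives instead of only at the end.
    """
    buffer = ""
    # The whole buffer is searched, so a terminator split across chunks is found.
    while terminator not in buffer:
        chunk = read_chunk()
        if chunk:
            buffer += chunk.decode("ascii", errors="replace")
            on_update(buffer)
        else:
            time.sleep(poll_s)  # nothing waiting: avoid spinning the CPU
    return buffer
```

Real code would also want an overall timeout, so that a DONE that never arrives cannot hang the loop.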

Hi Dave,

Thanks a lot... you helped me finish all my work... It's always the small things that slip by.

See you soon,

Sailesh

-

Questions about serial port read and write at the same time

Hi, I'm creating a user interface for serial port communication. It essentially has 2 front panels: one where the user enters commands and another where the UART output is printed. I initially thought of using a state machine, but reading and writing can sometimes be independent, so I can't rely on states. I searched the forum a bit and it left me even more confused. Help, please.

(1) In one thread, duplicate VISA sessions were used for writing and reading at the same time. Is that advisable? How will it affect performance?

(2) While the VI is reading data, it must also display it continuously. However, someone said that shift registers use too much memory, so how do I go about this? If I use a queued state machine after the read loop, will it affect the read loop and make it sequential?

Also, is there any way to move the cursor to the latest data in the indicator?

(3) For the user input control: suppose the user enters a command in the control and presses ENTER, which triggers VISA Write. If the user then enters another string and presses ENTER, the write must be called again... Is that possible? Do the previous commands need to be cleared from the control?

(4) As I understand it, waiting for an event does not hog resources, and writing can go on in parallel. Am I right?

Thank you. I have attached a very basic VI that gets me this far, but I want to make it more robust. Please help, especially with the user interface part.

su_a,

(1) You can have only one session to a port. Many UARTs can handle full duplex, so performance is not affected. At high data rates and with large amounts of data, buffering and OS latencies can become a problem.

(2) Who told you that shift registers use too much memory? Shift registers are usually the best way to pass data from one iteration to the next. Concatenating strings inside a loop (shift register or not) makes the string grow and may require memory reallocation. Your VI never clears the string, so its length could become very large.
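One way to keep a continuously growing display string bounded, sketched in Python: append the new characters and keep only the newest ones. The 10,000-character limit is an arbitrary assumption; the same trimming could be done on the shift register value in the VI.

```python
MAX_DISPLAY_CHARS = 10_000  # assumed limit; pick what suits your UI


def append_bounded(display: str, new_text: str, limit: int = MAX_DISPLAY_CHARS) -> str:
    """Append new_text and keep only the newest `limit` characters, so the
    string carried between iterations cannot grow without bound."""
    display += new_text
    if len(display) > limit:
        display = display[-limit:]
    return display
```

Trimming from the front also keeps the most recent data at the end of the string, which is where a scrolled-to-bottom indicator shows it.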

Generally, an indicator does not have an active cursor. If you want to always display the most recently received characters, turn on the vertical scroll bar and use a property node to keep it scrolled to the bottom. This can annoy users who try to move the scroll bar manually and find that the program keeps moving it back.

(3) If the user has changed the value in the command string, the Value Change event fires when they press Enter. Simply pressing Enter again does not change the value and does not trigger the event. The control does not need to be cleared, but the value does have to change. If you want to be able to send the same command again, a Send Command button may be a better choice.

(4) The write is inside an event case, so it does not run in parallel with anything. The Event structure does not hog resources; the other loop will run while it waits.

The event loop will not stop when you press the STOP button. It will probably take two Command: Value Change events after STOP before either loop stops. Replace the Timeout event (which never times out) with a STOP: Value Change event and wire the STOP button directly to the loop's stop terminal. Remove the local variable. Set the mechanical action to Latch When Released.
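The same two-loop structure, sketched in Python threads as an analogy rather than LabVIEW code: the writer blocks on a command queue (like an Event structure waiting, using no CPU), the reader runs independently, both share one port session, and a single stop action reaches both loops directly. `port_write` and `port_read` are hypothetical wrappers around that one session.

```python
import queue
import threading
from typing import Callable, List, Optional, Tuple


def start_io_loops(
    port_write: Callable[[str], None],
    port_read: Callable[[], bytes],
    commands: "queue.Queue[Optional[str]]",
    stop: threading.Event,
) -> Tuple[List[threading.Thread], List[bytes]]:
    """Start an event-driven writer loop and an independent reader loop.

    port_write/port_read are hypothetical wrappers around ONE port session,
    shared by both loops. The UI puts command strings on `commands`.
    """
    received: List[bytes] = []

    def writer() -> None:
        while True:
            cmd = commands.get()  # blocks without using CPU, like an idle Event structure
            if cmd is None:       # sentinel, like a STOP: Value Change event
                break
            port_write(cmd)

    def reader() -> None:
        while not stop.is_set():
            data = port_read()
            if data:
                received.append(data)
            else:
                stop.wait(0.01)   # nothing pending: yield instead of spinning

    threads = [threading.Thread(target=writer), threading.Thread(target=reader)]
    for t in threads:
        t.start()
    return threads, received


def stop_io_loops(threads, commands, stop) -> None:
    """Stop both loops: the stop action reaches each loop directly."""
    stop.set()          # ends the reader loop
    commands.put(None)  # wakes and ends the writer loop
    for t in threads:
        t.join()
```

Because the writer only wakes on a queued command or the sentinel, no timeout polling is needed, which mirrors replacing the never-expiring Timeout event with a STOP event.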

Lynn

-

Timed transmission of serial data

I need to send serial transmissions continuously at different periods, and between transmissions I also need to check for received data.

At the moment, I have it working by first configuring my serial port comms and then feeding that into 3 separate while loops in which the data is sent at different intervals: loop 1 runs at 80 ms, loop 2 at 160 ms and loop 3 at 340 ms. Data is read in loop 2 after a 20 ms wait.

This configuration more or less works, but sometimes I lose data when reading.

Is there a better way to do this?

Hello

Indeed, you don't have to reset the start time.

In my opinion, you only need to take care of resetting the timer if you run for more than 49 days.
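The 49-day figure matches a 32-bit millisecond counter wrapping around; LabVIEW's Tick Count (ms) returns a U32, and the sketch assumes the loop timing is based on such a counter. The arithmetic, plus a wrap-safe way to compute elapsed time, in Python:

```python
MS_PER_DAY = 24 * 60 * 60 * 1000
TICK_MODULUS = 2 ** 32  # assumes an unsigned 32-bit millisecond tick counter

# 2**32 ms is a little under 50 days before the counter wraps back to 0.
WRAP_DAYS = TICK_MODULUS / MS_PER_DAY


def elapsed_ms(start: int, now: int) -> int:
    """Elapsed ticks between two counter readings, correct across one wrap.

    Subtracting modulo 2**32 is what unsigned 32-bit arithmetic does
    natively, so no explicit reset of the start time is needed as long as
    each measured interval is shorter than the wrap period.
    """
    return (now - start) % TICK_MODULUS


if __name__ == "__main__":
    print(f"wraps every {WRAP_DAYS:.2f} days")  # wraps every 49.71 days
    print(elapsed_ms(TICK_MODULUS - 10, 5))     # 15: the interval spans the wrap
```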

And make sure that all message periods are multiples of the wait time.

Better yet, use the GCD of the periods (in this case 20 ms) as the wait time, so you have as much time as possible to send things.
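One way to apply this advice, sketched in Python under the assumption that the three send loops are merged into a single loop that ticks at the GCD of the periods and sends each message on the ticks that are multiples of its period. The message names are hypothetical; the periods are the ones from the question.

```python
from functools import reduce
from math import gcd

PERIODS_MS = {"msg1": 80, "msg2": 160, "msg3": 340}  # periods from the question


def base_tick(periods) -> int:
    """Greatest common divisor of all periods: the single loop's wait time."""
    return reduce(gcd, periods)


def due_messages(tick_index: int, periods, tick_ms: int):
    """Names of the messages to send on this iteration of the single loop."""
    t = tick_index * tick_ms
    return [name for name, p in periods.items() if t % p == 0]


if __name__ == "__main__":
    tick = base_tick(PERIODS_MS.values())  # gcd(80, 160, 340)
    for i in range(5):
        # In the real loop: write each due message, read what has arrived,
        # then wait `tick` ms.
        print(i * tick, due_messages(i, PERIODS_MS, tick))
```

Reading on every tick, rather than only inside one of the send loops, gives the receive side a chance to drain the buffer every 20 ms.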

-

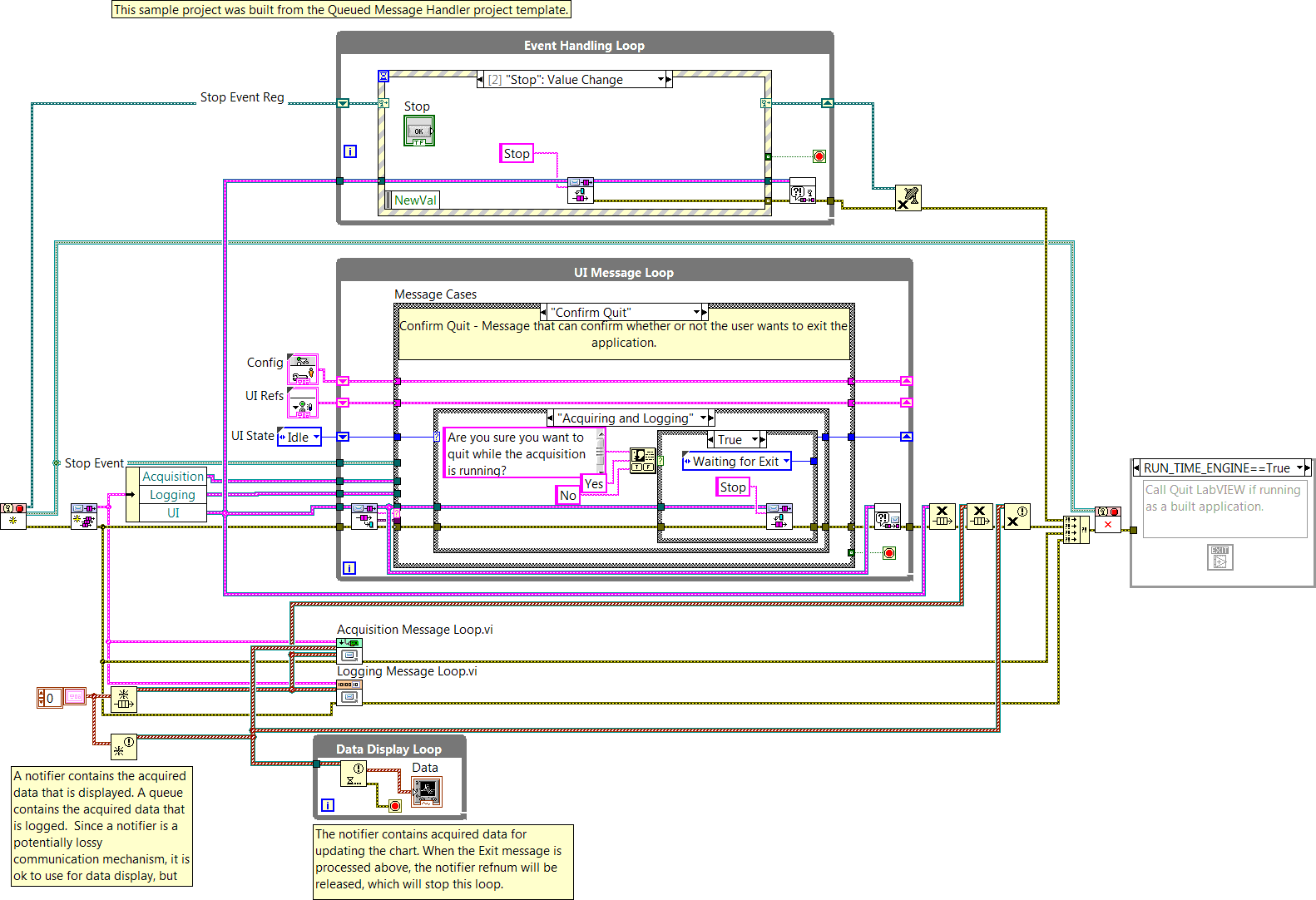

Continuous Measurement and Logging, LV2014

In LabVIEW 2012, I studied how the Queued Message Handler (QMH) and Continuous Measurement and Logging (CML) project templates work. There is lengthy, detailed documentation for the QMH. The CML documentation is much shorter, but it refers readers to the QMH documentation, since CML is of course based on that template.

I just got LV2014 and started looking at the Continuous Measurement and Logging (DAQmx) project template in LabVIEW 2014. There is an undocumented change: an additional state machine typedef enum in the acquisition loop. There is no such change in the 2014 version of the QMH, so this change is not explained in detail anywhere.

Can you point me to any docs or podcasts that explain the features of the new CML version in more detail?

Also, when I run the Continuous Measurement and Logging (DAQmx) project just to see how it works, I see strange behavior: even though I always select "Log" in the trigger section, the Force Trigger button starts to blink, and the string indicator alternates between two messages: "Logging" and "Waiting for trigger". But I always chose "Log".

Is this a bug?

I was also reading an "interesting debate" from last year.

I understand that these templates are only starting points, but I'd be very happy to have some documentation on how to use them properly (I was quite comfortable with the original templates, but the new ones have become more complex because of the state machine).

Thanks for the tips!

Hello

The additional typedef is necessary to ensure that only one data acquisition task is started.

This is because the mechanical action is set to "Latch When Released". For example, when you press the Start button and then release it, the signal changes from false to true and then from true to false.

That would be two events. During the first event, a new message is created. Now, when you look at the "Acquisition Message Loop" VI, you will also see an additional typedef. In this VI, the data acquisition task is created and started. The problem now is the second event.

Now another message is created to start an acquisition. If another data acquisition task were started, you would get a problem with LabVIEW and the DAQ hardware.

But because the state in the acquisition loop is already set to Acquisition by the first message, the second message does not start another DAQ task. The QMH has no need for this, because it doesn't start a data acquisition task.
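The guard described above, in which the acquisition loop's own state prevents a second Start message from creating a second task, can be sketched in Python. `AcqState`, `AcquisitionLoop` and the message strings are hypothetical names, not the template's actual typedefs; `tasks_started` stands in for creating and starting a DAQmx task.

```python
from enum import Enum, auto


class AcqState(Enum):
    IDLE = auto()
    ACQUIRING = auto()


class AcquisitionLoop:
    """Sketch of the guard: a second 'start' message is ignored while acquiring."""

    def __init__(self) -> None:
        self.state = AcqState.IDLE
        self.tasks_started = 0  # stands in for creating/starting a DAQmx task

    def handle(self, message: str) -> None:
        if message == "start":
            if self.state is AcqState.ACQUIRING:
                return          # duplicate event: do not start a second task
            self.tasks_started += 1
            self.state = AcqState.ACQUIRING
        elif message == "stop" and self.state is AcqState.ACQUIRING:
            self.state = AcqState.IDLE
```

Two "start" messages in a row, as produced by the press and release events, therefore start only one task.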

As for the two indicators: while logging, Force Trigger is set to false. If the program logs and Force Trigger is disabled, then after logging the flag is set to Wait on Trigger because, as already stated, it is disabled. Now Force Trigger is true once again, and it logs again. This process repeats, and the indicator keeps switching between these two states.

When you open the "Logging Message Loop" VI, you will see that Force Trigger is set to false.

Kind regards

Whirlpool

-

Continuous Measurement and Logging, LV2014

Hello

I'm looking at the CML template in LabVIEW 2014. It's very nice, and I'm learning a lot by playing with it. However, because the documentation is very minimal (virtually zero), it is unclear why some things were implemented the way they are.

1. In the UI Message Loop, the case structure has a "Confirm Quit" case. As far as I can see, this case can never be triggered, because the Stop button is disabled during data acquisition. Why is it there? I guess it is an example for someone who wants to allow closing the application during data acquisition without stopping the acquisition first?

2. Since it is not explained in the documentation, could someone explain the behavior of the enum typedef in the UI Message Loop? I think I understand that, before stopping, we first make sure that data acquisition is stopped. Second, all the data is saved, so the logging loop can also be stopped, and finally we can go to the idle state and broadcast the "status update" message. Am I wrong? Somehow, it's still a little "fuzzy" for me...

Thanks for the help!

Kind regards

1. Confirm Quit is triggered if you click the red 'X' to close the main VI window during an acquisition.

2. Yes, we must make sure that the acquisition and logging loops are idle before the application can close. That is the purpose of the UI state enum.
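The ordered shutdown described in the two posts above (acquisition stops first, then logging finishes, then the UI goes idle and may close) can be sketched as a small Python state machine. The state names and the `acq_idle`/`logging_idle` flags are hypothetical; the template's real enum values may differ.

```python
from enum import Enum


class UIState(Enum):
    RUNNING = "running"
    STOPPING_ACQUISITION = "stopping acquisition"
    STOPPING_LOGGING = "stopping logging"
    IDLE = "idle"


def advance_stop_sequence(state: UIState, acq_idle: bool, logging_idle: bool) -> UIState:
    """Advance one step of the stop sequence once a stop has been requested.

    Each step waits until the loop it depends on reports idle, so the order
    is always acquisition -> logging -> idle.
    """
    if state is UIState.RUNNING:
        return UIState.STOPPING_ACQUISITION
    if state is UIState.STOPPING_ACQUISITION and acq_idle:
        return UIState.STOPPING_LOGGING
    if state is UIState.STOPPING_LOGGING and logging_idle:
        return UIState.IDLE
    return state  # still waiting for the current loop to finish


def can_close(state: UIState) -> bool:
    """Only allow the application to close once every loop is idle."""
    return state is UIState.IDLE
```

Stopping logging only after acquisition is idle means no data produced in the last acquisition cycle is left unwritten.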

Our documentation team already has a task to update the documentation to cover these issues (it is tracked in CAR 397078).

-

Data Guard and the APEX Listener

Hi all

I can't seem to work out how to configure the APEX Listener parameters for connecting to a Data Guard database.

We have a lot of database servers (without Data Guard), and we use the command-line wizard to generate the configuration file. It's straightforward and easy: we enter the server, port, and service name and it works. But for a Data Guard database there are two servers, and I can't seem to set it up the right way. After doing some research I came across the parameter apex.db.customURL, which should solve the problem.

I removed the server, port, and service name entries and added that key.

The result was connection errors due to an incorrect port setting.

SEVERE: The pool named: apex is not properly configured, error: IO Error: Invalid number format for port number

oracle.dbtools.common.jdbc.ConnectionPoolException: the pool named: apex is not properly configured, error: IO Error: Invalid number format for port number

at oracle.dbtools.common.jdbc.ConnectionPoolException.badConfiguration(ConnectionPoolException.java:65)

What am I missing?

Thank you

Michael

(Here's the rest of our configuration:)

    <?xml version="1.0" encoding="UTF-8" standalone="no"?>
    <!DOCTYPE properties SYSTEM "http://java.sun.com/dtd/properties.dtd">
    <properties>
    <comment>saved on Mon Oct 19 18:28:41 CEST 2015</comment>
    <entry key="debug.printDebugToScreen">false</entry>
    <entry key="security.disableDefaultExclusionList">false</entry>
    <entry key="db.password">@055EA3CC68C35F70CF34A203A8EE1A55D411997069F6AE9053B3D1F0B951D84E0E</entry>
    <entry key="cache.maxEntries">500</entry>
    <entry key="error.maxEntries">50</entry>
    <entry key="security.maxEntries">2000</entry>
    <entry key="cache.directory">/tmp/apex/cache</entry>
    <entry key="jdbc.DriverType">thin</entry>
    <entry key="log.maxEntries">50</entry>
    <entry key="jdbc.MaxConnectionReuseCount">1000</entry>
    <entry key="log.logging">false</entry>
    <entry key="jdbc.InitialLimit">3</entry>
    <entry key="jdbc.MaxLimit">10</entry>
    <entry key="cache.monitorInterval">60</entry>
    <entry key="cache.expiration">7</entry>
    <entry key="jdbc.statementTimeout">900</entry>
    <entry key="jdbc.MaxStatementsLimit">10</entry>
    <entry key="misc.defaultPage">apex</entry>
    <entry key="misc.compress"/>
    <entry key="jdbc.MinLimit">1</entry>
    <entry key="cache.type">lru</entry>
    <entry key="cache.caching">false</entry>
    <entry key="error.keepErrorMessages">true</entry>
    <entry key="cache.procedureNameList"/>
    <entry key="cache.duration">days</entry>
    <entry key="jdbc.InactivityTimeout">1800</entry>
    <entry key="debug.debugger">false</entry>
    <entry key="db.customURL">jdbc:thin:@(DESCRIPTION=(ADDRESS_LIST=(ADDRESS=(COMMUNITY=tcp.world)(PROTOCOL=TCP)(HOST=DB-ENDUR)(PORT=1520))(ADDRESS=(COMMUNITY=tcp.world)(PROTOCOL=TCP)(HOST=DB-ENDURK)(PORT=1521))(LOAD_BALANCE=off)(FAILOVER=on))(CONNECT_DATA=(SERVICE_NAME=ENDUR_PROD.VERBUND.CO.AT)))</entry>
    </properties>

Hi Michael Weinberger,

Michael Weinberger wrote:

I can't seem to work out how to configure the APEX Listener parameters for connecting to a Data Guard database.

We have a lot of database servers (without Data Guard), and we use the command-line wizard to generate the configuration file. It's straightforward and easy: we enter the server, port, and service name and it works. But for a Data Guard database there are two servers, and I can't seem to set it up the right way. After doing some research I came across the parameter apex.db.customURL, which should solve the problem.

I removed the server, port, and service name entries and added that key.

Keep the server name, port and service name entries; there is no need to delete them.

The result was connection errors due to an incorrect port setting.

SEVERE: The pool named: apex is not properly configured, error: IO Error: Invalid number format for port number

oracle.dbtools.common.jdbc.ConnectionPoolException: the pool named: apex is not properly configured, error: IO Error: Invalid number format for port number

at oracle.dbtools.common.jdbc.ConnectionPoolException.badConfiguration(ConnectionPoolException.java:65)

What am I missing?

You must create two entries in the "defaults.xml" configuration file for your ORDS (formerly APEX Listener):

one for db.connectionType and one for db.customURL, for example:

    <entry key="db.connectionType">customurl</entry>
    <entry key="db.customURL">jdbc:oracle:thin:@(DESCRIPTION=(ADDRESS_LIST=(ADDRESS=(COMMUNITY=tcp.world)(PROTOCOL=TCP)(HOST=DB-ENDUR)(PORT=1520))(ADDRESS=(COMMUNITY=tcp.world)(PROTOCOL=TCP)(HOST=DB-ENDURK)(PORT=1521))(LOAD_BALANCE=off)(FAILOVER=on))(CONNECT_DATA=(SERVICE_NAME=ENDUR_PROD.VERBUND.CO.AT)))</entry>

Reference: http://docs.oracle.com/cd/E56351_01/doc.30/e56293/config_file.htm#AELIG7204

NOTE: After changing the configuration file, don't forget to restart standalone ORDS, or the Java EE application server if ORDS is deployed on one.
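As a quick sanity check before restarting, one could parse defaults.xml and confirm that both entries are present. A minimal Python sketch, assuming the file follows the `<properties>`/`<entry key="...">` layout shown in this thread; the function name is hypothetical. It also checks the `jdbc:oracle:thin:@` prefix used by the Oracle thin driver's URLs.

```python
import xml.etree.ElementTree as ET
from typing import List


def custom_url_problems(xml_text: str) -> List[str]:
    """Check a java.util.Properties-style defaults.xml for the two entries
    needed for a custom JDBC URL; returns a list of problems (empty if OK)."""
    root = ET.fromstring(xml_text)
    entries = {e.get("key"): (e.text or "").strip() for e in root.iter("entry")}
    problems = []
    if entries.get("db.connectionType") != "customurl":
        problems.append("db.connectionType should be 'customurl'")
    url = entries.get("db.customURL", "")
    if not url.startswith("jdbc:oracle:thin:@"):
        problems.append("db.customURL should start with 'jdbc:oracle:thin:@'")
    return problems
```

In practice you would read the file's contents (e.g. with `open(path, encoding="utf-8").read()`) and print whatever problems come back.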

Also check that your JDBC connection URL works properly; if there are any problems, you can turn on debugging for ORDS:

Reference:

- http://docs.oracle.com/cd/E56351_01/doc.30/e56293/trouble.htm#AELIG7206

- http://docs.oracle.com/cd/E56351_01/doc.30/e56293/trouble.htm#AELIG7207

As Tony advised, you should post ORDS-related questions in the appropriate forum. Reference: ORDS, SODA & JSON in the Database

You can also move this thread to the ORDS forum.

Kind regards

Kiran

-

vCenter Server does not send data to vCenter Log Insight

I have a second vCenter Server at another site. The connection test in Log Insight succeeds, but I don't see any data from it...

The ESXi hosts connected to this vCenter Server do report their data after I ran the Configure ESXi command on them.

Where can I look on the vCenter box to make sure it is set up correctly to send the data?

Log Insight makes no changes on vCenter Server, so there is no place to look on the vCenter Server side (it uses API calls only; you could try looking at the events for the vCenter Server instance to see connection/disconnection events from Log Insight). Usually, when vCenter Server events, tasks and alarms are not showing up, it points to a permissions problem. The user specified for the vCenter Server integration must have read-only permissions set on the top-level vCenter Server object; did you select the checkbox to propagate the permission to all child objects? If so, can you generate a support bundle from the Administration > Health page and upload it following the directions here: http://kb.vmware.com/kb/1008525 (no SR is needed; simply create a file called chasehansen)

-

Problem renaming datastores and networks

I recently upgraded a number of servers from ESX 4.1 to ESXi 5 and from vCenter 4 to vCenter 5, and have noticed a problem. Whenever I rename a datastore or a network, the new name is added to the list of networks or datastores, but the old name is still present too. In the past, if I had a datastore and renamed it, I'd still have only one entry for the renamed datastore. With ESXi 5, I have two: the old name and the new name. The same goes for networks. And both still appear in vCenter as attached to the host. I tried disconnecting/reconnecting vCenter, removing the host and adding it again, and restarting the ESX host, and the old names still appear. If I look at the host directly through the vSphere Client, everything looks OK, with only the renamed entry. But vCenter can't seem to let go of the old datastore and network names. Any ideas?

I can't tell you for sure, but IMO this has to do with reverting to a snapshot. If you have a single host and revert to a snapshot, the VM will be disconnected anyway (if the original port group no longer exists). In a cluster, you have the option of first migrating (vMotion) the virtual machine to another host that still has that port group before reverting to the snapshot. As I said, just a thought.

André

-

Analyzing the Task Audit, Data Audit and Process Flow History logs

Hello

Our Internal Audit department has requested a bunch of information that we need to compile from the Task Audit, Data Audit and Process Flow History logs. We have all the information available, but not in a format that allows proper "reporting" on the log information. What is the best way to manage the HFM logs so that we can quickly filter and export the required audit information?

We have housekeeping in place: the logs are partly in 'live' DB tables and partly in purged tables that have been exported to Excel to archive the historical log information.

Thank you very much.

I thought I posted this on Friday, but I just noticed that I never hit the "Post Message" button, ha ha.

The info below will help you translate some of the information in the tables, etc. You can report on the audit tables directly or move them to another appropriate database for later analysis. The consensus, even if I disagree, is that you will suffer performance issues if your audit tables become too big, so you may want to move them periodically. You can do this with a manual process, a scheduled task, etc.

I personally just dump them into another table and report on it there. As mentioned above, you will need to translate some information, as it is not "readable" in the database.

For example, if you want to pull metadata loads, rules loads and member list loads, you can run a query like this (NOTE: set @strAppName to the name of your application...).

The main tricks to know, at least for the task audit table, are figuring out how to convert the times and determining which activity code matches which friendly name.

    -- Declare working variables --
    declare @dtStartDate as nvarchar(20)
    declare @dtEndDate as nvarchar(20)
    declare @strAppName as nvarchar(20)
    declare @strSQL as nvarchar(4000)

    -- Initialize working variables --
    set @dtStartDate = '1/1/2012'
    set @dtEndDate = '8/31/2012'
    set @strAppName = 'YourAppNameHere'

    -- Get Rules Load, Metadata, Member List
    set @strSQL = '
    select sUserName as "User", ''Rules Load'' as Activity,
           cast(StartTime-2 as smalldatetime) as "Time Start",
           cast(EndTime-2 as smalldatetime) as ''Time End'',
           ServerName, strDescription, strModuleName
    from ' + @strAppName + '_task_audit ta, hsv_activity_users au
    where au.lUserID = ta.ActivityUserID
      and activitycode in (1)
      and cast(StartTime-2 as smalldatetime) between ''' + @dtStartDate + ''' and ''' + @dtEndDate + '''
    union all
    select sUserName as "User", ''Metadata Load'' as Activity,
           cast(StartTime-2 as smalldatetime) as "Time Start",
           cast(EndTime-2 as smalldatetime) as ''Time End'',
           ServerName, strDescription, strModuleName
    from ' + @strAppName + '_task_audit ta, hsv_activity_users au
    where au.lUserID = ta.ActivityUserID
      and activitycode in (21)
      and cast(StartTime-2 as smalldatetime) between ''' + @dtStartDate + ''' and ''' + @dtEndDate + '''
    union all
    select sUserName as "User", ''Memberlist Load'' as Activity,
           cast(StartTime-2 as smalldatetime) as "Time Start",
           cast(EndTime-2 as smalldatetime) as ''Time End'',
           ServerName, strDescription, strModuleName
    from ' + @strAppName + '_task_audit ta, hsv_activity_users au
    where au.lUserID = ta.ActivityUserID
      and activitycode in (23)
      and cast(StartTime-2 as smalldatetime) between ''' + @dtStartDate + ''' and ''' + @dtEndDate + ''''

    exec sp_executesql @strSQL

With regard to the activity codes, here's a quick breakdown:

| ActivityID | ActivityName |
|---|---|
| 0 | Idle |
| 1 | Rules Load |
| 2 | Rules Scan |
| 3 | Rules Extract |
| 4 | Consolidation |
| 5 | Chart Logic |
| 6 | Translation |
| 7 | Custom Logic |
| 8 | Allocate |
| 9 | Data Load |
| 10 | Data Extract |
| 11 | Data Extract via HAL |
| 12 | Data Entry |
| 13 | Data Retrieval |
| 14 | Data Clear |
| 15 | Data Copy |
| 16 | Journal Entry |
| 17 | Journal Retrieval |
| 18 | Journal Posting |
| 19 | Journal Unposting |
| 20 | Journal Template Entry |
| 21 | Metadata Load |
| 22 | Metadata Extract |
| 23 | Member List Load |
| 24 | Member List Scan |
| 25 | Member List Extract |
| 26 | Security Load |
| 27 | Security Scan |
| 28 | Security Extract |
| 29 | Logon |
| 30 | Logon Failure |
| 31 | Logoff |
| 32 | External |
| 33 | Metadata Scan |
| 34 | Data Scan |
| 35 | Extended Analytics Export |
| 36 | Extended Analytics Schema Delete |
| 37 | Transactions Load |
| 38 | Transactions Extract |
| 39 | Document Attachments |
| 40 | Document Detachments |
| 41 | Create Transactions |
| 42 | Edit Transactions |
| 43 | Delete Transactions |
| 44 | Post Transactions |
| 45 | Unpost Transactions |
| 46 | Delete Invalid Records |
| 47 | Data Audit Purged |
| 48 | Task Audit Purged |
| 49 | Post All Transactions |
| 50 | Unpost All Transactions |
| 51 | Delete All Transactions |
| 52 | Unmatch All Transactions |
| 53 | Auto Match by ID |
| 54 | Auto Match by Account |
| 55 | Intercompany Matching Report by ID |
| 56 | Intercompany Matching Report by Acct |
| 57 | Intercompany Transaction Report |
| 58 | Manual Match |
| 59 | Unmatch Selected |
| 60 | Manage IC Periods |
| 61 | Lock/Unlock IC Entities |
| 62 | Manage IC Reason Codes |
| 63 | Null |
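The two tricks (time conversion and activity codes) can also be applied outside SQL Server, e.g. when working from the rows exported to Excel. A minimal Python sketch, assuming that StartTime/EndTime are OLE Automation dates (fractional days since 1899-12-30); the `-2` in the query above is consistent with that, since SQL Server's datetime counts from 1900-01-01, two days later. The row layout is hypothetical and only a subset of the codes is included.

```python
from datetime import datetime, timedelta

# Subset of the activity codes in the table above.
ACTIVITY_NAMES = {1: "Rules Load", 21: "Metadata Load", 23: "Member List Load", 29: "Logon"}

# Assumption: task audit times are OLE Automation dates (days since 1899-12-30).
OLE_EPOCH = datetime(1899, 12, 30)


def ole_to_datetime(value: float) -> datetime:
    """Convert a fractional-day OLE Automation date to a datetime.

    SQL Server's datetime counts days from 1900-01-01 instead, two days
    later, which is why the query above subtracts 2 before casting.
    """
    return OLE_EPOCH + timedelta(days=value)


def translate(row):
    """row: hypothetical (user, activity_code, start_time) tuple from <App>_task_audit."""
    user, code, start = row
    return user, ACTIVITY_NAMES.get(code, f"Unknown ({code})"), ole_to_datetime(start)


if __name__ == "__main__":
    print(translate(("admin", 21, 40909.5)))
```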

Maybe you are looking for

-

Hi all! Even if I take my iPhone with me, the training app still won't produce an outdoor performance map? Do I have to use a third-party app?

-

Need WLAN driver for Satellite Pro S300-10j

I need the wireless driver for a Toshiba Satellite Pro S300-10j. I tried looking around the drivers section, but it's not there. Can someone send a link? Thank you.

-

Generate documentation for several VIs

Hi everyone, I am trying to automatically generate documentation for my own VIs (connector pane, front panel and description). I know LabVIEW can do a lot of things now, but can it do this in particular? Thank you

-

Store a value obtained in a while loop

Hello, I'm acquiring data (1,000 points every 0.1 s) in a while loop. I would like to calculate the average y of the first 1,000-point sample when I click a Boolean 'calibrate' button, and store that value somewhere (outside the while loop?) so that it c

-

OfficeJet 6500 does not print black

The printer started printing black very light and jagged. I replaced the black cartridge with no improvement. I ran the cleaning process a few times and now it does not print anything in black. Colors seem to work well. Is it the print head?