Nexus 1000V for vSphere 4.1

Will there be an updated version of the Nexus 1000V for vSphere 4.1, or is the current one the last compatible version?

The current version is compatible with vSphere 4.1 and is included for vSphere 4.1 Enterprise Plus.

---

MCSA, MCTS Hyper-V, VCP 3/4, VMware vExpert

Tags: VMware

Similar Questions

-

UCS environment vSphere 5.1 upgrade to vSphere 6 with Nexus 1000v

Hi, I've struggled to get help from TAC and from internet research on how to upgrade our UCS environment, so as a last resort I thought I would try posting on the forum.

My environment is UCS chassis with dual-homed fabric interconnects, and Nexus 1110s hosting an HA pair of 1000v VSMs, running vSphere 5.1. We have updated all our equipment (blades, C-Series and UCS Manager) to the firmware versions Cisco supports for vSphere 6.

We have even upgraded our Nexus 1000v to 5.2(1)SV3(1.5a), which supports vSphere 6.

To save on processing and licensing costs, and because our previous vCenter server's performance was lacking and unreliable, I looked at migrating to the vCenter 6 appliance. I can't find any information on how to begin the VMware upgrade in the NGC when the 1000v is involved. I would have thought that if all your components are already on versions that support 6, it would be smooth sailing.

One response I got from TAC was that we had to move all of our VMs to a standard switch to upgrade vCenter, but given we are already on a 1000v version supported for vSphere 6, that left me confused. Nor would I get the chance to rework our environment that way: we are a hospital, and we cannot take that kind of downtime and outage window for more than 200 machines.

Can anyone provide some tips, or has anyone else tried a similar upgrade path?

Greetings.

It seems that you have already upgraded your N1k components (are the VEMs upgraded to match?).

Is your question more about how to upgrade/migrate from one vCenter server to another?

If you import your vCenter database into your new vCenter, there shouldn't be much downtime, as the VSM/VEMs will still see the vCenter and the N1k dVS. If you change the vCenter server name/IP but import the old vCenter DB, there are a few extra steps: you will need to make sure the VSM's SVS connection points to the new vCenter IP address.

If you try to build a new vCenter in parallel, you will have downtime problems, because the port-profile/network names the guest VMs currently use will lose their 'backing' info when you migrate: you would have to move the NICs to a standard or generic dVS before moving the hosts to the new vCenter.

If you were already on vCenter 6, I believe you could vMotion from one host to another and change the vSwitch/dVS/port-profile in use at the same time.

I'd really need more detail on how you plan to migrate from your vCenter to the vCenter 6.0 appliance.

Thank you

Kirk...

-

Nexus 1000v and vMotion/VM traffic?

Hello

We are moving our production VM servers to a new data center.

We would like to use the 2 x 10 Gb ports on each ESX host as Nexus 1000v uplinks (EtherChannel) for both vMotion traffic and VM traffic.

Is that even possible/supported, using the same Nexus 1000v ports for vMotion and VM traffic at the same time? I think each host will need a unique IP address for vMotion to work.

Best regards

/ Pete

Info:

vCenter/ESXi - 5.0 Update 3

Yes. The vMotion requirements still apply whether you use a standard virtual switch or a distributed virtual switch such as the Nexus 1000v: you should have a network for vMotion, and each vmkernel port will need a unique IP address on the vMotion subnet.
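A minimal sketch of that requirement, assuming you keep the vMotion plan as a simple host-to-IP mapping (the host names, subnet and addresses are hypothetical): it flags any vmkernel address that falls outside the vMotion subnet or is reused on another host.

```python
import ipaddress


def check_vmotion_addressing(subnet, vmk_ips):
    """Return a list of problems with a vMotion addressing plan.

    vmk_ips maps a host name to the IP address of its vMotion vmkernel port.
    An empty list means every address is unique and inside the subnet.
    """
    network = ipaddress.ip_network(subnet)
    problems = []
    seen = {}  # IP address -> first host that used it
    for host, ip_text in vmk_ips.items():
        ip = ipaddress.ip_address(ip_text)
        if ip not in network:
            problems.append(f"{host}: {ip} is outside {network}")
        if ip in seen:
            problems.append(f"{host}: {ip} duplicates {seen[ip]}")
        else:
            seen[ip] = host
    return problems


# Hypothetical plan: esx03 accidentally reuses esx02's address.
plan = {"esx01": "10.10.50.11", "esx02": "10.10.50.12", "esx03": "10.10.50.12"}
print(check_vmotion_addressing("10.10.50.0/24", plan))
# ['esx03: 10.10.50.12 duplicates esx02']
```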

-

Removing a 'system vlan' from a Nexus 1000V port-profile

We have a Dell M1000e blade chassis with a number of M605 server blades running ESXi 5.0 and using the Nexus 1000V for networking. We use 10 GbE on fabrics B and C, for a total of four 10 GbE NICs per server. We do not use the 1 GbE NICs on fabric A. We currently use one NIC on each of fabrics B and C for virtual machine traffic, and the other NIC in each fabric for management/vMotion/iSCSI VMkernel traffic. We currently use EqualLogic PS6010 iSCSI arrays and have two port-groups configured with iSCSI port binding (one bound to physical NIC vmnic3 and one to physical NIC vmnic5).

We have added an EMC VNX 5300 unified array at our facility and configured three extra VLANs on our network: two for iSCSI and one for NFS. We added vEthernet port-profiles for the three new VLANs, but when we added the new vmk ports on some of the ESXi servers, they couldn't ping anything. We opened a TAC case with Cisco, and it was determined that only a single port-group with iSCSI port binding can be bound to a given physical uplink at a time.

We decided to temporarily carry the new VLANs on the physical switch trunk ports that are currently used only for VM traffic, by adding them to those ports' allowed VLAN list. We need to delete the new VLANs from the current ethernet port-profile but are facing a problem.

The current Nexus 1000V port-profile that must be changed is:

port-profile type ethernet DenverMgmtSanUplinks

vmware port-group

switchport mode trunk

switchport trunk allowed vlan 2308-2306, 2311-2315

channel-group auto mode passive

no shutdown

system vlan 2308-2306, 2311-2315

description MGMT SAN UPLINK

state enabled

We must remove VLANs 2313-2315 from the 'system vlan' list in order to remove them from the 'switchport trunk allowed vlan' list.
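To see what the 'system vlan' and 'switchport trunk allowed vlan' lists should contain after dropping 2313-2315, the range arithmetic can be sketched in Python. This is a hypothetical helper, not an NX-OS tool: it only understands comma-separated IDs and low-high ranges, and it rejects descending ranges rather than guessing what was meant.

```python
def expand_vlans(spec):
    """Expand a VLAN list such as "2311-2315, 2320" into a set of IDs."""
    vlans = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            low, high = (int(x) for x in part.split("-"))
            if low > high:
                raise ValueError(f"descending range: {part}")
            vlans.update(range(low, high + 1))
        else:
            vlans.add(int(part))
    return vlans


def compress_vlans(vlans):
    """Collapse a set of VLAN IDs back into compact range notation."""
    ordered = sorted(vlans)
    ranges = []
    i = 0
    while i < len(ordered):
        start = ordered[i]
        # Extend the run while the next ID is consecutive.
        while i + 1 < len(ordered) and ordered[i + 1] == ordered[i] + 1:
            i += 1
        end = ordered[i]
        ranges.append(str(start) if start == end else f"{start}-{end}")
        i += 1
    return ",".join(ranges)


remaining = expand_vlans("2311-2315") - expand_vlans("2313-2315")
print(compress_vlans(remaining))  # 2311-2312
```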

However, when we try to do so, we get an error saying the port-profile is currently in use:

vsm21a# conf t

Enter configuration commands, one per line. End with CNTL/Z.

vsm21a(config)# port-profile type ethernet DenverMgmtSanUplinks

vsm21a(config-port-prof)# system vlan 2308-2306, 2311-2312

ERROR: Cannot delete system VLAN, port-profile in use by interface Po2

We have 6 ESXi servers connected to this Nexus 1000V. Originally they were VEMs 3-8, but apparently when we did a firmware update they came back as VEMs 9-14, and the old 6 VEMs and their associated port-channels are now orphaned.

For example, if we look at port-channel 2 in more detail, we see it is associated with orphaned VEM 3 and has no ports associated with it:

vsm21a(config-port-prof)# sho run int port-channel 2

!Command: show running-config interface port-channel2

!Time: Thu Apr 26 18:59:06 2013

version 4.2(1)SV2(1.1)

interface port-channel2

inherit port-profile DenverMgmtSanUplinks

vem 3

vsm21a(config-port-prof)# sho int port-channel 2

port-channel2 is down (No operational members)

Hardware: Port-Channel, address: 0000.0000.0000 (bia 0000.0000.0000)

MTU 1500 bytes, BW 100000 Kbit, DLY 10 usec,

reliability 255/255, txload 1/255, rxload 1/255

Encapsulation ARPA

Port mode is trunk

auto-duplex, 10 Gb/s

Beacon is turned off

Input flow-control is off, output flow-control is off

Switchport monitor is off

Members in this channel: Eth3/4, Eth3/6

Last clearing of "show interface" counters never

102 interface resets

We can probably remove port-channel 2, but we assume the port-profile-in-use error will then cascade to the other port-channels. We can delete the other orphaned port-channels 4, 6, 8, 10 and 12, as they are associated with the orphaned VEMs, but we expect that we will then also get errors on port-channels 13, 15, 17, 19, 21 and 23, which are associated with the active VEMs.

We are looking to see if there is an easy way to fix this on the VSM, or if we need to break off one of the physical uplinks on each server, connect it to a vSS or vDS, and migrate all the vmkernel ports off the Nexus 1000V so we can clean up the VLANs.

You will not be able to remove the system VLAN until nothing is using this port-profile. We are very protective of any VLAN designated with the 'system vlan' command.

You should clean up the old port-channels and the old VEMs. You can safely do 'no int port-channel' and 'no vem' on the ones that are no longer used.

What you can do is create a new uplink port-profile with the settings you want, then move the interfaces over to the new port-profile. It is generally easier to create a new one than to try to clean up an old port-profile that has system VLANs.

I would do the following steps:

Create a new port-profile with the settings you want.

Put the host in maintenance mode, if possible.

Remove one NIC from the old N1Kv eth port-profile.

Add that NIC to the new N1Kv eth port-profile.

Remove the second NIC from the old eth port-profile.

Add the second NIC to the new port-profile.

You will get some duplicate-packet error messages, but it should work.
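The point of moving one NIC at a time is that the host always keeps at least one uplink while it changes port-profiles. A toy Python model of that sequence (the NIC and port-profile names are hypothetical, and it ignores the error messages mentioned above) makes the invariant explicit:

```python
def migrate_uplinks(nics, old_profile, new_profile):
    """Simulate the one-NIC-at-a-time move; return the NIC->profile state after each step.

    None means the NIC is momentarily attached to no port-profile.
    """
    assignment = {nic: old_profile for nic in nics}
    states = [dict(assignment)]
    for nic in nics:
        assignment[nic] = None          # pull the NIC out of the old port-profile
        states.append(dict(assignment))
        assignment[nic] = new_profile   # add it to the new port-profile
        states.append(dict(assignment))
    return states


def never_isolated(states):
    """True if every intermediate state keeps at least one NIC in some port-profile."""
    return all(any(p is not None for p in state.values()) for state in states)


steps = migrate_uplinks(["vmnic3", "vmnic5"], "OldUplinks", "NewUplinks")
for state in steps:
    print(state)
print("host always has an uplink:", never_isolated(steps))
```

With only one NIC the same model reports a gap, which is why this approach relies on having a redundant pair of uplinks.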

The other option is to remove the host from the N1Kv and re-add it using the new eth port-profile.

Another option is to just leave it, unless it really bothers you. No VMs will be able to use these VLANs unless you create a veth port-profile for them.

Louis

-

Nexus 1000v and vSwitch best practices

I am working on the design of our Nexus 1000v vDS for use on HP BL490 G6 servers. The 8 NICs are allocated as follows:

vmnic0, 1: ESXi management, VSM-CTRL-PKT, VSM-MGT

vmnic2, 3: vMotion

vmnic4, 5: iSCSI, FT, clustering heartbeats

vmnic6, 7: server data and client VM traffic

Should I migrate all the NICs to the 1000v vDS, or should I leave vmnic0,1 on a regular vSwitch and migrate the others to the vDS? If I migrate all the NICs, at the very least I would make the vmnic0,1 VLANs system VLANs so that traffic could flow until the VSM could be reached. My inclination is to migrate all the NICs, but I've seen comments elsewhere in the forums that the VSM-associated networks, and possibly the ESX(i) console, are better left off the vDS.

Thoughts?

Here is a best-practice how-to guide specific to the 1000v and HP Virtual Connect that might be useful.

Cheers,

Robert

-

Question about Nexus 1000v licensing and how long an unlicensed ESXi host is supported

Hello

I have licenses for 12 CPUs, covering 3 ESXi hosts with 4 processors each.

So if I add another ESXi host, will it be supported for 60 days?

I'm pretty sure, but wanted confirmation.

Thank you
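The license arithmetic in the question, assuming the per-CPU licensing the poster describes (3 hosts x 4 processors = 12 CPU licenses), can be checked with a few lines of Python. It only counts licenses; it does not answer the 60-day question.

```python
def cpu_licenses_required(sockets_per_host):
    """Per-CPU licensing: one license per processor on each host."""
    return sum(sockets_per_host)


def license_shortfall(sockets_per_host, licensed_cpus):
    """How many more CPU licenses the hosts need (0 if already covered)."""
    return max(0, cpu_licenses_required(sockets_per_host) - licensed_cpus)


current = [4, 4, 4]                          # three ESXi hosts, 4 processors each
print(license_shortfall(current, 12))        # 0
print(license_shortfall(current + [4], 12))  # 4 (a fourth 4-processor host)
```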

What version are you using? As of 2.1 they moved to a free and Advanced edition model; depending on the features you need, you may be able to upgrade and continue without additional licenses.

You can probably also convert your current licenses to VSG; contact your engineer.

-

Help required for Cisco Nexus 1000v

Hello

I have three ESXi hosts in my environment and I want to integrate them with the Cisco Nexus 1000v switch.

I installed the VSM on host1 and added the remaining hosts to the VSM via Update Manager. The port groups I already created on the VSM show up on the hosts I added to the VSM, but not on host1, the ESX host on which I installed the VSM. Should I also add the host that runs the VSM to the Cisco Nexus switch?

I mean, I have installed the VEM on all three ESXi hosts. Is that OK?

Hi Mohsin,

Where did you read that? In the past, we have added all the hosts, including the one running the VSM. We usually run both VSMs (primary and secondary) and add anti-affinity rules so that the two VSMs are on different hosts. I'm not a Cisco person, but having worked with Cisco engineers, we had no problem with what you just described. Leaving that host out would really be a waste of a host, in my opinion. I don't see why this would be a problem. As long as you have everything in place (port groups for your VSM packet traffic, etc.), you should be able to add all hosts, in my experience.
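The anti-affinity rule described here boils down to one check: the primary and secondary VSM must never share a host. A minimal sketch, with hypothetical VM and host names, for validating a placement before relying on VSM HA:

```python
def anti_affinity_ok(placement, group):
    """True if every VM in the anti-affinity group runs on a different host.

    placement maps VM name -> host name.
    """
    hosts = [placement[vm] for vm in group]
    return len(hosts) == len(set(hosts))


placement = {"VSM-primary": "host1", "VSM-secondary": "host2", "app01": "host1"}
print(anti_affinity_ok(placement, ["VSM-primary", "VSM-secondary"]))  # True

placement["VSM-secondary"] = "host1"  # e.g. after a careless manual migration
print(anti_affinity_ok(placement, ["VSM-primary", "VSM-secondary"]))  # False
```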

Follow me @ Cloud-Buddy.com

-

Upgrading vCenter 4.0 with Cisco Nexus 1000v installed

Hi all

We have vCenter 4.0 and ESX 4.0 servers and we want to upgrade to version 4.1. However, we also have the Nexus 1000v installed on the ESX servers and vCenter. I found this VMware KB: http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1024641 . But only the ESX server upgrade is explained in this KB; what about vCenter? Our vCenter 4.0 is installed on Windows 2003 64-bit with 64-bit SQL 2005.

Can we upgrade vCenter in place with the Nexus 1000v plugin installed, without problems? How should we proceed? What happens to the Nexus 1000v plugin installed on the vCenter server during the upgrade?

Nexus 1000v 4.0(4)SV1(3a) is installed on the ESX servers.

Regards

Mecyon,

When upgrading vSphere 4.0 -> 4.1, you must also update the VEM software (.vib). The vCenter plugin won't change, and nothing changes on your VSM(s). The only thing you need to update is the VEM on each host. See the matrix posted earlier in the thread for the .vib to install once your host has been upgraded.

Note: after this upgrade, you will be able to update your vSphere host software and your 1000v software independently (without having to update the other). The 1000v VEM dependencies were removed as of vSphere 4.0 Update 2.

Kind regards

Robert

-

Nexus 1000v - does this config make sense?

Hello

I have started deploying the Nexus 1000v on a 6-host cluster, all running vSphere 4.1 (vCenter and ESXi). The basic configuration, licensing, etc. is already done, and so far there are no problems.

My doubts concern the actual creation of the system uplinks, port-profiles, etc. Basically, I want to make sure I don't make any mistakes in the design I want to put in place.

My current setup for each host, with standard vSwitches, is:

vSwitch0: 2 active NICs, with management and vMotion vmkernel ports.

vSwitch1: 2 active NICs, dedicated to a storage vmkernel port.

vSwitch2: 2 active NICs for virtual machine traffic.

I thought I would translate that to the Nexus 1000v as follows:

System-uplink1 with 2 NICs, carrying the management and vMotion vmk ports

System-uplink2 with 2 NICs for the storage vmk

System-uplink3 with 2 NICs for virtual machine traffic.

These three system uplinks are global, right? Or do I need three unique system uplinks for each host? I thought that making 3 global uplinks would make things a lot easier, because if I change something in an uplink, it will be pushed to all 6 hosts.

Also, I read somewhere that if I use 2 NICs per system uplink, then I need to set up a port-channel on our physical switches?

At the moment the VSM uses 3 different VLANs for management, control and packet; I want to migrate those 3 port groups from the standard switch to the N1Kv itself.

Also, when I migrated the management port to the N1Kv, the host complained that there was no management network redundancy, even though uplink1, where the mgmt port-profile is attached, has 2 NICs added to it.

So what do you guys think? Any other best practices would also be much appreciated.

Thanks in advance,

Yes, uplink port-profiles are global.

What you propose works, with one caveat: you cannot overlap a VLAN between these uplinks. So if your management uplink uses VLAN 100 and your VM data uplink also uses VLAN 100, that will cause problems.
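Before applying the three uplink port-profiles, it can help to check for exactly that overlap. A minimal sketch, assuming you have each uplink's allowed VLANs as a set (apart from VLAN 100, the VLAN IDs are hypothetical):

```python
from itertools import combinations


def overlapping_vlans(uplinks):
    """Return {(profile_a, profile_b): shared_vlans} for every overlapping pair."""
    clashes = {}
    # Sort by name so the pair order in the result is deterministic.
    for (name_a, vlans_a), (name_b, vlans_b) in combinations(sorted(uplinks.items()), 2):
        shared = vlans_a & vlans_b
        if shared:
            clashes[(name_a, name_b)] = shared
    return clashes


uplinks = {
    "system-uplink1": {100, 101},  # management + vMotion
    "system-uplink2": {200},       # storage
    "system-uplink3": {100, 300},  # VM data, wrongly also carrying VLAN 100
}
print(overlapping_vlans(uplinks))  # {('system-uplink1', 'system-uplink3'): {100}}
```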

Louis

-

Configuring DMZ and internal networks using Nexus 1000v

Hello people, this is my first post in the forums.

I am trying to build a design for my customer with the following configuration:

4 x ESXi hosts on DL380 G7s, each with 12 GB of RAM, 2 x 6-core X5650 CPUs and 8 x 1 Gb NICs

2 x LeftHand iSCSI SANs.

I have no control over the hardware components and several design features: they have been decided, I can't change them, and I can't add additional equipment. Here are my constraints:

(1) The solution will use shared Cisco network switches for internal traffic, external traffic and iSCSI.

(2) The solution uses a single cluster containing all four hosts.

(3) I have to install and configure a Nexus 1000v in the environment (something I'm not keen on, simply because I have never done it before). The customer was sold on the concept of a cheap, shared-hardware solution because they were told that using an N1Kv would solve all the security problems.

Before I learned that I would have to use an N1Kv, my solution looked like the attached JPG. It used four distributed virtual switches, and examples of how they were going to be configured are attached. Details and IP addresses are examples.

My questions are:

(1) What procedure should I use to set up the environment? Should I build the dvSwitches as described and then export them to the N1Kv?

(2) How should I document this solution? Normally my design document has a section explaining each switch, how it is configured, vital details, port groups, etc. But all of this goes away and is replaced with uplink port-profiles or something, doesn't it?

(3) Should I be aiming for a separate switch per dvSwitch, or can I collapse them into one and create different port groups? Is that secure, and is there a standard? Yes, I have read the white papers on DMZs and the Nexus 1000v.

(4) Is my configuration secure and efficient? Are there ways to improve it?

All other comments and suggestions are welcome.

Hello and welcome to the forums,

(1) What procedure should I use to set up the environment? Should I build the dvSwitches as described and then export them to the N1Kv?

The N1KV replaces the dvSwitches, but there is only ONE N1KV where there would be many dvSwitches, and the N1KV uses the same uplinks globally.

(2) How should I document this solution? Normally my design document has a section explaining each switch, how it is configured, vital details, port groups, etc. But all of this goes away and is replaced with uplink port-profiles or something, doesn't it?

If you use the N1KV, you uplink the pSwitches to the N1KV.

If you use dvSwitches/vSwitches, you uplink the pSwitches to the individual dvSwitch/vSwitch in use.

(3) Should I be aiming for a separate switch per dvSwitch, or can I collapse them into one and create different port groups? Is that secure, and is there a standard? Yes, I have read the white papers on DMZs and the Nexus 1000v.

There is no standard, and yes, in many cases it can be considered secure. If your existing physical network relies on VLANs and trusts the Layer 2 pSwitches, then you can do exactly the same thing in the virtual environment and be as secure as your physical environment.

However, if you need separation at the pSwitch layer, then you must keep separate vSwitches to maintain that same separation. Take a look at this post on the subject: http://www.virtualizationpractice.com/blog/?p=4284

(4) Is my configuration secure and efficient? Are there ways to improve it?

There are always ways to improve. I would start by looking into defense in depth at the vNIC and edge layers within your vNetwork.

Best regards

Edward L. Haletky, VMware Communities User Moderator, VMware vExpert 2009, 2010. Now available: 'VMware vSphere(TM) and Virtual Infrastructure Security' (http://www.astroarch.com/wiki/index.php/VMware_Virtual_Infrastructure_Security)

Also available: 'VMware ESX Server in the Enterprise' (http://www.astroarch.com/wiki/index.php/VMWare_ESX_Server_in_the_Enterprise)

Blogs: Virtualization Practice (http://www.virtualizationpractice.com) | Blue Gears (http://www.astroarch.com/blog) | TechTarget (http://itknowledgeexchange.techtarget.com/virtualization-pro/) | Network World (http://www.networkworld.com/community/haletky)

Podcast: Virtualization Security Round Table Podcast (http://www.astroarch.com/wiki/index.php/Virtualization_Security_Round_Table_Podcast) | Twitter: Texiwill (http://www.twitter.com/Texiwill)

-

iSCSI MPIO (Multipath) with Nexus 1000v

Has anyone out there gotten iSCSI MPIO working with the Nexus 1000v? I followed Cisco's guide to the best of my knowledge and tried a number of other configurations without success: vSphere always shows the same number of paths as targets.

The Cisco document reads as follows:

Before you begin the procedures in this section, you must know or do the following:

•You have already configured the host with a port channel that includes two or more physical NICs.

•You have already created the VMware kernel NICs to access the external SAN storage.

•A VMware kernel NIC can be pinned or assigned to a physical NIC.

•A physical NIC can have multiple VMware kernel NICs pinned or assigned to it.

What does 'a VMware kernel NIC can be pinned or assigned to a physical NIC' mean with regard to the Nexus 1000v? I know how to pin to a physical NIC with a standard vSwitch or vDS, but how does it work with the 1000v? The only thing related to "pin" I could find in the 1000v was port-channel subgroups. I tried creating a port-channel with manual subgroups, assigning a sub-group-id value to each uplink, then assigning a pinning id to my two VMkernel port-profiles (and directly to the vEthernet ports as well). But that doesn't seem to work for me.

I can ping both iSCSI VMkernel ports from the upstream switch and from inside the VSM, so I know Layer 3 connectivity is there. One strange thing, however, is that I see only one of the two associated VMkernel MAC addresses on the upstream switch. Both addresses show up inside the VSM.

What am I missing here?
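The pinning rules quoted from the Cisco document, and the path count the poster expected, can be modeled in a few lines. This is a sketch under stated assumptions: the vmk/vmnic names are hypothetical, and the path formula assumes every bound vmkernel port can reach every target portal, so each port adds one path per LUN per portal. Under that assumption, seeing exactly as many paths as targets suggests only one vmkernel port is effectively carrying iSCSI sessions.

```python
from collections import defaultdict


def vmks_per_uplink(vmk_to_uplink):
    """Group vmkernel ports by the physical NIC each one is pinned to.

    A dict can map each vmk to only one uplink; several vmks may share an uplink,
    matching the two pinning rules in the Cisco document.
    """
    grouped = defaultdict(list)
    for vmk, uplink in sorted(vmk_to_uplink.items()):
        grouped[uplink].append(vmk)
    return dict(grouped)


def uses_separate_uplinks(vmk_to_uplink):
    """For MPIO redundancy, each vmk is usually pinned to a different physical NIC."""
    return len(set(vmk_to_uplink.values())) == len(vmk_to_uplink)


def expected_paths_per_lun(bound_vmks, target_portals):
    """Assumes every bound vmk reaches every portal: one path per (vmk, portal) pair."""
    return bound_vmks * target_portals


pinning = {"vmk1": "vmnic3", "vmk2": "vmnic5"}
print(vmks_per_uplink(pinning))        # {'vmnic3': ['vmk1'], 'vmnic5': ['vmk2']}
print(uses_separate_uplinks(pinning))  # True
print(expected_paths_per_lun(2, 1))    # 2
```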

Just to close the loop in case someone stumbles across this thread.

It is in fact a bug in the Cisco Nexus 1000v. The bug only affects ESX hosts that have been patched to 4.0U2 (and now 4.1). The short-term workaround is to revert to 4.0U1. A medium-term fix will be integrated into a maintenance release of the Nexus 1000V.

Our code implementation for the new iSCSI multipathing was incorrect but tolerated in 4.0U1. 4.0U2 no longer allows our incorrect implementation.

For iSCSI multipathing with the N1KV, stay on 4.0U1 until we have a maintenance release for the Nexus 1000V.

-

Hello

I tried to install the Nexus 1000V using the CLI on a remote ESXi 4.0U1 server.

I used the following command:

C:\Program Files (x86)\VMware\VMware vSphere CLI\bin>vihostupdate.pl -i -b "c:\Program Files (x86)\VMware\VMware vSphere CLI\Cisco-vem-v100-4.0.4.1.1.27-0.4.2.zip" --server SCSC-sesx002

Enter username: root

Enter password:

Please wait patch installation is in progress ...

There was an error resolving dependencies.

Requested VIB cross_cisco-vem-v100-esx_4.0.4.1.1.27-0.4.2 conflicts with the host

No VIB provides 'vmknexus1kvapi-0-4' (required by cross_cisco-vem-v100-esx_4.0.4.1.1.27-0.4.2)

As you can see, there is an error.

Is there a mismatch between the Nexus version and ESXi 4?

I am using ESXi version 4.0U1 (build 208167) in evaluation mode.

Does anyone have an idea why this happens?

Thank you

Regards

Jürgen

Jurgen,

You are using the wrong VEM module for the ESX kernel patch level you are running. Take a look at the VEM module compatibility link.

For ESX kernel build 208167, you should install VIB 4.0.4.1.2.0.80-1.9.179.

It also seems that you are trying to install a 1.1 VEM module. I strongly suggest downloading the N1KV package from cisco.com and getting version 1.2 of the N1KV.
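Checking the bundle against the compatibility matrix before running vihostupdate.pl avoids this kind of dependency error. A sketch of that lookup in Python; the table holds only the single pairing given in this reply (ESX build 208167 -> VIB 4.0.4.1.2.0.80-1.9.179), and any other entry would have to come from Cisco's published matrix:

```python
# Only the pairing quoted in this thread; extend from Cisco's published matrix.
VEM_FOR_ESX_BUILD = {
    208167: "4.0.4.1.2.0.80-1.9.179",
}


def vem_for_build(esx_build):
    """Return the VEM VIB version listed for an ESX build, or raise LookupError."""
    try:
        return VEM_FOR_ESX_BUILD[esx_build]
    except KeyError:
        raise LookupError(f"no VEM listed for ESX build {esx_build}") from None


def is_matching_vem(esx_build, vib_version):
    """True only if the VIB is the one listed for this build."""
    return VEM_FOR_ESX_BUILD.get(esx_build) == vib_version


print(vem_for_build(208167))                          # 4.0.4.1.2.0.80-1.9.179
print(is_matching_vem(208167, "4.0.4.1.1.27-0.4.2"))  # False: the bundle from the question
```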

Louis

-

What does the Nexus 1000v version number mean?

Can anybody explain the long Nexus 1000v version number, for example 5.2(1)SV3(1.15)?

And what does SV mean in the version number?

Thank you

SV is the abbreviation of "Switched VMware".

See below for a detailed explanation:

http://www.Cisco.com/c/en/us/about/Security-Center/iOS-NX-OS-reference-g...

Cisco NX-OS Software Numbering

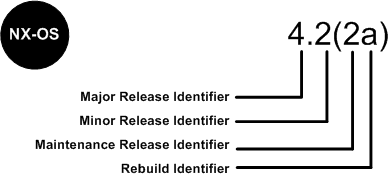

Software Cisco NX - OS is a data-center-class operating system that provides a high thanks to a modular design availability. The Cisco NX - OS software is software-based Cisco MDS 9000 SAN - OS and it supports the Cisco Nexus series switch Cisco MDS 9000 series multilayer. The Cisco NX - OS software contains a boot kick image and an image of the system, the two images contain an identifier of major version, minor version identifier and a maintenance release identifier, and they may also contain an identifier of reconstruction, which can also be referred to as a Patch to support. (See Figure 6).

Software NX - OS Cisco Nexus 7000 Series and MDS 9000 series switches use the numbering scheme that is illustrated in Figure 6.

Figure 6. Switches of the series Cisco IOS dial for Cisco Nexus 7000 and MDS 9000 NX - OS

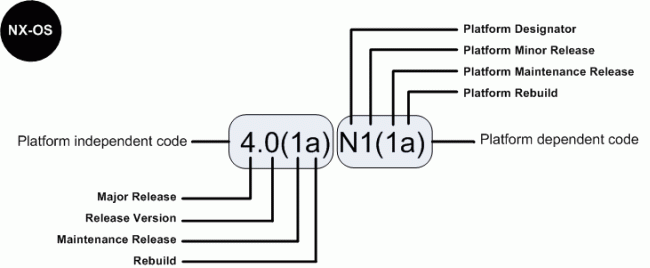

For the other members of the family, software Cisco NX - OS uses a combination of systems independent of the platform and is dependent on the platform as shown in Figure 6a.

Figure 6 a. software Cisco IOS NX - OS numbering for the link between 4000 and 5000 Series and Nexus 1000 switches virtual

The indicator of the platform is N for switches of the 5000 series Nexus, E for the switches of the series 4000 Nexus and S for the Nexus 1000 series switches. In addition, Nexus 1000 virtual switch uses a designation of two letters platform where the second letter indicates the hypervisor vendor that the virtual switch is compatible with, for example V for VMware. Features there are patches in the platform-independent code and features are present in the version of the platform-dependent Figure 6 a above, there is place of bugs in the version of the software Cisco NX - OS 4.0(1a) are present in the version 4.0(1a) N1(1a).
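Applied to the example from the question, 5.2(1)SV3(1.15) reads as NX-OS 5.2(1) plus the platform-dependent part SV3(1.15), where S is the Nexus 1000 platform and V is VMware. A small Python parser makes the split explicit; the field names are illustrative labels, and it only handles the platform-dependent form shown in Figure 6a, not the Nexus 7000/MDS scheme:

```python
import re
from typing import NamedTuple


class NxosVersion(NamedTuple):
    major: int
    minor: int
    maintenance: str           # e.g. "1" or "1a"
    platform: str              # e.g. "SV" = Nexus 1000 (S) for VMware (V), "N" = Nexus 5000
    platform_release: int      # e.g. 3 in "SV3"
    platform_maintenance: str  # e.g. "1.15"


_VERSION = re.compile(
    r"(?P<major>\d+)\.(?P<minor>\d+)\((?P<maint>[0-9a-z]+)\)"
    r"(?P<platform>[A-Z]{1,2})(?P<prel>\d+)\((?P<pmaint>[0-9a-z.]+)\)"
)

# Hypervisor letter for two-letter Nexus 1000 designators; only V is given in the text.
HYPERVISOR = {"V": "VMware"}


def parse_nxos_version(text):
    """Parse a platform-dependent NX-OS release string such as 5.2(1)SV3(1.15)."""
    compact = re.sub(r"\s+", "", text)  # also accept "5.2 (1) SV3 (1.15)"
    m = _VERSION.fullmatch(compact)
    if m is None:
        raise ValueError(f"unrecognized NX-OS version: {text!r}")
    return NxosVersion(int(m["major"]), int(m["minor"]), m["maint"],
                       m["platform"], int(m["prel"]), m["pmaint"])


v = parse_nxos_version("5.2 (1) SV3 (1.15)")
print(v)
print(HYPERVISOR.get(v.platform[1:], "unknown"))  # VMware
```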

-

Nexus 1000V loop prevention

Hello

I wonder whether there is a mechanism I can use to protect the network against L2 loops caused on the virtual server side in a VMware environment with the Nexus 1000V.

I know the Nexus 1000V can prevent loops on the external links, but I can find no information on whether there are features that prevent loops caused by a bridge set up inside the OS of a VMware virtual server.

Thank you in advance for an answer.

Regards

Lukas

Hi Lukas.

To avoid loops, the N1KV does not pass traffic between physical NICs, and it also silently drops traffic between vNICs that is bridged by the guest operating system.

http://www.Cisco.com/en/us/prod/collateral/switches/ps9441/ps9902/guide_c07-556626.html#wp9000156

No explicit configuration is needed on the N1KV.
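The first rule can be written as a forwarding check. This is a simplified model, not the VEM's actual implementation: it only encodes "never switch a frame from one physical uplink to another", and it does not attempt to model how the VEM detects bridging inside a guest OS, which the reply does not describe.

```python
from enum import Enum
from itertools import product


class PortKind(Enum):
    UPLINK = "uplink"  # physical NIC attached via an Ethernet port-profile
    VETH = "veth"      # VM vNIC attached via a vEthernet port-profile


def may_forward(ingress, egress):
    """Uplink-to-uplink forwarding is never allowed; other pairs are left to normal switching."""
    return not (ingress is PortKind.UPLINK and egress is PortKind.UPLINK)


for src, dst in product(PortKind, repeat=2):
    print(f"{src.value:>6} -> {dst.value:<6} {'forward' if may_forward(src, dst) else 'drop'}")
```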

Padma

-

Cisco Nexus 1000V Series Virtual Supervisor Module placement in the Cisco Unified Computing System

Hi all

I read a Cisco article entitled "Best Practices in Deploying Cisco Nexus 1000V Series Switches on Cisco UCS B and C Series Cisco UCS Manager Servers" (http://www.cisco.com/en/US/prod/collateral/switches/ps9441/ps9902/white_paper_c11-558242.html). It has a lot of excellent information, but the section that intrigues me has to do with the placement of the VSM in the UCS. The article lists 4 options in order of preference, but does not provide details or the reasoning behind the recommendations. The options are the following:

============================================================================================================================================================

Option 1: VSM external to the Cisco Unified Computing System on the Cisco Nexus 1010

In this scenario, management operations for the virtual environment are carried out exactly as in existing non-virtualized environments. With multiple VSM instances on the Nexus 1010, multiple vCenter data centers can be supported.

============================================================================================================================================================

Option 2: VSM outside the Cisco Unified Computing System on the Cisco Nexus 1000V Series VEM

This model allows centralized management of the virtual infrastructure and has proven to be very stable...

============================================================================================================================================================

Option 3: VSM outside the Cisco Unified Computing System on the VMware vSwitch

This model isolates the managed devices, and it migrates to the appliance model of the Cisco Nexus 1010 Virtual Services Appliance. A possible concern here is the management and operational model of the network links between the VSM and the VEM devices.

============================================================================================================================================================

Option 4: VSM inside the Cisco Unified Computing System on the VMware vSwitch

This model has also proven stable in test deployments. A possible concern here is the management and operational model of the network links between the VSM and the VEM devices, and having duplicate switching infrastructures in your Cisco Unified Computing System.

============================================================================================================================================================

As a beginner with both the Nexus 1000V and UCS, I hope someone can help me understand how these options are configured and, equally important, provide a more detailed explanation of each option and the reasoning behind the preferences (pros and cons).

Thank you

Pradeep

No, they are different products. vASA will be a virtual version of our ASA device.

ASA is a full-fledged firewall.
