Question about expdp and impdp for a number of tables in a schema (11.2.0.3)

Hello Experts-

I have 145 tables whose names follow a certain pattern. Is it possible to export them and import the same tables into another schema?

- Should I use REMAP_SCHEMA for this purpose?

- Must I list all 145 tables, or can I use '%' as a wildcard, since all the tables I want to export and import start with the same word (RECORDS_)?

Thanks in advance

Hi 885068,

I have 145 tables whose names follow a certain pattern. Is it possible to export them and import the same tables into another schema?

Yes, it is possible.

Should I use REMAP_SCHEMA for this purpose?

Yes, that is the purpose of REMAP_SCHEMA: it imports metadata and data from one schema into another.

Must I list all 145 tables, or can I use '%' as a wildcard, since all the tables I want to export and import start with the same word (RECORDS_)?

It's always better to have total control over what you're doing. I prefer to list all the tables. Of course that is not easy, but you can use a SQL statement to generate the TABLES= parameters you need, like the following:

SELECT 'TABLES='||table_name FROM all_tables WHERE owner = '' and table_name like 'RECORDS_%' ;

For the import, you do not need to specify which tables to import, because the dump contains only the tables you exported, but it is still advisable to specify them.

If the tables in the target schema must be created with names different from the originals, you must use the parameter REMAP_TABLE =

HTH, Juan M
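
The "list every table explicitly" approach above can be sketched in Python as well. This is only an illustration: the table names are hypothetical stand-ins for the result of a query on ALL_TABLES, and the helper name `tables_parameter` is made up for this example. Note that the prefix test treats the underscore literally, whereas in a SQL LIKE pattern "_" matches any single character.

```python
def tables_parameter(table_names, prefix="RECORDS_"):
    """Return a Data Pump TABLES= line for the names that start with prefix."""
    # str.startswith treats "_" literally, unlike SQL LIKE where "_" is a wildcard.
    selected = sorted(name for name in table_names if name.startswith(prefix))
    if not selected:
        raise ValueError(f"no table name starts with {prefix!r}")
    # A comma-separated list after TABLES= is the form used on the command line.
    return "TABLES=" + ",".join(selected)


# Hypothetical names, standing in for the rows returned from ALL_TABLES.
names = ["RECORDS_2011", "RECORDS_2012", "RECORDSX_OLD", "AUDIT_LOG"]
print(tables_parameter(names))
```

The resulting line could be pasted into a parameter file for expdp. With 145 names the line gets long, so check how your release handles long parameter values before relying on it.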

Tags: Database

Similar Questions

-

Hey guys

I'm fairly new to using expdp and impdp and I was wondering if anyone knew a solution to my problem. I am currently using expdp in parallel mode, which creates several DMP files. Now, when I use impdp, I use the following code:

impdp stglsr/stglsr@tintdb tables=CTDA0FIL_CDC content=ALL directory=LSR_DQA_REPORTS dumpfile=CTDA0FIL_CDC_01.dmp,CTDA0FIL_CDC_02.dmp logfile=CTDA0FIL_CDC_IMP.log

As you can see, I list each of the files to be imported. Since this will be run by someone else and I can't guarantee how many files the export will produce, is it possible to import all the files belonging to a particular export rather than specifying each file individually?

Below is an example of my export:

expdp stglsr/stglsr@tintdb parfile = ctca0fil_export.par

Parameter file:

TABLES = CTCA0FIL_CDC

DUMPFILE=CTCA0FIL_CDC_%U.dmp

CONTENT = ALL

DIRECTORY = LSR_DQA_REPORTS

LOGFILE = CTCA0FIL_CDC.log

PARALLEL = 4

QUERY = CTCA0FIL_CDC:"where ETL_STATUS = 'E'"

JOB_NAME = CTCA0FIL_EXP_JOB

If anyone can help I would be very grateful.

Thank you

Simon

Here's the import statement I'm now using:

impdp stglsr/stglsr@tintdb tables=CTDA0FIL_CDC content=ALL directory=LSR_DQA_REPORTS dumpfile=CTDA0FIL_CDC_U%.dmp logfile=CTDA0FIL_CDC_IMP.log

But when I start using it, it says:

ORA-39001: invalid argument value

ORA-39000: bad dump file specification

ORA-39124: dump file name 'CTDA0FIL_CDC_U%.dmp' contains invalid substitution variable

Thank you

Simon

It's %U, not U%.
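
To make the difference between %U and U% concrete, here is a small Python sketch that mimics how a %U template maps to two-digit file names (01, 02, ...). It is not Oracle's implementation; the function name `expand_dumpfile` is hypothetical, and the sketch only knows about the %U variable discussed in this thread.

```python
import re


def expand_dumpfile(template, count):
    """List the file names a %U dump file template would cover for `count` files."""
    # Reject any "%" that is not followed by "U" (for example the "U%" typo).
    if re.search(r"%(?!U)", template) or "%U" not in template:
        raise ValueError(f"invalid substitution variable in {template!r}")
    if not 1 <= count <= 99:
        raise ValueError("%U covers two-digit numbers from 01 to 99")
    return [template.replace("%U", f"{i:02d}") for i in range(1, count + 1)]


print(expand_dumpfile("CTDA0FIL_CDC_%U.dmp", 4))
```

With the corrected template the import no longer needs to know how many files the parallel export produced; the same `dumpfile=CTDA0FIL_CDC_%U.dmp` value covers all of them.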

-

Full expdp and impdp: one db to another

Hello! Nice day!

I would like to ask for any help with my problem.

I would like to create a full database export and import it into a different database. The two databases are on separate computers.

I tried to use the expdp and impdp tools for this task. However, I ran into some problems during the import.

Here are the details of my problem:

When I run impdp on the dump file, the data and metadata do not seem to be imported.

Here are the exact commands that I used for the export and the import:

Export (Server #1)

expdp user01/*** directory=ora3_dir full=y dumpfile=db_full%U.dmp filesize=2G parallel=4 logfile=db_full.log

Import (Server #2)

impdp user01/*** directory=ora3_dir dumpfile=db_full%U.dmp full=y logfile=db_full.log sqlfile=db_full.sql estimate=blocks parallel=4

Here is the log that was generated during the impdp runs:

;;;

Import: Release 10.2.0.1.0 - 64 bit Production on Friday, 27 November 2009 17:41:07

Copyright (c) 2003, 2005, Oracle. All rights reserved.

;;;

Connected to: Oracle Database 10g Enterprise Edition Release 10.2.0.1.0 - 64 bit Production

With partitioning, OLAP and Data Mining options

Master table "PGDS"."SYS_SQL_FILE_FULL_01" successfully loaded/unloaded
Starting "PGDS"."SYS_SQL_FILE_FULL_01":  PGDS/******** directory=ora3_dir dumpfile=ssmpdb_full%U.dmp full=y logfile=ssmpdb_full.log sqlfile=ssmpdb_full.sql
Processing object type DATABASE_EXPORT/TABLESPACE
Processing object type DATABASE_EXPORT/PROFILE
Processing object type DATABASE_EXPORT/SYS_USER/USER
Processing object type DATABASE_EXPORT/SCHEMA/USER
Processing object type DATABASE_EXPORT/ROLE
Processing object type DATABASE_EXPORT/GRANT/SYSTEM_GRANT/PROC_SYSTEM_GRANT
Processing object type DATABASE_EXPORT/SCHEMA/GRANT/SYSTEM_GRANT
Processing object type DATABASE_EXPORT/SCHEMA/ROLE_GRANT
Processing object type DATABASE_EXPORT/SCHEMA/DEFAULT_ROLE
Processing object type DATABASE_EXPORT/SCHEMA/TABLESPACE_QUOTA
Processing object type DATABASE_EXPORT/RESOURCE_COST
Processing object type DATABASE_EXPORT/SCHEMA/DB_LINK
Processing object type DATABASE_EXPORT/TRUSTED_DB_LINK
Processing object type DATABASE_EXPORT/SCHEMA/SEQUENCE/SEQUENCE
Processing object type DATABASE_EXPORT/SCHEMA/SEQUENCE/GRANT/OWNER_GRANT/OBJECT_GRANT
Processing object type DATABASE_EXPORT/DIRECTORY/DIRECTORY
Processing object type DATABASE_EXPORT/DIRECTORY/GRANT/OWNER_GRANT/OBJECT_GRANT
Processing object type DATABASE_EXPORT/DIRECTORY/GRANT/CROSS_SCHEMA/OBJECT_GRANT
Processing object type DATABASE_EXPORT/CONTEXT
Processing object type DATABASE_EXPORT/SCHEMA/PUBLIC_SYNONYM/SYNONYM
Processing object type DATABASE_EXPORT/SCHEMA/SYNONYM
Processing object type DATABASE_EXPORT/SCHEMA/TYPE/TYPE_SPEC
Processing object type DATABASE_EXPORT/SYSTEM_PROCOBJACT/PRE_SYSTEM_ACTIONS/PROCACT_SYSTEM
Processing object type DATABASE_EXPORT/SYSTEM_PROCOBJACT/POST_SYSTEM_ACTIONS/PROCACT_SYSTEM
Processing object type DATABASE_EXPORT/SCHEMA/PROCACT_SCHEMA
Processing object type DATABASE_EXPORT/SCHEMA/TABLE/TABLE
Processing object type DATABASE_EXPORT/SCHEMA/TABLE/PRE_TABLE_ACTION
Processing object type DATABASE_EXPORT/SCHEMA/TABLE/GRANT/OWNER_GRANT/OBJECT_GRANT
Processing object type DATABASE_EXPORT/SCHEMA/TABLE/GRANT/CROSS_SCHEMA/OBJECT_GRANT
Processing object type DATABASE_EXPORT/SCHEMA/TABLE/INDEX/INDEX
Processing object type DATABASE_EXPORT/SCHEMA/TABLE/CONSTRAINT
Processing object type DATABASE_EXPORT/SCHEMA/TABLE/INDEX/STATISTICS/INDEX_STATISTICS
Processing object type DATABASE_EXPORT/SCHEMA/TABLE/COMMENT
Processing object type DATABASE_EXPORT/SCHEMA/PACKAGE/PACKAGE_SPEC
Processing object type DATABASE_EXPORT/SCHEMA/PACKAGE/GRANT/OWNER_GRANT/OBJECT_GRANT
Processing object type DATABASE_EXPORT/SCHEMA/FUNCTION/FUNCTION
Processing object type DATABASE_EXPORT/SCHEMA/FUNCTION/GRANT/OWNER_GRANT/OBJECT_GRANT
Processing object type DATABASE_EXPORT/SCHEMA/PROCEDURE/PROCEDURE
Processing object type DATABASE_EXPORT/SCHEMA/PROCEDURE/GRANT/OWNER_GRANT/OBJECT_GRANT
Processing object type DATABASE_EXPORT/SCHEMA/FUNCTION/ALTER_FUNCTION
Processing object type DATABASE_EXPORT/SCHEMA/PROCEDURE/ALTER_PROCEDURE
Processing object type DATABASE_EXPORT/SCHEMA/VIEW/VIEW
Processing object type DATABASE_EXPORT/SCHEMA/VIEW/GRANT/OWNER_GRANT/OBJECT_GRANT
Processing object type DATABASE_EXPORT/SCHEMA/VIEW/GRANT/CROSS_SCHEMA/OBJECT_GRANT
Processing object type DATABASE_EXPORT/SCHEMA/VIEW/COMMENT
Processing object type DATABASE_EXPORT/SCHEMA/TABLE/CONSTRAINT/REF_CONSTRAINT
Processing object type DATABASE_EXPORT/SCHEMA/PACKAGE_BODIES/PACKAGE/PACKAGE_BODY
Processing object type DATABASE_EXPORT/SCHEMA/TYPE/TYPE_BODY
Processing object type DATABASE_EXPORT/SCHEMA/TABLE/INDEX/FUNCTIONAL_AND_BITMAP/INDEX
Processing object type DATABASE_EXPORT/SCHEMA/TABLE/INDEX/STATISTICS/FUNCTIONAL_AND_BITMAP/INDEX_STATISTICS
Processing object type DATABASE_EXPORT/SCHEMA/TABLE/STATISTICS/TABLE_STATISTICS
Processing object type DATABASE_EXPORT/SCHEMA/TABLE/POST_TABLE_ACTION
Processing object type DATABASE_EXPORT/SCHEMA/TABLE/TRIGGER
Processing object type DATABASE_EXPORT/SCHEMA/VIEW/TRIGGER
Processing object type DATABASE_EXPORT/SCHEMA/JOB
Processing object type DATABASE_EXPORT/SCHEMA/DIMENSION
Processing object type DATABASE_EXPORT/SCHEMA/TABLE/POST_INSTANCE/PROCACT_INSTANCE
Processing object type DATABASE_EXPORT/SCHEMA/TABLE/POST_INSTANCE/PROCDEPOBJ
Processing object type DATABASE_EXPORT/SCHEMA/POST_SCHEMA/PROCOBJ
Processing object type DATABASE_EXPORT/SCHEMA/POST_SCHEMA/PROCACT_SCHEMA
Job "PGDS"."SYS_SQL_FILE_FULL_01" successfully completed at 17:43:09

Thank you in advance.

The good news is that your dump file seems fine. It has metadata and data.

I looked through your impdp command and found your problem. You have added the sqlfile parameter. This tells Data Pump to write a file that can be run from SQL*Plus. It does not actually create the objects. It also excludes data, because data could get pretty ugly in a SQL file.

Here's your impdp command:

impdp user01/*** directory=ora3_dir dumpfile=db_full%U.dmp full=y logfile=db_full.log sqlfile=db_full.sql...

Just remove the

sqlfile=db_full.sql

Now that your first job has run, you have a file named db_full.sql that contains all the CREATE statements. After you remove the sqlfile parameter, your import will work.

Dean
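
The two kinds of run described above (a DDL preview with sqlfile, and a real load without it) can be sketched as follows. This is only an illustration in Python: the helper `impdp_args` is hypothetical, the parameter values are the ones quoted in this thread, and the password is left out on the assumption that impdp prompts for it.

```python
def impdp_args(params, preview_only):
    """Build an impdp argument list; keep sqlfile only for a DDL preview run."""
    args = ["impdp", "user01"]  # user name from the thread; password is prompted for
    for key, value in params.items():
        if key == "sqlfile" and not preview_only:
            continue  # without sqlfile, impdp performs the actual import
        args.append(f"{key}={value}")
    return args


params = {
    "directory": "ora3_dir",
    "dumpfile": "db_full%U.dmp",
    "full": "y",
    "logfile": "db_full.log",
    "sqlfile": "db_full.sql",
}

print(" ".join(impdp_args(params, preview_only=True)))   # writes db_full.sql only
print(" ".join(impdp_args(params, preview_only=False)))  # loads metadata and data
```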

-

About a month ago I posted a question about iMovie and not being able to "share". I solved the problem thanks, so no more emails!

Hi Michael,

If you want to stop receiving email notifications for the thread you created, I suggest that you follow the steps below:

Once signed in to the Apple Support Communities, go to your mini profile and select Manage Subscriptions.

Content

To manage the content you are currently subscribed to and to change your preferences, select Content.

Select next to see what content you are currently following. Note that replying to any thread automatically subscribes you to that thread.

You can choose to end your subscription to a thread.

Learn how to manage your subscriptions

Take care.

-

Question about Business Services and Proxy Services

Hi friends,

I have a question about business services and proxy services: I need to find the time it takes to call an operation on a business service endpoint from a proxy service. How can I do this in Oracle Service Bus (OSB)? Please help quickly.

If your question is only about performance analysis,

try enabling 'Monitoring' on the 'Operational Settings' tab of the proxy service/business service.

For proxy services, if you enable it at the 'Action' level, you get a lot of information about each individual action in a proxy.

See http://www.javamonamour.org/2011/06/osb-profiling-your-proxy-service.html

-

need advice on expdp and impdp

I have an Oracle 10g database on a Windows server.

I installed a new server with Oracle 11g on GNU/Linux.

Now I would like to clone the data from the old server to the new server.

I think expdp and impdp is the best option.

If so, what is the maximum amount of data I can handle?

Is it better to do it schema by schema or for the whole database?

Help me, guys.

If you have SQL Developer, on the Tools menu, there is an option to copy the database. It will not be an easy one.

-

Not a Dba, try a simple operation with expdp and impdp

Hi all

My Oracle database: 10G

I'm not a DBA, but I'm stuck on what looks like a simple operation. Here is the context.

I exported 2 tables from the HR schema using expdp; here is the command:

C:\> expdp hr/hr@ORCL tables=EMPLOYEES,DEPARTMENTS directory=ORACLE_BKP dumpfile=hr_e_d.dmp logfile=hr_e_d.log

Up to this point there is no problem. Now I am trying to use this dump file, hr_e_d.dmp, to put these two tables into a different schema, pkg_utils, and here is the command that produces the error:

C:\> impdp pkg_utils/pkg_utils@ORCL schemas=HR directory=ORACLE_BKP1 dumpfile=hr_e_d.dmp

Import: Release 10.2.0.1.0 - Production on Sunday, September 8, 2013 14:51:04

Copyright (c) 2003, 2005, Oracle. All rights reserved.

Connected to: Oracle Database 10g Enterprise Edition Release 10.2.0.1.0 - Production

With the Partitioning, OLAP and Data Mining options

ORA-31655: no data or metadata objects selected for job

ORA-39039: Schema expression "('HR')" contains no valid schemas.

Master table "PKG_UTILS"."SYS_IMPORT_SCHEMA_01" successfully loaded/unloaded

Starting "PKG_UTILS"."SYS_IMPORT_SCHEMA_01":  pkg_utils/********@ORCL schemas=HR directory=ORACLE_BKP1 dumpfile=hr_e_d.dmp

Processing object type TABLE_EXPORT/TABLE/TABLE_DATA

Job "PKG_UTILS"."SYS_IMPORT_SCHEMA_01" successfully completed at 14:51:05

PS: the requirement is only to import the EMPLOYEES and DEPARTMENTS tables exported from the HR schema into the pkg_utils schema.

Regards

Rahul

C:\> impdp pkg_utils/pkg_utils@ORCL schemas=HR directory=ORACLE_BKP1 dumpfile=hr_e_d.dmp

Instead of schemas=HR, try remap_schema=hr:pkg_utils

See Data Pump Import
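
As a rough sketch of what the suggested import might look like, the Python snippet below assembles the impdp arguments with remap_schema in place of schemas. The helper `remap_import_args` is hypothetical; the directory, dump file, schema and table names are taken from the thread. Whether the importing account has the privileges needed for the remap is outside what this sketch checks.

```python
def remap_import_args(user, directory, dumpfile, source_schema, target_schema, tables):
    """Build impdp arguments that load tables from one schema into another."""
    # Schema-qualified table names select the objects as they were exported.
    qualified = ",".join(source_schema + "." + table for table in tables)
    return [
        "impdp", user,  # password omitted; impdp prompts for it
        f"directory={directory}",
        f"dumpfile={dumpfile}",
        f"tables={qualified}",
        f"remap_schema={source_schema}:{target_schema}",
    ]


args = remap_import_args(
    "pkg_utils", "ORACLE_BKP1", "hr_e_d.dmp",
    "HR", "PKG_UTILS", ["EMPLOYEES", "DEPARTMENTS"],
)
print(" ".join(args))
```

The original error came from schemas=HR: the dump was taken in table mode, so there is no HR schema object in it to select, only the two tables.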

-

Windows 7 startup problems on a Mac following an Office 2010 installation

It's a long story, cut as short as I can. I was mis-sold a Windows 8 upgrade disc rather than a full version, along with Norton and Office 2010. My goal is to set up Windows on my Mac for a course I'm taking. After finding out that I need Windows 7, I bought it online. I created an 80 GB partition on my Mac and loaded Windows 7, then Norton. Before I loaded Office, I was prompted to run the Apple software update in Windows 7. Note that before I installed Norton, I had loaded the Boot Camp Mac utilities according to the instructions, so I followed everything as I should. The Apple update prompt was for QuickTime, iTunes and AirPort; I checked them all and then updated. Then I loaded Office 2010 and followed the whole process from the Microsoft site. In the last part of the installation, the installation failed due to an error with iTunes hanging. I tried again and again, and I repaired the iTunes update through the add/remove programs section of Windows. Then I got different errors. I decided to remove the whole partition without uninstalling all the programs. Now, when I get to the Windows loading screen, booting fails. I get various error messages whenever I try to start the process, such as bad pool header, fatal error and so on. Can anyone help? What is the damage?

Hello

Thanks for posting your query in Microsoft Community.

Because the question is limited to Windows 7 on your Mac with Boot Camp, I suggest you contact Apple Boot Camp support for assistance.

http://www.Apple.com/support/Bootcamp/

I hope it helps. If you have any questions about Windows in the future, please let us know. We will be happy to help you.

-

Question about snapshots and virtual machines

Hi all

I have a small question about snapshots. I have used VMware for about 6 months, and I have read about snapshots and how they work, but I still have a question to make sure that I understand them and that they will work the way I think they do.

I have a Windows 2003 server, which is a virtual machine. I have an application that uses an MSDE database. I want to upgrade the database to MS SQL 2005, but to do this I need to back up the MSDE database, uninstall MSDE, and then install SQL 2005 and move the database over. This all happens on the same VM.

What I was thinking, if I understand snapshots correctly, is to take a snapshot of the virtual machine before doing anything. Then I do my upgrade steps and see if everything works. If it's all messed up, I should be able to revert to my snapshot and everything should be the way it was. Is that right? One of the main reasons I moved the server to a VM was this upgrade.

After reading the documentation on snapshots, that's how I understood it. Pretty much any change could be reversed with snapshots. I just wish they had put a few examples in the PDF file. Also, would it be the same for any type of OS upgrade? Could I snapshot a Windows 2000 Server and then do an in-place upgrade to Windows 2003? I've cloned a VM to do this, but was curious whether snapshots would be just as easy.

Thanks for your help.

Hello.

What I was thinking, if I understand snapshots correctly, is to take a snapshot of the virtual machine before doing anything. Then I do my upgrade steps and see if everything works. If it's all messed up, I should be able to revert to my snapshot and everything should be the way it was. Is that right?

That is right.

After reading the documentation on snapshots, that's how I understood it. Pretty much any change could be reversed with snapshots. I just wish they had put a few examples in the PDF file. Also, would it be the same for any type of OS upgrade? Could I snapshot a Windows 2000 Server and then do an in-place upgrade to Windows 2003? I've cloned a VM to do this, but was curious whether snapshots would be just as easy.

Exactly the same thing applies to OS upgrades.

Perhaps the most important thing with snapshots is to keep an eye on them and not let them sit too long or grow too big. The use cases you have described are very good uses for snapshots. Just make sure you have a solid plan with a set schedule, so that you can revert or commit the changes before the snapshot becomes too large.

Good luck!

-

Question about AUTOALLOCATE and UNIFORM in the tablespace management

Good afternoon

I'm unclear as to if a UNIFORM extent allocation means that the data file must be manually extended or not.

Documentation:

>

The following statement creates a locally managed tablespace named lmtbsb and specifies AUTOALLOCATE:

CREATE TABLESPACE lmtbsb DATAFILE '/u02/oracle/data/lmtbsb01.dbf' SIZE 50M

EXTENT MANAGEMENT LOCAL AUTOALLOCATE;

AUTOALLOCATE causes the tablespace to be system managed with a minimum extent size of 64K.

The alternative to AUTOALLOCATE is UNIFORM, which specifies that the tablespace is managed with extents of uniform size. You can specify that size in the SIZE clause of UNIFORM. If you omit SIZE, the default size is 1M.

The following example creates a tablespace with uniform 128K extents. (In a database with 2K blocks, each extent would be equivalent to 64 database blocks.) Each 128K extent is represented by a bit in the extent bitmap for this file.

CREATE TABLESPACE lmtbsb DATAFILE '/u02/oracle/data/lmtbsb01.dbf' SIZE 50M

EXTENT MANAGEMENT LOCAL UNIFORM SIZE 128K;

>

It is clear that the UNIFORM clause controls the size of the extents that will be allocated, but it doesn't really say whether they will be allocated manually or automatically. In other words, I'm not clear as to whether the data file in the second case will be automatically extended.

In my test environment, I issued the command:

SQL> create tablespace largetabs datafile 'E:\Oracle\app\Private\oradata\dbca\largetabs.dbf' size 100M extent management local uniform size 16M;

When I then looked in EM at the tablespace I had created, there was no check mark in the box "Automatically extend datafile when full", which led to the doubt I have now.

My question is: when extent allocation is UNIFORM, does this mean that space must be allocated manually, or can the datafile be extended automatically? If it can be extended automatically, I would appreciate an example of a create tablespace command that achieves this.

Thank you for your help,

John.

John,

There is no relationship between datafile autoextension and the extent management method (AUTOALLOCATE or UNIFORM). See below:

SQL*Plus: Release 10.2.0.1.0 - Production on Sun Sep 12 22:47:15 2010

Copyright (c) 1982, 2005, Oracle. All rights reserved.

Connected to:
Oracle Database 10g Enterprise Edition Release 10.2.0.1.0 - Production
With the Partitioning, OLAP and Data Mining options

SQL> select name from v$datafile;

NAME
--------------------------------------------------------------------------------
D:\ORACLE\PRODUCT\10.2.0\ORADATA\TEST\SYSTEM01.DBF
D:\ORACLE\PRODUCT\10.2.0\ORADATA\TEST\UNDOTBS01.DBF
D:\ORACLE\PRODUCT\10.2.0\ORADATA\TEST\SYSAUX01.DBF
D:\ORACLE\PRODUCT\10.2.0\ORADATA\TEST\USERS01.DBF

SQL> create tablespace largetabs datafile 'D:\ORACLE\PRODUCT\10.2.0\ORADATA\TEST\largetabs.dbf' size 100M extent management local uniform size 16M;

Tablespace created.

SQL> desc dba_data_files
 Name                                      Null?    Type
 ----------------------------------------- -------- ----------------------------
 FILE_NAME                                          VARCHAR2(513)
 FILE_ID                                            NUMBER
 TABLESPACE_NAME                                    VARCHAR2(30)
 BYTES                                              NUMBER
 BLOCKS                                             NUMBER
 STATUS                                             VARCHAR2(9)
 RELATIVE_FNO                                       NUMBER
 AUTOEXTENSIBLE                                     VARCHAR2(3)
 MAXBYTES                                           NUMBER
 MAXBLOCKS                                          NUMBER
 INCREMENT_BY                                       NUMBER
 USER_BYTES                                         NUMBER
 USER_BLOCKS                                        NUMBER
 ONLINE_STATUS                                      VARCHAR2(7)

SQL> select AUTOEXTENSIBLE from dba_data_files where TABLESPACE_NAME=upper('largetabs');

AUT
---
NO

SQL> create tablespace largetabs1 datafile 'D:\ORACLE\PRODUCT\10.2.0\ORADATA\TEST\largetabs1.dbf' size 100M extent management local AUTOALLOCATE ;

Tablespace created.

SQL> select AUTOEXTENSIBLE from dba_data_files where TABLESPACE_NAME=upper('largetabs1');

AUT
---
NO

SQL>

These parameters only determine how extents are sized: automatically by Oracle (AUTOALLOCATE), or with a predefined fixed size (UNIFORM). As the documentation says:

> If you expect the tablespace to contain objects of varying sizes requiring many extents with different extent sizes, then AUTOALLOCATE is the best choice. AUTOALLOCATE is also a good choice if it is not important for you to have a lot of control over space allocation and deallocation, because it simplifies tablespace management. Some space may be wasted with this setting, but the benefit of having Oracle Database manage your space most likely outweighs this drawback.

> If you want exact control over unused space, and you can predict exactly the space to be allocated for an object or objects and the number and size of extents, then UNIFORM is a good choice. This setting ensures that you will never have unusable space in your tablespace.

-
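
Since the question asked for an example that combines UNIFORM extents with an automatically extending datafile, here is a hedged sketch. The Python helper `create_tablespace_ddl` is hypothetical and simply assembles the statement text; AUTOEXTEND ON NEXT is a datafile clause, independent of the EXTENT MANAGEMENT clause. The tablespace name and path are the ones from the question, and the 16M increment is an assumption chosen to match the extent size.

```python
def create_tablespace_ddl(name, datafile, size_mb, uniform_mb, autoextend_next_mb=None):
    """Return CREATE TABLESPACE text with UNIFORM extents and optional autoextend."""
    ddl = f"CREATE TABLESPACE {name} DATAFILE '{datafile}' SIZE {size_mb}M"
    if autoextend_next_mb is not None:
        # Datafile growth is configured here, separately from extent sizing.
        ddl += f" AUTOEXTEND ON NEXT {autoextend_next_mb}M"
    ddl += f" EXTENT MANAGEMENT LOCAL UNIFORM SIZE {uniform_mb}M"
    return ddl + ";"


path = r"E:\Oracle\app\Private\oradata\dbca\largetabs.dbf"
print(create_tablespace_ddl("largetabs", path, 100, 16, autoextend_next_mb=16))
```

After running such a statement, the AUTOEXTENSIBLE column of dba_data_files would be the place to confirm the setting, as in the session above.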

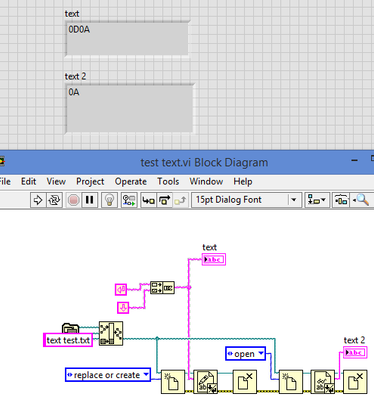

Hey guys,

Quick question on LF and CR. As you can see, I'm writing LF and CR to a text file and then reading it back directly.

However, I don't get the CR when I read it. Why is this? Is it because Windows ignores the CR?

Thank you

Hi dora,

read the help for the WriteText function.

There is an option for handling CR/LF characters, explained in the "Advanced" section of the help article!

-

Question about VM consolidation ratio and CPU Ready percentage

Hi people,

I'm trying to consolidate as many VMs as possible onto single ESXi servers running on HP blades with AMD Opteron CPUs, with 32 logical CPUs available (based on the number shown in the vSphere console).

Can anyone here please share some thoughts and comments about the best indicator to avoid adding more VMs than the ESXi hosts can handle?

Must I ensure that the total number of vCPUs does not exceed the 32 logical processors available, or can it be more than that?

Running a PowerShell script by LucD to collect CPU Ready percentages shows that some virtual machines had more than 10% CPU Ready (mostly those with 2 vCPUs), up to 22% (this one with 8 vCPUs).

Hello

I had the same situation on HP blade and DL servers with two different CPU vendors.

In my experience, Intel CPUs perform better than AMD CPUs when the CPU is overcommitted on an ESXi server.

I have HP BL460c G6 and G7 servers on one of our sites; each server has 12 physical cores and 24 logical cores.

I assigned four cores to each guest (to fix the voice problem), and the virtual machines are working properly; CPU RDY is generally less than 5% and there is no performance problem.

Each blade server hosts 20 VMs and the total is 80 virtual cores, so the ratio is about 1:3 (physical:virtual).

On the other hand, I have DL585 G7 servers on some of our sites; each server has 64 physical cores (4 sockets with 16 cores each). These servers usually host 50 active VMs, but CPU RDY on them is between 7% and more than 10%. The total is 200 virtual cores, so the CPU assignment ratio is about 1:3.

I think that, if you want to optimize performance on AMD CPUs, a 1:1 or 1:2 CPU assignment ratio is the best choice.
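
The ratios quoted above are simple divisions, sketched here in Python so the numbers can be rechecked for other hosts. The function name `vcpu_ratio` is made up for this example, and the figures are the ones given in the reply (80 vCPUs on 24 logical cores, 200 vCPUs on 64 physical cores).

```python
def vcpu_ratio(total_vcpus, host_cores):
    """Return how many vCPUs are assigned per host core (the overcommit ratio)."""
    if host_cores <= 0:
        raise ValueError("host_cores must be positive")
    return total_vcpus / host_cores


# BL460c blade: 20 VMs x 4 vCPUs on 24 logical cores.
blade = vcpu_ratio(20 * 4, 24)
# DL585 G7: 200 vCPUs on 64 physical cores.
dl585 = vcpu_ratio(200, 64)
print(f"blade 1:{blade:.1f}, DL585 1:{dl585:.1f}")
```

Both hosts come out a little above 1:3, which matches the reply; the suggested 1:1 or 1:2 target for the AMD hosts would mean at most 64 to 128 vCPUs on the DL585.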

-

Hi guys

I have an export taken with the expdp utility from an Oracle 11g database.

It is important to mention that the source database has a db_block_size of 8K and the target has a db_block_size of 16K, so I want to ask whether there are any issues in this case.

Thanks in advance.

Regards

Hello

You may only face data issues during impdp because of character set conversion in some tables,

where you will see this symbol -> wherever Oracle cannot perform the conversion.

Thank you

Himanshu

-

Satellite A100-003: Question about RAM and registered model number

Dear Sir/Madam,

1. The first problem is that I bought a Toshiba Satellite A100-003 notebook, but when I tried to register it on the Toshiba European website with my serial number, it says Satellite A100-011. I do not understand what that means. I do know that my model is A100-003-PSAARE-0470257Z. The serial number of my laptop is: 17739127G

2. The other problem is that the Toshiba European website and the official Toshiba Turkey site (http://www.toshibatr.com/notebook_filter.asp?ktg=A100) say the RAM is 533 MHz, "2,048 (1,024 + 1,024) MB of DDR2 RAM (533 MHz)", but mine runs at 266 MHz.

http://img510.imageshack.us/my.php?image=satellitea100003xg4.jpg

I was wondering whether Toshiba laptops in Turkey have problems or are delivered in a different configuration. I sent an e-mail via the Toshiba Turkey website, but they have not replied or sent any information.

Thank you for helping me,

Halin

Hello

First of all, your registration is not a 'problem'. Maybe it's just a small mistake in their database. When I registered my P200, the registration reported a different type from mine, but after a call the Toshiba hotline told me that my machine is listed correctly in the database.

Don't worry, just register it.

With regard to the RAM:

Do you know why it's called 'DDR' RAM? It stands for 'double data rate', and the goal is to double the effective front-side bus rate.

In your case, that means the real, physical front-side bus clock is 266 MHz, but because the data rate is doubled you effectively have a 533 MHz front-side bus (532.34 MHz).

CPU-Z and WCPUID simply display the physical clock of the RAM, but the front-side bus doubles the signal, so you really do get 533 MHz.

I hope I could explain something to you. ;)

Have a nice weekend

-

expdp and impdp between TWO different tables

DBVersion: 10g and 11g

I have two tables with the same structure, the same column names and widths; only the table names differ:

orders and order_history

I exported orders for a date range and now want to import the data into order_history.

How do I do this? If it is possible, can you please provide the syntax?

Thank you

REMAP_TABLE:

http://docs.Oracle.com/CD/E11882_01/server.112/e22490/dp_import.htm#BABIGHCC
