Restrict LabVIEW deployment on an NI sbRIO

Hi all

I searched a lot to find a solution, but could not find one.

I need to protect my NI sbRIO controller so that no one can deploy a LabVIEW application to it without authorization.

For example, if anyone with LabVIEW connects to the controller's network, they should not be able to deploy anything to the sbRIO without first entering the controller's credentials.

In the controller's web configuration, I removed all permissions from the "everyone" group and set a password for the "admin" user.

After that, NI MAX requires a password before you save anything to the controller, but LabVIEW does not require anything before you connect to the target and deploy a VI to it.

Could you please help me?

Thank you in advance.

Khoren

Which Single-Board RIO device do you use (Linux or VxWorks RTOS based)?

The following white paper explains how to protect Single-Board RIO and CompactRIO systems against unauthorized access.

Overview of security best practices for RIO systems

http://www.NI.com/white-paper/13069/en/

It looks like you need to configure VI Server access, as described in part 1 of the security white paper.

Kind regards


Similar Questions

-

RT code works from the LabVIEW environment, but not when I build and deploy it

I think there are a lot of reasons why this can happen, but I can't seem to pin one down.

I have a classic control program that runs on a cRIO. We recently decided to change the communication from a Comsoft PROFIBUS card to an EtherNet/IP card (the industrial protocol used by Allen-Bradley PLCs). For various reasons, we put the communication inside the control loop.

When I run it from LabVIEW, it works fine. I can see data going to and coming from the controller. I can sniff packets and they look good. I get about 30 milliseconds per loop iteration, which is long, but since I am running in the IDE, I think it is not bad. (In other words, I get data in and out every 30 milliseconds.)

When I build the executable and deploy it to the cRIO, it breaks. I can still sniff packets, but now all the traffic to the controller consists of read requests. My write requests are missing. In addition, the read requests are malformed: rather than requesting 43 items in an array, they request 1.

One test I tried was to disable the read request. With the built executable, I don't see any traffic. From the IDE, I see write requests.

I use LV 2009 SP1. I have the NI Labs version of the EtherNet/IP driver. (We have requested a quote but do not have the official driver yet.) In the meantime, the faster I get this done, the happier everyone will be ;-)

Any suggestions?

Watch out for property nodes; they are usually my problem when this happens. Some that work when RT code runs from the IDE do not work in a compiled RT application.

-

Does the USRP require LabVIEW Communications System Design Suite?

I recently installed LabVIEW 2013 on my machine, as well as a whole bunch of toolkits:

LabVIEW 2013 (English)

VI Package Manager

LabVIEW 2013 Control Design and Simulation Module

LabVIEW 2013 Datalogging and Supervisory Control Module

LabVIEW 2013 MathScript RT Module

NI LabVIEW 2013 LEGO (R) MINDSTORMS (R) NXT Module (English)

NI Vision Development Module 2013

LabVIEW 2013 FPGA Module (English)

Xilinx toolchain 14.4

LabVIEW 2013 Real-Time Module (English)

LabVIEW 2013 Touch Panel Module

LabVIEW 2013 Robotics Module

NI SignalExpress 2013

LabVIEW 2013 Sound and Vibration Measurement Suite

LabVIEW 2013 Statechart Module

LabVIEW 2013 for myRIO Module

NI Real-Time Execution Trace Toolkit 2013

LabVIEW 2013 System Identification Toolkit

LabVIEW 2013 Digital Filter Design Toolkit

LabVIEW Modulation Toolkit 4.3.4

LabVIEW 2013 VI Analyzer Toolkit

LabVIEW 2013 Database Connectivity Toolkit

LabVIEW 2013 Report Generation Toolkit for Microsoft Office

LabVIEW Spectral Measurements Toolkit 2.6.4

LabVIEW 2013 Advanced Signal Processing Toolkit

LabVIEW 2013 PID and Fuzzy Logic Toolkit

LabVIEW 2013 Adaptive Filter Toolkit

LabVIEW 2013 DataFinder Toolkit

LabVIEW 2013 Desktop Execution Trace Toolkit

LabVIEW 2013 Multicore Analysis and Sparse Matrix Toolkit

LabVIEW 2013 Electrical Power Suite

LabVIEW 2013 GPU Analysis Toolkit

LabVIEW 2013 Biomedical Toolkit

LabVIEW 2013 NI SoftMotion Module

NI Motion Assistant 2013

NI Vision Builder for Automated Inspection 2012 SP1

NI DIAdem Professional 2012 SP1 (English)

LabWindows/CVI 2013 Development System

LabWindows/CVI 2013 Real-Time Module

LabWindows/CVI Spectral Measurements Toolkit 2.6.4

LabWindows/CVI Spectral Measurements Run-Time 2.6.4

LabWindows/CVI SQL Toolkit 2.2

LabWindows/CVI Signal Processing Toolkit 7.0.2

LabWindows/CVI PID Control Toolkit 2.1

LabWindows/CVI Execution Profiler 1.0

Measurement Studio 2013 Enterprise Edition for Visual Studio 2012

NI General Security Patch, Q2 2013

NI TestStand 2013

NI ELVISmx 4.5

NI-DAQmx 9.7.5

Xilinx 10.1 Compilation Tools (requires the additional Xilinx build tools DVD)

NI Device Drivers, February 2013

I tried to follow this tutorial with the USRP 2932, but I later found out that I seem to have none of the LabVIEW Communications software. Does that prevent me from using the USRP radio? If not, are there any restrictions on what I can do with the radio without LabVIEW Communications?

Hi BreadLB,

The tutorial you linked to is based on LabVIEW Communications System Design Suite, a new software environment designed to accelerate algorithm prototyping and over-the-air deployment. It is a completely separate environment, independent of LabVIEW. See my post here for more details. You can also download a free 30-day trial copy here. Your hardware is supported by both LabVIEW and LabVIEW Communications.

The NI 2932 is a network-based USRP and has only a small onboard FPGA. For this reason, the FPGA on that specific product is not a LabVIEW FPGA target. The NI 294x/295x family has a large Kintex-7 FPGA and can be programmed using LabVIEW FPGA and LabVIEW Communications, as in the tutorial you posted. The NI 2932 can be used with your host PC and LabVIEW for a variety of applications. Unfortunately, the tutorial linked in your post requires NI 294x/295x hardware and LabVIEW Communications. If you have questions about a specific application for your 2932, please post more details and we would be happy to help you.

-

A question about 'value spots' in the Vision Assistant of NOR

Hello, all,.

I am a new learner or vision.

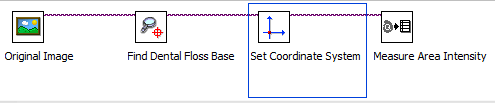

When I use 'mark set' in the Vision Assistant of NOR. It works well. for example

(The image above is the example in the Vision Assistant of NOR, please see help > Assistant Solution > Inspection of dental floss)

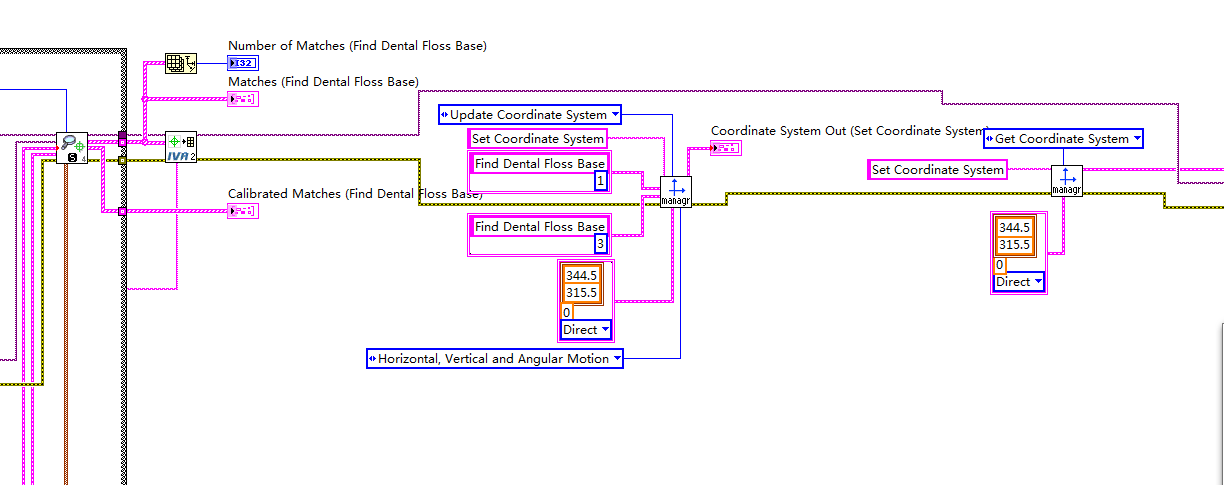

However, when I put the program NI Vision Assistant to create a Labview VI program. (Tools > create a LabVIEW VI), then run the VI, it no longer works.

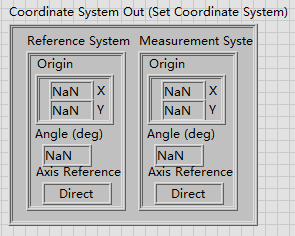

The result of the system of coordinates outside is always like that.

I can't find where is the error. I tried a few other examples in the Vision Assistant of NOR. If the command "set mark" is used in the program in the Vision Assistant of NOR. then create the LabVIEW VI.

The problem still occurs.

I hope some man experienced in NI Vision could help me.

Thank you.

Hi all

This problem has been reported under Corrective Action Request (CAR) 441410 and has since been fixed in VDM 2014. I highly recommend upgrading to the latest version here: www.ni.com/download/vision-development-module-2014-f1/4971/en/ because it contains other fixes. If you are unable to upgrade, I have attached the patched file you need. Replace the following file (assuming the default installation location):

\National Instruments\LabVIEW 2013\vi.lib\vision\Vision Assistant Utils.llb with the version I have attached here, and that should solve the problem you see with NaN coordinate system values in the generated code.

Kind regards

Joseph

-

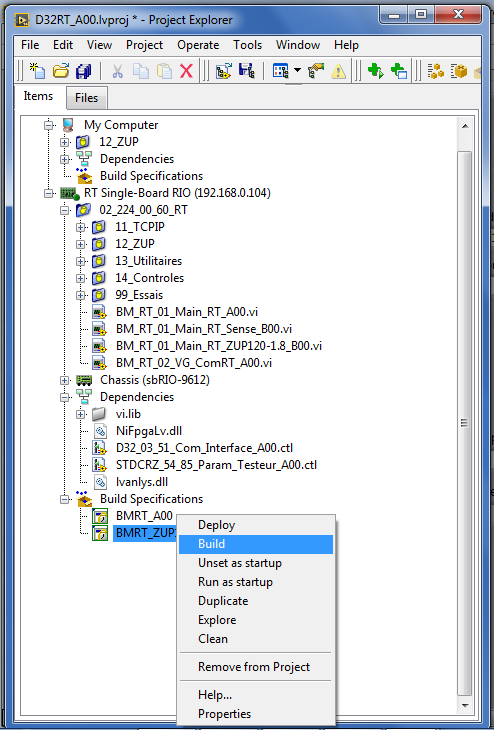

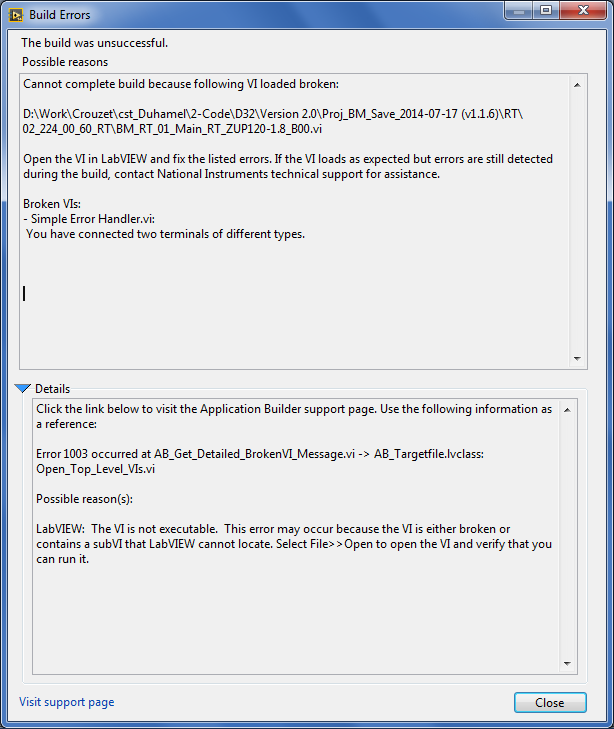

LabVIEW 2011 project with sbRIO cannot build application with LabVIEW 2014 RT: error 1003

Hello

I have a project developed with LabVIEW 2011. It contains a PC application and an sbRIO RT/FPGA application.

I made a simple change in an RT VI, then I tried to build the new application for my sbRIO.

The problem is that when I click Build, an error dialog box appears saying that there is a VI with a broken run arrow, but my VIs are OK.

Can you help me, please?

Best regards

-

Can any camera, regardless of interface, be used with LabVIEW?

Hello

I intend to buy a CMOS camera,

but I have a big doubt before buying: the camera mentioned above is not anywhere in this list, nor can I see any USB device type listed as supported.

The questions are:

- Can any camera, regardless of its interface, be used with LabVIEW?

- Can I build a VI to communicate with any imaging device or camera, record from it, and acquire the data?

Any kind of help or advice is greatly appreciated... I have to buy a CMOS camera and get started.

Thank you...

Hello Virginia,

I am glad this information was useful. One thing I wanted to mention is that USB 3.0 cameras have their own standard, USB3 Vision, which is currently not supported. If this camera is also DirectShow compatible, you will be able to acquire images using IMAQdx and manipulate all the attributes exposed through the DirectShow API.

I hope USB3 Vision will be supported in the near future; we have tentatively announced support for this standard in the August 2013 Vision Acquisition Software update.

See you soon,

-Joel

-

How to calibrate the PCI-6110 with NI-DAQmx

Hello

I am a new user of the PCI-6110 board and am trying to run the calibration procedure using LabVIEW. I am looking at the "Calibration" document on the manuals page for the board:

http://sine.NI.com/NIPs/nisearchservlet?nistype=psrelcon&NID=11888&lang=us&q=FQL:%28locale%3Aen%29+AND+%28phwebnt%3A1081+OR+phwebnt%3A7075%29+AND+%28nicontenttype%3Aproductmanual%29+AND+%28docstatus%3Acurrent%29%20RANK%20nilangs:en&title=NI+PCI-6110+manual

One of the first steps in the document is to call the AI_Configure function to set the input mode, range, etc. I'm using LabVIEW 8.5 with NI-DAQmx, and I cannot find the function (which, in LabVIEW, seems to be "AI Config.vi") anywhere. The calibration document was written in 2003, and I gather from Google searches (please correct me if I'm wrong) that this function is actually part of Traditional NI-DAQ, which was replaced by the NI-DAQmx driver.

My question is: what is the equivalent of the AI_Configure function in the latest software? Or is there a newer document describing how to calibrate using NI-DAQmx?

Thanks much for any help.

Tom McLaughlin

Hi Tom,

The B, E, M, and S Series Calibration Procedure, which is also linked from that page, describes how to calibrate the PCI-6110 with NI-DAQmx.

Brad

-

Question about continuous acquisition with NI-DAQmx

I'm a bit new to the NI-DAQmx methodology and was wondering if someone could give me some advice on speeding up certain voltage measurements that I make with an NI USB-6363 DAQ device.

I have a Python script that controls and takes measurements with a few pieces of lab equipment over GPIB and also takes measurements with the NI DAQ device via NI-DAQmx (I use a wrapper library called pylibdaqmx that interfaces with the native C libraries). What I do with the DAQ device is acquire 32k samples at 2 MHz from a differential pair on AI0. An example of code that performs this operation is:

```python
from nidaqmx import AnalogInputTask

# set up task & input channel
task = AnalogInputTask()
task.create_voltage_channel(phys_channel='Dev1/ai0', terminal='diff',
                            min_val=0., max_val=5.)
task.configure_timing_sample_clock(rate=2e6, sample_mode='finite',
                                   samples_per_channel=32000)

for i in range(number_of_loops):
    # < ... set up/adjust instruments ... >
    task.start()
    # returns an array of 32k float64 samples
    # (same as DAQmxReadAnalogF64 in the C API)
    data = task.read(32000)
    task.stop()
    # < ... process data ... >

# clear task, release resources
task.clear()
del task
# < ... etc ... >
```

The code works fine and I can get all 32k samples, but if I want to repeat this step several times in a loop, I start and stop the task every time, which takes some time and really slows down my overall measurement.

I thought that maybe there is a way to speed up by configuring the task for continuous sample mode and just read from the channel when I want the data, but when I configure the sample for the continuous mode clock and you issue the command of reading, NOR-DAQmx gives me an error saying that the samples are no longer available , and I need to slow the rate of acquisition or increase the size of the buffer. (I'm guessing the API wants to shoot the first 32 k samples in the buffer zone, but they have already been replaced at the time wherever I can playback control).

What I wonder is: How can I configure the task to make the box DAQ acquire samples continuously, but give me only the last 32 samples buffer on demand k? Looks like I'm missing something basic here, maybe some kind of trigger that I need to put in place before reading? It doesn't seem like it should be hard to do, but as I said, I'm kinda a newbie to this.

I understand the implementation of python that I use is not something that is supported by NEITHER, but if someone could give me some examples of how to perform a measure like this in LabView or C (or any other ideas you have to accelerate such action), I can test in these environments and to implement on my own with python.

Thanks in advance!

Toki

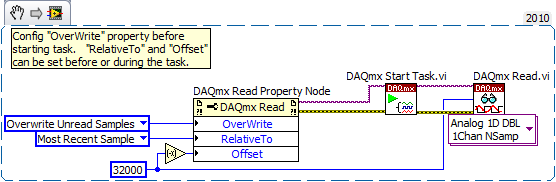

This is something I do a bit, but I can only describe how I would do it in LabVIEW - I'm no help on the details of the C function prototypes or the python wrapper.

In LabVIEW, there are accessed via the 'DAQmx Read property node' properties that help to implement. One is the Mode "crush" which I'm sure must be set before performing the operation. The other pair is known as "RelativeTo" and "Offset" and they allow you to specify what part of the CQI data buffer to read data from. If you the config to "RelativeTo" = 'most recent sample' and 'Offset' =-32000, then whenever you read 32000 samples, they are the very latest 32000 which are already available in the buffer of data acq. Between the readings, the task is free to overwrite the old data indefinitely.

Note that you will need to do this continuous sampling mode and that you can explicitly set a buffer size smaller than the default which will choose DAQmx based on your fast sampling rate.
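To make the "RelativeTo"/"Offset" idea concrete without hardware, here is a small pure-Python model of a circular acquisition buffer. It is a sketch under stated assumptions, not the DAQmx API: the `RingBuffer` class and its methods are hypothetical, and a real DAQmx read would wait for data rather than raise. `read_next` mimics the default behaviour (read from the current read position, overwriting not allowed), which fails once the acquisition has lapped the reader, like the error Toki describes; `read_most_recent` mimics RelativeTo = Most Recent Sample with Offset = -n, which always returns the latest n samples.

```python
from __future__ import annotations

from typing import Iterable


class RingBuffer:
    """Hypothetical model of a DAQ input buffer (not the DAQmx API)."""

    def __init__(self, size: int) -> None:
        self.size = size
        self.data: list[float] = [0.0] * size
        self.total_written = 0  # samples acquired since the task started
        self.read_pos = 0       # absolute index of the next unread sample

    def write(self, samples: Iterable[float]) -> None:
        """Acquisition side: keeps writing and overwrites the oldest samples."""
        for sample in samples:
            self.data[self.total_written % self.size] = sample
            self.total_written += 1

    def _slice(self, start: int, n: int) -> list[float]:
        # Copy n samples starting at absolute index `start`, wrapping around.
        return [self.data[i % self.size] for i in range(start, start + n)]

    def read_next(self, n: int) -> list[float]:
        """Default-style read: from the current read position, no overwrite.

        Raises if the writer has already overwritten unread samples,
        analogous to the "samples no longer available" error.
        """
        unread = self.total_written - self.read_pos
        if unread > self.size:
            raise RuntimeError("samples no longer available (overwritten)")
        if unread < n:
            # A real driver would wait for data; this model just refuses.
            raise RuntimeError("not enough samples acquired yet")
        out = self._slice(self.read_pos, n)
        self.read_pos += n
        return out

    def read_most_recent(self, n: int) -> list[float]:
        """RelativeTo = Most Recent Sample, Offset = -n, overwrite allowed.

        The offset is applied to the index of the next sample to be acquired,
        so the result is always the latest n samples in the buffer.
        """
        if n > min(self.size, self.total_written):
            raise ValueError("n exceeds the number of buffered samples")
        return self._slice(self.total_written - n, n)


if __name__ == "__main__":
    buf = RingBuffer(size=8)
    buf.write(range(20))            # 20 samples into an 8-sample buffer
    print(buf.read_most_recent(4))  # [16, 17, 18, 19]
    try:
        buf.read_next(4)
    except RuntimeError as err:
        print(err)                  # samples no longer available (overwritten)
```

Buffer sizing follows the same arithmetic: at 2 MHz, a 32000-sample buffer holds only 32000 / 2e6 = 16 ms of data, so the buffer must be at least as large as the block you read, and each "most recent" read can only reach back one buffer length in time.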

An excerpt from LV 2010:

-Kevin P

-

Error launching 'LabVIEW.Application' [Error Code: -18001]

Hello

I have a TestStand 3.4 sequence with LabVIEW 8.21 test steps.

The sequence is called from a LabVIEW operator interface.

In a step that had run 100 times before without problems, I suddenly receive the error message:

ErrorMessage: Error running substep 'run '. Unable to launch the "LabVIEW.Application" ActiveX automation server [error Code:-18001]

After restarting all the NI software, everything works normally again.

What is this error, and what should I do about it?

Should I ignore the error and try to execute the step again, or does this mean that the ActiveX server has been lost for good?

How can this problem occur? Thanks for your help

-

Restrict the application of Digital Signatures

Hello

I am trying to determine what options are available natively in Acrobat to restrict the type of certificate that is used to apply a digital signature. AFAIK, by default Acrobat seems to allow the use of any certificate that has the 'Digital Signature' key usage extension. This has the potential to create big problems in a regulated environment.

In my organization, our users receive multiple certificates for different applications. For example, a user can have one certificate for VPN access, one for S/MIME email and, finally, one specifically for applying electronic signatures. There is a more rigorous vetting process for obtaining an electronic signature certificate, and it also prompts for a password every time the private key is accessed. Each type of certificate is issued by a separate certificate authority.

We have configured each user's approved identities in Acrobat by deploying an address book file to them. Our electronic signature CA is the only one of our CAs approved in Acrobat (along with some other universally trusted authorities). The result is that when a signature has been applied using a certificate from any other CA, Acrobat does not validate the signature. That's good, but it isn't enough, since the dynamic validity icons that Acrobat shows on digital signatures do not appear on printed or flattened documents.

Basically, I am trying to determine if there is a way to configure Acrobat (natively, out of the box) so that it will only allow digital signatures using certificates issued by an authority that is defined as a trusted identity.

Has anyone encountered this before?

Hi Mike,

You should apply a seed value to each signature field that you want to restrict. The seed value becomes part of the signature field's properties.

You can use the JavaScript debugger included in Acrobat. To open it, select the Advanced > Document Processing > JavaScript Debugger menu item (assuming you use Acrobat Pro 9).

Here is an example of code that you can apply when you need a specific issuer and key usage:

```javascript
// Signature field to restrict
var f = this.getField("Signature1");

// CA certificate that must have issued the signer's certificate
// (part of this path appears to be missing from the original post)
var caCert = security.importFromFile("Certificate", "/C/Documents and Settings//My Documents/myCA.cer");

f.signatureSetSeedValue({
    certspec: {
        issuer: [caCert],
        keyUsage: [0x7FFFFFF7], // Ensure the certificate has the nonRepudiation KeyUsage value
        flags: 34 // 2 (issuer required) + 32 (keyUsage required)
    }
});
```

Let me know if you are looking to apply a different condition.
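The `flags` entry in the seed value code is a bitmask: each required constraint contributes one bit, so "2 requires issuer + 32 requires key usage" adds up to 34 decimal (0x22 hex). As a quick arithmetic check, here is a Python sketch that composes the two bits named in the answer. The `CertSpecFlag` enum and `certspec_flags` helper are hypothetical names, and only the two bit values quoted in the answer are modeled.

```python
from enum import IntFlag


class CertSpecFlag(IntFlag):
    """Hypothetical names for the two certspec flag bits quoted in the answer."""
    ISSUER = 2      # the issuer constraint is required
    KEY_USAGE = 32  # the keyUsage constraint is required


def certspec_flags(*required: CertSpecFlag) -> int:
    """OR the required constraint bits together into one integer."""
    value = CertSpecFlag(0)
    for flag in required:
        value |= flag
    return int(value)


if __name__ == "__main__":
    flags = certspec_flags(CertSpecFlag.ISSUER, CertSpecFlag.KEY_USAGE)
    print(flags, hex(flags))  # 34 0x22
```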

Steve

-

The watch does not have Reminders and cannot sync with them.

I bought an Apple Watch last week. I returned it this week. Why? The watch does not have Reminders and cannot sync with them. For me, that is the most useful reason to have the watch. Without Reminders and the ability to easily sync with other devices, it is not worth the purchase. I am disappointed by Apple's lack of foresight and didn't want to patch the problem with a third-party app. It will be difficult to win me back.

< re-titled by host >

Hello

Apple recently announced that Reminders will be added to Apple Watch as a built-in app in the upcoming watchOS 3 software update, which is scheduled for release this fall.

-

How can I make a button that sets the autoscale property of one of the axes on a graph indicator, so I can turn autoscaling on or off when I press it?

I need to change the Autoscale property of my graph indicator. Can someone help me, please?

Thank you!

Hello

I have confirmed that there is currently no way to programmatically enable/disable autoscaling for axes on graph indicators in the LabVIEW Web UI Builder. We have noted this as a possible future feature; sorry for the inconvenience.

-

Downloading the NI Data Logger

How can I download the NI Data Logger?

It depends on what OS you are using and which data logger you mean. Do you mean SignalExpress or the Data Logger included with the NI-DAQmx Base driver?

NI-DAQmx Base for Mac OS X, Windows, or Linux (Mandriva, openSUSE, Red Hat WS 4 or 5 only).

-

Freescale controller with the LabVIEW ARM module?

Hi all

Is it possible to use the Freescale MC9S12XEP100RMV controller with the LabVIEW Embedded Module for ARM?

Thanks and regards

Ben aljia P

Hi Suren,

The LabVIEW Embedded Module for ARM Microcontrollers uses the "Keil uVision development toolchain to compile, download and debug applications," as this article explains. (ARM targets supported by LabVIEW)

With this in mind, you should be able to use any microcontroller that supports the "RL-ARM real-time library." The Keil device database lists the microcontrollers currently supported by this library. (RL-ARM real-time library)

The Freescale MC9S12XEP100RMV MCU is not listed as supported. This means you will not be able to use the LabVIEW Embedded Module for ARM Microcontrollers, but you can use the LabVIEW Microprocessor SDK Module. The ARM module was built on top of the Microprocessor SDK, which is more "generic" and lets you develop support for virtually any 32-bit microcontroller.

I hope this helps.

Kevin S.

Technical sales engineer

National Instruments

-

Change the language of LabVIEW controls?

I just need confirmation. If LabVIEW controls, such as a graph, have a context menu, that menu will be in the language of the LabVIEW IDE they are used with. If I build an application, the installer includes the LabVIEW Run-Time Engine (RTE), which will also be in the same language as the IDE.

Now, if I wanted to change the language of the context menu to, say, French, would it be sufficient for the user to install the French version of the RTE? Is this correct?

Yes, thanks. A differently localized RTE would replace the one we provide in our installer, and the user would then have the menu in their language. Very good!

AJMAL says:

Will this be useful?

Maybe you are looking for

-

It gets better or is this all happening?

-

Scanner not working, fax not working at all

I bought the HP Color LaserJet Pro MFP M177fw a while ago, and for about 2 weeks now we have had difficulty receiving faxes. It rings and then shows a message saying no fax was detected; this is problem no. 1. Problem no. 2 is that I can't get the scanner to work...

-

Parental controls for Windows and IE8 lists

Could you tell me where to find the allow/block lists for access in IE8 Content Advisor and in Parental Controls under User Accounts and Family Safety?

-

Arrg - Eclipse issues - BlackBerry projects break after closing and reopening

Whenever I close and reopen Eclipse, all my BlackBerry IDE stuff has disappeared, and my previously working applications have red Xs beside them. If I want to start a new BlackBerry project, it is not an option. I reinstalled the B...

-

I am trying to connect one of our computers to our HP wireless printer. The message said "need driver software." Is that the disc that came with the wireless printer?