Smaller data logs

I use SignalExpress (full version) with a cDAQ9174 3.5.0, a 9205 and a 9217.

The temperature data are sampled at 1 sample/s. The voltage channels are sampled at 1000 samples/s. I use the DC function on the voltage data, which seems to output 10 samples/s. I also monitor the AC RMS voltage after a high-pass filter.

The test runs for a day or two, so I would like to log only one data point every 10 seconds or more.

From what I found, I should be able to use the Statistics function, set it to mean, set the averaging time to ten seconds, check the box to restart the measurement at each iteration, and then log the statistics output.

However, I can't find the setting for the iteration length, and the restart box is not available on the DC step.

Thanks for your help!

Hi Arvo,

The DAQmx Acquire step returns an array of sampled data to be processed. To set the length of the iteration, I would change the number of samples on the DAQmx Acquire step. For example, if you acquire at 1000 Hz, acquiring 10 k samples will cause DAQmx Acquire to return 10 seconds of data to be processed. Log the DC and RMS values at the end of this processing and the result should be one point every 10 seconds.

Best regards

John
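The block-averaging idea in the reply above can be sketched outside SignalExpress. This is only an illustration, not how SignalExpress computes its DC or RMS values: the function name `block_stats` and the synthetic 50 Hz test signal are assumptions, and the AC RMS here is obtained by subtracting the block mean rather than by a high-pass filter.

```python
import math
from statistics import fmean


def block_stats(samples, rate_hz, block_seconds):
    """Reduce a sample stream to one (DC mean, AC RMS) pair per block.

    Hypothetical helper: each block holds rate_hz * block_seconds samples,
    mirroring "acquire 10 k samples at 1000 Hz to get 10 s of data".
    """
    block_len = int(rate_hz * block_seconds)
    points = []
    # Step through complete blocks only; a trailing partial block is dropped.
    for start in range(0, len(samples) - block_len + 1, block_len):
        block = samples[start:start + block_len]
        dc = fmean(block)
        ac_rms = math.sqrt(fmean((x - dc) ** 2 for x in block))
        points.append((dc, ac_rms))
    return points


# 30 s of synthetic data at 1000 Hz: 2 V DC plus a 50 Hz sine of 1 V peak.
rate = 1000
signal = [2.0 + math.sin(2 * math.pi * 50 * n / rate) for n in range(30 * rate)]
points = block_stats(signal, rate, 10)
for dc, ac_rms in points:
    print(f"DC = {dc:.4f} V, AC RMS = {ac_rms:.4f} V")
```

With these settings, 30 000 input samples collapse to three logged points, which is the data reduction the original question asks for.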

Tags: NI Products

Similar Questions

-

Access to ntuser.dat.log in the NetworkService folder denied even in Safe Mode

I am trying to access ntuser.dat.log in the NetworkService folder, even in Safe Mode, but access is denied. I am logged in as Administrator. I guess the same would happen if I logged in as any other user?

I looked in Task Manager to try to identify which process is blocking this, but I am at a loss. Does anyone know which hoops I need to jump through to open this file?

Guessing that you mean this file:

c:\Documents and Settings\NetworkService

You cannot open the file because XP is running and the file is in use, and Task Manager will not help you identify which process(es) is blocking your attempt to open the file (that is not what Task Manager is for).

Sometimes you can copy an open file and then open the copy, but that will not work with ntuser.dat.log, and your efforts to access the file will give results like:

If you want to open the file, you can log on under a different user name and access another user's ntuser.dat.log file that way (since the other user's file will not be in use).

For example, if I am logged on as user ElderK I can't access my own ntuser.dat.log file, but I can access the file owned by another user, such as Jose, by looking here:

c:\Documents and Settings\Jose

Or you can boot from something like a Hiren's Boot CD and access the file from there, since your XP will not be running.

I see no reason to look at the ntuser.dat.log file, since it is binary data, so maybe you can tell us what you are trying to do and why (or whether you are just practicing).

-

Hello

I found this hidden file, ntuser.dat.log (located in the C:\Document and Settings\MyUsername\ folder), which keeps changing by itself (every few seconds/minutes). Its size ranges from 1 KB to 400 KB. Is this normal? I thought it should only be updated when logging in or out; am I wrong? My OS is Windows XP SP3.

Hello

NTUSER.DAT is a registry file. Each user's NTUSER.DAT file contains the registry settings for their individual account. The Windows registry is a "central hierarchical database" that contains information about the user profiles, hardware and software on a computer. Windows constantly references the registry throughout its operation. The "HKEY_CURRENT_USER" branch of the registry is backed by the current user's NTUSER.DAT file.

Note: this section, method, or task contains steps that tell you how to modify the registry. However, serious problems can occur if you modify the registry incorrectly. Therefore, make sure that you proceed with caution. For added protection, back up the registry before you edit it. Then you can restore the registry if a problem occurs. For more information about how to back up and restore the registry, check out the following link.

http://support.Microsoft.com/kb/322756

-

Are ntuser.dat.LOG files important to include in a backup to transfer files to a new computer?

Are ntuser.dat.LOG files important to include in a backup that is used to transfer files to a new computer? When I try to back up or copy files, I get a message that these files (there are 4 of them) cannot be saved or copied because they are already open and in use on the computer. One file is 256 KB and another is 0 KB. I cannot see the other two.

Hi MAnnetteFox,

Thanks for posting the query on Microsoft Community.

You do not need to back up these files; they are normal files that Windows generates.

In the future, if you have problems with Windows, get back to us. We will be happy to help you.

-

Unable to see the journalized data in simple CDC?

Hello

I want only the changed data to be inserted into the target table.

For this:

I created a model and selected JKM Oracle Simple.

I created the datastores for the source table (with data) and the target table (empty).

Next, I added the source table to CDC and started the journal.

Then I inserted a row into the source table. When I check source table -> CDC -> journalized data, the changed data shows up successfully.

I created an interface so that only the changed data is inserted into the target table.

I dragged the journalized table in as the source, along with the required target. On the source datastore, I checked the "JOURNALIZED DATA ONLY" option box. For this example I used IKM SQL update SQL command.

I checked the source data after selecting the "JOURNALIZED DATA ONLY" option in the source properties. There is no journalized data.

Please help me,

Thanks in advance,

A.Kavya.

You must define which subscriber uses the journalized data, in this case 'CONTROLLER'.

-

Hello

Our environment is Essbase 11.1.2.2, and we work with Essbase, EAS and the Shared Services components. One of our users tried to execute a calc script on a single application and ran into this error:

Dynamic Calc processor cannot lock more than [100] ESM blocks during the calculation, please increase CalcLockBlock setting and then retry (a small data cache setting could also cause this problem, please check the data cache size setting).

I did some Googling and found that we need to add something to the Essbase.cfg file, as below.

1012704 Dynamic Calc processor cannot lock more than number ESM blocks during the calculation, please increase CalcLockBlock setting and then retry (a small data cache setting could also cause this problem, please check the data cache size setting).

Possible problems

Analytic Services cannot lock enough blocks to perform the calculation.

Possible solutions

Increase the number of blocks that Analytic Services can allocate for a calculation:

- Set the maximum number of blocks that Analytic Services can allocate to at least 500.

  - If you do not have a $ARBORPATH/bin/essbase.cfg file on the server computer, create one using a text editor.

  - In the essbase.cfg file on the server computer, set CALCLOCKBLOCKHIGH to 500.

  - Stop and restart Analytic Server.

- Add the SET LOCKBLOCK HIGH command to the beginning of the calculation script.

- Set the data cache large enough to hold all the blocks specified in the CALCLOCKBLOCKHIGH setting.

In fact, in our server config file (essbase.cfg) we have already added the settings below.

CalcLockBlockHigh 2000

CalcLockBlockDefault 200

CalcLockBlocklow 50

So my question is: if we edit the Essbase.cfg file, add the above settings and restart the services, will it work? And if yes, why should we change the server configuration file if the problem concerns the calc script of a single application? Please guide me on how to do this.

Kind regards

Naveen

Yes, it should.

Make sure that you have also adjusted the database cache settings. If the cache is too small, you will have similar problems.

-

Error ORA to rename data/log files

Hello

I wanted to move my data files to a new location, and now my TEMP file has not been moved properly.

SQL> startup mount

ORACLE instance started.

Total System Global Area 4259082240 bytes

Fixed Size 2166488 bytes

Variable Size 922747176 bytes

Database Buffers 3321888768 bytes

Redo Buffers 12279808 bytes

Database mounted.

SQL> ALTER DATABASE RENAME FILE '/oracleGC/oem11g/oradata/oem11g/temp01.dbf' TO '/oradata/oem11g/data/temp01.dbf';

Database altered.

SQL> alter database open;

Database altered.

SQL> SELECT name FROM v$datafile;

NAME

--------------------------------------------------------------------------------

/oradata/oem11g/data/System01.dbf

/oradata/oem11g/data/undotbs01.dbf

/oradata/oem11g/data/sysaux01.dbf

/oradata/oem11g/data/users01.dbf

/oradata/oem11g/data/Mgmt.dbf

/oradata/oem11g/data/mgmt_ecm_depot1.dbf

Now, I get the following errors:

When I try to rename, I get the error below. The dbf is in both places.

SQL> ALTER DATABASE RENAME FILE '/oracleGC/oem11g/oradata/oem11g/temp01.dbf' TO '/oradata/oem11g/data/temp01.dbf';

ALTER DATABASE RENAME FILE '/oracleGC/oem11g/oradata/oem11g/temp01.dbf' TO '/oradata/oem11g/data/temp01.dbf'

*

ERROR at line 1:

ORA-01511: error in renaming log/data files

ORA-01516: nonexistent log file, datafile, or tempfile

"/oracleGC/oem11g/oradata/oem11g/temp01.dbf"

user771256 wrote:

Yes, it is working now.

Shouldn't it appear with the following?

SELECT NAME FROM V$DATAFILE;

Nope.

Given that the file you are interested in is a tempfile (temporary tablespace) and not a datafile, it will not show up in v$datafile but in v$tempfile.

Regards

Anurag

-

Problems with small values on a log scale

I have a strange problem plotting values in graphs with NI Measurement Studio WPF. The problem occurs when the axis is logarithmic and the values on the axis span from less than 1 to more than 1 (i.e. 0.001 to 1000). The graph automatically changes the lower x-axis limit to 1. The behavior is similar to the one reported here (http://forums.ni.com/t5/Measurement-Studio-for-NET/log-scale-won-t-scale-to-show-small-values/m-p/27...), but in my case none of the plotted values is 0 or less, and the lower limit of the axis is set to greater than 0.

The code below will reproduce the problem:

Point[] pt = new Point[100];

for (int i = 1; i <= 100; i++)

{

pt[i - 1] = new Point(0.1 * i, i);

}

Plot pl = new Plot("test");

pl.Data = pt;

graph.Plots.Add(pl);

Here the x-axis of the graph is a log10 scale (its range is set to {0.1, 10} in the .xaml code) and the y-axis is linear (its scale doesn't really matter). The graph automatically resets the x-axis minimum to 1. This can be changed to 0.1, but it reverts to 1 again if the Visible property of the graph changes.

This behaviour doesn't happen if all the x-axis values are less than 1, or all greater than 1. That is, the code below plots correctly and the axis is not reset to 1:

Point[] pt = new Point[100];

for (int i = 1; i <= 100; i++)

{

pt[i - 1] = new Point(0.001 * i, i);

}

Plot pl = new Plot("test");

pl.Data = pt;

graph.Plots.Add(pl);

Does anyone know why it behaves like that and what we can do to fix it?

What appears to be happening is that the default FitLoosely range adjuster sees values ranging from 10^-1 to 10^1 and then chooses a range of 0,10 for the data. Since zero cannot be represented on a logarithmic scale, the scale minimum is coerced to an arbitrary value one decade below the maximum, giving a final range of 1,10 and truncating the low values. I have created a task to fix this issue.

To work around the problem, you can change the Adjuster on the scale to another value, such as FitExactly.

-

I designed the VI attached to achieve a certain task. I've been on it since yesterday but decided to take a different approach.

I want to save data to the log.xls file every five seconds (or whatever the timer specifies), continuously. When the inner loop counter reaches 2, the inner loop ends. This means that there will always be three records in the log at any time.

When the button is clicked, a 'snapshot' of the log.xls file must be taken, meaning that the entire content of the log.xls file should be stored in the snapshot.xls file. This should happen only once, and the inner loop should keep running afterwards (i.e. continue to record data in the log.xls file). Please make sure that the snapshot.xls file is not overwritten with new data from the log.xls file.

In effect, the snapshot file would contain the entire log.xls file as of the point at which the inner loop ends after the button is pressed.

I ran the code several times, but nothing is written to the snapshot.xls file.

Where should I go from here please?

-

Hello

I'm trying to create a scope that will record a section of data so that it can be viewed at a later date. I use a queue to store the data that I log, but when the section of my code that stores the data in the queue runs, no data ends up there and no error is produced. I really don't see what I'm doing wrong. Could someone have a look at the attached VI (saved for LabVIEW 8.0) and see what I'm doing wrong? It would be greatly appreciated!

Thank you, Alec

You are AND-ing the Capture button with a FALSE constant, which will always give you FALSE, so you will never get to the "Stored update" case. Remove this piece of code and it will work as you intend.

The best way to determine which button was pressed would be to use an Event structure. In addition, it would be better to have a single Stop button that stops all the loops. See this post for options on how to do it: http://forums.ni.com/t5/LabVIEW/What-is-the-preferred-way-to-stop-multiple-loops/m-p/1035851

-

CPU, RAM and disk, file system information for the data, logs, temp, Archive for database

Hello gurus,

How can we see the CPU and RAM size for the database? Following previous posts on this great site, I was able to do this:

SQL> show parameter sga_max_size

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

sga_max_size big integer 800M

SQL> exit

So that is the RAM size for the database to which I am connected.

rootd2n3v5# ioscan -kfn |grep -i processor

processor 0 13/120 processor CLAIMED PROCESSOR Processor

processor 1 13/121 processor CLAIMED PROCESSOR Processor

processor 2 13/122 processor CLAIMED PROCESSOR Processor

processor 3 13/123 processor CLAIMED PROCESSOR Processor

rootd2n3v5#

The command above was run at the OS level; I don't know what information it gives or for which database, since there are 12 databases on this server.

How can I get the CPU information?

And where can I find the file system information for the data files, logs, temp and archive?

select * from v$version

BANNER

----------------------------------------------------------------

Oracle Database 10g Enterprise Edition Release 10.2.0.5.0 - 64bi

PL/SQL Release 10.2.0.5.0 - Production

CORE 10.2.0.5.0 Production

TNS for HPUX: Version 10.2.0.5.0 - Production

NLSRTL Version 10.2.0.5.0 - Production

Ask the system administrator whether the database server is a physical machine, is hardware partitioned, or was created under a virtual machine manager. Check the operating system utilities. On AIX, use uname to identify whether you are on an LPAR.

In Oracle, you can query v$parameter for cpu_count to see how many CPUs Oracle thinks it sees.

HTH - Mark D Powell.

-

Using journalized data in an interface with an aggregate function

Hello

I'm trying to use journalized data from a source table in one of my interfaces in ODI. The problem is that one of the mappings on the target columns involves an aggregate function (sum). When I run the interface, I get an error saying "not a GROUP BY expression". I checked the code and found that the columns jrn_subscriber, jrn_flag, and jrn_date are included in the select statement, but not in the group by statement (the group by statement contains only the remaining two columns of the target table).

Is there a way to get around this? Do I have to manually change the KM? If so, how would I go about doing it?

Also, I'm using the Oracle GoldenGate JKM (OGG Oracle to Oracle).

Thanks and really appreciate the help

Ajay

"ORA-00979" When Using the ODI CDC (Journalization) Feature with Knowledge Modules Including SQL Aggregate Functions [ID 424344.1]

Modified 11 March 2009, Type PROBLEM, Status MODERATED

In this Document

Symptoms

Cause

Solution

Alternatives:

This document is being delivered to you via Oracle Support's Rapid Visibility (RaV) process, and therefore has not been subject to an independent technical review.

Applies to:

Oracle Data Integrator - Version: 3.2.03.01

This problem can occur on any platform.

Symptoms

After successfully testing an ODI Integration Interface that uses an aggregate function such as MIN, MAX or SUM, it is necessary to set up Changed Data Capture operations using Journalized tables. However, during execution of the Integration Interface to retrieve only the Journalized records, problems arise at the Load Data step of the Loading Knowledge Module, and the following message appears in the ODI log:

ORA-00979: not a GROUP BY expression

Cause

Using both CDC (Journalization) and aggregate functions gives rise to complex issues.

Solution

Technically, there is a workaround for this problem (see below).

WARNING: Oracle engineers issue a severe warning that this type of setup may give results that are not what might be expected. This is related to the way ODI Journalization is implemented, in the form of specific Journalization tables. In this case, the aggregate function operates only on the subset that is stored (referenced) in the Journalization table and not on the whole of the Source table.

We recommend that you avoid this type of Integration Interface setup.

Alternatives:

1. The problem is due to the JRN_* columns missing from the "group by" clause of the generated SQL.

The workaround is to duplicate the Loading Knowledge Module (LKM) and, in the clone, change the "Load Data" step by editing the 'Command on Source' tab and replacing the following instruction:

<%=odiRef.getGrpBy()%>

with

<%=odiRef.getGrpBy()%>

<%if ((odiRef.getGrpBy().length() > 0) && (odiRef.getPop("HAS_JRN").equals("1"))) {%>

,JRN_FLAG, JRN_SUBSCRIBER, JRN_DATE

<%}%>

2. It is possible to develop two alternative solutions:

(a) Develop two separate and distinct Integration Interfaces:

* The first Integration Interface loads the data into a temporary table and specifies the aggregate functions to use in this initial Integration Interface.

* The second Integration Interface uses the temporary table as its Source. Note that if you create the table in the Interface, it is necessary to drag and drop the Integration Interface into the Source panel.

(b) Define two connections to the database so that the Integration Interface references two separate and distinct Data Server Sources (one for the Journal, one for the other tables). In this case, the aggregate function will be executed on the Source schema.
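The template substitution in alternative 1 can be sketched in plain Python to show what the patched step is meant to emit. This is an illustration only, not ODI code: `patched_group_by` is a hypothetical function, its `grp_by` argument stands in for whatever `odiRef.getGrpBy()` returns, and `has_jrn` stands in for `odiRef.getPop("HAS_JRN")`. The example clause text is also an assumption.

```python
# Journal columns that the generated SELECT contains but the GROUP BY lacks.
JRN_COLUMNS = ("JRN_FLAG", "JRN_SUBSCRIBER", "JRN_DATE")


def patched_group_by(grp_by: str, has_jrn: str) -> str:
    """Mirror the conditional in the patched 'Load Data' step.

    The journal columns are appended only when a GROUP BY clause exists
    and the source is journalized; otherwise the clause is left untouched.
    """
    if len(grp_by) > 0 and has_jrn == "1":
        # The separating comma keeps the extended clause valid SQL.
        return grp_by + ", " + ", ".join(JRN_COLUMNS)
    return grp_by


print(patched_group_by("GROUP BY REGION, PRODUCT", "1"))
print(patched_group_by("GROUP BY REGION, PRODUCT", "0"))
```

Keeping the condition on both the clause length and the journal flag means that interfaces without aggregates, or without journalized sources, generate the same SQL as before.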

Related information

Products

* Middleware > Business Intelligence > Oracle Data Integrator (ODI) > Oracle Data Integrator

Keywords

ODI; AGGREGATE; ORACLE DATA INTEGRATOR; KNOWLEDGE MODULES; CDC; SUNOPSIS

Errors

ORA-979

Please find above the content of the note.

You should see it if you search for this ID in the Knowledge Base search.

Cheers

Sachin

-

How can I keep 'Other' data storage small?

I have a 16GB iPhone 6 running iOS 9.3.2. A few months ago I started to run out of storage space, and I found that it was mostly being used up by 'Other' data. I tried many, MANY tips given here and on other sites to reduce my 'Other' data, to the point of finally giving in and restoring my iPhone to factory settings, then individually re-downloading my mail, applications, photos, etc. from my Mac. Initially this worked great, but here we are a few months later and my 'Other' is back up to 7GB. I am so frustrated, and I do not understand why my 'Other' data is so large. I thought that by loading everything individually instead of simply restoring a backup, I would have managed to get rid of any corrupt files, etc. It is not practical to restore my phone to factory settings whenever this happens; I need a more permanent solution to keep this 'Other' data in check. Any suggestions?

You are right. Restoring as new every time is not the way to handle this. I suspect that one of your applications is creating the issue, but identifying which one would be difficult, likely a matter of trial and error. 7 GB of Other is much too large; I tend to run about 1 or 2 GB maximum. These articles may help, but like I said, I think the problem is with a single application.

http://osxdaily.com/2013/07/24/remove-other-data-storage-iPhone-iPad/

http://www.IMore.com/how-find-and-remove-other-files-iPhone-and-iPad

-

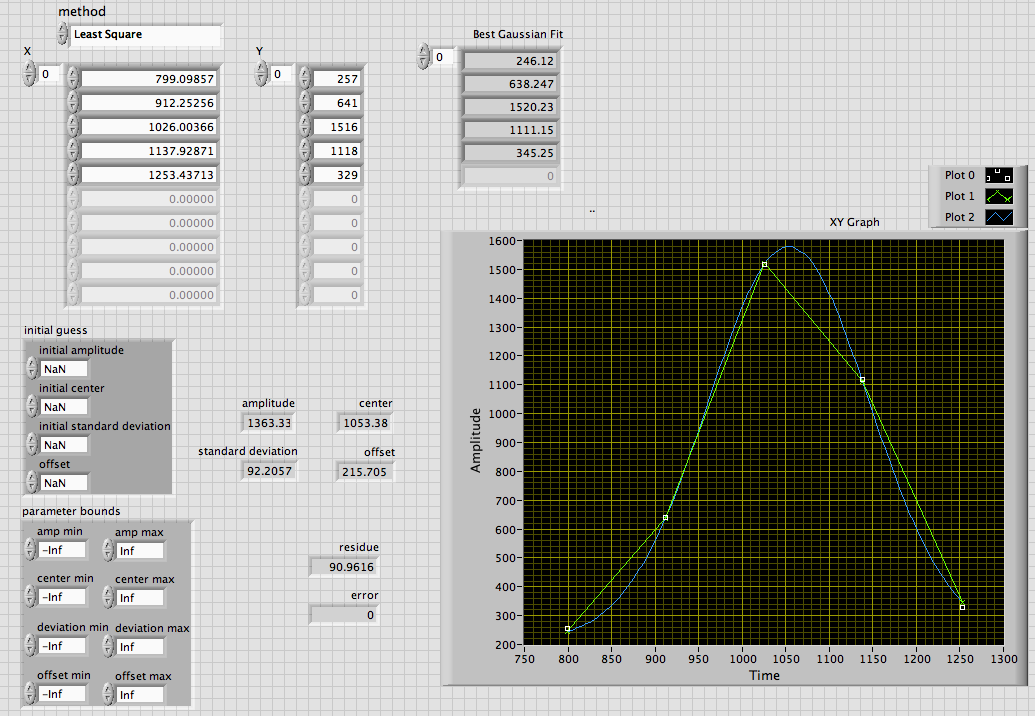

Gaussian Peak Fit and Nonlinear Curve Fit on small data sets differ from the peak fit done in Origin

Hi all

I'm developing a program in which I have to fit a Gaussian curve to only 4 or 5 data points. When I use the Gaussian Peak Fit or the Nonlinear Curve Fit, it just connects all the points linearly, whereas other software such as Origin fits a Gaussian curve to the same data set. I have attached two images: one is LabVIEW with Gaussian Peak Fit and Nonlinear Curve Fit, and the other is Origin.

The data are

X Y

799.09857 257

912.25256 641

1026.00366 1516

1137.92871 1118

1253.43713 329

Interesting.

The default initial guess values are all NaN, which causes LV to calculate its own guess. The default values for the parameter bounds are +/-Inf, with the exception of the offset bounds, which are both zero. This, of course, forces the output offset to zero. It seems a strange default, but they may have a good reason for it.

Changing the offset bounds to something else results in the output offset being ~215 and the center moving to ~1053. These match the Origin result to 5 significant digits.

Lynn

-

Hello

I'm continuously measuring data (i.e. a voltage signal) using DAQmx and storing the data.

In an old setup, I stored the data in an array, and whenever the array reached a certain size, I wrote it to a file. However, this resulted in data being lost during the write.

Then I read about the use of queues, had a look at the examples, read a few tutorials and thought I understood the principle.

However, I can't get my example to work.

Thanks for any help.

Kind regards

Jack

If the 2nd while loop stops right away, it means that the local variable still holds its old TRUE value from the previous run, so every time you run the code the local variable stops the 2nd loop. Clear the local variable at the beginning of the code, then run it and check it against the VI I attached.
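The producer/consumer queue pattern discussed in this thread can be sketched in Python for readers who want to see the principle outside LabVIEW. This is a minimal sketch under stated assumptions, not the attached VI: the function names `acquire` and `store` are hypothetical, the acquired blocks are synthetic integers rather than DAQmx readings, and a list stands in for the output file. A `None` sentinel sent through the queue replaces the shared stop flag, so there is no stale value left over from a previous run.

```python
import queue
import threading


def acquire(q, n_blocks, block_len):
    """Producer: stands in for the acquisition loop (synthetic data here)."""
    for b in range(n_blocks):
        q.put([b * block_len + i for i in range(block_len)])
    q.put(None)  # sentinel: tells the consumer that acquisition has finished


def store(q, sink):
    """Consumer: stands in for the file-writing loop."""
    while True:
        block = q.get()  # blocks until the producer has queued something
        if block is None:
            break
        sink.extend(block)  # a real logger would write the block to disk


q = queue.Queue()
stored = []
consumer = threading.Thread(target=store, args=(q, stored))
producer = threading.Thread(target=acquire, args=(q, 5, 100))
consumer.start()
producer.start()
producer.join()
consumer.join()
print(len(stored), "samples stored")
```

Because the producer never waits for the slow storage step, blocks queue up instead of being dropped, which addresses the data loss seen when writing from inside the acquisition loop.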

Maybe you are looking for

-

HP EliteBook 8560p: can not turn on WiFi: the button is orange, and nothing happens when you press

Hello I have a problem with my wifi. The button always orange and nothing happens when I press on it: no errors, no message, no reaction at all. This is why I can't use WiFi. The laptop is a little new: I use only 2 months. 1. number and product name

-

World Traveler in Querétaro - Mexico adapter Kit?

Hello everyone, I m staying for two months in Querétaro for research and writing. Unfortunately, I forgot my World Travel Adapter in Belgium... I looked and asked around town, but I find couldn t one. Even the didn t of official dealers iShop know wh

-

ITunes screen keeps flashing on and off since the last update on windows PC.

Hi, since the latest itunes update, my screen flashes on and off whenever I am in itunes. ITunes crashes regularly. I am able to download music without problem. The flashing light does not occur when I'm in my own music, just at the moment when I

-

No Interface of SERAGLIO OR couldn't find NI PXI-8430/8 RS232?

Hello to all, I tried to instaler a serial NI PXI-8430/8 RS - 232, the installation went well SERIES OR software on PC Material on the module a PXI-1042 chssis and when I do the verification test on Troubleshooting Wizard a message tells me that the

-

Windows 7 VB 6.0 project exe does not work in windows XP

Hello! Currently, I switched from windows Xp(sp 3) to Windows 7 (32 bit). Now, I did more recent SalesProject.exe by using Visual Basic 6.0 in Windows 7, its fine on Win work 7systems. But even SalesProject.exe does not work when I run it on Windows