Building an array using a pseudo-queue

I'm trying to get away from using a queue by simply building an array. I'm acquiring DAQmx values in a loop at 50 ms, but I don't want to update the chart every 50 ms (for obvious reasons). I use a functional global variable (FGV) to pass data to a slower loop running at 500 ms.

The problem is that I only get every tenth point. Is there an easy way to build an array and pass it (without overwriting or skipping data) to the slower loop without having to use a queue?

Thanks for the help.

Why not use a queue? It is by far the most effective way to do what you want.

What you have run into with the FGV is a race condition. The way you used it, it might as well be a local variable or a global variable. FGVs do NOT prevent race conditions in the way that you are using one. Here's a good article about race conditions in LabVIEW: http://labviewjournal.com/2011/08/race-conditions-and-functional-global-variables-in-labview/
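For readers who think in text-based code, here is a minimal sketch of the queue approach in Python rather than LabVIEW. The function names, the `None` sentinel, and the loop periods are illustrative assumptions, not part of the original VI. The fast loop enqueues every sample; the slow loop drains whatever has accumulated each time it wakes, so nothing is overwritten or skipped.

```python
import queue
import threading
import time


def producer(q, n_samples, period_s):
    """Fast loop: enqueue one sample per iteration (stands in for the 50 ms read)."""
    for i in range(n_samples):
        q.put(i)
        time.sleep(period_s)
    q.put(None)  # sentinel: tells the consumer that acquisition has finished


def consumer(q, period_s, received):
    """Slow loop: wake up every period_s and drain everything queued since last time."""
    while True:
        time.sleep(period_s)
        batch = []
        done = False
        while True:
            try:
                item = q.get_nowait()
            except queue.Empty:
                break
            if item is None:
                done = True
                break
            batch.append(item)
        received.extend(batch)  # a real consumer would update the chart with this batch
        if done:
            return


def run_demo(n_samples=20, fast_s=0.005, slow_s=0.05):
    """Run both loops and return every sample the slow loop received, in order."""
    q = queue.Queue()
    received = []
    t_prod = threading.Thread(target=producer, args=(q, n_samples, fast_s))
    t_cons = threading.Thread(target=consumer, args=(q, slow_s, received))
    t_prod.start()
    t_cons.start()
    t_prod.join()
    t_cons.join()
    return received
```

Because the queue buffers every element, the slow loop sees all samples as a batch on each wake-up, which is the behavior the FGV could not guarantee.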

Tags: NI Software

Similar Questions

-

Building dimensions using rules files

Hi team,

I have EPM 11.1.2.2 and I build the Accounts dimension using a rules file. I use the ESSCMD command INCBUILDDIM to build the dimension from the data file. Whenever I run the build to add new members, the existing members of the dimension are removed. Is it possible to add members without deleting existing members while building the dimension using rules files?

Thanks for your help.

There is an option in the dimension build settings of the rules file: "Merge" or "Remove unspecified". If you do not want to remove existing members, you must select "Merge".

-

How to build a photo file to be able to print poster size? I would use an outside vendor to print.

How to build a photo file to be able to print poster size? I would like to use an external vendor to print

Talk to whoever is doing the printing and find out what their requirements are. Most often a high-quality JPG is enough. Often you do not need a high PPI setting for posters because of the viewing distance. 300 PPI at most, but I have gone as low as 100 PPI for 30 x 40 prints.
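The arithmetic behind that advice is simply print size multiplied by pixel density. A small sketch, assuming the 30 x 40 print size is in inches (the post does not state the unit) and using a hypothetical helper name:

```python
def required_pixels(width_in, height_in, ppi):
    """Return the (width, height) in pixels needed to print at the given density.

    width_in and height_in are assumed to be inches; ppi is pixels per inch.
    """
    return round(width_in * ppi), round(height_in * ppi)


# The two densities mentioned above, for a 30 x 40 print
low = required_pixels(30, 40, 100)   # (3000, 4000)
high = required_pixels(30, 40, 300)  # (9000, 12000)
```

Comparing the result with the pixel dimensions of the photo shows quickly whether the file can support the density the print vendor asks for.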

-

Creating an external table using XML files

I'm trying to load data from an XML file into a database table. I want to use an external table for this load. External tables work with plain text files, but is there any way to process XML data and load it into a database table using an external table?

I use Oracle 9i.

Appreciate your answers.

Regards

Published by: user652422 on December 16, 2008 23:00

Hi user652422!

Here is an example:

CREATE TABLE tab_ext_books
(
  authors   VARCHAR2(2000),
  title     VARCHAR2(2000),
  publisher VARCHAR2(2000)
)
ORGANIZATION EXTERNAL
(
  TYPE ORACLE_LOADER
  DEFAULT DIRECTORY my_ext_tab_dir
  ACCESS PARAMETERS
  (
    records delimited by ""
    badfile my_ext_tab:'empxt%a_%p.bad'
    logfile my_ext_tab:'empxt%a_%p.log'
    fields
    (
      filler    char(2000) terminated by "",
      authors   char(2000) enclosed by "" and " ",
      title     char(2000) enclosed by "" and " ",
      publisher char(2000) enclosed by "" and " "
    )
  )
  LOCATION ('books.xml')
)
PARALLEL
REJECT LIMIT UNLIMITED;

This is books.xml

Thomas Kyte

Oracle Expert One On One

Wrox Press

Gaja Vaidyanatha, Kirtikumar Deshpande, John Kostelac

Oracle Performance Tuning 101

Oracle Press

-

Creating form/table layout dynamically using different web services

Hello

I have the following requirement:

We have a web service for each data center device that controls the settings for that device. To update the settings, we provide an ADF UI with either a form layout or a table layout that uses the web service.

Given the number of servers, it is not possible to create a different page for each web service.

Thus, the requirement is to create a user interface where the user can give the location of the WSDL file, choose whether to see the details in a form layout or a table layout, and click a button to view the details.

Then we need to read the web service, create the data control and the page bindings (in the binding container), and display the details in the user interface.

Please let me know if this is possible or not.

Can we create a data control programmatically? Can we add bindings to the binding container programmatically?

Can we create the bindings on the user interface dynamically? Is there a public API to create bindings programmatically?

Or, if the data control and data binding option is not available, is there any alternative approach?

Please help me with this. Any pointers would help me greatly.

Thank you

Stephanie

Hello

Assuming that the information the servers provide is identical, you can use a POJO that accesses the WSDL file through a proxy client. You can then expose a common set of attributes on the POJO and generate a JavaBean data control from it, which you can use to build forms and tables. The POJO basically lets you transform the different web services into a single business service format.

Frank

-

It happened when I tried to update from v. 16 to 17 as well. I had Firefox download v. 18; it told me that it had done so and asked me to restart Firefox. I clicked the "Restart Firefox" button. It closed Firefox 17. I waited an hour and Firefox never started. I clicked the Firefox icon on my desktop and got a window saying "another program is using the file".

The last time this happened, I had to uninstall v. 16, use Opera 12.12 to download version 17, install it, and then reset a few of my preferences before I could start v. 17.

Why won't your update program let me update, instead of making me download a new program?

I'm on Windows XP SP3 with a very fast motherboard.

Finally, I decided to check whether my AVG antivirus or IOBit Malware Fighter software was interfering, and I disabled them temporarily. I restarted, turned off these programs, and then opened Firefox. It was, as usual, version 17.0.1, not updated. I went to Help in Firefox and clicked Check for Updates. It downloaded the updates and invited me to restart Firefox, and after Firefox closed I got the window saying that it was installing the updates, for the first time in any attempt to update to version 17 or 18. It installed the updates and restarted without any problems.

I was about to turn off all of my add-ons for the next test. I never had to disable AVG or IOBit before, and they are the latest versions, too. AVG is the same version that allowed the update to v. 16 before. Something is different in both Firefox 17 and 18 that will not allow updates with AVG running (or possibly IOBit Malware Fighter).

-

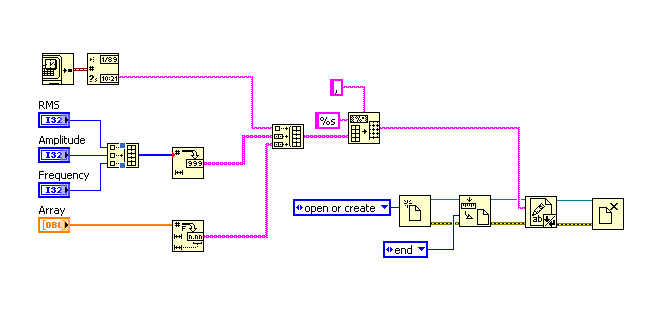

Write a string, an integer and an array all in the same file?

Hello

I am currently acquiring several different types of values with LabVIEW.

I have a string, a few numbers and several arrays.

For example, I have a timestamp string, several numeric values (signal amplitude, RMS value, frequency), and several arrays: the signal array and the FFT (peak and location) values.

Basically, I'm trying to find a way to write all the values to a single file. I can write the individual types to separate files (so I can write the RMS, amplitude and frequency to one file, and some of the arrays to another),

but is it possible to write a string, an integer and an array all in the same file?

Pointers would be much appreciated,

Thank you

Paula

Your file will be all text: format everything as text, build it into a single string array, convert it with "Array To Spreadsheet String", and write that to the file.

(I'm sure this has been on the forums before; a search would have turned it up.)
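The same "everything becomes text" idea, sketched in Python rather than LabVIEW. The function name, the tab delimiter, and the one-row-per-value layout are assumptions chosen for illustration; any consistent text layout would do.

```python
import csv


def write_record(path, timestamp, scalars, arrays):
    """Write a timestamp string, named scalars and named arrays to one text file.

    Each row starts with a label; arrays simply occupy more columns than scalars.
    """
    with open(path, "w", newline="") as f:
        writer = csv.writer(f, delimiter="\t")
        writer.writerow(["timestamp", timestamp])
        for name, value in scalars.items():
            writer.writerow([name, value])
        for name, values in arrays.items():
            writer.writerow([name, *values])
```

A call such as `write_record("run.txt", "2024-01-01 12:00:00", {"rms": 1.5}, {"signal": [1, 2, 3]})` produces a file that opens directly in a spreadsheet, with the label in the first column.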

-

Read an AVI using the "Read from Binary File" VI

My question is how to read an AVI file using the "Read from Binary File" VI.

My goal is to create a series of small AVI files using IMAQ AVI Write Frame with the MPEG-4 codec, each 2 seconds long (up to 40 frames per file at 20 frames per second), and then send them one by one in order to create a video stream. The images are acquired from a USB camera. If I read these frames using IMAQ AVI Read Frame, the advantage of compression would be lost, so I want to read the entire file itself.

I've read the AVI file using "Read from Binary File" with an unsigned 8-bit data format, then sent it to the remote end, saved it and played it back, but it did not work. Later, I found that if I read an image file using "Read from Binary File" with an unsigned 8-bit data format and save it on the local computer itself, the format is changed and the file becomes unrecognizable. Am I doing something wrong by reading the file as unsigned 8-bit integers, or should I have used another data type?

I'm using LabVIEW 8.5, the LabVIEW Vision Development Module and Vision Acquisition 8.5.

Your help would be very appreciated.

Thank you.

Hello

Check the (detailed) help for "Write to Binary File".

The "prepend array or string size?" input defaults to True, so in your example the data written to the file will have the array size information added at the beginning, and your output file will be four bytes longer than your input file. Wire a False constant to "prepend array or string size?" to avoid this problem.

Rod.
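To see why a size prefix corrupts a copied file, here is a small Python sketch of the effect. It is not LabVIEW code: the helper names are hypothetical, and the prefix is modeled as a 4-byte big-endian signed integer, which is an assumption about the layout based on the four extra bytes described above.

```python
import struct


def with_length_prefix(payload: bytes) -> bytes:
    """Prepend a 4-byte big-endian element count, mimicking a size-prefixed array write."""
    return struct.pack(">i", len(payload)) + payload


def without_length_prefix(payload: bytes) -> bytes:
    """Write the bytes unchanged, which is what a byte-for-byte file copy needs."""
    return payload


original = b"RIFF\x00\x00\x00\x00AVI "   # first bytes of a made-up AVI-like header
prefixed = with_length_prefix(original)

# The prefixed copy is four bytes longer and no longer starts with the file signature,
# so a player that expects "RIFF" at offset 0 will not recognize it.
extra_bytes = len(prefixed) - len(original)
starts_with_signature = prefixed.startswith(b"RIFF")
```

With the prefix disabled, the output is identical to the input and the file signature stays at offset 0.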

-

How to use image files in another library project?

I put the resource images in another library project because of the size limit.

So I created another project and put the files in the "res" folder.

How can I use them? Is there a particular way for me to reference them?

Example:

Project 1: (project 2 added to the build path)

|

|

Project 2:

-res

(some jpg and png files)

Project 1 wants to use the files in project 2.

How should I do that?

I think this thread covers the same ground you are asking about:

It may be another option?

-

Hello

Question 1 - I have a list of duplicate files on my computer. Very often, the same file appears in two or more different locations. I guess this could mean that different copies of the same file are used by various programs. It would then be important to know which program is using a file before removing its duplicates.

Question 2 - Would it be possible to point all programs that use a given file to one place, that is, to centralize the location of the file? I think this could eliminate the need to have different copies in different locations. If so, how is it done, please?

Thank you for your help.

The duplication that you see is the result of a badly written installation program from eSupport. It has nothing to do with Microsoft. I would do this:

- Rename the folder c:\eSupport to eSupport.junk

- Wait a week or two.

- If all goes well, remove the renamed folder.

-

Hello everyone

DB 11.2.0.1 - 32 cores - 64 GB of RAM

I have a database into which 1.5 million records are inserted every hour. This database is suffering from too many log file sync waits. Googling the issue, I found that the reason is the way the application inserts data into the database: every insert is followed by a commit. Currently, we are unable to change the insertion method to use batch inserts instead. The database has 22 redo log files of 200 MB each, and the log_buffer is about 150 MB.

Is there a solution to reduce the number of log file sync waits?

I tried increasing the size of the redo log files to 700 MB each, but then there were some buffer waits. The SGA is 38 GB.

Thanks for any guidance

Regards

You could try setting the commit_write parameter. See the examples here:

One of the ways to eliminate log file sync waits

I eliminate log file sync.

-

Unable to add the date to the dump file name when using a parameter file with export

Hi all

OS = Solaris SPARC 64-bit 11

DB = Oracle 12c

I am planning a daily backup of the database using cron jobs, so I have to create a simple script. In this script, I will start the export dump using a parameter file. The dump file name must contain the date of the backup.

Since this is my first time doing this, I looked around and found a method for adding the date to the dump file name. But I still can't get it to work when I put everything in a parfile.

At first, I tried to run the backup without using the parameter file, and it works, as shown below.

export ORACLE_SID=ELMTEST

export mydate=`date "+%Y%m%d"`

echo $mydate

20141117

oraelm@hrmdbd01:/backup/Elm$ expdp system/manager@ELMTEST directory=dpump_dir1 dumpfile=hrtestexp_${mydate}.dmp nologfile=y

Export: Release 12.1.0.1.0 - Production on Mon Nov 17 11:15:38 2014

Copyright (c) 1982, 2013, Oracle and/or its affiliates. All rights reserved.

Connected to: Oracle Database 12c Enterprise Edition Release 12.1.0.1.0 - 64bit Production
With the Partitioning, Automatic Storage Management, OLAP, Advanced Analytics
and Real Application Testing options
Starting "SYSTEM"."SYS_EXPORT_SCHEMA_01": system/***@ELMTEST directory=dpump_dir1 dumpfile=hrtestexp_20141117.dmp nologfile=y
Estimate in progress using BLOCKS method...
Processing object type SCHEMA_EXPORT/TABLE/TABLE_DATA
Total estimation using BLOCKS method: 0 KB
Processing object type SCHEMA_EXPORT/USER
Processing object type SCHEMA_EXPORT/ROLE_GRANT
Processing object type SCHEMA_EXPORT/DEFAULT_ROLE
Processing object type SCHEMA_EXPORT/PRE_SCHEMA/PROCACT_SCHEMA
Processing object type SCHEMA_EXPORT/SYNONYM/SYNONYM
Processing object type SCHEMA_EXPORT/TABLE/TABLE
Processing object type SCHEMA_EXPORT/TABLE/COMMENT
Processing object type SCHEMA_EXPORT/TABLE/INDEX/INDEX
Processing object type SCHEMA_EXPORT/TABLE/CONSTRAINT/CONSTRAINT
Processing object type SCHEMA_EXPORT/TABLE/INDEX/STATISTICS/INDEX_STATISTICS
Processing object type SCHEMA_EXPORT/TABLE/STATISTICS/TABLE_STATISTICS
Processing object type SCHEMA_EXPORT/STATISTICS/MARKER
Processing object type SCHEMA_EXPORT/POST_SCHEMA/PROCACT_SCHEMA
Master table "SYSTEM"."SYS_EXPORT_SCHEMA_01" successfully loaded/unloaded
******************************************************************************
Dump file set for SYSTEM.SYS_EXPORT_SCHEMA_01 is:
/backup/Elm/hrtestexp_20141117.dmp
Job "SYSTEM"."SYS_EXPORT_SCHEMA_01" successfully completed at Mon Nov 17 11:16:39 2014 elapsed 0 00:00:59

However, when I put everything in my parameter file, it failed.

userid=system/manager@ELMTEST

directory=dpump_dir1

dumpfile=hrtestexp_${mydate}.dmp

logfile=test_exp.log

Export: Release 12.1.0.1.0 - Production on Mon Nov 17 11:18:25 2014

Copyright (c) 1982, 2013, Oracle and/or its affiliates. All rights reserved.

Connected to: Oracle Database 12c Enterprise Edition Release 12.1.0.1.0 - 64bit Production

With the Partitioning, Automatic Storage Management, OLAP, Advanced Analytics

and Real Application Testing options

ORA-39001: invalid argument value

ORA-39000: bad dump file specification

ORA-39157: error appending extension to file "hrtestexp_${mydate}.dmp"

ORA-07225: sldext: translation error, unable to expand file name.

Additional information: 7217

Can someone help me on this please?

Thank you very much

Jason

To expand a bit on Richard's answer...

The reason it works on the command line but not in the parfile is that the command line itself is processed by the shell. The shell reads the line, performs substitution of environment variables (like your $mydate), and THEN loads the requested executable (expdp), passing it the fully expanded command line.

The parfile, on the other hand, is read and processed ONLY by expdp, which knows nothing about environment variable substitution.

There are several common ways to deal with this.

One is to leave everything on the command line.

Another would be to have the shell script write the parfile as well:

export ORACLE_SID=ELMTEST

export mydate=`date "+%Y%m%d"`

echo $mydate

20141117

export parfile=myparfile.txt

# ">" creates (or truncates) the parfile; ">>" appends the remaining lines
echo directory=dpump_dir1 > $parfile

echo dumpfile=hrtestexp_${mydate}.dmp >> $parfile

echo nologfile=y >> $parfile

oraelm@hrmdbd01:/backup/Elm$ expdp system/manager@ELMTEST parfile=$parfile

Another technique is to use input redirection:

oraelm@hrmdbd01:/backup/Elm$ expdp system/manager@ELMTEST <<EOF

directory=dpump_dir1

dumpfile=hrtestexp_${mydate}.dmp

nologfile=y

EOF
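The "have the script write the parfile" approach can also be sketched in Python, for anyone scheduling the job from a Python wrapper instead of a shell script. The function name and the choice to write a dated log file name are assumptions for illustration; the directory object and dump file prefix are the ones used in this thread.

```python
from datetime import date
from pathlib import Path


def write_parfile(path, when=None):
    """Write an expdp parameter file whose dump file name carries a YYYYMMDD stamp.

    The date is expanded here, before expdp ever reads the file, because expdp
    itself performs no variable substitution inside a parfile.
    """
    stamp = (when or date.today()).strftime("%Y%m%d")
    lines = [
        "directory=dpump_dir1",
        f"dumpfile=hrtestexp_{stamp}.dmp",
        f"logfile=hrtestexp_{stamp}.log",
    ]
    Path(path).write_text("\n".join(lines) + "\n")
    return stamp
```

After calling `write_parfile("myparfile.txt")`, the export would be started as before with `expdp system/manager@ELMTEST parfile=myparfile.txt`.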

A few other comments

First of all, as already pointed out, an export is not a backup, at least not a physical backup. To use an export for recovery, you must first have a working database. It will be no help if you have a media failure on a data file.

Second, you really need to capture a log file rather than use nologfile=y. The log file can be valuable in any number of situations. Why in the world would you suppress it?

-

SQL*Loader: load from multiple files into multiple tables with a single control file

Hello

Is it possible to load data from multiple flat files into several tables using a single control file in SQL*Loader? The flat file to database table relationship is 1:1: all data from one flat file goes to one table in the database.

I have about 10 flat files (large volume) that must be loaded into 10 different tables, and this must be done on a daily basis. Can I start the load for all 10 tables in parallel from a single command file? Or should I consider a different approach?

Thank you

Sisi

What operating system? ('Command Prompt' looks like Windows)

UNIX/Linux: a shell script with multiple calls to sqlldr run in the background with '&' (and possibly nohup)

Windows: A file of commands using 'start' to start multiple copies of sqlldr.

http://www.PCTOOLS.com/Forum/showthread.php?42285-background-a-process-in-batch-%28W2K%29

http://www.Microsoft.com/resources/documentation/Windows/XP/all/proddocs/en-us/start.mspx?mfr=true

Published by: Brian Bontrager on May 31, 2013 16:04
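As a platform-neutral alternative to a shell script or batch file, the same "one sqlldr process per control file, all running at once" idea can be sketched in Python. The helper names, the control file names, and the log naming scheme are hypothetical; `userid`, `control` and `log` are standard sqlldr command-line parameters. Note that passing credentials on the command line exposes them to other users on the host.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor


def build_commands(userid, control_files):
    """Build one sqlldr command line per control file (one control file per table)."""
    return [
        ["sqlldr", f"userid={userid}", f"control={ctl}", f"log={ctl}.log"]
        for ctl in control_files
    ]


def run_parallel(commands, max_workers=4):
    """Run the commands concurrently and return their exit codes in input order."""
    def run(cmd):
        return subprocess.run(cmd, capture_output=True).returncode

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run, commands))
```

A daily job would call `run_parallel(build_commands("scott/tiger@db", ["t1.ctl", "t2.ctl"]))` and treat any non-zero exit code as a failed load; the credentials and file names here are placeholders.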

-

Hello

I am trying to improve the performance of a 10.2.0.5 database that is suffering from high log file sync waits. AWR reports show that the event is almost never below 20% of DB time; normally it is 20-30%, and it is consistently the top non-idle wait event. For many sessions it takes 80-90% of the time or more.

Log file parallel write is an order of magnitude lower, so this probably isn't an I/O problem. The database performs about 100 commits per second, the user-calls-to-commit ratio is about 20, redo is generated at about 500 KB/s, and the log buffer is 14 MB. There are about 6-7 log file switches per hour on average.

There are several oddities about the log file sync waits here, and I'd appreciate any help unraveling them:

(1) There are almost two log file sync wait events per commit on average (the number of log file parallel write waits is about the same as the number of commits). Why?

(2) According to ASH, nearly half of the log file sync waits take close to 97.7 ms. Is there any particular reason for this peak?

(3) Approximately 0.5% of the log file sync waits captured by ASH show multi-second wait times. Any idea where they may come from, or how to diagnose this kind of problem?

Some parts of an AWR report below, please let me know if anything else is needed.

Best regards

Nikolai

Cache Sizes
~~~~~~~~~~~                       Begin        End
               Buffer Cache:    44,592M    44,672M  Std Block Size:       16K
           Shared Pool Size:     3,104M     3,024M      Log Buffer:   14,288K

Load Profile
~~~~~~~~~~~~                   Per Second  Per Transaction
                  Redo size:   545,833.77         6,659.29
              Logical reads:   225,711.23         2,753.73
              Block changes:     1,788.11            21.82
             Physical reads:     1,195.98            14.59
            Physical writes:       119.02             1.45
                 User calls:     2,368.64            28.90
                     Parses:       737.35             9.00
                Hard parses:        94.58             1.15
                      Sorts:       261.75             3.19
                     Logons:         5.93             0.07
                   Executes:     1,796.12            21.91
               Transactions:        81.97

Top 5 Timed Events                                      Avg  %Total
~~~~~~~~~~~~~~~~~~                                     wait    Call
Event                             Waits    Time (s)    (ms)    Time  Wait Class
------------------------------ ------------ ----------- ------ ------ ----------
CPU time                                       23,580            19.8
log file sync                      491,840      21,976     45   18.5  Commit
db file sequential read          1,902,069      12,604      7   10.6  User I/O
read by other session              743,414       4,159      6    3.5  User I/O
log file parallel write            220,772       3,069     14    2.6  System I/O
-------------------------------------------------------------

Instance Activity Stats
******************
Statistic                                     Total     per Second     per Trans
-------------------------------- ------------------ -------------- -------------
Cached Commit SCN referenced                 11,130            3.1           0.0
Commit SCN cached                                 3            0.0           0.0
DB time                                  12,547,531        3,483.3          42.5
DBWR checkpoint buffers written             145,437           40.4           0.5
DBWR checkpoints                                  6            0.0           0.0
...
IMU CR rollbacks                              2,566            0.7           0.0
IMU Flushes                                  36,411           10.1           0.1
IMU Redo allocation size                187,480,124       52,045.3         635.0
IMU commits                                 255,350           70.9           0.9
IMU contention                               11,998            3.3           0.0
IMU ktichg flush                                 11            0.0           0.0
IMU pool not allocated                        4,295            1.2           0.0
IMU recursive-transaction flush                 175            0.1           0.0
IMU undo allocation size              2,029,937,952      563,519.5       6,875.1
IMU- failed to get a private str              4,295            1.2           0.0
...
background checkpoints completed                  6            0.0           0.0
background checkpoints started                    6            0.0           0.0
background timeouts                          11,400            3.2           0.0
...
change write time                             9,398            2.6           0.0
cleanout - number of ktugct call             54,233           15.1           0.2
cleanouts and rollbacks - consis              9,436            2.6           0.0
cleanouts only - consistent read              2,028            0.6           0.0
...

Instance Activity Stats
*******************
Statistic                                     Total     per Second     per Trans
-------------------------------- ------------------ -------------- -------------
commit batch performed                           61            0.0           0.0
commit batch requested                           61            0.0           0.0
commit batch/immediate performed                 94            0.0           0.0
commit batch/immediate requested                 94            0.0           0.0
commit cleanout failures: block                  51            0.0           0.0
commit cleanout failures: buffer                 14            0.0           0.0
commit cleanout failures: callba              8,632            2.4           0.0
commit cleanout failures: cannot             10,575            2.9           0.0
commit cleanouts                            991,186          275.2           3.4
commit cleanouts successfully co            971,914          269.8           3.3
commit immediate performed                       33            0.0           0.0
commit immediate requested                       33            0.0           0.0
commit txn count during cleanout             61,737           17.1           0.2
...
redo blocks written                       4,078,889        1,132.3          13.8
redo buffer allocation retries                  177            0.1           0.0
redo entries                              1,924,106          534.1           6.5
redo log space requests                         174            0.1           0.0
redo log space wait time                      2,333            0.7           0.0
redo ordering marks                           4,553            1.3           0.0
redo size                             1,966,229,700      545,833.8       6,659.3
redo subscn max counts                       38,227           10.6           0.1
redo synch time                           2,233,166          619.9           7.6
redo synch writes                           352,935           98.0           1.2
redo wastage                             56,259,980       15,618.0         190.5
redo write time                             316,495           87.9           1.1
redo writer latching time                        19            0.0           0.0
redo writes                                 220,866           61.3           0.8
rollback changes - undo records                 134            0.0           0.0
rollbacks only - consistent read             11,242            3.1           0.0
...
...
transaction rollbacks                            94            0.0           0.0
transaction tables consistent re                 25            0.0           0.0
transaction tables consistent re              8,704            2.4           0.0
undo change vector size               1,176,156,772      326,506.2       3,983.5
user I/O wait time                        2,039,881          566.3           6.9
user calls                                8,532,422        2,368.6          28.9
user commits                                295,139           81.9           1.0
user rollbacks                                  122            0.0           0.0
...
-------------------------------------------------------------

High "log file sync" waits also arise when LGWR is unable to get CPU time to post back to the waiting session that the log write is complete. The session waits on this event until LGWR signals it, but if LGWR is unable to get CPU, it cannot signal quickly enough.

8 CPUs for 1 hour is 28,800 seconds. 7 CPUs is an odd number; that is equivalent to 25,200 seconds available. Your AWR shows that Oracle accounted for 23,580 seconds of CPU time. So your server is probably encountering situations where processes are unable to get CPU.

You can pin or renice LGWR, but you need to check with Oracle Support whether this is doable and supported on your platform.

What you need to do is tune the sessions that perform very high logical reads (a simple rule of thumb is 10K blocks per CPU per second, and you are hitting 225K blocks for 7 CPUs!) to reduce the logical reads and CPU consumption.

OR add more CPUs.

Hemant K Collette

http://hemantoracledba.blogspot.com
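The arithmetic in that answer can be checked with a few lines of Python. The helper names are hypothetical, and the one-hour interval is the answer's own assumption about the AWR snapshot length; the input figures come from the report above.

```python
def cpu_utilization(cpu_seconds_used, n_cpus, interval_s=3600):
    """Fraction of the available CPU seconds consumed during the interval."""
    return cpu_seconds_used / (n_cpus * interval_s)


def logical_read_budget(n_cpus, blocks_per_cpu_per_s=10_000):
    """Rule-of-thumb ceiling on logical reads per second for the whole server."""
    return n_cpus * blocks_per_cpu_per_s


available_7 = 7 * 3600                       # 25,200 CPU seconds in one hour
utilization = cpu_utilization(23_580, 7)     # CPU time from "Top 5 Timed Events"
budget = logical_read_budget(7)              # 70,000 blocks per second
overshoot = 225_711.23 / budget              # logical reads from "Load Profile"
```

Under these assumptions Oracle alone consumes well over 90% of the available CPU seconds, and logical reads run at more than three times the rule-of-thumb budget, which is consistent with LGWR struggling to get scheduled.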

-

How to get data from a database table and insert into a file

Hello

I'm new to SOA. I want to create an XML file from the data in database tables, and I have the XSD. Please suggest how to get the data from the tables and write it to a file.

Regards

In your BPEL process, you can use the DB adapter to communicate with the database.

With this type of adapter, you can use stored procedures, selects, etc. to get the data from your database into your BPEL workflow. When your BPEL process calls the DB adapter, it returns an output variable with the contents of your table data, represented as XML.

After that, you can use a second adapter (a file adapter) to write the content of this output variable to the file system.
